如果你也是看准了Python,想自学Python,在这里为大家准备了丰厚的免费学习大礼包,带大家一起学习,给大家剖析Python兼职、就业行情前景的这些事儿。

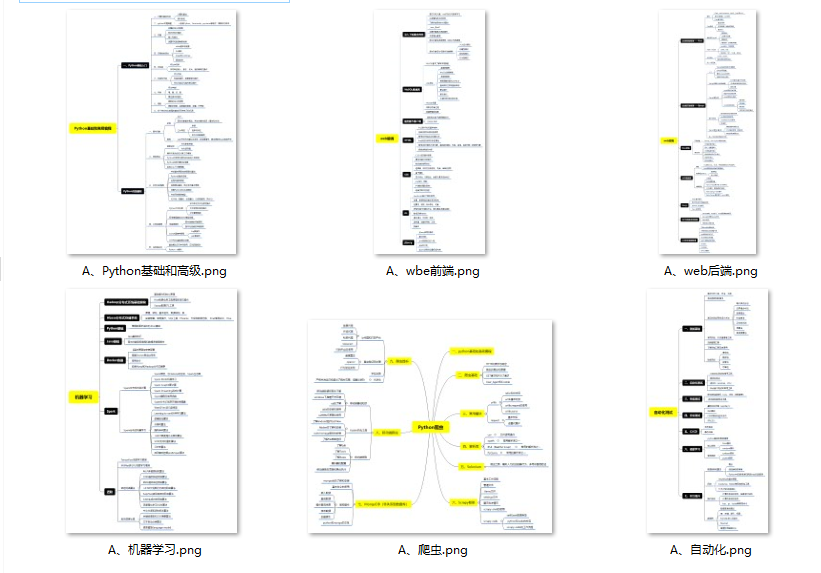

一、Python所有方向的学习路线

Python所有方向路线就是把Python常用的技术点做整理,形成各个领域的知识点汇总,它的用处就在于,你可以按照上面的知识点去找对应的学习资源,保证自己学得较为全面。

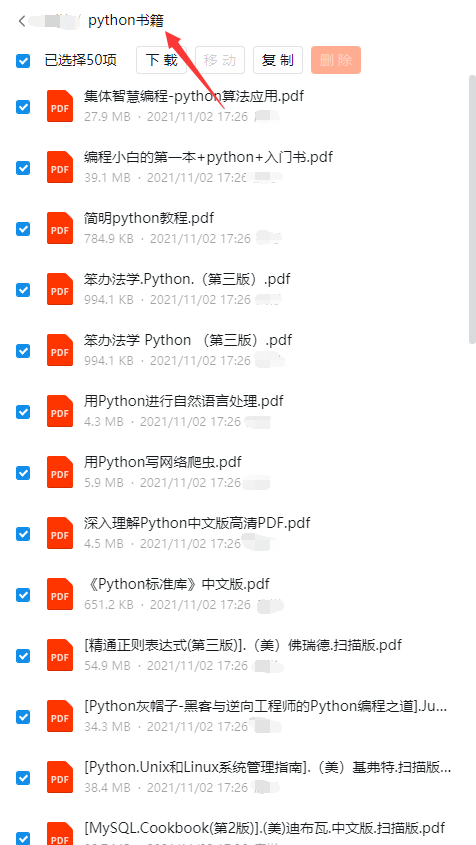

二、学习软件

工欲善其必先利其器。学习Python常用的开发软件都在这里了,给大家节省了很多时间。

三、全套PDF电子书

书籍的好处就在于权威和体系健全,刚开始学习的时候你可以只看视频或者听某个人讲课,但等你学完之后,你觉得你掌握了,这时候建议还是得去看一下书籍,看权威技术书籍也是每个程序员必经之路。

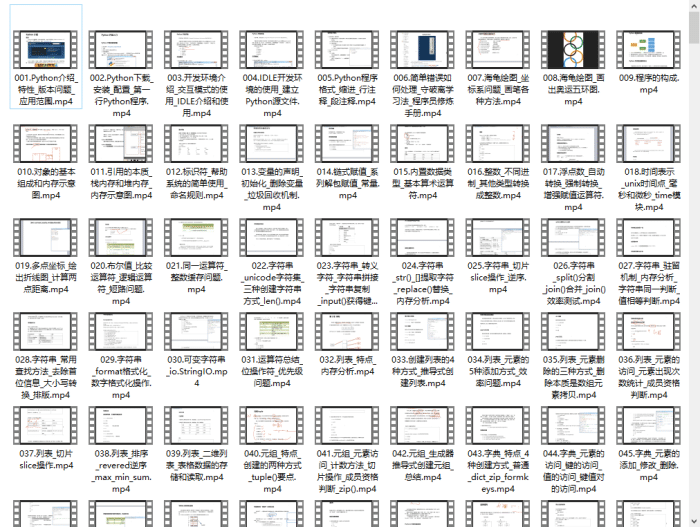

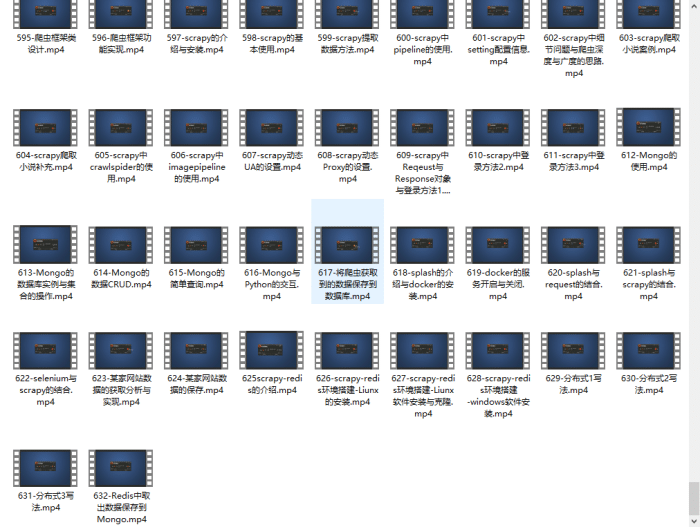

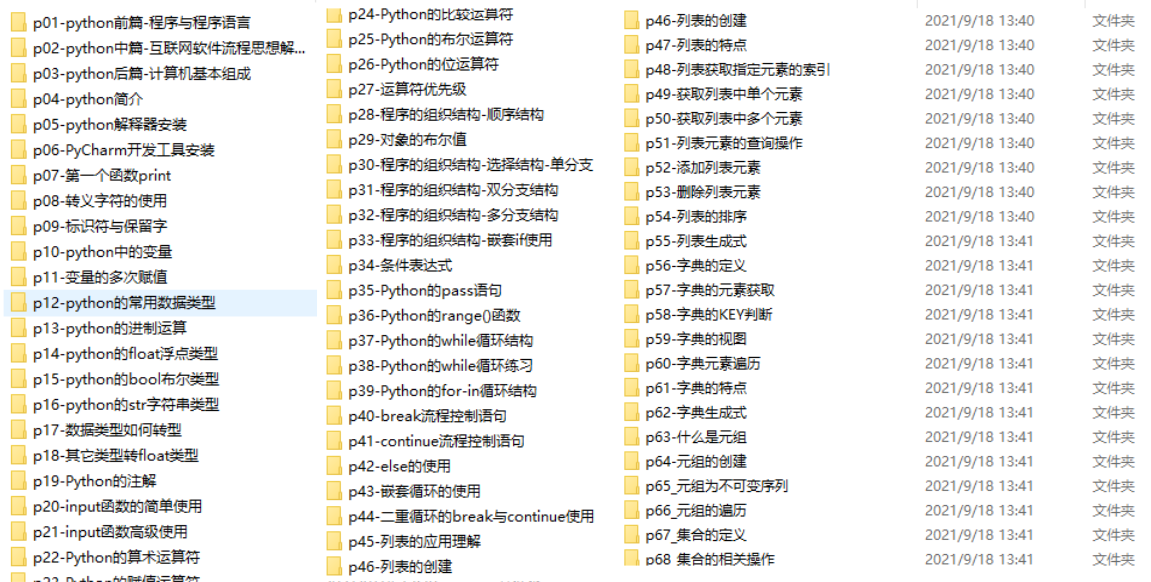

四、入门学习视频

我们在看视频学习的时候,不能光动眼动脑不动手,比较科学的学习方法是在理解之后运用它们,这时候练手项目就很适合了。

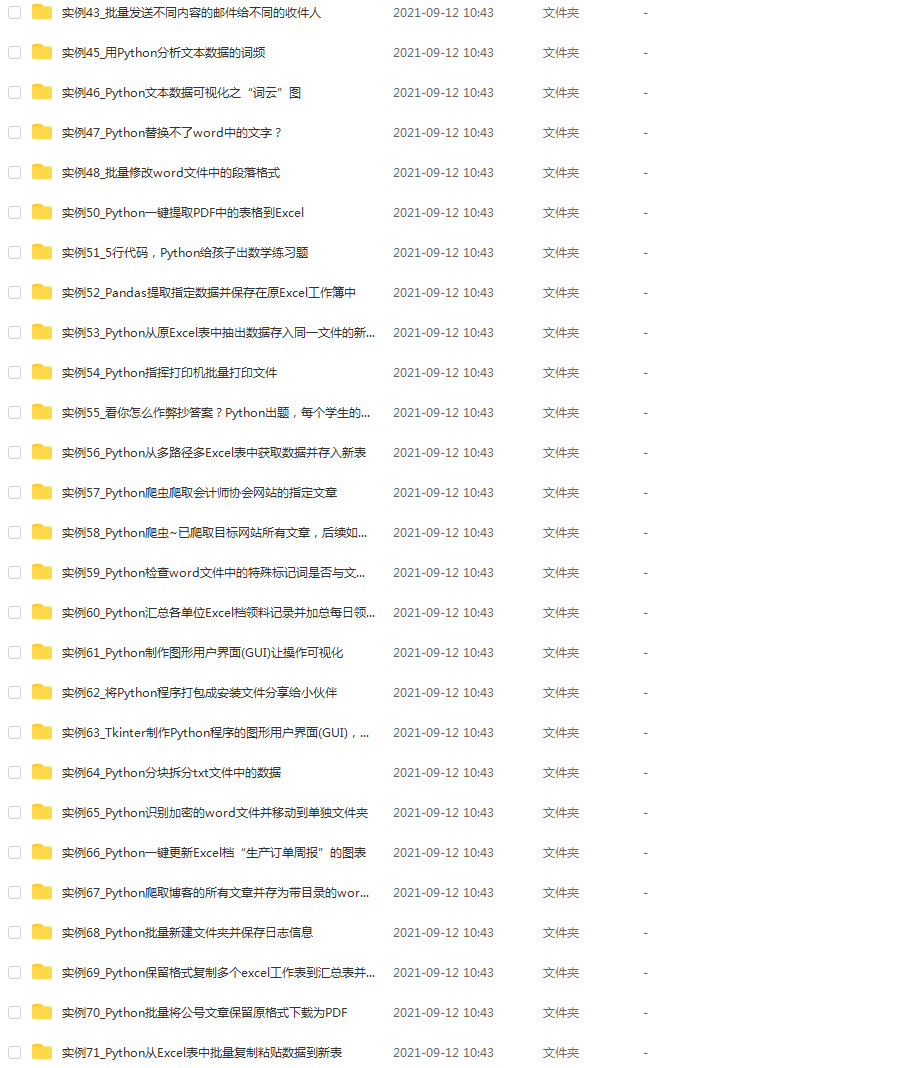

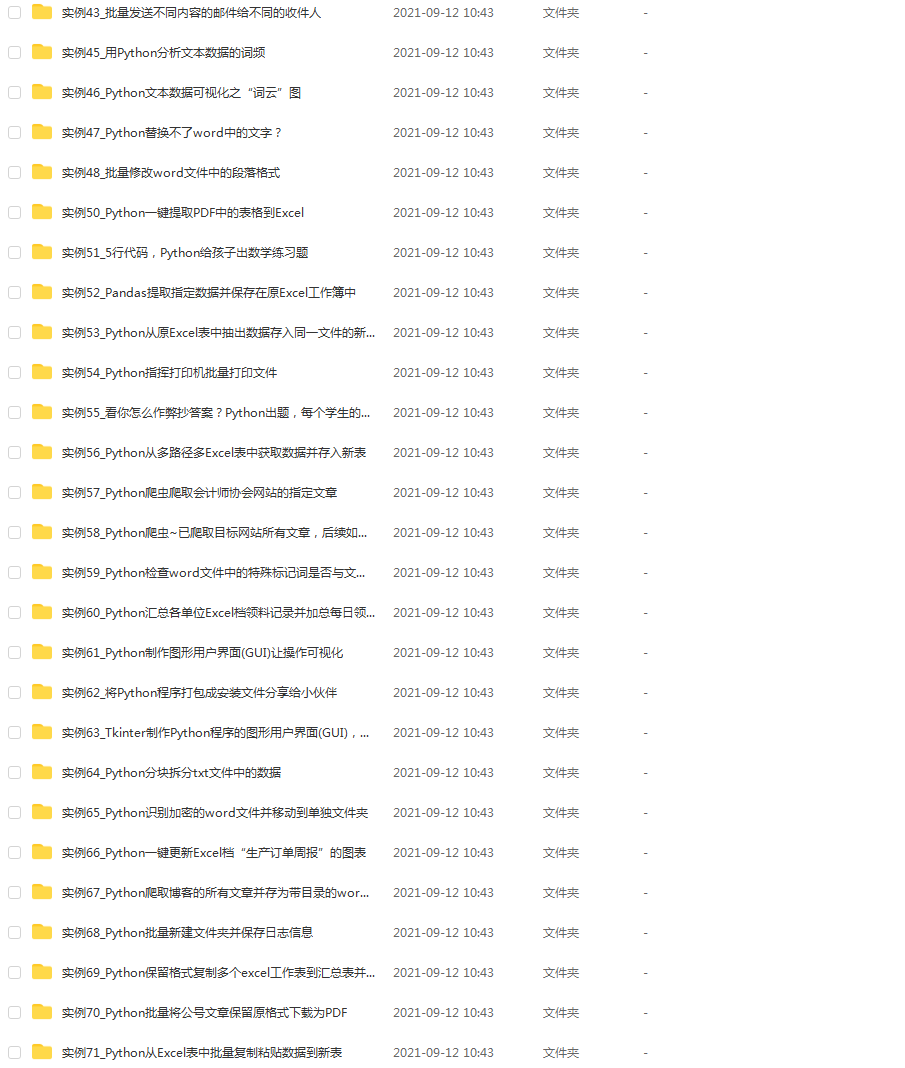

四、实战案例

光学理论是没用的,要学会跟着一起敲,要动手实操,才能将自己的所学运用到实际当中去,这时候可以搞点实战案例来学习。

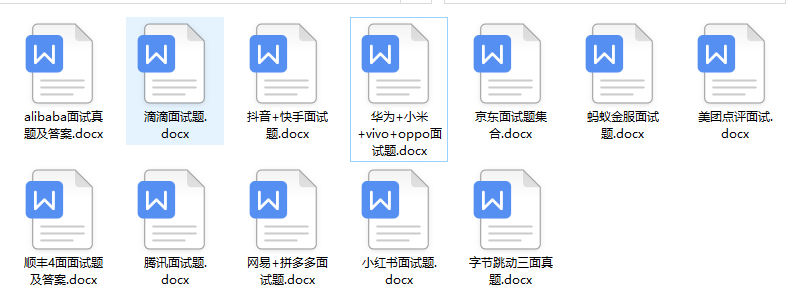

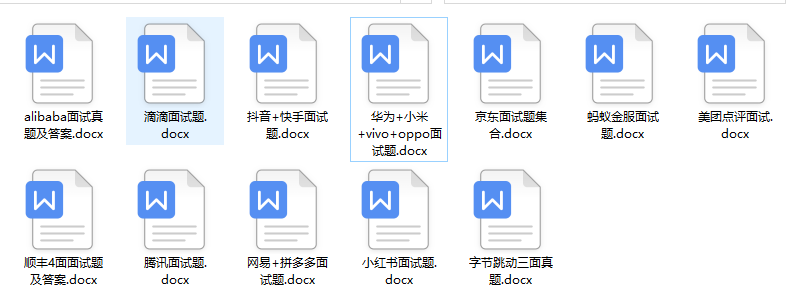

五、面试资料

我们学习Python必然是为了找到高薪的工作,下面这些面试题是来自阿里、腾讯、字节等一线互联网大厂最新的面试资料,并且有阿里大佬给出了权威的解答,刷完这一套面试资料相信大家都能找到满意的工作。

成为一个Python程序员专家或许需要花费数年时间,但是打下坚实的基础只要几周就可以,如果你按照我提供的学习路线以及资料有意识地去实践,你就有很大可能成功!

最后祝你好运!!!

网上学习资料一大堆,但如果学到的知识不成体系,遇到问题时只是浅尝辄止,不再深入研究,那么很难做到真正的技术提升。

一个人可以走的很快,但一群人才能走的更远!不论你是正从事IT行业的老鸟或是对IT行业感兴趣的新人,都欢迎加入我们的的圈子(技术交流、学习资源、职场吐槽、大厂内推、面试辅导),让我们一起学习成长!

- Code: https://github.com/aim-uofa/AdelaiDet

Deep Snake for Real-Time Instance Segmentation

- Paper: https://arxiv.org/abs/2001.01629
- Code: https://github.com/zju3dv/snake

Mask Encoding for Single Shot Instance Segmentation

- Paper: https://arxiv.org/abs/2003.11712
- Code: https://github.com/aim-uofa/AdelaiDet

===============================================================

Video Panoptic Segmentation

- Paper: https://arxiv.org/abs/2006.11339
- Code: https://github.com/mcahny/vps
- Dataset: https://www.dropbox.com/s/ecem4kq0fdkver4/cityscapes-vps-dataset-1.0.zip?dl=0

Pixel Consensus Voting for Panoptic Segmentation

- Paper: https://arxiv.org/abs/2004.01849
- Code: not yet released

BANet: Bidirectional Aggregation Network with Occlusion Handling for Panoptic Segmentation

- Paper: https://arxiv.org/abs/2003.14031
- Code: https://github.com/Mooonside/BANet

=================================================================

A Transductive Approach for Video Object Segmentation

- Paper: https://arxiv.org/abs/2004.07193
- Code: https://github.com/microsoft/transductive-vos.pytorch

State-Aware Tracker for Real-Time Video Object Segmentation

- Paper: https://arxiv.org/abs/2003.00482
- Code: https://github.com/MegviiDetection/video_analyst

Learning Fast and Robust Target Models for Video Object Segmentation

- Paper: https://arxiv.org/abs/2003.00908
- Code: https://github.com/andr345/frtm-vos

Learning Video Object Segmentation from Unlabeled Videos

- Paper: https://arxiv.org/abs/2003.05020
- Code: https://github.com/carrierlxk/MuG

================================================================

Superpixel Segmentation with Fully Convolutional Networks

- Paper: https://arxiv.org/abs/2003.12929
- Code: https://github.com/fuy34/superpixel_fcn

==================================================================

Interactive Object Segmentation with Inside-Outside Guidance

- Paper: http://openaccess.thecvf.com/content_CVPR_2020/papers/Zhang_Interactive_Object_Segmentation_With_Inside-Outside_Guidance_CVPR_2020_paper.pdf
- Code: https://github.com/shiyinzhang/Inside-Outside-Guidance
- Dataset: https://github.com/shiyinzhang/Pixel-ImageNet

==============================================================

AOWS: Adaptive and optimal network width search with latency constraints

- Paper: https://arxiv.org/abs/2005.10481
- Code: https://github.com/bermanmaxim/AOWS

Densely Connected Search Space for More Flexible Neural Architecture Search

- Paper: https://arxiv.org/abs/1906.09607
- Code: https://github.com/JaminFong/DenseNAS

MTL-NAS: Task-Agnostic Neural Architecture Search towards General-Purpose Multi-Task Learning

- Paper: https://arxiv.org/abs/2003.14058
- Code: https://github.com/bhpfelix/MTLNAS

FBNetV2: Differentiable Neural Architecture Search for Spatial and Channel Dimensions

- Paper: https://arxiv.org/abs/2004.05565
- Code: https://github.com/facebookresearch/mobile-vision

Neural Architecture Search for Lightweight Non-Local Networks

- Paper: https://arxiv.org/abs/2004.01961
- Code: https://github.com/LiYingwei/AutoNL

Rethinking Performance Estimation in Neural Architecture Search

- Paper: https://arxiv.org/abs/2005.09917
- Code: https://github.com/zhengxiawu/rethinking_performance_estimation_in_NAS
- Commentary 1: https://www.zhihu.com/question/372070853/answer/1035234510
- Commentary 2: https://zhuanlan.zhihu.com/p/111167409

CARS: Continuous Evolution for Efficient Neural Architecture Search

- Paper: https://arxiv.org/abs/1909.04977
- Code (to be released): https://github.com/huawei-noah/CARS

==============================================================

SEAN: Image Synthesis with Semantic Region-Adaptive Normalization

- Paper: https://arxiv.org/abs/1911.12861
- Code: https://github.com/ZPdesu/SEAN

Reusing Discriminators for Encoding: Towards Unsupervised Image-to-Image Translation

- Paper: http://openaccess.thecvf.com/content_CVPR_2020/html/Chen_Reusing_Discriminators_for_Encoding_Towards_Unsupervised_Image-to-Image_Translation_CVPR_2020_paper.html
- Code: https://github.com/alpc91/NICE-GAN-pytorch

Distribution-induced Bidirectional Generative Adversarial Network for Graph Representation Learning

- Paper: https://arxiv.org/abs/1912.01899
- Code: https://github.com/SsGood/DBGAN

PSGAN: Pose and Expression Robust Spatial-Aware GAN for Customizable Makeup Transfer

- Paper: https://arxiv.org/abs/1909.06956
- Code: https://github.com/wtjiang98/PSGAN

Semantically Multi-modal Image Synthesis

- Project page: http://seanseattle.github.io/SMIS
- Paper: https://arxiv.org/abs/2003.12697
- Code: https://github.com/Seanseattle/SMIS

Unpaired Portrait Drawing Generation via Asymmetric Cycle Mapping

- Paper: https://yiranran.github.io/files/CVPR2020_Unpaired%20Portrait%20Drawing%20Generation%20via%20Asymmetric%20Cycle%20Mapping.pdf
- Code: https://github.com/yiranran/Unpaired-Portrait-Drawing

Learning to Cartoonize Using White-box Cartoon Representations

- Paper: https://github.com/SystemErrorWang/White-box-Cartoonization/blob/master/paper/06791.pdf
- Project page: https://systemerrorwang.github.io/White-box-Cartoonization/
- Code: https://github.com/SystemErrorWang/White-box-Cartoonization
- Commentary: https://zhuanlan.zhihu.com/p/117422157
- Demo video: https://www.bilibili.com/video/av56708333

GAN Compression: Efficient Architectures for Interactive Conditional GANs

- Paper: https://arxiv.org/abs/2003.08936
- Code: https://github.com/mit-han-lab/gan-compression

Watch your Up-Convolution: CNN Based Generative Deep Neural Networks are Failing to Reproduce Spectral Distributions

- Paper: https://arxiv.org/abs/2003.01826
- Code: https://github.com/cc-hpc-itwm/UpConv

================================================================

High-Order Information Matters: Learning Relation and Topology for Occluded Person Re-Identification

- Paper: http://openaccess.thecvf.com/content_CVPR_2020/html/Wang_High-Order_Information_Matters_Learning_Relation_and_Topology_for_Occluded_Person_CVPR_2020_paper.html
- Code: https://github.com/wangguanan/HOReID

COCAS: A Large-Scale Clothes Changing Person Dataset for Re-identification

- Paper: https://arxiv.org/abs/2005.07862
- Dataset: not yet available

Transferable, Controllable, and Inconspicuous Adversarial Attacks on Person Re-identification With Deep Mis-Ranking

- Paper: https://arxiv.org/abs/2004.04199
- Code: https://github.com/whj363636/Adversarial-attack-on-Person-ReID-With-Deep-Mis-Ranking

Pose-guided Visible Part Matching for Occluded Person ReID

- Paper: https://arxiv.org/abs/2004.00230
- Code: https://github.com/hh23333/PVPM

Weakly supervised discriminative feature learning with state information for person identification

- Paper: https://arxiv.org/abs/2002.11939
- Code: https://github.com/KovenYu/state-information

==========================================================================

PointASNL: Robust Point Clouds Processing using Nonlocal Neural Networks with Adaptive Sampling

- Paper: https://arxiv.org/abs/2003.00492
- Code: https://github.com/yanx27/PointASNL

Global-Local Bidirectional Reasoning for Unsupervised Representation Learning of 3D Point Clouds

- Paper: https://arxiv.org/abs/2003.12971
- Code: https://github.com/raoyongming/PointGLR

Grid-GCN for Fast and Scalable Point Cloud Learning

- Paper: https://arxiv.org/abs/1912.02984
- Code: https://github.com/Xharlie/Grid-GCN

FPConv: Learning Local Flattening for Point Convolution

- Paper: https://arxiv.org/abs/2002.10701
- Code: https://github.com/lyqun/FPConv

PointAugment: an Auto-Augmentation Framework for Point Cloud Classification

- Paper: https://arxiv.org/abs/2002.10876
- Code (to be released): https://github.com/liruihui/PointAugment/

RandLA-Net: Efficient Semantic Segmentation of Large-Scale Point Clouds

- Paper: https://arxiv.org/abs/1911.11236
- Code: https://github.com/QingyongHu/RandLA-Net
- Commentary: https://zhuanlan.zhihu.com/p/105433460

Weakly Supervised Semantic Point Cloud Segmentation: Towards 10X Fewer Labels

- Paper: https://arxiv.org/abs/2004.04091
- Code: https://github.com/alex-xun-xu/WeakSupPointCloudSeg

PolarNet: An Improved Grid Representation for Online LiDAR Point Clouds Semantic Segmentation

- Paper: https://arxiv.org/abs/2003.14032
- Code: https://github.com/edwardzhou130/PolarSeg

Learning to Segment 3D Point Clouds in 2D Image Space

- Paper: https://arxiv.org/abs/2003.05593
- Code: https://github.com/WPI-VISLab/Learning-to-Segment-3D-Point-Clouds-in-2D-Image-Space

PointGroup: Dual-Set Point Grouping for 3D Instance Segmentation

- Paper: https://arxiv.org/abs/2004.01658
- Code: https://github.com/Jia-Research-Lab/PointGroup

Feature-metric Registration: A Fast Semi-supervised Approach for Robust Point Cloud Registration without Correspondences

- Paper: https://arxiv.org/abs/2005.01014
- Code: https://github.com/XiaoshuiHuang/fmr

D3Feat: Joint Learning of Dense Detection and Description of 3D Local Features

- Paper: https://arxiv.org/abs/2003.03164
- Code: https://github.com/XuyangBai/D3Feat

RPM-Net: Robust Point Matching using Learned Features

- Paper: https://arxiv.org/abs/2003.13479
- Code: https://github.com/yewzijian/RPMNet

Cascaded Refinement Network for Point Cloud Completion

- Paper: https://arxiv.org/abs/2004.03327
- Code: https://github.com/xiaogangw/cascaded-point-completion

P2B: Point-to-Box Network for 3D Object Tracking in Point Clouds

- Paper: https://arxiv.org/abs/2005.13888
- Code: https://github.com/HaozheQi/P2B

An Efficient PointLSTM for Point Clouds Based Gesture Recognition

- Paper: http://openaccess.thecvf.com/content_CVPR_2020/html/Min_An_Efficient_PointLSTM_for_Point_Clouds_Based_Gesture_Recognition_CVPR_2020_paper.html
- Code: https://github.com/Blueprintf/pointlstm-gesture-recognition-pytorch

=============================================================

CurricularFace: Adaptive Curriculum Learning Loss for Deep Face Recognition

- Paper: https://arxiv.org/abs/2004.00288
- Code: https://github.com/HuangYG123/CurricularFace

Learning Meta Face Recognition in Unseen Domains

- Paper: https://arxiv.org/abs/2003.07733
- Code: https://github.com/cleardusk/MFR
- Commentary: https://mp.weixin.qq.com/s/YZoEnjpnlvb90qSI3xdJqQ

Searching Central Difference Convolutional Networks for Face Anti-Spoofing

- Paper: https://arxiv.org/abs/2003.04092
- Code: https://github.com/ZitongYu/CDCN

Suppressing Uncertainties for Large-Scale Facial Expression Recognition

- Paper: https://arxiv.org/abs/2002.10392
- Code (to be released): https://github.com/kaiwang960112/Self-Cure-Network

Rotate-and-Render: Unsupervised Photorealistic Face Rotation from Single-View Images

- Paper: https://arxiv.org/abs/2003.08124
- Code: https://github.com/Hangz-nju-cuhk/Rotate-and-Render

AvatarMe: Realistically Renderable 3D Facial Reconstruction “in-the-wild”

- Paper: https://arxiv.org/abs/2003.13845
- Dataset: https://github.com/lattas/AvatarMe

FaceScape: a Large-scale High Quality 3D Face Dataset and Detailed Riggable 3D Face Prediction

- Paper: https://arxiv.org/abs/2003.13989
- Code: https://github.com/zhuhao-nju/facescape

========================================================================

TransMoMo: Invariance-Driven Unsupervised Video Motion Retargeting

- Project page: https://yzhq97.github.io/transmomo/
- Paper: https://arxiv.org/abs/2003.14401
- Code: https://github.com/yzhq97/transmomo.pytorch

HigherHRNet: Scale-Aware Representation Learning for Bottom-Up Human Pose Estimation

- Paper: https://arxiv.org/abs/1908.10357
- Code: https://github.com/HRNet/HigherHRNet-Human-Pose-Estimation

The Devil is in the Details: Delving into Unbiased Data Processing for Human Pose Estimation

- Paper: https://arxiv.org/abs/1911.07524
- Code: https://github.com/HuangJunJie2017/UDP-Pose
- Commentary: https://zhuanlan.zhihu.com/p/92525039

Distribution-Aware Coordinate Representation for Human Pose Estimation

- Project page: https://ilovepose.github.io/coco/
- Paper: https://arxiv.org/abs/1910.06278
- Code: https://github.com/ilovepose/DarkPose

Cascaded Deep Monocular 3D Human Pose Estimation With Evolutionary Training Data

- Paper: https://arxiv.org/abs/2006.07778
- Code: https://github.com/Nicholasli1995/EvoSkeleton

Fusing Wearable IMUs with Multi-View Images for Human Pose Estimation: A Geometric Approach

- Project page: https://www.zhe-zhang.com/cvpr2020
- Paper: https://arxiv.org/abs/2003.11163
- Code: https://github.com/CHUNYUWANG/imu-human-pose-pytorch

Bodies at Rest: 3D Human Pose and Shape Estimation from a Pressure Image using Synthetic Data

- Paper: https://arxiv.org/abs/2004.01166
- Code: https://github.com/Healthcare-Robotics/bodies-at-rest
- Dataset: https://dataverse.harvard.edu/dataset.xhtml?persistentId=doi:10.7910/DVN/KOA4ML

Self-Supervised 3D Human Pose Estimation via Part Guided Novel Image Synthesis

- Project page: http://val.cds.iisc.ac.in/pgp-human/
- Paper: https://arxiv.org/abs/2004.04400

Compressed Volumetric Heatmaps for Multi-Person 3D Pose Estimation

- Paper: https://arxiv.org/abs/2004.00329
- Code: https://github.com/fabbrimatteo/LoCO

VIBE: Video Inference for Human Body Pose and Shape Estimation

- Paper: https://arxiv.org/abs/1912.05656
- Code: https://github.com/mkocabas/VIBE

Back to the Future: Joint Aware Temporal Deep Learning 3D Human Pose Estimation

- Paper: https://arxiv.org/abs/2002.11251
- Code: https://github.com/vnmr/JointVideoPose3D

Cross-View Tracking for Multi-Human 3D Pose Estimation at over 100 FPS

- Paper: https://arxiv.org/abs/2003.03972
- Dataset: not yet available

===============================================================

Correlating Edge, Pose with Parsing

- Paper: https://arxiv.org/abs/2005.01431
- Code: https://github.com/ziwei-zh/CorrPM

=================================================================

STEFANN: Scene Text Editor using Font Adaptive Neural Network

- Project page: https://prasunroy.github.io/stefann/
- Paper: http://openaccess.thecvf.com/content_CVPR_2020/html/Roy_STEFANN_Scene_Text_Editor_Using_Font_Adaptive_Neural_Network_CVPR_2020_paper.html
- Code: https://github.com/prasunroy/stefann
- Dataset: https://drive.google.com/open?id=1sEDiX_jORh2X-HSzUnjIyZr-G9LJIw1k

ContourNet: Taking a Further Step Toward Accurate Arbitrary-Shaped Scene Text Detection

- Paper: http://openaccess.thecvf.com/content_CVPR_2020/papers/Wang_ContourNet_Taking_a_Further_Step_Toward_Accurate_Arbitrary-Shaped_Scene_Text_CVPR_2020_paper.pdf
- Code: https://github.com/wangyuxin87/ContourNet

UnrealText: Synthesizing Realistic Scene Text Images from the Unreal World

- Paper: https://arxiv.org/abs/2003.10608
- Code and dataset: https://github.com/Jyouhou/UnrealText/

ABCNet: Real-time Scene Text Spotting with Adaptive Bezier-Curve Network

- Paper: https://arxiv.org/abs/2002.10200
- Code (to be released): https://github.com/Yuliang-Liu/bezier_curve_text_spotting
- Code (to be released): https://github.com/aim-uofa/adet

Deep Relational Reasoning Graph Network for Arbitrary Shape Text Detection

- Paper: https://arxiv.org/abs/2003.07493
- Code: https://github.com/GXYM/DRRG

=================================================================

SEED: Semantics Enhanced Encoder-Decoder Framework for Scene Text Recognition

- Paper: https://arxiv.org/abs/2005.10977
- Code: https://github.com/Pay20Y/SEED

UnrealText: Synthesizing Realistic Scene Text Images from the Unreal World

- Paper: https://arxiv.org/abs/2003.10608
- Code and dataset: https://github.com/Jyouhou/UnrealText/

ABCNet: Real-time Scene Text Spotting with Adaptive Bezier-Curve Network

- Paper: https://arxiv.org/abs/2002.10200
- Code (to be released): https://github.com/aim-uofa/adet

Learn to Augment: Joint Data Augmentation and Network Optimization for Text Recognition

- Paper: https://arxiv.org/abs/2003.06606
- Code: https://github.com/Canjie-Luo/Text-Image-Augmentation

=====================================================================

SuperGlue: Learning Feature Matching with Graph Neural Networks

- Paper: https://arxiv.org/abs/1911.11763
- Code: https://github.com/magicleap/SuperGluePretrainedNetwork

===============================================================

Closed-Loop Matters: Dual Regression Networks for Single Image Super-Resolution

- Paper: http://openaccess.thecvf.com/content_CVPR_2020/html/Guo_Closed-Loop_Matters_Dual_Regression_Networks_for_Single_Image_Super-Resolution_CVPR_2020_paper.html
- Code: https://github.com/guoyongcs/DRN

Learning Texture Transformer Network for Image Super-Resolution

- Paper: https://arxiv.org/abs/2006.04139
- Code: https://github.com/FuzhiYang/TTSR

Image Super-Resolution with Cross-Scale Non-Local Attention and Exhaustive Self-Exemplars Mining

- Paper: https://arxiv.org/abs/2006.01424
- Code: https://github.com/SHI-Labs/Cross-Scale-Non-Local-Attention

Structure-Preserving Super Resolution with Gradient Guidance

- Paper: https://arxiv.org/abs/2003.13081
- Code: https://github.com/Maclory/SPSR

Rethinking Data Augmentation for Image Super-resolution: A Comprehensive Analysis and a New Strategy

- Paper: https://arxiv.org/abs/2004.00448
- Code: https://github.com/clovaai/cutblur

TDAN: Temporally-Deformable Alignment Network for Video Super-Resolution

- Paper: https://arxiv.org/abs/1812.02898
- Code: https://github.com/YapengTian/TDAN-VSR-CVPR-2020

Space-Time-Aware Multi-Resolution Video Enhancement

- Project page: https://alterzero.github.io/projects/STAR.html
- Paper: http://arxiv.org/abs/2003.13170
- Code: https://github.com/alterzero/STARnet

Zooming Slow-Mo: Fast and Accurate One-Stage Space-Time Video Super-Resolution

- Paper: https://arxiv.org/abs/2002.11616
- Code: https://github.com/Mukosame/Zooming-Slow-Mo-CVPR-2020

==================================================================

DMCP: Differentiable Markov Channel Pruning for Neural Networks

- Paper: https://arxiv.org/abs/2005.03354
- Code: https://github.com/zx55/dmcp

Forward and Backward Information Retention for Accurate Binary Neural Networks

- Paper: https://arxiv.org/abs/1909.10788
- Code: https://github.com/htqin/IR-Net

Towards Efficient Model Compression via Learned Global Ranking

- Paper: https://arxiv.org/abs/1904.12368
- Code: https://github.com/cmu-enyac/LeGR

HRank: Filter Pruning using High-Rank Feature Map

- Paper: http://arxiv.org/abs/2002.10179
- Code: https://github.com/lmbxmu/HRank

GAN Compression: Efficient Architectures for Interactive Conditional GANs

- Paper: https://arxiv.org/abs/2003.08936
- Code: https://github.com/mit-han-lab/gan-compression

Group Sparsity: The Hinge Between Filter Pruning and Decomposition for Network Compression

- Paper: https://arxiv.org/abs/2003.08935
- Code: https://github.com/ofsoundof/group_sparsity

====================================================================

Oops! Predicting Unintentional Action in Video

- Project page: https://oops.cs.columbia.edu/
- Paper: https://arxiv.org/abs/1911.11206
- Code: https://github.com/cvlab-columbia/oops
- Dataset: https://oops.cs.columbia.edu/data

PREDICT & CLUSTER: Unsupervised Skeleton Based Action Recognition

- Paper: https://arxiv.org/abs/1911.12409
- Code: https://github.com/shlizee/Predict-Cluster

Intra- and Inter-Action Understanding via Temporal Action Parsing

- Paper: https://arxiv.org/abs/2005.10229
- Project page and dataset: https://sdolivia.github.io/TAPOS/

3DV: 3D Dynamic Voxel for Action Recognition in Depth Video

- Paper: https://arxiv.org/abs/2005.05501
- Code: https://github.com/3huo/3DV-Action

FineGym: A Hierarchical Video Dataset for Fine-grained Action Understanding

- Project page: https://sdolivia.github.io/FineGym/
- Paper: https://arxiv.org/abs/2004.06704

TEA: Temporal Excitation and Aggregation for Action Recognition

- Paper: https://arxiv.org/abs/2004.01398
- Code: https://github.com/Phoenix1327/tea-action-recognition

X3D: Expanding Architectures for Efficient Video Recognition

- Paper: https://arxiv.org/abs/2004.04730
- Code: https://github.com/facebookresearch/SlowFast

Temporal Pyramid Network for Action Recognition

- Project page: https://decisionforce.github.io/TPN
- Paper: https://arxiv.org/abs/2004.03548
- Code: https://github.com/decisionforce/TPN

Disentangling and Unifying Graph Convolutions for Skeleton-Based Action Recognition

- Paper: https://arxiv.org/abs/2003.14111
- Code: https://github.com/kenziyuliu/ms-g3d

===============================================================


BiFuse: Monocular 360° Depth Estimation via Bi-Projection Fusion

- Paper: http://openaccess.thecvf.com/content_CVPR_2020/papers/Wang_BiFuse_Monocular_360_Depth_Estimation_via_Bi-Projection_Fusion_CVPR_2020_paper.pdf
- Code: https://github.com/Yeh-yu-hsuan/BiFuse

Focus on defocus: bridging the synthetic to real domain gap for depth estimation

- Paper: https://arxiv.org/abs/2005.09623
- Code: https://github.com/dvl-tum/defocus-net

Bi3D: Stereo Depth Estimation via Binary Classifications

- Paper: https://arxiv.org/abs/2005.07274
- Code: https://github.com/NVlabs/Bi3D

AANet: Adaptive Aggregation Network for Efficient Stereo Matching

- Paper: https://arxiv.org/abs/2004.09548
- Code: https://github.com/haofeixu/aanet

Towards Better Generalization: Joint Depth-Pose Learning without PoseNet

- Paper: https://github.com/B1ueber2y/TrianFlow
- Code: https://github.com/B1ueber2y/TrianFlow

On the uncertainty of self-supervised monocular depth estimation

- Paper: https://arxiv.org/abs/2005.06209
- Code: https://github.com/mattpoggi/mono-uncertainty

3D Packing for Self-Supervised Monocular Depth Estimation

- Paper: https://arxiv.org/abs/1905.02693
- Code: https://github.com/TRI-ML/packnet-sfm
- Demo video: https://www.bilibili.com/video/av70562892/

Domain Decluttering: Simplifying Images to Mitigate Synthetic-Real Domain Shift and Improve Depth Estimation

- Paper: https://arxiv.org/abs/2002.12114
- Code: https://github.com/yzhao520/ARC

===================================================================

PVN3D: A Deep Point-wise 3D Keypoints Voting Network for 6DoF Pose Estimation

- Paper: http://openaccess.thecvf.com/content_CVPR_2020/papers/He_PVN3D_A_Deep_Point-Wise_3D_Keypoints_Voting_Network_for_6DoF_CVPR_2020_paper.pdf
- Code: https://github.com/ethnhe/PVN3D

MoreFusion: Multi-object Reasoning for 6D Pose Estimation from Volumetric Fusion

- Paper: https://arxiv.org/abs/2004.04336
- Code: https://github.com/wkentaro/morefusion

EPOS: Estimating 6D Pose of Objects with Symmetries

- Project page: http://cmp.felk.cvut.cz/epos
- Paper: https://arxiv.org/abs/2004.00605

G2L-Net: Global to Local Network for Real-time 6D Pose Estimation with Embedding Vector Features

- Paper: https://arxiv.org/abs/2003.11089
- Code: https://github.com/DC1991/G2L_Net

===============================================================

HOPE-Net: A Graph-based Model for Hand-Object Pose Estimation

- Paper: https://arxiv.org/abs/2004.00060
- Project page: http://vision.sice.indiana.edu/projects/hopenet

Monocular Real-time Hand Shape and Motion Capture using Multi-modal Data

- Paper: https://arxiv.org/abs/2003.09572
- Code: https://github.com/CalciferZh/minimal-hand

================================================================

JL-DCF: Joint Learning and Densely-Cooperative Fusion Framework for RGB-D Salient Object Detection

- Paper: https://arxiv.org/abs/2004.08515
- Code: https://github.com/kerenfu/JLDCF/

UC-Net: Uncertainty Inspired RGB-D Saliency Detection via Conditional Variational Autoencoders

- Project page: http://dpfan.net/d3netbenchmark/
- Paper: https://arxiv.org/abs/2004.05763
- Code: https://github.com/JingZhang617/UCNet

=============================================================

A Physics-based Noise Formation Model for Extreme Low-light Raw Denoising

- Paper: https://arxiv.org/abs/2003.12751
- Code: https://github.com/Vandermode/NoiseModel

CycleISP: Real Image Restoration via Improved Data Synthesis

- Paper: https://arxiv.org/abs/2003.07761
- Code: https://github.com/swz30/CycleISP

=============================================================

Multi-Scale Progressive Fusion Network for Single Image Deraining

- Paper: https://arxiv.org/abs/2003.10985
- Code: https://github.com/kuihua/MSPFN

Detail-recovery Image Deraining via Context Aggregation Networks

- Paper: https://openaccess.thecvf.com/content_CVPR_2020/html/Deng_Detail-recovery_Image_Deraining_via_Context_Aggregation_Networks_CVPR_2020_paper.html
- Code: https://github.com/Dengsgithub/DRD-Net

==============================================================

Cascaded Deep Video Deblurring Using Temporal Sharpness Prior

- Project page: https://csbhr.github.io/projects/cdvd-tsp/index.html
- Paper: https://arxiv.org/abs/2004.02501
- Code: https://github.com/csbhr/CDVD-TSP

=============================================================

Domain Adaptation for Image Dehazing

- Paper: https://arxiv.org/abs/2005.04668
- Code: https://github.com/HUSTSYJ/DA_dahazing

Multi-Scale Boosted Dehazing Network with Dense Feature Fusion

- Paper: https://arxiv.org/abs/2004.13388
- Code: https://github.com/BookerDeWitt/MSBDN-DFF

===================================================================

ASLFeat: Learning Local Features of Accurate Shape and Localization

- Paper: https://arxiv.org/abs/2003.10071
- Code: https://github.com/lzx551402/aslfeat

====================================================================

VC R-CNN: Visual Commonsense R-CNN

- Paper: https://arxiv.org/abs/2002.12204
- Code: https://github.com/Wangt-CN/VC-R-CNN

========================================================================

Hierarchical Conditional Relation Networks for Video Question Answering

- Paper: https://arxiv.org/abs/2002.10698
- Code: https://github.com/thaolmk54/hcrn-videoqa

=================================================================

Towards Learning a Generic Agent for Vision-and-Language Navigation via Pre-training

- Paper: https://arxiv.org/abs/2002.10638
- Code (to be released): https://github.com/weituo12321/PREVALENT

===============================================================

Learning for Video Compression with Hierarchical Quality and Recurrent Enhancement

- Paper: https://arxiv.org/abs/2003.01966
- Code: https://github.com/RenYang-home/HLVC

===============================================================

AdaCoF: Adaptive Collaboration of Flows for Video Frame Interpolation

- Paper: https://arxiv.org/abs/1907.10244
- Code: https://github.com/HyeongminLEE/AdaCoF-pytorch

FeatureFlow: Robust Video Interpolation via Structure-to-Texture Generation

- Paper: http://openaccess.thecvf.com/content_CVPR_2020/html/Gui_FeatureFlow_Robust_Video_Interpolation_via_Structure-to-Texture_Generation_CVPR_2020_paper.html
- Code: https://github.com/CM-BF/FeatureFlow

Zooming Slow-Mo: Fast and Accurate One-Stage Space-Time Video Super-Resolution

- Paper: https://arxiv.org/abs/2002.11616
- Code: https://github.com/Mukosame/Zooming-Slow-Mo-CVPR-2020

Space-Time-Aware Multi-Resolution Video Enhancement

- Project page: https://alterzero.github.io/projects/STAR.html
- Paper: http://arxiv.org/abs/2003.13170
- Code: https://github.com/alterzero/STARnet

Scene-Adaptive Video Frame Interpolation via Meta-Learning

- Paper: https://arxiv.org/abs/2004.00779
- Code: https://github.com/myungsub/meta-interpolation

Softmax Splatting for Video Frame Interpolation

- Project page: http://sniklaus.com/papers/softsplat
- Paper: https://arxiv.org/abs/2003.05534
- Code: https://github.com/sniklaus/softmax-splatting

===============================================================

Diversified Arbitrary Style Transfer via Deep Feature Perturbation

- Paper: https://arxiv.org/abs/1909.08223
- Code: https://github.com/EndyWon/Deep-Feature-Perturbation

Collaborative Distillation for Ultra-Resolution Universal Style Transfer

- Paper: https://arxiv.org/abs/2003.08436
- Code: https://github.com/mingsun-tse/collaborative-distillation

================================================================

Inter-Region Affinity Distillation for Road Marking Segmentation

- Paper: https://arxiv.org/abs/2004.05304
- Code: https://github.com/cardwing/Codes-for-IntRA-KD

=========================================================================

PPDM: Parallel Point Detection and Matching for Real-time Human-Object Interaction Detection

- Paper: https://arxiv.org/abs/1912.12898
- Code: https://github.com/YueLiao/PPDM

Detailed 2D-3D Joint Representation for Human-Object Interaction

- Paper: https://arxiv.org/abs/2004.08154
- Code: https://github.com/DirtyHarryLYL/DJ-RN

Cascaded Human-Object Interaction Recognition

- Paper: https://arxiv.org/abs/2003.04262
- Code: https://github.com/tfzhou/C-HOI

VSGNet: Spatial Attention Network for Detecting Human Object Interactions Using Graph Convolutions

- Paper: https://arxiv.org/abs/2003.05541
- Code: https://github.com/ASMIftekhar/VSGNet

===============================================================

The Garden of Forking Paths: Towards Multi-Future Trajectory Prediction

- Paper: https://arxiv.org/abs/1912.06445
- Code: https://github.com/JunweiLiang/Multiverse
- Dataset: https://next.cs.cmu.edu/multiverse/

Social-STGCNN: A Social Spatio-Temporal Graph Convolutional Neural Network for Human Trajectory Prediction

- Paper: https://arxiv.org/abs/2002.11927
- Code: https://github.com/abduallahmohamed/Social-STGCNN

===============================================================

Collaborative Motion Prediction via Neural Motion Message Passing

- Paper: https://arxiv.org/abs/2003.06594
- Code: https://github.com/PhyllisH/NMMP

MotionNet: Joint Perception and Motion Prediction for Autonomous Driving Based on Bird’s Eye View Maps

- Paper: https://arxiv.org/abs/2003.06754
- Code: https://github.com/pxiangwu/MotionNet

===============================================================

Learning by Analogy: Reliable Supervision from Transformations for Unsupervised Optical Flow Estimation

- Paper: https://arxiv.org/abs/2003.13045
- Code: https://github.com/lliuz/ARFlow

===============================================================

Evade Deep Image Retrieval by Stashing Private Images in the Hash Space

- Paper: http://openaccess.thecvf.com/content_CVPR_2020/html/Xiao_Evade_Deep_Image_Retrieval_by_Stashing_Private_Images_in_the_CVPR_2020_paper.html
- Code: https://github.com/sugarruy/hashstash

===============================================================

Towards Photo-Realistic Virtual Try-On by Adaptively Generating↔Preserving Image Content

- Paper: https://arxiv.org/abs/2003.05863
- Code: https://github.com/switchablenorms/DeepFashion_Try_On

==============================================================

Single-Image HDR Reconstruction by Learning to Reverse the Camera Pipeline

- Project page: https://www.cmlab.csie.ntu.edu.tw/~yulunliu/SingleHDR
- Paper: https://www.cmlab.csie.ntu.edu.tw/~yulunliu/SingleHDR_/00942.pdf
- Code: https://github.com/alex04072000/SingleHDR

===============================================================

Enhancing Cross-Task Black-Box Transferability of Adversarial Examples With Dispersion Reduction

- Paper: https://openaccess.thecvf.com/content_CVPR_2020/papers/Lu_Enhancing_Cross-Task_Black-Box_Transferability_of_Adversarial_Examples_With_Dispersion_Reduction_CVPR_2020_paper.pdf
- Code: https://github.com/erbloo/dr_cvpr20

Towards Large yet Imperceptible Adversarial Image Perturbations with Perceptual Color Distance

- Paper: https://arxiv.org/abs/1911.02466
- Code: https://github.com/ZhengyuZhao/PerC-Adversarial

===============================================================

Unsupervised Learning of Probably Symmetric Deformable 3D Objects from Images in the Wild

- CVPR 2020 Best Paper
- Project page: https://elliottwu.com/projects/unsup3d/
- Paper: https://arxiv.org/abs/1911.11130
- Code: https://github.com/elliottwu/unsup3d

Multi-Level Pixel-Aligned Implicit Function for High-Resolution 3D Human Digitization

- Homepage: https://shunsukesaito.github.io/PIFuHD/
- Paper: https://arxiv.org/abs/2004.00452
- Code: https://github.com/facebookresearch/pifuhd

TailorNet: Predicting Clothing in 3D as a Function of Human Pose, Shape and Garment Style

- Paper: http://openaccess.thecvf.com/content_CVPR_2020/papers/Patel_TailorNet_Predicting_Clothing_in_3D_as_a_Function_of_Human_CVPR_2020_paper.pdf
- Code: https://github.com/chaitanya100100/TailorNet
- Dataset: https://github.com/zycliao/TailorNet_dataset

Implicit Functions in Feature Space for 3D Shape Reconstruction and Completion

- Paper: http://openaccess.thecvf.com/content_CVPR_2020/papers/Chibane_Implicit_Functions_in_Feature_Space_for_3D_Shape_Reconstruction_and_CVPR_2020_paper.pdf
- Code: https://github.com/jchibane/if-net

Learning to Transfer Texture From Clothing Images to 3D Humans

- Paper: http://openaccess.thecvf.com/content_CVPR_2020/papers/Mir_Learning_to_Transfer_Texture_From_Clothing_Images_to_3D_Humans_CVPR_2020_paper.pdf
- Code: https://github.com/aymenmir1/pix2surf

===============================================================

Uncertainty-Aware CNNs for Depth Completion: Uncertainty from Beginning to End

- Paper: https://arxiv.org/abs/2006.03349
- Code: https://github.com/abdo-eldesokey/pncnn

=================================================================

3D Sketch-aware Semantic Scene Completion via Semi-supervised Structure Prior

- Paper: https://arxiv.org/abs/2003.14052
- Code: https://github.com/charlesCXK/TorchSSC

==================================================================

Syntax-Aware Action Targeting for Video Captioning

- Paper: http://openaccess.thecvf.com/content_CVPR_2020/papers/Zheng_Syntax-Aware_Action_Targeting_for_Video_Captioning_CVPR_2020_paper.pdf
- Code: https://github.com/SydCaption/SAAT

===============================================================

Holistically-Attracted Wireframe Parsing

- Paper: http://openaccess.thecvf.com/content_CVPR_2020/html/Xue_Holistically-Attracted_Wireframe_Parsing_CVPR_2020_paper.html
- Code: https://github.com/cherubicXN/hawp

==============================================================

OASIS: A Large-Scale Dataset for Single Image 3D in the Wild

- Paper: https://arxiv.org/abs/2007.13215
- Dataset: https://oasis.cs.princeton.edu/

STEFANN: Scene Text Editor using Font Adaptive Neural Network

- Homepage: https://prasunroy.github.io/stefann/
- Paper: http://openaccess.thecvf.com/content_CVPR_2020/html/Roy_STEFANN_Scene_Text_Editor_Using_Font_Adaptive_Neural_Network_CVPR_2020_paper.html
- Code: https://github.com/prasunroy/stefann
- Dataset: https://drive.google.com/open?id=1sEDiX_jORh2X-HSzUnjIyZr-G9LJIw1k

Interactive Object Segmentation with Inside-Outside Guidance

- Paper (PDF): http://openaccess.thecvf.com/content_CVPR_2020/papers/Zhang_Interactive_Object_Segmentation_With_Inside-Outside_Guidance_CVPR_2020_paper.pdf
- Code: https://github.com/shiyinzhang/Inside-Outside-Guidance
- Dataset: https://github.com/shiyinzhang/Pixel-ImageNet

Video Panoptic Segmentation

- Paper: https://arxiv.org/abs/2006.11339
- Code: https://github.com/mcahny/vps
- Dataset: https://www.dropbox.com/s/ecem4kq0fdkver4/cityscapes-vps-dataset-1.0.zip?dl=0

FSS-1000: A 1000-Class Dataset for Few-Shot Segmentation

- Paper: http://openaccess.thecvf.com/content_CVPR_2020/html/Li_FSS-1000_A_1000-Class_Dataset_for_Few-Shot_Segmentation_CVPR_2020_paper.html
- Code: https://github.com/HKUSTCV/FSS-1000
- Dataset: https://github.com/HKUSTCV/FSS-1000

3D-ZeF: A 3D Zebrafish Tracking Benchmark Dataset

- Homepage: https://vap.aau.dk/3d-zef/
- Paper: https://arxiv.org/abs/2006.08466
- Code: https://bitbucket.org/aauvap/3d-zef/src/master/
- Dataset: https://motchallenge.net/data/3D-ZeF20

TailorNet: Predicting Clothing in 3D as a Function of Human Pose, Shape and Garment Style

- Paper: http://openaccess.thecvf.com/content_CVPR_2020/papers/Patel_TailorNet_Predicting_Clothing_in_3D_as_a_Function_of_Human_CVPR_2020_paper.pdf
- Code: https://github.com/chaitanya100100/TailorNet
- Dataset: https://github.com/zycliao/TailorNet_dataset

Oops! Predicting Unintentional Action in Video

- Homepage: https://oops.cs.columbia.edu/
- Paper: https://arxiv.org/abs/1911.11206
- Code: https://github.com/cvlab-columbia/oops
- Dataset: https://oops.cs.columbia.edu/data

The Garden of Forking Paths: Towards Multi-Future Trajectory Prediction

- Paper: https://arxiv.org/abs/1912.06445
- Code: https://github.com/JunweiLiang/Multiverse
- Dataset: https://next.cs.cmu.edu/multiverse/

Open Compound Domain Adaptation

- Homepage: https://liuziwei7.github.io/projects/CompoundDomain.html
- Dataset: https://drive.google.com/drive/folders/1_uNTF8RdvhS_sqVTnYx17hEOQpefmE2r?usp=sharing
- Paper: https://arxiv.org/abs/1909.03403
- Code: https://github.com/zhmiao/OpenCompoundDomainAdaptation-OCDA

Intra- and Inter-Action Understanding via Temporal Action Parsing

- Paper: https://arxiv.org/abs/2005.10229
- Homepage and dataset: https://sdolivia.github.io/TAPOS/

Dynamic Refinement Network for Oriented and Densely Packed Object Detection

- Paper: https://arxiv.org/abs/2005.09973
- Code and dataset: https://github.com/Anymake/DRN_CVPR2020

COCAS: A Large-Scale Clothes Changing Person Dataset for Re-identification

- Paper: https://arxiv.org/abs/2005.07862
- Dataset: not yet available

KeypointNet: A Large-scale 3D Keypoint Dataset Aggregated from Numerous Human Annotations

- Paper: https://arxiv.org/abs/2002.12687
- Dataset: https://github.com/qq456cvb/KeypointNet

MSeg: A Composite Dataset for Multi-domain Semantic Segmentation

- Paper: http://vladlen.info/papers/MSeg.pdf
- Code: https://github.com/mseg-dataset/mseg-api
- Dataset: https://github.com/mseg-dataset/mseg-semantic

AvatarMe: Realistically Renderable 3D Facial Reconstruction “in-the-wild”

- Paper: https://arxiv.org/abs/2003.13845
- Dataset: https://github.com/lattas/AvatarMe

Learning to Autofocus

- Paper: https://arxiv.org/abs/2004.12260
- Dataset: not yet available

FaceScape: a Large-scale High Quality 3D Face Dataset and Detailed Riggable 3D Face Prediction

- Paper: https://arxiv.org/abs/2003.13989
- Code: https://github.com/zhuhao-nju/facescape

Bodies at Rest: 3D Human Pose and Shape Estimation from a Pressure Image using Synthetic Data

- Paper: https://arxiv.org/abs/2004.01166
- Code: https://github.com/Healthcare-Robotics/bodies-at-rest
- Dataset: https://dataverse.harvard.edu/dataset.xhtml?persistentId=doi:10.7910/DVN/KOA4ML

FineGym: A Hierarchical Video Dataset for Fine-grained Action Understanding

- Homepage: https://sdolivia.github.io/FineGym/
- Paper: https://arxiv.org/abs/2004.06704

A Local-to-Global Approach to Multi-modal Movie Scene Segmentation

- Homepage: https://anyirao.com/projects/SceneSeg.html
- Paper: https://arxiv.org/abs/2004.02678
- Code: https://github.com/AnyiRao/SceneSeg

Deep Homography Estimation for Dynamic Scenes

- Paper: https://arxiv.org/abs/2004.02132
- Dataset: https://github.com/lcmhoang/hmg-dynamics

Assessing Image Quality Issues for Real-World Problems

- Homepage: https://vizwiz.org/tasks-and-datasets/image-quality-issues/
- Paper: https://arxiv.org/abs/2003.12511

UnrealText: Synthesizing Realistic Scene Text Images from the Unreal World

- Paper: https://arxiv.org/abs/2003.10608
- Code and dataset: https://github.com/Jyouhou/UnrealText/

PANDA: A Gigapixel-level Human-centric Video Dataset

- Paper: https://arxiv.org/abs/2003.04852
- Dataset: http://www.panda-dataset.com/

IntrA: 3D Intracranial Aneurysm Dataset for Deep Learning

- Paper: https://arxiv.org/abs/2003.02920
- Dataset: https://github.com/intra3d2019/IntrA

Cross-View Tracking for Multi-Human 3D Pose Estimation at over 100 FPS

- Paper: https://arxiv.org/abs/2003.03972
- Dataset: not yet available

=============================================================

CONSAC: Robust Multi-Model Fitting by Conditional Sample Consensus

- Paper: http://openaccess.thecvf.com/content_CVPR_2020/html/Kluger_CONSAC_Robust_Multi-Model_Fitting_by_Conditional_Sample_Consensus_CVPR_2020_paper.html
- Code: https://github.com/fkluger/consac

Learning to Learn Single Domain Generalization

- Paper: https://arxiv.org/abs/2003.13216
- Code: https://github.com/joffery/M-ADA

Open Compound Domain Adaptation

- Homepage: https://liuziwei7.github.io/projects/CompoundDomain.html
- Dataset: https://drive.google.com/drive/folders/1_uNTF8RdvhS_sqVTnYx17hEOQpefmE2r?usp=sharing
- Paper: https://arxiv.org/abs/1909.03403
- Code: https://github.com/zhmiao/OpenCompoundDomainAdaptation-OCDA

Differentiable Volumetric Rendering: Learning Implicit 3D Representations without 3D Supervision

- Paper: http://www.cvlibs.net/publications/Niemeyer2020CVPR.pdf
- Code: https://github.com/autonomousvision/differentiable_volumetric_rendering

QEBA: Query-Efficient Boundary-Based Blackbox Attack

- Paper: https://arxiv.org/abs/2005.14137
- Code: https://github.com/AI-secure/QEBA

Equalization Loss for Long-Tailed Object Recognition

- Paper: https://arxiv.org/abs/2003.05176
- Code: https://github.com/tztztztztz/eql.detectron2

Instance-aware Image Colorization

- Homepage: https://ericsujw.github.io/InstColorization/
- Paper: https://arxiv.org/abs/2005.10825
- Code: https://github.com/ericsujw/InstColorization

Contextual Residual Aggregation for Ultra High-Resolution Image Inpainting

- Paper: https://arxiv.org/abs/2005.09704
- Code: https://github.com/Atlas200dk/sample-imageinpainting-HiFill

Where am I looking at? Joint Location and Orientation Estimation by Cross-View Matching

- Paper: https://arxiv.org/abs/2005.03860
- Code: https://github.com/shiyujiao/cross_view_localization_DSM

Epipolar Transformers

- Paper: https://arxiv.org/abs/2005.04551
- Code: https://github.com/yihui-he/epipolar-transformers

Bringing Old Photos Back to Life

- Homepage: http://raywzy.com/Old_Photo/
- Paper: https://arxiv.org/abs/2004.09484

MaskFlownet: Asymmetric Feature Matching with Learnable Occlusion Mask

- Paper: https://arxiv.org/abs/2003.10955
- Code: https://github.com/microsoft/MaskFlownet

Self-Supervised Viewpoint Learning from Image Collections

- Paper: https://arxiv.org/abs/2004.01793
- Paper (alternate PDF): https://research.nvidia.com/sites/default/files/pubs/2020-03_Self-Supervised-Viewpoint-Learning/SSV-CVPR2020.pdf
- Code: https://github.com/NVlabs/SSV

Towards Discriminability and Diversity: Batch Nuclear-norm Maximization under Label Insufficient Situations

- Oral
- Paper: https://arxiv.org/abs/2003.12237
- Code: https://github.com/cuishuhao/BNM

Towards Learning Structure via Consensus for Face Segmentation and Parsing

- Paper: https://arxiv.org/abs/1911.00957
- Code: https://github.com/isi-vista/structure_via_consensus

Plug-and-Play Algorithms for Large-scale Snapshot Compressive Imaging

- Oral
- Paper: https://arxiv.org/abs/2003.13654
- Code: https://github.com/liuyang12/PnP-SCI

Lightweight Photometric Stereo for Facial Details Recovery

- Paper: https://arxiv.org/abs/2003.12307
- Code: https://github.com/Juyong/FacePSNet

Footprints and Free Space from a Single Color Image

- Paper: https://arxiv.org/abs/2004.06376
- Code: https://github.com/nianticlabs/footprints

Self-Supervised Monocular Scene Flow Estimation

- Paper: https://arxiv.org/abs/2004.04143
- Code: https://github.com/visinf/self-mono-sf

Quasi-Newton Solver for Robust Non-Rigid Registration

- Paper: https://arxiv.org/abs/2004.04322
- Code: https://github.com/Juyong/Fast_RNRR

A Local-to-Global Approach to Multi-modal Movie Scene Segmentation

- Homepage: https://anyirao.com/projects/SceneSeg.html
- Paper: https://arxiv.org/abs/2004.02678
- Code: https://github.com/AnyiRao/SceneSeg

DeepFLASH: An Efficient Network for Learning-based Medical Image Registration

- Paper: https://arxiv.org/abs/2004.02097
- Code: https://github.com/jw4hv/deepflash

Self-Supervised Scene De-occlusion

- Homepage: https://xiaohangzhan.github.io/projects/deocclusion/
- Paper: https://arxiv.org/abs/2004.02788
- Code: https://github.com/XiaohangZhan/deocclusion

Polarized Reflection Removal with Perfect Alignment in the Wild

- Homepage: https://leichenyang.weebly.com/project-polarized.html
- Code: https://github.com/ChenyangLEI/CVPR2020-Polarized-Reflection-Removal-with-Perfect-Alignment

Background Matting: The World is Your Green Screen

- Paper: https://arxiv.org/abs/2004.00626
- Code: http://github.com/senguptaumd/Background-Matting

What Deep CNNs Benefit from Global Covariance Pooling: An Optimization Perspective

- Paper: https://arxiv.org/abs/2003.11241
- Code: https://github.com/ZhangLi-CS/GCP_Optimization

Look-into-Object: Self-supervised Structure Modeling for Object Recognition

- Paper: not yet available
- Code: https://github.com/JDAI-CV/LIO

Video Object Grounding using Semantic Roles in Language Description

- Paper: https://arxiv.org/abs/2003.10606
- Code: https://github.com/TheShadow29/vognet-pytorch

Dynamic Hierarchical Mimicking Towards Consistent Optimization Objectives

- Paper: https://arxiv.org/abs/2003.10739
- Code: https://github.com/d-li14/DHM

SDFDiff: Differentiable Rendering of Signed Distance Fields for 3D Shape Optimization

- Paper: http://www.cs.umd.edu/~yuejiang/papers/SDFDiff.pdf
- Code: https://github.com/YueJiang-nj/CVPR2020-SDFDiff

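Final Words

Python has risen to wide popularity because of its many strengths, its broad range of applications, and its recognition by leading experts. Python is easy to get started with, yet it offers many paths for advancement: it can take you into more advanced fields such as machine learning, data mining, big data, and CS. Python is used for web applications, scientific computing, data analysis, web scraping, machine learning, natural language processing, game development, desktop applications, and more. Because Python can do so much, you should first build a solid foundation and then choose a clear direction. Below is a complete set of Python learning materials, shared to help anyone who wants to learn Python!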

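👉Learning roadmap for every Python direction👈

This roadmap organizes the technical topics of every Python direction into a knowledge summary for each field. You can use it to look up learning resources topic by topic, which helps ensure that your learning is reasonably comprehensive.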

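👉Essential Python development tools👈

To do a good job, you must first sharpen your tools. The development software commonly used for learning Python is collected here, which will save you a lot of time.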

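👉Complete Python video course👈

When learning from videos, you cannot just watch and think without writing any code. A more effective approach is to apply what you have learned as soon as you understand it, and practice projects are well suited to this.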

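👉Hands-on case studies👈

Learning Python is like learning math: you cannot just read the book without doing the exercises. Reading the steps and answers directly can make you believe you have mastered everything, yet you will still be stuck when you face an unfamiliar problem.

So while learning Python, be sure to write plenty of code yourself; reading a tutorial once or twice is enough.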

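👉Real interview questions from major tech companies👈

For most of us, the goal of learning Python is to land a well-paid job. The interview questions below are recent materials from leading internet companies such as Alibaba, Tencent, and ByteDance, with answers from senior Alibaba engineers. Working through this set should leave you well prepared to find a satisfying job.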
