
💡💡💡All programs in this column have been tested and run successfully💡💡💡


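This article introduces CSWin Transformer, an efficient and effective Transformer-based backbone for general-purpose vision tasks. A challenging issue in Transformer design is that global self-attention is very expensive to compute, while local self-attention often limits the range over which each token can interact. To address this, the authors developed the Cross-Shaped Window self-attention mechanism: it splits the input features into stripes of equal width and computes self-attention over horizontal and vertical stripes in parallel, and together these stripes form a cross-shaped window. They also introduced Locally-enhanced Positional Encoding (LePE), which handles local positional information better than existing encoding schemes. LePE naturally supports arbitrary input resolutions, which makes it especially effective and convenient for downstream tasks. Combining these designs with a hierarchical structure, CSWin Transformer shows competitive performance on common vision tasks. After explaining the main principles, this article walks step by step through adding and modifying the module code, and the complete modified code is shared at the end so you can run it in one go; even beginners can follow along. The goal is to help you work through the YOLO series of deep-learning object detectors.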



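1. Principle

Paper: CSWin Transformer: A General Vision Transformer Backbone with Cross-Shaped Windows

Official code: official code repository

CSWin Transformer introduces a new way of doing self-attention in vision Transformers that addresses their efficiency and computational-cost problems. Its main principles are broken down below: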

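Cross-Shaped Window (CSWin) self-attention

Mechanism:

- Parallel computation in stripes: instead of applying computationally expensive global self-attention, CSWin performs self-attention in parallel within horizontal and vertical stripes of the input feature map. This produces a cross-shaped attention window that covers a larger area than traditional local self-attention.

- Varying stripe width: the stripe width (sw) is adjusted with network depth. Shallower layers use smaller widths and deeper layers use larger widths, balancing computational cost against modeling capacity.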
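To make the cross shape concrete, here is a small pure-Python sketch (an illustration written for this article, not code from the paper or the official repository) that lists which positions of an H×W token grid fall inside the cross-shaped window of one token. It assumes H and W are divisible by the stripe width sw. In the actual model the two stripes are handled by two different halves of the attention heads; the union computed here is what the block covers as a whole.

```python
def cross_window(i, j, H, W, sw):
    """Positions (row, col) covered by the cross-shaped window of token (i, j).

    The cross is the union of the horizontal stripe containing row i and the
    vertical stripe containing column j, each `sw` tokens wide.
    Assumes H and W are divisible by sw.
    """
    r0 = (i // sw) * sw  # first row of the horizontal stripe
    c0 = (j // sw) * sw  # first column of the vertical stripe
    horizontal = {(r, c) for r in range(r0, r0 + sw) for c in range(W)}
    vertical = {(r, c) for r in range(H) for c in range(c0, c0 + sw)}
    return horizontal | vertical


if __name__ == "__main__":
    H = W = 8
    for sw in (1, 2, 4):
        covered = len(cross_window(3, 5, H, W, sw))
        print(f"sw={sw}: cross window covers {covered} of {H * W} tokens")
```

On an 8×8 grid the cross covers sw·H + sw·W - sw² tokens, i.e. 15, 28 and 48 for sw = 1, 2, 4, compared with 1, 4 and 16 tokens for a square local window of side sw.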

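Mathematical analysis:

- The paper analyzes mathematically how the stripe width affects modeling capacity and computational cost, showing that CSWin keeps performance high while reducing computational overhead.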
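A rough multiply-accumulate (MAC) count makes this trade-off explicit. The sketch below is derived by hand for this article and is an assumption, not a formula copied from the paper: it counts only the Q/K/V/output projections, QKᵀ and attention-times-V, and ignores softmax, LePE and the MLP. Under those assumptions CSWin costs HWC(4C + sw·H + sw·W), while global attention costs 4HWC² + 2(HW)²C.

```python
def global_msa_macs(H, W, C):
    """MACs of one global self-attention layer on an H x W map with C channels."""
    projections = 4 * H * W * C * C   # Q, K, V and output projections
    attention = 2 * (H * W) ** 2 * C  # Q @ K^T and attn @ V over all tokens
    return projections + attention


def cswin_macs(H, W, C, sw):
    """MACs of cross-shaped window attention with stripe width sw.

    Half the channels attend within horizontal stripes (sw x W tokens),
    the other half within vertical stripes (H x sw tokens).
    Assumes H and W are divisible by sw and C is even.
    """
    projections = 4 * H * W * C * C
    horizontal = (H // sw) * 2 * (sw * W) ** 2 * (C // 2)
    vertical = (W // sw) * 2 * (H * sw) ** 2 * (C // 2)
    return projections + horizontal + vertical


if __name__ == "__main__":
    # Stages 1-3 of CSWin_tiny on a 640x640 input (embed_dim=64, split_size 1, 2, 8)
    for H, C, sw in [(160, 64, 1), (80, 128, 2), (40, 256, 8)]:
        g, c = global_msa_macs(H, H, C), cswin_macs(H, H, C, sw)
        print(f"{H}x{H}, C={C}, sw={sw}: global {g / 1e9:.2f} G, "
              f"CSWin {c / 1e9:.2f} G, ratio {g / c:.1f}x")
```

Because the stripe term grows with sw·(H + W) rather than H·W, the saving is largest in the high-resolution early stages, which is where the narrowest stripes are used.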

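Locally-enhanced Positional Encoding (LePE)

Enhanced positional information:

- Unlike conventional positional encodings (absolute APE and relative RPE), LePE injects positional information directly into every Transformer block, as a module that runs in parallel with the self-attention operation. This keeps positional context while adapting to different input resolutions.

- LePE operates on the values (V) of self-attention: a depthwise convolution over V is added to the attention output, after attention has been computed. This provides a stronger local inductive bias and improves performance on tasks such as object detection and segmentation.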
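The following is a minimal NumPy sketch of LePE for a single head inside a single window, assuming tokens are stored in row-major order and each channel has its own 3×3 kernel (mirroring `get_v`, the depthwise `nn.Conv2d(..., groups=dim)` in the code of section 2.1). It computes softmax(QKᵀ/√C)·V + DWConv(V). The helper names are hypothetical and exist only for illustration.

```python
import numpy as np


def depthwise_conv3x3(x, kernels):
    """x: (C, H, W) map, kernels: (C, 3, 3); zero padding 1, stride 1 (cross-correlation)."""
    C, H, W = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((C, H, W), dtype=float)
    for dy in range(3):
        for dx in range(3):
            out += kernels[:, dy, dx][:, None, None] * padded[:, dy:dy + H, dx:dx + W]
    return out


def lepe_attention(q, k, v, kernels, H, W):
    """Single-head attention over one window of N = H * W tokens, plus LePE.

    q, k, v: (N, C) arrays in row-major token order.
    """
    N, C = q.shape
    scores = q @ k.T / np.sqrt(C)
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability
    attn = np.exp(scores)
    attn /= attn.sum(axis=-1, keepdims=True)
    # LePE: reshape V back to a (C, H, W) map, apply the depthwise conv, flatten again
    v_map = v.T.reshape(C, H, W)
    lepe = depthwise_conv3x3(v_map, kernels).reshape(C, N).T
    return attn @ v + lepe


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    H, W, C = 4, 4, 8
    q, k, v = (rng.standard_normal((H * W, C)) for _ in range(3))
    kernels = rng.standard_normal((C, 3, 3))
    print(lepe_attention(q, k, v, kernels, H, W).shape)  # (16, 8)
```

Because the positional term is computed from V itself rather than from a fixed table indexed by position, nothing in it depends on the input resolution, which is the property the text refers to.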

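Hierarchical structure

Multi-scale representation:

- CSWin Transformer follows a hierarchical structure similar to conventional CNNs: the number of tokens decreases stage by stage while the channel dimension increases. This hierarchical design makes multi-scale features available and reduces computational complexity.

- Each stage consists of several CSWin Transformer blocks; between stages, a convolutional layer downsamples the feature map and increases the channel dimension.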
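The stage sizes follow from the standard convolution output-size formula. This sketch assumes the kernel, stride and padding values used in the code of section 2.1 (a 7×7, stride-4, padding-2 stem followed by three 3×3, stride-2, padding-1 `Merge_Block` convolutions) and returns (height, width, channels) for each stage; the function names are illustrative.

```python
def conv_out(size, kernel, stride, padding):
    """Output size of a convolution along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def stage_shapes(img_size, embed_dim):
    """(height, width, channels) of the four CSWin stages for a square input."""
    size = conv_out(img_size, kernel=7, stride=4, padding=2)  # stem
    dim = embed_dim
    shapes = [(size, size, dim)]
    for _ in range(3):  # Merge_Block: halve the resolution, double the channels
        size = conv_out(size, kernel=3, stride=2, padding=1)
        dim *= 2
        shapes.append((size, size, dim))
    return shapes


if __name__ == "__main__":
    for h, w, c in stage_shapes(640, 64):
        print(f"{h}x{w}x{c}: {h * w} tokens")
```

For a 640×640 input with `embed_dim=64` the token count drops from 25,600 to 6,400, 1,600 and 400 while the channel count doubles at each stage.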

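Performance

State-of-the-art results:

- Compared with earlier state-of-the-art models such as Swin Transformer, CSWin Transformer performs better on a variety of vision tasks. Notably, under similar computational budgets it shows clear improvements in image classification, object detection, and semantic segmentation.

- For example, the base variant (CSWin-B) reaches 85.4% Top-1 accuracy on ImageNet-1K, clearly surpassing Swin Transformer.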

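Summary

CSWin Transformer improves vision Transformers in the following ways:

- It introduces cross-shaped window self-attention for efficient modeling of long-range dependencies.

- It uses a hierarchical structure to exploit multi-scale features.

- It adopts Locally-enhanced Positional Encoding to better handle positional information across tasks and resolutions.

- It achieves state-of-the-art performance on a range of vision tasks with efficient computation.

Together, these innovations let CSWin Transformer maintain high performance while keeping computational cost under control, making it a strong backbone for general-purpose vision tasks.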

2. Adding CSWin Transformer to YOLOv8

2.1 CSWin Transformer code implementation

Key step 1: create backbone.py under /ultralytics/ultralytics/nn/modules/ and paste in the code below

# ------------------------------------------
# CSWin Transformer
# Copyright (c) Microsoft Corporation.
# Licensed under the MIT License.
# written By Xiaoyi Dong
# ------------------------------------------
import numpy as np
import torch
import torch.nn as nn
import torch.utils.checkpoint as checkpoint
from einops.layers.torch import Rearrange
from timm.models.layers import DropPath, trunc_normal_

__all__ = ['CSWin_tiny', 'CSWin_small', 'CSWin_base', 'CSWin_large']


class Mlp(nn.Module):
    """Two-layer feed-forward network used inside each CSWin block."""

    def __init__(self, in_features, hidden_features=None, out_features=None, act_layer=nn.GELU, drop=0.):
        super().__init__()
        out_features = out_features or in_features
        hidden_features = hidden_features or in_features
        self.fc1 = nn.Linear(in_features, hidden_features)
        self.act = act_layer()
        self.fc2 = nn.Linear(hidden_features, out_features)
        self.drop = nn.Dropout(drop)

    def forward(self, x):
        x = self.fc1(x)
        x = self.act(x)
        x = self.drop(x)
        x = self.fc2(x)
        x = self.drop(x)
        return x


class LePEAttention(nn.Module):
    """Stripe self-attention with Locally-enhanced Positional Encoding (LePE).

    idx = 0:  vertical stripes   (H_sp = resolution, W_sp = split_size)
    idx = 1:  horizontal stripes (H_sp = split_size, W_sp = resolution)
    idx = -1: one window covering the whole feature map (last stage)
    """

    def __init__(self, dim, resolution, idx, split_size=7, dim_out=None, num_heads=8, attn_drop=0., proj_drop=0., qk_scale=None):
        super().__init__()
        self.dim = dim
        self.dim_out = dim_out or dim
        self.resolution = resolution
        self.split_size = split_size
        self.num_heads = num_heads
        head_dim = dim // num_heads
        # NOTE scale factor was wrong in my original version, can set manually to be compat with prev weights
        self.scale = qk_scale or head_dim ** -0.5
        if idx == -1:
            H_sp, W_sp = self.resolution, self.resolution
        elif idx == 0:
            H_sp, W_sp = self.resolution, self.split_size
        elif idx == 1:
            W_sp, H_sp = self.resolution, self.split_size
        else:
            # fail loudly instead of exiting the process with status 0
            raise ValueError(f"ERROR MODE: idx must be -1, 0 or 1, got {idx}")
        self.H_sp = H_sp
        self.W_sp = W_sp
        # LePE: 3x3 depthwise convolution applied to V
        self.get_v = nn.Conv2d(dim, dim, kernel_size=3, stride=1, padding=1, groups=dim)
        self.attn_drop = nn.Dropout(attn_drop)

    def im2cswin(self, x):
        # (B, N, C) tokens -> (B', heads, H_sp * W_sp, C // heads) windows
        B, N, C = x.shape
        H = W = int(np.sqrt(N))
        x = x.transpose(-2, -1).contiguous().view(B, C, H, W)
        x = img2windows(x, self.H_sp, self.W_sp)
        x = x.reshape(-1, self.H_sp * self.W_sp, self.num_heads, C // self.num_heads).permute(0, 2, 1, 3).contiguous()
        return x

    def get_lepe(self, x, func):
        # split V into windows and compute the positional term func(V) inside each window
        B, N, C = x.shape
        H = W = int(np.sqrt(N))
        x = x.transpose(-2, -1).contiguous().view(B, C, H, W)
        H_sp, W_sp = self.H_sp, self.W_sp
        x = x.view(B, C, H // H_sp, H_sp, W // W_sp, W_sp)
        x = x.permute(0, 2, 4, 1, 3, 5).contiguous().reshape(-1, C, H_sp, W_sp)  # B', C, H', W'
        lepe = func(x)  # B', C, H', W'
        lepe = lepe.reshape(-1, self.num_heads, C // self.num_heads, H_sp * W_sp).permute(0, 1, 3, 2).contiguous()
        x = x.reshape(-1, self.num_heads, C // self.num_heads, self.H_sp * self.W_sp).permute(0, 1, 3, 2).contiguous()
        return x, lepe

    def forward(self, qkv):
        """
        qkv: (3, B, L, C), the stacked q, k, v tokens
        """
        q, k, v = qkv[0], qkv[1], qkv[2]
        # Img2Window
        H = W = self.resolution
        B, L, C = q.shape
        assert L == H * W, "flatten img_tokens has wrong size"
        q = self.im2cswin(q)
        k = self.im2cswin(k)
        v, lepe = self.get_lepe(v, self.get_v)
        q = q * self.scale
        attn = (q @ k.transpose(-2, -1))  # B head N C @ B head C N --> B head N N
        attn = nn.functional.softmax(attn, dim=-1, dtype=attn.dtype)
        attn = self.attn_drop(attn)
        x = (attn @ v) + lepe  # B head N N @ B head N C, plus the LePE term
        x = x.transpose(1, 2).reshape(-1, self.H_sp * self.W_sp, C)
        # Window2Img
        x = windows2img(x, self.H_sp, self.W_sp, H, W).view(B, -1, C)  # B H' W' C
        return x


class CSWinBlock(nn.Module):

    def __init__(self, dim, reso, num_heads,
                 split_size=7, mlp_ratio=4., qkv_bias=False, qk_scale=None,
                 drop=0., attn_drop=0., drop_path=0.,
                 act_layer=nn.GELU, norm_layer=nn.LayerNorm,
                 last_stage=False):
        super().__init__()
        self.dim = dim
        self.num_heads = num_heads
        self.patches_resolution = reso
        self.split_size = split_size
        self.mlp_ratio = mlp_ratio
        self.qkv = nn.Linear(dim, dim * 3, bias=qkv_bias)
        self.norm1 = norm_layer(dim)
        if self.patches_resolution == split_size:
            last_stage = True
        # last stage: one full-window branch; otherwise two branches (vertical + horizontal stripes)
        if last_stage:
            self.branch_num = 1
        else:
            self.branch_num = 2
        self.proj = nn.Linear(dim, dim)
        self.proj_drop = nn.Dropout(drop)
        if last_stage:
            self.attns = nn.ModuleList([
                LePEAttention(
                    dim, resolution=self.patches_resolution, idx=-1,
                    split_size=split_size, num_heads=num_heads, dim_out=dim,
                    qk_scale=qk_scale, attn_drop=attn_drop, proj_drop=drop)
                for i in range(self.branch_num)])
        else:
            # each branch gets half of the channels and half of the heads
            self.attns = nn.ModuleList([
                LePEAttention(
                    dim // 2, resolution=self.patches_resolution, idx=i,
                    split_size=split_size, num_heads=num_heads // 2, dim_out=dim // 2,
                    qk_scale=qk_scale, attn_drop=attn_drop, proj_drop=drop)
                for i in range(self.branch_num)])
        mlp_hidden_dim = int(dim * mlp_ratio)
        self.drop_path = DropPath(drop_path) if drop_path > 0. else nn.Identity()
        self.mlp = Mlp(in_features=dim, hidden_features=mlp_hidden_dim, out_features=dim, act_layer=act_layer, drop=drop)
        self.norm2 = norm_layer(dim)

    def forward(self, x):
        """
        x: B, H*W, C
        """
        H = W = self.patches_resolution
        B, L, C = x.shape
        assert L == H * W, "flatten img_tokens has wrong size"
        img = self.norm1(x)
        qkv = self.qkv(img).reshape(B, -1, 3, C).permute(2, 0, 1, 3)
        if self.branch_num == 2:
            x1 = self.attns[0](qkv[:, :, :, :C // 2])
            x2 = self.attns[1](qkv[:, :, :, C // 2:])
            attened_x = torch.cat([x1, x2], dim=2)
        else:
            attened_x = self.attns[0](qkv)
        attened_x = self.proj(attened_x)
        x = x + self.drop_path(attened_x)
        x = x + self.drop_path(self.mlp(self.norm2(x)))
        return x


def img2windows(img, H_sp, W_sp):
    """
    img: B C H W  ->  (B * num_windows, H_sp * W_sp, C)
    """
    B, C, H, W = img.shape
    img_reshape = img.view(B, C, H // H_sp, H_sp, W // W_sp, W_sp)
    img_perm = img_reshape.permute(0, 2, 4, 3, 5, 1).contiguous().reshape(-1, H_sp * W_sp, C)
    return img_perm


def windows2img(img_splits_hw, H_sp, W_sp, H, W):
    """
    img_splits_hw: (B * num_windows, H_sp * W_sp, C)  ->  B H W C
    """
    B = int(img_splits_hw.shape[0] / (H * W / H_sp / W_sp))
    img = img_splits_hw.view(B, H // H_sp, W // W_sp, H_sp, W_sp, -1)
    img = img.permute(0, 1, 3, 2, 4, 5).contiguous().view(B, H, W, -1)
    return img


class Merge_Block(nn.Module):
    """3x3 stride-2 convolution between stages: halves H and W, changes channels to dim_out."""

    def __init__(self, dim, dim_out, norm_layer=nn.LayerNorm):
        super().__init__()
        self.conv = nn.Conv2d(dim, dim_out, 3, 2, 1)
        self.norm = norm_layer(dim_out)

    def forward(self, x):
        B, new_HW, C = x.shape
        H = W = int(np.sqrt(new_HW))
        x = x.transpose(-2, -1).contiguous().view(B, C, H, W)
        x = self.conv(x)
        B, C = x.shape[:2]
        x = x.view(B, C, -1).transpose(-2, -1).contiguous()
        x = self.norm(x)
        return x


class CSWinTransformer(nn.Module):
    """ Vision Transformer with support for patch or hybrid CNN input stage
    """

    def __init__(self, img_size=640, patch_size=16, in_chans=3, num_classes=1000, embed_dim=96, depth=[2, 2, 6, 2], split_size=[3, 5, 7],
                 num_heads=12, mlp_ratio=4., qkv_bias=True, qk_scale=None, drop_rate=0., attn_drop_rate=0.,
                 drop_path_rate=0., hybrid_backbone=None, norm_layer=nn.LayerNorm, use_chk=False):
        super().__init__()
        self.use_chk = use_chk
        self.num_classes = num_classes
        self.num_features = self.embed_dim = embed_dim  # num_features for consistency with other models
        heads = num_heads

        # stem: 7x7 conv, stride 4 -> (B, (img_size/4)^2, embed_dim) tokens; the input size is fixed to img_size
        self.stage1_conv_embed = nn.Sequential(
            nn.Conv2d(in_chans, embed_dim, 7, 4, 2),
            Rearrange('b c h w -> b (h w) c', h=img_size // 4, w=img_size // 4),
            nn.LayerNorm(embed_dim)
        )

        curr_dim = embed_dim
        dpr = [x.item() for x in torch.linspace(0, drop_path_rate, np.sum(depth))]  # stochastic depth decay rule
        self.stage1 = nn.ModuleList([
            CSWinBlock(
                dim=curr_dim, num_heads=heads[0], reso=img_size // 4, mlp_ratio=mlp_ratio,
                qkv_bias=qkv_bias, qk_scale=qk_scale, split_size=split_size[0],
                drop=drop_rate, attn_drop=attn_drop_rate,
                drop_path=dpr[i], norm_layer=norm_layer)
            for i in range(depth[0])])

        self.merge1 = Merge_Block(curr_dim, curr_dim * 2)
        curr_dim = curr_dim * 2
        self.stage2 = nn.ModuleList(
            [CSWinBlock(
                dim=curr_dim, num_heads=heads[1], reso=img_size // 8, mlp_ratio=mlp_ratio,
                qkv_bias=qkv_bias, qk_scale=qk_scale, split_size=split_size[1],
                drop=drop_rate, attn_drop=attn_drop_rate,
                drop_path=dpr[np.sum(depth[:1]) + i], norm_layer=norm_layer)
             for i in range(depth[1])])

        self.merge2 = Merge_Block(curr_dim, curr_dim * 2)
        curr_dim = curr_dim * 2
        temp_stage3 = []
        temp_stage3.extend(
            [CSWinBlock(
                dim=curr_dim, num_heads=heads[2], reso=img_size // 16, mlp_ratio=mlp_ratio,
                qkv_bias=qkv_bias, qk_scale=qk_scale, split_size=split_size[2],
                drop=drop_rate, attn_drop=attn_drop_rate,
                drop_path=dpr[np.sum(depth[:2]) + i], norm_layer=norm_layer)
             for i in range(depth[2])])
        self.stage3 = nn.ModuleList(temp_stage3)

        self.merge3 = Merge_Block(curr_dim, curr_dim * 2)
        curr_dim = curr_dim * 2
        self.stage4 = nn.ModuleList(
            [CSWinBlock(
                dim=curr_dim, num_heads=heads[3], reso=img_size // 32, mlp_ratio=mlp_ratio,
                qkv_bias=qkv_bias, qk_scale=qk_scale, split_size=split_size[-1],
                drop=drop_rate, attn_drop=attn_drop_rate,
                drop_path=dpr[np.sum(depth[:-1]) + i], norm_layer=norm_layer, last_stage=True)
             for i in range(depth[-1])])

        self.apply(self._init_weights)
        # output channels of the four stages, probed with a dummy forward pass (read by parse_model as c2)
        with torch.no_grad():
            self.channel = [i.size(1) for i in self.forward(torch.randn(1, in_chans, img_size, img_size))]

    def _init_weights(self, m):
        if isinstance(m, nn.Linear):
            trunc_normal_(m.weight, std=.02)
            if isinstance(m, nn.Linear) and m.bias is not None:
                nn.init.constant_(m.bias, 0)
        elif isinstance(m, (nn.LayerNorm, nn.BatchNorm2d)):
            nn.init.constant_(m.bias, 0)
            nn.init.constant_(m.weight, 1.0)

    def forward_features(self, x):
        input_size = x.size(2)
        scale = [4, 8, 16, 32]
        features = [None, None, None, None]
        B = x.shape[0]
        x = self.stage1_conv_embed(x)
        for blk in self.stage1:
            if self.use_chk:
                x = checkpoint.checkpoint(blk, x)
            else:
                x = blk(x)
        # store each stage output as a (B, C, H, W) map at stride 4/8/16/32
        if input_size // int(x.size(1) ** 0.5) in scale:
            features[scale.index(input_size // int(x.size(1) ** 0.5))] = x.reshape((x.size(0), int(x.size(1) ** 0.5), int(x.size(1) ** 0.5), x.size(2))).permute(0, 3, 1, 2)
        for pre, blocks in zip([self.merge1, self.merge2, self.merge3],
                               [self.stage2, self.stage3, self.stage4]):
            x = pre(x)
            for blk in blocks:
                if self.use_chk:
                    x = checkpoint.checkpoint(blk, x)
                else:
                    x = blk(x)
            if input_size // int(x.size(1) ** 0.5) in scale:
                features[scale.index(input_size // int(x.size(1) ** 0.5))] = x.reshape((x.size(0), int(x.size(1) ** 0.5), int(x.size(1) ** 0.5), x.size(2))).permute(0, 3, 1, 2)
        return features

    def forward(self, x):
        x = self.forward_features(x)
        return x


def _conv_filter(state_dict, patch_size=16):
    """ convert patch embedding weight from manual patchify + linear proj to conv"""
    out_dict = {}
    for k, v in state_dict.items():
        if 'patch_embed.proj.weight' in k:
            v = v.reshape((v.shape[0], 3, patch_size, patch_size))
        out_dict[k] = v
    return out_dict


def update_weight(model_dict, weight_dict):
    # copy only the pretrained tensors whose name and shape match the model
    idx, temp_dict = 0, {}
    for k, v in weight_dict.items():
        # k = k[9:]
        if k in model_dict.keys() and np.shape(model_dict[k]) == np.shape(v):
            temp_dict[k] = v
            idx += 1
    model_dict.update(temp_dict)
    print(f'loading weights... {idx}/{len(model_dict)} items')
    return model_dict


def CSWin_tiny(pretrained=False, **kwargs):
    model = CSWinTransformer(patch_size=4, embed_dim=64, depth=[1, 2, 21, 1],
                             split_size=[1, 2, 8, 8], num_heads=[2, 4, 8, 16], mlp_ratio=4., **kwargs)
    if pretrained:
        model.load_state_dict(update_weight(model.state_dict(), torch.load(pretrained)['state_dict_ema']))
    return model


def CSWin_small(pretrained=False, **kwargs):
    model = CSWinTransformer(patch_size=4, embed_dim=64, depth=[2, 4, 32, 2],
                             split_size=[1, 2, 8, 8], num_heads=[2, 4, 8, 16], mlp_ratio=4., **kwargs)
    if pretrained:
        model.load_state_dict(update_weight(model.state_dict(), torch.load(pretrained)['state_dict_ema']))
    return model


def CSWin_base(pretrained=False, **kwargs):
    model = CSWinTransformer(patch_size=4, embed_dim=96, depth=[2, 4, 32, 2],
                             split_size=[1, 2, 8, 8], num_heads=[4, 8, 16, 32], mlp_ratio=4., **kwargs)
    if pretrained:
        model.load_state_dict(update_weight(model.state_dict(), torch.load(pretrained)['state_dict_ema']))
    return model


def CSWin_large(pretrained=False, **kwargs):
    model = CSWinTransformer(patch_size=4, embed_dim=144, depth=[2, 4, 32, 2],
                             split_size=[1, 2, 8, 8], num_heads=[6, 12, 24, 24], mlp_ratio=4., **kwargs)
    if pretrained:
        model.load_state_dict(update_weight(model.state_dict(), torch.load(pretrained)['state_dict_ema']))
    return model
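The helpers `img2windows` and `windows2img` above are inverse reshapes: the first cuts a (B, C, H, W) map into windows of H_sp × W_sp tokens, the second stitches the windows back into a (B, H, W, C) map. The following NumPy port (an illustrative re-implementation, not part of the original file; NumPy's `transpose` stands in for `permute`) checks the round trip on horizontal and vertical stripes.

```python
import numpy as np


def img2windows(img, H_sp, W_sp):
    """(B, C, H, W) -> (B * num_windows, H_sp * W_sp, C)"""
    B, C, H, W = img.shape
    x = img.reshape(B, C, H // H_sp, H_sp, W // W_sp, W_sp)
    return x.transpose(0, 2, 4, 3, 5, 1).reshape(-1, H_sp * W_sp, C)


def windows2img(windows, H_sp, W_sp, H, W):
    """(B * num_windows, H_sp * W_sp, C) -> (B, H, W, C)"""
    B = windows.shape[0] // ((H // H_sp) * (W // W_sp))
    x = windows.reshape(B, H // H_sp, W // W_sp, H_sp, W_sp, -1)
    return x.transpose(0, 1, 3, 2, 4, 5).reshape(B, H, W, -1)


if __name__ == "__main__":
    img = np.arange(2 * 3 * 8 * 8, dtype=float).reshape(2, 3, 8, 8)
    stripes = img2windows(img, 2, 8)  # horizontal stripes of height 2
    print(stripes.shape)              # (8, 16, 3): 2 images x 4 stripes
    restored = windows2img(stripes, 2, 8, 8, 8)
    print(np.array_equal(restored, img.transpose(0, 2, 3, 1)))
```

The same pair serves both branches: (H_sp, W_sp) = (2, 8) gives horizontal stripes and (8, 2) gives vertical ones, so only the window shape differs between the two attention branches.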

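When extracting features from an image, CSWin Transformer combines a multi-level hierarchical structure with cross-shaped window self-attention. The pipeline is analyzed in detail below:

1. Input image and initial convolutional embedding

Input image:

- The input image has size $H \times W \times 3$.

Convolutional embedding:

- A $7 \times 7$ convolution with stride 4 embeds the input image into the feature space, producing a feature map of size $\frac{H}{4} \times \frac{W}{4}$ with $C$ channels.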

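2. Hierarchical structure

CSWin Transformer consists of four stages, each containing several CSWin Transformer blocks. Consecutive stages are connected by convolutional layers that reduce the spatial size of the feature map and increase the number of channels.

Stages:

- Stage 1: the feature map is $\frac{H}{4} \times \frac{W}{4} \times C$ and passes through $N_1$ CSWin Transformer blocks.

- Stage 2: a $3 \times 3$ convolution with stride 2 downsamples the feature map to $\frac{H}{8} \times \frac{W}{8} \times 2C$, which passes through $N_2$ CSWin Transformer blocks.

- Stage 3: another convolutional downsampling gives $\frac{H}{16} \times \frac{W}{16} \times 4C$, which passes through $N_3$ CSWin Transformer blocks.

- Stage 4: a final convolutional downsampling gives $\frac{H}{32} \times \frac{W}{32} \times 8C$, which passes through $N_4$ CSWin Transformer blocks.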
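Because `img2windows` reshapes with `H // H_sp` and `W // W_sp` via `view`, every stage except the last needs a resolution divisible by its stripe width, and the constructor in section 2.1 fixes the input to `img_size=640`. The hypothetical helper below (not part of the original code) checks a configuration before building the model, assuming the stride-4 stem and the halving of resolution at each stage described above.

```python
def check_split_sizes(img_size, split_sizes):
    """Return human-readable problems for a CSWin stripe-width configuration.

    Assumes img_size is divisible by 32, a stride-4 stem and a halving of the
    resolution at each of the three Merge_Blocks. The last stage uses a single
    full window, so its stripe width is not checked.
    """
    problems = []
    reso = img_size // 4
    for stage, sw in enumerate(split_sizes, start=1):
        is_last = stage == len(split_sizes)
        if not is_last and reso % sw != 0:
            problems.append(f"stage {stage}: resolution {reso} is not divisible by stripe width {sw}")
        reso //= 2
    return problems


if __name__ == "__main__":
    for size in (640, 224):
        print(size, check_split_sizes(size, [1, 2, 8, 8]) or "OK")
```

With `split_size=[1, 2, 8, 8]` a 640 input passes (stage resolutions 160, 80, 40, 20), whereas a 224 input fails at stage 3 because 14 is not divisible by 8; widths 1, 2, 7, 7 would divide 56, 28, 14 evenly.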

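3. CSWin Transformer block

Each CSWin Transformer block contains the following key components:

Cross-Shaped Window Self-Attention:

- Horizontal and vertical stripe partitioning: the input feature map is partitioned into horizontal stripes and vertical stripes of equal width.

- Parallel computation: the attention heads are divided into two groups; one group computes self-attention within the horizontal stripes and the other within the vertical stripes, in parallel. Together they cover a cross-shaped region, which enlarges the attention range while keeping computational complexity low.

- Stripe width adjustment: the stripe width is set according to network depth, narrower in shallow layers and wider in deep layers, to balance computational cost and modeling capacity.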
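The channel and head split can be traced with a small pure-Python helper that restates the branching logic of `CSWinBlock` and `LePEAttention` from section 2.1 (a hypothetical re-statement for illustration, not an API of the original code): two branches with half the channels and half the heads each, `idx=0` using tall vertical windows and `idx=1` wide horizontal windows, and a single full window in the last stage.

```python
def branch_configs(dim, num_heads, reso, split_size, last_stage=False):
    """Channels, heads and window shape (H_sp, W_sp) of each attention branch."""
    if last_stage or reso == split_size:
        return [{"channels": dim, "heads": num_heads, "window": (reso, reso)}]
    return [
        # idx=0: vertical stripes, full height and split_size wide
        {"channels": dim // 2, "heads": num_heads // 2, "window": (reso, split_size)},
        # idx=1: horizontal stripes, split_size high and full width
        {"channels": dim // 2, "heads": num_heads // 2, "window": (split_size, reso)},
    ]


if __name__ == "__main__":
    # stage 3 of CSWin_tiny on a 640 input: 40x40 tokens, 256 channels, 8 heads, sw=8
    for cfg in branch_configs(dim=256, num_heads=8, reso=40, split_size=8):
        H_sp, W_sp = cfg["window"]
        print(cfg, "windows per image:", (40 // H_sp) * (40 // W_sp))
```

Each branch in that stage attends over 5 windows of 320 tokens per image, while the outputs of the two branches are concatenated back to 256 channels before the projection.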

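Locally-enhanced Positional Encoding (LePE):

- Enhanced positional encoding: instead of injecting positional information before attention is computed, LePE applies a depthwise convolution to the values (V) and adds the result to the self-attention output. This strengthens the representation of local features and is better suited to input images of different resolutions.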

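4. Summary of the feature-extraction pipeline

- Initial convolutional embedding: the input image is embedded into the feature space by a convolutional layer.

- Hierarchical processing:

  - At each level, the feature map is processed by several CSWin Transformer blocks.

  - Within each block, Cross-Shaped Window Self-Attention and LePE are applied to progressively extract and fuse features.

- Feature downsampling: convolutional layers downsample between stages, reducing the feature-map size and increasing the number of channels.

- Multi-level feature fusion: across the four stages, multi-scale features are extracted progressively to form the final feature representation.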

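Summary

By combining cross-shaped window self-attention with a hierarchical structure, CSWin Transformer extracts and fuses image features effectively while remaining computationally efficient. This design improves the modeling of long-range dependencies and makes high-resolution images cheaper to process, so the model performs well across a variety of vision tasks.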

2.2 Modify __init__.py

Key step 2: modify the __init__.py file in the modules folder. First import the functions,

then declare them in the __all__ below.
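The import statement itself is not shown here. Assuming the file is ultralytics/nn/modules/backbone.py as created in key step 1, the additions would look roughly like the fragment below (to be merged into the existing file, not a standalone script). The names must also be visible inside tasks.py for the `elif m in {...}` check in key step 4 to resolve, for example through an import such as `from ultralytics.nn.modules.backbone import CSWin_tiny, CSWin_small, CSWin_base, CSWin_large` (an assumed import path).

```python
# Fragment of ultralytics/nn/modules/__init__.py (assumed layout; merge into the existing file)
from .backbone import CSWin_tiny, CSWin_small, CSWin_base, CSWin_large

# ...then append the four names to the existing __all__ tuple, e.g.:
# __all__ = (
#     "Conv",
#     ...,  # existing entries stay as they are
#     "CSWin_tiny",
#     "CSWin_small",
#     "CSWin_base",
#     "CSWin_large",
# )
```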

2.3 Add the yaml file

Key step 3: create a new file yolov8_cswin.yaml under /ultralytics/ultralytics/cfg/models/v8 and paste in the content below

- OD (object detection)

# Ultralytics YOLO 🚀, AGPL-3.0 license
# YOLOv8 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect

# Parameters
nc: 80 # number of classes
scales: # model compound scaling constants, i.e. 'model=yolov8n.yaml' will call yolov8.yaml with scale 'n'
  # [depth, width, max_channels]
  n: [0.33, 0.25, 1024] # YOLOv8n summary: 225 layers, 3157200 parameters, 3157184 gradients, 8.9 GFLOPs
  s: [0.33, 0.50, 1024] # YOLOv8s summary: 225 layers, 11166560 parameters, 11166544 gradients, 28.8 GFLOPs
  m: [0.67, 0.75, 768] # YOLOv8m summary: 295 layers, 25902640 parameters, 25902624 gradients, 79.3 GFLOPs
  l: [1.00, 1.00, 512] # YOLOv8l summary: 365 layers, 43691520 parameters, 43691504 gradients, 165.7 GFLOPs
  x: [1.00, 1.25, 512] # YOLOv8x summary: 365 layers, 68229648 parameters, 68229632 gradients, 258.5 GFLOPs

# 0-P1/2
# 1-P2/4
# 2-P3/8
# 3-P4/16
# 4-P5/32

# YOLOv8.0n backbone
backbone:
  # [from, repeats, module, args]
  - [-1, 1, CSWin_tiny, []] # 4
  - [-1, 1, SPPF, [1024, 5]] # 5

# YOLOv8.0n head
head:
  - [-1, 1, nn.Upsample, [None, 2, 'nearest']] # 6
  - [[-1, 3], 1, Concat, [1]] # 7 cat backbone P4
  - [-1, 3, C2f, [512]] # 8

  - [-1, 1, nn.Upsample, [None, 2, 'nearest']] # 9
  - [[-1, 2], 1, Concat, [1]] # 10 cat backbone P3
  - [-1, 3, C2f, [256]] # 11 (P3/8-small)

  - [-1, 1, Conv, [256, 3, 2]] # 12
  - [[-1, 8], 1, Concat, [1]] # 13 cat head P4
  - [-1, 3, C2f, [512]] # 14 (P4/16-medium)

  - [-1, 1, Conv, [512, 3, 2]] # 15
  - [[-1, 5], 1, Concat, [1]] # 16 cat head P5
  - [-1, 3, C2f, [1024]] # 17 (P5/32-large)

  - [[11, 14, 17], 1, Detect, [nc]] # Detect(P3, P4, P5)

- Seg (instance segmentation)

# Ultralytics YOLO 🚀, AGPL-3.0 license
# YOLOv8-seg instance segmentation model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/segment

# Parameters
nc: 80 # number of classes
scales: # model compound scaling constants, i.e. 'model=yolov8n.yaml' will call yolov8.yaml with scale 'n'
  # [depth, width, max_channels]
  n: [0.33, 0.25, 1024] # YOLOv8n summary: 225 layers, 3157200 parameters, 3157184 gradients, 8.9 GFLOPs
  s: [0.33, 0.50, 1024] # YOLOv8s summary: 225 layers, 11166560 parameters, 11166544 gradients, 28.8 GFLOPs
  m: [0.67, 0.75, 768] # YOLOv8m summary: 295 layers, 25902640 parameters, 25902624 gradients, 79.3 GFLOPs
  l: [1.00, 1.00, 512] # YOLOv8l summary: 365 layers, 43691520 parameters, 43691504 gradients, 165.7 GFLOPs
  x: [1.00, 1.25, 512] # YOLOv8x summary: 365 layers, 68229648 parameters, 68229632 gradients, 258.5 GFLOPs

# 0-P1/2
# 1-P2/4
# 2-P3/8
# 3-P4/16
# 4-P5/32

# YOLOv8.0n backbone
backbone:
  # [from, repeats, module, args]
  - [-1, 1, CSWin_tiny, []] # 4
  - [-1, 1, SPPF, [1024, 5]] # 5

# YOLOv8.0n head
head:
  - [-1, 1, nn.Upsample, [None, 2, 'nearest']] # 6
  - [[-1, 3], 1, Concat, [1]] # 7 cat backbone P4
  - [-1, 3, C2f, [512]] # 8

  - [-1, 1, nn.Upsample, [None, 2, 'nearest']] # 9
  - [[-1, 2], 1, Concat, [1]] # 10 cat backbone P3
  - [-1, 3, C2f, [256]] # 11 (P3/8-small)

  - [-1, 1, Conv, [256, 3, 2]] # 12
  - [[-1, 8], 1, Concat, [1]] # 13 cat head P4
  - [-1, 3, C2f, [512]] # 14 (P4/16-medium)

  - [-1, 1, Conv, [512, 3, 2]] # 15
  - [[-1, 5], 1, Concat, [1]] # 16 cat head P5
  - [-1, 3, C2f, [1024]] # 17 (P5/32-large)

  - [[11, 14, 17], 1, Segment, [nc, 32, 256]] # Segment(P3, P4, P5)

Tip: this article only adds a module on top of YOLOv8. To apply it to YOLOv8n/s/m/l/x, you only need to specify the corresponding depth_multiple, width_multiple and max_channels:

# YOLOv8n
depth_multiple: 0.33 # model depth multiple
width_multiple: 0.25 # layer channel multiple
max_channels: 1024 # max_channels

# YOLOv8s
depth_multiple: 0.33 # model depth multiple
width_multiple: 0.50 # layer channel multiple
max_channels: 1024 # max_channels

# YOLOv8m
depth_multiple: 0.67 # model depth multiple
width_multiple: 0.75 # layer channel multiple
max_channels: 768 # max_channels

# YOLOv8l
depth_multiple: 1.0 # model depth multiple
width_multiple: 1.0 # layer channel multiple
max_channels: 512 # max_channels

# YOLOv8x
depth_multiple: 1.00 # model depth multiple
width_multiple: 1.25 # layer channel multiple
max_channels: 512 # max_channels

2.4 Register the module

Key step 4: register CSWin Transformer in the parse_model function of tasks.py (ultralytics/nn/tasks.py)

elif m in {
    CSWin_tiny,
    CSWin_small,
    CSWin_base,
    CSWin_large,
}:
    m = m(*args)
    c2 = m.channel  # list of output channels of the four backbone stages

2.5 Run the program

In train.py, set the model path to the location of yolov8_cswin.yaml.

Using an absolute path is recommended so that the file is always found.

from ultralytics import YOLO

# Load a model

# model = YOLO('yolov8n.yaml') # build a new model from YAML

# model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

model = YOLO(r'/projects/ultralytics/ultralytics/cfg/models/v8/yolov8_cswin.yaml') # build from YAML and transfer weights

# Train the model

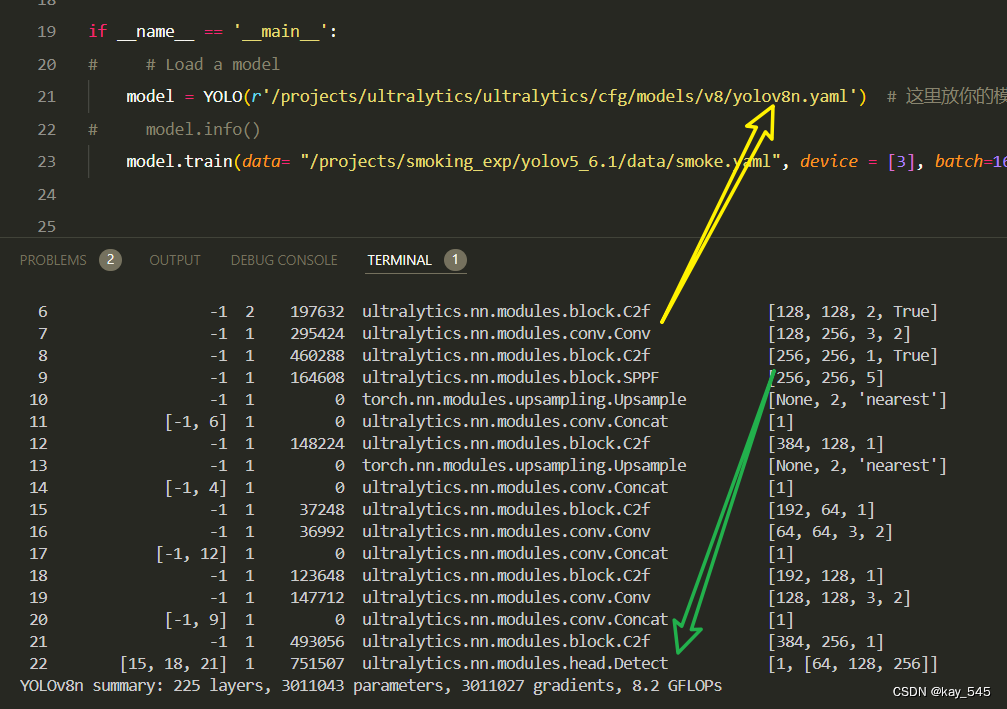

model.train()🚀运行程序,如果出现下面的内容则说明添加成功🚀

                   from  n    params  module                                       arguments
  0                  -1  1  21806528  CSWin_tiny                                   []
  1                  -1  1    394240  ultralytics.nn.modules.block.SPPF            [512, 256, 5]
  2                  -1  1         0  torch.nn.modules.upsampling.Upsample         [None, 2, 'nearest']
  3             [-1, 3]  1         0  ultralytics.nn.modules.conv.Concat           [1]
  4                  -1  1    164608  ultralytics.nn.modules.block.C2f             [512, 128, 1]
  5                  -1  1         0  torch.nn.modules.upsampling.Upsample         [None, 2, 'nearest']
  6             [-1, 2]  1         0  ultralytics.nn.modules.conv.Concat           [1]
  7                  -1  1     41344  ultralytics.nn.modules.block.C2f             [256, 64, 1]
  8                  -1  1     36992  ultralytics.nn.modules.conv.Conv             [64, 64, 3, 2]
  9             [-1, 8]  1         0  ultralytics.nn.modules.conv.Concat           [1]
 10                  -1  1    123648  ultralytics.nn.modules.block.C2f             [192, 128, 1]
 11                  -1  1    147712  ultralytics.nn.modules.conv.Conv             [128, 128, 3, 2]
 12             [-1, 5]  1         0  ultralytics.nn.modules.conv.Concat           [1]
 13                  -1  1    493056  ultralytics.nn.modules.block.C2f             [384, 256, 1]
 14        [11, 14, 17]  1    897664  ultralytics.nn.modules.head.Detect           [80, [64, 128, 256]]
YOLOv8_cswin summary: 626 layers, 24105792 parameters, 24105776 gradients

3. Complete code

https://pan.baidu.com/s/1aAgi8N8Y4hGOSeJozdO8FQ?pwd=bk6r (extraction code: bk6r)

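4. GFLOPs

For how GFLOPs are calculated, see the article "Algorithm Engineer Interview Series (百面算法工程师) | Convolution Basics: Convolution".

GFLOPs of the unmodified YOLOv8n

GFLOPs after the modification

I don't have a GPU available right now; I will fill this in once I do. If you need the numbers, please measure them yourself.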

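5. Going further

The module can be combined with other modules, loss functions, and so on to further improve detection performance.

6. Summary

CSWin Transformer improves the efficiency and performance of vision Transformers by introducing Cross-Shaped Window Self-Attention and Locally-enhanced Positional Encoding (LePE). Its core idea is to partition the input feature map into horizontal and vertical stripes and compute self-attention within these stripes in parallel, which enlarges the attention range while reducing computational complexity. LePE, in turn, adds a depthwise convolution of the values (V) to the self-attention output, strengthening the representation of local features. In addition, CSWin Transformer uses a hierarchical structure in which convolutional layers downsample between stages, progressively shrinking the feature map and increasing the number of channels to extract multi-scale features. This design improves long-range dependency modeling while staying computationally efficient, so CSWin Transformer performs well on image classification, object detection, semantic segmentation, and other tasks.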
