Paper: [1708.06519] Learning Efficient Convolutional Networks through Network Slimming (arxiv.org)

Abstract

The deployment of deep convolutional neural networks (CNNs) in many real-world applications is largely hindered by their high computational cost. In this paper, we propose a novel learning scheme for CNNs that simultaneously 1) reduces the model size, 2) decreases the run-time memory footprint, and 3) lowers the number of computing operations, without compromising accuracy. This is achieved by enforcing channel-level sparsity in the network in a simple but effective way. Unlike many existing approaches, the proposed method applies directly to modern CNN architectures, introduces minimal overhead to the training process, and requires no special software/hardware accelerators for the resulting models. We call our approach network slimming. It takes wide and large networks as input models. During training, insignificant channels are automatically identified, and they are pruned afterwards, yielding thin and compact models with comparable accuracy. We empirically demonstrate the effectiveness of our approach with several state-of-the-art CNN models, including VGGNet, ResNet and DenseNet, on various image classification datasets. For VGGNet, a multi-pass version of network slimming gives a 20× reduction in model size and a 5× reduction in computing operations.

1. Introduction

In recent years, convolutional neural networks (CNNs) have become the dominant approach for a variety of computer vision tasks, e.g., image classification [22], object detection [8], and semantic segmentation [26]. Large-scale datasets, high-end modern GPUs and new network architectures have enabled the development of unprecedentedly large CNN models. For instance, from AlexNet [22], VGGNet [31] and GoogleNet [34] to ResNets [14], the winning models of the ImageNet Classification Challenge have grown from 8 layers to more than 100 layers.

However, larger CNNs have stronger representation power, but they are also more resource-hungry. For instance, a 152-layer ResNet [14] has more than 60 million parameters. It requires more than 20 giga floating-point operations (FLOPs) to run inference on a 224×224 image. This is unlikely to be affordable on resource-constrained platforms such as mobile devices, wearables, or Internet of Things (IoT) devices.

The deployment of CNNs in real-world applications is mostly constrained by three factors.

1) Model size: CNNs' strong representation power comes from their millions of trainable parameters. Those parameters, along with network structure information, need to be stored on disk and loaded into memory at inference time. As an example, storing a typical CNN trained on ImageNet takes more than 300MB of space, which is a heavy resource burden for embedded devices.

2) Run-time memory: During inference, the intermediate activations/responses of CNNs can take even more memory than the model parameters, even with batch size 1. This is not a problem for high-end GPUs, but it is unaffordable for many applications with low computational power.

3) Number of computing operations: Convolution operations are computationally intensive on high-resolution images. A large CNN may take several minutes to process a single image on a mobile device, which makes it unrealistic to adopt for real applications.

Many works have been proposed to compress large CNNs or directly learn more efficient CNN models for fast inference. These include low-rank approximation [7], network quantization [3, 12] and binarization [28, 6], weight pruning [12], dynamic inference [16], etc. However, most of these methods can only address one or two challenges mentioned above. Moreover, some of the techniques require specially designed software/hardware accelerators for execution speedup [28, 6, 12].

Another direction for reducing the resource consumption of large CNNs is to sparsify the network. Sparsity can be imposed on structures at different levels [2, 37, 35, 29, 25], which yields considerable model-size compression and inference speedup. However, these approaches generally still require special software/hardware accelerators to realize the memory or time savings. This is easier than with the unstructured sparse weight matrices of [12], but it remains a requirement.

In this paper, we propose network slimming, a simple yet effective network training scheme. It addresses all the aforementioned challenges of deploying large CNNs under limited resources. Our approach imposes L1 regularization on the scaling factors in batch normalization (BN) layers, so it is easy to implement and requires no change to existing CNN architectures. Each scaling factor corresponds to a specific convolutional channel (or a neuron in a fully-connected layer). Pushing the BN scaling factors towards zero with L1 regularization therefore lets us identify insignificant channels (or neurons). This enables channel-level pruning in the subsequent step. The additional regularization term rarely hurts performance; in fact, in some cases it leads to higher generalization accuracy. Pruning unimportant channels may sometimes degrade performance temporarily, but subsequent fine-tuning of the pruned network can compensate for this effect. After pruning, the resulting narrower network is much more compact than the initial wide network in model size, run-time memory, and computing operations. The above process can be repeated several times. This yields a multi-pass network slimming scheme that produces an even more compact network.

Experiments on several benchmark datasets and different network architectures show that we can obtain CNN models with up to 20x model-size compression and a 5x reduction in computing operations compared with the original models, while achieving the same or even higher accuracy. Moreover, our method achieves model compression and inference speedup with conventional hardware and deep learning software packages. This is because the resulting narrower model needs no sparse storage format or sparse computing operations.

2. Related Work

In this section, we discuss related work from five aspects.

Low-rank Decomposition approximates the weight matrices of a neural network with low-rank matrices, using techniques such as Singular Value Decomposition (SVD) [7]. This method works especially well on fully-connected layers, yielding ∼3x model-size compression. However, it gives no notable speedup, since the computing operations in CNNs mainly come from convolutional layers.
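
The storage arithmetic behind low-rank decomposition can be sketched as follows. The sketch assumes an m×n fully-connected weight matrix W is replaced by two factors U (m×r) and V (r×n), so r(m + n) numbers are stored instead of mn. The layer size and rank below are hypothetical and chosen only so the numbers are easy to check; they are not the setting used in [7].

```python
# Illustrative sketch; all function names and sizes here are hypothetical.


def dense_params(m: int, n: int) -> int:
    """Number of stored weights in a dense m x n matrix."""
    return m * n


def low_rank_params(m: int, n: int, r: int) -> int:
    """Stored weights for a rank-r factorization W ~= U @ V,
    where U has shape (m, r) and V has shape (r, n)."""
    return r * (m + n)


def compression_ratio(m: int, n: int, r: int) -> float:
    """How many times smaller the factorized layer is than the dense one."""
    return dense_params(m, n) / low_rank_params(m, n, r)


if __name__ == "__main__":
    # Hypothetical 4096 x 4096 fully-connected layer kept at rank 512.
    m, n, r = 4096, 4096, 512
    print(dense_params(m, n))            # 16777216
    print(low_rank_params(m, n, r))      # 4194304
    print(compression_ratio(m, n, r))    # 4.0
```

The ratio describes storage only. The multiply-adds of the convolutional layers, which dominate CNN compute, are untouched.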

Weight Quantization. HashNet [3] proposes to quantize the network weights. Before training, network weights are hashed into different groups, and all weights within a group share one value. Only the shared weights and the hash indices then need to be stored, which saves a large amount of storage space. [12] uses an improved quantization technique in a deep compression pipeline and achieves 35x to 49x compression rates on AlexNet and VGGNet. However, these techniques save neither run-time memory nor inference time, because the shared weights must be restored to their original positions during inference.
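
A toy sketch of the weight-sharing idea described above. It assumes a simple deterministic hash from a weight's (row, column) position to one of K buckets. HashNet's actual hash function and training procedure are not reproduced here, and the helper names `bucket_of` and `expand_shared_weights` are hypothetical. For simplicity, the bucket index is recomputed from the position instead of being stored.

```python
# Illustrative sketch; bucket_of and expand_shared_weights are hypothetical helpers.
from typing import List


def bucket_of(i: int, j: int, num_buckets: int) -> int:
    """Hypothetical deterministic hash of weight position (i, j) into a bucket.

    Not HashNet's hash function; any cheap, fixed mapping serves for illustration.
    """
    return (i * 73856093 ^ j * 19349663) % num_buckets


def expand_shared_weights(shared: List[float], rows: int, cols: int) -> List[List[float]]:
    """Rebuild the full 'virtual' weight matrix from the K shared values.

    Each position reads the value of the bucket it hashes to, so the dense
    matrix has to be reconstructed before it can be used at inference time.
    """
    k = len(shared)
    return [[shared[bucket_of(i, j, k)] for j in range(cols)] for i in range(rows)]


if __name__ == "__main__":
    shared = [0.5, -0.25, 1.0]          # only these 3 values are stored
    W = expand_shared_weights(shared, rows=4, cols=5)
    print(len(shared), "stored values ->", sum(len(row) for row in W), "weights")
    # 3 stored values -> 20 weights
```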

[28, 6] quantize real-valued weights into binary/ternary weights (weight values restricted to {−1, 1} or {−1, 0, 1}). This yields a large amount of model-size saving, and significant speedup could also be obtained given bitwise operation libraries. However, this aggressive low-bit approximation method usually comes with a moderate accuracy loss.
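
A minimal sketch of the two restricted weight sets mentioned above. Binarization keeps only the sign of each weight. The ternary version also zeroes any weight whose magnitude is at most a threshold `delta`, which is a hypothetical parameter here. The methods in [28, 6] choose thresholds and per-layer scaling factors in their own ways, and those details are not reproduced.

```python
# Illustrative sketch; binarize, ternarize and delta are hypothetical names.
from typing import List


def binarize(weights: List[float]) -> List[int]:
    """Map each real-valued weight to {-1, +1} by its sign (0 maps to +1)."""
    return [1 if w >= 0 else -1 for w in weights]


def ternarize(weights: List[float], delta: float) -> List[int]:
    """Map each weight to {-1, 0, +1}: 0 if |w| <= delta, else its sign."""
    return [0 if abs(w) <= delta else (1 if w > 0 else -1) for w in weights]


if __name__ == "__main__":
    w = [0.8, -0.05, 0.02, -0.6, 0.3]
    print(binarize(w))          # [1, -1, 1, -1, 1]
    print(ternarize(w, 0.1))    # [1, 0, 0, -1, 1]
```

Each stored weight then needs 1 bit (binary) or 2 bits (ternary) instead of 32, which is where the model-size saving comes from. The speedup additionally requires bitwise operation kernels.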

Weight Pruning / Sparsifying. [12] proposes to prune unimportant connections with small weights in trained neural networks. Most of the resulting network's weights are zeros, so storing the model in a sparse format reduces the storage space. However, these methods can only achieve speedup with dedicated sparse matrix operation libraries and/or hardware. The run-time memory saving is also very limited, because most memory is consumed by the activation maps (which are still dense) rather than the weights.
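
A minimal sketch of unstructured, magnitude-based pruning as described above. It assumes a single fixed magnitude threshold, which is a hypothetical simplification; [12] sets thresholds per layer and retrains. The surviving weights end up at scattered positions. Storing them as a coordinate (COO) list saves space, but a dense kernel cannot skip the zeros, and the activations stay dense.

```python
# Illustrative sketch; magnitude_prune and to_coo are hypothetical helpers.
from typing import List, Tuple


def magnitude_prune(weights: List[List[float]], threshold: float) -> List[List[float]]:
    """Zero out every individual weight whose magnitude is below `threshold`."""
    return [[w if abs(w) >= threshold else 0.0 for w in row] for row in weights]


def to_coo(weights: List[List[float]]) -> List[Tuple[int, int, float]]:
    """Store only the non-zero entries as (row, col, value) triples."""
    return [(i, j, w) for i, row in enumerate(weights)
            for j, w in enumerate(row) if w != 0.0]


if __name__ == "__main__":
    W = [[0.9, 0.01, -0.02],
         [0.03, -0.7, 0.0],
         [0.04, 0.05, 0.6]]
    print(to_coo(magnitude_prune(W, threshold=0.1)))
    # [(0, 0, 0.9), (1, 1, -0.7), (2, 2, 0.6)]
```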

In [12], there is no guidance for sparsity during training. [32] overcomes this limitation by explicitly imposing a sparse constraint over each weight with additional gate variables. It achieves high compression rates by pruning connections whose gate values are zero. This method achieves a better compression rate than [12], but it suffers from the same drawback.

Structured Pruning / Sparsifying. Recently, [23] proposed pruning channels with small incoming weights in trained CNNs and then fine-tuning the network to regain accuracy. [2] introduces sparsity by randomly deactivating input-output channel-wise connections in convolutional layers before training. This also yields smaller networks, with moderate accuracy loss. Compared with these works, we explicitly impose channel-wise sparsity in the optimization objective during training. This leads to a smoother channel pruning process and little accuracy loss.

[37] imposes neuron-level sparsity during training, so that some neurons can be pruned to obtain compact networks. [35] proposes a Structured Sparsity Learning (SSL) method to sparsify structures at different levels (e.g., filters, channels or layers) in CNNs. Both methods use group sparsity regularization during training to obtain structured sparsity. Instead of applying group sparsity to convolutional weights, our approach imposes simple L1 sparsity on channel-wise scaling factors, so the optimization objective is much simpler.

Since these methods prune or sparsify parts of the network structure (e.g., neurons, channels) instead of individual weights, they usually need fewer specialized libraries (e.g., for sparse computing operations) to achieve inference speedup and run-time memory savings. Our network slimming also falls into this category, and it needs no special libraries at all to obtain the benefits.

Neural Architecture Learning. State-of-the-art CNNs are typically designed by experts [22, 31, 14], but there have also been some explorations of automatically learning network architectures. [20] introduces sub-modular/super-modular optimization for network architecture search under a given resource budget. Some recent works [38, 1] propose to learn neural architectures automatically with reinforcement learning. The search space of these methods is extremely large, so hundreds of models must be trained to distinguish good architectures from bad ones. Network slimming can also be treated as an approach to architecture learning, although its choices are limited to the width of each layer. However, in contrast to the aforementioned methods, network slimming learns the network architecture through only a single training process, which is in line with our goal of efficiency.

3. Network slimming

We aim to provide a simple scheme for achieving channel-level sparsity in deep CNNs. In this section, we first discuss the advantages and challenges of channel-level sparsity. We then show how we leverage the scaling layers in batch normalization to effectively identify and prune unimportant channels in the network.

Advantages of Channel-level Sparsity. As discussed in prior works [35, 23, 11], sparsity can be realized at different levels, e.g., weight-level, kernel-level, channel-level or layer-level. Fine-grained (e.g., weight-level) sparsity gives the highest flexibility and generality, leading to a higher compression rate. However, it usually requires special software or hardware accelerators for fast inference on the sparsified model [11]. At the other extreme, the coarsest, layer-level sparsity needs no special packages to realize the inference speedup, but it is less flexible because whole layers must be pruned. In fact, removing layers is only effective when the network is sufficiently deep, e.g., more than 50 layers [35, 18]. In comparison, channel-level sparsity provides a good tradeoff between flexibility and ease of implementation. It can be applied to any typical CNN or fully-connected network (treating each neuron as a channel). The resulting network is essentially a “thinned” version of the unpruned network, which can be run efficiently for inference on conventional CNN platforms.

Challenges. Achieving channel-level sparsity requires pruning all the incoming and outgoing connections associated with a channel. This makes directly pruning weights on a pre-trained model ineffective, because it is unlikely that all the weights at the input or output end of a channel happen to be near zero. As reported in [23], pruning channels on pre-trained ResNets can only reduce the number of parameters by ∼10% without accuracy loss. [35] addresses this problem by adding sparsity regularization to the training objective. Specifically, they adopt group LASSO to push all the filter weights corresponding to the same channel towards zero simultaneously during training. However, this approach requires computing the gradients of the additional regularization term with respect to all the filter weights, which is nontrivial. We introduce a simple idea to address these challenges, as detailed below.
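
To contrast the two regularizers, here is a minimal sketch. Group LASSO as used in [35] sums, over channels, the L2 norm of all filter weights tied to each channel, so its gradient touches every one of those weights. The penalty this paper introduces below acts on a single scalar per channel. The weights are hypothetical, and grouping a channel's weights into one flat list is a simplifying assumption.

```python
# Illustrative sketch; group_lasso, group_lasso_grad, l1_on_scales are hypothetical names.
import math
from typing import List


def group_lasso(filters: List[List[float]]) -> float:
    """Sum over channels of the L2 norm of that channel's filter weights."""
    return sum(math.sqrt(sum(w * w for w in f)) for f in filters)


def group_lasso_grad(filters: List[List[float]]) -> List[List[float]]:
    """Gradient of group_lasso w.r.t. every weight: w / ||group||.

    Zero groups get 0.0, which is one valid subgradient choice.
    """
    grads = []
    for f in filters:
        norm = math.sqrt(sum(w * w for w in f))
        grads.append([w / norm if norm > 0 else 0.0 for w in f])
    return grads


def l1_on_scales(gammas: List[float]) -> float:
    """L1 penalty on one scaling factor per channel."""
    return sum(abs(g) for g in gammas)


if __name__ == "__main__":
    filters = [[3.0, 4.0], [0.0, 1.0]]   # 2 channels, 2 weights each
    print(group_lasso(filters))         # 6.0
    print(group_lasso_grad(filters))    # [[0.6, 0.8], [0.0, 1.0]]
    print(l1_on_scales([0.5, -0.25]))   # 0.75
```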

Scaling Factors and Sparsity-induced Penalty. Our idea is to introduce a scaling factor γ for each channel, which multiplies the output of that channel. We then jointly train the network weights and these scaling factors, with sparsity regularization imposed on the latter. Finally, we prune the channels with small factors and fine-tune the pruned network. Specifically, the training objective of our approach is

$$L = \sum_{(x,y)} l\big(f(x, W), y\big) + \lambda \sum_{\gamma \in \Gamma} g(\gamma) \tag{1}$$

where (x, y) denotes a training input and its target, W denotes the trainable weights, and Γ is the set of all channel scaling factors. The first sum-term is the normal training loss of a CNN, g(·) is a sparsity-induced penalty on the scaling factors, and λ balances the two terms. In our experiments, we choose g(s) = |s|, which is known as the L1-norm and is widely used to achieve sparsity. We use subgradient descent to optimize the non-smooth L1 penalty term. Alternatively, the L1 penalty can be replaced with the smooth-L1 penalty [30] to avoid using a subgradient at the non-smooth point.
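
A minimal sketch of how the L1 term in Equation (1) changes a plain gradient step on the scaling factors. The gradient of the training loss with respect to each γ is taken as given (it would come from backpropagation), and sign(γ) is used as the subgradient of |γ|, choosing 0 at γ = 0. In a framework such as PyTorch, the analogous step would typically add λ·sign(γ) to each BN layer's weight gradient after the backward pass. That framework wiring is an assumption and is not shown; all helper names are hypothetical.

```python
# Illustrative sketch; all helper names are hypothetical.
from typing import List


def l1_subgradient(x: float) -> float:
    """A subgradient of |x|: sign(x), choosing 0 at the non-smooth point x = 0."""
    return float((x > 0) - (x < 0))


def sparsity_penalty(gammas: List[float], lam: float) -> float:
    """Second term of Equation (1) with g(s) = |s|: lam * sum of |gamma|."""
    return lam * sum(abs(g) for g in gammas)


def sgd_step_with_l1(gammas: List[float], loss_grads: List[float],
                     lam: float, lr: float) -> List[float]:
    """One subgradient-descent step on the scaling factors.

    loss_grads[i] is d(training loss)/d(gamma_i) from backpropagation; the
    L1 term adds lam * sign(gamma_i) on top of it.
    """
    return [g - lr * (dg + lam * l1_subgradient(g))
            for g, dg in zip(gammas, loss_grads)]


if __name__ == "__main__":
    gammas = [0.5, -0.5, 0.0]
    # A zero loss gradient isolates the penalty's effect; lam and lr are
    # unrealistically large here only to keep the arithmetic readable.
    print(sparsity_penalty(gammas, lam=0.5))                           # 0.5
    print(sgd_step_with_l1(gammas, [0.0, 0.0, 0.0], lam=0.5, lr=0.5))  # [0.25, -0.25, 0.0]
```

The penalty pulls every non-zero γ towards zero by the same amount per step, regardless of its size. Only channels whose loss gradient pushes back keep a large scaling factor.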

Pruning a channel essentially corresponds to removing all the incoming and outgoing connections of that channel. We can therefore directly obtain a narrow network (see Figure 1) without any special sparse computation packages. The scaling factors act as agents for channel selection. Because they are jointly optimized with the network weights, the network can automatically identify insignificant channels. These channels can be safely removed without greatly affecting generalization performance.
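
A minimal sketch of what removing a channel's incoming and outgoing connections means for two consecutive layers. It assumes both layers are 1×1 convolutions (equivalently, fully-connected layers), so their weights are plain matrices. For a k×k convolution, the same indexing applies to the output-channel and input-channel axes of the 4-D kernel. The helper names are hypothetical. In a real network, the channel's BN parameters (γ, β and running statistics) would be dropped as well.

```python
# Illustrative sketch; prune_between and matvec are hypothetical helpers.
from typing import List, Tuple

Matrix = List[List[float]]


def prune_between(w1: Matrix, w2: Matrix, keep: List[int]) -> Tuple[Matrix, Matrix]:
    """Drop every channel not in `keep` between layer l and layer l+1.

    w1: layer l weights, shape (c_out, c_in). Row c holds channel c's
        incoming connections, so pruned channels lose their rows.
    w2: layer l+1 weights, shape (c_next, c_out). Column c holds channel c's
        outgoing connections, so pruned channels lose their columns.
    """
    new_w1 = [w1[c] for c in keep]
    new_w2 = [[row[c] for c in keep] for row in w2]
    return new_w1, new_w2


def matvec(w: Matrix, x: List[float]) -> List[float]:
    """Dense matrix-vector product (a 1x1 convolution at one spatial position)."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


if __name__ == "__main__":
    w1 = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]   # 3 channels, 2 inputs
    w2 = [[1.0, 0.5, 2.0], [3.0, -1.0, 4.0]]    # 2 outputs, 3 channels
    x = [1.0, 1.0]

    # Wide network, with channel 1's activation assumed to be exactly zero.
    h = matvec(w1, x)
    h[1] = 0.0
    wide = matvec(w2, h)

    # Thin network: channel 1 and all of its connections are gone.
    t1, t2 = prune_between(w1, w2, keep=[0, 2])
    thin = matvec(t2, matvec(t1, x))
    print(wide, thin)   # [25.0, 53.0] [25.0, 53.0]
```

The thin network reproduces the wide one exactly only when the removed channel's activation is exactly zero. Under BN, a channel whose γ is near zero outputs roughly the constant β (see the BN transformation below), so removal is approximate and is followed by fine-tuning.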

Leveraging the Scaling Factors in BN Layers. Batch normalization [19] has been adopted by most modern CNNs as a standard approach to achieve fast convergence and better generalization performance. The way BN normalizes activations motivated us to design a simple and efficient method for incorporating the channel-wise scaling factors. Specifically, a BN layer normalizes the internal activations using mini-batch statistics. Let $z_{in}$ and $z_{out}$ be the input and output of a BN layer, and let $\mathcal{B}$ denote the current mini-batch. The BN layer performs the following transformation:

$$\hat{z} = \frac{z_{in} - \mu_{\mathcal{B}}}{\sqrt{\sigma_{\mathcal{B}}^2 + \epsilon}}; \qquad z_{out} = \gamma \hat{z} + \beta \tag{2}$$

where $\mu_{\mathcal{B}}$ and $\sigma_{\mathcal{B}}$ are the mean and standard deviation of the input activations over $\mathcal{B}$, and $\epsilon$ is a small constant for numerical stability. γ and β are trainable affine transformation parameters (scale and shift) that make it possible to linearly transform the normalized activations back to any scale.
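
A minimal sketch of Equation (2) for a single channel over one mini-batch, written in plain Python. It shows why γ works as a per-channel importance knob: γ multiplies the normalized activation directly, so a channel with γ = 0 emits the constant β whatever its input. The default `eps = 1e-5` is an assumed value, and `batch_norm_channel` is a hypothetical helper. The variance is the biased mini-batch variance used for normalization during training.

```python
# Illustrative sketch; batch_norm_channel is a hypothetical helper, eps is assumed.
import math
from typing import List


def batch_norm_channel(z_in: List[float], gamma: float, beta: float,
                       eps: float = 1e-5) -> List[float]:
    """Equation (2) for one channel over a mini-batch B.

    z_hat = (z_in - mu_B) / sqrt(sigma_B^2 + eps);  z_out = gamma * z_hat + beta
    """
    n = len(z_in)
    mu = sum(z_in) / n
    var = sum((z - mu) ** 2 for z in z_in) / n   # biased mini-batch variance
    return [gamma * (z - mu) / math.sqrt(var + eps) + beta for z in z_in]


if __name__ == "__main__":
    z = [1.0, 2.0, 3.0]
    print(batch_norm_channel(z, gamma=0.0, beta=0.5))   # [0.5, 0.5, 0.5]
    print(batch_norm_channel(z, gamma=1.0, beta=0.0))   # roughly [-1.22, 0.0, 1.22]
```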

It is common practice to insert a BN layer, with channel-wise scaling/shifting parameters, after a convolutional layer. We can therefore directly use the γ parameters in BN layers as the scaling factors needed for network slimming. This has the great advantage of adding no overhead to the network. In fact, it is perhaps also the most effective way to learn meaningful scaling factors for channel pruning, for three reasons. 1) If we add scaling layers to a CNN without BN layers, the values of the scaling factors are not meaningful for evaluating the importance of a channel. Convolution layers and scaling layers are both linear transformations, so the same results can be obtained by decreasing the scaling factor values while amplifying the weights in the convolution layers. 2) If we insert a scaling layer before a BN layer, the normalization in BN completely cancels the effect of the scaling layer. 3) If we insert a scaling layer after a BN layer, each channel has two consecutive scaling factors.
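
A small numeric check of arguments 1) and 2) above. It uses a single channel, a "convolution" reduced to multiplication by one weight, and hypothetical values. It is a sketch of the reasoning, not of the paper's code.

```python
# Illustrative sketch; conv_then_scale and normalize are hypothetical helpers.
import math
from typing import List


def conv_then_scale(x: List[float], w: float, s: float) -> List[float]:
    """A 1-channel 'convolution' (multiply by w) followed by a scaling layer s."""
    return [s * (w * xi) for xi in x]


def normalize(z: List[float], eps: float = 0.0) -> List[float]:
    """BN without its affine part: (z - mean) / sqrt(var + eps)."""
    n = len(z)
    mu = sum(z) / n
    var = sum((v - mu) ** 2 for v in z) / n
    return [(v - mu) / math.sqrt(var + eps) for v in z]


if __name__ == "__main__":
    x = [1.0, 2.0, 4.0]
    # Case 1, no BN: shrinking s 10x while growing w 10x changes nothing,
    # so the magnitude of s alone says nothing about the channel's importance.
    a = conv_then_scale(x, w=0.5, s=2.0)
    b = conv_then_scale(x, w=5.0, s=0.2)
    print(all(math.isclose(p, q) for p, q in zip(a, b)))   # True
    # Case 2: a scaling applied *before* BN is cancelled by the normalization.
    scaled = [2.0 * v for v in x]
    print(all(math.isclose(p, q) for p, q in zip(normalize(scaled), normalize(x))))  # True
```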

Channel Pruning and Fine-tuning. Training under channel-level sparsity-induced regularization yields a model in which many scaling factors are near zero (see Figure 1). We can then prune the channels with near-zero scaling factors by removing all their incoming and outgoing connections and the corresponding weights. We prune channels with a global threshold across all layers, defined as a certain percentile of all the scaling factor values. For instance, setting the percentile threshold to 70% prunes the 70% of channels with the lowest scaling factors. The result is a more compact network with fewer parameters, less run-time memory, and fewer computing operations.
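
A minimal sketch of the global percentile threshold. It assumes the BN scaling factors have already been collected per layer into plain lists; the layer names and values are hypothetical. Because the threshold is shared across layers, the layers end up with different widths. Nothing in this sketch stops a layer from losing every channel, which a practical implementation would likely need to guard against.

```python
# Illustrative sketch; global_threshold, channel_masks and layer names are hypothetical.
from typing import Dict, List


def global_threshold(gammas: Dict[str, List[float]], percent: float) -> float:
    """|gamma| value at the given percentile, pooled over *all* layers."""
    pooled = sorted(abs(g) for layer in gammas.values() for g in layer)
    k = min(int(len(pooled) * percent), len(pooled) - 1)  # index of first kept value
    return pooled[k]


def channel_masks(gammas: Dict[str, List[float]], percent: float) -> Dict[str, List[bool]]:
    """True means keep: |gamma| is at or above the shared global threshold."""
    t = global_threshold(gammas, percent)
    return {name: [abs(g) >= t for g in layer] for name, layer in gammas.items()}


if __name__ == "__main__":
    gammas = {  # hypothetical trained BN scaling factors
        "conv1": [0.9, 0.01, 0.02, 0.03],
        "conv2": [0.04, 0.8, 0.05, 0.7, 0.5, 0.6],
    }
    print(global_threshold(gammas, 0.5))   # 0.5
    print(channel_masks(gammas, 0.5))
    # {'conv1': [True, False, False, False], 'conv2': [False, True, False, True, True, True]}
```

Pruning 50% globally here keeps 1 of 4 channels in `conv1` but 4 of 6 in `conv2`. This is how a single threshold lets the per-layer widths be learned rather than fixed.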

When the pruning ratio is high, pruning may temporarily cause some accuracy loss. Subsequent fine-tuning of the pruned network can largely compensate for this loss. In our experiments, the fine-tuned narrow network often achieves even higher accuracy than the original unpruned network.

Multi-pass Scheme. We can also extend the proposed method from a single-pass learning scheme (training with sparsity regularization, pruning, and fine-tuning) to a multi-pass scheme. Specifically, one network slimming procedure produces a narrow network, to which we can apply the whole training procedure again to learn an even more compact model. This is illustrated by the dotted line in Figure 2. Experimental results show that the multi-pass scheme achieves even better compression rates.
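
The single-pass and multi-pass schemes differ only in how many times the same three stages run, which can be sketched as a loop. The stage functions `train_sparse`, `prune` and `finetune` are hypothetical placeholders supplied by the caller. The toy usage below represents a "model" as a list of layer widths and halves each width per pass. It is only a stand-in, not a result from the paper.

```python
# Illustrative sketch; network_slimming and its stage callables are hypothetical.
from typing import Callable, List, TypeVar

Model = TypeVar("Model")


def network_slimming(model: Model,
                     train_sparse: Callable[[Model], Model],
                     prune: Callable[[Model], Model],
                     finetune: Callable[[Model], Model],
                     passes: int = 1) -> Model:
    """Single-pass (passes=1) or multi-pass network slimming.

    Each pass trains with the L1 penalty on the scaling factors, prunes
    low-|gamma| channels, then fine-tunes; the next pass starts from the
    narrow network produced by the previous one.
    """
    for _ in range(passes):
        model = prune(train_sparse(model))
        model = finetune(model)
    return model


if __name__ == "__main__":
    widths: List[int] = [64, 128]                       # toy stand-in for a model
    halve = lambda m: [max(1, w // 2) for w in m]       # toy pruning rule
    same = lambda m: m                                  # training is a no-op here
    print(network_slimming(widths, same, halve, same, passes=2))   # [16, 32]
```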

Handling Cross Layer Connections and Pre-activation Structure. The network slimming process introduced above applies directly to most plain CNN architectures, such as AlexNet [22] and VGGNet [31]. However, some adaptations are required for modern networks with cross-layer connections and a pre-activation design, such as ResNet [15] and DenseNet [17]. In these networks, the output of one layer may serve as the input of several subsequent layers, and the BN layer is placed before the convolutional layer. In this case, sparsity is achieved at the incoming end of a layer: the layer selectively uses a subset of the channels it receives. To realize the parameter and computation savings at test time, we place a channel selection layer that masks out the insignificant channels we have identified.
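
A minimal sketch of a channel selection layer for the pre-activation case described above. It assumes a feature map is stored as one list of activations per channel, and that the layer sits right after a BN layer whose input is shared with other layers (e.g., a shortcut), so the producing layer cannot simply be thinned. The class name `ChannelSelection` and its mask argument are hypothetical.

```python
# Illustrative sketch; ChannelSelection is a hypothetical class, not a library API.
from typing import List

FeatureMap = List[List[float]]   # one list of activations per channel


class ChannelSelection:
    """Keeps only the channels whose mask entry is True.

    Placed after a BN layer whose input is shared with other layers; the
    following convolution then only needs weights for the kept channels.
    """

    def __init__(self, mask: List[bool]) -> None:
        self.indices = [i for i, keep in enumerate(mask) if keep]

    def __call__(self, x: FeatureMap) -> FeatureMap:
        return [x[i] for i in self.indices]


if __name__ == "__main__":
    select = ChannelSelection([True, False, True])
    x = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]   # 3 channels, 2 positions each
    print(select(x))                           # [[1.0, 2.0], [5.0, 6.0]]
```

The shared feature map itself is left intact for the other layers that consume it. Only this consumer reads the reduced channel set, which is where the savings in the following convolution come from.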

5. Analysis

There are two crucial hyper-parameters in network slimming: the pruned percentage t and the coefficient λ of the sparsity regularization term (see Equation 1). In this section, we analyze their effects in more detail.

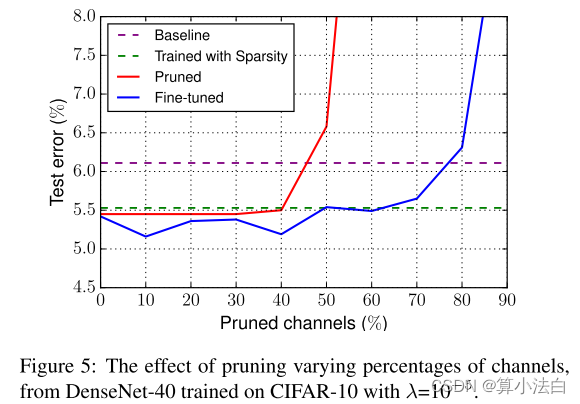

Effect of Pruned Percentage. Once we obtain a model trained with sparsity regularization, we need to decide what percentage of channels to prune from it. Pruning too few channels gives only limited resource savings. Pruning too many channels can be destructive to the model, and fine-tuning may not be able to recover the accuracy. To show the effect of pruning different percentages of channels, we train a DenseNet-40 model with λ = 10⁻⁵ on CIFAR-10. The results are summarized in Figure 5.

Figure 5 shows that the classification performance of the pruned or fine-tuned models degrades only when the pruning ratio exceeds a threshold. Fine-tuning can typically compensate for the accuracy loss that pruning may cause. The test error of the fine-tuned model falls behind the baseline only when the threshold goes beyond 80%. Notably, the model trained with sparsity performs better than the original model even without fine-tuning. This is possibly due to the regularization effect of L1 sparsity on the channel scaling factors.

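Figure 4: Distributions of scaling factors in a trained VGGNet under various degrees of sparsity regularization (controlled by the parameter λ). As λ increases, the scaling factors become sparser.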

Figure 5: The effect of pruning varying percentages of channels, for a DenseNet-40 trained on CIFAR-10 with λ = 10⁻⁵.

Channel Sparsity Regularization. The purpose of the L1 sparsity term is to force many of the scaling factors to be near zero. The parameter λ in Equation 1 controls its significance compared with the normal training loss. In Figure 4 we plot the distributions of scaling factors in the whole network with different λ values. For this experiment we use a VGGNet trained on CIFAR-10 dataset.

As λ increases, the scaling factors become more and more concentrated near zero. When λ = 0, i.e., with no sparsity regularization, the distribution is relatively flat. When λ = 10⁻⁴, almost all scaling factors fall into a small region near zero. This process can be seen as feature selection in the intermediate layers of deep networks, where only channels with non-negligible scaling factors are chosen. We further visualize this process with a heatmap. Figure 6 shows the magnitudes of the scaling factors from one layer of VGGNet over the course of training. Each channel starts with equal weights. As training progresses, some channels' scaling factors become larger (brighter) while others become smaller (darker).

6. Conclusion

We proposed the network slimming technique to learn more compact CNNs. It directly imposes sparsity-induced regularization on the scaling factors in batch normalization layers, so unimportant channels can be identified automatically during training and then pruned. On multiple datasets, we have shown that the proposed method can significantly decrease the computational cost (up to 20×) of state-of-the-art networks with no accuracy loss. More importantly, it simultaneously reduces model size, run-time memory, and computing operations while adding minimal overhead to training. The resulting models require no special libraries/hardware for efficient inference.

Acknowledgements. Gao Huang is supported by the International Postdoctoral Exchange Fellowship Program of China Postdoctoral Council (No.20150015). Changshui Zhang is supported by NSFC and DFG joint project NSFC 61621136008/DFG TRR-169.
