Previously:

Running a Model: ViT (Part 1), CSDN blog: https://blog.csdn.net/m0_74841987/article/details/144471350

1. Test Code

Last time we studied the ViT network architecture and ran a quick test, but never printed the prediction. That is now fixed.

The test code is:

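# Assumes `torch` is imported and the ViT class from Part 1 is defined above in the same file.
if __name__ == '__main__':
    v = ViT(
        image_size=224,    # input image size
        patch_size=16,     # each token/patch is 16x16 pixels
        num_classes=1000,  # number of classes
        dim=1024,          # embedding dimension expected by the encoder
        depth=6,           # number of encoder blocks
        heads=16,          # number of heads in multi-head attention
        mlp_dim=2048,      # hidden dimension of the MLP
        dropout=0.1,       # dropout rate inside the encoder
        emb_dropout=0.1    # the embedding is usually followed by a dropout
    )

    img = torch.randn(1, 3, 224, 224)
    preds = v(img)  # (1, 1000)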

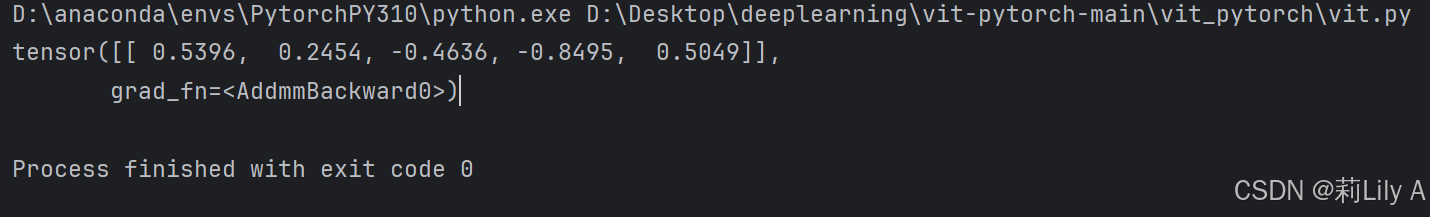

print(preds)我把num_classes改成了5,1000实在太大了,然后再把preds打印一下,运行结果是

解释一下这个运行结果:这个五个数字分别是输入图像是某一类的概率,为了更直观地看到结果,我们补充几行代码

preds = torch.softmax(preds, dim = -1)

preds_argmax = preds.argmax(dim=1)

print(preds)

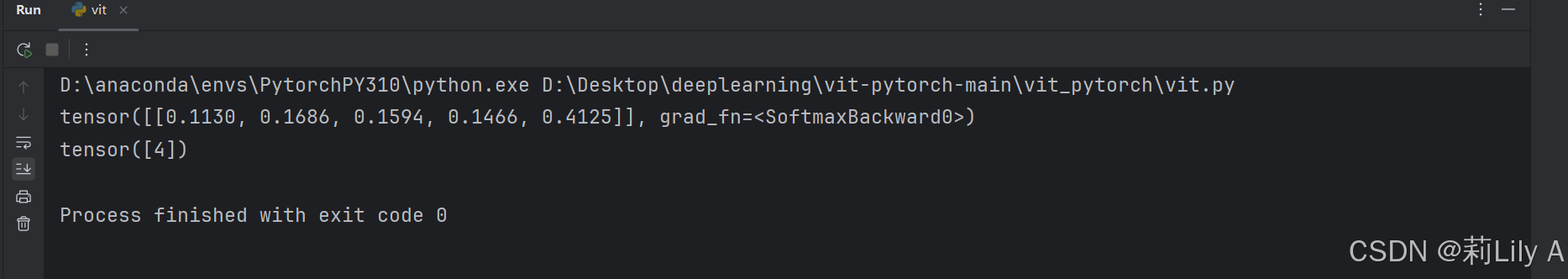

print(preds_argmax)运行结果是

说明这张图是类别4的概率最大。

ps:因为图像是随机生成的,所以每次运行结果都不一样。
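To see what the softmax and argmax steps do to the numbers, here is a small standard-library sketch. The logits are made up for illustration and are not taken from the run above; `softmax` is a hand-written helper, not the PyTorch function.

```python
import math


def softmax(logits):
    """Convert raw scores to probabilities that sum to 1."""
    # Subtract the max before exponentiating for numerical stability.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up logits for a 5-class model (illustrative values only).
logits = [0.2, -1.3, 0.5, 0.0, 1.1]
probs = softmax(logits)

# argmax: the index of the largest probability is the predicted class.
predicted_class = max(range(len(probs)), key=probs.__getitem__)

print([round(p, 4) for p in probs])
print(predicted_class)
```

Softmax keeps the ordering of the scores, so the argmax of the probabilities is the same as the argmax of the logits; the softmax step only makes the values readable as probabilities.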

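Complete test code:

# Assumes `torch` is imported and the ViT class from Part 1 is defined above in the same file.
if __name__ == '__main__':
    v = ViT(
        image_size=224,    # input image size
        patch_size=16,     # each token/patch is 16x16 pixels
        num_classes=5,     # number of classes
        dim=1024,          # embedding dimension expected by the encoder
        depth=6,           # number of encoder blocks
        heads=16,          # number of heads in multi-head attention
        mlp_dim=2048,      # hidden dimension of the MLP
        dropout=0.1,       # dropout rate inside the encoder
        emb_dropout=0.1    # the embedding is usually followed by a dropout
    )

    img = torch.randn(1, 3, 224, 224)
    preds = v(img)  # (1, 5)
    preds = torch.softmax(preds, dim=-1)
    preds_argmax = preds.argmax(dim=1)
    print(preds)
    print(preds_argmax)

2. Modifying the Code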

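The tests above ran without errors, so the code is probably fine. The author of this implementation also kindly provides a cats-vs-dogs binary classification example, which I will use as the basis for training a flower classifier.

Back up cats_and_dogs.ipynb by copying it to flower.ipynb, then edit the code in the new file.

Adjust device to match your own machine; mine has an AMD GPU rather than a CUDA-capable one.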
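One common way to choose the device without hard-coding it is sketched below. It assumes a standard PyTorch install: on a machine where PyTorch cannot see a supported GPU, it simply falls back to the CPU.

```python
import torch

# Use the GPU when PyTorch can see a supported one, otherwise fall back to the CPU.
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
print(f"Using device: {device}")

# Tensors (and models) are moved to the chosen device with .to(device).
x = torch.randn(1, 3, 224, 224).to(device)
print(x.device)
```

With this pattern the same notebook runs unchanged on machines with and without a usable GPU.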

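A word on the data directory layout. Prepare the dataset in advance: I split mine by hand at a 7:3 ratio into two folders, train and test, with five subfolders inside named after the flower species. Compress train and test into zip archives and place them at the same level as the data directory. The following code extracts them:

with zipfile.ZipFile('train.zip') as train_zip:
    train_zip.extractall('data')

with zipfile.ZipFile('test.zip') as test_zip:
    test_zip.extractall('data')

After it runs, the directory structure becomes:

data/
    train/
        class1/
            img1.jpg
            img2.jpg
            ...
        class2/
            img1.jpg
            img2.jpg
            ...
    test/
        img1.jpg
        img2.jpg
        ...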
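The 7:3 split was done by hand here, but it can also be scripted. The sketch below is one possible approach using only the standard library; `split_dataset` and the `flowers_raw` folder name are hypothetical, and unlike the tree above it keeps the class subfolders inside test/ as well. The demo runs on empty placeholder files in a temporary directory.

```python
import os
import random
import shutil
import tempfile


def split_dataset(src_dir, dst_dir, train_ratio=0.7, seed=42):
    """Copy src_dir/<class>/* into dst_dir/train/<class>/ and dst_dir/test/<class>/."""
    rng = random.Random(seed)
    for class_name in sorted(os.listdir(src_dir)):
        class_path = os.path.join(src_dir, class_name)
        if not os.path.isdir(class_path):
            continue
        files = sorted(os.listdir(class_path))
        rng.shuffle(files)  # shuffle so the split is not biased by file order
        n_train = round(len(files) * train_ratio)
        for subset, names in (('train', files[:n_train]), ('test', files[n_train:])):
            out_dir = os.path.join(dst_dir, subset, class_name)
            os.makedirs(out_dir, exist_ok=True)
            for name in names:
                shutil.copy2(os.path.join(class_path, name), os.path.join(out_dir, name))


# Demo on a throwaway directory with 10 placeholder files per class.
with tempfile.TemporaryDirectory() as tmp:
    src = os.path.join(tmp, 'flowers_raw')  # hypothetical source folder
    for cls in ('daisy', 'rose'):
        os.makedirs(os.path.join(src, cls))
        for i in range(10):
            open(os.path.join(src, cls, f'img{i}.jpg'), 'w').close()

    split_dataset(src, os.path.join(tmp, 'data'))
    n_train = len(os.listdir(os.path.join(tmp, 'data', 'train', 'daisy')))
    n_test = len(os.listdir(os.path.join(tmp, 'data', 'test', 'daisy')))
    print(n_train, n_test)
```

Splitting per class keeps the class proportions roughly the same in both subsets.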

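I adjusted the Load Data code to match my dataset's directory structure:

# Collect training image paths and labels
train_list = []
train_labels = []

# Walk the training subdirectories (one per class)
for class_dir in os.listdir(train_dir):
    class_path = os.path.join(train_dir, class_dir)
    if os.path.isdir(class_path):
        # All image paths for this class
        class_images = glob.glob(os.path.join(class_path, '*.jpg'))
        train_list.extend(class_images)
        train_labels.extend([class_dir] * len(class_images))  # the label is the subdirectory name

# Collect test image paths. This pattern only matches images directly under test_dir;
# if test/ is also organised into class subfolders, use os.path.join(test_dir, '*', '*.jpg').
test_list = glob.glob(os.path.join(test_dir, '*.jpg'))

print(f"Train Data: {len(train_list)}")
print(f"Test Data: {len(test_list)}")

labels = train_labels  # training labels have already been extracted from the directory names

The Load Datasets section also needs to be adapted to the directory structure.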
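Because the labels are folder names (strings) and the loss function needs integer class indices, the dataset has to map one to the other. A minimal sketch of that mapping follows; `build_class_to_idx` and `label_from_path` are hypothetical helper names, and `tulip` is only an assumed name for the fifth class.

```python
import os
import tempfile


def build_class_to_idx(train_dir):
    """Map each class subfolder name to an integer index, in sorted order."""
    class_names = sorted(
        name for name in os.listdir(train_dir)
        if os.path.isdir(os.path.join(train_dir, name))
    )
    return {name: idx for idx, name in enumerate(class_names)}


def label_from_path(img_path, class_to_idx):
    """Look up the label from the name of the image's parent folder."""
    class_name = os.path.basename(os.path.dirname(img_path))
    return class_to_idx[class_name]


# Demo on a throwaway directory; 'tulip' is an assumed fifth class name.
with tempfile.TemporaryDirectory() as tmp:
    for name in ('rose', 'daisy', 'tulip', 'sunflower', 'dandelion'):
        os.makedirs(os.path.join(tmp, name))
    class_to_idx = build_class_to_idx(tmp)

print(class_to_idx)
print(label_from_path(os.path.join('data', 'train', 'rose', 'img1.jpg'), class_to_idx))
```

Sorting the folder names makes the mapping reproducible across runs, since os.listdir does not guarantee any order.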

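Remember to save the parameters obtained from training.

- Saving a checkpoint after every epoch lets you resume training if it is interrupted.

- Each checkpoint holds the key training information: the state dicts of the model, optimizer, and scheduler, plus the loss and accuracy.

- When training finishes, you can also save a final checkpoint for later use or inference.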

# loss function
criterion = nn.CrossEntropyLoss()
# optimizer
optimizer = optim.Adam(model.parameters(), lr=lr)
# scheduler
scheduler = StepLR(optimizer, step_size=1, gamma=gamma)

# checkpoint directory
save_dir = './checkpoints'
os.makedirs(save_dir, exist_ok=True)

# training loop
for epoch in range(epochs):
    model.train()  # training mode (enables dropout, if any)
    epoch_loss = 0
    epoch_accuracy = 0

    for data, label in tqdm(train_loader):
        data = data.to(device)
        label = label.to(device)

        output = model(data)
        loss = criterion(output, label)

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        acc = (output.argmax(dim=1) == label).float().mean()
        # .item() stores plain floats so the autograd graph is not kept alive
        epoch_accuracy += acc.item() / len(train_loader)
        epoch_loss += loss.item() / len(train_loader)

    # evaluate on the validation set
    model.eval()  # evaluation mode (disables dropout)
    with torch.no_grad():
        epoch_val_accuracy = 0
        epoch_val_loss = 0
        for data, label in valid_loader:
            data = data.to(device)
            label = label.to(device)

            val_output = model(data)
            val_loss = criterion(val_output, label)

            acc = (val_output.argmax(dim=1) == label).float().mean()
            epoch_val_accuracy += acc.item() / len(valid_loader)
            epoch_val_loss += val_loss.item() / len(valid_loader)

    # print the metrics for this epoch
    print(
        f"Epoch : {epoch+1} - loss : {epoch_loss:.4f} - acc: {epoch_accuracy:.4f} - val_loss : {epoch_val_loss:.4f} - val_acc: {epoch_val_accuracy:.4f}\n"
    )

    # save the model and training state after every epoch
    checkpoint_path = os.path.join(save_dir, f'checkpoint_epoch_{epoch+1}.pth')
    torch.save({
        'epoch': epoch + 1,                              # current epoch
        'model_state_dict': model.state_dict(),          # model state dict
        'optimizer_state_dict': optimizer.state_dict(),  # optimizer state dict
        'scheduler_state_dict': scheduler.state_dict(),  # learning-rate scheduler state dict
        'loss': epoch_loss,                              # training loss for this epoch
        'accuracy': epoch_accuracy,                      # training accuracy for this epoch
        'val_loss': epoch_val_loss,                      # validation loss for this epoch
        'val_accuracy': epoch_val_accuracy               # validation accuracy for this epoch
    }, checkpoint_path)
    print(f"Checkpoint saved at {checkpoint_path}")

    # step the learning-rate scheduler
    scheduler.step()

# after training, save the final model and training state
final_checkpoint_path = os.path.join(save_dir, 'final_checkpoint.pth')
torch.save({
    'epoch': epochs,  # final epoch
    'model_state_dict': model.state_dict(),
    'optimizer_state_dict': optimizer.state_dict(),
    'scheduler_state_dict': scheduler.state_dict(),
    'loss': epoch_loss,
    'accuracy': epoch_accuracy,
    'val_loss': epoch_val_loss,
    'val_accuracy': epoch_val_accuracy
}, final_checkpoint_path)
print(f"Final model checkpoint saved at {final_checkpoint_path}")
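The checkpoints above are only useful if they can be loaded again. The sketch below shows one way to resume from a checkpoint that uses the same dictionary keys. It is not the author's code: `build`, `save_checkpoint`, and `load_checkpoint` are hypothetical helpers, and a tiny `nn.Linear` stands in for the ViT so the example runs quickly.

```python
import os
import tempfile

import torch
import torch.nn as nn
import torch.optim as optim
from torch.optim.lr_scheduler import StepLR


def build():
    # Tiny stand-in model; in the notebook this would be the ViT.
    model = nn.Linear(4, 5)
    optimizer = optim.Adam(model.parameters(), lr=3e-5)
    scheduler = StepLR(optimizer, step_size=1, gamma=0.7)
    return model, optimizer, scheduler


def save_checkpoint(path, epoch, model, optimizer, scheduler):
    torch.save({
        'epoch': epoch,
        'model_state_dict': model.state_dict(),
        'optimizer_state_dict': optimizer.state_dict(),
        'scheduler_state_dict': scheduler.state_dict(),
    }, path)


def load_checkpoint(path, model, optimizer, scheduler):
    """Restore all three state dicts and return the number of completed epochs."""
    checkpoint = torch.load(path, map_location='cpu')
    model.load_state_dict(checkpoint['model_state_dict'])
    optimizer.load_state_dict(checkpoint['optimizer_state_dict'])
    scheduler.load_state_dict(checkpoint['scheduler_state_dict'])
    return checkpoint['epoch']


# Simulate one epoch of training, then save and restore into fresh objects.
model, optimizer, scheduler = build()
loss = model(torch.randn(2, 4)).sum()
loss.backward()
optimizer.step()
scheduler.step()

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, 'checkpoint_epoch_1.pth')
    save_checkpoint(path, 1, model, optimizer, scheduler)
    new_model, new_optimizer, new_scheduler = build()
    start_epoch = load_checkpoint(path, new_model, new_optimizer, new_scheduler)

print(start_epoch)
# Training would then continue with: for epoch in range(start_epoch, epochs): ...
```

Restoring the optimizer and scheduler along with the model matters here because the learning rate decays every epoch; loading only the weights would restart the schedule from the initial learning rate.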

Then try running it and start fixing the errors.

3. Debugging the Code

After fixing a few small bugs, the final code is as follows:

from __future__ import print_function

import glob

from itertools import chain

import os

import random

import zipfile

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import torch

import torch.nn as nn

import torch.nn.functional as F

import torch.optim as optim

from linformer import Linformer

from PIL import Image

from sklearn.model_selection import train_test_split

from torch.optim.lr_scheduler import StepLR

from torch.utils.data import DataLoader, Dataset

from torchvision import datasets, transforms

from tqdm.notebook import tqdm

from vit_pytorch.efficient import ViT

print(f"Torch: {torch.__version__}")

# Training settings

batch_size = 64

epochs = 20

lr = 3e-5

gamma = 0.7

seed = 42

def seed_everything(seed):
    random.seed(seed)
    os.environ['PYTHONHASHSEED'] = str(seed)
    np.random.seed(seed)
    torch.manual_seed(seed)
    torch.cuda.manual_seed(seed)
    torch.cuda.manual_seed_all(seed)
    torch.backends.cudnn.deterministic = True

seed_everything(seed)

os.makedirs('data', exist_ok=True)

train_dir = 'data/train'

test_dir = 'data/test'

with zipfile.ZipFile('train.zip') as train_zip:
    train_zip.extractall('data')

with zipfile.ZipFile('test.zip') as test_zip:
    test_zip.extractall('data')

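# Collect training image paths and labels
train_list = []
train_labels = []

# Walk the training subdirectories (one per class)
for class_dir in os.listdir(train_dir):
    class_path = os.path.join(train_dir, class_dir)
    if os.path.isdir(class_path):
        # All image paths for this class
        class_images = glob.glob(os.path.join(class_path, '*.jpg'))
        train_list.extend(class_images)
        train_labels.extend([class_dir] * len(class_images))  # the label is the subdirectory name

# Collect test image paths. This pattern only matches images directly under test_dir;
# if test/ is also organised into class subfolders, use os.path.join(test_dir, '*', '*.jpg').
test_list = glob.glob(os.path.join(test_dir, '*.jpg'))

print(f"Train Data: {len(train_list)}")
print(f"Test Data: {len(test_list)}")

labels = train_labels  # training labels have already been extracted from the directory names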

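# Show 9 randomly chosen training images with their labels
random_idx = np.random.randint(0, len(train_list), size=9)
fig, axes = plt.subplots(3, 3, figsize=(16, 12))

# Index with the random indices (not the loop counter) so the samples are actually random
for idx, ax in zip(random_idx, axes.ravel()):
    img = Image.open(train_list[idx])
    ax.set_title(labels[idx])
    ax.imshow(img)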

train_list, valid_list = train_test_split(train_list,

test_size=0.2,

stratify=labels,

random_state=seed)

print(f"Train Data: {len(train_list)}")

print(f"Validation Data: {len(valid_list)}")

train_transforms = transforms.Compose(

[

transforms.Resize((224, 224)),

transforms.RandomResizedCrop(224),

transforms.RandomHorizontalFlip(),

transforms.ToTensor(),

]

)

val_transforms = transforms.Compose(

[

transforms.Resize(256),

transforms.CenterCrop(224),

transforms.ToTensor(),

]

)

test_transforms = transforms.Compose(

[

transforms.Resize(256),

transforms.CenterCrop(224),

transforms.ToTensor(),

]

)

class FlowersDataset(Dataset):
    def __init__(self, file_list, transform=None):
        self.file_list = file_list
        self.transform = transform

    def __len__(self):
        self.filelength = len(self.file_list)
        return self.filelength

    def __getitem__(self, idx):
        img_path = self.file_list[idx]
        # Convert to RGB so grayscale or RGBA files still give 3-channel tensors
        img = Image.open(img_path).convert('RGB')
        img_transformed = self.transform(img)

        # The label comes from the name of the image's parent folder
        class_name = os.path.basename(os.path.dirname(img_path))
        if class_name == 'daisy':
            label = 0
        elif class_name == 'dandelion':
            label = 1
        elif class_name == 'rose':
            label = 2
        elif class_name == 'sunflower':
            label = 3
        else:
            # Any other folder name (including a flat test/ folder) falls through to label 4
            label = 4

        return img_transformed, label

print(f"Test Data: {len(test_list)}")

train_data = FlowersDataset(train_list, transform=train_transforms)

valid_data = FlowersDataset(valid_list, transform=test_transforms)

test_data = FlowersDataset(test_list, transform=test_transforms)

train_loader = DataLoader(dataset = train_data, batch_size=batch_size, shuffle=True )

valid_loader = DataLoader(dataset = valid_data, batch_size=batch_size, shuffle=True)

test_loader = DataLoader(dataset = test_data, batch_size=batch_size, shuffle=True)

print(len(train_data), len(train_loader))

print(len(valid_data), len(valid_loader))

efficient_transformer = Linformer(

dim=128,

seq_len=49+1, # 7x7 patches + 1 cls-token

depth=12,

heads=8,

k=64

)

model = ViT(

dim=128,

image_size=224,

patch_size=32,

num_classes=5,

transformer=efficient_transformer,

channels=3,

)

# loss function

criterion = nn.CrossEntropyLoss()

# optimizer

optimizer = optim.Adam(model.parameters(), lr=lr)

# scheduler

scheduler = StepLR(optimizer, step_size=1, gamma=gamma)

# checkpoint directory
save_dir = './checkpoints'
os.makedirs(save_dir, exist_ok=True)

for epoch in range(epochs):
    model.train()  # training mode (enables dropout, if any)
    epoch_loss = 0
    epoch_accuracy = 0
    print(f"Epoch {epoch + 1}/{epochs}")

    for data, label in train_loader:
        output = model(data)
        loss = criterion(output, label)

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        acc = (output.argmax(dim=1) == label).float().mean()
        # .item() stores plain floats so the autograd graph is not kept alive
        epoch_accuracy += acc.item() / len(train_loader)
        epoch_loss += loss.item() / len(train_loader)

    model.eval()  # evaluation mode (disables dropout)
    with torch.no_grad():
        epoch_val_accuracy = 0
        epoch_val_loss = 0
        for data, label in valid_loader:
            val_output = model(data)
            val_loss = criterion(val_output, label)

            acc = (val_output.argmax(dim=1) == label).float().mean()
            epoch_val_accuracy += acc.item() / len(valid_loader)
            epoch_val_loss += val_loss.item() / len(valid_loader)

    print(
        f"Epoch : {epoch+1} - loss : {epoch_loss:.4f} - acc: {epoch_accuracy:.4f} - val_loss : {epoch_val_loss:.4f} - val_acc: {epoch_val_accuracy:.4f}\n"
    )

    checkpoint_path = os.path.join(save_dir, f'checkpoint_epoch_{epoch+1}.pth')
    torch.save({
        'epoch': epoch + 1,                              # current epoch
        'model_state_dict': model.state_dict(),          # model state dict
        'optimizer_state_dict': optimizer.state_dict(),  # optimizer state dict
        'scheduler_state_dict': scheduler.state_dict(),  # learning-rate scheduler state dict
        'loss': epoch_loss,                              # training loss for this epoch
        'accuracy': epoch_accuracy,                      # training accuracy for this epoch
        'val_loss': epoch_val_loss,                      # validation loss for this epoch
        'val_accuracy': epoch_val_accuracy               # validation accuracy for this epoch
    }, checkpoint_path)
    print(f"Checkpoint saved at {checkpoint_path}")

    # step the learning-rate scheduler
    scheduler.step()

# after training, save the final model and training state
final_checkpoint_path = os.path.join(save_dir, 'final_checkpoint.pth')
torch.save({
    'epoch': epochs,  # final epoch
    'model_state_dict': model.state_dict(),
    'optimizer_state_dict': optimizer.state_dict(),
    'scheduler_state_dict': scheduler.state_dict(),
    'loss': epoch_loss,
    'accuracy': epoch_accuracy,
    'val_loss': epoch_val_loss,
    'val_accuracy': epoch_val_accuracy
}, final_checkpoint_path)
print(f"Final model checkpoint saved at {final_checkpoint_path}")
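The `seq_len=49+1` passed to Linformer in the final code follows directly from the image and patch sizes. As a quick check of that arithmetic, here is a small sketch; `num_patches` is a hypothetical helper, not part of vit_pytorch.

```python
def num_patches(image_size, patch_size):
    """Number of non-overlapping square patches in a square image."""
    assert image_size % patch_size == 0, "image size must be divisible by patch size"
    per_side = image_size // patch_size
    return per_side * per_side


# Final training model: 224x224 image, 32x32 patches -> 7 patches per side
patches = num_patches(224, 32)
seq_len = patches + 1  # +1 for the cls token
print(patches, seq_len)

# Test model from section 1: 224x224 image, 16x16 patches -> 14 patches per side
print(num_patches(224, 16))
```

If image_size or patch_size is changed, seq_len has to be recomputed the same way so that it matches the number of tokens the ViT produces.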
