All source code in the [Android取经之路] series is analyzed against Android Q (10.0).


1. Overview

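HwServiceManager is the management center for HAL services. It manages all HAL services in the system and is started by the init process.

The main job of HwServiceManager is to collect the hardware services registered by each process and, when a process needs a service, hand out that specific hardware service.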

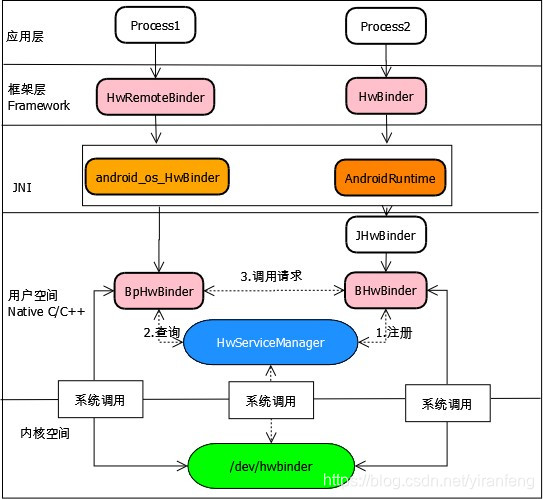

2. HwBinder Architecture

The HwBinder communication model is shown below:

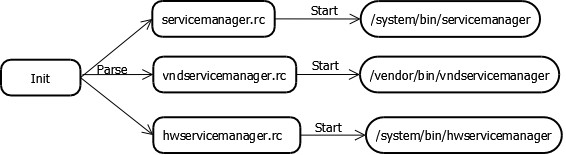

3. Starting hwservicemanager

In init.rc, once the init process is running, it starts three binder daemons in the on init stage: servicemanager, hwservicemanager, and vndservicemanager.

[/system/core/rootdir/init.rc]

on init

...

start servicemanager

start hwservicemanager

start vndservicemanager

The hwservicemanager.rc file looks like this:

[/system/hwservicemanager/hwservicemanager.rc]

service hwservicemanager /system/bin/hwservicemanager

user system

disabled

group system readproc

critical

# On restart, reset the hwservicemanager.ready property to false

onrestart setprop hwservicemanager.ready false

onrestart class_restart main

onrestart class_restart hal

onrestart class_restart early_hal

writepid /dev/cpuset/system-background/tasks

class animation

shutdown critical

4. HwServiceManager Call Stack

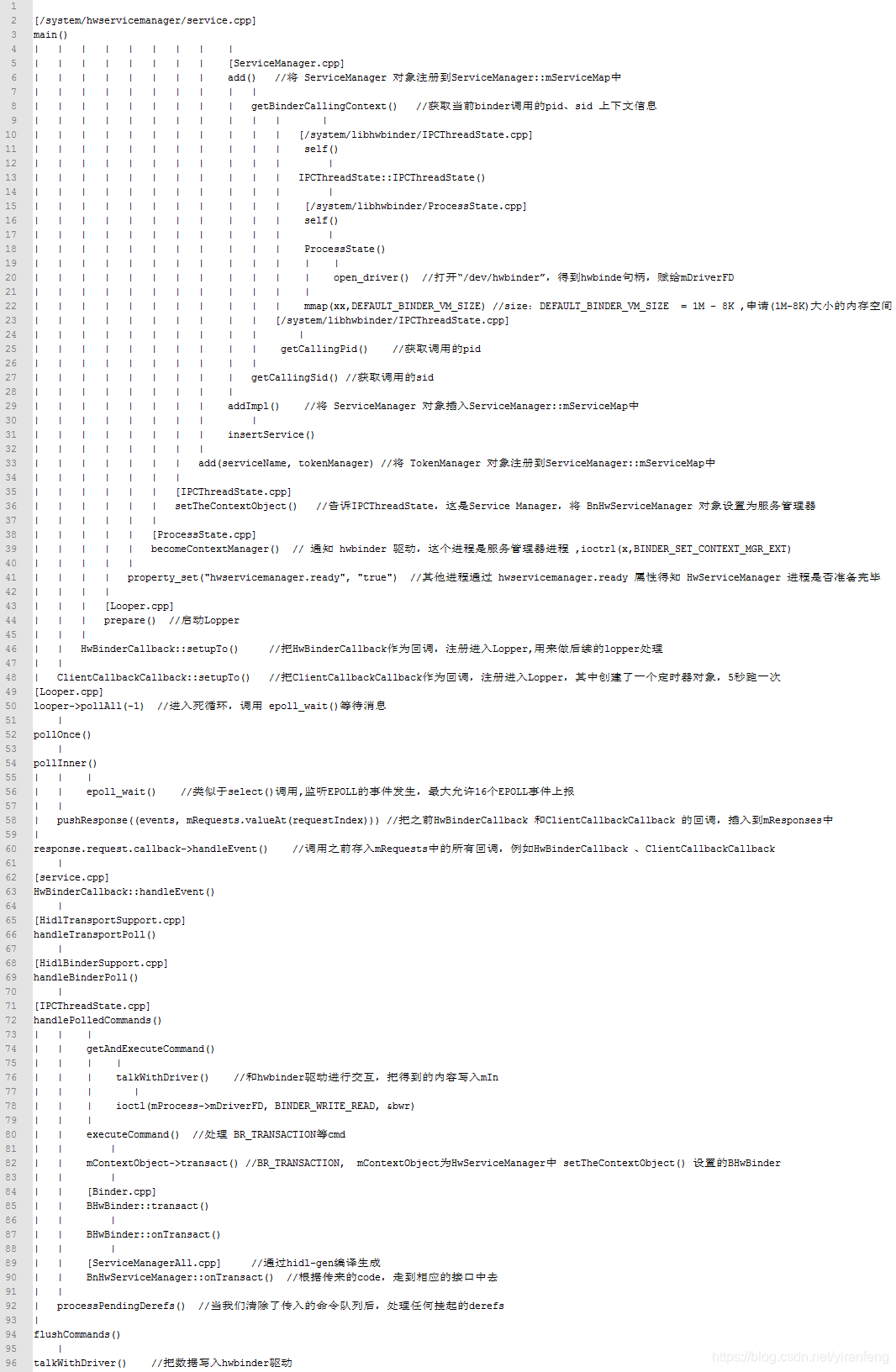

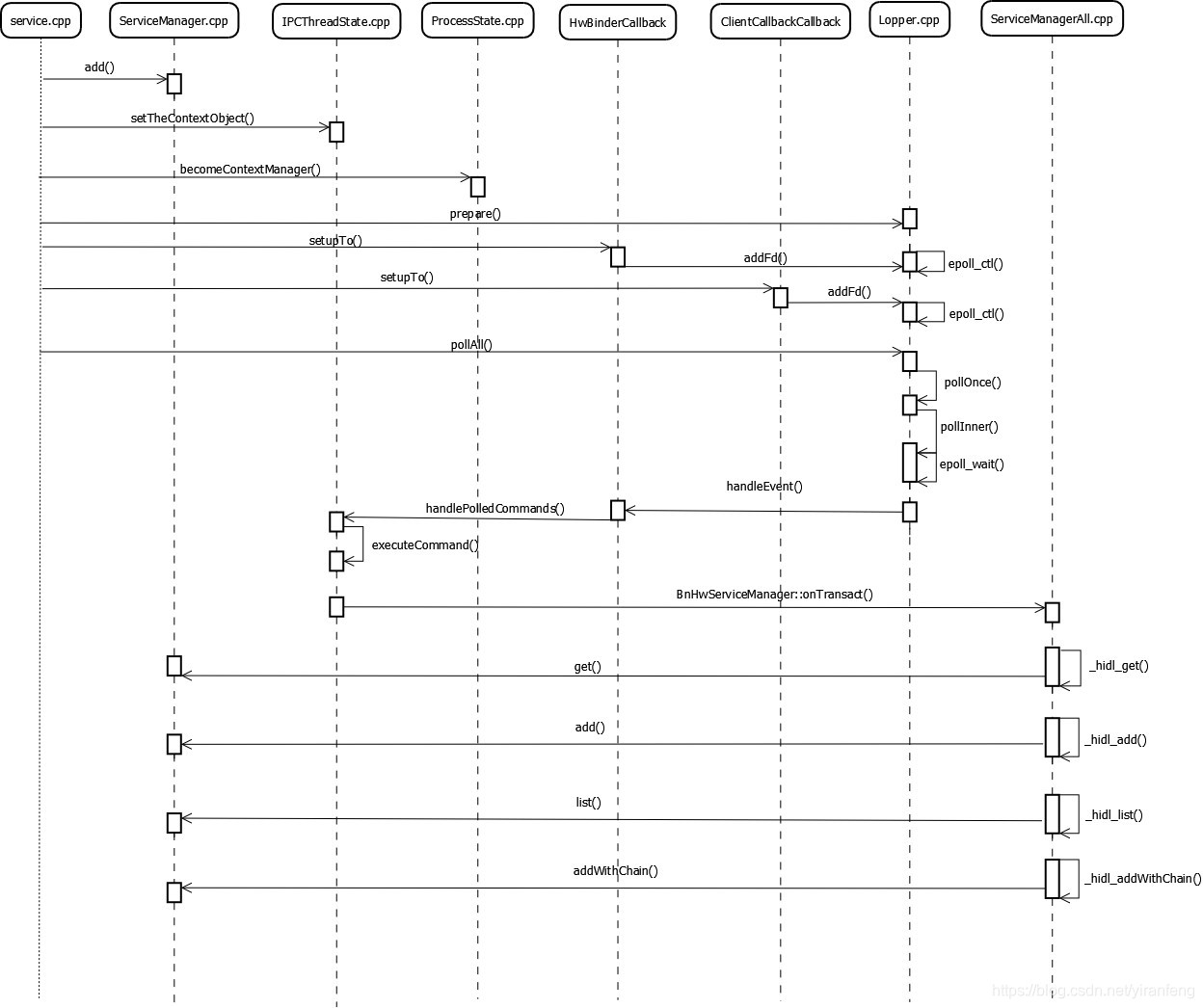

Before analyzing the source code, it helps to see the rough calling relationships. The figures above show the HwServiceManager call stack.

5. Source Code Analysis

We now start the analysis of the HwServiceManager source code.

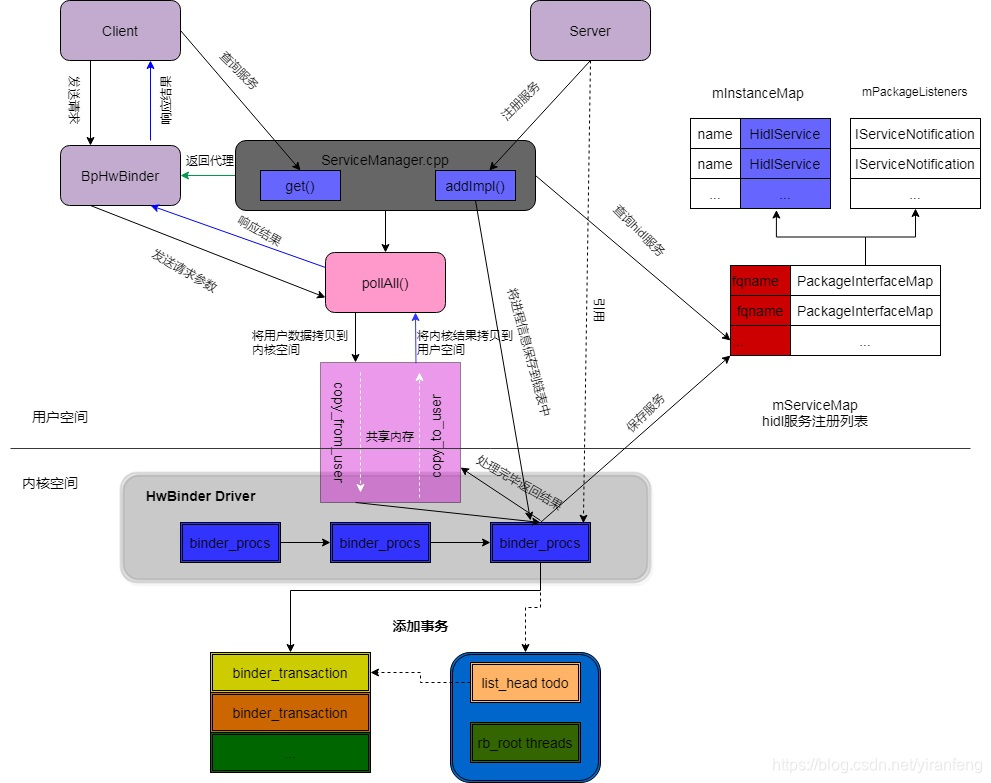

The figure above is the HwServiceManager sequence diagram.

5.1 main()

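At startup, HwServiceManager mainly does the following:

It registers ServiceManager and TokenManager in the mServiceMap table.

It finds the Binder object for the ServiceManager, which is a BnHwServiceManager, and casts it to the local binder type BHwBinder in preparation for later processing.

It opens the /dev/hwbinder driver and maps a block of virtual memory for exchanging IPC data.

It registers itself with hwbinder as the HAL service manager (the IPC context manager).

It enters an infinite loop that calls epoll_wait() to check whether /dev/hwbinder has data to read. When data arrives, it runs the callback to execute the command. Depending on the code passed in by the client, it calls get(), add(), addWithChain(), list(), and similar interfaces to look up and register hwbinder HAL services.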

[/system/hwservicemanager/service.cpp]

int main() {

// If hwservicemanager crashes, the system may be unstable and hard to debug. This is both why

// we log this and why we care about this at all.

setProcessHidlReturnRestriction(HidlReturnRestriction::ERROR_IF_UNCHECKED);

// 1. Create the ServiceManager object (it implements IServiceManager); the sp<> strong pointer releases it automatically

sp<ServiceManager> manager = new ServiceManager();

setRequestingSid(manager, true);

// 1.1 Register the ServiceManager object itself in mServiceMap, see [5.2]

if (!manager->add(serviceName, manager).withDefault(false)) {

ALOGE("Failed to register hwservicemanager with itself.");

}

// 2. Create the TokenManager object

sp<TokenManager> tokenManager = new TokenManager();

// 2.1 Register the TokenManager object in mServiceMap as well

if (!manager->add(serviceName, tokenManager).withDefault(false)) {

ALOGE("Failed to register ITokenManager with hwservicemanager.");

}

// 3. Tell IPCThreadState we're the service manager

// Get the Binder object for the ServiceManager; it is a BnHwServiceManager, see [5.3]

sp<IBinder> binder = toBinder<IServiceManager>(manager);

// Cast the BnHwServiceManager binder object to BHwBinder, the local binder type

sp<BHwBinder> service = static_cast<BHwBinder*>(binder.get()); // local binder object

// Hand the service object to IPCThreadState as the context object

IPCThreadState::self()->setTheContextObject(service);

// 4. Tell the binder driver that this process is the service manager (context manager), see [5.4]

// Then tell the kernel

ProcessState::self()->becomeContextManager(nullptr, nullptr);

// Set the property hwservicemanager.ready to true; other processes read it to learn whether HwServiceManager is ready

int rc = property_set("hwservicemanager.ready", "true");

if (rc) {

ALOGE("Failed to set \"hwservicemanager.ready\" (error %d). "\

"HAL services will not start!\n", rc);

}

// 5. Create the Looper for this thread

sp<Looper> looper = Looper::prepare(0 /* opts */);

// 6. Register HwBinderCallback with the Looper to handle incoming hwbinder requests, see [5.5]

(void)HwBinderCallback::setupTo(looper);

// 7. Register ClientCallbackCallback with the Looper; it creates a timer that fires every 5 seconds, see [5.6]

(void)ClientCallbackCallback::setupTo(looper, manager);

ALOGI("hwservicemanager is ready now.");

// 8. Loop forever, waiting for messages in epoll_wait(), see [5.7]

while (true) {

looper->pollAll(-1 /* timeoutMillis */);

}

return 0;

}

Next, we break down the whole main() flow step by step.

5.2 ServiceManager::add()

Registers ServiceManager and TokenManager in the mServiceMap table.

[/system/hwservicemanager/ServiceManager.cpp]

Return<bool> ServiceManager::add(const hidl_string& name, const sp<IBase>& service) {

bool addSuccess = false;

if (service == nullptr) {

return false;

}

// 1. Get the pid/sid context of the current binder call, see [5.2.1]

auto pidcon = getBinderCallingContext();

// Fetch the service's interface chain: its descriptor strings, from the most derived interface down to IBase

auto ret = service->interfaceChain([&](const auto &interfaceChain) {

// 2. See [5.2.7]

addSuccess = addImpl(name, service, interfaceChain, pidcon);

});

if (!ret.isOk()) {

LOG(ERROR) << "Failed to retrieve interface chain: " << ret.description();

return false;

}

return addSuccess;

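}

The generated code for each IServiceManager version (V1_0, V1_1, V1_2) implements interfaceChain() as shown below. Each chain lists the interface itself first, then its parents, down to IBase:

[/out/soong/.intermediates/system/libhidl/transport/manager/1.0/android.hidl.manager@1.0_genc++/gen/android/hidl/manager/1.2/ServiceManagerAll.cpp]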

::android::hardware::Return<void> IServiceManager::interfaceChain(interfaceChain_cb _hidl_cb){

_hidl_cb({

::android::hidl::manager::V1_0::IServiceManager::descriptor,

::android::hidl::base::V1_0::IBase::descriptor,

});

return ::android::hardware::Void();}

::android::hardware::Return<void> IServiceManager::interfaceChain(interfaceChain_cb _hidl_cb){

_hidl_cb({

::android::hidl::manager::V1_1::IServiceManager::descriptor,

::android::hidl::manager::V1_0::IServiceManager::descriptor,

::android::hidl::base::V1_0::IBase::descriptor,

});

return ::android::hardware::Void();}

::android::hardware::Return<void> IServiceManager::interfaceChain(interfaceChain_cb _hidl_cb){

_hidl_cb({

::android::hidl::manager::V1_2::IServiceManager::descriptor,

::android::hidl::manager::V1_1::IServiceManager::descriptor,

::android::hidl::manager::V1_0::IServiceManager::descriptor,

::android::hidl::base::V1_0::IBase::descriptor,

});

return ::android::hardware::Void();

}

5.2.1 getBinderCallingContext()

First obtains the IPCThreadState object of the current binder thread, then reads the caller's pid and sid (SELinux security context).
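Here is a minimal sketch of the fallback rule described above. It prefers the sid delivered with the transaction and otherwise derives the context from the pid. CallingContext and the PidResolver callback are hypothetical stand-ins for AccessControl::CallingContext and AccessControl::getCallingContext(). No SELinux calls are made.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <sys/types.h>

// Hypothetical mirror of AccessControl::CallingContext.
struct CallingContext {
    bool sidPresent;
    std::string sid;
    pid_t pid;
};

// Hypothetical resolver standing in for AccessControl::getCallingContext(pid),
// which derives the SELinux context from the caller's pid.
using PidResolver = std::function<CallingContext(pid_t)>;

// Prefer the sid delivered with the transaction; fall back to a pid lookup.
CallingContext resolveCallingContext(pid_t pid, const char* sid,
                                     const PidResolver& resolveFromPid) {
    if (sid == nullptr) {
        return resolveFromPid(pid);
    }
    return {true, sid, pid};
}
```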

[/system/hwservicemanager/ServiceManager.cpp]

AccessControl::CallingContext getBinderCallingContext() {

// Get the IPCThreadState of the current binder thread, see [5.2.2]

const auto& self = IPCThreadState::self();

pid_t pid = self->getCallingPid(); // caller's pid

const char* sid = self->getCallingSid(); // caller's security context (sid)

if (sid == nullptr) {

if (pid != getpid()) {

android_errorWriteLog(0x534e4554, "121035042");

}

// No sid came with the transaction: derive the context from the pid and return it

return AccessControl::getCallingContext(pid);

} else {

return { true, sid, pid };

}

}

5.2.2 IPCThreadState::self()

Returns the IPCThreadState object of the current thread, creating one if it does not exist yet.
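The pattern here is a TLS key created once per process plus a lazily created object per thread. It can be sketched with plain pthread calls. ThreadState and threadStateSelf() are hypothetical names. The sketch also swaps the goto used by IPCThreadState::self() for an atomic flag with double-checked locking.

```cpp
#include <atomic>
#include <cassert>
#include <pthread.h>

// Hypothetical per-thread object standing in for IPCThreadState.
struct ThreadState {
    int pendingCommands = 0;
};

static pthread_mutex_t gKeyMutex = PTHREAD_MUTEX_INITIALIZER;
static std::atomic<bool> gHaveKey{false};
static pthread_key_t gKey;

// Called by pthreads when a thread that owns a ThreadState exits.
static void destroyThreadState(void* st) {
    delete static_cast<ThreadState*>(st);
}

// Same shape as IPCThreadState::self(): create the TLS key once under a mutex,
// then return this thread's object, creating it on first use.
ThreadState* threadStateSelf() {
    if (!gHaveKey.load(std::memory_order_acquire)) {
        pthread_mutex_lock(&gKeyMutex);
        if (!gHaveKey.load(std::memory_order_relaxed)) {
            if (pthread_key_create(&gKey, destroyThreadState) != 0) {
                pthread_mutex_unlock(&gKeyMutex);
                return nullptr;
            }
            gHaveKey.store(true, std::memory_order_release);
        }
        pthread_mutex_unlock(&gKeyMutex);
    }
    auto* st = static_cast<ThreadState*>(pthread_getspecific(gKey));
    if (st == nullptr) {
        st = new ThreadState;
        pthread_setspecific(gKey, st);  // AOSP does this inside the IPCThreadState constructor
    }
    return st;
}
```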

[/system/libhwbinder/IPCThreadState.cpp]

IPCThreadState* IPCThreadState::self()

{

// 1. On the first call, gHaveTLS is false

if (gHaveTLS) {

restart:

// 6. If this thread already has an IPCThreadState, return it; otherwise create a new one and return it

const pthread_key_t k = gTLS;

IPCThreadState* st = (IPCThreadState*)pthread_getspecific(k);

if (st) return st;

return new IPCThreadState; // see [5.2.3]

}

// 2. On the first call, gShutdown is false

if (gShutdown) {

ALOGW("Calling IPCThreadState::self() during shutdown is dangerous, expect a crash.\n");

return nullptr;

}

pthread_mutex_lock(&gTLSMutex); // lock

if (!gHaveTLS) {

// 3. Create the thread-local storage key

int key_create_value = pthread_key_create(&gTLS, threadDestructor);

if (key_create_value != 0) {

pthread_mutex_unlock(&gTLSMutex);

ALOGW("IPCThreadState::self() unable to create TLS key, expect a crash: %s\n",

strerror(key_create_value));

return nullptr;

}

// 4. Set gHaveTLS to true

gHaveTLS = true;

}

pthread_mutex_unlock(&gTLSMutex); // unlock

// 5. Jump back to restart

goto restart;

}

5.2.3 IPCThreadState::IPCThreadState()

Initializes the member fields. Most importantly, it obtains the ProcessState object and stores it in mProcess.

[/system/libhwbinder/IPCThreadState.cpp]

IPCThreadState::IPCThreadState()

: mProcess(ProcessState::self()), // see [5.2.4]

mStrictModePolicy(0),

mLastTransactionBinderFlags(0),

mIsLooper(false),

mIsPollingThread(false),

mCallRestriction(mProcess->mCallRestriction) {

pthread_setspecific(gTLS, this);

clearCaller();

mIn.setDataCapacity(256);

mOut.setDataCapacity(256);

mIPCThreadStateBase = IPCThreadStateBase::self();

}

5.2.4 ProcessState::self()

This is a singleton: a process creates its ProcessState object only once.
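Below is a rough sketch of the same mutex-guarded, create-once pattern. It uses std::mutex and std::shared_ptr in place of Android's Mutex and sp<>. ProcessStateSketch is hypothetical, and its receive-buffer size assumes 4 KB pages.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <mutex>

// Hypothetical stand-in for ProcessState: at most one instance per process.
class ProcessStateSketch {
public:
    static std::shared_ptr<ProcessStateSketch> self() {
        std::lock_guard<std::mutex> lock(sMutex);  // like Mutex::Autolock _l(gProcessMutex)
        if (sInstance == nullptr) {
            // make_shared cannot reach the private constructor, so use new.
            sInstance.reset(new ProcessStateSketch(kDefaultVmSize));
        }
        return sInstance;
    }

    std::size_t mmapSize() const { return mMmapSize; }

private:
    explicit ProcessStateSketch(std::size_t mmapSize) : mMmapSize(mmapSize) {}

    // 1 MB minus two pages, assuming 4 KB pages.
    static constexpr std::size_t kDefaultVmSize = 1024 * 1024 - 2 * 4096;
    static std::mutex sMutex;
    static std::shared_ptr<ProcessStateSketch> sInstance;
    std::size_t mMmapSize;
};

std::mutex ProcessStateSketch::sMutex;
std::shared_ptr<ProcessStateSketch> ProcessStateSketch::sInstance;
```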

[/system/libhwbinder/ProcessState.cpp ]

sp<ProcessState> ProcessState::self()

{

Mutex::Autolock _l(gProcessMutex);

if (gProcess != nullptr) {

return gProcess;

}

gProcess = new ProcessState(DEFAULT_BINDER_VM_SIZE); // see [5.2.5]

return gProcess;

}

5.2.5 ProcessState::ProcessState()

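Opens "/dev/hwbinder" and stores the resulting hwbinder file descriptor in mDriverFD. From then on, the process talks to the hwbinder driver through this descriptor.

It also maps a (1 MB - 8 KB) region of virtual address space for receiving transactions.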
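The mmap() call can be tried outside Android by mapping /dev/zero in place of /dev/hwbinder, which only exists on a device (an assumption made for the sketch). binderVmSize() and mapReceiveArea() are hypothetical helpers. The protection and flags are the ones ProcessState passes.

```cpp
#include <cassert>
#include <cerrno>
#include <cstddef>
#include <cstdio>
#include <cstring>
#include <fcntl.h>
#include <sys/mman.h>
#include <unistd.h>

// Receive-buffer size used in the article: 1 MB minus two pages.
std::size_t binderVmSize() {
    return 1 * 1024 * 1024 - static_cast<std::size_t>(sysconf(_SC_PAGE_SIZE)) * 2;
}

// Maps a read-only, private, non-reserved region of `fd`, with the same flags
// ProcessState uses for /dev/hwbinder. Returns MAP_FAILED on error.
void* mapReceiveArea(int fd, std::size_t size) {
    void* start = mmap(nullptr, size, PROT_READ, MAP_PRIVATE | MAP_NORESERVE, fd, 0);
    if (start == MAP_FAILED) {
        std::fprintf(stderr, "mmap failed: %s\n", std::strerror(errno));
    }
    return start;
}
```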

[/system/libhwbinder/ProcessState.cpp ]

ProcessState::ProcessState(size_t mmap_size)

: mDriverFD(open_driver()) // open "/dev/hwbinder" and keep the descriptor in mDriverFD

, mVMStart(MAP_FAILED)

, mThreadCountLock(PTHREAD_MUTEX_INITIALIZER)

, mThreadCountDecrement(PTHREAD_COND_INITIALIZER)

, mExecutingThreadsCount(0)

, mMaxThreads(DEFAULT_MAX_BINDER_THREADS) // default maximum number of binder threads, 0 here

, mStarvationStartTimeMs(0)

, mManagesContexts(false)

, mBinderContextCheckFunc(nullptr)

, mBinderContextUserData(nullptr)

, mThreadPoolStarted(false)

, mSpawnThreadOnStart(true)

, mThreadPoolSeq(1)

, mMmapSize(mmap_size)

, mCallRestriction(CallRestriction::NONE)

{

if (mDriverFD >= 0) {

// mmap the binder, providing a chunk of virtual address space to receive transactions.

// Map a (1 MB - 8 KB) region of virtual memory to receive transactions

mVMStart = mmap(nullptr, mMmapSize, PROT_READ, MAP_PRIVATE | MAP_NORESERVE, mDriverFD, 0);

if (mVMStart == MAP_FAILED) {

// *sigh*

ALOGE("Mmapping /dev/hwbinder failed: %s\n", strerror(errno));

close(mDriverFD);

mDriverFD = -1;

}

}

else {

ALOGE("Binder driver could not be opened. Terminating.");

}

}

5.2.6 open_driver()

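Opens the /dev/hwbinder driver, checks that the driver's binder protocol version matches the user-space version (giving up on a mismatch), and sets the maximum number of binder threads.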
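The real ioctl needs a binder device, so this sketch of the open-then-validate logic passes in a hypothetical VersionQuery callback in place of ioctl(fd, BINDER_VERSION, &vers). The excerpt still issues BINDER_SET_MAX_THREADS after a failed check; this sketch instead returns as soon as validation fails. hasCloexec() shows what O_CLOEXEC buys: the descriptor is not inherited by programs the process exec()s.

```cpp
#include <cassert>
#include <fcntl.h>
#include <functional>
#include <unistd.h>

// Hypothetical replacement for ioctl(fd, BINDER_VERSION, &vers):
// returns 0 and fills *vers on success, -1 on failure.
using VersionQuery = std::function<int(int fd, int* vers)>;

// Mirrors open_driver(): open with O_RDWR | O_CLOEXEC, then reject the
// descriptor if the version query fails or the protocol does not match.
int openAndValidate(const char* path, const VersionQuery& query, int expectedVersion) {
    int fd = open(path, O_RDWR | O_CLOEXEC);
    if (fd < 0) return -1;
    int vers = 0;
    int result = query(fd, &vers);
    if (result != 0 || vers != expectedVersion) {
        close(fd);
        return -1;
    }
    return fd;
}

// True if the close-on-exec flag is set on `fd`.
bool hasCloexec(int fd) {
    int flags = fcntl(fd, F_GETFD);
    return flags >= 0 && (flags & FD_CLOEXEC) != 0;
}
```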

[/system/libhwbinder/ProcessState.cpp]

static int open_driver()

{

// 1. Open the hwbinder device; this traps into the kernel

int fd = open("/dev/hwbinder", O_RDWR | O_CLOEXEC);

if (fd >= 0) {

int vers = 0;

// 2. Query the binder protocol version

status_t result = ioctl(fd, BINDER_VERSION, &vers);

if (result == -1) {

ALOGE("Binder ioctl to obtain version failed: %s", strerror(errno));

close(fd);

fd = -1;

}

// 3. Check that the protocol versions match; give up otherwise

if (result != 0 || vers != BINDER_CURRENT_PROTOCOL_VERSION) {

ALOGE("Binder driver protocol(%d) does not match user space protocol(%d)!", vers, BINDER_CURRENT_PROTOCOL_VERSION);

close(fd);

fd = -1;

}

// 4. Set the maximum number of binder threads

size_t maxThreads = DEFAULT_MAX_BINDER_THREADS;

result = ioctl(fd, BINDER_SET_MAX_THREADS, &maxThreads);

if (result == -1) {

ALOGE("Binder ioctl to set max threads failed: %s", strerror(errno));

}

} else {

ALOGW("Opening '/dev/hwbinder' failed: %s\n", strerror(errno));

}

return fd;

}

5.2.7 ServiceManager::addImpl()

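With the pid/sid context of the current binder call in hand, addImpl() registers the service (here the ServiceManager itself) in mServiceMap.

Along the way, it runs an SELinux check to confirm that the caller may add the service. It then checks for duplicate registrations and removes any service previously registered under the same most-derived interface and instance name.

Once these checks pass, it records the service in mServiceMap under every interface in its chain. Finally, it links to the service's death notification so that it can clean up when the service dies.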
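To make the bookkeeping concrete, here is a simplified sketch of that map maintenance. It assumes a std::map keyed first by fully qualified interface name and then by instance name. RegistrySketch and Service are hypothetical. The SELinux check, the duplicate-registration warning, registration notifications, and linkToDeath() are all omitted.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <string>
#include <vector>

// Hypothetical stand-in for a registered HIDL service object.
struct Service {
    std::string tag;
};

// fqName -> (instance name -> service), roughly the shape of mServiceMap.
class RegistrySketch {
public:
    bool add(const std::string& name, const std::shared_ptr<Service>& service,
             const std::vector<std::string>& interfaceChain) {
        if (service == nullptr || interfaceChain.empty()) return false;
        // Drop the previous owner of <most-derived interface>/<name> everywhere
        // (cf. removeService(remove, &instanceName)).
        std::shared_ptr<Service> existing = lookup(interfaceChain[0], name);
        if (existing != nullptr) removeService(existing, name);
        // Register under every interface in the chain, so lookups by any
        // base interface (down to IBase) also find this service.
        for (const auto& fqName : interfaceChain) {
            mServiceMap[fqName][name] = service;
        }
        return true;
    }

    std::shared_ptr<Service> lookup(const std::string& fqName, const std::string& name) const {
        auto iface = mServiceMap.find(fqName);
        if (iface == mServiceMap.end()) return nullptr;
        auto inst = iface->second.find(name);
        return inst == iface->second.end() ? nullptr : inst->second;
    }

private:
    void removeService(const std::shared_ptr<Service>& service, const std::string& name) {
        for (auto& entry : mServiceMap) {
            auto inst = entry.second.find(name);
            if (inst != entry.second.end() && inst->second == service) entry.second.erase(inst);
        }
    }

    std::map<std::string, std::map<std::string, std::shared_ptr<Service>>> mServiceMap;
};
```

The service is recorded under every entry of its chain. A client asking for android.hidl.base@1.0::IBase/default therefore gets whichever service was registered last under that instance name.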

bool ServiceManager::addImpl(const std::string& name,

const sp<IBase>& service,

const hidl_vec<hidl_string>& interfaceChain,

const AccessControl::CallingContext& callingContext) {

// The interfaceChain passed in must not be empty

if (interfaceChain.size() == 0) {

LOG(WARNING) << "Empty interface chain for " << name;

return false;

}

// 1. First check that the caller may add() every interface in the hierarchy; this ends up in selinux_check_access() against the hwservice_manager class

for(size_t i = 0; i < interfaceChain.size(); i++) {

const std::string fqName = interfaceChain[i];

if (!mAcl.canAdd(fqName, callingContext)) {

return false;

}

}

// 2. Check for a duplicate registration

if (interfaceChain.size() > 1) {

// The second-to-last entry should be the highest base class other than IBase.

const std::string baseFqName = interfaceChain[interfaceChain.size() - 2];

const HidlService *hidlService = lookup(baseFqName, name);

if (hidlService != nullptr && hidlService->getService() != nullptr) {

// This shouldn't occur during normal operation. Here are some cases where

// it might get hit:

// - bad configuration (service installed on device multiple times)

// - race between death notification and a new service being registered

// (previous logs should indicate a separate problem)

const std::string childFqName = interfaceChain[0];

pid_t newServicePid = IPCThreadState::self()->getCallingPid();

pid_t oldServicePid = hidlService->getDebugPid();

LOG(WARNING) << "Detected instance of " << childFqName << " (pid: " << newServicePid

<< ") registering over instance of or with base of " << baseFqName << " (pid: "

<< oldServicePid << ").";

}

}

// 3. Remove the previous registration of this instance (e.g. IBar/default when IFoo/default is being registered)

{

// For IBar extends IFoo if IFoo/default is being registered, remove

// IBar/default. This makes sure the following two things are equivalent

// 1). IBar::castFrom(IFoo::getService(X))

// 2). IBar::getService(X)

// assuming that IBar is declared in the device manifest and there

// is also not an IBaz extends IFoo and there is no race.

const std::string childFqName = interfaceChain[0];

const HidlService *hidlService = lookup(childFqName, name);

if (hidlService != nullptr) {

const sp<IBase> remove = hidlService->getService();

if (remove != nullptr) {

const std::string instanceName = name;

removeService(remove, &instanceName /* restrictToInstanceName */);

}

}

}

// 4. With the checks done, register the service in mServiceMap

for(size_t i = 0; i < interfaceChain.size(); i++) {

const std::string fqName = interfaceChain[i];

PackageInterfaceMap &ifaceMap = mServiceMap[fqName];

HidlService *hidlService = ifaceMap.lookup(name);

if (hidlService == nullptr) {

// Insert the service into mServiceMap; later lookups are served from here

ifaceMap.insertService(

std::make_unique<HidlService>(fqName, name, service, callingContext.pid));

} else {

hidlService->setService(service, callingContext.pid);

}

ifaceMap.sendPackageRegistrationNotification(fqName, name);

}

// Link to the service's death notification so we can clean up when it dies

bool linkRet = service->linkToDeath(this, kServiceDiedCookie).withDefault(false);

if (!linkRet) {

LOG(ERROR) << "Could not link to death for " << interfaceChain[0] << "/" << name;

}

return true;

}

5.3 toBinder()

Finds the Binder object corresponding to the ServiceManager. The Binder object it ends up with is a BnHwServiceManager.
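The caching done by getOrCreateCachedBinder() can be sketched as a map of weak pointers keyed by the interface pointer, with std::weak_ptr::lock() standing in for wp<>::promote(). Iface, BnStub, and StubCache are hypothetical. The remote (isRemote()) branch and the descriptor-based constructor lookup are left out.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <mutex>
#include <string>

// Hypothetical stand-ins: an interface implementation and its "Bn" stub.
struct Iface { std::string descriptor; };
struct BnStub { Iface* impl; };

// One stub per interface pointer, held weakly like gBnMap's wp<BHwBinder>.
class StubCache {
public:
    std::shared_ptr<BnStub> getOrCreate(Iface* iface) {
        if (iface == nullptr) return nullptr;
        std::lock_guard<std::mutex> lock(mMutex);             // "for get + set"
        std::shared_ptr<BnStub> stub = mCache[iface].lock();  // like wp<>::promote()
        if (stub == nullptr) {
            stub = std::make_shared<BnStub>(BnStub{iface});
            mCache[iface] = stub;
        }
        return stub;
    }

private:
    std::mutex mMutex;
    std::map<Iface*, std::weak_ptr<BnStub>> mCache;
};
```

Because the stubs are held weakly, the cache never keeps a stub alive on its own. Once every strong reference is gone, the next call builds a fresh stub, just as a failed promote() leads to a new Bn object.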

[/system/libhidl/transport/include/hidl/HidlBinderSupport.h]

template <typename IType>

sp<IBinder> toBinder(sp<IType> iface) {

IType *ifacePtr = iface.get();

return getOrCreateCachedBinder(ifacePtr);

}

Specialized for our case, it becomes:

sp<IBinder> toBinder(sp<IServiceManager> manager) {

IServiceManager *ifacePtr = manager.get(); // raw pointer to the IServiceManager implementation

return getOrCreateCachedBinder(ifacePtr);

}

[/system/libhidl/transport/include/hidl/HidlBinderSupport.cpp]

sp<IBinder> getOrCreateCachedBinder(::android::hidl::base::V1_0::IBase* ifacePtr) {

if (ifacePtr == nullptr) {

return nullptr;

}

// First check whether the HAL object is a remote proxy (BpHwXxx); if so, return its remote BpHwBinder directly

if (ifacePtr->isRemote()) {

using ::android::hidl::base::V1_0::BpHwBase;

BpHwBase* bpBase = static_cast<BpHwBase*>(ifacePtr);

BpHwRefBase* bpRefBase = static_cast<BpHwRefBase*>(bpBase);

return sp<IBinder>(bpRefBase->remote());

}

// Get the IServiceManager descriptor

std::string descriptor = details::getDescriptor(ifacePtr);

if (descriptor.empty()) {

// interfaceDescriptor fails

return nullptr;

}

// for get + set

std::unique_lock<std::mutex> _lock = details::gBnMap->lock();

wp<BHwBinder> wBnObj = details::gBnMap->getLocked(ifacePtr, nullptr);

sp<IBinder> sBnObj = wBnObj.promote();

if (sBnObj == nullptr) {

// Look up the Bn constructor registered for this descriptor in the BnConstructorMap

auto func = details::getBnConstructorMap().get(descriptor, nullptr);

if (!func) {

// TODO(b/69122224): remove this static variable when prebuilts updated

func = details::gBnConstructorMap->get(descriptor, nullptr);

}

LOG_ALWAYS_FATAL_IF(func == nullptr, "%s gBnConstructorMap returned null for %s", __func__,

descriptor.c_str());

// Call the constructor and wrap the result in an IBinder strong pointer

sBnObj = sp<IBinder>(func(static_cast<void*>(ifacePtr)));

LOG_ALWAYS_FATAL_IF(sBnObj == nullptr, "%s Bn constructor function returned null for %s",

__func__, descriptor.c_str());

details::gBnMap->setLocked(ifacePtr, static_cast<BHwBinder*>(sBnObj.get()));

}

return sBnObj;

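}

This raises a question: where does the constructor returned by getBnConstructorMap() come from?

The descriptor passed in here is the IServiceManager descriptor, so we go back to the IServiceManager implementation. ServiceManagerAll.cpp begins with the following block: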

[/out/soong/.intermediates/system/libhidl/transport/manager/1.0/android.hidl.manager@1.0_genc++/gen/android/hidl/manager/1.2/ServiceManagerAll.cpp]

__attribute__((constructor)) static void static_constructor() {

::android::hardware::details::getBnConstructorMap().set(IServiceManager::descriptor,

[](void *iIntf) -> ::android::sp<::android::hardware::IBinder> {

return new BnHwServiceManager(static_cast<IServiceManager *>(iIntf));

});

::android::hardware::details::getBsConstructorMap().set(IServiceManager::descriptor,

[](void *iIntf) -> ::android::sp<::android::hidl::base::V1_0::IBase> {

return new BsServiceManager(static_cast<IServiceManager *>(iIntf));

});

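};

__attribute__((constructor)) is not standard C++. It is a GCC extension (also supported by Clang), and the constructor attribute makes a function run automatically before main() is entered.

So in the HwServiceManager process, the body of this __attribute__((constructor)) function runs before main() in service.cpp. It inserts a factory for BnHwServiceManager into BnConstructorMap and a factory for BsServiceManager into BsConstructorMap.

Once main() is running, any call to getBnConstructorMap().get() with the IServiceManager descriptor returns that factory. The Binder object that getOrCreateCachedBinder() creates here is therefore a BnHwServiceManager.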
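The same registration-before-main() trick can be reproduced in a few lines, assuming GCC or Clang. The descriptor string, ServiceImpl, and BnStub are hypothetical placeholders for IServiceManager::descriptor, the service implementation, and BnHwServiceManager. The map is a function-local static, so it is constructed on first use even though the constructor function runs before main().

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <memory>
#include <string>

// Hypothetical stubs standing in for IServiceManager / BnHwServiceManager.
struct Base { virtual ~Base() = default; };
struct ServiceImpl : Base {};
struct BnStub : Base {
    explicit BnStub(ServiceImpl* i) : impl(i) {}
    ServiceImpl* impl;
};

using Constructor = std::function<std::shared_ptr<Base>(void*)>;

// Function-local static avoids static-initialization-order problems.
std::map<std::string, Constructor>& bnConstructorMap() {
    static std::map<std::string, Constructor> map;
    return map;
}

// GCC/Clang extension: runs before main(), like the generated static_constructor().
__attribute__((constructor)) static void registerStubFactory() {
    bnConstructorMap()["example@1.0::IExample"] = [](void* iface) -> std::shared_ptr<Base> {
        return std::make_shared<BnStub>(static_cast<ServiceImpl*>(iface));
    };
}
```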

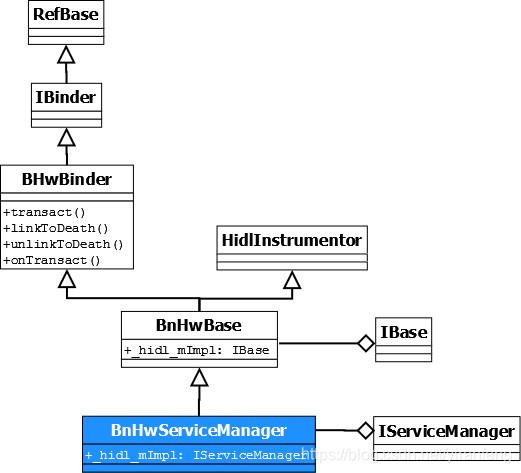

The figure above shows the BnHwServiceManager class hierarchy.

5.4 ProcessState::becomeContextManager()

Makes HwServiceManager the single context manager of the hwbinder domain, that is, the service manager. This is why HwServiceManager is referred to as a daemon.

bool ProcessState::becomeContextManager(context_check_func checkFunc, void* userData)

{

if (!mManagesContexts) {

AutoMutex _l(mLock);

mBinderContextCheckFunc = checkFunc;

mBinderContextUserData = userData;

flat_binder_object obj {

.flags = FLAT_BINDER_FLAG_TXN_SECURITY_CTX,

};

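// Android 10 introduces BINDER_SET_CONTEXT_MGR_EXT, which registers HwServiceManager as context manager with FLAT_BINDER_FLAG_TXN_SECURITY_CTX so that the driver attaches the caller's security context to transactions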

status_t result = ioctl(mDriverFD, BINDER_SET_CONTEXT_MGR_EXT, &obj);

// fallback to original method

if (result != 0) {

android_errorWriteLog(0x534e4554, "121035042");

int dummy = 0;

// If that fails, fall back to the original BINDER_SET_CONTEXT_MGR

result = ioctl(mDriverFD, BINDER_SET_CONTEXT_MGR, &dummy);

}

if (result == 0) {

mManagesContexts = true;

} else if (result == -1) {

mBinderContextCheckFunc = nullptr;

mBinderContextUserData = nullptr;

ALOGE("Binder ioctl to become context manager failed: %s\n", strerror(errno));

}

}

return mManagesContexts;

}

5.5 HwBinderCallback::setupTo()

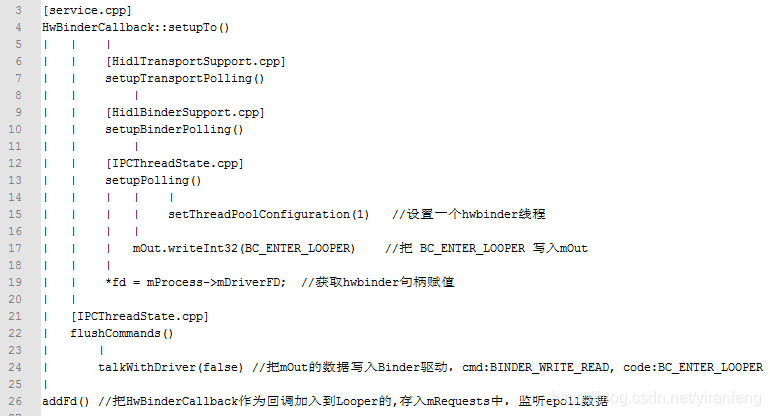

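The figure above shows the call stack.

setupTo() mainly adds the HwBinderCallback object to the Looper as a callback. The fd is the hwbinder descriptor opened earlier, with ident POLL_CALLBACK and events EVENT_INPUT. epoll_ctl() then watches that fd, which prepares for callback handling later.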

[/system/hwservicemanager/service.cpp]

static sp<HwBinderCallback> setupTo(const sp<Looper>& looper) {

// 1. Create the HwBinderCallback object

sp<HwBinderCallback> cb = new HwBinderCallback;

// 2. Get the descriptor from the earlier open("/dev/hwbinder") (mProcess->mDriverFD), configure a single polling thread, and write BC_ENTER_LOOPER into mOut

// See [5.5.1]

int fdHwBinder = setupTransportPolling();

LOG_ALWAYS_FATAL_IF(fdHwBinder < 0, "Failed to setupTransportPolling: %d", fdHwBinder);

// Flush after setupPolling(), to make sure the binder driver

// knows about this thread handling commands.

// 3. This ends up in talkWithDriver(), which sends the queued BC_ENTER_LOOPER command to the binder driver, see [5.5.2]

IPCThreadState::self()->flushCommands();

// Add HwBinderCallback to the Looper as the IPC request callback; it is invoked when data arrives

int ret = looper->addFd(fdHwBinder,

Looper::POLL_CALLBACK,

Looper::EVENT_INPUT,

cb,

nullptr /*data*/);

LOG_ALWAYS_FATAL_IF(ret != 1, "Failed to add binder FD to Looper");

return cb;

}

5.5.1 setupPolling()

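As the setupTo() call stack shows, setupTransportPolling() ends up calling setupPolling(). This function configures a single hwbinder polling thread and writes the command BC_ENTER_LOOPER into mOut for the upcoming exchange with the driver. It then returns the hwbinder descriptor through fd.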

[/system/libhwbinder/IPCThreadState.cpp]

int IPCThreadState::setupPolling(int* fd)

{

if (mProcess->mDriverFD <= 0) {

return -EBADF;

}

// Tells the kernel to not spawn any additional binder threads,

// as that won't work with polling. Also, the caller is responsible

// for subsequently calling handlePolledCommands()

// Configure the hwbinder thread pool

mProcess->setThreadPoolConfiguration(1, true /* callerWillJoin */);

mIsPollingThread = true;

mOut.writeInt32(BC_ENTER_LOOPER); // queue BC_ENTER_LOOPER in mOut

*fd = mProcess->mDriverFD; // return the hwbinder descriptor

return 0;

}

5.5.2 IPCThreadState::talkWithDriver()

Writes the contents of mOut (here the command BC_ENTER_LOOPER) to the hwbinder driver and stores whatever the driver returns in mIn.
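The buffer bookkeeping before the BINDER_WRITE_READ ioctl is pure arithmetic, so it can be isolated in a small function. planWriteRead() and WriteReadPlan are hypothetical names; the two conditions are copied from the excerpt.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical mirror of the sizing talkWithDriver() does; no driver involved.
struct WriteReadPlan {
    std::size_t writeSize;
    std::size_t readSize;
    bool skipIoctl;  // both sides empty: talkWithDriver() returns NO_ERROR early
};

WriteReadPlan planWriteRead(std::size_t inPos, std::size_t inSize, std::size_t inCapacity,
                            std::size_t outSize, bool doReceive) {
    // The read buffer is "empty" once everything already in mIn was consumed.
    const bool needRead = inPos >= inSize;
    // Don't write while the caller still wants to drain leftover input.
    const std::size_t outAvail = (!doReceive || needRead) ? outSize : 0;
    const std::size_t readSize = (doReceive && needRead) ? inCapacity : 0;
    return {outAvail, readSize, outAvail == 0 && readSize == 0};
}
```

With doReceive == false (the flushCommands() case), only the write side is filled. When the caller wants to receive but unread input remains in mIn, nothing is written or read, and the ioctl is skipped.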

[/system/libhwbinder/IPCThreadState.cpp]

status_t IPCThreadState::talkWithDriver(bool doReceive)

{

if (mProcess->mDriverFD <= 0) {

return -EBADF;

}

binder_write_read bwr; // write_read structure used to exchange data with the hwbinder driver

// Is the read buffer empty?

const bool needRead = mIn.dataPosition() >= mIn.dataSize();

// We don't want to write anything if we are still reading

// from data left in the input buffer and the caller

// has requested to read the next data.

const size_t outAvail = (!doReceive || needRead) ? mOut.dataSize() : 0;

bwr.write_size = outAvail;

bwr.write_buffer = (uintptr_t)mOut.data(); // mOut's data goes into write_buffer, here BC_ENTER_LOOPER

// For flushCommands(), doReceive is false: this call only writes and does not read

if (doReceive && needRead) {

// Describe the receive buffer; anything the driver returns is written directly into mIn

bwr.read_size = mIn.dataCapacity();

bwr.read_buffer = (uintptr_t)mIn.data();

} else {

// Nothing to read: leave the read side empty so the driver only processes the write

bwr.read_size = 0;

bwr.read_buffer = 0;

}

// If both the read and write buffers are empty, return immediately

if ((bwr.write_size == 0) && (bwr.read_size == 0)) return NO_ERROR;

bwr.write_consumed = 0;

bwr.read_consumed = 0;

status_t err;

do {

#if defined(__ANDROID__)

// Talk to the hwbinder driver through the BINDER_WRITE_READ ioctl

if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) >= 0)

err = NO_ERROR;

else

err = -errno;

#else

err = INVALID_OPERATION;

#endif

if (mProcess->mDriverFD <= 0) {

err = -EBADF;

}

} while (err == -EINTR); // retry if interrupted

...

if (err >= NO_ERROR) {

if (bwr.write_consumed > 0) {

if (bwr.write_consumed < mOut.dataSize())

mOut.remove(0, bwr.write_consumed);

else {

mOut.setDataSize(0);

processPostWriteDerefs();

}

}

// Data read from the binder driver is now in mIn

if (bwr.read_consumed > 0) {

mIn.setDataSize(bwr.read_consumed);

mIn.setDataPosition(0);

}

...

return NO_ERROR;

}

return err;

}

5.5.3 Looper::addFd()

Adds the fd to the thread's epoll set. When epoll_wait() reports data on the fd, the corresponding data can then be processed.
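The add-or-modify decision in addFd() can be tried with real epoll calls. EpollRegistry is hypothetical and keeps only the epoll bookkeeping. It has no callbacks, sequence numbers, or locking, and none of the ENOENT rebuild path.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <sys/epoll.h>
#include <sys/eventfd.h>
#include <unistd.h>

// Minimal sketch of Looper::addFd()'s epoll bookkeeping.
class EpollRegistry {
public:
    EpollRegistry() : mEpollFd(epoll_create1(EPOLL_CLOEXEC)) {}
    ~EpollRegistry() { if (mEpollFd >= 0) close(mEpollFd); }
    EpollRegistry(const EpollRegistry&) = delete;
    EpollRegistry& operator=(const EpollRegistry&) = delete;

    // Returns 1 on success and -1 on failure, like addFd().
    int addFd(int fd, uint32_t events) {
        epoll_event item{};
        item.events = events;
        item.data.fd = fd;
        auto it = mEvents.find(fd);
        if (it == mEvents.end()) {
            // First registration of this fd: EPOLL_CTL_ADD.
            if (epoll_ctl(mEpollFd, EPOLL_CTL_ADD, fd, &item) < 0) return -1;
            mEvents.emplace(fd, events);
        } else {
            // Already registered: update its interest set with EPOLL_CTL_MOD.
            if (epoll_ctl(mEpollFd, EPOLL_CTL_MOD, fd, &item) < 0) return -1;
            it->second = events;
        }
        return 1;
    }

    std::size_t size() const { return mEvents.size(); }

private:
    int mEpollFd;
    std::map<int, uint32_t> mEvents;  // plays the role of mRequests
};
```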

[/system/core/libutils/Looper.cpp]

int Looper::addFd(int fd, int ident, int events, const sp<LooperCallback>& callback, void* data) {

if (!callback.get()) {

if (! mAllowNonCallbacks) {

ALOGE("Invalid attempt to set NULL callback but not allowed for this looper.");

return -1;

}

if (ident < 0) {

ALOGE("Invalid attempt to set NULL callback with ident < 0.");

return -1;

}

} else {

ident = POLL_CALLBACK;

}

{ // acquire lock

AutoMutex _l(mLock);

Request request;

request.fd = fd;

request.ident = ident;

request.events = events;

request.seq = mNextRequestSeq++;

request.callback = callback;

request.data = data;

if (mNextRequestSeq == -1) mNextRequestSeq = 0; // reserve sequence number -1

struct epoll_event eventItem;

request.initEventItem(&eventItem);

ssize_t requestIndex = mRequests.indexOfKey(fd);

if (requestIndex < 0) {

// requestIndex < 0 means the fd was not added before: add it to the epoll set

int epollResult = epoll_ctl(mEpollFd.get(), EPOLL_CTL_ADD, fd, &eventItem);

if (epollResult < 0) {

ALOGE("Error adding epoll events for fd %d: %s", fd, strerror(errno));

return -1;

}

// Store the request (which carries the callback) in mRequests for later dispatch

mRequests.add(fd, request);

} else {

// The fd was added before: update its epoll registration

int epollResult = epoll_ctl(mEpollFd.get(), EPOLL_CTL_MOD, fd, &eventItem);

if (epollResult < 0) {

if (errno == ENOENT) {

...

epollResult = epoll_ctl(mEpollFd.get(), EPOLL_CTL_ADD, fd, &eventItem);

if (epollResult < 0) {

ALOGE("Error modifying or adding epoll events for fd %d: %s",

fd, strerror(errno));

return -1;

}

scheduleEpollRebuildLocked();

} else {

ALOGE("Error modifying epoll events for fd %d: %s", fd, strerror(errno));

return -1;

}

}

mRequests.replaceValueAt(requestIndex, request);

}

} // release lock

return 1;

}

5.6 ClientCallbackCallback::setupTo()

Adds ClientCallbackCallback to the Looper. Its request is stored in the Looper's mRequests, and epoll watches the timer descriptor fdTimer.
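A timerfd like this one can be exercised directly. makePeriodicTimer() and waitForExpirations() are hypothetical helpers. The period is a parameter here (the article uses 5 seconds), and TFD_CLOEXEC is added where the excerpt passes 0. Reading the 8-byte expiration count is what stops the fd from reporting as readable, so a Looper callback for such a timer would typically perform this read.

```cpp
#include <cassert>
#include <cstdint>
#include <ctime>
#include <sys/timerfd.h>
#include <unistd.h>

// Creates a periodic CLOCK_MONOTONIC timerfd; periodMs must be > 0.
int makePeriodicTimer(long periodMs) {
    int fd = timerfd_create(CLOCK_MONOTONIC, TFD_CLOEXEC);
    if (fd < 0) return -1;
    itimerspec spec{};
    spec.it_interval.tv_sec = periodMs / 1000;
    spec.it_interval.tv_nsec = (periodMs % 1000) * 1000000L;
    spec.it_value = spec.it_interval;  // first expiry after one period
    if (timerfd_settime(fd, 0, &spec, nullptr) < 0) {
        close(fd);
        return -1;
    }
    return fd;
}

// Blocks until the timer fires, then returns the number of expirations since
// the last read (0 on a failed read).
uint64_t waitForExpirations(int fd) {
    uint64_t expirations = 0;
    if (read(fd, &expirations, sizeof(expirations)) != static_cast<ssize_t>(sizeof(expirations))) {
        return 0;
    }
    return expirations;
}
```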

[/system/hwservicemanager/service.cpp]

class ClientCallbackCallback : public LooperCallback {

public:

static sp<ClientCallbackCallback> setupTo(const sp<Looper>& looper, const sp<ServiceManager>& manager) {

sp<ClientCallbackCallback> cb = new ClientCallbackCallback(manager);

// Create a timer

int fdTimer = timerfd_create(CLOCK_MONOTONIC, 0 /*flags*/);

LOG_ALWAYS_FATAL_IF(fdTimer < 0, "Failed to timerfd_create: fd: %d err: %d", fdTimer, errno);

itimerspec timespec {

.it_interval = {

.tv_sec = 5, // period: fire every 5 seconds

.tv_nsec = 0,

},

.it_value = {

.tv_sec = 5, // first expiry after 5 seconds

.tv_nsec = 0,

},

};

int timeRes = timerfd_settime(fdTimer, 0 /*flags*/, &timespec, nullptr);

LOG_ALWAYS_FATAL_IF(timeRes < 0, "Failed to timerfd_settime: res: %d err: %d", timeRes, errno);

// Add ClientCallbackCallback to the Looper; its request is stored in mRequests

int addRes = looper->addFd(fdTimer,

Looper::POLL_CALLBACK,

Looper::EVENT_INPUT,

cb,

nullptr);

LOG_ALWAYS_FATAL_IF(addRes != 1, "Failed to add client callback FD to Looper");

return cb;

}

};

5.7 pollAll()

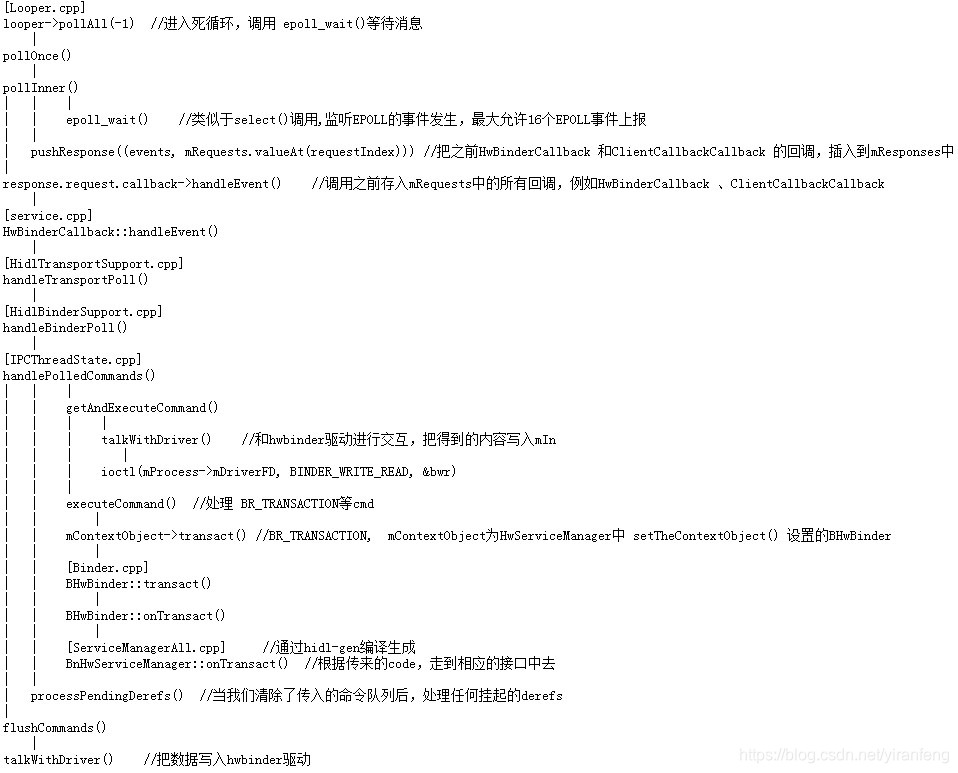

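HwServiceManager runs an infinite loop that waits for and handles events, and pollAll() is the entry point of that wait. Let us first take a brief look at the context of pollAll().

The figure above shows the pollAll() call stack.

The timeoutMillis passed in is -1: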
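The timeoutMillis <= 0 branch of pollAll() reduces to the loop below. pollOnce is passed in as a hypothetical callback. The PollResult names follow Looper, but the numeric values are chosen for this sketch.

```cpp
#include <cassert>
#include <deque>
#include <functional>

// Names follow Looper; the values are chosen for this sketch.
enum PollResult { POLL_WAKE = -1, POLL_CALLBACK = -2, POLL_TIMEOUT = -3, POLL_ERROR = -4 };

// Keep calling pollOnce() for as long as it reports that it only ran callbacks.
int pollAllSketch(const std::function<int()>& pollOnce) {
    int result;
    do {
        result = pollOnce();
    } while (result == POLL_CALLBACK);
    return result;
}
```

hwservicemanager wraps pollAll(-1) in while (true). Any result other than POLL_CALLBACK, such as a wake-up, therefore just starts the next round of waiting.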

[/system/core/libutils/Looper.cpp]

inline int pollAll(int timeoutMillis) {

return pollAll(timeoutMillis, nullptr, nullptr, nullptr);

}

int Looper::pollAll(int timeoutMillis, int* outFd, int* outEvents, void** outData) {

if (timeoutMillis <= 0) {

int result;

do {

// timeoutMillis is -1, so this branch runs: call pollOnce(), see [5.7.1]

result = pollOnce(timeoutMillis, outFd, outEvents, outData);

} while (result == POLL_CALLBACK);

return result;

} else {

nsecs_t endTime = systemTime(SYSTEM_TIME_MONOTONIC)

+ milliseconds_to_nanoseconds(timeoutMillis);

for (;;) {

int result = pollOnce(timeoutMillis, outFd, outEvents, outData);

if (result != POLL_CALLBACK) {

return result;

}

nsecs_t now = systemTime(SYSTEM_TIME_MONOTONIC);

timeoutMillis = toMillisecondTimeoutDelay(now, endTime);

if (timeoutMillis == 0) {

return POLL_TIMEOUT;

}

}

}

}

5.7.1 Looper::pollOnce()

Checks whether any responses need handling and eventually calls pollInner().

[/system/core/libutils/Looper.cpp]

int Looper::pollOnce(int timeoutMillis, int* outFd, int* outEvents, void** outData) {

int result = 0;

for (;;) {

while (mResponseIndex < mResponses.size()) {

const Response& response = mResponses.itemAt(mResponseIndex++);

int ident = response.request.ident;

if (ident >= 0) {

int fd = response.request.fd;

int events = response.events;

void* data = response.request.data;

...

if (outFd != nullptr) *outFd = fd;

if (outEvents != nullptr) *outEvents = events;

if (outData != nullptr) *outData = data;

return ident;

}

}

if (result != 0) {

...

if (outFd != nullptr) *outFd = 0;

if (outEvents != nullptr) *outEvents = 0;

if (outData != nullptr) *outData = nullptr;

return result;

}

// See [5.7.2]

result = pollInner(timeoutMillis);

}

}

5.7.2 Looper::pollInner()

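Calls epoll_wait() to wait for epoll events. Like select(), epoll_wait() watches for events, and at most 16 (EPOLL_MAX_EVENTS) events are reported per call. When events arrive, pollInner() invokes the callbacks stored earlier in mRequests, for example the handleEvent() of HwBinderCallback and ClientCallbackCallback.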
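Here is a stripped-down version of the wait-then-dispatch cycle, assuming one EPOLLIN handler per fd. MiniLooper and Handler are hypothetical. Unlike the real Looper, it has no message queue and no wake fd. It also invokes handlers directly instead of first collecting them into mResponses under a lock. As in pollInner(), a handler that returns 0 is unregistered.

```cpp
#include <cassert>
#include <cerrno>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <map>
#include <sys/epoll.h>
#include <sys/eventfd.h>
#include <unistd.h>
#include <utility>

// Modeled on LooperCallback::handleEvent(): return 0 to unregister the fd.
using Handler = std::function<int(int fd, uint32_t events)>;

class MiniLooper {
public:
    MiniLooper() : mEpollFd(epoll_create1(EPOLL_CLOEXEC)) {}
    ~MiniLooper() { if (mEpollFd >= 0) close(mEpollFd); }
    MiniLooper(const MiniLooper&) = delete;
    MiniLooper& operator=(const MiniLooper&) = delete;

    bool addFd(int fd, Handler handler) {
        epoll_event item{};
        item.events = EPOLLIN;
        item.data.fd = fd;
        if (epoll_ctl(mEpollFd, EPOLL_CTL_ADD, fd, &item) < 0) return false;
        mHandlers[fd] = std::move(handler);
        return true;
    }

    // One pass: wait, then run the handler of every ready fd.
    // Returns the number of handlers invoked, or -1 on an epoll error.
    int pollOnce(int timeoutMillis) {
        epoll_event items[kMaxEvents];
        int count = epoll_wait(mEpollFd, items, kMaxEvents, timeoutMillis);
        if (count < 0) return errno == EINTR ? 0 : -1;
        int invoked = 0;
        for (int i = 0; i < count; i++) {
            int fd = items[i].data.fd;
            auto it = mHandlers.find(fd);
            if (it == mHandlers.end()) continue;  // fd no longer registered
            Handler handler = it->second;          // copy: the entry may be erased below
            ++invoked;
            if (handler(fd, items[i].events) == 0) {
                epoll_ctl(mEpollFd, EPOLL_CTL_DEL, fd, nullptr);
                mHandlers.erase(fd);
            }
        }
        return invoked;
    }

    std::size_t handlerCount() const { return mHandlers.size(); }

private:
    static constexpr int kMaxEvents = 16;  // same cap as EPOLL_MAX_EVENTS
    int mEpollFd;
    std::map<int, Handler> mHandlers;     // plays the role of mRequests
};
```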

[/system/core/libutils/Looper.cpp]

int Looper::pollInner(int timeoutMillis) {

// Adjust the timeout based on when the next message is due.

if (timeoutMillis != 0 && mNextMessageUptime != LLONG_MAX) {

nsecs_t now = systemTime(SYSTEM_TIME_MONOTONIC);

int messageTimeoutMillis = toMillisecondTimeoutDelay(now, mNextMessageUptime);

if (messageTimeoutMillis >= 0

&& (timeoutMillis < 0 || messageTimeoutMillis < timeoutMillis)) {

timeoutMillis = messageTimeoutMillis;

}

...

}

// Poll.

int result = POLL_WAKE;

mResponses.clear();

mResponseIndex = 0;

// We are about to idle.

mPolling = true;

// 1. Call epoll_wait() and wait for epoll events

// Like select(), epoll_wait() watches for events; at most 16 (EPOLL_MAX_EVENTS) are reported per call

struct epoll_event eventItems[EPOLL_MAX_EVENTS];

int eventCount = epoll_wait(mEpollFd.get(), eventItems, EPOLL_MAX_EVENTS, timeoutMillis);

// No longer idling.

mPolling = false;

// Acquire the lock

mLock.lock();

// Rebuild epoll set if needed.

if (mEpollRebuildRequired) {

mEpollRebuildRequired = false;

rebuildEpollLocked();

goto Done;

}

// Check for poll error.

if (eventCount < 0) {

if (errno == EINTR) {

goto Done;

}

ALOGW("Poll failed with an unexpected error: %s", strerror(errno));

result = POLL_ERROR;

goto Done;

}

// Check for poll timeout.

if (eventCount == 0) {

#if DEBUG_POLL_AND_WAKE

ALOGD("%p ~ pollOnce - timeout", this);

#endif

result = POLL_TIMEOUT;

goto Done;

}

for (int i = 0; i < eventCount; i++) {

int fd = eventItems[i].data.fd;

uint32_t epollEvents = eventItems[i].events;

if (fd == mWakeEventFd.get()) {

if (epollEvents & EPOLLIN) {

awoken();

} else {

ALOGW("Ignoring unexpected epoll events 0x%x on wake event fd.", epollEvents);

}

} else {

ssize_t requestIndex = mRequests.indexOfKey(fd);

if (requestIndex >= 0) {

int events = 0;

if (epollEvents & EPOLLIN) events |= EVENT_INPUT;

if (epollEvents & EPOLLOUT) events |= EVENT_OUTPUT;

if (epollEvents & EPOLLERR) events |= EVENT_ERROR;

if (epollEvents & EPOLLHUP) events |= EVENT_HANGUP;

// Queue the matching request (e.g. HwBinderCallback or ClientCallbackCallback) in mResponses

pushResponse(events, mRequests.valueAt(requestIndex));

} else {

ALOGW("Ignoring unexpected epoll events 0x%x on fd %d that is "

"no longer registered.", epollEvents, fd);

}

}

}

Done: ;

// Invoke pending message callbacks.

mNextMessageUptime = LLONG_MAX;

while (mMessageEnvelopes.size() != 0) {

nsecs_t now = systemTime(SYSTEM_TIME_MONOTONIC);

const MessageEnvelope& messageEnvelope = mMessageEnvelopes.itemAt(0);

if (messageEnvelope.uptime <= now) {

// Remove the envelope from the list.

// We keep a strong reference to the handler until the call to handleMessage

// finishes. Then we drop it so that the handler can be deleted *before*

// we reacquire our lock.

{ // obtain handler

sp<MessageHandler> handler = messageEnvelope.handler;

Message message = messageEnvelope.message;

mMessageEnvelopes.removeAt(0);

mSendingMessage = true;

mLock.unlock();

...

handler->handleMessage(message);

} // release handler

mLock.lock();

mSendingMessage = false;

result = POLL_CALLBACK;

} else {

// The last message left at the head of the queue determines the next wakeup time.

mNextMessageUptime = messageEnvelope.uptime;

break;

}

}

// Release the lock

mLock.unlock();

// Invoke all response callbacks.

for (size_t i = 0; i < mResponses.size(); i++) {

Response& response = mResponses.editItemAt(i);

if (response.request.ident == POLL_CALLBACK) {

int fd = response.request.fd;

int events = response.events;

void* data = response.request.data;

...

// Invoke the callbacks stored earlier in mRequests, e.g. the handleEvent() of HwBinderCallback or ClientCallbackCallback

// See [5.7.3]

int callbackResult = response.request.callback->handleEvent(fd, events, data);

if (callbackResult == 0) {

removeFd(fd, response.request.seq);

}

// Clear the callback reference in the response structure promptly because we

// will not clear the response vector itself until the next poll.

response.request.callback.clear();

result = POLL_CALLBACK;

}

}

return result;

}

Here we take HwBinderCallback::handleEvent() as the example callback handler and follow how it is processed.
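Before following that example, the message half of pollInner() (the code after the Done: label above) can be condensed into a small sketch. It assumes, as Looper does, that the envelopes are kept sorted by uptime. Envelope and dispatchDueMessages() are hypothetical names, std::function replaces MessageHandler, the lock that pollInner() drops around handleMessage() is left out, and a single `now` is passed in instead of re-reading the clock on every iteration.

```cpp
#include <cassert>
#include <climits>
#include <cstdint>
#include <deque>
#include <functional>
#include <utility>

// Hypothetical stand-in for Looper's MessageEnvelope.
struct Envelope {
    int64_t uptime;                // monotonic time (ns) at which the message is due
    std::function<void()> handle;  // replaces handler->handleMessage(message)
};

// Dispatches every envelope at the head of an uptime-sorted queue that is due at `now`.
// Returns the uptime of the first envelope that is not due yet, or LLONG_MAX once the
// queue is empty; pollInner() keeps this value in mNextMessageUptime.
int64_t dispatchDueMessages(std::deque<Envelope>& queue, int64_t now) {
    int64_t nextWakeup = LLONG_MAX;
    while (!queue.empty()) {
        if (queue.front().uptime > now) {
            nextWakeup = queue.front().uptime;  // the head of the queue decides the next wakeup
            break;
        }
        // Remove the envelope first, then run it, as pollInner() does with removeAt(0).
        Envelope envelope = std::move(queue.front());
        queue.pop_front();
        envelope.handle();
    }
    return nextWakeup;
}
```

The returned wakeup time is what lets pollInner() shorten its next epoll timeout when a message is due before the caller's own timeout.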

5.7.3 HwBinderCallback::handleEvent()

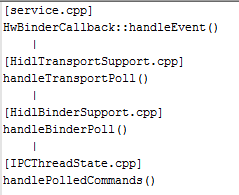

handleEvent call stack:

[/system/hwservicemanager/service.cpp]

class HwBinderCallback : public LooperCallback {

public:

int handleEvent(int fd, int /*events*/, void* /*data*/) override {

handleTransportPoll(fd);

return 1; // Continue receiving callbacks.

}

};

Through handleTransportPoll(), this eventually calls IPCThreadState::handlePolledCommands().
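The `return 1` in HwBinderCallback matters because of the check at the end of pollInner(): a callback that returns 0 has its fd removed with removeFd(). The sketch below models that contract together with the epoll-to-Looper event translation shown earlier. MiniLooper and FdCallback are illustrative names, not libutils APIs, and the EVENT_* bit values are assumed to match Looper's.

```cpp
#include <sys/epoll.h>

#include <cassert>
#include <cstdint>
#include <functional>
#include <map>
#include <utility>
#include <vector>

// Looper-style event bits; the values are assumed to match Looper::EVENT_*.
enum : int {
    EVENT_INPUT  = 1 << 0,
    EVENT_OUTPUT = 1 << 1,
    EVENT_ERROR  = 1 << 2,
    EVENT_HANGUP = 1 << 3,
};

// The same epoll-to-Looper translation pollInner() performs before pushResponse().
int toLooperEvents(uint32_t epollEvents) {
    int events = 0;
    if (epollEvents & EPOLLIN)  events |= EVENT_INPUT;
    if (epollEvents & EPOLLOUT) events |= EVENT_OUTPUT;
    if (epollEvents & EPOLLERR) events |= EVENT_ERROR;
    if (epollEvents & EPOLLHUP) events |= EVENT_HANGUP;
    return events;
}

// Hypothetical stand-in for LooperCallback::handleEvent():
// return 1 to keep receiving callbacks, 0 to have the fd removed.
using FdCallback = std::function<int(int fd, int events)>;

class MiniLooper {
public:
    void addFd(int fd, FdCallback cb) { requests_[fd] = std::move(cb); }
    bool hasFd(int fd) const { return requests_.count(fd) != 0; }

    // Step 1: queue a response for a registered fd; unknown fds are ignored.
    void onEpollEvent(int fd, uint32_t epollEvents) {
        auto it = requests_.find(fd);
        if (it == requests_.end()) return;  // pollInner() logs "no longer registered"
        responses_.push_back({fd, toLooperEvents(epollEvents), it->second});
    }

    // Step 2: run every queued callback; a result of 0 unregisters the fd.
    void dispatchResponses() {
        for (Response& r : responses_) {
            if (r.callback(r.fd, r.events) == 0) requests_.erase(r.fd);
        }
        responses_.clear();  // pollInner() instead clears mResponses at the next poll
    }

private:
    struct Response {
        int fd;
        int events;
        FdCallback callback;  // own copy, like the callback reference held in Response
    };
    std::map<int, FdCallback> requests_;  // plays the role of mRequests
    std::vector<Response> responses_;     // plays the role of mResponses
};
```

An HwBinderCallback-style callback is simply one that always returns 1, so the hwbinder fd stays registered and the loop keeps delivering events for it.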

5.7.4 IPCThreadState::handlePolledCommands()

Reads commands from hwbinder and executes them, then writes the data in mOut to the hwbinder driver.
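The drain loop described above can be sketched with a simplified model of mIn, assuming it holds nothing but a flat sequence of 32-bit commands. InBuffer and MiniIPC are hypothetical; the real getAndExecuteCommand() also calls talkWithDriver() and reads per-command payloads, and processPendingDerefs() is omitted here.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical model of mIn: 32-bit commands plus a read cursor.
// The real mIn is a Parcel filled by talkWithDriver().
struct InBuffer {
    std::vector<int32_t> data;
    size_t pos = 0;
    bool hasUnread() const { return pos < data.size(); }  // dataPosition() < dataSize()
    int32_t readInt32() { return data[pos++]; }
};

struct MiniIPC {
    InBuffer in;
    std::vector<int32_t> executed;  // commands "executed" so far
    int flushes = 0;                // times flushCommands() would have run

    // Simplified getAndExecuteCommand(): consume one command if there is one.
    int getAndExecuteCommand() {
        if (in.hasUnread()) executed.push_back(in.readInt32());
        return 0;  // NO_ERROR
    }

    // Same shape as handlePolledCommands(): drain mIn, then flush once.
    int handlePolledCommands() {
        int result;
        do {
            result = getAndExecuteCommand();
        } while (in.hasUnread());
        ++flushes;
        return result;
    }
};
```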

[/system/libhwbinder/IPCThreadState.cpp]

status_t IPCThreadState::handlePolledCommands()

{

status_t result;

do {

//1. Fetch a command and execute it, see [5.7.5]

result = getAndExecuteCommand();

} while (mIn.dataPosition() < mIn.dataSize());

processPendingDerefs();

flushCommands();

return result;

}

5.7.5 IPCThreadState::getAndExecuteCommand()

Reads a cmd from hwbinder, then executes it.
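Besides reading cmd, getAndExecuteCommand() does some thread-pool bookkeeping before executing it: it counts the calling thread as busy and, when every thread of a pool larger than one is busy, records when starvation began. A minimal sketch of just that step follows, with hypothetical names for the ProcessState fields and nowMs standing in for uptimeMillis(). The code after executeCommand() is elided in the excerpt below, so its counterpart is not modeled.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <mutex>

// Hypothetical mirror of the ProcessState fields used in getAndExecuteCommand().
struct ThreadPoolStats {
    std::mutex lock;                // mThreadCountLock
    size_t executingThreads = 0;    // mExecutingThreadsCount
    size_t maxThreads = 0;          // mMaxThreads
    int64_t starvationStartMs = 0;  // mStarvationStartTimeMs, 0 = not starving
};

// Runs before executeCommand(): mark this thread busy and, if the whole pool
// (of more than one thread) is now busy, record when starvation started.
void onCommandStart(ThreadPoolStats& s, int64_t nowMs) {
    std::lock_guard<std::mutex> guard(s.lock);
    s.executingThreads++;
    if (s.executingThreads >= s.maxThreads && s.maxThreads > 1 &&
        s.starvationStartMs == 0) {
        s.starvationStartMs = nowMs;
    }
}
```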

[/system/libhwbinder/IPCThreadState.cpp]

status_t IPCThreadState::getAndExecuteCommand()

{

status_t result;

int32_t cmd;

//1. Send a read_write command to hwbinder and fetch the data hwbinder returns

//see [5.5.2]

result = talkWithDriver();

if (result >= NO_ERROR) {

size_t IN = mIn.dataAvail();

if (IN < sizeof(int32_t)) return result;

//Read the cmd returned by the hwbinder driver

cmd = mIn.readInt32();

IF_LOG_COMMANDS() {

alog << "Processing top-level Command: "

<< getReturnString(cmd) << endl;

}

pthread_mutex_lock(&mProcess->mThreadCountLock);

mProcess->mExecutingThreadsCount++;

if (mProcess->mExecutingThreadsCount >= mProcess->mMaxThreads &&

mProcess->mMaxThreads > 1 && mProcess->mStarvationStartTimeMs == 0) {

mProcess->mStarvationStartTimeMs = uptimeMillis();

}

pthread_mutex_unlock(&mProcess->mThreadCountLock);

//2. Handle BR_TRANSACTION and the other commands returned by the hwbinder driver, see [5.7.6]

result = executeCommand(cmd);

...

return result;

}

5.7.6 IPCThreadState::executeCommand()

Executes the command. The command hwservicemanager most commonly receives from the hwbinder driver is BR_TRANSACTION.
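The BR_TRANSACTION case below guarantees that a two-way call gets exactly one reply, whether the service answers through reply_callback or only returns an error code. A simplified sketch of that rule: Reply, ReplySink, Handler and runTransaction() are hypothetical, and appending to a vector stands in for sendReply(). TF_ONE_WAY uses the 0x01 value from the binder UAPI header.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <string>
#include <vector>

constexpr uint32_t TF_ONE_WAY = 0x01;  // same value as in the binder UAPI header

// Hypothetical reply: a status plus a payload.
struct Reply {
    int status = 0;
    std::string payload;
};

// Collects what would be written back with sendReply().
struct ReplySink {
    std::vector<Reply> sent;
};

using ReplyCallback = std::function<void(Reply&)>;
// Hypothetical service method: may call the callback, returns an error code.
using Handler = std::function<int(const ReplyCallback&)>;

void runTransaction(uint32_t flags, const Handler& handler, ReplySink& sink) {
    bool replySent = false;
    ReplyCallback replyCallback = [&](Reply& reply) {
        if (replySent) return;  // "Dropping binder reply, it was sent already."
        replySent = true;
        if ((flags & TF_ONE_WAY) == 0) {
            reply.status = 0;  // replyParcel.setError(NO_ERROR)
            sink.sent.push_back(reply);
        }  // one-way: nothing is sent
    };
    int error = handler(replyCallback);
    // A two-way call that never replied gets a reply carrying the error code.
    if ((flags & TF_ONE_WAY) == 0 && !replySent) {
        Reply reply;
        reply.status = error;  // reply.setError(error)
        sink.sent.push_back(reply);
    }
}
```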

[/system/libhwbinder/IPCThreadState.cpp]

status_t IPCThreadState::executeCommand(int32_t cmd)

{

BHwBinder* obj;

RefBase::weakref_type* refs;

status_t result = NO_ERROR;

switch ((uint32_t)cmd) {

...

case BR_TRANSACTION_SEC_CTX:

case BR_TRANSACTION:

{

binder_transaction_data_secctx tr_secctx;

binder_transaction_data& tr = tr_secctx.transaction_data;

if (cmd == BR_TRANSACTION_SEC_CTX) {

result = mIn.read(&tr_secctx, sizeof(tr_secctx));

} else {

result = mIn.read(&tr, sizeof(tr));

tr_secctx.secctx = 0;

}

ALOG_ASSERT(result == NO_ERROR,

"Not enough command data for brTRANSACTION");

if (result != NO_ERROR) break;

// Record the fact that we're in a hwbinder call

mIPCThreadStateBase->pushCurrentState(

IPCThreadStateBase::CallState::HWBINDER);

Parcel buffer;

buffer.ipcSetDataReference(

reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

tr.data_size,

reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),

tr.offsets_size/sizeof(binder_size_t), freeBuffer, this);

const pid_t origPid = mCallingPid;

const char* origSid = mCallingSid;

const uid_t origUid = mCallingUid;

const int32_t origStrictModePolicy = mStrictModePolicy;

const int32_t origTransactionBinderFlags = mLastTransactionBinderFlags;

mCallingPid = tr.sender_pid;

mCallingSid = reinterpret_cast<const char*>(tr_secctx.secctx);

mCallingUid = tr.sender_euid;

mLastTransactionBinderFlags = tr.flags;

Parcel reply;

status_t error;

bool reply_sent = false;

...

//Build an anonymous callback (a lambda); once the request has been handled, it is used to send replyParcel

auto reply_callback = [&] (auto &replyParcel) {

if (reply_sent) {

// Reply was sent earlier, ignore it.

ALOGE("Dropping binder reply, it was sent already.");

return;

}

reply_sent = true;

if ((tr.flags & TF_ONE_WAY) == 0) {

replyParcel.setError(NO_ERROR);

sendReply(replyParcel, 0);

} else {

ALOGE("Not sending reply in one-way transaction");

}

};

//hwservicemanager does not take this branch: its requests arrive with tr.target.ptr == 0 and go to mContextObject below

if (tr.target.ptr) {

// We only have a weak reference on the target object, so we must first try to

// safely acquire a strong reference before doing anything else with it.

if (reinterpret_cast<RefBase::weakref_type*>(

tr.target.ptr)->attemptIncStrong(this)) {

error = reinterpret_cast<BHwBinder*>(tr.cookie)->transact(tr.code, buffer,

&reply, tr.flags, reply_callback);

reinterpret_cast<BHwBinder*>(tr.cookie)->decStrong(this);

} else {

error = UNKNOWN_TRANSACTION;

}

} else {

//mContextObject is the BnHwServiceManager we set earlier, see [5.7.7]

error = mContextObject->transact(tr.code, buffer, &reply, tr.flags, reply_callback);

}

mIPCThreadStateBase->popCurrentState();

if ((tr.flags & TF_ONE_WAY) == 0) {

if (!reply_sent) {

// Should have been a reply but there wasn't, so there

// must have been an error instead.

reply.setError(error);

sendReply(reply, 0);

} else {

if (error != NO_ERROR) {

ALOGE("transact() returned error after sending reply.");

} else {

// Ok, reply sent and transact didn't return an error.

}

}

} else {

// One-way transaction, don't care about return value or reply.

}

//ALOGI("<<<< TRANSACT from pid %d restore pid %d sid %s uid %d\n",

// mCallingPid, origPid, (origSid ? origSid : "<N/A>"), origUid);

mCallingPid = origPid;

mCallingSid = origSid;

mCallingUid = origUid;

mStrictModePolicy = origStrictModePolicy;

mLastTransactionBinderFlags = origTransactionBinderFlags;

IF_LOG_TRANSACTIONS() {

alog << "BC_REPLY thr " << (void*)pthread_self() << " / obj "

<< tr.target.ptr << ": " << indent << reply << dedent << endl;

}

}

break;

case BR_DEAD_BINDER:

{

BpHwBinder *proxy = (BpHwBinder*)mIn.readPointer();

proxy->sendObituary();

mOut.writeInt32(BC_DEAD_BINDER_DONE);

mOut.writePointer((uintptr_t)proxy);

} break;

...

}

if (result != NO_ERROR) {

mLastError = result;

}

return result;

}

5.7.7 BHwBinder::transact()

For the incoming BR_TRANSACTION, the call ends up in BnHwServiceManager's transact(). BnHwServiceManager does not override transact(), however, so the base-class implementation, BHwBinder::transact(), is what runs.
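In C++ terms this is the template-method pattern: the base transact(), which the stub does not override, rewinds the request, wraps the caller's callback, and dispatches to the virtual onTransact() that the generated stub does override. A sketch with hypothetical classes (MiniBinder for BHwBinder, MiniServiceManagerStub for BnHwServiceManager) and a Parcel reduced to its cursor:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>

// Hypothetical parcel reduced to its read/write cursor.
struct Parcel {
    size_t position = 0;
    void setDataPosition(size_t p) { position = p; }
};

using TransactCallback = std::function<void(Parcel&)>;

// Plays BHwBinder: subclasses do not override transact(); they override onTransact().
class MiniBinder {
public:
    virtual ~MiniBinder() = default;

    int transact(uint32_t code, Parcel& data, Parcel* reply, uint32_t flags,
                 TransactCallback callback) {
        data.setDataPosition(0);  // read the request from the start
        return onTransact(code, data, reply, flags, [&](Parcel& replyParcel) {
            replyParcel.setDataPosition(0);  // rewind so the caller reads the reply from the start
            if (callback != nullptr) callback(replyParcel);
        });
    }

protected:
    // Pure virtual only to keep the sketch short; BHwBinder supplies a default.
    virtual int onTransact(uint32_t code, Parcel& data, Parcel* reply, uint32_t flags,
                           TransactCallback cb) = 0;
};

// Plays BnHwServiceManager: only onTransact() is overridden.
class MiniServiceManagerStub : public MiniBinder {
public:
    uint32_t lastCode = 0;

protected:
    int onTransact(uint32_t code, Parcel& /*data*/, Parcel* reply, uint32_t /*flags*/,
                   TransactCallback cb) override {
        lastCode = code;
        reply->setDataPosition(8);  // pretend a result was written into the reply
        cb(*reply);
        return 0;
    }
};
```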

[/system/libhwbinder/Binder.cpp]

status_t BHwBinder::transact(

uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags, TransactCallback callback)

{

data.setDataPosition(0);

status_t err = NO_ERROR;

switch (code) {

default:

//BnHwServiceManager implements onTransact(), see [5.7.8]

err = onTransact(code, data, reply, flags,

[&](auto &replyParcel) {

replyParcel.setDataPosition(0);

if (callback != nullptr) {

callback(replyParcel);

}

});

break;

}

return err;

}

5.7.8 BnHwServiceManager::onTransact()

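Requests from other processes are ultimately handled here. The object that actually does the work is ServiceManager, which runs a different interface depending on the code passed in by the client.

For example, when a process registers a HIDL service by calling registerAsService(), on Android 10.0 the call ends in the addWithChain() interface, whose code is 12.

When a process needs to obtain a HIDL service, it calls the get() interface, whose code is 1.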
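Stripped of the generated boilerplate, onTransact() is a lookup from transaction code to handler, where every case shown below rejects a oneway call and unknown codes fall through to BnHwBase::onTransact(). A sketch under those assumptions: MiniManagerStub is hypothetical, only codes 1 (get) and 12 (addWithChain) are wired up, and the numeric status values are placeholders rather than the constants from utils/Errors.h.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <map>

// Placeholder status values; the real ones come from utils/Errors.h.
constexpr int OK = 0;
constexpr int UNKNOWN_ERROR = -1;
constexpr int UNKNOWN_TRANSACTION = -2;
constexpr uint32_t FLAG_ONEWAY = 1u;  // same bit the generated code tests with "& 1u"

// Hypothetical stand-in for the generated BnHwServiceManager::onTransact().
class MiniManagerStub {
public:
    int getCalls = 0;
    int addWithChainCalls = 0;

    MiniManagerStub() {
        handlers_[1] = [this] { ++getCalls; return OK; };            // get
        handlers_[12] = [this] { ++addWithChainCalls; return OK; };  // addWithChain
    }
    MiniManagerStub(const MiniManagerStub&) = delete;  // handlers capture `this`
    MiniManagerStub& operator=(const MiniManagerStub&) = delete;

    int onTransact(uint32_t code, uint32_t flags) {
        auto it = handlers_.find(code);
        if (it == handlers_.end()) return baseOnTransact(code);  // the "default:" branch
        if (flags & FLAG_ONEWAY) return UNKNOWN_ERROR;           // these methods are two-way only
        return it->second();
    }

private:
    // Placeholder for BnHwBase::onTransact(): this sketch knows no IBase codes.
    int baseOnTransact(uint32_t /*code*/) { return UNKNOWN_TRANSACTION; }

    std::map<uint32_t, std::function<int()>> handlers_;
};
```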

[/out/soong/.intermediates/system/libhidl/transport/manager/1.0/android.hidl.manager@1.0_genc++/gen/android/hidl/manager/1.2/ServiceManagerAll.cpp]

::android::status_t BnHwServiceManager::onTransact(

uint32_t _hidl_code,

const ::android::hardware::Parcel &_hidl_data,

::android::hardware::Parcel *_hidl_reply,

uint32_t _hidl_flags,

TransactCallback _hidl_cb) {

::android::status_t _hidl_err = ::android::OK;

switch (_hidl_code) {

case 1 /* get */:

{

bool _hidl_is_oneway = _hidl_flags & 1u /* oneway */;

if (_hidl_is_oneway != false) {

return ::android::UNKNOWN_ERROR;

}

_hidl_err = ::android::hidl::manager::V1_0::BnHwServiceManager::_hidl_get(this, _hidl_data, _hidl_reply, _hidl_cb);

break;

}

case 2 /* add */:

{

bool _hidl_is_oneway = _hidl_flags & 1u /* oneway */;

if (_hidl_is_oneway != false) {

return ::android::UNKNOWN_ERROR;

}

_hidl_err = ::android::hidl::manager::V1_0::BnHwServiceManager::_hidl_add(this, _hidl_data, _hidl_reply, _hidl_cb);

break;

}

case 3 /* getTransport */:

{

bool _hidl_is_oneway = _hidl_flags & 1u /* oneway */;

if (_hidl_is_oneway != false) {

return ::android::UNKNOWN_ERROR;

}

_hidl_err = ::android::hidl::manager::V1_0::BnHwServiceManager::_hidl_getTransport(this, _hidl_data, _hidl_reply, _hidl_cb);

break;

}

case 4 /* list */:

{

bool _hidl_is_oneway = _hidl_flags & 1u /* oneway */;

if (_hidl_is_oneway != false) {

return ::android::UNKNOWN_ERROR;

}

_hidl_err = ::android::hidl::manager::V1_0::BnHwServiceManager::_hidl_list(this, _hidl_data, _hidl_reply, _hidl_cb);

break;

}

case 5 /* listByInterface */:

{

bool _hidl_is_oneway = _hidl_flags & 1u /* oneway */;

if (_hidl_is_oneway != false) {

return ::android::UNKNOWN_ERROR;

}

_hidl_err = ::android::hidl::manager::V1_0::BnHwServiceManager::_hidl_listByInterface(this, _hidl_data, _hidl_reply, _hidl_cb);

break;

}

case 6 /* registerForNotifications */:

{

bool _hidl_is_oneway = _hidl_flags & 1u /* oneway */;

if (_hidl_is_oneway != false) {

return ::android::UNKNOWN_ERROR;

}

_hidl_err = ::android::hidl::manager::V1_0::BnHwServiceManager::_hidl_registerForNotifications(this, _hidl_data, _hidl_reply, _hidl_cb);

break;

}

case 7 /* debugDump */:

{

bool _hidl_is_oneway = _hidl_flags & 1u /* oneway */;

if (_hidl_is_oneway != false) {

return ::android::UNKNOWN_ERROR;

}

_hidl_err = ::android::hidl::manager::V1_0::BnHwServiceManager::_hidl_debugDump(this, _hidl_data, _hidl_reply, _hidl_cb);

break;

}

case 8 /* registerPassthroughClient */:

{

bool _hidl_is_oneway = _hidl_flags & 1u /* oneway */;

if (_hidl_is_oneway != false) {

return ::android::UNKNOWN_ERROR;

}

_hidl_err = ::android::hidl::manager::V1_0::BnHwServiceManager::_hidl_registerPassthroughClient(this, _hidl_data, _hidl_reply, _hidl_cb);

break;

}

case 9 /* unregisterForNotifications */:

{

bool _hidl_is_oneway = _hidl_flags & 1u /* oneway */;

if (_hidl_is_oneway != false) {

return ::android::UNKNOWN_ERROR;

}

_hidl_err = ::android::hidl::manager::V1_1::BnHwServiceManager::_hidl_unregisterForNotifications(this, _hidl_data, _hidl_reply, _hidl_cb);

break;

}

case 10 /* registerClientCallback */:

{

bool _hidl_is_oneway = _hidl_flags & 1u /* oneway */;

if (_hidl_is_oneway != false) {

return ::android::UNKNOWN_ERROR;

}

_hidl_err = ::android::hidl::manager::V1_2::BnHwServiceManager::_hidl_registerClientCallback(this, _hidl_data, _hidl_reply, _hidl_cb);

break;

}

case 11 /* unregisterClientCallback */:

{

bool _hidl_is_oneway = _hidl_flags & 1u /* oneway */;

if (_hidl_is_oneway != false) {

return ::android::UNKNOWN_ERROR;

}

_hidl_err = ::android::hidl::manager::V1_2::BnHwServiceManager::_hidl_unregisterClientCallback(this, _hidl_data, _hidl_reply, _hidl_cb);

break;

}

case 12 /* addWithChain */:

{

bool _hidl_is_oneway = _hidl_flags & 1u /* oneway */;

if (_hidl_is_oneway != false) {

return ::android::UNKNOWN_ERROR;

}

_hidl_err = ::android::hidl::manager::V1_2::BnHwServiceManager::_hidl_addWithChain(this, _hidl_data, _hidl_reply, _hidl_cb);

break;

}

case 13 /* listManifestByInterface */:

{

bool _hidl_is_oneway = _hidl_flags & 1u /* oneway */;

if (_hidl_is_oneway != false) {

return ::android::UNKNOWN_ERROR;

}

_hidl_err = ::android::hidl::manager::V1_2::BnHwServiceManager::_hidl_listManifestByInterface(this, _hidl_data, _hidl_reply, _hidl_cb);

break;

}

case 14 /* tryUnregister */:

{

bool _hidl_is_oneway = _hidl_flags & 1u /* oneway */;

if (_hidl_is_oneway != false) {

return ::android::UNKNOWN_ERROR;

}

_hidl_err = ::android::hidl::manager::V1_2::BnHwServiceManager::_hidl_tryUnregister(this, _hidl_data, _hidl_reply, _hidl_cb);

break;

}

default:

{

return ::android::hidl::base::V1_0::BnHwBase::onTransact(

_hidl_code, _hidl_data, _hidl_reply, _hidl_flags, _hidl_cb);

}

}

if (_hidl_err == ::android::UNEXPECTED_NULL) {

_hidl_err = ::android::hardware::writeToParcel(

::android::hardware::Status::fromExceptionCode(::android::hardware::Status::EX_NULL_POINTER),

_hidl_reply);

}

return _hidl_err;

}

6. Summary

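As the daemon of HwBinder, HwServiceManager's main job is to collect the hardware services that processes register with it; when a process needs a service, HwServiceManager hands out that specific hardware service.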
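The registry role can be pictured as a two-level map, from fully-qualified interface name to instance name to service. The sketch below is a hypothetical simplification (MiniHwServiceManager and Service are illustrative, and std::shared_ptr stands in for sp<IBase>); the real ServiceManager.cpp also handles notifications, client callbacks and access control.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <string>
#include <utility>

// Hypothetical service object; hwservicemanager would hold an sp<IBase>.
struct Service {
    std::string name;
};

// Simplified registry: fully-qualified interface name -> instance name -> service.
class MiniHwServiceManager {
public:
    // Roughly the effect of add()/addWithChain(): remember the service.
    void add(const std::string& fqName, const std::string& instance,
             std::shared_ptr<Service> service) {
        registry_[fqName][instance] = std::move(service);
    }

    // Roughly the effect of get(): return the service, or nullptr if none is registered.
    std::shared_ptr<Service> get(const std::string& fqName, const std::string& instance) const {
        auto iface = registry_.find(fqName);
        if (iface == registry_.end()) return nullptr;
        auto inst = iface->second.find(instance);
        if (inst == iface->second.end()) return nullptr;
        return inst->second;
    }

private:
    std::map<std::string, std::map<std::string, std::shared_ptr<Service>>> registry_;
};
```

For example, a hypothetical android.hardware.foo@1.0::IFoo registered under "default" would be stored at registry_["android.hardware.foo@1.0::IFoo"]["default"].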

7. Code paths:

/system/hwservicemanager/service.cpp

/system/hwservicemanager/ServiceManager.cpp

/system/libhwbinder/Binder.cpp

/system/libhwbinder/IPCThreadState.cpp

/system/libhwbinder/ProcessState.cpp

/system/libhidl/transport/HidlTransportSupport.cpp

/system/libhidl/transport/HidlBinderSupport.cpp

/system/core/libutils/Looper.cpp

/out/soong/.intermediates/system/libhidl/transport/manager/1.0/android.hidl.manager@1.0_genc++/gen/android/hidl/manager/1.0/ServiceManagerAll.cpp

/out/soong/.intermediates/system/libhidl/transport/manager/1.0/android.hidl.manager@1.0_genc++/gen/android/hidl/manager/1.1/ServiceManagerAll.cpp

/out/soong/.intermediates/system/libhidl/transport/manager/1.0/android.hidl.manager@1.0_genc++/gen/android/hidl/manager/1.2/ServiceManagerAll.cpp
