实例分割比物体检测更进一步,它能够识别图像中的单个物体,并将它们与图像的其他部分分割开来。

实例分割模型的输出是一组勾勒出图像中每个物体的遮罩或轮廓,以及每个物体的类标签和置信度分数。当你不仅需要知道物体在图像中的位置,还需要知道它们的具体形状时,实例分割就非常有用了。

YOLO-SEGMENT预测返回的是Python list类型的 Results 对象,包含的数据项很多,结构比较复杂,本文进行详细介绍。

YOLO11-segment默认模型

YOLO11-segment默认模型是使用coco数据集训练的。

path: ../datasets/coco8 # dataset root dir

train: images/train # train images (relative to 'path')

val: images/val # val images (relative to 'path')

test: # test images (optional)

# Classes

names:

0: person

1: bicycle

2: car

......

在COCO数据集中,有80个分类:

{0: 'person', 1: 'bicycle', 2: 'car', 3: 'motorcycle', 4: 'airplane', 5: 'bus', 6: 'train', 7: 'truck', 8: 'boat', 9: 'traffic light', 10: 'fire hydrant', 11: 'stop sign', 12: 'parking meter', 13: 'bench', 14: 'bird', 15: 'cat', 16: 'dog', 17: 'horse', 18: 'sheep', 19: 'cow', 20: 'elephant', 21: 'bear', 22: 'zebra', 23: 'giraffe', 24: 'backpack', 25: 'umbrella', 26: 'handbag', 27: 'tie', 28: 'suitcase', 29: 'frisbee', 30: 'skis', 31: 'snowboard', 32: 'sports ball', 33: 'kite', 34: 'baseball bat', 35: 'baseball glove', 36: 'skateboard', 37: 'surfboard', 38: 'tennis racket', 39: 'bottle', 40: 'wine glass', 41: 'cup', 42: 'fork', 43: 'knife', 44: 'spoon', 45: 'bowl', 46: 'banana', 47: 'apple', 48: 'sandwich', 49: 'orange', 50: 'broccoli', 51: 'carrot', 52: 'hot dog', 53: 'pizza', 54: 'donut', 55: 'cake', 56: 'chair', 57: 'couch', 58: 'potted plant', 59: 'bed', 60: 'dining table', 61: 'toilet', 62: 'tv', 63: 'laptop', 64: 'mouse', 65: 'remote', 66: 'keyboard', 67: 'cell phone', 68: 'microwave', 69: 'oven', 70: 'toaster', 71: 'sink', 72: 'refrigerator', 73: 'book', 74: 'clock', 75: 'vase', 76: 'scissors', 77: 'teddy bear', 78: 'hair drier', 79: 'toothbrush'}

下面结合实例进行具体分析。

Results实例

我们直接使用预训练模型yolo11n-seg.pt对bus.jpg图像进行检测。

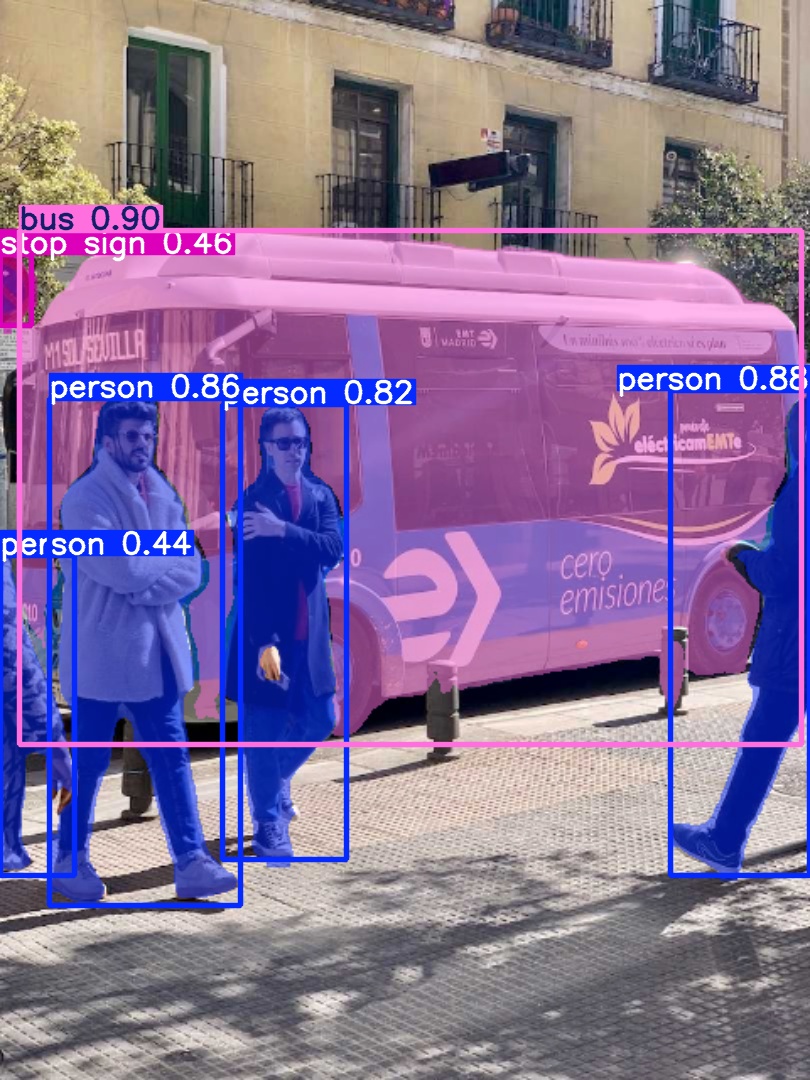

检测完成后,输出的图像:

只输入一张图片,results列表只有一个值results[0]。

从图中可以看出,检出4个’person’、1辆’bus’和1个’stop sign’。

print(results[0]):

boxes: ultralytics.engine.results.Boxes object

keypoints: None

masks: ultralytics.engine.results.Masks object

names: {0: 'person', 1: 'bicycle', 2: 'car', 3: 'motorcycle', 4: 'airplane', 5: 'bus', 6: 'train', 7: 'truck', 8: 'boat', 9: 'traffic light', 10: 'fire hydrant', 11: 'stop sign', 12: 'parking meter', 13: 'bench', 14: 'bird', 15: 'cat', 16: 'dog', 17: 'horse', 18: 'sheep', 19: 'cow', 20: 'elephant', 21: 'bear', 22: 'zebra', 23: 'giraffe', 24: 'backpack', 25: 'umbrella', 26: 'handbag', 27: 'tie', 28: 'suitcase', 29: 'frisbee', 30: 'skis', 31: 'snowboard', 32: 'sports ball', 33: 'kite', 34: 'baseball bat', 35: 'baseball glove', 36: 'skateboard', 37: 'surfboard', 38: 'tennis racket', 39: 'bottle', 40: 'wine glass', 41: 'cup', 42: 'fork', 43: 'knife', 44: 'spoon', 45: 'bowl', 46: 'banana', 47: 'apple', 48: 'sandwich', 49: 'orange', 50: 'broccoli', 51: 'carrot', 52: 'hot dog', 53: 'pizza', 54: 'donut', 55: 'cake', 56: 'chair', 57: 'couch', 58: 'potted plant', 59: 'bed', 60: 'dining table', 61: 'toilet', 62: 'tv', 63: 'laptop', 64: 'mouse', 65: 'remote', 66: 'keyboard', 67: 'cell phone', 68: 'microwave', 69: 'oven', 70: 'toaster', 71: 'sink', 72: 'refrigerator', 73: 'book', 74: 'clock', 75: 'vase', 76: 'scissors', 77: 'teddy bear', 78: 'hair drier', 79: 'toothbrush'}

obb: None

orig_img: array([[[119, 146, 172],

[121, 148, 174],

[122, 152, 177],

...,

[161, 171, 188],

[160, 170, 187],

[160, 170, 187]],

...,

[[123, 122, 126],

[145, 144, 148],

[176, 175, 179],

...,

[ 95, 85, 91],

[ 96, 86, 92],

[ 98, 88, 94]]], shape=(1080, 810, 3), dtype=uint8)

orig_shape: (1080, 810)

path: 'bus.jpg'

probs: None

save_dir: 'runs\\segment\\predict'

speed: {'preprocess': 6.919400009792298, 'inference': 347.9526999872178, 'postprocess': 23.429099994245917}

print(result[0].boxes)

cls: tensor([ 5., 0., 0., 0., 11., 0.])

conf: tensor([0.8985, 0.8849, 0.8628, 0.8223, 0.4611, 0.4428])

data: tensor([[1.9683e+01, 2.3003e+02, 8.0179e+02, 7.4414e+02, 8.9853e-01, 5.0000e+00],

[6.7014e+02, 3.9057e+02, 8.0955e+02, 8.7529e+02, 8.8493e-01, 0.0000e+00],

[4.9487e+01, 3.9813e+02, 2.4076e+02, 9.0509e+02, 8.6281e-01, 0.0000e+00],

[2.2265e+02, 4.0454e+02, 3.4606e+02, 8.5989e+02, 8.2234e-01, 0.0000e+00],

[1.1448e-01, 2.5458e+02, 3.1959e+01, 3.2565e+02, 4.6115e-01, 1.1000e+01],

[0.0000e+00, 5.5533e+02, 7.4818e+01, 8.7502e+02, 4.4278e-01, 0.0000e+00]])

id: None

is_track: False

orig_shape: (1080, 810)

shape: torch.Size([6, 6])

xywh: tensor([[410.7375, 487.0835, 782.1091, 514.1113],

[739.8477, 632.9328, 139.4077, 484.7191],

[145.1215, 651.6073, 191.2684, 506.9590],

[284.3539, 632.2175, 123.4095, 455.3473],

[ 16.0369, 290.1147, 31.8448, 71.0691],

[ 37.4089, 715.1753, 74.8179, 319.6833]])

xywhn: tensor([[0.5071, 0.4510, 0.9656, 0.4760],

[0.9134, 0.5860, 0.1721, 0.4488],

[0.1792, 0.6033, 0.2361, 0.4694],

[0.3511, 0.5854, 0.1524, 0.4216],

[0.0198, 0.2686, 0.0393, 0.0658],

[0.0462, 0.6622, 0.0924, 0.2960]])

xyxy: tensor([[1.9683e+01, 2.3003e+02, 8.0179e+02, 7.4414e+02],

[6.7014e+02, 3.9057e+02, 8.0955e+02, 8.7529e+02],

[4.9487e+01, 3.9813e+02, 2.4076e+02, 9.0509e+02],

[2.2265e+02, 4.0454e+02, 3.4606e+02, 8.5989e+02],

[1.1448e-01, 2.5458e+02, 3.1959e+01, 3.2565e+02],

[0.0000e+00, 5.5533e+02, 7.4818e+01, 8.7502e+02]])

xyxyn: tensor([[2.4300e-02, 2.1299e-01, 9.8987e-01, 6.8902e-01],

[8.2734e-01, 3.6164e-01, 9.9945e-01, 8.1046e-01],

[6.1095e-02, 3.6864e-01, 2.9723e-01, 8.3804e-01],

[2.7488e-01, 3.7458e-01, 4.2723e-01, 7.9620e-01],

[1.4133e-04, 2.3572e-01, 3.9456e-02, 3.0153e-01],

[0.0000e+00, 5.1420e-01, 9.2368e-02, 8.1020e-01]])

print(result[0].masks)

data: tensor([[[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

...,

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.]],

......

[[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

...,

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.]]])

orig_shape: (1080, 810)

shape: torch.Size([6, 640, 480])

xy: [array([[ 185.62, 232.88],

[ 185.62, 237.94],

[ 183.94, 239.62],

...,

[ 410.06, 239.62],

[ 408.38, 237.94],

[ 408.38, 232.88]], shape=(669, 2), dtype=float32),

array([[ 804.94, 396.56],

[ 803.25, 398.25],

[ 801.56, 398.25],

[ 799.88, 399.94],

...,

[ 803.25, 715.5],

[ 806.62, 712.12],

[ 808.31, 712.12],

[ 808.31, 396.56]], dtype=float32),

array([[ 101.25, 394.88],

[ 101.25, 401.62],

[ 99.562, 403.31],

...,

[ 153.56, 403.31],

[ 153.56, 401.62],

[ 151.88, 399.94],

[ 151.88, 394.88]], dtype=float32),

array([[ 264.94, 401.62],

[ 264.94, 408.38],

[ 263.25, 410.06],

[ 263.25, 411.75],

...,

[ 302.06, 413.44],

[ 302.06, 411.75],

[ 298.69, 408.38],

[ 298.69, 401.62]], dtype=float32),

array([[ 3.375, 253.12],

[ 3.375, 332.44],

[ 10.125, 332.44],

[ 10.125, 325.69],

...,

[ 21.938, 263.25],

[ 21.938, 261.56],

[ 20.25, 259.88],

[ 20.25, 253.12]], dtype=float32),

array([[ 0, 556.88],

[ 0, 867.38],

[ 6.75, 867.38],

[ 8.4375, 869.06],

...,

[ 11.812, 582.19],

[ 11.812, 572.06],

[ 10.125, 570.38],

[ 10.125, 556.88]], dtype=float32)]

xyn: [array([[ 0.22917, 0.21563],

[ 0.22917, 0.22031],

[ 0.22708, 0.22187],

...,

[ 0.50625, 0.22187],

[ 0.50417, 0.22031],

[ 0.50417, 0.21563]], shape=(669, 2), dtype=float32),

array([[ 0.99375, 0.36719],

[ 0.99167, 0.36875],

[ 0.98958, 0.36875],

[ 0.9875, 0.37031],

...,

[ 0.99167, 0.6625],

[ 0.99583, 0.65938],

[ 0.99792, 0.65938],

[ 0.99792, 0.36719]], dtype=float32),

array([[ 0.125, 0.36562],

[ 0.125, 0.37187],

[ 0.12292, 0.37344],

[ 0.12292, 0.37656],

...,

[ 0.18958, 0.37344],

[ 0.18958, 0.37187],

[ 0.1875, 0.37031],

[ 0.1875, 0.36562]], dtype=float32),

array([[ 0.32708, 0.37187],

[ 0.32708, 0.37813],

[ 0.325, 0.37969],

[ 0.325, 0.38125],

...,

[ 0.37292, 0.38281],

[ 0.37292, 0.38125],

[ 0.36875, 0.37813],

[ 0.36875, 0.37187]], dtype=float32),

array([[ 0.0041667, 0.23438],

[ 0.0041667, 0.30781],

[ 0.0125, 0.30781],

[ 0.0125, 0.30156],

...,

[ 0.027083, 0.24375],

[ 0.027083, 0.24219],

[ 0.025, 0.24062],

[ 0.025, 0.23438]], dtype=float32),

array([[ 0, 0.51562],

[ 0, 0.80313],

[ 0.0083333, 0.80313],

[ 0.010417, 0.80469],

...,

[ 0.014583, 0.53906],

[ 0.014583, 0.52969],

[ 0.0125, 0.52812],

[ 0.0125, 0.51562]], dtype=float32)]

Results数据解读

Results对象整体结构

使用model.predict()对一批图像进行预测时,会返回一个列表,列表中的每个元素都是一个Result对象,每个Result对象代表了对一张输入图像的预测结果。

Result对象的主要属性

原始图像属性

orig_img:原始的输入图像,输入图像的原始像素信息,数据类型为uint8(dtype=uint8),形状为(1080,810,3)(高度、宽度、通道数)- ‘orig_shape’:,图像的原始高度和宽度。

- ‘path’:图像的原始路径

边界框boxes属性

boxes:Boxes对象属性,包含了图像中检测到的所有边界框的信息,包括边界框的坐标、置信度以及类别索引等。

boxes具有以下属性:

cls:检测到boxes的分类index。每个index对应的名称在’names’: 属性中(本例中为{0: ‘person’, 5:‘bus’, 11:‘stop sign’})。

conf: 每个box的置信度

xywh: box在原图中的中心坐标值和宽高

xywhn:xywh的归一化值

xyxy: box在原图中的左上和右下两个点的坐标

xyxyn: xyxy的归一化值

遮罩masks属性

xy: 关键点的坐标。从数据中可以看出,遮罩轮廓由大量的点组成。

xyn:关键点坐标的归一化值

其他属性

speed:前置处理、推理、后置处理各个阶段的处理时间

names: 索引对应的分类名称

path:图像的原始路径

save_dir: 结果数据保存路径

orig_shape:图像的原始高度和宽度

结果数据调用

可以使用以下方式调用数据、展示和保存处理后的图像:

from ultralytics import YOLO

# Load a model

model = YOLO("yolo11n-seg.pt") # load an official model

# Predict with the model

results = model("bus汽车.jpg") # predict on an image

#results[0].save(filename="result汽车.jpg")

xy = [(0,0)]

xyn = [(0,0)]

for i, result in enumerate(results):

xy[i] = result.masks.xy # mask in polygon format

xyn[i] = result.masks.xyn # normalized

results[i].save(filename=f"results{i}.jpg")

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?