背景

业务场景: 在测试环境中没有真实的数据,所以需要把离线数据模拟成实时数据,把以年月日为文件名的目录和以日期为字段的文本内容都改为当日或未来某一天。

查看原始数据格式为CSV,以纯文本(含字符序列,不含二进制)形式存储表格数据,以制表符为分隔符,每日大概500-600万笔。测试环境中,默认安装了MySQL5.1,其默认存储引擎为myisam。楼主接下去将简要对比下innodb与myisam的某些方面的性能差异。

CSV样例:

由于数据量较大,加载进内存排序,其检索性能不高。可借用一台数据库服务器持久化当日数据,并对表示时间的字段索引。把凌晨1点-6点之间的数据摒弃掉,为其入库时间,入库需要定时定点操作,可使用quartz框架。对于短时间内进行大数据量插入适合先插入后建索引,所以该临时表就设置为每日一删一建一插一索引。设置每X秒对远程服务器的realtime表做一次入库,则需要在X秒内完成两个步骤:①检索过去X秒~当前时间的数据;②对远程表做入库。

首先探究短时间内插入大数据量的问题,对单文本进行IO操作,其性能瓶颈在IO上,使用多线程读取文本,要根据我们服务器的磁盘结构:是单片双片还是三片,在磁头只有一个的情况下,多个线程于事无补因为共用一个磁头,线程切换上下文耗费的时间非但不能提高效率还会拖垮程序性能。所以我们会考虑使用单线程读,多线程写。一次写多少数据好,根据CVS格式数据,我们决定采用JDBC+C3P0做insert into table values(…),(…),….,每一万笔的时候插入一次。

public class BatchInsertThread implements Runnable{

private String sql;

private static Logger log = Logger.getLogger(BatchInsertThread.class);

public BatchInsertThread(String sql) {

super();

this.sql = sql;

}

@Override

public void run() {

BaseDao.batchInsertData(sql);//异常的时候继续执行

}

}接下去我们尝试做了入库,发现时间把控较为困难,在第一笔数据还没有完全插入之前,第二笔数据的线程已经启动,紧接着第三笔数据的线程也启动…

mysql> show processlist;

+—-+——+—————–+———-+———+——+———————————+——————————————————————————————————+

| Id | User | Host | db | Command | Time | State | Info |

+—-+——+—————–+———-+———+——+———————————+——————————————————————————————————+

| 14 | root | localhost | realtime | Sleep | 7715 | | NULL |

| 15 | root | localhost:47508 | realtime | Query | 5025 | Waiting for table metadata lock | ALTER TABLE realtemp2 ADD INDEXidx_realt_tradedate(TRADE_DATE) USING BTREE |

| 16 | root | localhost:47510 | realtime | Sleep | 7072 | | NULL |

| 17 | root | localhost:47509 | realtime | Sleep | 7081 | | NULL |

| 18 | root | localhost:47512 | realtime | Sleep | 7473 | | NULL |

| 19 | root | localhost:47514 | realtime | Sleep | 7233 | | NULL |

| 20 | root | localhost:47515 | realtime | Sleep | 6262 | | NULL |

| 21 | root | localhost:47513 | realtime | Sleep | 6745 | | NULL |

| 22 | root | localhost:47516 | realtime | Sleep | 7273 | | NULL |

| 23 | root | localhost:47517 | realtime | Sleep | 7286 | | NULL |

| 24 | root | localhost:47518 | realtime | Sleep | 7344 | | NULL |

| 25 | root | localhost:47519 | realtime | Sleep | 7305 | | NULL |

| 26 | root | localhost:47520 | realtime | Sleep | 7032 | | NULL |

| 27 | root | localhost:47521 | realtime | Sleep | 6551 | | NULL |

| 28 | root | localhost:47522 | realtime | Sleep | 6276 | | NULL |

| 29 | root | localhost:47523 | realtime | Sleep | 6580 | | NULL |

| 30 | root | localhost:47524 | realtime | Sleep | 6117 | | NULL |

| 31 | root | localhost:47525 | realtime | Sleep | 6671 | | NULL |

| 32 | root | localhost:47526 | realtime | Sleep | 5981 | | NULL |

| 33 | root | localhost:47527 | realtime | Sleep | 5202 | | NULL |

| 34 | root | localhost:47528 | realtime | Sleep | 5514 | | NULL |

| 35 | root | localhost:47529 | realtime | Sleep | 2875 | | NULL |

| 36 | root | localhost:47531 | realtime | Query | 3151 | Waiting for table metadata lock | INSERT INTO realtemp2 (CARD_ID, TRADE_DATE, TRADE_ADDRESS,TRADE_TYPE,START_ADDRESS,DESTINATION) VALU |

| 37 | root | localhost:47530 | realtime | Sleep | 3030 | | NULL |

| 38 | root | localhost:47533 | realtime | Sleep | 6470 | | NULL |

| 39 | root | localhost:47534 | realtime | Sleep | 6510 | | NULL |

| 40 | root | localhost:47535 | realtime | Sleep | 6550 | | NULL |

| 41 | root | localhost:47537 | realtime | Sleep | 6229 | | NULL |

| 42 | root | localhost:47536 | realtime | Sleep | 6390 | | NULL |

| 43 | root | localhost:47538 | realtime | Query | 2900 | Waiting for table metadata lock | INSERT INTO realtemp2 (CARD_ID, TRADE_DATE, TRADE_ADDRESS,TRADE_TYPE,START_ADDRESS,DESTINATION) VALU |

| 44 | root | localhost:47539 | realtime | Query | 2927 | Waiting for table metadata lock | INSERT INTO realtemp2 (CARD_ID, TRADE_DATE, TRADE_ADDRESS,TRADE_TYPE,START_ADDRESS,DESTINATION) VALU |

| 45 | root | localhost:47540 | realtime | Sleep | 6350 | | NULL |

| 46 | root | localhost:47541 | realtime | Sleep | 6189 | | NULL |

| 47 | root | localhost:47542 | realtime | Sleep | 6028 | | NULL |

| 48 | root | localhost:47543 | realtime | Sleep | 6069 | | NULL |

| 49 | root | localhost:47544 | realtime | Query | 2882 | Waiting for table metadata lock | INSERT INTO realtemp2 (CARD_ID, TRADE_DATE, TRADE_ADDRESS,TRADE_TYPE,START_ADDRESS,DESTINATION) VALU |

| 50 | root | localhost:47545 | realtime | Sleep | 3971 | | NULL |

| 51 | root | localhost:47546 | realtime | Sleep | 5788 | | NULL |

| 52 | root | localhost:47547 | realtime | Query | 3401 | Waiting for table metadata lock | INSERT INTO realtemp2 (CARD_ID, TRADE_DATE, TRADE_ADDRESS,TRADE_TYPE,START_ADDRESS,DESTINATION) VALU |

| 53 | root | localhost:47548 | realtime | Query | 4761 | Waiting for table metadata lock | INSERT INTO realtemp2 (CARD_ID, TRADE_DATE, TRADE_ADDRESS,TRADE_TYPE,START_ADDRESS,DESTINATION) VALU |

| 54 | root | localhost:47549 | realtime | Sleep | 5908 | | NULL |

| 55 | root | localhost:47550 | realtime | Sleep | 5948 | | NULL |

| 56 | root | localhost:47551 | realtime | Sleep | 4568 | | NULL |

| 57 | root | localhost:47552 | realtime | Sleep | 3293 | | NULL |

| 58 | root | localhost:47553 | realtime | Sleep | 4817 | | NULL |

| 59 | root | localhost:47554 | realtime | Sleep | 5302 | | NULL |

| 60 | root | localhost:47555 | realtime | Sleep | 5707 | | NULL |

| 61 | root | localhost:47556 | realtime | Sleep | 5426 | | NULL |

| 62 | root | localhost:47557 | realtime | Query | 3114 | Waiting for table metadata lock | INSERT INTO realtemp2 (CARD_ID, TRADE_DATE, TRADE_ADDRESS,TRADE_TYPE,START_ADDRESS,DESTINATION) VALU |

| 63 | root | localhost:47558 | realtime | Query | 2836 | Waiting for table metadata lock | INSERT INTO realtemp2 (CARD_ID, TRADE_DATE, TRADE_ADDRESS,TRADE_TYPE,START_ADDRESS,DESTINATION) VALU |

| 64 | root | localhost:47559 | realtime | Sleep | 3167 | | NULL |

| 65 | root | localhost:47560 | realtime | Sleep | 3181 | | NULL |

| 66 | root | localhost | realtime | Query | 0 | init | show processlist |

+—-+——+—————–+———-+———+——+———————————+——————————————————————————————————+

53 rows in set (0.00 sec)

我们发现短时间内建立了大量连接,最终很容易出现几条Waiting for table metadata lock停滞而产生死锁。需要重启数据库才能解锁,或者kill掉进程号(有时kill行不通)。以上实验仅为楼主测试用,实际情况使用单线程阻塞读写即可满足需求。

然后,我们把卡号(varchar)和交易时间(datetime)做联合主键,同时交易时间在数据插入完之后又单独建立了索引。我们尝试在myisam存储引擎上,把当日数据做入库。

……

2017 Mar 22 15:28:59,702 DEBUG [TextUtil:47] success at 535

2017 Mar 22 15:29:32,052 DEBUG [TextUtil:47] success at 536

2017 Mar 22 15:30:06,576 DEBUG [TextUtil:47] success at 537

2017 Mar 22 15:30:41,522 DEBUG [TextUtil:47] success at 538

2017 Mar 22 15:31:14,240 DEBUG [TextUtil:47] success at 539

2017 Mar 22 15:31:47,557 DEBUG [TextUtil:47] success at 540

2017 Mar 22 15:32:23,786 DEBUG [TextUtil:47] success at 541

2017 Mar 22 15:32:57,608 DEBUG [TextUtil:47] success at 542

2017 Mar 22 15:33:29,735 DEBUG [TextUtil:47] success at 543

2017 Mar 22 15:34:03,540 DEBUG [TextUtil:47] success at 544

2017 Mar 22 15:34:39,732 DEBUG [TextUtil:47] success at 545

2017 Mar 22 15:35:13,377 DEBUG [TextUtil:47] success at 546

2017 Mar 22 15:35:47,047 DEBUG [TextUtil:47] success at 547

2017 Mar 22 15:35:47,198 DEBUG [Action:55] 插入数据:18051s

2017 Mar 22 16:19:03,794 DEBUG [Action:58] 建立了索引:2596s在myisam存储引擎上,每条插入连接一万笔,使用联合主键,插入数据共18051s≈5.01小时,建立索引共2596s≈43.27分钟。

5.1版本mysql> SHOW variables like “have_%”;

Connection id: 150899

Current database: realtime

+————————-+———-+

| Variable_name | Value |

+————————-+———-+

| have_community_features | YES |

| have_compress | YES |

| have_crypt | YES |

| have_csv | YES |

| have_dynamic_loading | YES |

| have_geometry | YES |

| have_innodb | YES |

| have_ndbcluster | NO |

| have_openssl | DISABLED |

| have_partitioning | YES |

| have_query_cache | YES |

| have_rtree_keys | YES |

| have_ssl | DISABLED |

| have_symlink | DISABLED |

+————————-+———-+

14 rows in set (0.05 sec)

5.1支持切换为innodb,要特别设置InnoDB为默认引擎,需要在 /etc/my.cnf 文件中的 [mysqld] 下面加入default-storage-engine=INNODB 一句,保存,重启。

再次使用:mysql> show engines;

然而切换了存储引擎之后,同样的插入方案还是大概要5个小时左右。

我们把5.1卸载掉,换成5.6,具体步骤参看文本末。

把每次插入的数据换成5万笔,得到的结果如下:

……

2017 Mar 23 15:41:59,626 DEBUG [TextUtil:54] success at 95

2017 Mar 23 15:43:53,861 DEBUG [TextUtil:54] success at 96

2017 Mar 23 15:45:51,042 DEBUG [TextUtil:54] success at 97

2017 Mar 23 15:47:47,207 DEBUG [TextUtil:54] success at 98

2017 Mar 23 15:49:47,012 DEBUG [TextUtil:54] success at 99

2017 Mar 23 15:51:48,821 DEBUG [TextUtil:54] success at 100

2017 Mar 23 15:53:52,469 DEBUG [TextUtil:54] success at 101

2017 Mar 23 15:55:46,813 DEBUG [TextUtil:54] success at 102

2017 Mar 23 15:57:42,407 DEBUG [TextUtil:54] success at 103

2017 Mar 23 15:59:42,079 DEBUG [TextUtil:54] success at 104

2017 Mar 23 16:01:40,422 DEBUG [TextUtil:54] success at 105

2017 Mar 23 16:01:40,500 DEBUG [Action:56] 插入数据:5867s

2017 Mar 23 16:02:44,538 DEBUG [Action:59] 建立了索引:64s在5.6的innodb存储引擎上,每条插入连接五万笔,使用联合主键,插入数据共5867s≈1.63小时,建立索引共64s。

取日志记录的前4条,中间4条和最后4条做对比:

**前4条**

2017 Mar 23 14:23:58,981 DEBUG [TextUtil:54] success at 1

2017 Mar 23 14:24:01,351 DEBUG [TextUtil:54] success at 2

2017 Mar 23 14:24:03,611 DEBUG [TextUtil:54] success at 3

2017 Mar 23 14:24:05,813 DEBUG [TextUtil:54] success at 4

**中间4条**

2017 Mar 23 14:41:07,941 DEBUG [TextUtil:54] success at 53

2017 Mar 23 14:42:05,892 DEBUG [TextUtil:54] success at 54

2017 Mar 23 14:43:05,115 DEBUG [TextUtil:54] success at 55

2017 Mar 23 14:44:01,821 DEBUG [TextUtil:54] success at 56

**最后4条**

2017 Mar 23 15:55:46,813 DEBUG [TextUtil:54] success at 102

2017 Mar 23 15:57:42,407 DEBUG [TextUtil:54] success at 103

2017 Mar 23 15:59:42,079 DEBUG [TextUtil:54] success at 104

2017 Mar 23 16:01:40,422 DEBUG [TextUtil:54] success at 105我们可以发现,刚开始插入数据,量小,大概每几秒插入五万笔;到数据量达250多万级别,大概每1分钟插入五万笔;到数据量达500万以上级别,大概每2分钟插入五万笔。因为主键是联合主键,且第一个字段是字符串,数据库对于varchar类型数据只能逐个字母匹配,每插一条都得对varchar进行n个字母匹配和对datetime进行比较。我们把联合主键去掉,换成int型的自增id,每次插入的数据还是5万笔,得到的结果如下:

5068 2017 Mar 25 01:17:05,881 DEBUG [TextUtil:54] success at 110

5069 2017 Mar 25 01:17:07,628 DEBUG [TextUtil:54] success at 111

5070 2017 Mar 25 01:17:08,725 DEBUG [TextUtil:54] success at 112

5071 2017 Mar 25 01:17:09,698 DEBUG [TextUtil:54] success at 113

5072 2017 Mar 25 01:17:11,140 DEBUG [TextUtil:54] success at 114

5073 2017 Mar 25 01:17:13,409 DEBUG [TextUtil:54] success at 115

5074 2017 Mar 25 01:17:15,346 DEBUG [TextUtil:54] success at 116

5075 2017 Mar 25 01:17:15,365 DEBUG [LoadNextDayDataJob:43] 插入数据:735s

2017 Mar 25 01:36:33,916 DEBUG [LoadNextDayDataJob:46] 建立了索引:1158s

2017 Mar 25 01:36:33,917 INFO [LoadNextDayDataJob:48] MyJob is end .....................该日志是夜间执行quartz任务的,在5.6的innodb存储引擎上,使用int型自增id,每条插入连接五万笔,插入数据共735s≈12.25分钟,建立索引共1158s≈19.30分钟。

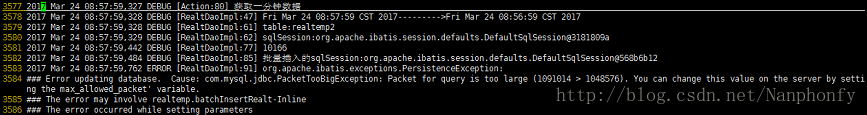

在高峰的时候,传输数据包超过数据库阈值:

mysql会根据配置文件会限制server接受的数据包大小。

有时候大的插入和更新会受max_allowed_packet 参数限制,导致写入或者更新失败。

5.1 mysql> show VARIABLES like '%max_allowed_packet%';

+--------------------------+------------+

| Variable_name | Value |

+--------------------------+------------+

| max_allowed_packet | 1048576 |

| slave_max_allowed_packet | 1073741824 |

+--------------------------+------------+

5.6mysql> show VARIABLES like '%max_allowed_packet%';

+--------------------------+------------+

| Variable_name | Value |

+--------------------------+------------+

| max_allowed_packet | 4194304 |

| slave_max_allowed_packet | 1073741824 |

+--------------------------+------------+所以要对远程5.1的mysql服务器的容量进行修改。

命令行记录

连接远程数据库:mysql -h 192.168.40.128 -P 3306 -u root -p

查看表结构:show create table tablename;

查看当前的进程情况:mysql> show processlist ;

查看表索引:show index from realtemp2;

查看mysql已提供什么存储引擎:mysql> show engines;

查看mysql当前默认的存储引擎:mysql> show variables like '%storage_engine%';

查看myql是否支持InnoDB引擎:mysql> SHOW variables like "have_%";

server接受的数据包大小:show VARIABLES like '%max_allowed_packet%';

quartz框架和mybatis的双数据源

quartz框架

public class QuartzManager {

private static String JOB_GROUP_NAME = "group1";

private static String TRIGGER_GROUP_NAME = "trigger1";

//开始一个simpleSchedule()调度

public static void startSchedule(String jobName, Job job, String time) {

try {

// 1、创建一个JobDetail实例,指定Quartz

JobDetail jobDetail = JobBuilder.newJob(job.getClass())

// 任务执行类

.withIdentity(jobName, JOB_GROUP_NAME)

// 任务名,任务组

.build();

CronScheduleBuilder builder = CronScheduleBuilder.cronSchedule(time);// 每天1点之后触发

// 2、创建Trigger

Trigger trigger = TriggerBuilder.newTrigger().withIdentity(TRIGGER_GROUP_NAME, JOB_GROUP_NAME).startNow()

.withSchedule(builder).build();

// 3、创建Scheduler

Scheduler scheduler = StdSchedulerFactory.getDefaultScheduler();

scheduler.start();

// 4、调度执行

scheduler.scheduleJob(jobDetail, trigger);

} catch (SchedulerException e) {

e.printStackTrace();

}

}

}public class LoadNextDayDataJob implements Job {

private static final Logger log = Logger.getLogger(LoadNextDayDataJob.class);

@Override

public void execute(JobExecutionContext context) throws JobExecutionException {

log.info("MyJob is start ..................");

log.info("Hello quzrtz " + new SimpleDateFormat("yyyy-MM-dd HH:mm:ss ").format(new Date()));

RealtDao rd = new RealtDaoImpl( DataSourceSqlSessionFactory.getSqlSessionFactory(DataSourceSqlSessionFactory.LOCAL_ENVIRONMENT_ID));

long begin = System.currentTimeMillis();

log.debug(begin);

rd.createNewTable("realtemp2");

String fileName = DateUtil.parseDateToString(new Date(), DateUtil.PATTERN_yyyy_MM_dd);

try {

TextUtil.parseText2SQLBatchInsert(Cfg.cfgMap.get(Cfg.DATA_PATH) + fileName + "/part-r-00000");

} catch (IOException e) {

log.debug(e);

e.printStackTrace();

}

log.debug("插入数据:" + (System.currentTimeMillis() - begin) / 1000 + "s");

begin = System.currentTimeMillis();

rd.createIdxRealtTradeDate("realtemp2");

log.debug("建立了索引:" + (System.currentTimeMillis() - begin) / 1000 + "s");

log.info("MyJob is end .....................");

}

}mybatis的双数据源

SqlMapConfig.xml

<!-- 和spring整合后 environments配置将废除-->

<environments default="LOCAL">

<environment id="LOCAL">

<!-- 使用jdbc事务管理,事务控制由mybatis-->

<transactionManager type="JDBC" />

<!-- 数据库连接池,由mybatis管理-->

<dataSource type="POOLED">

<property name="driver" value="${local.jdbc.driverClassName}" />

<property name="url" value="${local.jdbc.url}"/>

<property name="password" value="${local.jdbc.password}"/>

<property name="username" value="${local.jdbc.username}" />

</dataSource>

</environment>

<environment id="REMOTE">

<!-- 使用jdbc事务管理,事务控制由mybatis-->

<transactionManager type="JDBC" />

<!-- 数据库连接池,由mybatis管理-->

<dataSource type="POOLED">

<property name="driver" value="${remote.jdbc.driverClassName}" />

<property name="url" value="${remote.jdbc.url}"/>

<property name="password" value="${remote.jdbc.password}"/>

<property name="username" value="${remote.jdbc.username}" />

</dataSource>

</environment>

</environments>//http://zhangbo-peipei-163-com.iteye.com/blog/2052924

//根据mybatis.xml中配置的不同的environment创建对应的SqlSessionFactory

public final class DataSourceSqlSessionFactory {

private static Logger logger = Logger.getLogger(DataSourceSqlSessionFactory.class);

private static final String CONFIGURATION_PATH = "SqlMapConfig.xml";

public final static String LOCAL_ENVIRONMENT_ID = "LOCAL";

public final static String REMOTE_ENVIRONMENT_ID = "REMOTE";

public static SqlSessionFactory getSqlSessionFactory(String environment) {

InputStream inputStream = null;

SqlSessionFactory sqlSessionFactory = null;

try {

inputStream = Resources.getResourceAsStream(CONFIGURATION_PATH);

sqlSessionFactory = new SqlSessionFactoryBuilder().build(inputStream, environment);

inputStream.close();

logger.info("获取 [ " + environment + " ] 数据源连接成功");

} catch (IOException e) {

logger.error("获取 [ " + environment + " ] 数据源连接失败,错误信息 :" + e);

}

return sqlSessionFactory;

}

}MySQL5.6的安装

MySQL的卸载

查看是否有mysql软件:

rpm -qa|grep mysql

卸载mysql:

yum remove mysql mysql-server mysql-libs mysql-common

rm -rf /var/lib/mysql

rm /etc/my.cnf

查看是否还有mysql软件,有的话继续删除。

软件卸载完毕后如果需要可以删除mysql的数据库:/var/lib/mysql

yum安装

下载rpm包:

使用yum 安装mysql,要使用mysql的yum仓库,先从官网下载适合你系统的仓库

我们选择mysql-community-release-el6-5.noarch.rpm

安装仓库列表:

yum localinstall mysql-community-release-el6-5.noarch.rpm

安装mysql:

yum install mysql-community-server

启动,重置,授权

启动mysql:

sudo service mysqld start

设置root用户密码:

mysql数据库安装完以后只会有一个root管理员账号,但是此时的root账号还并没有为其设置密码,在第一次启动mysql服务时,会进行数据库的一些初始化工作。

/usr/bin/mysqladmin -u root password 'new-password'

mysql远程连接授权:

GRANT ALL PRIVILEGES ON *.* TO 'username'@'%' IDENTIFIED BY 'password' WITH GRANT OPTION;

参看:

MySQL命令行常用操作

MySQL出现Waiting for table metadata lock的原因以及解决方法

LINUX下的MYSQL怎么开启INNODB数据支持

关闭MySQL的DNS反向解析

作者: @nanphonfy

转载出处 : http://blog.csdn.net/Nanphonfy

301

301

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?