from:https://towardsdatascience.com/a-simple-guide-to-the-versions-of-the-inception-network-7fc52b863202

A Simple Guide to the Versions of the Inception Network

May 30, 2018

The Inception network was an important milestone in the development of CNN classifiers. Prior to its inception (pun intended), most popular CNNs just stacked convolution layers deeper and deeper, hoping to get better performance.


Designing CNNs in a nutshell. Fun fact, this meme was referenced in the first inception net paper.

The Inception network, on the other hand, was complex (heavily engineered). It used a lot of tricks to push performance, in terms of both speed and accuracy. Its constant evolution led to the creation of several versions of the network. The popular versions, covered in turn below, are Inception v1, Inception v2 and v3, Inception v4, and Inception-ResNet.

Each version is an iterative improvement over the previous one. Understanding the upgrades can help us to build custom classifiers that are optimized both in speed and accuracy. Also, depending on your data, a lower version may actually work better.


This blog post aims to elucidate the evolution of the inception network.


Inception v1

This is where it all started. Let us analyze what problem it was meant to solve, and how it solved it. (Paper)

The Premise:

- Salient parts in the image can have extremely large variation in size. For instance, an image with a dog can be either of the following, as shown below. The area occupied by the dog is different in each image.


From left: A dog occupying most of the image, a dog occupying a part of it, and a dog occupying very little space (Images obtained from Unsplash).

- Because of this huge variation in the location of the information, choosing the right kernel size for the convolution operation becomes tough. A larger kernel is preferred for information that is distributed more globally, and a smaller kernel is preferred for information that is distributed more locally.

- Very deep networks are prone to overfitting. It is also hard to pass gradient updates through the entire network.

- Naively stacking large convolution operations is computationally expensive.


The Solution:

Why not have filters with multiple sizes operate on the same level? The network essentially would get a bit “wider” rather than “deeper”. The authors designed the inception module to reflect the same.


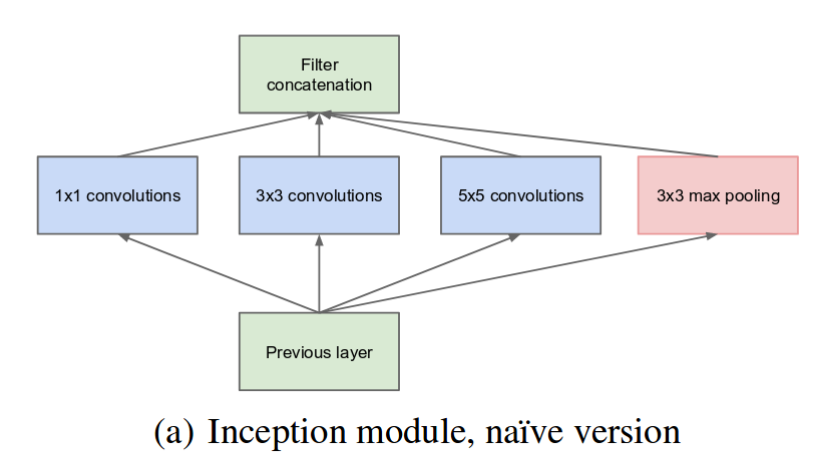

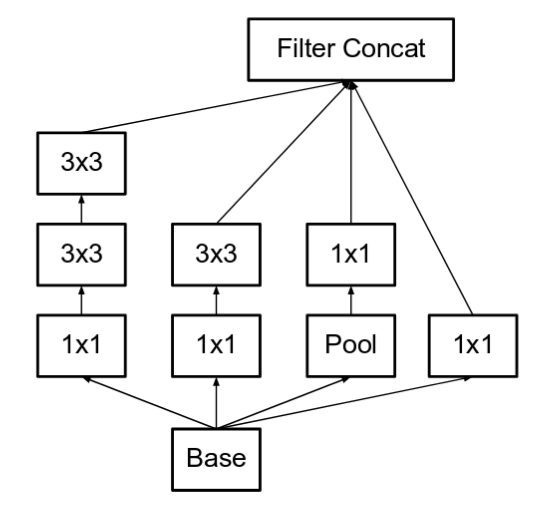

The image below shows the “naive” inception module. It performs convolution on an input with 3 different filter sizes (1x1, 3x3, 5x5). Additionally, max pooling is performed. The outputs are concatenated and sent to the next inception module.
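One consequence of concatenating all four branches is worth spelling out: the pooling branch passes every input channel through, so the output of a naive module is always deeper than its input. The sketch below only does the channel bookkeeping; the filter counts are hypothetical values chosen for illustration, not figures from the paper.

```python
def naive_inception_out_channels(in_channels, n1x1, n3x3, n5x5):
    """Channel depth after concatenating the four parallel branches.

    Each convolution branch contributes as many channels as it has filters.
    Max pooling (stride 1, padded) keeps all of the input channels.
    """
    return n1x1 + n3x3 + n5x5 + in_channels


# Hypothetical filter counts, chosen only for illustration.
depth = 192
history = [depth]
for _ in range(3):  # three naive modules stacked one after another
    depth = naive_inception_out_channels(depth, n1x1=64, n3x3=128, n5x5=32)
    history.append(depth)

print(history)  # [192, 416, 640, 864]
```

The depth grows with every module, which is one reason the naive design becomes expensive quickly.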

As stated before, deep neural networks are computationally expensive. To make the module cheaper, the authors limit the number of input channels by adding an extra 1x1 convolution before the 3x3 and 5x5 convolutions. Though adding an extra operation may seem counterintuitive, 1x1 convolutions are far cheaper than 5x5 convolutions, and the reduced number of input channels also helps. Note, however, that in the pooling branch the 1x1 convolution is placed after the max pooling layer, rather than before it.
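To see why the extra 1x1 convolution pays off, it helps to count multiply-accumulate operations for the 5x5 branch with and without the reduction. This is a rough sketch: the feature-map size and filter counts below are assumptions picked for illustration, and biases and activations are ignored.

```python
def conv_macs(height, width, in_channels, out_channels, kernel):
    """Multiply-accumulates for a stride-1, same-padded kernel x kernel conv."""
    return height * width * out_channels * kernel * kernel * in_channels


H, W, C_IN = 28, 28, 192    # assumed input feature map
N_5X5, N_REDUCE = 32, 16    # assumed number of 5x5 filters and 1x1 reduction filters

# 5x5 convolution applied directly to all 192 input channels.
naive = conv_macs(H, W, C_IN, N_5X5, kernel=5)

# 1x1 convolution down to 16 channels, then the 5x5 convolution on those.
reduced = (conv_macs(H, W, C_IN, N_REDUCE, kernel=1)
           + conv_macs(H, W, N_REDUCE, N_5X5, kernel=5))

print(naive)    # 120422400
print(reduced)  # 12443648
print(round(naive / reduced, 2))  # 9.68
```

With these assumed sizes the reduced branch needs roughly a tenth of the operations, even though it contains one more layer.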

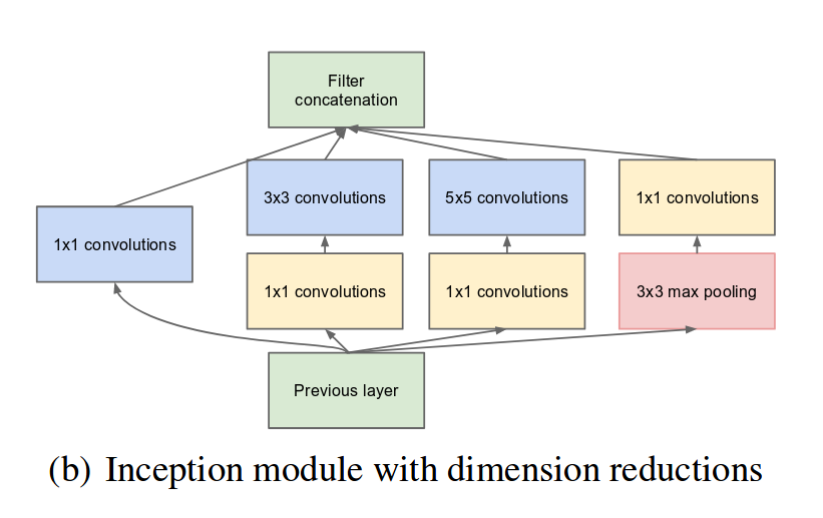

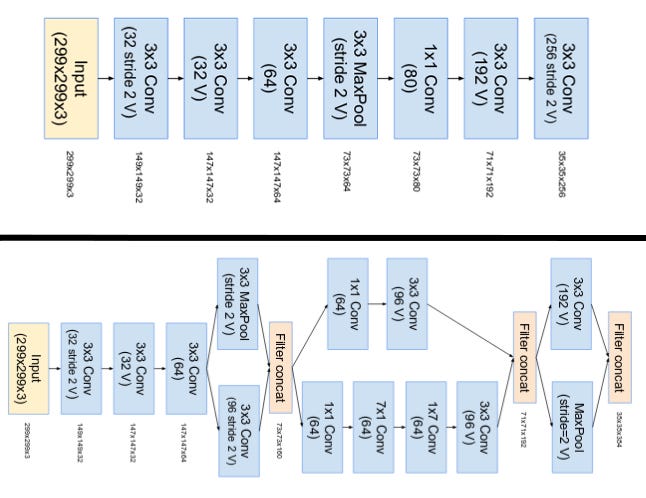

Using the dimension-reduced inception module, a neural network architecture was built. It is popularly known as GoogLeNet (Inception v1). The architecture is shown below:

GoogLeNet has 9 such inception modules stacked linearly. It is 22 layers deep (27, including the pooling layers). It uses global average pooling at the end of the last inception module.

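Global average pooling simply replaces each channel's feature map with its mean, leaving one number per channel. A minimal pure-Python sketch on a toy feature map (the values are made up):

```python
def global_average_pool(feature_map):
    """Collapse a [channels][height][width] nested list to one mean per channel."""
    pooled = []
    for channel in feature_map:
        values = [v for row in channel for v in row]
        pooled.append(sum(values) / len(values))
    return pooled


# Toy feature map with 2 channels of size 2x2.
features = [
    [[1.0, 2.0], [3.0, 4.0]],
    [[0.0, 0.0], [8.0, 0.0]],
]

print(global_average_pool(features))  # [2.5, 2.0]
```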

Needless to say, it is a pretty deep classifier. As with any very deep network, it is subject to the vanishing gradient problem.


To prevent the middle part of the network from “dying out”, the authors introduced two auxiliary classifiers (the purple boxes in the image). They essentially applied softmax to the outputs of two of the inception modules, and computed an auxiliary loss over the same labels. The total loss function is a weighted sum of the auxiliary losses and the real loss. The weight used in the paper was 0.3 for each auxiliary loss.

    # The total loss used by the inception net during training.
    total_loss = real_loss + 0.3 * aux_loss_1 + 0.3 * aux_loss_2

Needless to say, auxiliary loss is purely used for training purposes, and is ignored during inference.

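The training versus inference distinction can be made explicit in a small helper. This is only a sketch: the function name and the `training` flag are hypothetical, and the loss values in the usage line are made up.

```python
def total_loss(real_loss, aux_losses=(), training=True, aux_weight=0.3):
    """Combine the main loss with weighted auxiliary losses.

    During training every auxiliary loss is added with the same weight.
    At inference time the auxiliary heads are ignored entirely.
    """
    if not training:
        return real_loss
    return real_loss + aux_weight * sum(aux_losses)


# Made-up loss values for the main head and the two auxiliary heads.
train_value = total_loss(1.0, aux_losses=(0.5, 0.5), training=True)
eval_value = total_loss(1.0, aux_losses=(0.5, 0.5), training=False)

print(train_value)  # about 1.3
print(eval_value)   # 1.0
```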

Inception v2

Inception v2 and Inception v3 were presented in the same paper. The authors proposed a number of upgrades which increased the accuracy and reduced the computational complexity. Inception v2 explores the following:


The Premise:

- Reduce representational bottleneck. The intuition was that neural networks perform better when convolutions don’t alter the dimensions of the input drastically. Reducing the dimensions too much may cause loss of information, known as a “representational bottleneck”.

- Using smart factorization methods, convolutions can be made more efficient in terms of computational complexity.


The Solution:

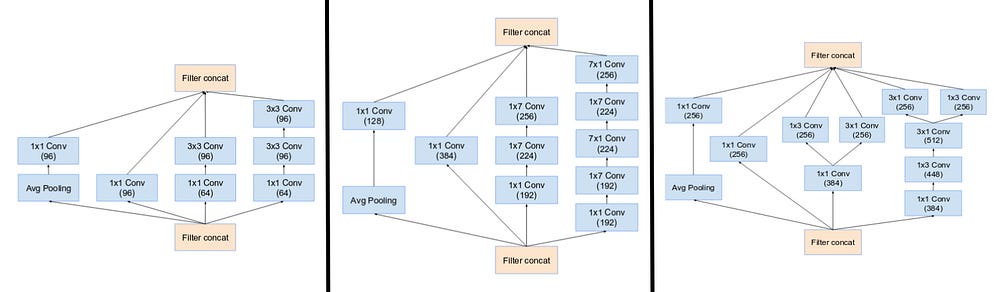

- Factorize 5x5 convolution to two 3x3 convolution operations to improve computational speed. Although this may seem counterintuitive, a 5x5 convolution is 2.78 times more expensive than a 3x3 convolution (25 weights per filter position versus 9). Two stacked 3x3 convolutions cover the same 5x5 receptive field with 18 weights, so the factorized version is in fact cheaper. This is illustrated in the below image.

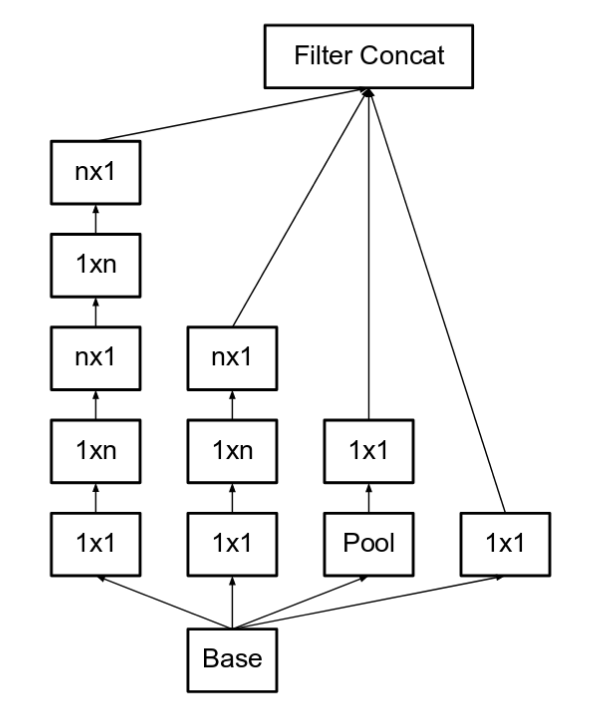
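The arithmetic behind factorizing a 5x5 convolution into two 3x3 convolutions is short enough to check directly. The sketch below counts kernel weights per input/output channel pair and confirms that two stacked stride-1 3x3 layers see a 5x5 window; the helper names are made up for this example.

```python
def kernel_weights(k):
    """Weights (and multiplies per output position) of one k x k kernel."""
    return k * k


def stacked_receptive_field(kernel, layers):
    """Receptive field of `layers` stacked stride-1 kernel x kernel convolutions."""
    return 1 + layers * (kernel - 1)


single_5x5 = kernel_weights(5)    # 25
two_3x3 = 2 * kernel_weights(3)   # 18

print(stacked_receptive_field(3, 2))              # 5
print(round(single_5x5 / kernel_weights(3), 2))   # 2.78
print(round(1 - two_3x3 / single_5x5, 2))         # 0.28
```

So the stacked version keeps the 5x5 receptive field while using about 28% fewer weights and multiplications.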

- Moreover, they factorize convolutions of filter size nxn to a combination of 1xn and nx1 convolutions. For example, a 3x3 convolution can be replaced by a 1x3 convolution followed by a 3x1 convolution on its output, which covers the same 3x3 receptive field. They found this method to be 33% cheaper than the single 3x3 convolution. This is illustrated in the below image.

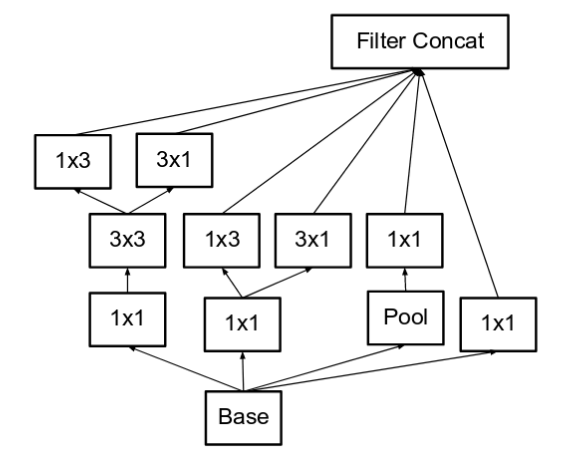
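A small experiment makes the 1xn and nx1 factorization concrete. One caveat, stated as an assumption of this sketch: the two results match exactly only when the 3x3 kernel is separable (the outer product of a column and a row). A learned pair of 1x3 and 3x1 layers covers the same receptive field but cannot represent every possible 3x3 kernel. The kernels and the image below are toy values.

```python
def conv2d_valid(image, kernel):
    """Plain 'valid' 2-D cross-correlation on nested lists."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


row = [[1, 2, 1]]         # 1x3 kernel
col = [[1], [0], [-1]]    # 3x1 kernel
# Outer product: the single 3x3 kernel that the two small kernels compose into.
full = [[c[0] * r for r in row[0]] for c in col]

# Deterministic 5x5 toy image with integer entries.
image = [[(3 * i + 5 * j) % 7 for j in range(5)] for i in range(5)]

factorized = conv2d_valid(conv2d_valid(image, row), col)
direct = conv2d_valid(image, full)

print(factorized == direct)         # True
print(round(1 - (3 + 3) / 9, 2))    # 0.33
```

The last line is the source of the 33% figure: 6 weights instead of 9.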

- The filter banks in the module were expanded (made wider instead of deeper) to remove the representational bottleneck. If the module was made deeper instead, there would be excessive reduction in dimensions, and hence loss of information. This is illustrated in the below image.


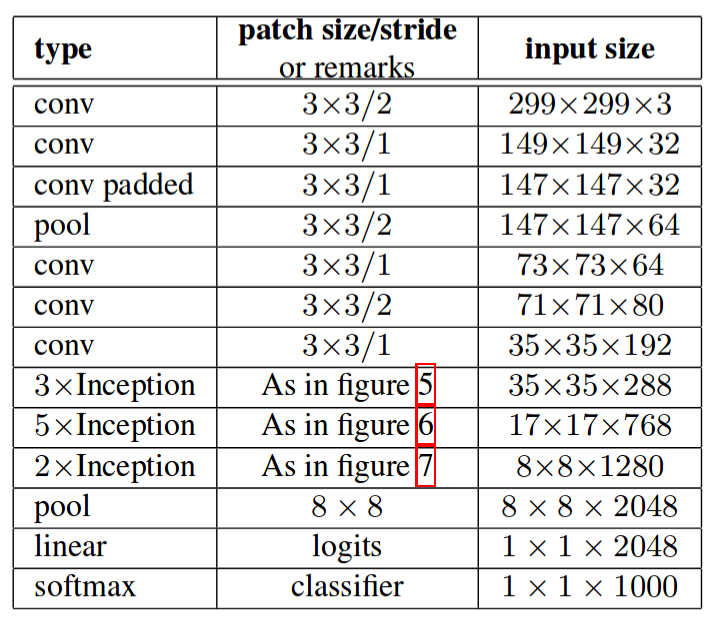

- The above three principles were used to build three different types of inception modules. (Let’s call them modules A, B and C in the order they were introduced. These names are used for clarity and are not the official names.) The architecture is as follows:

Inception v3

The Premise

- The authors noted that the auxiliary classifiers didn’t contribute much until near the end of the training process, when accuracies were nearing saturation. They argued that the auxiliary classifiers function as regularizers, especially if they have BatchNorm or Dropout operations.

- Possibilities to improve on the Inception v2 without drastically changing the modules were to be investigated.


The Solution

- Inception Net v3 incorporated all of the above upgrades stated for Inception v2, and in addition used the following:


- RMSProp Optimizer.

- Factorized 7x7 convolutions.

- BatchNorm in the Auxiliary Classifiers.

- Label Smoothing (a regularizing component added to the loss formula that prevents the network from becoming too confident about a class, which helps prevent overfitting).

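Of the items above, label smoothing is the easiest to show in a few lines. A common formulation, used here as an assumption of the sketch, mixes the one-hot target with a uniform distribution over all classes; the default `epsilon=0.1` and the function name are illustrative choices.

```python
def smooth_labels(true_class, num_classes, epsilon=0.1):
    """Mix a one-hot target with a uniform distribution over all classes."""
    uniform = epsilon / num_classes
    return [
        (1.0 - epsilon) + uniform if k == true_class else uniform
        for k in range(num_classes)
    ]


# Toy problem with 4 classes where class 2 is the correct one.
targets = smooth_labels(true_class=2, num_classes=4)

print(targets)       # roughly [0.025, 0.025, 0.925, 0.025]
print(sum(targets))  # roughly 1.0
```

Because the correct class never receives a target of exactly 1, the network is not rewarded for pushing its logits to extreme values.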

Inception v4

Inception v4 and Inception-ResNet were introduced in the same paper. For clarity, let us discuss them in separate sections.


The Premise

- Make the modules more uniform. The authors also noticed that some of the modules were more complicated than necessary. This can enable us to boost performance by adding more of these uniform modules.


The Solution

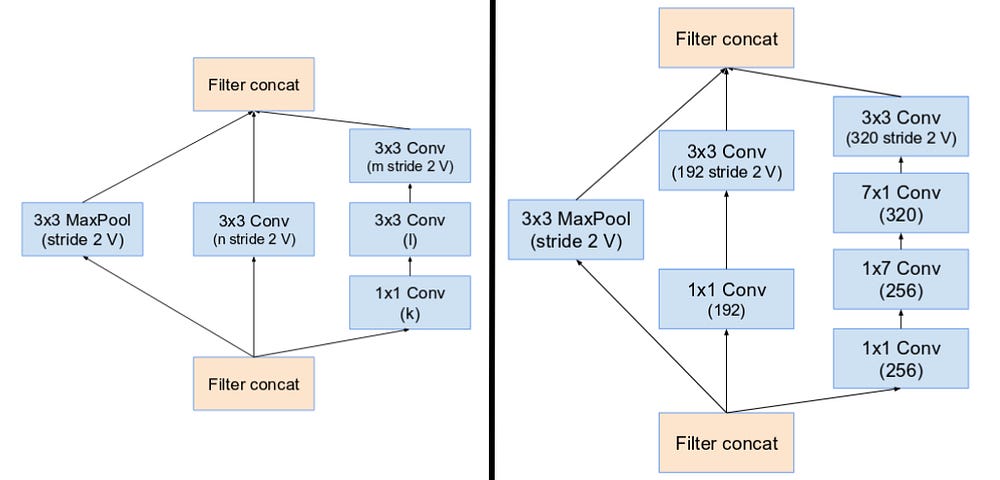

- The “stem” of Inception v4 was modified. The stem here refers to the initial set of operations performed before introducing the Inception blocks.

- They had three main inception modules, named A, B and C (unlike in Inception v2, these modules are in fact named A, B and C). They look very similar to their Inception v2 (or v3) counterparts.

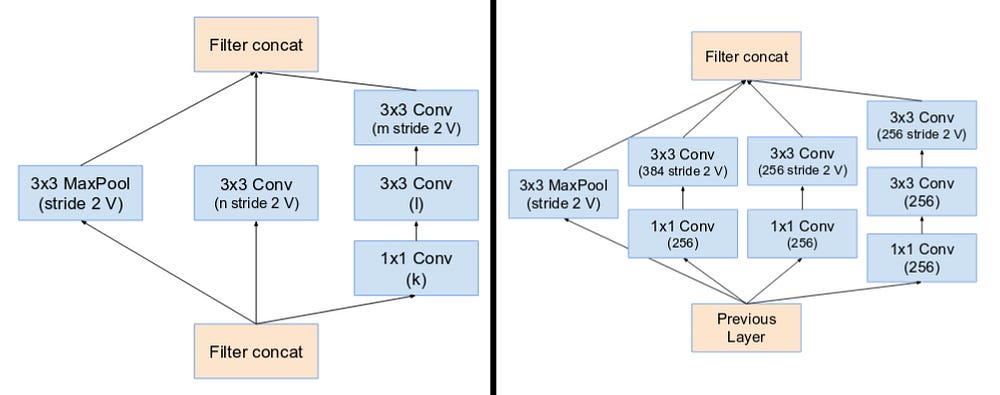

- Inception v4 introduced specialized “Reduction Blocks” which are used to change the width and height of the grid. The earlier versions didn’t explicitly have reduction blocks, but the functionality was implemented.


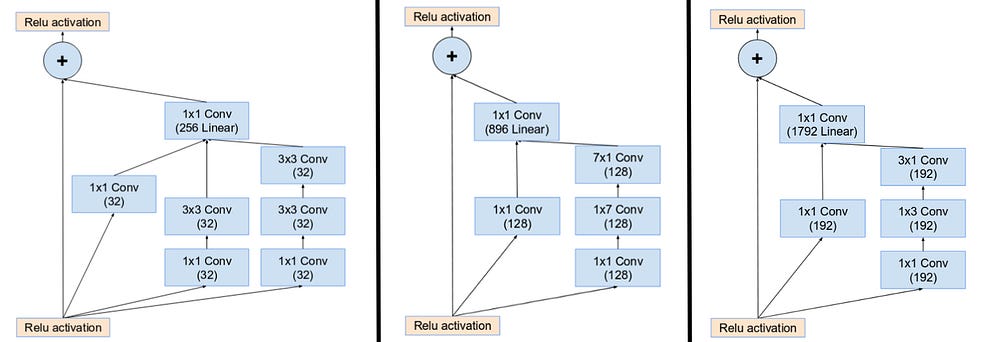
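How much a reduction block shrinks the grid follows from the usual output-size formula for a sliding window. The sketch below assumes unpadded 3x3 operations with stride 2 and a 35x35 starting grid; treat these numbers as illustrative settings and check the paper's diagrams for the exact configuration of each block.

```python
def conv_output_size(size, kernel, stride, padding=0):
    """Spatial size after a convolution or pooling window slides over the input."""
    return (size + 2 * padding - kernel) // stride + 1


grid = 35
sizes = [grid]
for _ in range(2):  # two successive reduction steps
    grid = conv_output_size(grid, kernel=3, stride=2)
    sizes.append(grid)

print(sizes)  # [35, 17, 8]
```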

Inception-ResNet v1 and v2

Inspired by the performance of ResNet, a hybrid inception module was proposed. There are two sub-versions of Inception-ResNet, namely v1 and v2. Before we check out the salient features, let us look at the minor differences between these two sub-versions.

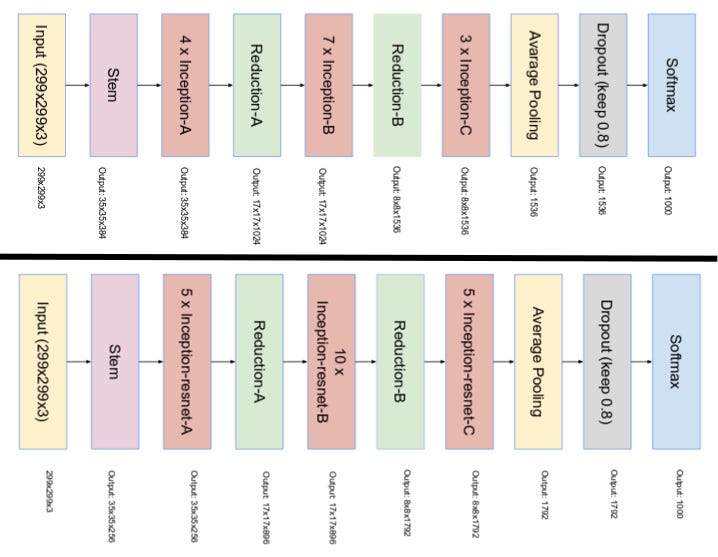

- Inception-ResNet v1 has a computational cost that is similar to that of Inception v3.

- Inception-ResNet v2 has a computational cost that is similar to that of Inception v4.

- They have different stems, as illustrated in the Inception v4 section.

- Both sub-versions have the same structure for the modules A, B, C and the reduction blocks. The only difference is in the hyper-parameter settings. In this section, we’ll only focus on the structure. Refer to the paper for the exact hyper-parameter settings (the images are of Inception-ResNet v1).


The Premise

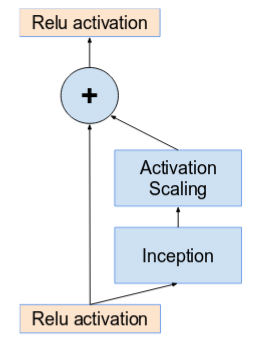

- Introduce residual connections that add the output of the convolution operation of the inception module, to the input.


The Solution

- For residual addition to work, the input and the output of the convolutions must have the same dimensions. Hence, a 1x1 convolution is applied after the original convolutions to match the depth (it scales the depth back up to that of the input).

- The pooling operation inside the main inception modules was replaced in favor of the residual connections. However, you can still find those operations in the reduction blocks. Reduction block A is the same as that of Inception v4.

- Residual units deeper in the architecture caused the network to “die” if the number of filters exceeded 1000. Hence, to increase stability, the authors scaled the residual activations by a factor of around 0.1 to 0.3 before adding them to the input.

- The original paper didn’t use BatchNorm after summation, so that the entire model could fit on a single GPU.

- It was found that Inception-ResNet models were able to achieve higher accuracies in fewer epochs.

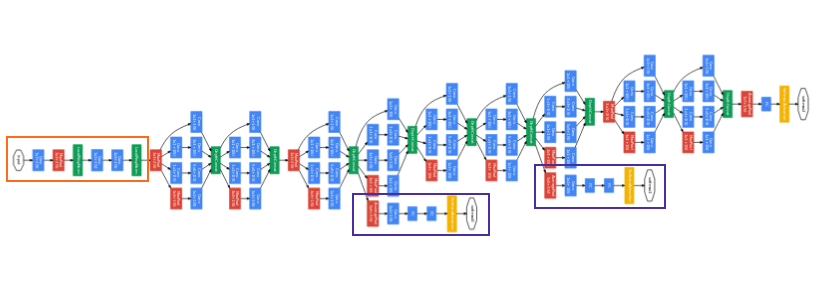
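The residual addition described in this section (a 1x1 convolution to match depths, then a scaled add) can be sketched for a single spatial position. Everything here is a toy assumption: the depths, the weights, and the function names are invented for illustration, and a real module applies this at every position of the feature map.

```python
def conv1x1(pixel, weights):
    """1x1 convolution at one spatial position: a matrix-vector product."""
    return [sum(w * x for w, x in zip(row, pixel)) for row in weights]


def scaled_residual(pixel, branch_out, expand_weights, scale=0.1):
    """Add a scaled, depth-matched branch output back onto the input."""
    expanded = conv1x1(branch_out, expand_weights)  # back to the input depth
    assert len(expanded) == len(pixel), "depths must match before adding"
    return [x + scale * r for x, r in zip(pixel, expanded)]


pixel = [1.0, 2.0, 3.0, 4.0]    # input activations, depth 4
branch_out = [10.0, 20.0]       # inception branch output, depth 2
# 4x2 weight matrix of the 1x1 convolution that restores depth 4.
expand = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]

result = scaled_residual(pixel, branch_out, expand, scale=0.1)
print(result)  # approximately [2.0, 4.0, 6.0, 4.0]
```

The `scale` argument plays the role of the 0.1 to 0.3 factor mentioned above: it keeps the branch contribution small relative to the input it is added to.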

- The final network layouts for both Inception v4 and Inception-ResNet are as follows:
