
Preface

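This post walks through using FastMVSNet in practice, specifically the testing part of the network.

For the principles behind the network, see the original paper https://arxiv.org/pdf/2003.13017.pdf

or the blog post https://blog.csdn.net/qq_43027065/article/details/116790264

A detailed line-by-line code analysis is in "手撕fast"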

1. Building the test dataset

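The networks I used before, such as D2HC, AA-RMVS and r-mvs, were tested on the DTU test set, which is about 1 GB and can be downloaded from the DTU website: http://roboimagedata.compute.dtu.dk/?page_id=36

This network, however, uses a different dataset: you need to download the training dataset (about 30 GB) and the rectified images (about 123 GB).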

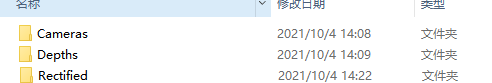

Training dataset structure

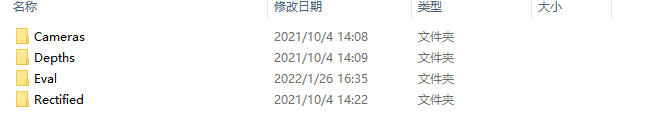

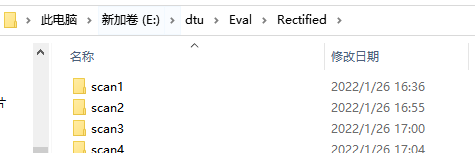

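Download the rectified dataset (Rectified) and place it in an Eval folder created under the dtu directory, so that it has the structure shown in the figure.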

2. Modifying the network

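First, a note: on Windows I could not compile and run the depth-map fusion program used by the official GitHub repository, so I used the depth-map fusion script from the D2HC network instead.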

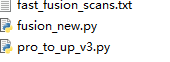

This requires some changes to the original code. I added two scripts, pro_to_up_v3.py and fusion_new.py, and modified eval_file_logger.py as follows:

```python
# Relies on the names already imported/defined at the top of the original
# FastMVSNet eval_file_logger.py (osp, cv2, np, mkdir, write_pfm,
# write_cam_dtu, depth2pts_np, save_points).
def eval_file_logger(data_batch, preds, ref_img_path, folder, scene_name_index=-2,
                     out_index_minus=1, save_prob_volume=False):
    ref_img_path = ref_img_path.replace('\\', '/')  # added 2022/2/22: normalize Windows separators
    l = ref_img_path.split("/")
    eval_folder = "/".join(l[:-3])
    scene = l[scene_name_index]
    scene_folder = osp.join(eval_folder, folder, scene)

    # Modification V1: extra folder collecting the maps needed by the fusion step.
    # It should point to the same folder as scene_folder in pro_to_up_v3.py.
    ceshi_path = 'E:/pangdawei/FastMVSNet/pfm_ceshi'
    ceshi_path = osp.join(ceshi_path, scene)
    if not osp.isdir(ceshi_path):
        mkdir(ceshi_path)

    if not osp.isdir(scene_folder):
        mkdir(scene_folder)
        print("**** {} ****".format(scene))

    out_index = int(l[-1][5:8]) - out_index_minus  # 2022/2/22 [11:14]

    cam_params_list = data_batch["cam_params_list"].cpu().numpy()
    ref_cam_paras = cam_params_list[0, 0, :, :, :]

    init_depth_map_path = scene_folder + ('/%08d_init.pfm' % out_index)
    init_prob_map_path = scene_folder + ('/%08d_init_prob.pfm' % out_index)
    # Modification V1: second copy of the coarse probability map
    init_prob_map_path_1 = osp.join(ceshi_path, "{:08d}_init_prob.pfm".format(out_index))
    out_ref_image_path = scene_folder + ('/%08d.jpg' % out_index)

    init_depth_map = preds["coarse_depth_map"].cpu().numpy()[0, 0]
    init_prob_map = preds["coarse_prob_map"].cpu().numpy()[0, 0]
    ref_image = data_batch["ref_img"][0].cpu().numpy()

    write_pfm(init_depth_map_path, init_depth_map)
    write_pfm(init_prob_map_path, init_prob_map)
    write_pfm(init_prob_map_path_1, init_prob_map)  # Modification V1
    cv2.imwrite(out_ref_image_path, ref_image)

    out_init_cam_path = scene_folder + ('/cam_%08d_init.txt' % out_index)
    init_cam_paras = ref_cam_paras.copy()
    init_cam_paras[1, :2, :3] *= (float(init_depth_map.shape[0]) / ref_image.shape[0])
    write_cam_dtu(out_init_cam_path, init_cam_paras)

    interval_list = np.array([-2.0, -1.0, 0.0, 1.0, 2.0])
    interval_list = np.reshape(interval_list, [1, 1, -1])

    for i, k in enumerate(preds.keys()):
        if "flow" in k:
            if "prob" in k:
                out_flow_prob_map = preds[k][0].cpu().permute(1, 2, 0).numpy()
                num_interval = out_flow_prob_map.shape[-1]
                assert num_interval == interval_list.size
                pred_interval = np.sum(out_flow_prob_map * interval_list, axis=-1) + 2.0
                # np.int was removed in NumPy 1.24; the builtin int is equivalent here
                pred_floor = np.floor(pred_interval).astype(int)[..., np.newaxis]
                pred_ceil = pred_floor + 1
                pred_ceil = np.clip(pred_ceil, 0, num_interval - 1)
                pred_floor = np.clip(pred_floor, 0, num_interval - 1)
                prob_height, prob_width = pred_floor.shape[:2]
                prob_height_ind = np.tile(np.reshape(np.arange(prob_height), [-1, 1, 1]), [1, prob_width, 1])
                prob_width_ind = np.tile(np.reshape(np.arange(prob_width), [1, -1, 1]), [prob_height, 1, 1])

                floor_prob = np.squeeze(out_flow_prob_map[prob_height_ind, prob_width_ind, pred_floor], -1)
                ceil_prob = np.squeeze(out_flow_prob_map[prob_height_ind, prob_width_ind, pred_ceil], -1)
                flow_prob = floor_prob + ceil_prob
                flow_prob_map_path = scene_folder + "/{:08d}_{}.pfm".format(out_index, k)
                write_pfm(flow_prob_map_path, flow_prob)
            else:  # the refined depth maps (e.g. flow2) take this branch
                out_flow_depth_map = preds[k][0, 0].cpu().numpy()
                flow_depth_map_path = scene_folder + "/{:08d}_{}.pfm".format(out_index, k)
                # Modification V1: also write the depth map to pfm_ceshi
                flow_depth_map_path_1 = osp.join(ceshi_path, "{:08d}_{}.pfm".format(out_index, k))
                write_pfm(flow_depth_map_path, out_flow_depth_map)
                write_pfm(flow_depth_map_path_1, out_flow_depth_map)

                out_flow_cam_path = scene_folder + "/cam_{:08d}_{}.txt".format(out_index, k)
                flow_cam_paras = ref_cam_paras.copy()
                flow_cam_paras[1, :2, :3] *= (float(out_flow_depth_map.shape[0]) / float(ref_image.shape[0]))
                write_cam_dtu(out_flow_cam_path, flow_cam_paras)

                world_pts = depth2pts_np(out_flow_depth_map, flow_cam_paras[1][:3, :3], flow_cam_paras[0])
                save_points(osp.join(scene_folder, "{:08d}_{}pts.xyz".format(out_index, k)), world_pts)

    # save cost volume
    if save_prob_volume:
        probability_volume = preds["coarse_prob_volume"].cpu().numpy()[0]
        init_prob_volume_path = scene_folder + ('/%08d_init_prob_volume.npz' % out_index)
        np.savez(init_prob_volume_path, probability_volume)
```
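The `flow*prob` branch above turns a 5-bin probability distribution over depth offsets into a per-pixel confidence: it computes the expected bin index, then adds the probabilities of the two bins on either side of it. Below is a minimal NumPy sketch of the same computation that uses `np.take_along_axis` instead of explicit index grids. `flow_confidence` is a hypothetical name, and the offsets `[-2, ..., 2]` are taken from `interval_list` above (unit spacing assumed, as in the original).

```python
import numpy as np


def flow_confidence(prob, offsets=(-2.0, -1.0, 0.0, 1.0, 2.0)):
    """prob: H x W x K probabilities over K unit-spaced depth offsets."""
    offsets = np.asarray(offsets, dtype=np.float64)
    k = prob.shape[-1]
    assert k == offsets.size
    # expected offset, shifted so the first bin has index 0 (the "+ 2.0" above)
    expected_idx = np.sum(prob * offsets, axis=-1) - offsets[0]
    floor_raw = np.floor(expected_idx).astype(int)
    # same clipping order as the original: ceil from the unclipped floor
    ceil_idx = np.clip(floor_raw + 1, 0, k - 1)[..., None]
    floor_idx = np.clip(floor_raw, 0, k - 1)[..., None]
    floor_p = np.take_along_axis(prob, floor_idx, axis=-1)[..., 0]
    ceil_p = np.take_along_axis(prob, ceil_idx, axis=-1)[..., 0]
    return floor_p + ceil_p


# one pixel whose mass is split between offsets 0 and +1
p = np.array([[[0.0, 0.0, 0.6, 0.4, 0.0]]])
print(flow_confidence(p))
```

As in the original, if the expectation lands exactly on the last bin, the floor and ceil indices coincide and that bin is counted twice.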

Script pro_to_up_v3.py:

```python
import os
import os.path as osp
import re
import sys

import cv2
import numpy as np

# The author's local Windows paths; adjust to your own setup.
testlist = 'E:/FastMVSNet_alt/tools/fast_fusion_scans.txt'
scene_folder = 'E:/FastMVSNet/pfm_ceshi'  # the pfm_ceshi folder written by eval_file_logger
name = 'flow2'                            # which depth map to export
view_num = 49                             # DTU: 49 views per scan
mode = cv2.INTER_LANCZOS4                 # interpolation for upsampling the prob maps
out_depth = 'E:/FastMVSNet_alt/outputs/checkpoints_model.ckpt'
out_pro = 'E:/FastMVSNet_alt/outputs/checkpoints_model.ckpt'


def probability_filter(scene_folder, name, view_num, mode, scan, out_depth, out_pro):
    """Copy the depth maps and upsample the prob maps into the
    depth_est_0 / confidence_0 layout read by fusion_new.py."""
    out_pro = os.path.join(out_pro, scan, 'confidence_0')
    out_depth = os.path.join(out_depth, scan, 'depth_est_0')
    if not osp.isdir(out_depth):
        mkdir(out_depth)
    if not osp.isdir(out_pro):
        mkdir(out_pro)

    for v in range(view_num):
        init_prob_map_path = os.path.join(scene_folder, scan, "{:08d}_init_prob.pfm".format(v))
        init_depth_map_path = os.path.join(scene_folder, scan, "{:08d}_{}.pfm".format(v, name))
        out_pro_map_path = os.path.join(out_pro, "{:08d}.pfm".format(v))
        out_depth_alt = os.path.join(out_depth, "{:08d}.pfm".format(v))

        depth_map = load_pfm(init_depth_map_path)[0]
        write_pfm(out_depth_alt, depth_map)

        init_prob_map = load_pfm(init_prob_map_path)[0]
        if init_prob_map.shape != depth_map.shape:
            # cv2.resize takes dsize as (width, height)
            init_prob_map = cv2.resize(init_prob_map, (depth_map.shape[1], depth_map.shape[0]),
                                       interpolation=mode)
        write_pfm(out_pro_map_path, init_prob_map)
    print('Finished upsampling the prob maps')


def load_pfm(file):
    with open(file, 'rb') as f:
        header = f.readline().rstrip().decode("ascii")
        if header == 'PF':
            color = True
        elif header == 'Pf':
            color = False
        else:
            raise Exception('Not a PFM file.')
        dim_match = re.match(r'^(\d+)\s(\d+)\s$', f.readline().decode("ascii"))
        if dim_match:
            width, height = map(int, dim_match.groups())
        else:
            raise Exception('Malformed PFM header.')
        scale = float(f.readline().decode("ascii").rstrip())
        if scale < 0:  # little-endian
            endian = '<'
            scale = -scale
        else:
            endian = '>'  # big-endian
        data = np.fromfile(f, endian + 'f')
    shape = (height, width, 3) if color else (height, width)
    data = np.reshape(data, shape)
    data = np.flipud(data)  # PFM stores rows bottom-to-top
    return data, scale


def write_pfm(file, image, scale=1):
    if image.dtype.name != 'float32':
        raise Exception('Image dtype must be float32.')
    image = np.flipud(image)
    if len(image.shape) == 3 and image.shape[2] == 3:  # color image
        color = True
    elif len(image.shape) == 2 or (len(image.shape) == 3 and image.shape[2] == 1):  # greyscale
        color = False
    else:
        raise Exception('Image must have H x W x 3, H x W x 1 or H x W dimensions.')
    endian = image.dtype.byteorder
    if endian == '<' or (endian == '=' and sys.byteorder == 'little'):
        scale = -scale
    with open(file, mode='wb') as f:
        f.write(('PF\n' if color else 'Pf\n').encode())  # encode both branches
        f.write('%d %d\n'.encode() % (image.shape[1], image.shape[0]))
        f.write('%f\n'.encode() % scale)
        f.write(image.tobytes())  # tostring() is deprecated


def mkdir(path):
    os.makedirs(path, exist_ok=True)


if __name__ == '__main__':
    with open(testlist) as f:
        scans = [line.rstrip() for line in f.readlines()]
    for scan in scans:
        probability_filter(scene_folder, name, view_num, mode, scan, out_depth, out_pro)
```
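Both scripts read and write PFM files. The format consists of a text header (`Pf` for one channel, `PF` for three), a `width height` line, and a scale whose sign encodes byte order (negative means little-endian), followed by raw float32 rows stored bottom-to-top. Below is a compact, self-contained sketch of a greyscale round trip through an in-memory buffer. `write_pfm_bytes` and `read_pfm_bytes` are hypothetical helpers and are not part of either repository.

```python
import io
import re

import numpy as np


def write_pfm_bytes(image):
    """Serialize an H x W float32 array as a little-endian greyscale PFM."""
    assert image.dtype == np.float32 and image.ndim == 2
    h, w = image.shape
    buf = io.BytesIO()
    buf.write(b'Pf\n')
    buf.write(b'%d %d\n' % (w, h))
    buf.write(b'-1.000000\n')  # negative scale => little-endian data
    buf.write(np.flipud(image).astype('<f4').tobytes())  # rows bottom-to-top
    return buf.getvalue()


def read_pfm_bytes(data):
    buf = io.BytesIO(data)
    if buf.readline().rstrip() != b'Pf':
        raise ValueError('this sketch only handles greyscale PFM')
    w, h = map(int, re.match(rb'^(\d+)\s(\d+)\s$', buf.readline()).groups())
    scale = float(buf.readline().decode('ascii').rstrip())
    endian = '<' if scale < 0 else '>'
    pixels = np.frombuffer(buf.read(), dtype=endian + 'f4').reshape(h, w)
    return np.flipud(pixels).astype(np.float32), abs(scale)


img = np.arange(6, dtype=np.float32).reshape(2, 3)
restored, scale = read_pfm_bytes(write_pfm_bytes(img))
print(restored, scale)
```

Forgetting the `flipud` on either side is a common cause of upside-down depth maps, which then produce nonsense in the geometric check.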

Script fusion_new.py:

"""

基於D2HC的新融合方法,可移植使用。

"""

import argparse

import os

import torch

import torch.nn as nn

import torch.nn.parallel

import torch.backends.cudnn as cudnn

import torch.optim as optim

from torch.utils.data import DataLoader

from torch.autograd import Variable

import torch.nn.functional as F

import numpy as np

import time

import re

import sys

import cv2

from plyfile import PlyData, PlyElement

from PIL import Image

cudnn.benchmark = True

parser = argparse.ArgumentParser(description='Predict depth, filter, and fuse. May be different from the original implementation')

parser.add_argument('--model', default='mvsnet', help='select model')

parser.add_argument('--dataset', default='dtu_yao_eval', help='select dataset')

parser.add_argument('--testpath', default='E:/D2HC-RMVSNet-master/data',help='testing data path') #使用D2HC形式的數據集路徑

parser.add_argument('--testlist', default='E:/FastMVSNet_alt/tools/fast_fusion_scans.txt',help='testing scan list')

parser.add_argument('--batch_size', type=int, default=1, help='testing batch size')

parser.add_argument('--numdepth', type=int, default=192, help='the number of depth values')

parser.add_argument('--interval_scale', type=float, default=1.06, help='the depth interval scale')

parser.add_argument('--loadckpt', default=None, help='load a specific checkpoint')

parser.add_argument('--outdir', default='E:/FastMVSNet_alt/outputs/checkpoints_model.ckpt', help='output dir')

parser.add_argument('--display', action='store_true', help='display depth images and masks')

parser.add_argument('--test_dataset', default='dtu', help='which dataset to evaluate')

# parse arguments and check

args = parser.parse_args()

print("argv:", sys.argv[1:])

def print_args(args):

print("################################ args ################################")

for k, v in args.__dict__.items():

print("{0: <10}\t{1: <30}\t{2: <20}".format(k, str(v), str(type(v))))

print("########################################################################")

print_args(args)

def read_pfm(filename):

file = open(filename, 'rb')

color = None

width = None

height = None

scale = None

endian = None

header = file.readline().decode('utf-8').rstrip()

if header == 'PF':

color = True

elif header == 'Pf':

color = False

else:

raise Exception('Not a PFM file.')

dim_match = re.match(r'^(\d+)\s(\d+)\s$', file.readline().decode('utf-8'))

if dim_match:

width, height = map(int, dim_match.groups())

else:

raise Exception('Malformed PFM header.')

scale = float(file.readline().rstrip())

if scale < 0: # little-endian

endian = '<'

scale = -scale

else:

endian = '>' # big-endian

data = np.fromfile(file, endian + 'f')

shape = (height, width, 3) if color else (height, width)

data = np.reshape(data, shape)

data = np.flipud(data)

file.close()

return data, scale

def save_pfm(filename, image, scale=1):

file = open(filename, "wb")

color = None

image = np.flipud(image)

if image.dtype.name != 'float32':

raise Exception('Image dtype must be float32.')

if len(image.shape) == 3 and image.shape[2] == 3: # color image

color = True

elif len(image.shape) == 2 or len(image.shape) == 3 and image.shape[2] == 1: # greyscale

color = False

else:

raise Exception('Image must have H x W x 3, H x W x 1 or H x W dimensions.')

file.write('PF\n'.encode('utf-8') if color else 'Pf\n'.encode('utf-8'))

file.write('{} {}\n'.format(image.shape[1], image.shape[0]).encode('utf-8'))

endian = image.dtype.byteorder

if endian == '<' or endian == '=' and sys.byteorder == 'little':

scale = -scale

file.write(('%f\n' % scale).encode('utf-8'))

image.tofile(file)

file.close()

# read intrinsics and extrinsics

def read_camera_parameters(filename,scale,index,flag):

with open(filename) as f:

lines = f.readlines()

lines = [line.rstrip() for line in lines]

# extrinsics: line [1,5), 4x4 matrix

extrinsics = np.fromstring(' '.join(lines[1:5]), dtype=np.float32, sep=' ').reshape((4, 4))

# intrinsics: line [7-10), 3x3 matrix

intrinsics = np.fromstring(' '.join(lines[7:10]), dtype=np.float32, sep=' ').reshape((3, 3))

# TODO: assume the feature is 1/4 of the original image size

intrinsics[:2, :] *= scale

if (flag==0):

intrinsics[0,2]-=index

else:

intrinsics[1,2]-=index

return intrinsics, extrinsics

# read an image

def read_img(filename):

img = Image.open(filename)

# scale 0~255 to 0~1

np_img = np.array(img, dtype=np.float32) / 255.

return np_img

# read a binary mask

def read_mask(filename):

return read_img(filename) > 0.5

# save a binary mask

def save_mask(filename, mask):

assert mask.dtype == np.bool

mask = mask.astype(np.uint8) * 255

Image.fromarray(mask).save(filename)

# read a pair file, [(ref_view1, [src_view1-1, ...]), (ref_view2, [src_view2-1, ...]), ...]

def read_pair_file(filename):

data = []

with open(filename) as f:

num_viewpoint = int(f.readline())

# 49 viewpoints

for view_idx in range(num_viewpoint):

ref_view = int(f.readline().rstrip())

src_views = [int(x) for x in f.readline().rstrip().split()[1::2]]

data.append((ref_view, src_views))

return data

def read_score_file(filename):

data=[]

with open(filename) as f:

num_viewpoint = int(f.readline())

# 49 viewpoints

for view_idx in range(num_viewpoint):

ref_view = int(f.readline().rstrip())

scores = [float(x) for x in f.readline().rstrip().split()[2::2]]

data.append(scores)

return data

# project the reference point cloud into the source view, then project back

def reproject_with_depth(depth_ref, intrinsics_ref, extrinsics_ref, depth_src, intrinsics_src, extrinsics_src):

width, height = depth_ref.shape[1], depth_ref.shape[0]

## step1. project reference pixels to the source view

# reference view x, y

x_ref, y_ref = np.meshgrid(np.arange(0, width), np.arange(0, height))

x_ref, y_ref = x_ref.reshape([-1]), y_ref.reshape([-1])

# reference 3D space

xyz_ref = np.matmul(np.linalg.inv(intrinsics_ref),

np.vstack((x_ref, y_ref, np.ones_like(x_ref))) * depth_ref.reshape([-1]))

# source 3D space

xyz_src = np.matmul(np.matmul(extrinsics_src, np.linalg.inv(extrinsics_ref)),

np.vstack((xyz_ref, np.ones_like(x_ref))))[:3]

# source view x, y

K_xyz_src = np.matmul(intrinsics_src, xyz_src)

xy_src = K_xyz_src[:2] / K_xyz_src[2:3]

## step2. reproject the source view points with source view depth estimation

# find the depth estimation of the source view

x_src = xy_src[0].reshape([height, width]).astype(np.float32)

y_src = xy_src[1].reshape([height, width]).astype(np.float32)

sampled_depth_src = cv2.remap(depth_src, x_src, y_src, interpolation=cv2.INTER_LINEAR)

# mask = sampled_depth_src > 0

# source 3D space

# NOTE that we should use sampled source-view depth_here to project back

xyz_src = np.matmul(np.linalg.inv(intrinsics_src),

np.vstack((xy_src, np.ones_like(x_ref))) * sampled_depth_src.reshape([-1]))

# reference 3D space

xyz_reprojected = np.matmul(np.matmul(extrinsics_ref, np.linalg.inv(extrinsics_src)),

np.vstack((xyz_src, np.ones_like(x_ref))))[:3]

# source view x, y, depth

depth_reprojected = xyz_reprojected[2].reshape([height, width]).astype(np.float32)

K_xyz_reprojected = np.matmul(intrinsics_ref, xyz_reprojected)

xy_reprojected = K_xyz_reprojected[:2] / K_xyz_reprojected[2:3]

x_reprojected = xy_reprojected[0].reshape([height, width]).astype(np.float32)

y_reprojected = xy_reprojected[1].reshape([height, width]).astype(np.float32)

return depth_reprojected, x_reprojected, y_reprojected, x_src, y_src

def check_geometric_consistency(depth_ref, intrinsics_ref, extrinsics_ref, depth_src, intrinsics_src, extrinsics_src

):

width, height = depth_ref.shape[1], depth_ref.shape[0]

x_ref, y_ref = np.meshgrid(np.arange(0, width), np.arange(0, height))

depth_reprojected, x2d_reprojected, y2d_reprojected, x2d_src, y2d_src = reproject_with_depth(depth_ref,

intrinsics_ref,

extrinsics_ref,

depth_src,

intrinsics_src,

extrinsics_src)

# check |p_reproj-p_1| < 1

dist = np.sqrt((x2d_reprojected - x_ref) ** 2 + (y2d_reprojected - y_ref) ** 2)

# check |d_reproj-d_1| / d_1 < 0.01

depth_diff = np.abs(depth_reprojected - depth_ref)

relative_depth_diff = depth_diff / depth_ref

masks=[]

for i in range(2,21):

mask = np.logical_and(dist < i/4, relative_depth_diff < i/1300)

masks.append(mask)

depth_reprojected[~mask] = 0

return masks, mask, depth_reprojected, x2d_src, y2d_src

def filter_depth(scan_folder, out_folder, plyfilename, photo_threshold):

# the pair file

pair_file = os.path.join(scan_folder, "pair.txt")

# for the final point cloud

vertexs = []

vertex_colors = []

pair_data = read_pair_file(pair_file)

score_data = read_score_file(pair_file)

nviews = len(pair_data)

# TODO: hardcode size

# used_mask = [np.zeros([296, 400], dtype=np.bool) for _ in range(nviews)]

# for each reference view and the corresponding source views

ct2 = -1

for ref_view, src_views in pair_data:

ct2 += 1

# load the reference image

ref_img = read_img(os.path.join(scan_folder, 'images/{:0>8}.jpg'.format(ref_view)))

# load the estimated depth of the reference view

ref_depth_est = read_pfm(os.path.join(out_folder, 'depth_est_0/{:0>8}.pfm'.format(ref_view)))[0]

import cv2

# ref_img=cv2.pyrUp(ref_img)

#ref_depth_est=cv2.pyrUp(ref_depth_est)

# ref_depth_est=cv2.pyrUp(ref_depth_est)

# load the photometric mask of the reference view

confidence = read_pfm(os.path.join(out_folder, 'confidence_0/{:0>8}.pfm'.format(ref_view)))[0]

scale=float(confidence.shape[0])/ref_img.shape[0]

index=int((int(ref_img.shape[1]*scale)-confidence.shape[1])/2)

flag=0

if (confidence.shape[1]/ref_img.shape[1]>scale):

scale=float(confidence.shape[1])/ref_img.shape[1]

index=int((int(ref_img.shape[0]*scale)-confidence.shape[0])/2)

flag=1

#confidence=cv2.pyrUp(confidence)

ref_img=cv2.resize(ref_img,(int(ref_img.shape[1]*scale),int(ref_img.shape[0]*scale)))

if (flag==0):

ref_img=ref_img[:,index:ref_img.shape[1]-index,:]

else:

ref_img=ref_img[index:ref_img.shape[0]-index,:,:]

# load the camera parameters

ref_intrinsics, ref_extrinsics = read_camera_parameters(

os.path.join(scan_folder, 'cams/{:0>8}_cam.txt'.format(ref_view)),scale,index,flag)

photo_mask = confidence > photo_threshold

# photo_mask = confidence>=0

# photo_mask = confidence > confidence.mean()

# ref_depth_est=ref_depth_est * photo_mask

all_srcview_depth_ests = []

all_srcview_x = []

all_srcview_y = []

all_srcview_geomask = []

# compute the geometric mask

geo_mask_sum = 0

geo_mask_sums=[]

n=1

for src_view in src_views:

n+=1

ct = 0

for src_view in src_views:

ct = ct + 1

# camera parameters of the source view

src_intrinsics, src_extrinsics = read_camera_parameters(

os.path.join(scan_folder, 'cams/{:0>8}_cam.txt'.format(src_view)),scale,index,flag)

# the estimated depth of the source view

src_depth_est = read_pfm(os.path.join(out_folder, 'depth_est_0/{:0>8}.pfm'.format(src_view)))[0]

#src_depth_est=cv2.pyrUp(src_depth_est)

# src_depth_est=cv2.pyrUp(src_depth_est)

src_confidence = read_pfm(os.path.join(out_folder, 'confidence_0/{:0>8}.pfm'.format(src_view)))[0]

# src_mask=src_confidence>0.1

# src_mask=src_confidence>src_confidence.mean()

# src_depth_est=src_depth_est*src_mask

masks, geo_mask, depth_reprojected, x2d_src, y2d_src = check_geometric_consistency(ref_depth_est, ref_intrinsics,

ref_extrinsics,

src_depth_est,

src_intrinsics, src_extrinsics)

if (ct==1):

for i in range(2,n):

geo_mask_sums.append(masks[i-2].astype(np.int32))

else :

for i in range(2,n):

geo_mask_sums[i-2]+=masks[i-2].astype(np.int32)

geo_mask_sum+=geo_mask.astype(np.int32)

all_srcview_depth_ests.append(depth_reprojected)

# all_srcview_x.append(x2d_src)

# all_srcview_y.append(y2d_src)

# all_srcview_geomask.append(geo_mask)

geo_mask=geo_mask_sum>=n

for i in range (2,n):

geo_mask=np.logical_or(geo_mask,geo_mask_sums[i-2]>=i)

print(geo_mask.mean())

depth_est_averaged = (sum(all_srcview_depth_ests) + ref_depth_est) / (geo_mask_sum + 1)

if (not isinstance(geo_mask, bool)):

final_mask = np.logical_and(photo_mask, geo_mask)

os.makedirs(os.path.join(out_folder, "mask"), exist_ok=True)

save_mask(os.path.join(out_folder, "mask/{:0>8}_photo.png".format(ref_view)), photo_mask)

save_mask(os.path.join(out_folder, "mask/{:0>8}_geo.png".format(ref_view)), geo_mask)

save_mask(os.path.join(out_folder, "mask/{:0>8}_final.png".format(ref_view)), final_mask)

print("processing {}, ref-view{:0>2}, photo/geo/final-mask:{}/{}/{}".format(scan_folder, ref_view,

photo_mask.mean(),

geo_mask.mean(),

final_mask.mean()))

if args.display:

import cv2

cv2.imshow('ref_img', ref_img[:, :, ::-1])

cv2.imshow('ref_depth', ref_depth_est / 800)

cv2.imshow('ref_depth * photo_mask', ref_depth_est * photo_mask.astype(np.float32) / 800)

cv2.imshow('ref_depth * geo_mask', ref_depth_est * geo_mask.astype(np.float32) / 800)

cv2.imshow('ref_depth * mask', ref_depth_est * final_mask.astype(np.float32) / 800)

cv2.waitKey(0)

height, width = depth_est_averaged.shape[:2]

x, y = np.meshgrid(np.arange(0, width), np.arange(0, height))

# valid_points = np.logical_and(final_mask, ~used_mask[ref_view])

valid_points = final_mask

print("valid_points", valid_points.mean())

x, y, depth = x[valid_points], y[valid_points], depth_est_averaged[valid_points]

color = ref_img[:, :, :][valid_points] # hardcoded for DTU dataset

xyz_ref = np.matmul(np.linalg.inv(ref_intrinsics),

np.vstack((x, y, np.ones_like(x))) * depth)

xyz_world = np.matmul(np.linalg.inv(ref_extrinsics),

np.vstack((xyz_ref, np.ones_like(x))))[:3]

vertexs.append(xyz_world.transpose((1, 0)))

vertex_colors.append((color * 255).astype(np.uint8))

# # set used_mask[ref_view]

# used_mask[ref_view][...] = True

# for idx, src_view in enumerate(src_views):

# src_mask = np.logical_and(final_mask, all_srcview_geomask[idx])

# src_y = all_srcview_y[idx].astype(np.int)

# src_x = all_srcview_x[idx].astype(np.int)

# used_mask[src_view][src_y[src_mask], src_x[src_mask]] = True

vertexs = np.concatenate(vertexs, axis=0)

vertex_colors = np.concatenate(vertex_colors, axis=0)

vertexs = np.array([tuple(v) for v in vertexs], dtype=[('x', 'f4'), ('y', 'f4'), ('z', 'f4')])

vertex_colors = np.array([tuple(v) for v in vertex_colors], dtype=[('red', 'u1'), ('green', 'u1'), ('blue', 'u1')])

vertex_all = np.empty(len(vertexs), vertexs.dtype.descr + vertex_colors.dtype.descr)

for prop in vertexs.dtype.names:

vertex_all[prop] = vertexs[prop]

for prop in vertex_colors.dtype.names:

vertex_all[prop] = vertex_colors[prop]

el = PlyElement.describe(vertex_all, 'vertex')

PlyData([el]).write(plyfilename)

print("saving the final model to", plyfilename)

if __name__ == '__main__':

with open(args.testlist) as f:

scans = f.readlines()

scans = [line.rstrip() for line in scans]

for scan in scans:

scan_folder = os.path.join(args.testpath, scan)

out_folder = os.path.join(args.outdir, scan)

# step2. filter saved depth maps with photometric confidence maps and geometric constraints

if (args.test_dataset=='dtu'):

scan_id = int(scan[4:])

photo_threshold=0.35

filter_depth(scan_folder, out_folder, os.path.join(args.outdir, 'fastmvsnet_{:0>3}_l3.ply'.format(scan_id) ), photo_threshold)

if (args.test_dataset=='tanks'):

photo_threshold=0.3

filter_depth(scan_folder, out_folder, os.path.join(args.outdir, scan + '.ply'), photo_threshold)
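In `filter_depth`, the reference image is resized so that one side matches the confidence map, and the other side is center-cropped. `read_camera_parameters` then scales the intrinsics and subtracts the crop offset from the principal point. Below is a small NumPy sketch of just that intrinsics update. `crop_params` and `adjust_intrinsics` are hypothetical helpers that mirror the script's logic. The example numbers (a 1600×1200 image, a 400×296 depth map, and the K values) are made up for illustration.

```python
import numpy as np


def crop_params(img_hw, target_hw):
    """Same scale/offset logic as filter_depth (hypothetical helper)."""
    (ih, iw), (th, tw) = img_hw, target_hw
    scale = float(th) / ih
    index = int((int(iw * scale) - tw) / 2)
    flag = 0  # crop along the width
    if tw / iw > scale:
        scale = float(tw) / iw
        index = int((int(ih * scale) - th) / 2)
        flag = 1  # crop along the height
    return scale, index, flag


def adjust_intrinsics(K, scale, index, flag):
    K = K.astype(np.float64).copy()
    K[:2, :] *= scale                 # fx, fy, cx, cy follow the resize
    K[1 if flag else 0, 2] -= index   # principal point shifts by the crop offset
    return K


K = np.array([[2892.0, 0.0, 824.0], [0.0, 2883.0, 605.0], [0.0, 0.0, 1.0]])
scale, index, flag = crop_params((1200, 1600), (296, 400))
print(scale, index, flag)
print(adjust_intrinsics(K, scale, index, flag))
```

In this example, the image is resized to 400×300 and 2 rows are cropped from the top and bottom, so only cy receives the extra shift.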

That covers the changes to the network.

3. Testing procedure

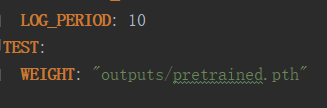

1. Modify the dtu.yaml file

2. Modify test.py

3. Run test.py

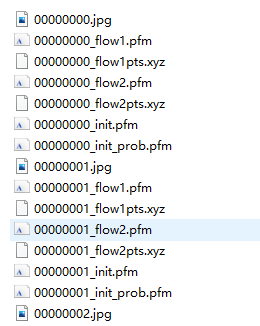

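The depth-map output of the original network is shown in the figure.

It is cluttered and awkward to feed into the new fusion script.

With the modified code, a pfm_ceshi folder is also generated, containing the confidence (probability) map and the depth map for each view.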
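The file names in `pfm_ceshi` are derived from `out_index`, which `eval_file_logger` parses from the rectified image name (`int(l[-1][5:8]) - 1`). Below is a tiny sketch of that mapping. It assumes DTU Rectified names of the form `rect_001_3_r5000.png`, with a three-digit, 1-based view number after `rect_`. `pfm_names` is a hypothetical helper, and `flow2` is the depth key that pro_to_up_v3.py reads.

```python
def pfm_names(ref_img_path, depth_key="flow2", out_index_minus=1):
    """Return (scene, view index, files written to pfm_ceshi/<scene>)."""
    parts = ref_img_path.replace("\\", "/").split("/")
    scene = parts[-2]  # scene_name_index=-2 in eval_file_logger
    out_index = int(parts[-1][5:8]) - out_index_minus  # 'rect_001_...' -> 0
    files = ["{:08d}_init_prob.pfm".format(out_index),
             "{:08d}_{}.pfm".format(out_index, depth_key)]
    return scene, out_index, files


print(pfm_names("E:\\dtu\\Eval\\Rectified\\scan1\\rect_001_3_r5000.png"))
```

If your image names follow a different pattern, the `[5:8]` slice (and therefore every output file name) has to change with it.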

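4. Run pro_to_up_v3.py

This step resizes the confidence maps to match the depth maps and writes both into the directory layout the new fusion script expects (depth_est_0/ and confidence_0/ under each scan).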

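5. Run fusion_new.py

The final fused point cloud (fastmvsnet_{scan_id:03d}_l3.ply for DTU) is written to the outputs folder given by --outdir.

The fusion uses D2HC's dynamic consistency check.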
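In `fusion_new.py`, "dynamic" means the acceptance rule adapts to the number of agreeing views. For each level i = 2, 3, …, a pixel must have a reprojection error below i/4 px and a relative depth error below i/1300 in at least i source views. A pixel is kept if any level passes. Below is a minimal NumPy sketch of just the voting step, assuming the per-view boolean masks are already computed. `dynamic_geo_mask` is a hypothetical name.

```python
import numpy as np


def dynamic_geo_mask(level_masks):
    """level_masks[v][j]: bool H x W mask of source view v at level i = j + 2."""
    num_src = len(level_masks)
    keep = np.zeros_like(level_masks[0][0], dtype=bool)
    for i in range(2, num_src + 1):  # same range as range(2, n) in the script
        votes = sum(m[i - 2].astype(np.int32) for m in level_masks)
        keep |= votes >= i
    return keep


# 3 source views, 1 x 2 pixels, levels i = 2 and i = 3
A = [np.array([[True, True]]), np.array([[True, True]])]
B = [np.array([[True, False]]), np.array([[True, True]])]
C = [np.array([[False, False]]), np.array([[False, False]])]
print(dynamic_geo_mask([A, B, C]))
```

Here the left pixel passes at level 2 (2 votes), while the right pixel gets only 1 vote at level 2 and 2 votes at level 3, where 3 are required. A tight match in a few views is therefore worth as much as a loose match in many.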

Results
