目录

8.练习:What kind of communication Pattern

9.Parallel Communicaiton Pattern Recap

10.Let us talk About GPU Hardware

12.Thread Blocks and GPU Hardware

14.Anoter Quiz on Threads and Blocks

15.What Can the Programmer Specify

16.CUDA Makes Few Guarantees About Thread Blocks

17.练习:A Thread Block Programming Example

18.练习:Code for A Thread Block Programming Example

21.练习:A Quize About GPU Memory Model

25.练习:A Quzie On Synchronization

27.Minimize Time Spent On Memory

30.练习:A Quize On Memory Access

32.练习:A Quiz on Coalescing Memory Access

36.Limitations of Atomic Memory Operations

37.练习:Code For Timing Atomic Operations

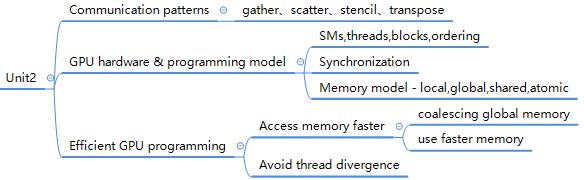

1.Welcome to Unit2

2.Communication Patterns

Parallel computing : Many threads solving a problem by working together. = communication ! !

3.Map and Gathre

4.练习:Scatter

5.练习:Stencil

6.Transpose Part 1

7.Transpose Part 2

8.练习:What kind of communication Pattern

9.Parallel Communicaiton Pattern Recap

10.Let us talk About GPU Hardware

11.Programmer View of GPU

12.Thread Blocks and GPU Hardware

13.Threads and Blocks

14.Anoter Quiz on Threads and Blocks

15.What Can the Programmer Specify

16.CUDA Makes Few Guarantees About Thread Blocks

17.练习:A Thread Block Programming Example

18.练习:Code for A Thread Block Programming Example

#include <stdio.h>

#define NUM_BLOCKS 16

#define BLOCK_WIDTH 1

__global__ void hello()

{

printf("Hello world! I'm a thread in block %d\n", blockIdx.x);

}

int main(int argc,char **argv)

{

// launch the kernel

hello<<<NUM_BLOCKS, BLOCK_WIDTH>>>();

// force the printf()s to flush

cudaDeviceSynchronize();

printf("That's all!\n");

return 0;

}19.What dows CUDA Guarantee

Later on we’ll learn how you can use a concept called ‘streams’ to relax this guarantee and overlap different kernels when as the programmer you know it’s safe to do so.

20.GPU Memory Model

21.练习:A Quize About GPU Memory Model

22.Synchronization - Barrier

23.练习:The need for barries

24.Programming Model

高度总结了CUDA的概念:A hierachy of Computation 、Memory Space、Synchronization

25.练习:A Quzie On Synchronization

Students paying close attention will notice another bug in this code: an off-by-one array access. Thread 0 will try to write to location s[-1]. Oops!

26.Writing Efficient Programs

27.Minimize Time Spent On Memory

28.Global Memory

/**********************

* using local memory *

**********************/

// a __device__ or __global__ function runs on the GPU

__global__ void use_local_memory_GPU(float in)

{

float f; // variable "f" is in local memory and private to each thread

f = in; // parameter "in" is in local memory and private to each thread

// ... real code would presumably do other stuff here ...

}

/**********************

* using global memory *

**********************/

// a __global__ function runs on the GPU & can be called from host

__global__ void use_global_memory_GPU(float *array)

{

// "array" is a pointer into global memory on the device

array[threadIdx.x] = 2.0f * (float) threadIdx.x;

}Note that in this example we are shipping the data to the GPU, running only a single kernel, then copying it back. Often we will run several kernels on the GPU, one after another. When this happens there is no need to copy the intermediate results back to the host - you can run each kernel in sequence, leaving the intermediate result data on the GPU in global memory, and only copy the final result back to the host

29.Shared Memory

// Using different memory spaces in CUDA

#include <stdio.h>

/**********************

* using local memory *

**********************/

// a __device__ or __global__ function runs on the GPU

__global__ void use_local_memory_GPU(float in)

{

float f; // variable "f" is in local memory and private to each thread

f = in; // parameter "in" is in local memory and private to each thread

// ... real code would presumably do other stuff here ...

}

/**********************

* using global memory *

**********************/

// a __global__ function runs on the GPU & can be called from host

__global__ void use_global_memory_GPU(float *array)

{

// "array" is a pointer into global memory on the device

array[threadIdx.x] = 2.0f * (float) threadIdx.x;

}

/**********************

* using shared memory *

**********************/

// (for clarity, hardcoding 128 threads/elements and omitting out-of-bounds checks)

__global__ void use_shared_memory_GPU(float *array) //局部变量是一个指针,指向预先分配的全局内存

{

// local variables, private to each thread

int i, index = threadIdx.x;

float average, sum = 0.0f;

// __shared__ variables are visible to all threads in the thread block

// and have the same lifetime as the thread block

__shared__ float sh_arr[128];

// copy data from "array" in global memory to sh_arr in shared memory.

// here, each thread is responsible for copying a single element.

sh_arr[index] = array[index];

__syncthreads(); // ensure all the writes to shared memory have completed

// now, sh_arr is fully populated. Let's find the average of all previous elements

for (i=0; i<index; i++) { sum += sh_arr[i]; } //因为共享内存特别快,访问共享内存比全局内存快的多

//每个线程要访问数组中的一堆元素

average = sum / (index + 1.0f);

// if array[index] is greater than the average of array[0..index-1], replace with average.

// since array[] is in global memory, this change will be seen by the host (and potentially

// other thread blocks, if any)

if (array[index] > average) { array[index] = average; }

// the following code has NO EFFECT: it modifies shared memory, but

// the resulting modified data is never copied back to global memory

// and vanishes when the thread block completes

sh_arr[index] = 3.14; //不起作用,共享内存的寿命是线程块的寿命,一旦线程块完成了,该内存就蒸发了

}

int main(int argc, char **argv)

{

/*

* First, call a kernel that shows using local memory

*/

use_local_memory_GPU<<<1, 128>>>(2.0f);

/*

* Next, call a kernel that shows using global memory

*/

float h_arr[128]; // convention: h_ variables live on host

float *d_arr; // convention: d_ variables live on device (GPU global mem)

// allocate global memory on the device, place result in "d_arr"

cudaMalloc((void **) &d_arr, sizeof(float) * 128);

// now copy data from host memory "h_arr" to device memory "d_arr"

cudaMemcpy((void *)d_arr, (void *)h_arr, sizeof(float) * 128, cudaMemcpyHostToDevice);

// launch the kernel (1 block of 128 threads)

use_global_memory_GPU<<<1, 128>>>(d_arr); // modifies the contents of array at d_arr 共享内存

// copy the modified array back to the host, overwriting contents of h_arr

cudaMemcpy((void *)h_arr, (void *)d_arr, sizeof(float) * 128, cudaMemcpyDeviceToHost);

// ... do other stuff ...

/*

* Next, call a kernel that shows using shared memory

*/

// as before, pass in a pointer to data in global memory

use_shared_memory_GPU<<<1, 128>>>(d_arr);

// copy the modified array back to the host

cudaMemcpy((void *)h_arr, (void *)d_arr, sizeof(float) * 128, cudaMemcpyHostToDevice);

// ... do other stuff ...

return 0;

}There should be a __syncthreads() before the final line, to avoid threads that reach that line from overwriting sh_arr while other threads are still computing their averages. Thanks to all of you that have pointed this out.

30.练习:A Quize On Memory Access

31.Coalesce Memory Access

32.练习:A Quiz on Coalescing Memory Access

33.A Related Problem Part 1

问题:线程相互覆盖,所以是随机的

34.A Related Problem Part 2

35.Atomic Memory Operations

使用GPU内置的特殊硬件以执行原子运算

解决:多个线程视图同时在同一内存位置读写的冲突,把不同线程对内存的访问做到了串行化

#include <stdio.h>

#include "gputimer.h"

#define NUM_THREADS 1000000

#define ARRAY_SIZE 100

#define BLOCK_WIDTH 1000

void print_array(int *array, int size)

{

printf("{ ");

for (int i = 0; i < size; i++) { printf("%d ", array[i]); }

printf("}\n");

}

__global__ void increment_naive(int *g)

{

// which thread is this?

int i = blockIdx.x * blockDim.x + threadIdx.x;

// each thread to increment consecutive elements, wrapping at ARRAY_SIZE

i = i % ARRAY_SIZE;

g[i] = g[i] + 1;

}

__global__ void increment_atomic(int *g)

{

// which thread is this?

int i = blockIdx.x * blockDim.x + threadIdx.x;

// each thread to increment consecutive elements, wrapping at ARRAY_SIZE

i = i % ARRAY_SIZE;

atomicAdd(& g[i], 1);

}

int main(int argc,char **argv)

{

GpuTimer timer;

printf("%d total threads in %d blocks writing into %d array elements\n",

NUM_THREADS, NUM_THREADS / BLOCK_WIDTH, ARRAY_SIZE);

// declare and allocate host memory

int h_array[ARRAY_SIZE];

const int ARRAY_BYTES = ARRAY_SIZE * sizeof(int);

// declare, allocate, and zero out GPU memory

int * d_array;

cudaMalloc((void **) &d_array, ARRAY_BYTES);

cudaMemset((void *) d_array, 0, ARRAY_BYTES); //数组里面的值初始化为0

// launch the kernel - comment out one of these

timer.Start();

// increment_naive<<<NUM_THREADS/BLOCK_WIDTH, BLOCK_WIDTH>>>(d_array);

increment_atomic<<<NUM_THREADS/BLOCK_WIDTH, BLOCK_WIDTH>>>(d_array);

timer.Stop();

// copy back the array of sums from GPU and print

cudaMemcpy(h_array, d_array, ARRAY_BYTES, cudaMemcpyDeviceToHost);

print_array(h_array, ARRAY_SIZE);

printf("Time elapsed = %g ms\n", timer.Elapsed());

// free GPU memory allocation and exit

cudaFree(d_array);

return 0;

}wlsh@wlsh-ThinkStation:~/Desktop/cs344-master/Lesson Code Snippets/Lesson 2 Code Snippets$ ./a.out

1000000 total threads in 1000 blocks writing into 100 array elements

{ 32 32 32 32 31 31 31 31 33 33 33 33 32 32 32 32 32 32 32 32 33 33 33 33 34 34 34 34 32 32 32 32 32 32 32 32 30 30 30 30 30 30 30 30 31 31 31 31 31 31 31 31 32 32 32 32 31 31 31 31 31 31 31 31 34 34 34 34 31 31 31 31 32 32 32 32 34 34 34 34 33 33 33 33 33 33 33 33 32 32 32 32 31 31 31 31 31 31 31 31 }

Time elapsed = 0.454272 ms

wlsh@wlsh-ThinkStation:~/Desktop/cs344-master/Lesson Code Snippets/Lesson 2 Code Snippets$ nvcc atomics.cu

wlsh@wlsh-ThinkStation:~/Desktop/cs344-master/Lesson Code Snippets/Lesson 2 Code Snippets$ ./a.out

1000000 total threads in 1000 blocks writing into 100 array elements

{ 33 33 33 33 31 31 31 31 32 32 32 32 33 33 33 33 31 31 31 31 34 34 34 34 31 31 31 31 32 32 32 32 33 33 33 33 32 32 32 32 32 32 32 32 31 31 31 31 34 34 34 34 32 32 32 32 33 33 33 33 32 32 32 32 31 31 31 31 33 33 33 33 32 32 32 32 32 32 32 32 31 31 31 31 33 33 33 33 32 32 32 32 31 31 31 31 32 32 32 32 }

Time elapsed = 0.466592 ms

wlsh@wlsh-ThinkStation:~/Desktop/cs344-master/Lesson Code Snippets/Lesson 2 Code Snippets$ nvcc atomics.cu

wlsh@wlsh-ThinkStation:~/Desktop/cs344-master/Lesson Code Snippets/Lesson 2 Code Snippets$ ./a.out

1000000 total threads in 1000 blocks writing into 100 array elements

{ 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 10000 }

Time elapsed = 0.324992 ms

36.Limitations of Atomic Memory Operations

An example of floating point being non-associative: associative.cu

37.练习:Code For Timing Atomic Operations

代码在上面

38.练习:Let us Time some Code

39.High Arithmetic Intensity

threadIdx.是不会改变的

40.Thread Divergence

降低速度

41.Summary of Unit 2

8577

8577

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?