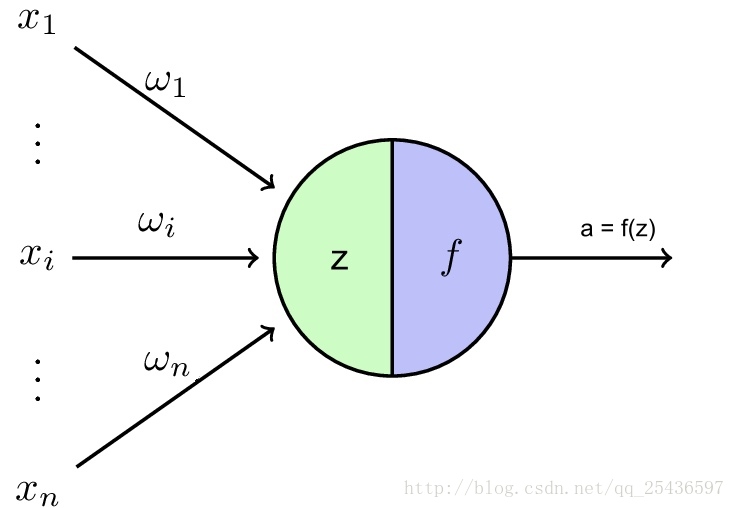

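Computational model of a neuron

Characteristics: multiple inputs, single output

Basic functions: collecting and transforming

(Image from Baidu Images, facepalm.)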

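- x1, x2, …, xn are the neuron's inputs. For example, when predicting the price of a house, the inputs could be its floor area, location, decoration, and so on.

- w1, w2, …, wn are the connection weights, i.e. how much each of the inputs above counts. For example, floor area might carry a larger weight and location a slightly smaller one.

- The neuron computes its output from the inputs and the connection weights in two steps. First, every factor has to be taken into account, so all inputs are combined into the weighted sum $z = \sum_{i=1}^{n} w_i x_i$. This sum can be very large, and it can also be negative, so an activation function f() is then applied to map it into the range we want. For example, we often want the probability that something happens, which calls for an activation function that keeps the output between 0 and 1.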
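The two steps above can be sketched in MATLAB roughly as follows. The input values and weights are made-up numbers for illustration only, and the sigmoid is just one possible choice of f.

```matlab
% Hypothetical inputs and weights (illustrative values, not from the original post)
x = [0.5; 0.2; 0.1];    % inputs x1..xn, e.g. scaled area, location, decoration
w = [0.8; 0.3; 0.1];    % connection weights w1..wn

% Step 1: collect all inputs into the net input z = sum_i w_i * x_i
z = sum(w .* x);

% Step 2: transform z with an activation function (sigmoid assumed here)
f = @(s) 1 ./ (1 + exp(-s));
y = f(z);

fprintf('z = %.4f, output = %.4f\n', z, y);
```

Whatever the size of z, the sigmoid keeps the output y strictly between 0 and 1.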

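Commonly used activation functions:

- Linear function: $f(z) = z$

- Sigmoid function: $f(z) = \frac{1}{1+e^{-z}}$. Its output always lies between 0 and 1: the larger z is, the closer the output is to 1; the smaller z is, the closer it is to 0.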

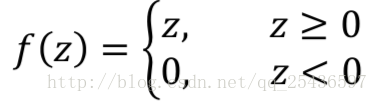

- Rectified linear unit (ReLU): $f(z) = \max(0, z)$

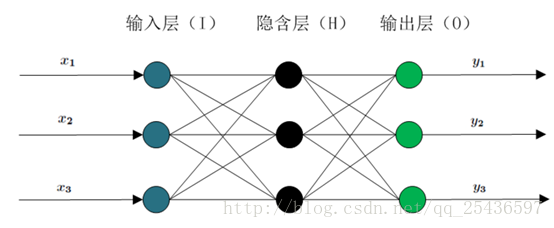
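As a quick way to compare the activation functions listed above, here is a minimal MATLAB sketch. ReLU is taken to be max(0, z), its standard definition; the sample values of z are arbitrary.

```matlab
% The three activation functions, written as anonymous functions
linear  = @(z) z;
sigmoid = @(z) 1 ./ (1 + exp(-z));
relu    = @(z) max(0, z);

z = [-2 0 2];                   % sample net inputs (arbitrary values)
out_linear  = linear(z);
out_sigmoid = sigmoid(z);
out_relu    = relu(z);

% One row per function: z, linear, sigmoid, ReLU
disp([z; out_linear; out_sigmoid; out_relu]);
```

The linear function passes z through unchanged, the sigmoid squashes it into (0, 1), and ReLU zeroes out the negative values.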

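Computational model of a neural network

Neural network = neurons + connections

That is, neurons connected to one another form a neural network.

(Image from Baidu again...) Each large dot in the figure represents one neuron.

We have already discussed how a single neuron computes its output. Now the outputs of one layer become the inputs of the next layer, and the computation proceeds forward layer by layer.

In fact, two simple formulas are enough to carry out the forward computation of the whole network:

Just as for a single neuron, first compute the z value of the current neuron, then pass it through the activation function to get the neuron's output, which is an input to the next layer.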

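$z_i^{l+1} = \sum_{j=1}^{n_l} w_{ij}^{l} a_j^{l}$

$a_i^{l+1} = f(z_i^{l+1})$

$a^l$ is a column vector with $n_l$ elements, where $n_l$ is the number of neurons in layer $l$ (that is the letter l, not the digit 1). Each element is the output of the corresponding neuron in that layer.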
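Stacking the component formula over all neurons i of layer l+1 gives an equivalent matrix form. The symbol $W^{l}$ is introduced here for illustration: it is assumed to be the matrix whose entry in row i, column j is $w_{ij}^{l}$, and f is applied element by element.

```latex
z^{l+1} = W^{l} a^{l}, \qquad a^{l+1} = f\left(z^{l+1}\right)
```

Row i of the product $W^{l} a^{l}$ is exactly the sum $\sum_{j} w_{ij}^{l} a_j^{l}$, so the two forms compute the same values.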

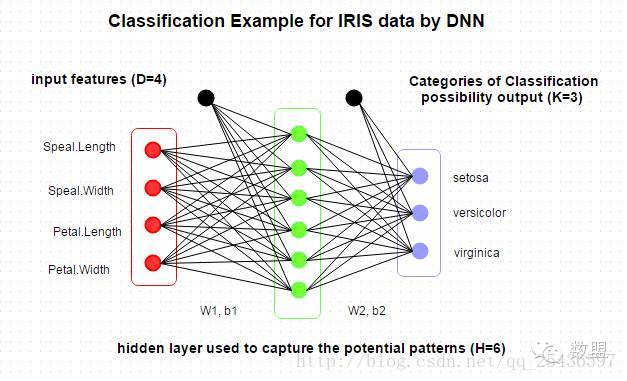

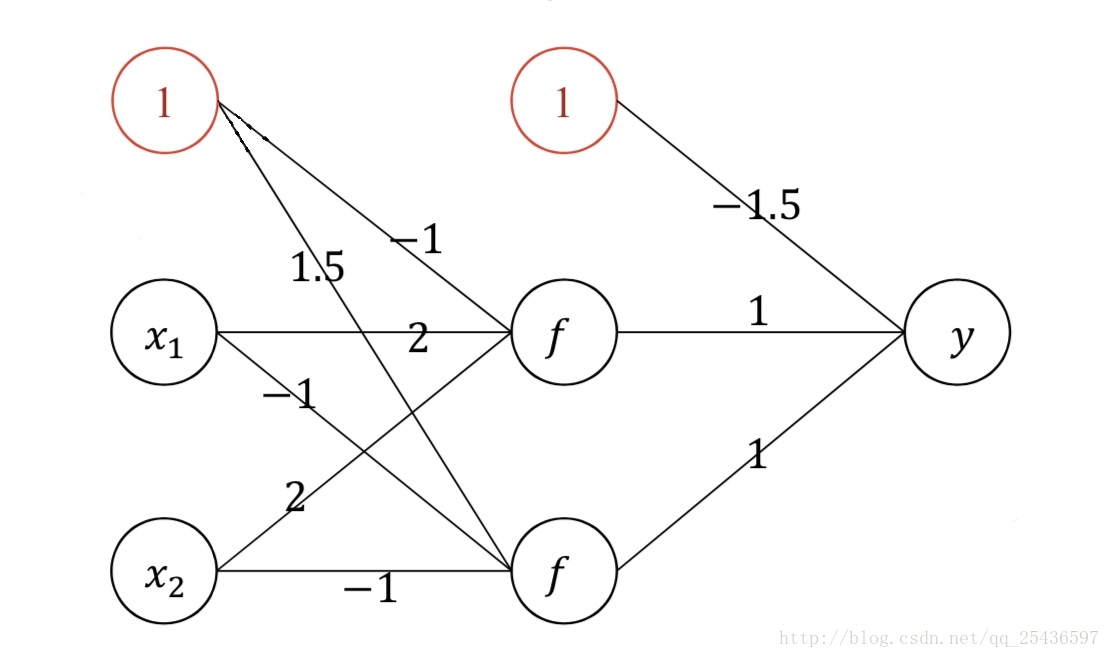

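Neural network with external inputs

The two isolated black neurons in the figure are external inputs. An external input is not connected to the neurons of the previous layer; it connects only to the neurons of the next layer and affects their results.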
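One way to read an external input is as an extra neuron whose output is always 1, so that its connection weights act as a constant offset on the next layer's net input. The sketch below checks this on a small hypothetical layer; the names W and b and all the values are illustrative assumptions.

```matlab
% Hypothetical layer: 2 neurons in layer l, 2 neurons in layer l+1
W = [2 2; -1 -1];       % weights between ordinary neurons (illustrative)
b = [-1; 1.5];          % weights on the external input (illustrative)
a = [1; 0];             % outputs of layer l

% Form 1: treat the external input as an extra neuron whose output is 1
z_ext = [W b] * [a; 1];

% Form 2: add the external input's weights as a separate constant term
z_bias = W * a + b;

disp(isequal(z_ext, z_bias));
```

Both forms give the same net input, which is why appending a 1 to the activation vector is enough to account for the external input.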

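Assignment: MATLAB implementation

Use a neural network to implement XOR. The input is two numbers, each either 0 or 1. If the two are the same (both 0 or both 1), the network outputs 0; if they differ (one is 0 and the other is 1), the network outputs 1.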
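For reference, the target behaviour can be tabulated with MATLAB's built-in xor function. This only lists the outputs the network is expected to reproduce; it is not part of the assignment code.

```matlab
% Target behaviour: XOR truth table, using MATLAB's built-in xor
inputs  = [0 0; 0 1; 1 0; 1 1];     % one input pair per row
targets = double(xor(inputs(:, 1), inputs(:, 2)));

% Columns: first input, second input, expected output
disp([inputs targets]);
```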

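The network has three layers. The first and second layers have the same structure: each contains three neurons, two ordinary (internal) ones and one external input. The last layer is the output layer and contains a single neuron, which gives the result (0/1).

The program is implemented in two forms: component form (fc_c) and vector form (fc_v).

The program skeleton is provided: link (if you want to try implementing it yourself, download the code from the link and stop reading here)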

Files

- main_c.m - the MATLAB code file for XOR-worms example. Please fill it in with the component-form implementation

- fc_c.m - the MATLAB code file for feedforward computation in component form

- main_v.m - the MATLAB code file for XOR-worms example. Please fill it in with the vector-form implementation

- fc_v.m - the MATLAB code file for feedforward computation in vector form

Instructions

Task 1: Implement the forward computation of FNN in component form

- in fc_c.m, add external inputs with value 1

- in fc_c.m, calculate net input for each neuron

- in fc_c.m, calculate activation for each neuron

- in main_c.m, calculate the activation a2, call function fc_c

- in main_c.m, calculate the activation a3, call function fc_c

Task 2: Implement the forward computation of FNN in vector form

- in fc_v.m, add external inputs with value 1

- in fc_v.m, calculate net input for all neurons

- in fc_v.m, calculate activation for all neurons

- in main_v.m, calculate the activation a2, call function fc_v

- in main_v.m, calculate the activation a3, call function fc_v

fc_c.m

function a_next = fc_c(w, a)

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% implement forward computation from layer l to layer l+1

% in component form

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% define the activation function

f = @(s) s >= 0;

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% Your code BELOW

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% 1. add external inputs with value 1

a = [a; 1];

% for each neuron located in layer l+1

z = [];

for i=1:size(w,1)

% 2. calculate net input

z(i) = 0;

for j = 1:size(w, 2) % one term per input, including the external input

z(i) = z(i) + w(i, j) * a(j);

end

% 3. calculate activation

a_next(i) = f(z(i));

end

a_next = double(a_next);

a_next = reshape(a_next, size(a_next, 2), 1);

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% Your code ABOVE

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

end

main_c.m

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% Course: Introduction to Deep Learning

%

% Lab 2 - Feedforward Computation

%

% Task 1: Implement the forward computation of FNN in component form

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% prepare the samples

data = [1 0 0 1

0 1 0 1]; % samples

labels = [1 1 0 0]; % labels

m = size(data, 2); % number of samples

% assign connection weight

w1 = [ 2 2 -1

-1 -1 1.5]; % connection from layer 1 to layer 2

w2 = [ 1 1 -1.5 ]; % connection from layer 2 to layer 3

% for each sample

for i = 1:m

x = data(:, i); % retrieve the i-th column of data

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% Your code BELOW

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% layer 1 - input layer

a1 = x;

% layer 2 - hidden layer

a2 = [];

% 1. calculate the activation a2, call function fc_c

a2 = fc_c(w1, a1);

% layer 3 - output layer

% 2. calculate the activation a3, call function fc_c

a3 = fc_c(w2, a2);

% collect result

y = a3;

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% Your code ABOVE

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% display the result

fprintf('Sample [%i %i] is classified as %i.\n', x(1), x(2), y);

end

fc_v.m

function a_next = fc_v(w, a)

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% implement forward computation from layer l to layer l+1

% in vector form

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% define the activation function

f = @(s) s >= 0;

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% Your code BELOW

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% 1. add external inputs with value 1

a = cat(1, a, 1);

% 2. calculate net input

z = w*a;

% 3. calculate activation

a_next = double(f(z)); % convert logical to double, as fc_c does

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% Your code ABOVE

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

end

main_v.m

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% Course: Introduction to Deep Learning

%

% Lab 2 - Feedforward Computation

%

% Task 2: Implement the forward computation of FNN in vector form

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% prepare the samples

data = [1 0 0 1

0 1 0 1]; % samples

labels = [1 1 0 0]; % labels

m = size(data, 2); % number of samples

% assign connection weight

w1 = [ 2 2 -1

-1 -1 1.5]; % connection from layer 1 to layer 2

w2 = [ 1 1 -1.5 ]; % connection from layer 2 to layer 3

% for each sample

for i = 1:m

x = data(:, i); % retrieve the i-th column of data

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% Your code BELOW

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% layer 1 - input layer

a1 = x;

% layer 2 - hidden layer

a2 = [];

% 1. calculate the activation a2, call function fc_v

a2 = fc_v(w1, a1);

% layer 3 - output layer

a3 = [];

% 2. calculate the activation a3, call function fc_v

a3 = fc_v(w2, a2);

% collect result

y = a3;

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% Your code ABOVE

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% display the result

fprintf('Sample [%i %i] is classified as %i.\n', x(1), x(2), y);

end
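As a cross-check on the weights used in main_v.m, the whole forward pass can also be run on all four samples at once. This is a sketch rather than part of the lab skeleton: it inlines the step activation from fc_v.m instead of calling fc_v, and appends a row of ones as the external input.

```matlab
% Same samples, labels and weights as in main_v.m
data   = [1 0 0 1; 0 1 0 1];
labels = [1 1 0 0];
w1 = [2 2 -1; -1 -1 1.5];
w2 = [1 1 -1.5];

f = @(s) double(s >= 0);            % step activation, as in fc_v.m
m = size(data, 2);                  % number of samples

% Forward pass for all m samples at once: append a row of ones as the
% external input, then multiply by the weight matrix.
a2 = f(w1 * [data; ones(1, m)]);    % hidden layer, 2-by-m
a3 = f(w2 * [a2; ones(1, m)]);      % output layer, 1-by-m

disp(isequal(a3, labels));
```

With these weights the first hidden neuron behaves like OR, the second like NAND, and the output neuron like AND of the two, which together give XOR.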
