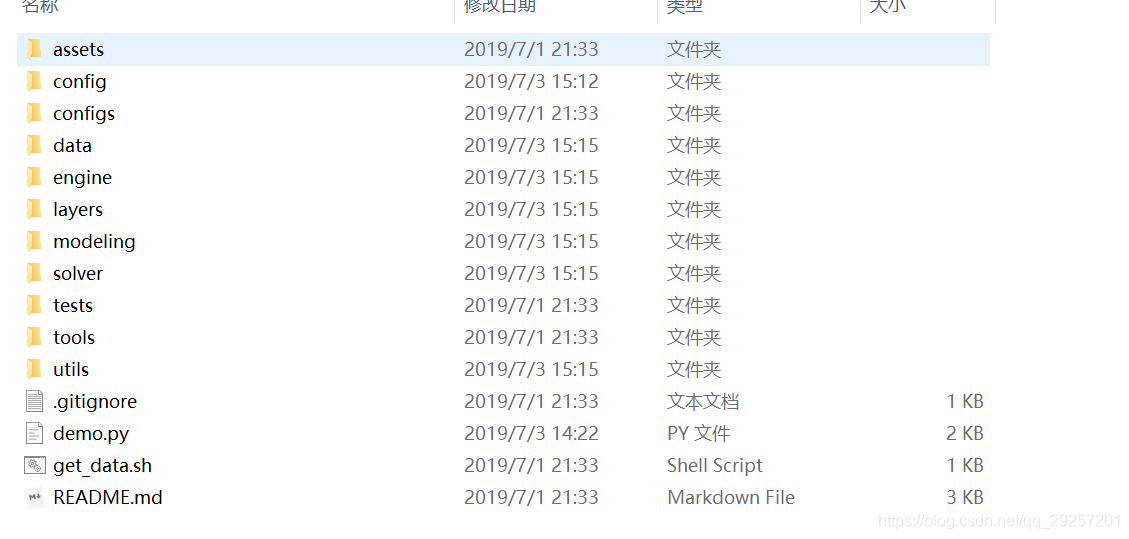

Overview

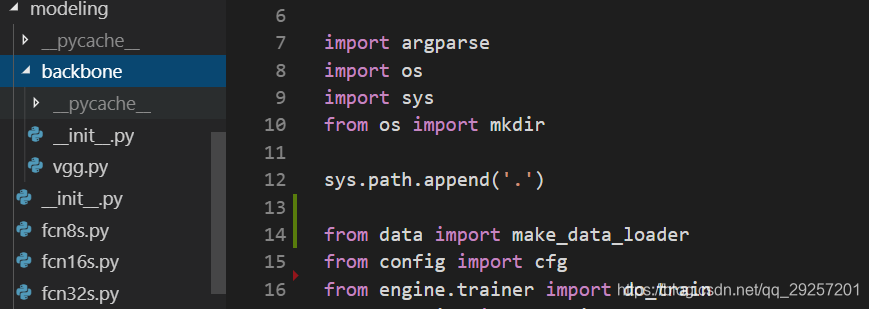

modeling

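The paper compares FCN-8s, FCN-16s and FCN-32s, and the front part of all three networks is identical to VGG16, so the shared layers are defined once in a separate backbone file.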

1. backbone

vgg.py

Not much to explain here: this is plain VGG16, written out layer by layer so that the pretrained weights can be copied into it afterwards.

    import torch
    import torchvision
    from torch import nn


    class VGG16(nn.Module):
        """Convolutional part of VGG16 (conv1_1 ... pool5), used as the FCN backbone."""

        def __init__(self):
            super(VGG16, self).__init__()
            # conv1
            # padding=100 follows the reference FCN implementation: the enlarged
            # feature maps let the upsampled output be cropped back to the input size
            self.conv1_1 = nn.Conv2d(3, 64, 3, padding=100)
            self.relu1_1 = nn.ReLU(inplace=True)
            self.conv1_2 = nn.Conv2d(64, 64, 3, padding=1)
            self.relu1_2 = nn.ReLU(inplace=True)
            self.pool1 = nn.MaxPool2d(2, stride=2, ceil_mode=True)  # 1/2

            # conv2
            self.conv2_1 = nn.Conv2d(64, 128, 3, padding=1)
            self.relu2_1 = nn.ReLU(inplace=True)
            self.conv2_2 = nn.Conv2d(128, 128, 3, padding=1)
            self.relu2_2 = nn.ReLU(inplace=True)
            self.pool2 = nn.MaxPool2d(2, stride=2, ceil_mode=True)  # 1/4

            # conv3
            self.conv3_1 = nn.Conv2d(128, 256, 3, padding=1)
            self.relu3_1 = nn.ReLU(inplace=True)
            self.conv3_2 = nn.Conv2d(256, 256, 3, padding=1)
            self.relu3_2 = nn.ReLU(inplace=True)
            self.conv3_3 = nn.Conv2d(256, 256, 3, padding=1)
            self.relu3_3 = nn.ReLU(inplace=True)
            self.pool3 = nn.MaxPool2d(2, stride=2, ceil_mode=True)  # 1/8

            # conv4
            self.conv4_1 = nn.Conv2d(256, 512, 3, padding=1)
            self.relu4_1 = nn.ReLU(inplace=True)
            self.conv4_2 = nn.Conv2d(512, 512, 3, padding=1)
            self.relu4_2 = nn.ReLU(inplace=True)
            self.conv4_3 = nn.Conv2d(512, 512, 3, padding=1)
            self.relu4_3 = nn.ReLU(inplace=True)
            self.pool4 = nn.MaxPool2d(2, stride=2, ceil_mode=True)  # 1/16

            # conv5
            self.conv5_1 = nn.Conv2d(512, 512, 3, padding=1)
            self.relu5_1 = nn.ReLU(inplace=True)
            self.conv5_2 = nn.Conv2d(512, 512, 3, padding=1)
            self.relu5_2 = nn.ReLU(inplace=True)
            self.conv5_3 = nn.Conv2d(512, 512, 3, padding=1)
            self.relu5_3 = nn.ReLU(inplace=True)
            self.pool5 = nn.MaxPool2d(2, stride=2, ceil_mode=True)  # 1/32

        def forward(self, x):
            x = self.relu1_1(self.conv1_1(x))
            x = self.relu1_2(self.conv1_2(x))
            x = self.pool1(x)  # 1/2

            x = self.relu2_1(self.conv2_1(x))
            x = self.relu2_2(self.conv2_2(x))
            x = self.pool2(x)  # 1/4

            x = self.relu3_1(self.conv3_1(x))
            x = self.relu3_2(self.conv3_2(x))
            x = self.relu3_3(self.conv3_3(x))
            x = self.pool3(x)  # 1/8

            x = self.relu4_1(self.conv4_1(x))
            x = self.relu4_2(self.conv4_2(x))
            x = self.relu4_3(self.conv4_3(x))
            x = self.pool4(x)  # 1/16

            x = self.relu5_1(self.conv5_1(x))
            x = self.relu5_2(self.conv5_2(x))
            x = self.relu5_3(self.conv5_3(x))
            x = self.pool5(x)  # 1/32
            return x


    def pretrained_vgg(cfg):
        # Build torchvision's VGG16 and load the weight file named in the config
        model = torchvision.models.vgg16(pretrained=False)
        model.load_state_dict(torch.load(cfg.MODEL.BACKBONE.WEIGHT))
        return model
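To see why the hand-written `VGG16` can later be matched layer by layer against torchvision's model, it helps to expand torchvision's VGG16 layer configuration into a flat list. The sketch below is only an illustration: `VGG16_CFG` reproduces the standard VGG16 ("D") configuration, and `expand_layout` is a hypothetical helper that names each module the way the class above does.

```python
# Layer configuration of VGG16 ("D" configuration):
# integers are conv output channels, "M" marks a 2x2 max-pool.
VGG16_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
             512, 512, 512, "M", 512, 512, 512, "M"]


def expand_layout(cfg):
    """Expand the configuration into the flat conv/relu/pool module order."""
    layout = []
    block, idx = 1, 1
    for v in cfg:
        if v == "M":
            layout.append(f"pool{block}")
            block, idx = block + 1, 1   # next block, restart conv numbering
        else:
            layout.append(f"conv{block}_{idx}")
            layout.append(f"relu{block}_{idx}")
            idx += 1
    return layout


layout = expand_layout(VGG16_CFG)
print(len(layout))    # 31 modules: 13 conv + 13 relu + 5 pool
print(layout[:5])     # ['conv1_1', 'relu1_1', 'conv1_2', 'relu1_2', 'pool1']
```

The resulting names are exactly the attributes defined in `VGG16.__init__`, in the same order, which is what makes a position-by-position weight copy possible.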

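__init__.py

    from .vgg import VGG16


    def build_backbone(cfg):
        if cfg.MODEL.BACKBONE.NAME == 'vgg16':
            return VGG16()
        # fail loudly instead of hitting an undefined variable
        raise ValueError('unsupported backbone: {}'.format(cfg.MODEL.BACKBONE.NAME))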

2. fcn32s.py

Network structure and initialization

    from torch import nn

    from layers.bilinear_upsample import bilinear_upsampling
    from layers.conv_layer import conv_layer
    from .backbone import build_backbone


    class FCN32s(nn.Module):
        def __init__(self, cfg):
            super(FCN32s, self).__init__()
            self.backbone = build_backbone(cfg)  # feature extractor (VGG16 up to pool5)
            num_classes = cfg.MODEL.NUM_CLASSES

            # fc6 / fc7 of VGG16 rewritten as convolutions
            self.fc1 = conv_layer(512, 4096, 7)
            self.relu1 = nn.ReLU(inplace=True)
            self.drop1 = nn.Dropout2d()

            self.fc2 = conv_layer(4096, 4096, 1)
            self.relu2 = nn.ReLU(inplace=True)
            self.drop2 = nn.Dropout2d()

            # per-class score map
            self.score_fr = conv_layer(4096, num_classes, 1)

            # upsample by a factor of 32 -> [N, num_classes, H, W]
            self.upscore = bilinear_upsampling(num_classes, num_classes, 64, stride=32,
                                               bias=False)

        def forward(self, x):
            _, _, h, w = x.size()
            x = self.backbone(x)

            x = self.relu1(self.fc1(x))
            x = self.drop1(x)

            x = self.relu2(self.fc2(x))
            x = self.drop2(x)

            x = self.score_fr(x)
            x = self.upscore(x)

            # crop back to the input size; the offset 19 compensates for padding=100
            x = x[:, :, 19:19 + h, 19:19 + w].contiguous()
            return x

        def copy_params_from_vgg16(self, vgg16):
            feat = self.backbone
            # same order as torchvision's vgg16.features
            features = [
                feat.conv1_1, feat.relu1_1,
                feat.conv1_2, feat.relu1_2,
                feat.pool1,
                feat.conv2_1, feat.relu2_1,
                feat.conv2_2, feat.relu2_2,
                feat.pool2,
                feat.conv3_1, feat.relu3_1,
                feat.conv3_2, feat.relu3_2,
                feat.conv3_3, feat.relu3_3,
                feat.pool3,
                feat.conv4_1, feat.relu4_1,
                feat.conv4_2, feat.relu4_2,
                feat.conv4_3, feat.relu4_3,
                feat.pool4,
                feat.conv5_1, feat.relu5_1,
                feat.conv5_2, feat.relu5_2,
                feat.conv5_3, feat.relu5_3,
                feat.pool5
            ]
            # copy the convolution weights position by position
            for l1, l2 in zip(vgg16.features, features):
                if isinstance(l1, nn.Conv2d) and isinstance(l2, nn.Conv2d):
                    assert l1.weight.size() == l2.weight.size()
                    assert l1.bias.size() == l2.bias.size()
                    l2.weight.data.copy_(l1.weight.data)
                    l2.bias.data.copy_(l1.bias.data)
            # classifier[0] and classifier[3] are the first two Linear layers;
            # their weights are reshaped into the convolutional fc1 / fc2
            for i, name in zip([0, 3], ['fc1', 'fc2']):
                l1 = vgg16.classifier[i]
                l2 = getattr(self, name)
                l2.weight.data.copy_(l1.weight.data.view(l2.weight.size()))
                l2.bias.data.copy_(l1.bias.data.view(l2.bias.size()))
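The fixed crop `19:19 + h` in `forward` is easier to trust after tracing the spatial size through the network. The sketch below does that arithmetic in plain Python. It rests on two assumptions that the post does not show: that `conv_layer(512, 4096, 7)` is an unpadded 7x7 convolution, and that `bilinear_upsampling(..., 64, stride=32)` is an unpadded transposed convolution. The helper names (`conv_out`, `pool_out`, `deconv_out`, `fcn32s_sizes`) are made up for this illustration.

```python
import math


def conv_out(size, kernel, padding=0, stride=1):
    """Output size of a convolution along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size):
    """2x2 max-pool with stride 2 and ceil_mode=True."""
    return math.ceil(size / 2)


def deconv_out(size, kernel, stride):
    """Output size of a transposed convolution without padding."""
    return (size - 1) * stride + kernel


def fcn32s_sizes(h):
    """Return (backbone output, fc1 output, upscore output) for input size h."""
    # conv1_1 uses padding=100; every other 3x3 conv uses padding=1 and keeps the size
    size = conv_out(h, 3, padding=100)
    for _ in range(5):                      # pool1 ... pool5
        size = pool_out(size)
    backbone = size
    fc = conv_out(backbone, 7)              # fc1, assumed unpadded
    upscored = deconv_out(fc, 64, 32)       # upscore, assumed unpadded
    return backbone, fc, upscored


print(fcn32s_sizes(224))   # (14, 8, 288)
print(fcn32s_sizes(500))   # (22, 16, 544)

# The reshape in copy_params_from_vgg16 works because the element counts agree:
print(512 * 7 * 7)         # 25088, the input width of vgg16.classifier[0]
```

Under these assumptions the upsampled map is always at least `h + 38` wide, so the window `19:19 + h` fits inside it for any input size.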

3. __init__.py

    import torch

    from .backbone.vgg import pretrained_vgg
    from .fcn16s import FCN16s
    from .fcn32s import FCN32s
    from .fcn8s import FCN8s

    _FCN_META_ARCHITECTURE = {'fcn32s': FCN32s,
                              'fcn16s': FCN16s,
                              'fcn8s': FCN8s}


    def build_fcn_model(cfg):
        meta_arch = _FCN_META_ARCHITECTURE[cfg.MODEL.META_ARCHITECTURE]
        model = meta_arch(cfg)

        # initialize the backbone from pretrained VGG16 weights
        if cfg.MODEL.BACKBONE.PRETRAINED:
            vgg16 = pretrained_vgg(cfg)
            model.copy_params_from_vgg16(vgg16)

        # initialize a finer model from a trained coarser one
        if cfg.MODEL.REFINEMENT.NAME == 'fcn32s':
            fcn32s = FCN32s(cfg)
            fcn32s.load_state_dict(torch.load(cfg.MODEL.REFINEMENT.WEIGHT))
            model.copy_params_from_fcn32s(fcn32s)
        elif cfg.MODEL.REFINEMENT.NAME == 'fcn16s':
            fcn16s = FCN16s(cfg)
            fcn16s.load_state_dict(torch.load(cfg.MODEL.REFINEMENT.WEIGHT))
            model.copy_params_from_fcn16s(fcn16s)
        return model
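The builder is driven entirely by the config object, so it may help to see which fields it reads and which initialization steps follow from them. The sketch below mirrors the control flow of `build_fcn_model` without importing torch. All values (the paths, `NUM_CLASSES=21`) are hypothetical placeholders, `SimpleNamespace` merely stands in for whatever config class the project uses, and `init_plan` is a made-up helper. It also assumes the refinement branch sits at function level rather than inside the pretrained-backbone branch.

```python
from types import SimpleNamespace

# Hypothetical configuration values; only the field names come from the code above.
cfg = SimpleNamespace(MODEL=SimpleNamespace(
    META_ARCHITECTURE="fcn16s",
    NUM_CLASSES=21,
    BACKBONE=SimpleNamespace(NAME="vgg16", PRETRAINED=False,
                             WEIGHT="path/to/vgg16.pth"),
    REFINEMENT=SimpleNamespace(NAME="fcn32s", WEIGHT="path/to/fcn32s.pth"),
))


def init_plan(cfg):
    """List, in order, the initialization steps build_fcn_model would perform."""
    steps = ["build " + cfg.MODEL.META_ARCHITECTURE]
    if cfg.MODEL.BACKBONE.PRETRAINED:
        steps.append("copy_params_from_vgg16")
    if cfg.MODEL.REFINEMENT.NAME == "fcn32s":
        steps.append("copy_params_from_fcn32s")
    elif cfg.MODEL.REFINEMENT.NAME == "fcn16s":
        steps.append("copy_params_from_fcn16s")
    return steps


print(init_plan(cfg))   # ['build fcn16s', 'copy_params_from_fcn32s']
```

With this configuration an FCN-16s is built and initialized from a trained FCN-32s, which matches the staged training used for the comparison experiments.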

Summary

In the main script, import this function:

    from modeling import build_fcn_model
