ros-by-example

- Background

- 4. Networking Between a Robot and a Desktop Computer

- 4.1 Time Synchronization

- 4.2 ROS Networking using Zeroconf

- 4.3 Testing Connectivity

- 4.4 Setting the ROS_MASTER_URI and ROS_HOSTNAME Variables

- 4.5 Opening New Terminals

- 4.6 Running Nodes on both Machines

- 4.7 ROS Networking across the Internet

- 4.8 ROS Recap

- 4.9 What is a ROS Application

- 4.10 Installing Packages with SVN, Git, and Mercurial

- 4.11 Removing Packages from your Personal catkin Directory

- 4.12 How to Find Third-Party ROS Packages

- 5. INSTALLING THE ROS-BY-EXAMPLE CODE

- 6. INSTALLING THE ARBOTIX SIMULATOR

- 7. CONTROLLING A MOBILE BASE

Background

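These are my notes on several chapters of rbx1 (ROS by Example, Volume 1). I originally meant to learn ROS from this book. However, its examples are about navigation and SLAM, and I wanted ROS examples for robotic-arm grasping. So partway through I decided to switch to learning MoveIt!.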

4. Networking Between a Robot and a Desktop Computer

This chapter covers how to configure the network connection between a laptop/desktop computer and the robot.

4.1 Time Synchronization

To synchronize the clocks of the computer and the robot, install chrony on both machines:

$ sudo apt-get install chrony

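chrony keeps each machine's clock aligned with Internet time servers. As a result, every machine running it stays aligned with every other.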
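NTP clients such as chrony estimate how far the local clock is off by exchanging timestamped request/response packets with a server. The sketch below shows the standard NTP offset and round-trip delay formulas for one exchange. It illustrates the idea only and is not chrony's actual code. The timestamps in the example are made up.

```python
def ntp_offset_delay(t0, t1, t2, t3):
    """Classic NTP clock-offset estimate from one request/response exchange.

    t0: client send time     (client clock)
    t1: server receive time  (server clock)
    t2: server send time     (server clock)
    t3: client receive time  (client clock)
    Returns (offset, delay); offset > 0 means the client clock is behind.
    """
    offset = ((t1 - t0) + (t2 - t3)) / 2.0
    delay = (t3 - t0) - (t2 - t1)  # round trip minus server processing time
    return offset, delay


if __name__ == "__main__":
    # Hypothetical exchange: the client clock runs 5 s behind the server
    # and each direction of the network path takes 1 s.
    offset, delay = ntp_offset_delay(100.0, 106.0, 106.5, 102.5)
    print(f"offset = {offset} s, round-trip delay = {delay} s")
    # prints "offset = 5.0 s, round-trip delay = 2.0 s"
```

The formula assumes the outbound and return paths take equal time. Asymmetric delays show up directly as offset error.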

4.2 ROS Networking using Zeroconf

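Ubuntu supports Zeroconf, so computers and robots on the same local network can address each other by hostname instead of by IP address. Check a machine's hostname with the command below. Its Zeroconf name is that hostname followed by .local: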

$ hostname

my_desktop_name

4.3 Testing Connectivity

From the desktop, run the following command to check that the robot is reachable:

$ ping my_robot.local

Pinging the desktop from the robot works the same way (log into the robot with ssh first):

$ ping my_desktop.local

Note: if ping reports an "unknown host" error, try restarting avahi-daemon:

$ sudo service avahi-daemon restart
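The same check can be scripted. The sketch below builds a machine's Zeroconf name from its hostname and resolves a name with the system resolver. An unknown name raises `socket.gaierror`, which is roughly Python's equivalent of ping's "unknown host". This rests on an assumption: resolving `.local` names from Python only works if the system resolver is configured for mDNS (on Ubuntu this is normally done by the libnss-mdns package). The helper names are hypothetical.

```python
import socket


def zeroconf_name(hostname=None):
    """Return the Zeroconf (mDNS) name for a host: '<hostname>.local'."""
    if hostname is None:
        hostname = socket.gethostname()
    # If the hostname already carries a domain, keep only the first label.
    return hostname.split(".")[0] + ".local"


def resolve(name):
    """Return the sorted list of addresses a name resolves to.

    Raises socket.gaierror if the name is unknown.
    """
    infos = socket.getaddrinfo(name, None)
    return sorted({info[4][0] for info in infos})


if __name__ == "__main__":
    print("this machine's Zeroconf name:", zeroconf_name())
    try:
        print("my_robot.local ->", resolve("my_robot.local"))
    except socket.gaierror as err:
        print("could not resolve my_robot.local:", err)
```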

4.4 Setting the ROS_MASTER_URI and ROS_HOSTNAME Variables

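In a ROS network, one machine is chosen as the ROS master, and roscore runs only on that machine. Every other machine sets its ROS_MASTER_URI environment variable to point to the master host. In general any machine can be the master. We prefer the robot, so that it does not depend on the desktop.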

#on the robot

#set the ROS_HOSTNAME to its Zeroconf name

$ export ROS_HOSTNAME=my_robot.local

$ roscore  # run the master

#on the desktop

$ export ROS_HOSTNAME=my_desktop.local

#set the ROS_MASTER_URI environment variable to point to the master host

$ export ROS_MASTER_URI=http://my_robot.local:11311

#on the desktop

# synchronize the desktop's clock with the robot's

$ sudo ntpdate -b my_robot.local

#on the desktop

$ rostopic list

# if everything works, you should see these two topics:

#/rosout

#/rosout_agg
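ROS_MASTER_URI is an http URL that names the master host and port, and 11311 is roscore's default port. The hypothetical helper below parses the URI into its parts, which can be handy when writing a sanity-check script. rospy and roscpp parse the variable themselves, so nothing here reflects their internals.

```python
import os
from urllib.parse import urlparse

DEFAULT_MASTER_PORT = 11311  # roscore's default port


def parse_master_uri(uri):
    """Split a ROS_MASTER_URI such as 'http://my_robot.local:11311'
    into (host, port), falling back to the default port."""
    parsed = urlparse(uri)
    if parsed.scheme != "http" or not parsed.hostname:
        raise ValueError(f"not a valid ROS_MASTER_URI: {uri!r}")
    return parsed.hostname, parsed.port or DEFAULT_MASTER_PORT


if __name__ == "__main__":
    uri = os.environ.get("ROS_MASTER_URI", "http://localhost:11311")
    host, port = parse_master_uri(uri)
    print(f"master host = {host}, port = {port}")
```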

4.5 Opening New Terminals

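Whenever you open a new terminal, you must set ROS_HOSTNAME to that machine's Zeroconf name. On every non-master machine, you must also set ROS_MASTER_URI. If you keep using the same machines for a while, add these export lines to ~/.bashrc so they run automatically in every new terminal.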
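The rule above can be captured in a small, hypothetical helper that produces the lines to append to ~/.bashrc on one machine. Every machine gets ROS_HOSTNAME. Only non-master machines also get ROS_MASTER_URI. The hostnames are the placeholders used in this chapter.

```python
def ros_network_exports(hostname, master_host=None, port=11311):
    """Return the export lines for ~/.bashrc on one machine.

    hostname:    this machine's Zeroconf name, e.g. 'my_desktop.local'
    master_host: the master's Zeroconf name, or None if this machine
                 is the master (runs roscore itself)
    """
    lines = [f"export ROS_HOSTNAME={hostname}"]
    if master_host is not None:
        # Only non-master machines need to be told where the master is.
        lines.append(f"export ROS_MASTER_URI=http://{master_host}:{port}")
    return lines


if __name__ == "__main__":
    # Desktop (not the master) pointing at the robot.
    print("\n".join(ros_network_exports("my_desktop.local", "my_robot.local")))
```

Running it prints the two export lines for the desktop, which can then be appended to that machine's ~/.bashrc.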

4.6 Running Nodes on both Machines

Once the machines are connected, you can start launching nodes. Every connected machine can see all topics and services.

# on the desktop

$ ssh my_robot.local

# on the robot (via ssh)

$ export ROS_HOSTNAME=my_robot.local

$ roscore &  # the & returns the prompt, so we can launch the robot's startup file without opening another ssh session

$ roslaunch my_robot startup.launch

#on the desktop

$ export ROS_HOSTNAME=my_desktop.local

$ export ROS_MASTER_URI=http://my_robot.local:11311

$ rosrun rviz rviz -d `rospack find rbx1_nav`/nav.rviz

4.7 ROS Networking across the Internet

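Networking across the Internet works much like Zeroconf networking. The difference is that machines refer to each other by fully qualified hostnames or IP addresses instead of .local names.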

4.8 ROS Recap

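The key concept is the node: a program written in C++ or Python. A node can publish messages on particular topics and can also provide services to other nodes.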
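The difference between the two communication styles can be shown with a toy, in-process stand-in for the ROS graph. This is NOT rospy and has none of its networking. A topic delivers each published message to every subscriber (one-to-many). A service answers each request with one response (one-to-one). The class, topic, and service names are hypothetical.

```python
from collections import defaultdict


class ToyGraph:
    """Toy model of ROS topics and services inside one Python process."""

    def __init__(self):
        self._subscribers = defaultdict(list)  # topic name -> callbacks
        self._services = {}                    # service name -> handler

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)

    def publish(self, topic, msg):
        # One-to-many: every subscriber of the topic receives the message.
        for callback in self._subscribers[topic]:
            callback(msg)

    def advertise_service(self, name, handler):
        self._services[name] = handler

    def call_service(self, name, request):
        # One-to-one request/response.
        return self._services[name](request)


if __name__ == "__main__":
    graph = ToyGraph()
    received = []
    graph.subscribe("/chatter", received.append)
    graph.publish("/chatter", "hello")
    graph.advertise_service("/add_two_ints", lambda req: req[0] + req[1])
    print(received, graph.call_service("/add_two_ints", (2, 3)))
```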

4.9 What is a ROS Application

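A robot based on an Arduino or STM32 is usually programmed in C, and that program controls the hardware directly to produce the robot's behavior. ROS instead splits behaviors into independent nodes that communicate with each other. Once you are used to this style, much node code can be reused in other applications. For example, the nodes of the TurtleBot follower application can be reused on other robots with a depth camera and a mobile base. ROS is also distributed: computation-heavy nodes can run on a PC while low-computation, low-level behaviors run on the robot. As long as the machines are on the same network, they can communicate.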

4.10 Installing Packages with SVN, Git, and Mercurial

$ sudo apt-get install git subversion mercurial

There are two common operations: the initial download of a package and fetching later updates. The commands differ among the three systems.

SVN

#To do the initial checkout and build the package in your personal catkin directory, run the following commands.

$ cd ~/catkin_ws/src

$ svn checkout http://repository/svn/package_name

$ cd ~/catkin_ws

$ catkin_make

$ source devel/setup.bash

$ rospack profile

#To update the package later on, run the commands

$ cd ~/catkin_ws/src/package_name

$ svn update

$ cd ~/catkin_ws

$ catkin_make

$ source devel/setup.bash

Git

$ cd ~/catkin_ws/src

$ git clone git://repository/package_name

$ cd ~/catkin_ws

$ catkin_make

$ source devel/setup.bash

$ rospack profile

$ cd ~/catkin_ws/src/package_name

$ git pull

$ cd ~/catkin_ws

$ catkin_make

$ source devel/setup.bash

Mercurial

$ cd ~/catkin_ws/src

$ hg clone http://repository/package_name

$ cd ~/catkin_ws

$ catkin_make

$ source devel/setup.bash

$ rospack profile

$ cd ~/catkin_ws/src/package_name

$ hg pull

$ hg update

$ cd ~/catkin_ws

$ catkin_make

$ source devel/setup.bash
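Because the update command depends on which system a package was checked out with, a hypothetical helper can pick it by looking for the `.git`, `.svn`, or `.hg` metadata directory at the top of the package. For Mercurial it uses `hg pull -u`, which pulls and updates in one step. The script only prints the commands. Running them (for example with `subprocess.run(cmd, cwd=path)`) and then re-running catkin_make is left to you.

```python
import os

# Update command for each version-control system, keyed by its metadata dir.
UPDATE_COMMANDS = {
    ".git": ["git", "pull"],
    ".svn": ["svn", "update"],
    ".hg": ["hg", "pull", "-u"],
}


def update_command(package_dir):
    """Return the update command for a checked-out package, chosen by
    looking for the VCS metadata directory at its top level."""
    for marker, command in UPDATE_COMMANDS.items():
        if os.path.isdir(os.path.join(package_dir, marker)):
            return command
    raise ValueError(f"no .git, .svn or .hg directory in {package_dir}")


if __name__ == "__main__":
    src = os.path.expanduser("~/catkin_ws/src")
    for name in (sorted(os.listdir(src)) if os.path.isdir(src) else []):
        path = os.path.join(src, name)
        try:
            print(f"{name}: {' '.join(update_command(path))}")
        except ValueError:
            pass  # not a VCS checkout (e.g. the top-level CMakeLists.txt)
```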

4.11 Removing Packages from your Personal catkin Directory

First, delete the package from ~/catkin_ws/src:

$ cd ~/catkin_ws/src

$ \rm -rf my_catkin_package

Then delete the catkin build objects for all packages and run catkin_make again:

$ cd ~/catkin_ws

$ \rm -rf devel build install

$ catkin_make

$ source devel/setup.bash

# verify that the package has been removed

$ roscd my_catkin_package

# roscd: No such package 'my_catkin_package'

4.12 How to Find Third-Party ROS Packages

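- The ROS Wiki lists many ROS packages and stacks. You can browse the index or search by keyword in the search box.

- You can also search from the command line:

$ roslocate uri ros_arduino_bridge

- Browse Software: the wiki's Browse Software page lists all indexed ROS packages, stacks, and repositories.

- A Google search.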

5. INSTALLING THE ROS-BY-EXAMPLE CODE

5.1 Installing the Prerequisites

$ sudo apt-get install ros-kinetic-turtlebot-bringup \
ros-kinetic-turtlebot-create-desktop ros-kinetic-openni-* \
ros-kinetic-openni2-* ros-kinetic-freenect-* ros-kinetic-usb-cam \
ros-kinetic-laser-* ros-kinetic-hokuyo-node \
ros-kinetic-audio-common gstreamer0.10-pocketsphinx \
ros-kinetic-pocketsphinx ros-kinetic-slam-gmapping \
ros-kinetic-joystick-drivers python-rosinstall \
ros-kinetic-orocos-kdl ros-kinetic-python-orocos-kdl \
python-setuptools ros-kinetic-dynamixel-motor-* \
libopencv-dev python-opencv ros-kinetic-vision-opencv \
ros-kinetic-depthimage-to-laserscan ros-kinetic-arbotix-* \
ros-kinetic-turtlebot-teleop ros-kinetic-move-base \
ros-kinetic-map-server ros-kinetic-fake-localization \
ros-kinetic-amcl git subversion mercurial

5.2 Cloning the rbx1 Repository for the First Time

$ cd ~/catkin_ws/src

$ git clone https://github.com/pirobot/rbx1.git

$ cd ~/catkin_ws

$ catkin_make

$ source devel/setup.bash

Run the following to make sure rbx1 is up to date:

$ cd ~/catkin_ws/src/rbx1

$ git pull

$ cd ~/catkin_ws

$ catkin_make

6. INSTALLING THE ARBOTIX SIMULATOR

The ArbotiX package provides a simulator that we can use to test code without a real robot.

6.1 Installing the Simulator

Method 1:

$ sudo apt-get install ros-kinetic-arbotix-*

Method 2, install from the Git source:

$ cd ~/catkin_ws/src

$ git clone https://github.com/vanadiumlabs/arbotix_ros.git

$ cd ..

$ catkin_make

6.2 Testing the Simulator

Launch the simulated TurtleBot:

$ roslaunch rbx1_bringup fake_turtlebot.launch

# the output should look like this:

process[arbotix-1]: started with pid [23668]

process[robot_state_publisher-2]: started with pid [23669]

[INFO] [1567686794.847364]: ArbotiX being simulated.

[INFO] [1567686794.883537]: Started DiffController (base_controller). Geometry: 0.26m wide, 4100.0 ticks/m.

To use the Pi Robot model instead, run:

$ roslaunch rbx1_bringup fake_pi_robot.launch

Next, launch RViz to watch the simulated robot move:

# a small trick: use rospack find to get the package path

$ rosrun rviz rviz -d `rospack find rbx1_nav`/sim.rviz

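Publish a Twist message from a terminal and watch the simulated robot move. With a positive angular z, it should circle counterclockwise:

$ rostopic pub -r 10 /cmd_vel geometry_msgs/Twist '{linear: {x: 0.2, y: 0, z: 0}, angular: {x: 0, y: 0, z: 0.5}}'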
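Why a circle? A constant linear speed v with a constant angular speed ω moves a planar robot along a circle of radius v/ω. For the command above that is 0.2 / 0.5 = 0.4 m, covered counterclockwise because ω > 0. The sketch below integrates the ideal unicycle model with an exact arc update per step. It ignores the simulator's acceleration limits, so it is an idealization, not what ArbotiX computes internally.

```python
import math


def integrate_twist(v, w, duration, steps=1000, pose=(0.0, 0.0, 0.0)):
    """Integrate a constant Twist (linear.x = v, angular.z = w) for a
    planar robot starting at pose (x, y, theta); return the final pose.

    Each step uses the exact arc update, so the result does not depend
    on the number of steps (apart from floating-point rounding).
    """
    x, y, th = pose
    dt = duration / steps
    for _ in range(steps):
        if abs(w) < 1e-12:                 # straight line
            x += v * dt * math.cos(th)
            y += v * dt * math.sin(th)
        else:                              # arc of radius v / w
            x += v / w * (math.sin(th + w * dt) - math.sin(th))
            y -= v / w * (math.cos(th + w * dt) - math.cos(th))
        th += w * dt
    return x, y, th


if __name__ == "__main__":
    v, w = 0.2, 0.5   # the values published above
    print(f"radius = {v / w:.2f} m, one lap takes {2 * math.pi / w:.1f} s")
    print("pose after half a lap:", integrate_twist(v, w, math.pi / w))
```

After half a lap (π/ω seconds) the robot ends up 0.8 m to its original left, facing backwards.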

To stop the robot, press Ctrl+C and then publish an empty Twist message:

$ rostopic pub -1 /cmd_vel geometry_msgs/Twist '{}'

6.3 Running the Simulator with Your Own Robot

If you have a URDF model of your own robot, you can run it in the ArbotiX simulator just like the TurtleBot and Pi Robot.

7. CONTROLLING A MOBILE BASE

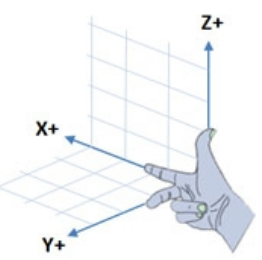

7.1 Units and Coordinate Systems

ROS uses a right-handed coordinate system for robots.

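The robot's forward direction is +x and its left is +y. A positive rotation about the z axis turns the robot counterclockwise. ROS uses metric units, so linear and angular velocities are given in m/s and rad/s. For an indoor robot, 0.2 m/s is a reasonable speed.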
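These conventions determine how a point seen in the robot's frame (base_link: +x forward, +y left) maps into a fixed frame such as odom. The robot's heading θ is measured counterclockwise from odom's +x axis. The sketch below is the standard 2D rotation plus translation under those conventions. The function name is hypothetical; in a real system tf does this for you.

```python
import math


def base_to_odom(robot_pose, point_in_base):
    """Transform a point from the robot frame (+x forward, +y left) into
    the odom frame, given the robot pose (x, y, theta) in odom."""
    rx, ry, th = robot_pose
    px, py = point_in_base
    c, s = math.cos(th), math.sin(th)
    # Right-handed 2D rotation (counterclockwise positive), then translation.
    return rx + c * px - s * py, ry + s * px + c * py


if __name__ == "__main__":
    # Robot at (1, 0) that has turned 90 degrees to the left (counterclockwise).
    pose = (1.0, 0.0, math.radians(90))
    print("1 m ahead of the robot:", base_to_odom(pose, (1.0, 0.0)))
    print("1 m to its left:       ", base_to_odom(pose, (0.0, 1.0)))
```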

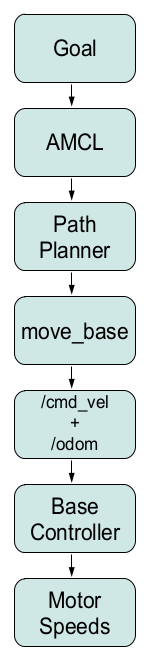

7.2 Levels of Motion Control

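Motion control has many levels, each representing a different degree of abstraction. Here we go from controlling the motors directly all the way up to path planning and SLAM.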

Motors, Wheels, and Encoders

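Most differential-drive mobile robots have an encoder on each wheel. Knowing the wheel diameter and the distance between the wheels, encoder ticks can be converted into distance traveled in meters or angle rotated in radians. This internal motion data is called odometry. Depending on the environment and the source of the odometry, the robot's actual position and motion may differ from what the odometry reports. This is one reason SLAM is used to estimate the robot's motion.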
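The conversion described above can be sketched for a differential-drive robot. Each wheel's tick increment becomes a distance via the wheel circumference. The robot's center moves by the average of the two distances and turns by their difference divided by the wheel separation. The pose update uses the common midpoint-heading approximation. The wheel diameter and ticks per revolution in the example are hypothetical; the 0.26 m wheel base matches the simulator log above.

```python
import math


def ticks_to_motion(d_left_ticks, d_right_ticks, wheel_diameter,
                    ticks_per_rev, wheel_base):
    """Convert encoder tick increments into (distance, rotation).

    distance: meters travelled by the robot's center
    rotation: radians turned (counterclockwise positive)
    """
    meters_per_tick = math.pi * wheel_diameter / ticks_per_rev
    d_left = d_left_ticks * meters_per_tick
    d_right = d_right_ticks * meters_per_tick
    distance = (d_left + d_right) / 2.0
    rotation = (d_right - d_left) / wheel_base
    return distance, rotation


def update_pose(pose, distance, rotation):
    """Dead-reckon a new (x, y, theta) using the midpoint heading."""
    x, y, th = pose
    mid = th + rotation / 2.0
    return (x + distance * math.cos(mid),
            y + distance * math.sin(mid),
            th + rotation)


if __name__ == "__main__":
    # Hypothetical robot: 0.1 m wheels, 1000 ticks/rev, 0.26 m apart.
    d, r = ticks_to_motion(1000, 1100, 0.1, 1000, 0.26)
    print(f"moved {d:.4f} m, turned {math.degrees(r):.2f} deg")
    print("new pose:", update_pose((0.0, 0.0, 0.0), d, r))
```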

Motor Controllers and Drivers

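At the lowest level of motion control, we need a motor controller and driver that can turn the drive wheels at a desired speed. The speed is usually expressed in internal units such as encoder ticks per second or a percentage of maximum speed. Apart from robots such as the PR2 and TurtleBot, the core ROS packages generally do not include drivers for a robot's motor controller. However, many third-party ROS developers have published popular drivers for controllers such as Arduino and ArbotiX.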

The ROS Base Controller

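One level up, we want accurate linear and angular speeds in real-world units. The usual approach is a PID (Proportional Integral Derivative) controller, which acts on the proportional, integral, and derivative terms of the error. The driver and the PID controller are usually combined in a single ROS node called the base controller. The base controller usually runs on the computer directly connected to the motor controller, and it is one of the first nodes launched when the robot starts up.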
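To make the PID idea concrete, here is a textbook discrete PID controller driving a made-up first-order "motor". In the model, the wheel speed moves 10% of the way toward the command each step. The gains and plant model are assumptions chosen for illustration, not values from any real base controller. With the integral term, the speed settles on the 0.2 m/s setpoint. With proportional control alone it settles short of it, which is why the integral term is there.

```python
class PID:
    """Textbook PID controller: u = Kp*e + Ki*integral(e) + Kd*de/dt."""

    def __init__(self, kp, ki, kd, dt):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = 0.0
        self.prev_error = None

    def step(self, setpoint, measured):
        error = setpoint - measured
        self.integral += error * self.dt
        # No derivative term on the first call: there is no previous error yet.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(setpoint=0.2, ki=0.5, steps=500, dt=0.1):
    """Run the PID against a hypothetical first-order motor model and
    return the final wheel speed (m/s)."""
    pid = PID(kp=1.0, ki=ki, kd=0.0, dt=dt)
    speed = 0.0
    for _ in range(steps):
        command = pid.step(setpoint, speed)
        speed += 0.1 * (command - speed)   # made-up motor response
    return speed


if __name__ == "__main__":
    print(f"PI control: final speed = {simulate():.4f} m/s")
    print(f"P only:     final speed = {simulate(ki=0.0):.4f} m/s")
```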

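The base controller publishes odometry on the /odom topic and subscribes to motion commands on the /cmd_vel topic. The controller node may also publish the transform from the /odom frame to the base frame (/base_link or /base_footprint). Some robots, such as the TurtleBot, instead use the robot_pose_ekf package. It combines wheel odometry with gyro data to get a more accurate estimate of the robot's position and orientation, and it publishes the /odom to /base_footprint transform. robot_pose_ekf uses an extended Kalman filter with a 6D model (3D position and 3D orientation) to fuse wheel odometry, IMU data, and visual odometry into an accurate 3D pose estimate.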

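In practice, unless a project specifically requires it, you don't need to worry much about these hardware details, because ROS takes care of them. Our programs can focus on the desired linear and angular velocities. Code written against the ROS interface should work with any base controller that provides that interface.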

Frame-Based Motion using the move_base ROS Package

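One level higher, we can command the robot to reach a particular position and orientation relative to some frame. The usual package for this is move_base, which moves the robot to a goal pose while avoiding obstacles. It combines odometry with local and global cost maps to do global and local path planning. It also controls the robot's linear and angular velocities and accelerations, within limits set in its configuration files.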

SLAM using the gmapping and amcl ROS Packages

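One level higher still, ROS lets us build a map of the environment with gmapping. A laser scanner gives the best maps, but a Kinect or Asus Xtion depth camera can provide a fake laser scan instead. The TurtleBot meta-package includes all the tools needed for SLAM.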

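Once a map has been built, ROS provides the amcl (adaptive Monte Carlo localization) package. It localizes the robot automatically from current laser scans and odometry. The operator can then pick any location on the map, and the robot plans its own path to the goal while avoiding obstacles.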

Semantic Goal

The highest level is for the robot to pursue goals with semantic meaning, such as "go to the kitchen and bring me an apple," or simply "bring me an apple."

Summary

In summary, the whole motion control hierarchy is shown below:

7.3 Twisting and Turning with ROS

The message type most commonly used on the /cmd_vel topic is Twist:

$ rosmsg show geometry_msgs/Twist

# m/s

geometry_msgs/Vector3 linear

  float64 x

  float64 y

  float64 z

# rad/s (1 radian ≈ 57.3°)

geometry_msgs/Vector3 angular

  float64 x

  float64 y

  float64 z

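For a differential-drive robot moving on a 2D plane, only the linear x and angular z components are used. Only vehicles that move freely in 3D, such as aerial or underwater robots, use all six components.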

Example Twist Messages

To make the robot move straight ahead at 0.1 m/s, the Twist message should be:

'{linear: {x: 0.1, y: 0, z: 0}, angular: {x: 0, y: 0, z: 0}}'

To make the robot move forward while turning counterclockwise:

'{linear: {x: 0.1, y: 0, z: 0}, angular: {x: 0, y: 0, z: 1.0}}'
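Since only linear.x and angular.z matter for a planar robot, these YAML strings follow a fixed pattern. The hypothetical helpers below build the message string and the full `rostopic pub` command line, which is convenient when scripting test motions. They only format text; they do not talk to ROS.

```python
def twist_yaml(linear_x=0.0, angular_z=0.0):
    """Build the YAML argument for `rostopic pub ... geometry_msgs/Twist`
    for a planar robot (only linear.x and angular.z are non-zero)."""
    return ("{linear: {x: %s, y: 0, z: 0}, angular: {x: 0, y: 0, z: %s}}"
            % (linear_x, angular_z))


def pub_command(linear_x, angular_z, rate_hz=10):
    """Return the full rostopic command line as a single string."""
    return ("rostopic pub -r %d /cmd_vel geometry_msgs/Twist '%s'"
            % (rate_hz, twist_yaml(linear_x, angular_z)))


if __name__ == "__main__":
    print(twist_yaml(0.1, 1.0))   # forward while turning counterclockwise
    print(pub_command(0.2, 0.5))
```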

Monitoring Robot Motion using RViz

$ roslaunch rbx1_bringup fake_turtlebot.launch

$ rosrun rviz rviz -d `rospack find rbx1_nav`/sim.rviz

$ rostopic pub -r 10 /cmd_vel geometry_msgs/Twist '{linear: {x: 0.1, y: 0, z: 0}, angular: {x: 0, y: 0, z: -0.5}}'

Because angular z is negative, the robot circles clockwise. In RViz it appears as shown below:

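In the Odometry display, Keep=50 means at most 50 arrows are kept; beyond that, the oldest arrow disappears. Position Tolerance=0.1 m and Angle Tolerance=0.05 rad control how often a new arrow is drawn. A new arrow is dropped only once the robot has moved or turned more than these amounts since the last arrow.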

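The Keep and tolerance settings can be modelled roughly as follows. A new arrow is added when the robot has moved more than the position tolerance or turned more than the angle tolerance since the last arrow. A bounded deque then discards the oldest arrow once `keep` is exceeded. This is an approximation written for illustration, not RViz's source code.

```python
import math
from collections import deque


class OdometryTrail:
    """Rough model of RViz's Odometry display arrow trail."""

    def __init__(self, keep=50, position_tolerance=0.1, angle_tolerance=0.05):
        self.arrows = deque(maxlen=keep)   # the oldest arrow drops off first
        self.pos_tol = position_tolerance  # meters
        self.ang_tol = angle_tolerance     # radians

    def add_pose(self, x, y, theta):
        """Offer a new odometry pose; return True if an arrow was added."""
        if self.arrows:
            lx, ly, lth = self.arrows[-1]
            moved = math.hypot(x - lx, y - ly)
            # Wrap the heading difference into [-pi, pi] before comparing.
            turned = abs(math.atan2(math.sin(theta - lth), math.cos(theta - lth)))
            if moved <= self.pos_tol and turned <= self.ang_tol:
                return False               # not far enough: no new arrow
        self.arrows.append((x, y, theta))
        return True


if __name__ == "__main__":
    trail = OdometryTrail()
    for k in range(11):                    # robot creeps forward 4 cm per update
        trail.add_pose(0.04 * k, 0.0, 0.0)
    print(len(trail.arrows), "arrows:", [round(a[0], 2) for a in trail.arrows])
```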

To stop the robot:

# 1. press Ctrl+C to stop publishing

# 2. publish an empty Twist message

$ rostopic pub -1 /cmd_vel geometry_msgs/Twist '{}'

A second example:

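The following command first drives the robot straight ahead for about 3 seconds. The -1 option means "publish once", and rostopic then keeps the message latched for 3 seconds before exiting. After that, the robot drives forward while turning counterclockwise indefinitely: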

$ rostopic pub -1 /cmd_vel geometry_msgs/Twist '{linear: {x: 0.2, y: 0, z: 0}, angular: {x: 0, y: 0, z: 0}}'; rostopic pub -r 10 /cmd_vel geometry_msgs/Twist '{linear: {x: 0.2, y: 0, z: 0}, angular: {x: 0, y: 0, z: 0.5}}'

7.4 Calibrating Your Robot's Odometry

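Floor surfaces differ, for example in friction, so the robot's odometry should be calibrated. Calibration gives correction factors for the robot's linear and angular displacement. Before calibrating, install the Orocos kinematics packages: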

$ sudo apt-get install ros-kinetic-orocos-kdl ros-kinetic-python-orocos-kdl

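The rbx1_nav package has two calibration scripts, calibrate_linear.py and calibrate_angular.py. The first moves the robot 1 meter forward while monitoring the /odom topic and stops it once it is within 1 cm of the target. The target distance and speed can be changed by editing the script or with rqt_reconfigure. The second script rotates the robot 360° while monitoring /odom. The next two sections describe how to adjust the correction parameters based on the results.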

Linear Calibration

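I had planned to use a TurtleBot3. However, Ubuntu on its Raspberry Pi could not bring up the network connection or connect to Wi-Fi, so I used a TurtleBot 2 for the experiments instead.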

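Lay a tape measure out to at least 1 meter, line up a reference mark on the robot with the end of the tape, and place the robot parallel to the tape. For an iRobot Create based TurtleBot, ssh into the robot's laptop and run the command below. (I have only one laptop, so I connected it to the robot directly.)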

# launch the nodes

$ roslaunch rbx1_bringup turtlebot_minimal_create.launch

However, it fails with the following errors:

process[turtlebot_laptop_battery-8]: started with pid [6698]

[WARN] [1568146010.167950]: Create : robot not connected yet, sci not available

[WARN] [1568146013.174055]: Create : robot not connected yet, sci not available

[WARN] [1568146016.358069]: Invalid OI Mode Reported 15

[WARN] [1568146016.359836]: Invalid Charging Source 252, actual value: 252

[ERROR] [1568146016.430697]: Failed to contact device with error: [Distance, angle displacement too big, invalid readings from robot. Distance: 10.24, Angle: 0.00]. Please check that the Create is powered on and that the connector is plugged into the Create.

[kinect_breaker_enabler-4] process has finished cleanly

log file: /home/jing/.ros/log/82f36ea0-d406-11e9-9222-d85de2302bb5/kinect_breaker_enabler-4*.log

[WARN] [1568146022.842394]: Invalid OI Mode Reported 15

[WARN] [1568146022.843971]: Invalid Charging Source 252, actual value: 252

[WARN] [1568146023.062029]: Invalid OI Mode Reported 108

[WARN] [1568146023.063756]: Invalid Charging Source 111, actual value: 111

[WARN] [1568146023.282010]: Invalid OI Mode Reported 241

[WARN] [1568146023.501217]: Invalid OI Mode Reported 243

[ERROR] [1568146023.574445]: Failed to contact device with error: [Distance, angle displacement too big, invalid readings from robot. Distance: 0.00, Angle: -554.04]. Please check that the Create is powered on and that the connector is plugged into the Create.

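The likely cause is that my TurtleBot 2 uses a Kobuki base rather than the iRobot Create base used by the author, so the configuration files differ. The message "Create : robot not connected yet, sci not available" means the driver never connected to the robot, because it could not find a Create base.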

# run the linear calibration node

$ rosrun rbx1_nav calibrate_linear.py

Finally, run rqt_reconfigure:

$ rosrun rqt_reconfigure rqt_reconfigure

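In the rqt_reconfigure window, select the calibrate_linear node and check start_test to begin the test. (If the robot does not move, uncheck and re-check it.) The robot should move forward 1 m. Follow these steps to obtain the correction factor:

- Measure the distance the robot actually traveled (actual distance).

- Compute x = actual distance / target distance.

- Back in the rqt_reconfigure GUI, set new(odom_linear_scale_correction) = x * old(odom_linear_scale_correction).

- Move the robot back to its starting position and run the calibration again.

- Repeat until the result is satisfactory; an accuracy of about 1 cm per 1 m is probably good enough.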
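The iteration above can be checked with a small pure-Python simulation (not calibrate_linear.py itself). The stopping rule follows the description earlier in this section. The distance reported by /odom is multiplied by odom_linear_scale_correction, and the robot drives until that corrected distance is within the tolerance of the 1 m target. The update rule is the one from the steps. The 5% odometry over-reporting, speed, and time step are made-up assumptions.

```python
import math


def run_linear_trial(correction, target=1.0, speed=0.15, tolerance=0.01,
                     odom_bias=1.05, dt=0.01, max_steps=100000):
    """Simulate one calibration run and return the distance actually
    travelled. Hypothetical model: odometry over-reports by odom_bias."""
    actual = 0.0
    for _ in range(max_steps):
        odom = actual * odom_bias          # what /odom would report
        distance = odom * correction       # apply odom_linear_scale_correction
        error = distance - target
        if abs(error) < tolerance:         # close enough: stop the robot
            break
        # Drive toward the target (backwards if it overshot).
        actual += math.copysign(speed, -error) * dt
    return actual


def update_correction(old, actual, target=1.0):
    """new correction = (actual distance / target distance) * old correction."""
    return (actual / target) * old


if __name__ == "__main__":
    correction = 1.0
    for trial in range(3):
        actual = run_linear_trial(correction)
        print(f"trial {trial}: correction = {correction:.4f}, actual = {actual:.3f} m")
        correction = update_correction(correction, actual)
```

With the uncorrected odometry the simulated robot stops short, at about 0.95 m. After one update it lands within about 1 cm of the target, matching the accuracy suggested above.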

Finally, add the resulting correction factor to your robot's base controller parameters in the appropriate launch file. For a TurtleBot, add the following line to turtlebot.launch:

<!-- X is your correction factor -->

<param name="turtlebot_node/odom_linear_scale_correction" value="X"/>

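If you use the ArbotiX base controller, edit its YAML configuration file and replace ticks_meter with its old value divided by the correction factor. Finally, run calibrate_linear.py again, open rqt_reconfigure, and set odom_linear_scale_correction to 1.0, since the correction is now applied in the parameter file.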
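Why divide? The base controller reports distance as ticks / ticks_meter. Suppose the robot really travels only x times the reported distance. Then ticks_meter must grow by a factor of 1/x so that every report shrinks by x. The sketch below checks this arithmetic. The 4100 ticks/m value comes from the simulator log above, and the factor 0.95 is hypothetical.

```python
def corrected_ticks_meter(old_ticks_meter, correction_factor):
    """New ticks_meter = old ticks_meter / x, where
    x = actual distance / target distance."""
    return old_ticks_meter / correction_factor


def odom_distance(ticks, ticks_meter):
    """Distance the base controller would report for a tick count."""
    return ticks / ticks_meter


if __name__ == "__main__":
    old, x = 4100.0, 0.95           # hypothetical: robot really went 0.95 m
    new = corrected_ticks_meter(old, x)
    print(f"ticks_meter: {old} -> {new:.1f}")
    print(f"4100 ticks now read as {odom_distance(4100, new):.3f} m")
```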
