Advanced TensorFlow Operations

3. Advanced TensorFlow Operations

3.1 Merging and Splitting

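3.1.1 tf.concat([a, b], axis): concatenation

tf.concat([a, b], axis) joins tensors along an existing dimension. The lengths along axis add up, and every other dimension must have the same length in all inputs.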

In [8]: a = tf.ones([4, 32, 8])

In [9]: b = tf.ones([4, 3, 8])

In [10]: tf.concat([a, b], axis=1).shape
Out[10]: TensorShape([4, 35, 8])

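3.1.2 tf.stack([a, b], axis): stacking

tf.stack([a, b], axis) stacks tensors along a new dimension inserted at position axis. Unlike concat, it requires all inputs to have exactly the same shape.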

In [19]: a.shape
Out[19]: TensorShape([4, 35, 8])

In [23]: b.shape
Out[23]: TensorShape([4, 35, 8])

In [20]: tf.concat([a, b], axis=-1).shape
Out[20]: TensorShape([4, 35, 16])

In [21]: tf.stack([a, b], axis=0).shape
Out[21]: TensorShape([2, 4, 35, 8])

In [22]: tf.stack([a, b], axis=3).shape
Out[22]: TensorShape([4, 35, 8, 2])

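3.1.3 res = tf.unstack(c, axis): unstacking

res = tf.unstack(c, axis=3) breaks c apart along axis 3 into a list of tensors. The list has as many entries as that axis is long, and the axis itself disappears from each result.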

In [30]: a.shape  # TensorShape([4, 35, 8])

In [32]: b = tf.ones([4, 35, 8])

In [33]: c = tf.stack([a, b])

In [34]: c.shape
Out[34]: TensorShape([2, 4, 35, 8])

In [35]: aa, bb = tf.unstack(c, axis=0)

In [36]: aa.shape, bb.shape
Out[36]: (TensorShape([4, 35, 8]), TensorShape([4, 35, 8]))

# c: [2, 4, 35, 8]
In [41]: res = tf.unstack(c, axis=3)

In [42]: res[0].shape, res[7].shape
Out[42]: (TensorShape([2, 4, 35]), TensorShape([2, 4, 35]))

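3.1.4 tf.split(c, num_or_size_splits, axis): splitting

tf.split(c, axis=3, num_or_size_splits=[2, 2, 4]) is more flexible than unstack: it splits c along axis 3 into three tensors of sizes 2, 2, and 4. The sizes must sum to the length of that axis. Passing an integer instead splits the axis into that many equal parts.

split vs. unstack: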

# c: [2, 4, 35, 8]
In [43]: res = tf.unstack(c, axis=3)

In [44]: len(res)
Out[44]: 8

In [45]: res = tf.split(c, axis=3, num_or_size_splits=2)

In [46]: len(res)
Out[46]: 2

In [47]: res[0].shape
Out[47]: TensorShape([2, 4, 35, 4])

In [48]: res = tf.split(c, axis=3, num_or_size_splits=[2, 2, 4])

In [49]: res[0].shape, res[2].shape
Out[49]: (TensorShape([2, 4, 35, 2]), TensorShape([2, 4, 35, 4]))
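The relationship between the four operations in this section can be checked with a small round trip. This is a minimal sketch, assuming TensorFlow 2.x in eager mode; the 2x8 tensor is a made-up stand-in for c, chosen so the values are easy to read.

```python
import tensorflow as tf

# A small stand-in for c: 2 rows, 8 columns, values 0..15.
c = tf.reshape(tf.range(16), [2, 8])

# Split axis 1 into pieces of width 2, 2 and 4 (the sizes must sum to 8).
parts = tf.split(c, num_or_size_splits=[2, 2, 4], axis=1)
print([p.shape.as_list() for p in parts])

# Concatenating the pieces along the same axis restores the original.
rebuilt = tf.concat(parts, axis=1)

# unstack removes the axis entirely: 8 tensors of shape [2] ...
cols = tf.unstack(c, axis=1)
# ... and stack re-creates it.
restacked = tf.stack(cols, axis=1)

print(bool(tf.reduce_all(tf.equal(rebuilt, c))),
      bool(tf.reduce_all(tf.equal(restacked, c))))
```

In other words, concat undoes split and stack undoes unstack, as long as the same axis is used in both directions.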

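3.2 Data Statistics

tf.norm(a) computes the norm of a. The default is the 2-norm (Euclidean norm) over all elements.

tf.norm(a, ord=1, axis=1) computes the 1-norm (ord=1) along axis 1, treating each slice along that axis as one vector.

tf.reduce_min computes the minimum of the elements along the given axis. The reduce_ prefix is a reminder that these operations reduce the number of dimensions.

tf.reduce_max computes the maximum of the elements along the given axis.

tf.reduce_mean computes the mean of the elements along the given axis. If no axis is given, it averages over all elements.

Reference: https://www.jianshu.com/p/fa334fd76d2f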
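The tf.norm variants described above have no transcript of their own, so here is a minimal sketch, assuming TensorFlow 2.x in eager mode. The all-ones input is an assumption made so the expected norms can be checked by hand.

```python
import tensorflow as tf

a = tf.ones([2, 3])

# Default: Euclidean (L2) norm over all six elements, i.e. sqrt(6).
l2_all = tf.norm(a)

# L2 norm of each row (reduce along axis 1), giving shape [2]: sqrt(3) each.
l2_rows = tf.norm(a, ord=2, axis=1)

# L1 norm of each row: sum of absolute values along axis 1, i.e. 3 each.
l1_rows = tf.norm(a, ord=1, axis=1)

print(l2_all.numpy(), l2_rows.numpy(), l1_rows.numpy())
```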

In [76]: a = tf.random.normal([4, 10])

In [78]: tf.reduce_min(a), tf.reduce_max(a), tf.reduce_mean(a)
Out[78]:
(<tf.Tensor: id=283, shape=(), dtype=float32, numpy=-1.1872448>,
 <tf.Tensor: id=285, shape=(), dtype=float32, numpy=2.1353827>,
 <tf.Tensor: id=287, shape=(), dtype=float32, numpy=0.3523524>)

In [79]: tf.reduce_min(a, axis=1), tf.reduce_max(a, axis=1), tf.reduce_mean(a, axis=1)
Out[79]:
(<tf.Tensor: id=292, shape=(4,), dtype=float32, numpy=array([-0.3937837, -1.1872448, -1.0798895, -1.1366792], dtype=float32)>,
 <tf.Tensor: id=294, shape=(4,), dtype=float32, numpy=array([1.9718986, 1.1612172, 2.1353827, 2.0984378], dtype=float32)>,
 <tf.Tensor: id=296, shape=(4,), dtype=float32, numpy=array([ 0.61504304, -0.01389184, 0.606747, 0.20151143], dtype=float32)>)

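tf.argmax(a) returns the indices of the maximum values. By default it searches along axis=0.

tf.argmin(a) returns the indices of the minimum values. By default it searches along axis=0.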

In [80]: a.shape
Out[80]: TensorShape([4, 10])

In [81]: tf.argmax(a).shape
Out[81]: TensorShape([10])

In [83]: tf.argmax(a)
Out[83]: <tf.Tensor: id=305, shape=(10,), dtype=int64, numpy=array([0, 0, 2, 3, 1, 3, 0, 1, 2, 0])>

In [82]: tf.argmin(a).shape
Out[82]: TensorShape([10])
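The effect of the axis argument is easiest to see on a tiny tensor. The following is a minimal sketch, assuming TensorFlow 2.x in eager mode, with made-up values.

```python
import tensorflow as tf

a = tf.constant([[1, 9, 3],
                 [7, 2, 8]])

# Default axis=0: compare down each column, one index per column (shape [3]).
col_best = tf.argmax(a)

# axis=1: compare across each row, one index per row (shape [2]).
row_best = tf.argmax(a, axis=1)

print(col_best.numpy(), row_best.numpy())
```

For classifier outputs of shape [batch, classes], axis=1 is usually the one wanted, since it picks the best class for each sample.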

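tf.equal(a, b) compares two tensors element by element and returns a boolean tensor of True/False values.

tf.reduce_sum(input_tensor, axis=None, keepdims=False, name=None) computes the sum of a tensor along the given axis. The summed axis is dropped from the result unless keepdims=True. (The reduction_indices and keep_dims arguments seen in TensorFlow 1.x signatures are deprecated aliases of axis and keepdims.)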

In [44]: a = tf.constant([1, 2, 3, 2, 5])

In [45]: b = tf.range(5)

In [46]: tf.equal(a, b)
Out[46]: <tf.Tensor: id=170, shape=(5,), dtype=bool, numpy=array([False, False, False, False, False])>

In [47]: res = tf.equal(a, b)

In [48]: tf.reduce_sum(tf.cast(res, dtype=tf.int32))
Out[48]: <tf.Tensor: id=175, shape=(), dtype=int32, numpy=0>
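The equal / cast / reduce_sum pattern in the transcript is the usual way to count correct predictions. Here is a minimal sketch, assuming TensorFlow 2.x in eager mode; the pred and label values are hypothetical.

```python
import tensorflow as tf

pred = tf.constant([0, 2, 1, 3, 1])
label = tf.constant([0, 1, 1, 3, 2])

# Element-wise comparison: [True, False, True, True, False].
correct = tf.equal(pred, label)

# Booleans cannot be summed directly, so cast to int32 first.
num_correct = tf.reduce_sum(tf.cast(correct, dtype=tf.int32))

accuracy = int(num_correct) / int(label.shape[0])
print(int(num_correct), accuracy)
```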

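tf.unique(a) removes duplicate elements from a 1-D tensor. It returns y, the distinct values in order of first appearance, and idx, which gives for each element of a its position in y. Reference: W3Cschool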

In [116]: a = tf.range(5)

In [117]: tf.unique(a)
Out[117]: Unique(y=<tf.Tensor: id=351, shape=(5,), dtype=int32, numpy=array([0, 1, 2, 3, 4], dtype=int32)>, idx=<tf.Tensor: id=352, shape=(5,), dtype=int32, numpy=array([0, 1, 2, 3, 4], dtype=int32)>)

In [118]: a = tf.constant([4, 2, 2, 4, 3])

In [119]: tf.unique(a)
Out[119]: Unique(y=<tf.Tensor: id=356, shape=(3,), dtype=int32, numpy=array([4, 2, 3], dtype=int32)>, idx=<tf.Tensor: id=357, shape=(5,), dtype=int32, numpy=array([0, 1, 1, 0, 2], dtype=int32)>)
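Because idx records where each original element lives in y, the original tensor can be rebuilt with tf.gather. This is a minimal sketch, assuming TensorFlow 2.x in eager mode, reusing the values from the transcript.

```python
import tensorflow as tf

a = tf.constant([4, 2, 2, 4, 3])

# y holds the distinct values; idx maps each element of a to its slot in y.
y, idx = tf.unique(a)

# Looking up y at idx reproduces a exactly.
restored = tf.gather(y, idx)

print(y.numpy(), idx.numpy(), restored.numpy())
```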

tf.reduce_all(): computes the logical AND of the elements along the given axis.

tf.reduce_any(): computes the logical OR of the elements along the given axis.
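A minimal sketch of both reductions, assuming TensorFlow 2.x in eager mode; the boolean matrix is made up for illustration.

```python
import tensorflow as tf

m = tf.constant([[True, False],
                 [True, True]])

# With no axis, the reduction runs over every element.
all_total = tf.reduce_all(m)   # AND over all four entries
any_total = tf.reduce_any(m)   # OR over all four entries

# With an axis, that axis is collapsed.
all_rows = tf.reduce_all(m, axis=1)  # AND within each row
any_cols = tf.reduce_any(m, axis=0)  # OR within each column

print(bool(all_total), bool(any_total), all_rows.numpy(), any_cols.numpy())
```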

3.3 Tensor Sorting

3.3.1 sort and argsort

tf.sort(a, direction='DESCENDING') sorts a tensor in ascending (the default) or descending order.

In [86]: a = tf.random.shuffle(tf.range(5))  # numpy=array([2, 0, 3, 4, 1])

In [90]: tf.sort(a, direction='DESCENDING')
Out[90]: <tf.Tensor: id=397, shape=(5,), dtype=int32, numpy=array([4, 3, 2, 1, 0])>

In [91]: tf.argsort(a, direction='DESCENDING')
Out[91]: <tf.Tensor: id=409, shape=(5,), dtype=int32, numpy=array([3, 2, 0, 4, 1])>

In [92]: idx = tf.argsort(a, direction='DESCENDING')

In [93]: tf.gather(a, idx)
Out[93]: <tf.Tensor: id=422, shape=(5,), dtype=int32, numpy=array([4, 3, 2, 1, 0])>

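tf.argsort(a) also sorts in ascending or descending order, but returns the indices of the sorted elements instead of the values. For tensors with more than one dimension, both tf.sort and tf.argsort work along the last axis by default.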

In [95]: a = tf.random.uniform([3, 3], maxval=10, dtype=tf.int32)
array([[4, 6, 8],
       [9, 4, 7],
       [4, 5, 1]])

In [97]: tf.sort(a)
array([[4, 6, 8],
       [4, 7, 9],
       [1, 4, 5]])

In [98]: tf.sort(a, direction='DESCENDING')
array([[8, 6, 4],
       [9, 7, 4],
       [5, 4, 1]])

In [99]: idx = tf.argsort(a)
array([[0, 1, 2],
       [1, 2, 0],
       [2, 0, 1]])

3.3.2 The top_k function

tf.math.top_k(a, k) returns the k largest values along the last axis, together with their indices.

In [104]: a
array([[4, 6, 8],
       [9, 4, 7],
       [4, 5, 1]])

In [101]: res = tf.math.top_k(a, 2)

In [102]: res.indices
<tf.Tensor: id=467, shape=(3, 2), dtype=int32, numpy=
array([[2, 1],
       [0, 2],
       [1, 0]])>

In [103]: res.values
<tf.Tensor: id=466, shape=(3, 2), dtype=int32, numpy=
array([[8, 6],
       [9, 7],
       [5, 4]])>

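3.3.3 Top-k prediction accuracy

Top-k accuracy is the fraction of samples whose true label appears among the k highest-scoring predictions.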

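import os

# Silence TensorFlow's info/warning logs; set before importing TensorFlow so it takes effect.
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

import tensorflow as tf

tf.random.set_seed(2467)


def accuracy(output, target, topk=(1,)):
    """Return the top-k accuracy, in percent, for every k in topk."""
    maxk = max(topk)
    batch_size = target.shape[0]

    # Indices of the maxk highest scores per sample: [b, maxk]
    pred = tf.math.top_k(output, maxk).indices
    # Transpose to [maxk, b] so that row i holds every sample's i-th guess.
    pred = tf.transpose(pred, perm=[1, 0])
    # Broadcast the labels to [maxk, b] for an element-wise comparison.
    target_ = tf.broadcast_to(target, pred.shape)
    correct = tf.equal(pred, target_)

    res = []
    for k in topk:
        # A sample is a hit if any of its first k guesses equals the label.
        correct_k = tf.cast(tf.reshape(correct[:k], [-1]), dtype=tf.float32)
        correct_k = tf.reduce_sum(correct_k)
        acc = float(correct_k * (100.0 / batch_size))
        res.append(acc)

    return res


# 10 samples, 6 classes: random scores turned into probabilities.
output = tf.random.normal([10, 6])
output = tf.math.softmax(output, axis=1)
target = tf.random.uniform([10], maxval=6, dtype=tf.int32)
print('prob:', output.numpy())

pred = tf.argmax(output, axis=1)
print('pred:', pred.numpy())
print('label:', target.numpy())

acc = accuracy(output, target, topk=(1, 2, 3, 4, 5, 6))
print('top-1-6 acc:', acc)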

Out:

prob: [[0.25310278 0.21715644 0.16043882 0.13088997 0.04334083 0.19507109]

[0.05892418 0.04548917 0.00926314 0.14529602 0.66777605 0.07325139]

[0.09742808 0.08304427 0.07460099 0.04067177 0.626185 0.07806987]

[0.20478569 0.12294924 0.12010485 0.13751231 0.36418733 0.05046057]

[0.11872064 0.31072393 0.12530336 0.1552888 0.2132587 0.07670452]

[0.01519807 0.09672114 0.1460476 0.00934331 0.5649092 0.16778067]

[0.04199061 0.18141054 0.06647632 0.6006175 0.03198383 0.07752118]

[0.09226219 0.2346089 0.13022321 0.16295874 0.05362028 0.3263266 ]

[0.07019574 0.0861177 0.10912605 0.10521299 0.2152082 0.4141393 ]

[0.01882887 0.26597694 0.19122466 0.24109262 0.14920162 0.13367532]]

pred: [0 4 4 4 1 4 3 5 5 1]

label: [0 2 3 4 2 4 2 3 5 5]

top-1-6 acc: [40.0, 40.0, 50.0, 70.0, 80.0, 100.0]

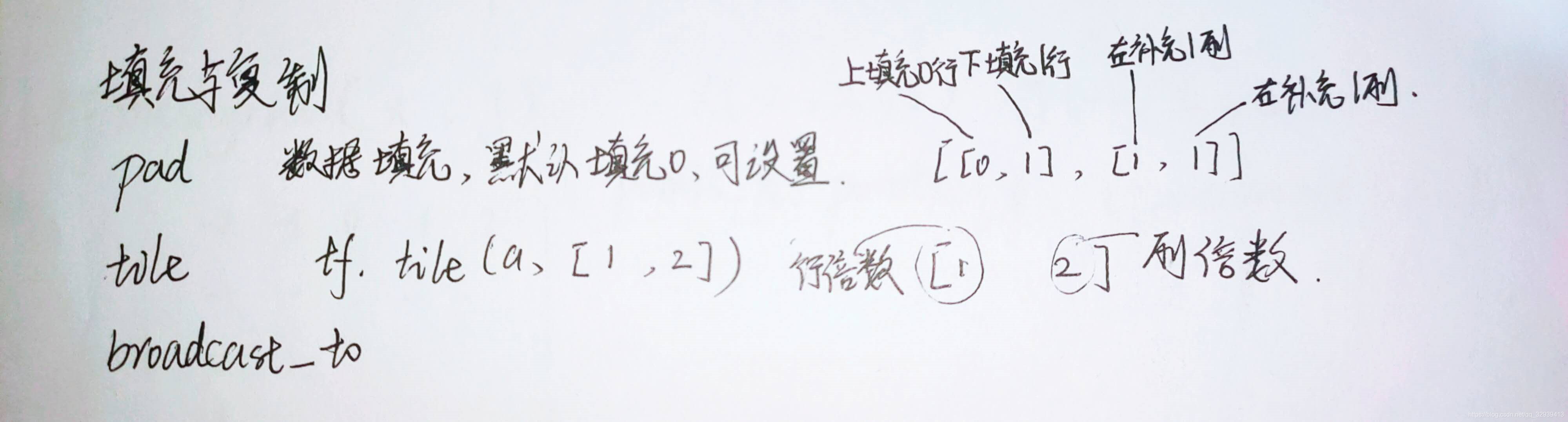

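3.4 Padding and Tiling

3.4.1 Pad

tf.pad(tensor, paddings) takes one [before, after] pair per dimension, giving the number of zeros to add on each side of that dimension.

a = tf.random.normal([4, 28, 28, 3])

b = tf.pad(a, [[0, 0], [0, 1], [1, 1], [0, 0]])  # pad each image by 1 pixel on the left, right, and bottom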

# In the examples below, a is the 3x3 int32 tensor [[0, 1, 2], [3, 4, 5], [6, 7, 8]].

In [125]: tf.pad(a, [[1, 1], [0, 0]])
<tf.Tensor: id=375, shape=(5, 3), dtype=int32, numpy=
array([[0, 0, 0],
       [0, 1, 2],
       [3, 4, 5],
       [6, 7, 8],
       [0, 0, 0]], dtype=int32)>

In [126]: tf.pad(a, [[1, 1], [1, 0]])
<tf.Tensor: id=378, shape=(5, 4), dtype=int32, numpy=
array([[0, 0, 0, 0],
       [0, 0, 1, 2],
       [0, 3, 4, 5],
       [0, 6, 7, 8],
       [0, 0, 0, 0]], dtype=int32)>

In [127]: tf.pad(a, [[1, 1], [1, 1]])
<tf.Tensor: id=381, shape=(5, 5), dtype=int32, numpy=
array([[0, 0, 0, 0, 0],
       [0, 0, 1, 2, 0],
       [0, 3, 4, 5, 0],
       [0, 6, 7, 8, 0],
       [0, 0, 0, 0, 0]], dtype=int32)>

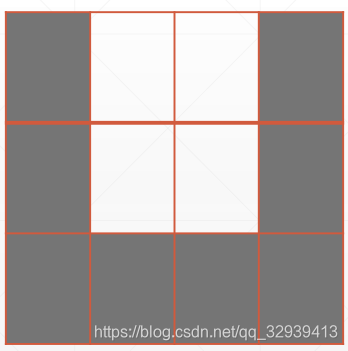

3.4.2 Image Padding

In [128]: a = tf.random.normal([4, 28, 28, 3])

In [129]: b = tf.pad(a, [[0, 0], [2, 2], [2, 2], [0, 0]])

In [130]: b.shape
Out[130]: TensorShape([4, 32, 32, 3])

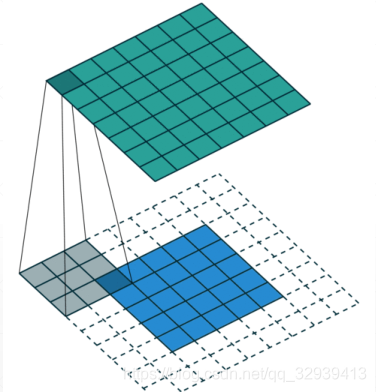

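3.4.3 tile

tf.tile(input, multiples, name=None) expands a tensor by repeating its data: multiples[i] says how many times to repeat the tensor along dimension i. The output has the same number of dimensions as the input; only the lengths of the dimensions grow.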

In [132]: a
<tf.Tensor: id=396, shape=(3, 3), dtype=int32, numpy=
array([[0, 1, 2],
       [3, 4, 5],
       [6, 7, 8]], dtype=int32)>

In [133]: tf.tile(a, [1, 2])
<tf.Tensor: id=399, shape=(3, 6), dtype=int32, numpy=
array([[0, 1, 2, 0, 1, 2],
       [3, 4, 5, 3, 4, 5],
       [6, 7, 8, 6, 7, 8]], dtype=int32)>

In [134]: tf.tile(a, [2, 1])
<tf.Tensor: id=402, shape=(6, 3), dtype=int32, numpy=
array([[0, 1, 2],
       [3, 4, 5],
       [6, 7, 8],
       [0, 1, 2],
       [3, 4, 5],
       [6, 7, 8]], dtype=int32)>

In [135]: tf.tile(a, [2, 2])
<tf.Tensor: id=405, shape=(6, 6), dtype=int32, numpy=
array([[0, 1, 2, 0, 1, 2],
       [3, 4, 5, 3, 4, 5],
       [6, 7, 8, 6, 7, 8],
       [0, 1, 2, 0, 1, 2],
       [3, 4, 5, 3, 4, 5],
       [6, 7, 8, 6, 7, 8]], dtype=int32)>

tile vs. broadcast_to

In [139]: aa = tf.expand_dims(a, axis=0)  # TensorShape([1, 3, 3])
<tf.Tensor: id=410, shape=(1, 3, 3), dtype=int32, numpy=
array([[[0, 1, 2],
        [3, 4, 5],
        [6, 7, 8]]], dtype=int32)>

In [142]: tf.tile(aa, [2, 1, 1])
<tf.Tensor: id=413, shape=(2, 3, 3), dtype=int32, numpy=
array([[[0, 1, 2],
        [3, 4, 5],
        [6, 7, 8]],
       [[0, 1, 2],
        [3, 4, 5],
        [6, 7, 8]]], dtype=int32)>

In [143]: tf.broadcast_to(aa, [2, 3, 3])
<tf.Tensor: id=416, shape=(2, 3, 3), dtype=int32, numpy=
array([[[0, 1, 2],
        [3, 4, 5],
        [6, 7, 8]],
       [[0, 1, 2],
        [3, 4, 5],
        [6, 7, 8]]], dtype=int32)>

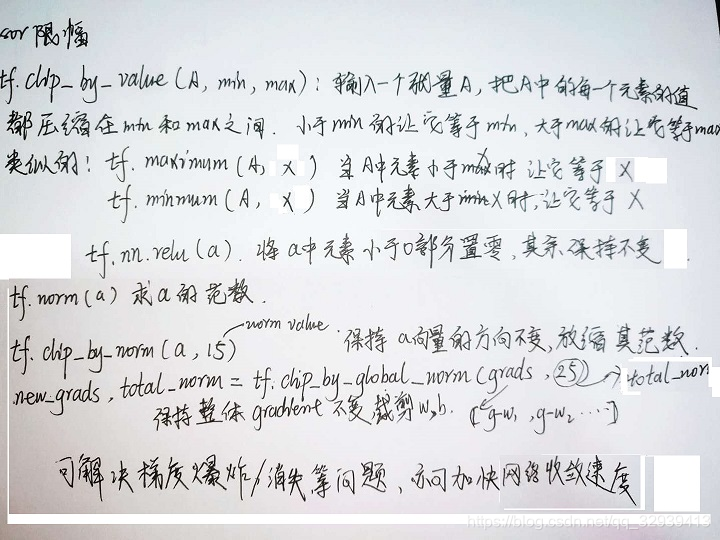

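3.5 Tensor Clipping

new_grads, total_norm = tf.clip_by_global_norm(grads, 15) rescales all gradients by the same factor so that their global norm is at most 15. Because the scaling is uniform, it changes neither the relative distribution of the values nor the direction of the gradient.

3.5.1 clip_by_value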

In [149]: a
Out[149]: <tf.Tensor: id=422, shape=(10,), dtype=int32, numpy=array([0, 1, 2, 3, 4, 5, 6, 7, 8, 9], dtype=int32)>

In [150]: tf.maximum(a, 2)
Out[150]: <tf.Tensor: id=426, shape=(10,), dtype=int32, numpy=array([2, 2, 2, 3, 4, 5, 6, 7, 8, 9], dtype=int32)>

In [151]: tf.minimum(a, 8)
Out[151]: <tf.Tensor: id=429, shape=(10,), dtype=int32, numpy=array([0, 1, 2, 3, 4, 5, 6, 7, 8, 8], dtype=int32)>

In [152]: tf.clip_by_value(a, 2, 8)
Out[152]: <tf.Tensor: id=434, shape=(10,), dtype=int32, numpy=array([2, 2, 2, 3, 4, 5, 6, 7, 8, 8], dtype=int32)>

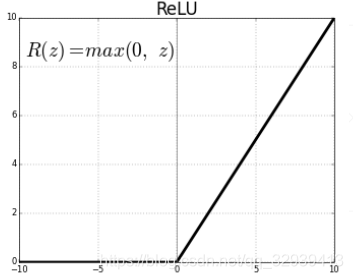

3.5.2 relu

In [153]: a = a - 5

In [154]: a
Out[154]: <tf.Tensor: id=437, shape=(10,), dtype=int32, numpy=array([-5, -4, -3, -2, -1, 0, 1, 2, 3, 4], dtype=int32)>

In [155]: tf.nn.relu(a)
Out[155]: <tf.Tensor: id=439, shape=(10,), dtype=int32, numpy=array([0, 0, 0, 0, 0, 0, 1, 2, 3, 4], dtype=int32)>

In [156]: tf.maximum(a, 0)
Out[156]: <tf.Tensor: id=442, shape=(10,), dtype=int32, numpy=array([0, 0, 0, 0, 0, 0, 1, 2, 3, 4], dtype=int32)>

3.5.3 clip_by_norm

In [157]: a = tf.random.normal([2, 2], mean=10)
<tf.Tensor: id=449, shape=(2, 2), dtype=float32, numpy=
array([[12.217459, 10.1498375],
       [10.84643, 10.972536]], dtype=float32)>

In [159]: tf.norm(a)
Out[159]: <tf.Tensor: id=455, shape=(), dtype=float32, numpy=22.14333>

In [161]: aa = tf.clip_by_norm(a, 15)
<tf.Tensor: id=473, shape=(2, 2), dtype=float32, numpy=
array([[8.276167, 6.8755493],
       [7.3474245, 7.45285]], dtype=float32)>

In [162]: tf.norm(aa)
Out[162]: <tf.Tensor: id=496, shape=(), dtype=float32, numpy=15.000001>

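3.5.4 Before and after clipping

print('==before==')
for g in grads:
    print(tf.norm(g))

# Clip all gradients jointly; the second return value (the global norm) is not needed here.
grads, _ = tf.clip_by_global_norm(grads, 15)

print('==after==')
for g in grads:
    print(tf.norm(g))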

Out:

i@z68:~/TutorialsCN/code_TensorFLow2.0/lesson18-数据限幅$ python main.py
2.0.0-dev20190225
X: (60000, 28, 28) y: (60000, 10) sample: (128, 28, 28) (128, 10)
==before==
tf.Tensor(118.00854, shape=(), dtype=float32)
tf.Tensor(3.5821552, shape=(), dtype=float32)
tf.Tensor(146.76697, shape=(), dtype=float32)
tf.Tensor(2.830059, shape=(), dtype=float32)
tf.Tensor(183.28879, shape=(), dtype=float32)
tf.Tensor(3.4088597, shape=(), dtype=float32)
==after==
tf.Tensor(6.734187, shape=(), dtype=float32)
tf.Tensor(0.20441659, shape=(), dtype=float32)
tf.Tensor(8.375294, shape=(), dtype=float32)
tf.Tensor(0.16149803, shape=(), dtype=float32)
tf.Tensor(10.45942, shape=(), dtype=float32)
tf.Tensor(0.19452743, shape=(), dtype=float32)
0 Loss: 41.25679016113281
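The uniform scaling can be verified on numbers small enough to check by hand. This is a minimal sketch, assuming TensorFlow 2.x in eager mode; the two gradient tensors and the clip value 6.5 are hypothetical and not taken from the training script above.

```python
import tensorflow as tf

# Hypothetical gradients for two variables (values chosen for easy arithmetic).
grads = [tf.constant([3.0, 4.0]), tf.constant([12.0])]

# Global norm = sqrt(3^2 + 4^2 + 12^2) = 13, which exceeds the limit of 6.5.
clipped, total_norm = tf.clip_by_global_norm(grads, clip_norm=6.5)

# Every tensor is scaled by the same factor, 6.5 / 13 = 0.5.
for g, cg in zip(grads, clipped):
    print(g.numpy(), '->', cg.numpy())

print('norm before:', float(total_norm))
print('norm after: ', float(tf.linalg.global_norm(clipped)))
```

Since every tensor is multiplied by the same factor, the ratios between gradient entries are unchanged, which is why the update direction is preserved.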

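3.6 Other Advanced Operations

3.6.1 Where

indices = tf.where(a > 0) returns the coordinates of all True elements. It is typically combined with tf.gather_nd(a, indices).

tf.where has two forms:

1. tf.where(tensor)

tensor is a boolean tensor; tf.where returns the indices of its True elements.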

In [3]: a = tf.random.normal([3, 3])
<tf.Tensor: id=11, shape=(3, 3), dtype=float32, numpy=
array([[ 1.6420907, 0.43938753, -0.31872085],
       [ 1.144599, -0.02425919, -0.9576591],
       [ 1.5931814, 0.1182256, -0.39948994]], dtype=float32)>

In [5]: mask = a > 0
<tf.Tensor: id=14, shape=(3, 3), dtype=bool, numpy=
array([[ True,  True, False],
       [ True, False, False],
       [ True,  True, False]])>

In [7]: tf.boolean_mask(a, mask)
<tf.Tensor: id=42, shape=(5,), dtype=float32, numpy=
array([1.6420907, 0.43938753, 1.144599, 1.5931814, 0.1182256], dtype=float32)>

In [8]: indices = tf.where(mask)
<tf.Tensor: id=44, shape=(5, 2), dtype=int64, numpy=
array([[0, 0],
       [0, 1],
       [1, 0],
       [2, 0],
       [2, 1]])>

In [10]: tf.gather_nd(a, indices)
<tf.Tensor: id=46, shape=(5,), dtype=float32, numpy=
array([1.6420907, 0.43938753, 1.144599, 1.5931814, 0.1182256], dtype=float32)>

2. tf.where(tensor, a, b)

a and b are tensors with the same shape as tensor. The result takes the element from a wherever tensor is True and the element from b wherever it is False.

In [11]: mask
<tf.Tensor: id=14, shape=(3, 3), dtype=bool, numpy=
array([[ True,  True, False],
       [ True, False, False],
       [ True,  True, False]])>

In [12]: A = tf.ones([3, 3])

In [13]: B = tf.zeros([3, 3])

In [14]: tf.where(mask, A, B)
<tf.Tensor: id=55, shape=(3, 3), dtype=float32, numpy=
array([[1., 1., 0.],
       [1., 0., 0.],
       [1., 1., 0.]], dtype=float32)>

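3.6.2 tf.scatter_nd

tf.scatter_nd(indices, updates, shape) scatters updates into a new tensor, initially all zeros, at the positions given by indices.

It creates the new tensor by applying sparse updates to individual values or slices of a zero tensor of the given shape. This operator is the inverse of tf.gather_nd, which extracts values or slices from a given tensor.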

In [17]: indices = tf.constant([[4], [3], [1], [7]])

In [18]: updates = tf.constant([9, 10, 11, 12])

In [19]: shape = tf.constant([8])

In [20]: tf.scatter_nd(indices, updates, shape)
Out[20]: <tf.Tensor: id=60, shape=(8,), dtype=int32, numpy=array([ 0, 11, 0, 10, 9, 0, 0, 12], dtype=int32)>

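Warning: the order in which updates are applied is nondeterministic, so results may not be reproducible when indices contains duplicates. Current TensorFlow documentation describes duplicate updates as being summed, in which case only the floating-point rounding depends on the order.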
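The statement that scatter_nd and gather_nd are inverses also holds for whole slices, not just scalars. This is a minimal sketch, assuming TensorFlow 2.x in eager mode, with made-up rows and no duplicate indices.

```python
import tensorflow as tf

# Each index selects a whole row of the [4, 3] output.
indices = tf.constant([[0], [2]])
updates = tf.constant([[1, 1, 1],
                       [2, 2, 2]])

# Rows 0 and 2 receive the updates; rows 1 and 3 stay zero.
out = tf.scatter_nd(indices, updates, shape=[4, 3])
print(out.numpy())

# Gathering at the same indices returns the rows that were scattered.
back = tf.gather_nd(out, indices)
print(back.numpy())
```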

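3.6.3 tf.meshgrid(x, y)

tf.meshgrid(*args, **kwargs) builds coordinate grids from 1-D tensors. For two inputs a and b, A, B = tf.meshgrid(a, b) returns two matrices of the same shape, size(b) by size(a): A repeats a as a row size(b) times, and B repeats b as a column size(a) times. The same idea extends to more inputs, giving higher-dimensional grids.

Reference: W3Cschool, "TensorFlow tensor transformations: tf.meshgrid"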
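A minimal sketch of the two-input case, assuming TensorFlow 2.x in eager mode and the default 'xy' indexing. The input values are made up, and the final tf.stack step is just one common way to turn the two grids into a table of (x, y) points.

```python
import tensorflow as tf

x = tf.constant([1, 2, 3])   # size 3
y = tf.constant([10, 20])    # size 2

# Both grids have shape [size(y), size(x)] = [2, 3].
X, Y = tf.meshgrid(x, y)
print(X.numpy())  # x repeated as a row, once per element of y
print(Y.numpy())  # y repeated as a column, once per element of x

# Pair the grids up into coordinates: shape [2, 3, 2].
points = tf.stack([X, Y], axis=-1)
print(points.shape)
```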
