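In an earlier post, "Implementing Hibernate with the Linux ARM64 spin-table boot method" (Dingjun798077632's blog on CSDN), I tried to implement hibernation on top of the spin-table boot method. The PSCI boot method should have been used instead, so I studied how it works. This post analyzes the ATF BL31 firmware code involved in a PSCI boot.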

For an introduction to PSCI boot, see "Linux CPU management (3): PSCI boot" on Zhihu.

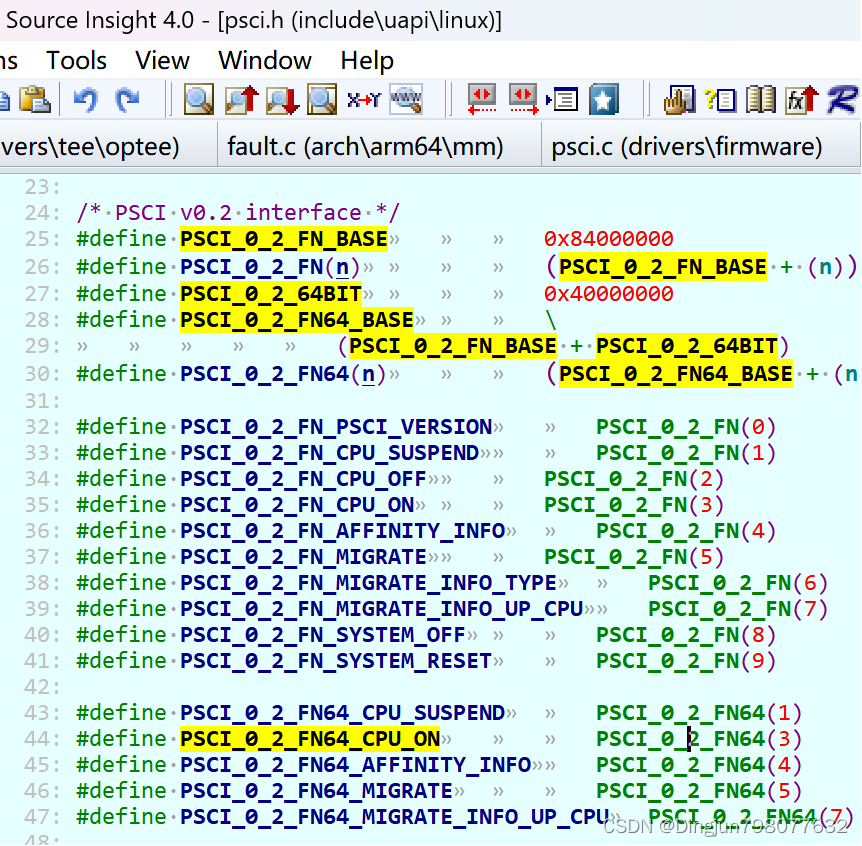

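As we know, with the PSCI boot method, when the primary CPU starts a secondary CPU it ultimately issues an SMC that traps into the BL31 firmware at EL3 and calls BL31's power management services to bring the secondary CPU up. The PSCI interface defines the function_id of each command together with its input parameters and return values. Input parameters are passed in registers x0-x7 and return values come back in x0-x3. Through the function_id, the Linux kernel selects which service in BL31 or BL32 (if present) to call.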

We start with the exception handling code of BL31. The ATF source used here was downloaded from the NXP website and targets the NXP i.MX8 family.

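1. BL31 boot entry point and exception vector setup

From the SECTIONS definition in BL31's bl31.ld.S we can see that the BL31 entry point lives in bl31_entrypoint.S and that the entry function is bl31_entrypoint.

The relevant part of bl31.ld.S: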

OUTPUT_FORMAT(PLATFORM_LINKER_FORMAT)

OUTPUT_ARCH(PLATFORM_LINKER_ARCH)

/* Specifies the BL31 entry function */

ENTRY(bl31_entrypoint)

......

MEMORY {

RAM (rwx): ORIGIN = BL31_BASE, LENGTH = BL31_LIMIT - BL31_BASE

#if SEPARATE_NOBITS_REGION

NOBITS (rw!a): ORIGIN = BL31_NOBITS_BASE, LENGTH = BL31_NOBITS_LIMIT - BL31_NOBITS_BASE

#else

#define NOBITS RAM

#endif

......

SECTIONS

{

. = BL31_BASE;

ASSERT(. == ALIGN(PAGE_SIZE),

"BL31_BASE address is not aligned on a page boundary.")

__BL31_START__ = .;

#if SEPARATE_CODE_AND_RODATA

.text . : {

__TEXT_START__ = .;

/* Places the BL31 entry file first in .text */

*bl31_entrypoint.o(.text*)

*(SORT_BY_ALIGNMENT(SORT(.text*)))

*(.vectors)

. = ALIGN(PAGE_SIZE);

__TEXT_END__ = .;

} >RAM

......

}

The entry function bl31_entrypoint is found in bl31/aarch64/bl31_entrypoint.S:

func bl31_entrypoint

#if (defined COCKPIT_A53) || (defined COCKPIT_A72)

/*

* running on cluster Cortex-A53:

* => proceed to bl31 with errata

*/

mrs x20, mpidr_el1

ands x20, x20, #MPIDR_CLUSTER_MASK /* Test Affinity 1 */

beq bl31_entrypoint_proceed_errata

/*

* running on cluster Cortex-A72:

* => trampoline to 0xC00000xx

* where bl31 version for A72 stands

*/

mov x20, #0xC0000000

add x20, x20, #bl31_entrypoint_proceed - bl31_entrypoint

br x20

bl31_entrypoint_proceed_errata:

#endif

#if (defined COCKPIT_A53) || (defined COCKPIT_A72)

bl31_entrypoint_proceed:

#endif

/* ---------------------------------------------------------------

* Stash the previous bootloader arguments x0 - x3 for later use.

* ---------------------------------------------------------------

*/

mov x20, x0

mov x21, x1

mov x22, x2

mov x23, x3

#if !RESET_TO_BL31

... ...

#else

/* ---------------------------------------------------------------------

* For RESET_TO_BL31 systems which have a programmable reset address,

* bl31_entrypoint() is executed only on the cold boot path so we can

* skip the warm boot mailbox mechanism.

* ---------------------------------------------------------------------

*/

el3_entrypoint_common \

_init_sctlr=1 \

_warm_boot_mailbox=!PROGRAMMABLE_RESET_ADDRESS \

_secondary_cold_boot=!COLD_BOOT_SINGLE_CPU \

_init_memory=1 \

_init_c_runtime=1 \

_exception_vectors=runtime_exceptions \

_pie_fixup_size=BL31_LIMIT - BL31_BASE

/* ---------------------------------------------------------------------

* For RESET_TO_BL31 systems, BL31 is the first bootloader to run so

* there's no argument to relay from a previous bootloader. Zero the

* arguments passed to the platform layer to reflect that.

* ---------------------------------------------------------------------

*/

mov x20, 0

mov x21, 0

mov x22, 0

mov x23, 0

#endif /* RESET_TO_BL31 */

/* --------------------------------------------------------------------

* Perform BL31 setup

* --------------------------------------------------------------------

*/

mov x0, x20

mov x1, x21

mov x2, x22

mov x3, x23

bl bl31_setup

#if ENABLE_PAUTH

/* --------------------------------------------------------------------

* Program APIAKey_EL1 and enable pointer authentication

* --------------------------------------------------------------------

*/

bl pauth_init_enable_el3

#endif /* ENABLE_PAUTH */

/* --------------------------------------------------------------------

* Jump to main function

* --------------------------------------------------------------------

*/

bl bl31_main

/* --------------------------------------------------------------------

* Clean the .data & .bss sections to main memory. This ensures

* that any global data which was initialised by the primary CPU

* is visible to secondary CPUs before they enable their data

* caches and participate in coherency.

* --------------------------------------------------------------------

*/

adrp x0, __DATA_START__

add x0, x0, :lo12:__DATA_START__

adrp x1, __DATA_END__

add x1, x1, :lo12:__DATA_END__

sub x1, x1, x0

bl clean_dcache_range

adrp x0, __BSS_START__

add x0, x0, :lo12:__BSS_START__

adrp x1, __BSS_END__

add x1, x1, :lo12:__BSS_END__

sub x1, x1, x0

bl clean_dcache_range

b el3_exit

endfunc bl31_entrypoint

This code uses the macro el3_entrypoint_common to set the EL3 exception vector base address. The macro is defined in include/arch/aarch64/el3_common_macros.S:

.macro el3_entrypoint_common \

_init_sctlr, _warm_boot_mailbox, _secondary_cold_boot, \

_init_memory, _init_c_runtime, _exception_vectors, \

_pie_fixup_size

.if \_init_sctlr

/* -------------------------------------------------------------

* This is the initialisation of SCTLR_EL3 and so must ensure

* that all fields are explicitly set rather than relying on hw.

* Some fields reset to an IMPLEMENTATION DEFINED value and

* others are architecturally UNKNOWN on reset.

*

* SCTLR.EE: Set the CPU endianness before doing anything that

* might involve memory reads or writes. Set to zero to select

* Little Endian.

*

* SCTLR_EL3.WXN: For the EL3 translation regime, this field can

* force all memory regions that are writeable to be treated as

* XN (Execute-never). Set to zero so that this control has no

* effect on memory access permissions.

*

* SCTLR_EL3.SA: Set to zero to disable Stack Alignment check.

*

* SCTLR_EL3.A: Set to zero to disable Alignment fault checking.

*

* SCTLR.DSSBS: Set to zero to disable speculation store bypass

* safe behaviour upon exception entry to EL3.

* -------------------------------------------------------------

*/

mov_imm x0, (SCTLR_RESET_VAL & ~(SCTLR_EE_BIT | SCTLR_WXN_BIT \

| SCTLR_SA_BIT | SCTLR_A_BIT | SCTLR_DSSBS_BIT))

msr sctlr_el3, x0

isb

.endif /* _init_sctlr */

#if DISABLE_MTPMU

bl mtpmu_disable

#endif

.if \_warm_boot_mailbox

/* -------------------------------------------------------------

* This code will be executed for both warm and cold resets.

* Now is the time to distinguish between the two.

* Query the platform entrypoint address and if it is not zero

* then it means it is a warm boot so jump to this address.

* -------------------------------------------------------------

*/

bl plat_get_my_entrypoint

cbz x0, do_cold_boot

br x0

do_cold_boot:

.endif /* _warm_boot_mailbox */

.if \_pie_fixup_size

#if ENABLE_PIE

/*

* ------------------------------------------------------------

* If PIE is enabled fixup the Global descriptor Table only

* once during primary core cold boot path.

*

* Compile time base address, required for fixup, is calculated

* using "pie_fixup" label present within first page.

* ------------------------------------------------------------

*/

pie_fixup:

ldr x0, =pie_fixup

and x0, x0, #~(PAGE_SIZE_MASK)

mov_imm x1, \_pie_fixup_size

add x1, x1, x0

bl fixup_gdt_reloc

#endif /* ENABLE_PIE */

.endif /* _pie_fixup_size */

/* ---------------------------------------------------------------------

* Set the exception vectors.

* ---------------------------------------------------------------------

*/

adr x0, \_exception_vectors

msr vbar_el3, x0

isb

......

2. BL31 exception handling flow

Continuing from above, inside the macro el3_entrypoint_common the following two lines

adr x0, \_exception_vectors

msr vbar_el3, x0

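set the EL3 exception vector base address to runtime_exceptions. Unlike classic 32-bit ARM, where the vector table sits at a fixed address, on arm64 the table address is not fixed: software writes it to the dedicated register VBAR (Vector Base Address Register) during boot.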
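To make the table layout concrete, here is a minimal C sketch of how an entry address is derived from VBAR_EL3. The helper name vector_entry_addr and the enum names are invented for illustration. The offsets (four groups of 0x200 bytes, four entries of 0x80 bytes each) match the 0x0 / 0x200 / 0x400 / 0x600 group comments in runtime_exceptions.S.

```c
#include <stdint.h>

/* Which state the exception was taken from (one 0x200-byte group each). */
enum vec_source { CUR_EL_SP0 = 0, CUR_EL_SPX = 1, LOWER_A64 = 2, LOWER_A32 = 3 };

/* Exception kind inside a group (one 0x80-byte entry each). */
enum vec_type { VEC_SYNC = 0, VEC_IRQ = 1, VEC_FIQ = 2, VEC_SERROR = 3 };

/* Hypothetical helper: address the CPU branches to for a given exception. */
uint64_t vector_entry_addr(uint64_t vbar, enum vec_source src, enum vec_type type)
{
    return vbar + (uint64_t)src * 0x200u + (uint64_t)type * 0x80u;
}
```

For example, an SMC issued by a 64-bit kernel is a synchronous exception from a lower EL using AArch64, so it lands at VBAR_EL3 + 0x400, which is the sync_exception_aarch64 entry.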

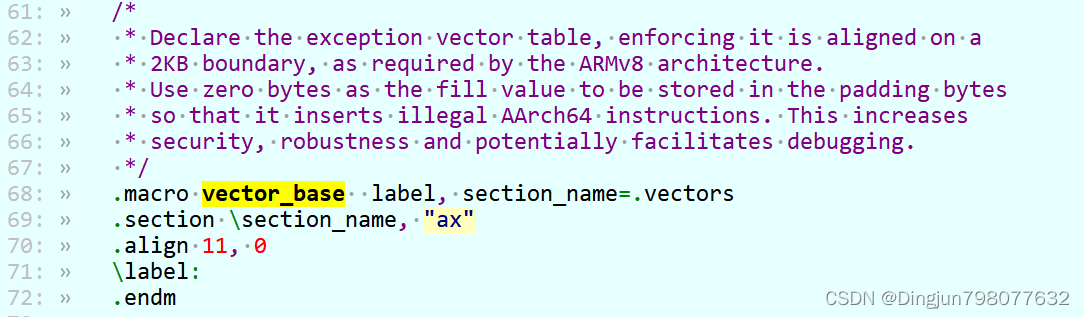

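runtime_exceptions is defined in bl31/aarch64/runtime_exceptions.S. When the Linux kernel traps into EL3 with an SMC instruction, the corresponding EL3 exception entry is in this file. Another macro is involved here, vector_base, from include/arch/aarch64/asm_macros.S. vector_base places the vector table in the .vectors section and gives the section the "ax" attributes (allocatable, executable). The .vectors section itself is not analyzed further here.

The vector table in bl31/aarch64/runtime_exceptions.S: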

vector_base runtime_exceptions

/* ---------------------------------------------------------------------

* Current EL with SP_EL0 : 0x0 - 0x200

* ---------------------------------------------------------------------

*/

vector_entry sync_exception_sp_el0

#ifdef MONITOR_TRAPS

stp x29, x30, [sp, #-16]!

mrs x30, esr_el3

ubfx x30, x30, #ESR_EC_SHIFT, #ESR_EC_LENGTH

/* Check for BRK */

cmp x30, #EC_BRK

b.eq brk_handler

ldp x29, x30, [sp], #16

#endif /* MONITOR_TRAPS */

/* We don't expect any synchronous exceptions from EL3 */

b report_unhandled_exception

end_vector_entry sync_exception_sp_el0

vector_entry irq_sp_el0

/*

* EL3 code is non-reentrant. Any asynchronous exception is a serious

* error. Loop infinitely.

*/

b report_unhandled_interrupt

end_vector_entry irq_sp_el0

vector_entry fiq_sp_el0

b report_unhandled_interrupt

end_vector_entry fiq_sp_el0

vector_entry serror_sp_el0

no_ret plat_handle_el3_ea

end_vector_entry serror_sp_el0

/* ---------------------------------------------------------------------

* Current EL with SP_ELx: 0x200 - 0x400

* ---------------------------------------------------------------------

*/

vector_entry sync_exception_sp_elx

/*

* This exception will trigger if anything went wrong during a previous

* exception entry or exit or while handling an earlier unexpected

* synchronous exception. There is a high probability that SP_EL3 is

* corrupted.

*/

b report_unhandled_exception

end_vector_entry sync_exception_sp_elx

vector_entry irq_sp_elx

b report_unhandled_interrupt

end_vector_entry irq_sp_elx

vector_entry fiq_sp_elx

b report_unhandled_interrupt

end_vector_entry fiq_sp_elx

vector_entry serror_sp_elx

#if !RAS_EXTENSION

check_if_serror_from_EL3

#endif

no_ret plat_handle_el3_ea

end_vector_entry serror_sp_elx

/* ---------------------------------------------------------------------

* Lower EL using AArch64 : 0x400 - 0x600

* ---------------------------------------------------------------------

*/

vector_entry sync_exception_aarch64

/*

* This exception vector will be the entry point for SMCs and traps

* that are unhandled at lower ELs most commonly. SP_EL3 should point

* to a valid cpu context where the general purpose and system register

* state can be saved.

*/

apply_at_speculative_wa

check_and_unmask_ea

handle_sync_exception

end_vector_entry sync_exception_aarch64

vector_entry irq_aarch64

apply_at_speculative_wa

check_and_unmask_ea

handle_interrupt_exception irq_aarch64

end_vector_entry irq_aarch64

vector_entry fiq_aarch64

apply_at_speculative_wa

check_and_unmask_ea

handle_interrupt_exception fiq_aarch64

end_vector_entry fiq_aarch64

vector_entry serror_aarch64

apply_at_speculative_wa

#if RAS_EXTENSION

msr daifclr, #DAIF_ABT_BIT

b enter_lower_el_async_ea

#else

handle_async_ea

#endif

end_vector_entry serror_aarch64

/* ---------------------------------------------------------------------

* Lower EL using AArch32 : 0x600 - 0x800

* ---------------------------------------------------------------------

*/

vector_entry sync_exception_aarch32

/*

* This exception vector will be the entry point for SMCs and traps

* that are unhandled at lower ELs most commonly. SP_EL3 should point

* to a valid cpu context where the general purpose and system register

* state can be saved.

*/

apply_at_speculative_wa

check_and_unmask_ea

handle_sync_exception

end_vector_entry sync_exception_aarch32

vector_entry irq_aarch32

apply_at_speculative_wa

check_and_unmask_ea

handle_interrupt_exception irq_aarch32

end_vector_entry irq_aarch32

vector_entry fiq_aarch32

apply_at_speculative_wa

check_and_unmask_ea

handle_interrupt_exception fiq_aarch32

end_vector_entry fiq_aarch32

vector_entry serror_aarch32

apply_at_speculative_wa

#if RAS_EXTENSION

msr daifclr, #DAIF_ABT_BIT

b enter_lower_el_async_ea

#else

handle_async_ea

#endif

end_vector_entry serror_aarch32

In handle_sync_exception, the following two instructions

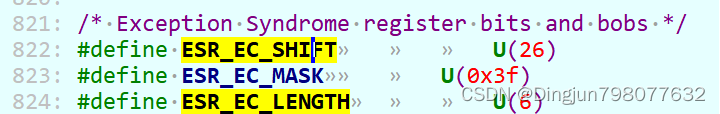

mrs x30, esr_el3

ubfx x30, x30, #ESR_EC_SHIFT, #ESR_EC_LENGTH

read esr_el3 into x30 and then isolate a bit field: ubfx x30, x30, #ESR_EC_SHIFT, #ESR_EC_LENGTH is equivalent to x30 = (x30 >> ESR_EC_SHIFT) & ((1 << ESR_EC_LENGTH) - 1).

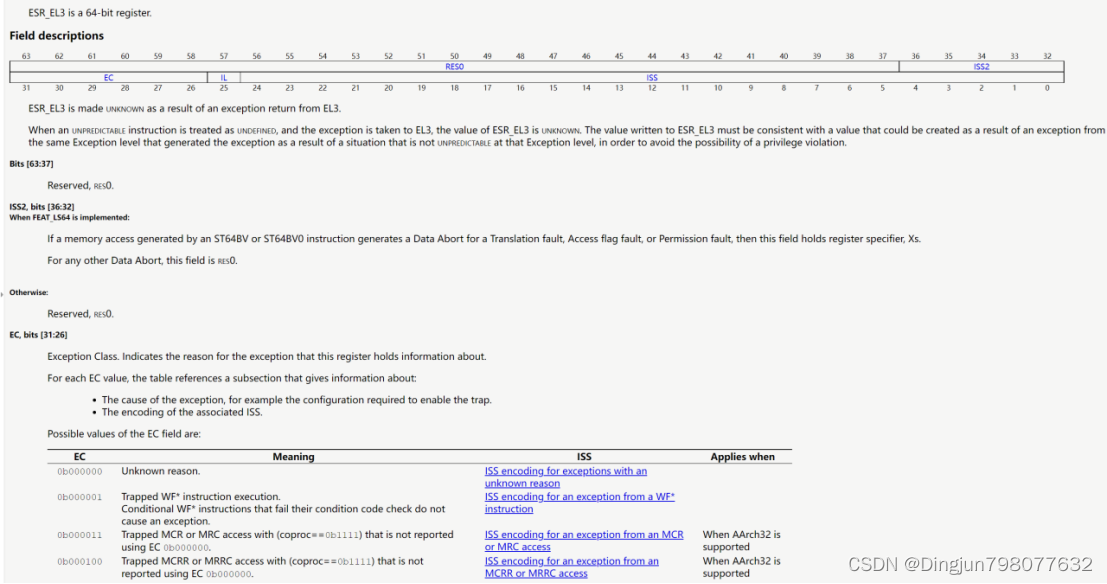

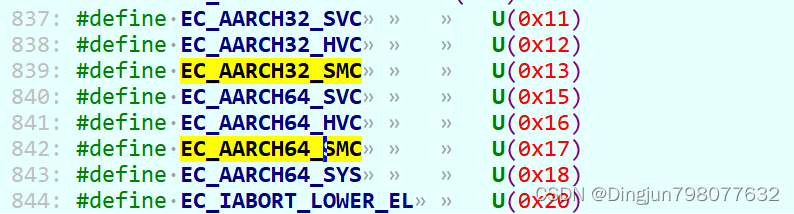

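In effect this extracts bits 26-31 of esr_el3, i.e. the cause of the exception. esr_el3 is the Exception Syndrome Register; when an SMC traps into EL3, its EC field (esr_el3[31:26]) holds the reason the exception was taken (see the register definition figures).

With the exception cause extracted, the handler dispatches on it: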
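The bit-field extraction can be sketched in C as follows. This is an illustration only: ubfx64 and esr_exception_class are made-up helper names, and the values 26 and 6 for ESR_EC_SHIFT and ESR_EC_LENGTH are taken from the EC field position esr_el3[31:26] described above.

```c
#include <stdint.h>

#define ESR_EC_SHIFT  26u  /* EC field starts at bit 26 */
#define ESR_EC_LENGTH 6u   /* EC field is 6 bits wide: esr[31:26] */

/* C equivalent of "ubfx dst, src, #lsb, #width" for 0 < width < 64. */
uint64_t ubfx64(uint64_t src, unsigned lsb, unsigned width)
{
    return (src >> lsb) & ((UINT64_C(1) << width) - 1u);
}

/* Extract the exception class from a raw ESR_EL3 value. */
unsigned esr_exception_class(uint64_t esr)
{
    return (unsigned)ubfx64(esr, ESR_EC_SHIFT, ESR_EC_LENGTH);
}
```

Note the parentheses around the shift in the mask: without them, 1 << ESR_EC_LENGTH - 1 would be parsed as 1 << (ESR_EC_LENGTH - 1), which is a single bit rather than a 6-bit mask.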

cmp x30, #EC_AARCH32_SMC

b.eq smc_handler32

cmp x30, #EC_AARCH64_SMC

b.eq smc_handler64

/* Synchronous exceptions other than the above are assumed to be EA */

ldr x30, [sp, #CTX_GPREGS_OFFSET + CTX_GPREG_LR]

b enter_lower_el_sync_ea

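The value of EC_AARCH64_SMC is 0x17, which corresponds to the EC encoding "SMC instruction execution in AArch64 state" of esr_el3. The code is then easy to follow: an SMC executed in AArch64 state goes to smc_handler64, and an SMC executed in AArch32 state goes to smc_handler32. (As noted above, SMCs from AArch64 and AArch32 enter through different vectors, but both sync_exception_aarch64 and sync_exception_aarch32 use handle_sync_exception, so EC_AARCH32_SMC and EC_AARCH64_SMC have to be told apart here.)

The code of smc_handler64: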
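The routing decision made by handle_sync_exception can be modeled roughly like this in C. The enum and function names are hypothetical; EC_AARCH64_SMC = 0x17 comes from the text above, and EC_AARCH32_SMC = 0x13 is the architectural encoding for an SMC executed in AArch32 state.

```c
#include <stdint.h>

#define EC_AARCH32_SMC 0x13u
#define EC_AARCH64_SMC 0x17u

/* Where the synchronous exception is sent next (names are illustrative). */
enum sync_route { ROUTE_SMC32, ROUTE_SMC64, ROUTE_SYNC_EA };

enum sync_route route_sync_exception(uint64_t esr_el3)
{
    unsigned ec = (unsigned)((esr_el3 >> 26) & 0x3fu);

    switch (ec) {
    case EC_AARCH32_SMC:
        return ROUTE_SMC32;    /* b.eq smc_handler32 */
    case EC_AARCH64_SMC:
        return ROUTE_SMC64;    /* b.eq smc_handler64 */
    default:
        return ROUTE_SYNC_EA;  /* everything else: enter_lower_el_sync_ea */
    }
}
```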

smc_handler64:

/* NOTE: The code below must preserve x0-x4 */

/*

* Save general purpose and ARMv8.3-PAuth registers (if enabled).

* If Secure Cycle Counter is not disabled in MDCR_EL3 when

* ARMv8.5-PMU is implemented, save PMCR_EL0 and disable Cycle Counter.

*/

bl save_gp_pmcr_pauth_regs

#if ENABLE_PAUTH

/* Load and program APIAKey firmware key */

bl pauth_load_bl31_apiakey

#endif

/*

* Populate the parameters for the SMC handler.

* We already have x0-x4 in place. x5 will point to a cookie (not used

* now). x6 will point to the context structure (SP_EL3) and x7 will

* contain flags we need to pass to the handler.

*/

mov x5, xzr

mov x6, sp

/*

* Restore the saved C runtime stack value which will become the new

* SP_EL0 i.e. EL3 runtime stack. It was saved in the 'cpu_context'

* structure prior to the last ERET from EL3.

*/

ldr x12, [x6, #CTX_EL3STATE_OFFSET + CTX_RUNTIME_SP]

/* Switch to SP_EL0 */

msr spsel, #MODE_SP_EL0

/*

* Save the SPSR_EL3, ELR_EL3, & SCR_EL3 in case there is a world

* switch during SMC handling.

* TODO: Revisit if all system registers can be saved later.

*/

mrs x16, spsr_el3

mrs x17, elr_el3

mrs x18, scr_el3

stp x16, x17, [x6, #CTX_EL3STATE_OFFSET + CTX_SPSR_EL3]

str x18, [x6, #CTX_EL3STATE_OFFSET + CTX_SCR_EL3]

/* Clear flag register */

mov x7, xzr

#if ENABLE_RME

/* Copy SCR_EL3.NSE bit to the flag to indicate caller's security */

ubfx x7, x18, #SCR_NSE_SHIFT, 1

/*

* Shift copied SCR_EL3.NSE bit by 5 to create space for

* SCR_EL3.NS bit. Bit 5 of the flag correspondes to

* the SCR_EL3.NSE bit.

*/

lsl x7, x7, #5

#endif /* ENABLE_RME */

/* Copy SCR_EL3.NS bit to the flag to indicate caller's security */

bfi x7, x18, #0, #1

mov sp, x12

/* Get the unique owning entity number */

ubfx x16, x0, #FUNCID_OEN_SHIFT, #FUNCID_OEN_WIDTH

ubfx x15, x0, #FUNCID_TYPE_SHIFT, #FUNCID_TYPE_WIDTH

orr x16, x16, x15, lsl #FUNCID_OEN_WIDTH

/* Load descriptor index from array of indices */

adrp x14, rt_svc_descs_indices

add x14, x14, :lo12:rt_svc_descs_indices

ldrb w15, [x14, x16]

/* Any index greater than 127 is invalid. Check bit 7. */

tbnz w15, 7, smc_unknown

/*

* Get the descriptor using the index

* x11 = (base + off), w15 = index

*

* handler = (base + off) + (index << log2(size))

*/

adr x11, (__RT_SVC_DESCS_START__ + RT_SVC_DESC_HANDLE)

lsl w10, w15, #RT_SVC_SIZE_LOG2

ldr x15, [x11, w10, uxtw]

/*

* Call the Secure Monitor Call handler and then drop directly into

* el3_exit() which will program any remaining architectural state

* prior to issuing the ERET to the desired lower EL.

*/

#if DEBUG

cbz x15, rt_svc_fw_critical_error

#endif

blr x15

b el3_exit

smc_unknown:

/*

* Unknown SMC call. Populate return value with SMC_UNK and call

* el3_exit() which will restore the remaining architectural state

* i.e., SYS, GP and PAuth registers(if any) prior to issuing the ERET

* to the desired lower EL.

*/

mov x0, #SMC_UNK

str x0, [x6, #CTX_GPREGS_OFFSET + CTX_GPREG_X0]

b el3_exit

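The function smc_handler64 mainly does the following:

1. Saves the caller's (here the Non-secure world's) spsr_el3, elr_el3 and scr_el3 into the cpu context structure that SP_EL3 points to;

2. Switches the stack pointer to SP_EL0;

3. Uses the function_id to find the matching runtime service. The lookup works like this:

Index = ((function_id >> 24) & 0x3f) | ((function_id >> 31) << 6);

Handler = *(__RT_SVC_DESCS_START__ + RT_SVC_DESC_HANDLE + (rt_svc_descs_indices[Index] << 5))

__RT_SVC_DESCS_START__ is the start address of rt_svc_descs, and RT_SVC_DESC_HANDLE is the offset of the service handler pointer handle inside the structure rt_svc_desc. The left shift by 5 is there because rt_svc_desc is 32 bytes in size.

4. Jumps to the handle function of that runtime service.

5. Finally exits EL3.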

The next step requires looking at the Linux PSCI code, so we first briefly analyze how Linux issues the PSCI SMC call.

3. Linux PSCI boot: the SMC call

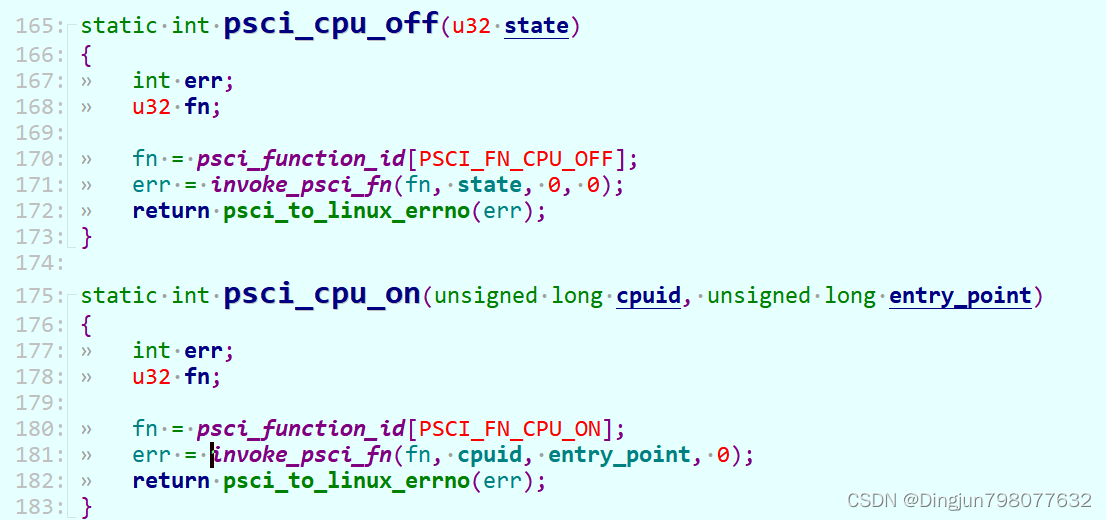

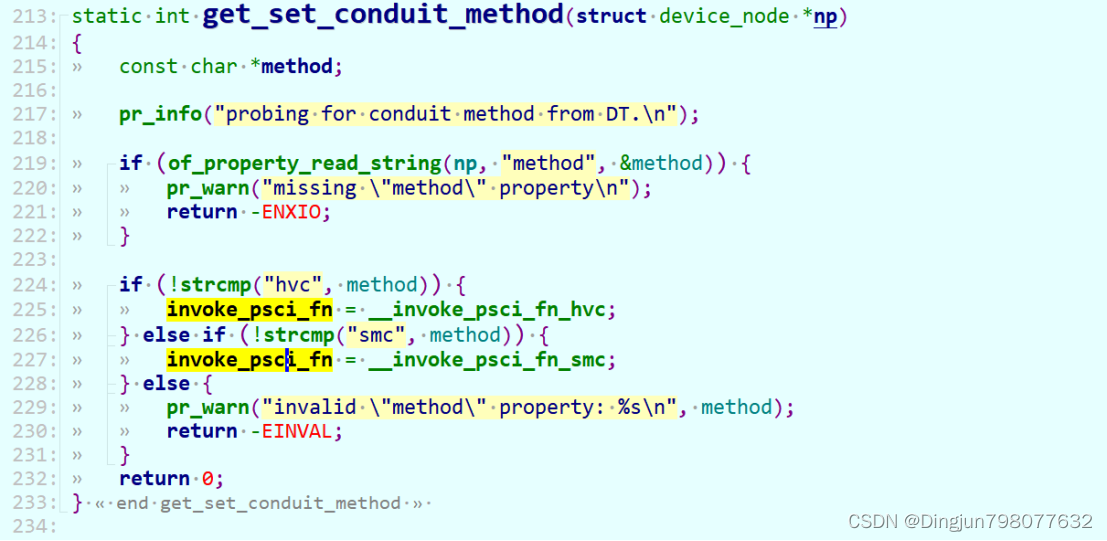

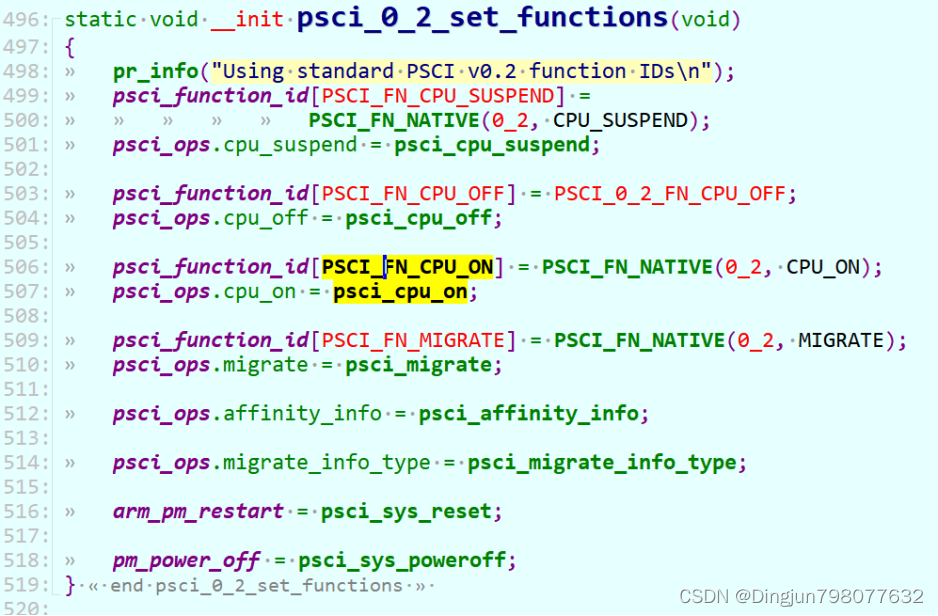

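With the PSCI boot method, when the primary CPU starts a secondary CPU it eventually calls the psci_cpu_on interface shown here. (Why the call ends up in psci_cpu_on is covered by many other articles and is skipped here to save space.)

Linux source file: drivers/firmware/psci.c

The code that sets invoke_psci_fn is as follows: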

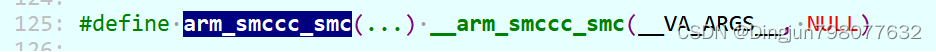

In this interface, invoke_psci_fn points to __invoke_psci_fn_smc, i.e. an SMC call. (Some SoCs may not implement EL3 and use HVC to switch to EL2 instead; that case is outside the scope of this post.) fn is the function_id of this call. The cpuid parameter identifies the CPU to start (typically the primary CPU has id 0 and the secondary CPUs 1, 2, 3, ...), and entry_point is the address at which the secondary CPU enters the Linux kernel.

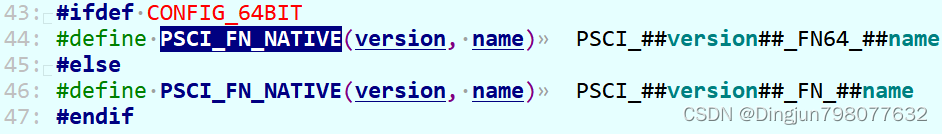

Taking PSCI 0.2 as an example, the function_id for PSCI_FN_CPU_ON is PSCI_0_2_FN64_CPU_ON, i.e. 0x84000000 + 0x40000000 + 0x03 = 0xC4000003. So the first argument fn of the SMC call in psci_cpu_on is 0xC4000003.
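The arithmetic above, and the way the resulting value splits into SMC function_id fields, can be checked with a small C sketch. The helper names psci_0_2_fn64 and decode_fid and the struct are assumptions made for this example; the field positions follow the FUNCID_* shift and width macros listed later in this post.

```c
#include <stdint.h>

#define PSCI_0_2_FN_BASE UINT32_C(0x84000000)  /* fast call, OEN 4 */
#define PSCI_0_2_64BIT   UINT32_C(0x40000000)  /* SMC64 calling convention bit */

/* Build the 64-bit PSCI 0.2 function_id for function number n. */
uint32_t psci_0_2_fn64(uint32_t n)
{
    return PSCI_0_2_FN_BASE + PSCI_0_2_64BIT + n;
}

/* Illustrative view of the function_id fields. */
typedef struct {
    unsigned type;  /* bit 31: 1 = fast call */
    unsigned cc;    /* bit 30: 1 = SMC64 */
    unsigned oen;   /* bits 29:24: owning entity number */
    unsigned num;   /* bits 15:0: function number */
} smc_fid_fields_t;

smc_fid_fields_t decode_fid(uint32_t fid)
{
    smc_fid_fields_t f;

    f.type = (fid >> 31) & 0x1u;
    f.cc   = (fid >> 30) & 0x1u;
    f.oen  = (fid >> 24) & 0x3fu;
    f.num  = fid & 0xffffu;
    return f;
}
```

Decoding 0xC4000003 this way gives a fast call, SMC64, OEN 4 and function number 3, which is what BL31 relies on when it routes the request.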

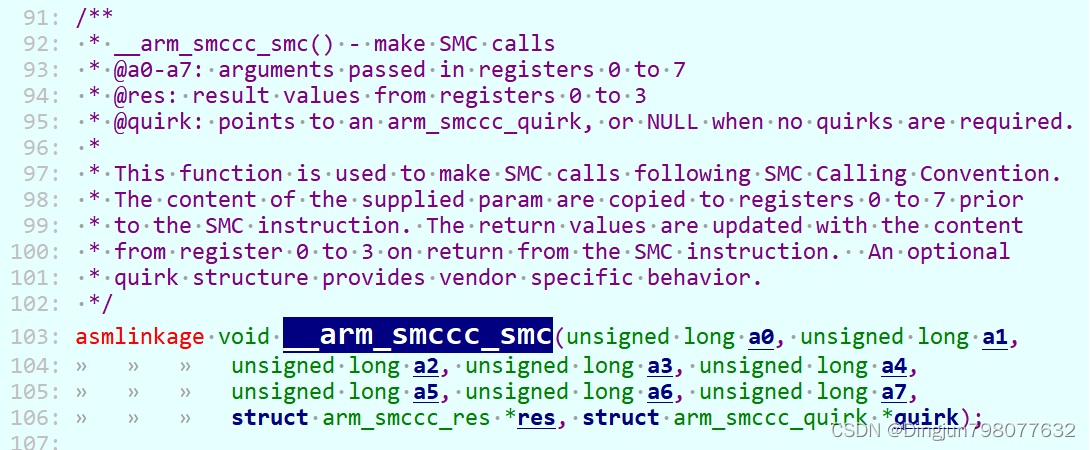

__invoke_psci_fn_smc is implemented as follows:

__invoke_psci_fn_smc ultimately traps into BL31 (EL3) through the assembly instruction smc #0, arriving at the vector_entry sync_exception_aarch64 discussed above. fn (the function_id), cpuid and entry_point are placed in the general purpose registers x0, x1 and x2 and passed to BL31 as arguments.

4. BL31 SMC service analysis

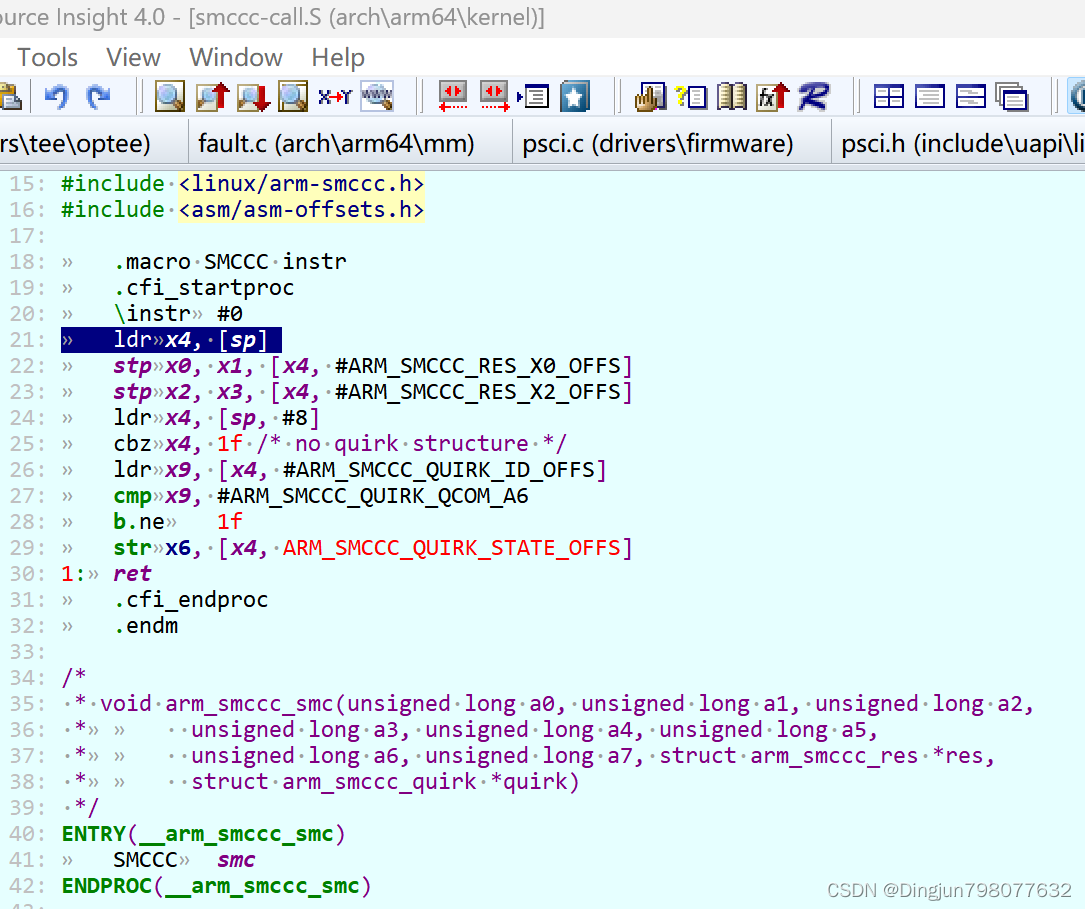

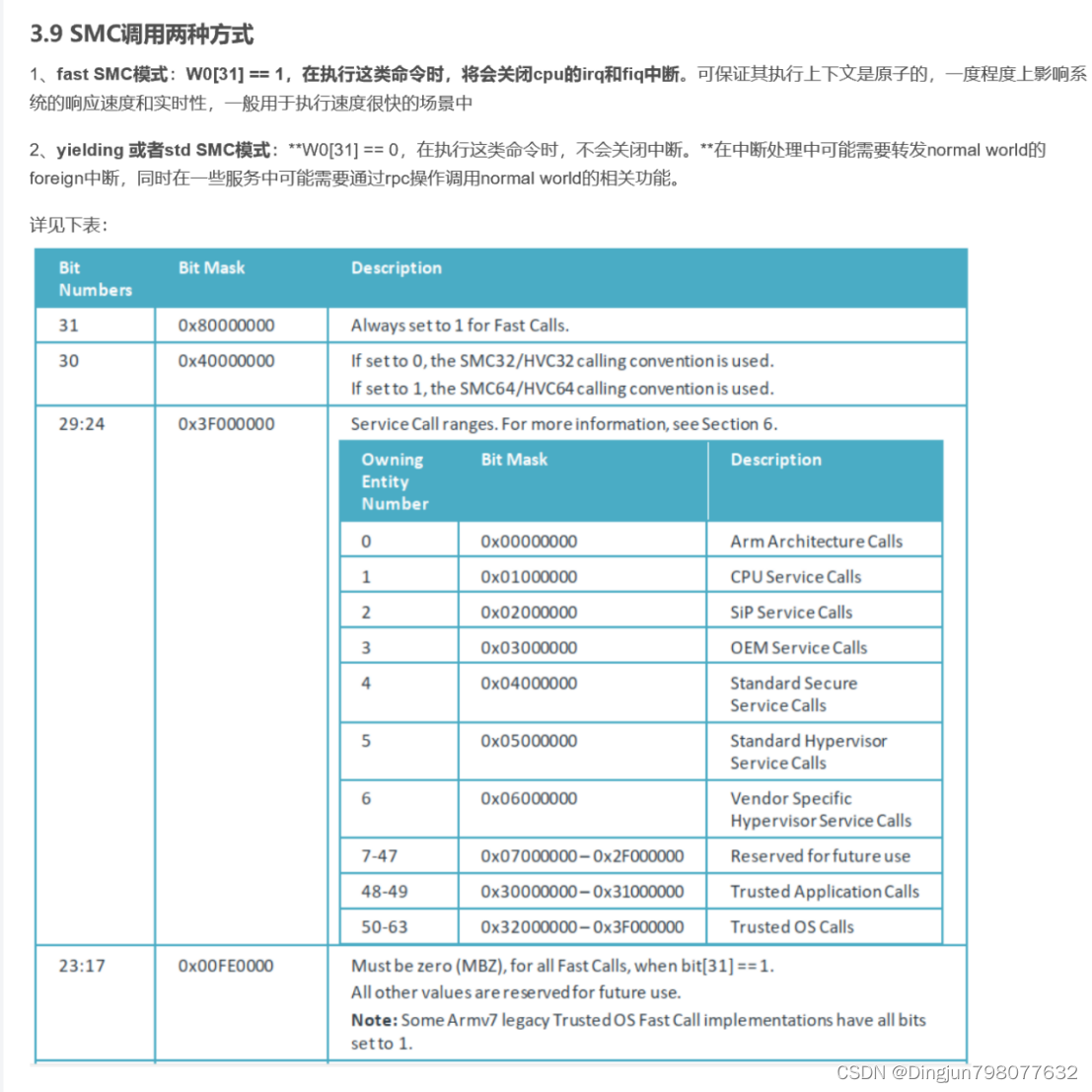

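The function_id of an SMC call follows a defined convention. The relevant definitions are shown in the figure; see also the article

"[Repost] A deep dive into SMC in ATF" (Hkcoco's blog on CSDN)

Here is the smc_handler64 code from above again, this time with the relevant macro definitions listed and comments added: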

#define FUNCID_TYPE_SHIFT U(31)

#define FUNCID_TYPE_MASK U(0x1)

#define FUNCID_TYPE_WIDTH U(1)

#define FUNCID_CC_SHIFT U(30)

#define FUNCID_CC_MASK U(0x1)

#define FUNCID_CC_WIDTH U(1)

#define FUNCID_OEN_SHIFT U(24)

#define FUNCID_OEN_MASK U(0x3f)

#define FUNCID_OEN_WIDTH U(6)

#define FUNCID_NUM_SHIFT U(0)

#define FUNCID_NUM_MASK U(0xffff)

#define FUNCID_NUM_WIDTH U(16)

......

/* Get the unique owning entity number */

// As analyzed above, x0 holds the function_id, 0xC4000003. This extracts its OEN into x16; the OEN is 4

ubfx x16, x0, #FUNCID_OEN_SHIFT, #FUNCID_OEN_WIDTH

// Extract the SMC call type; x15 is 1 (fast call)

ubfx x15, x0, #FUNCID_TYPE_SHIFT, #FUNCID_TYPE_WIDTH

// x16 = (x15 << 6) | x16: the OEN forms the low 6 bits and the type becomes bit 6, combined into a single index

orr x16, x16, x15, lsl #FUNCID_OEN_WIDTH

/* The code above is equivalent to Index = ((function_id >> 24) & 0x3f) | ((function_id >> 31) << 6).

The code below looks up the handler for the function_id:

Handler = *(__RT_SVC_DESCS_START__ + RT_SVC_DESC_HANDLE + (rt_svc_descs_indices[Index] << 5))

where __RT_SVC_DESCS_START__ is the start address of rt_svc_descs. */

/* Load descriptor index from array of indices */

adrp x14, rt_svc_descs_indices

add x14, x14, :lo12:rt_svc_descs_indices

ldrb w15, [x14, x16]

/* Any index greater than 127 is invalid. Check bit 7. */

tbnz w15, 7, smc_unknown

/*

* Get the descriptor using the index

* x11 = (base + off), w15 = index

*

* handler = (base + off) + (index << log2(size))

*/

adr x11, (__RT_SVC_DESCS_START__ + RT_SVC_DESC_HANDLE)

lsl w10, w15, #RT_SVC_SIZE_LOG2

ldr x15, [x11, w10, uxtw]

/*

* Call the Secure Monitor Call handler and then drop directly into

* el3_exit() which will program any remaining architectural state

* prior to issuing the ERET to the desired lower EL.

*/

#if DEBUG

cbz x15, rt_svc_fw_critical_error

#endif

blr x15

b el3_exit

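Based on the function_id held in x0, the code finds the matching runtime service. The lookup works like this:

Index = ((function_id >> 24) & 0x3f) | ((function_id >> 31) << 6);

Handler = *(__RT_SVC_DESCS_START__ + RT_SVC_DESC_HANDLE + (rt_svc_descs_indices[Index] << 5))

__RT_SVC_DESCS_START__ is the start address of rt_svc_descs, and RT_SVC_DESC_HANDLE is the offset of the service handler pointer handle inside the structure rt_svc_desc. The left shift by 5 is there because rt_svc_desc is 32 bytes in size. To understand how handle is found, the lookup has to be read together with the code that initializes rt_svc_descs_indices.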
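The lookup can be expressed in C roughly as below. This is a simplified model, not the ATF source: svc_desc_model_t keeps only a name and a handler pointer, the handler takes just the function_id, and demo_std_handler is a hypothetical stand-in for a real service. Array indexing in C performs the same base + (index << log2(size)) address computation that the assembly does by hand.

```c
#include <stdint.h>

#define MAX_RT_SVCS 128u
#define SMC_UNK     ((uintptr_t)-1)

/* Simplified stand-in for rt_svc_desc_t: only what this sketch needs. */
typedef uintptr_t (*svc_handle_t)(uint32_t smc_fid);

typedef struct {
    const char  *name;
    svc_handle_t handle;
} svc_desc_model_t;

/* OEN (bits 29:24) in the low 6 bits, call type (bit 31) as bit 6. */
unsigned fid_to_index(uint32_t fid)
{
    return ((fid >> 24) & 0x3fu) | (((fid >> 31) & 0x1u) << 6);
}

/* Hypothetical handler for the demo: just returns the function number. */
uintptr_t demo_std_handler(uint32_t smc_fid)
{
    return smc_fid & 0xffffu;
}

/* Load the descriptor index, reject it if bit 7 is set, else call handle. */
uintptr_t dispatch_smc(const svc_desc_model_t *descs,
                       const uint8_t indices[MAX_RT_SVCS], uint32_t fid)
{
    uint8_t desc_idx = indices[fid_to_index(fid)];

    if ((desc_idx & 0x80u) != 0u)
        return SMC_UNK;             /* corresponds to smc_unknown */
    return descs[desc_idx].handle(fid);
}
```

For function_id 0xC4000003 the index is 4 | (1 << 6) = 68, so the service registered for slot 68 receives the call. Any slot still holding 0xff has bit 7 set and yields SMC_UNK.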

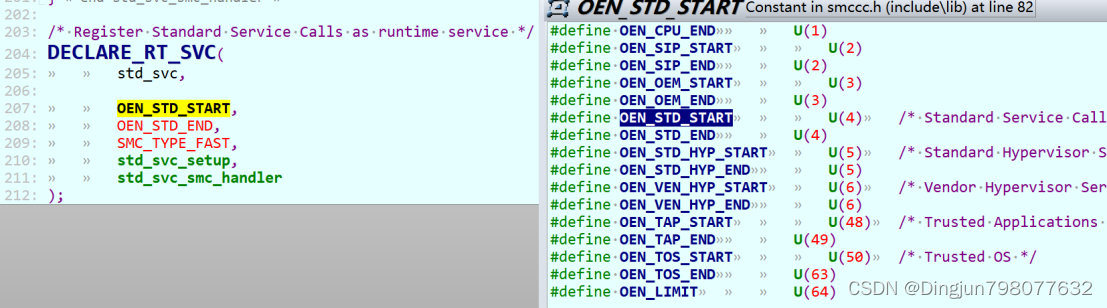

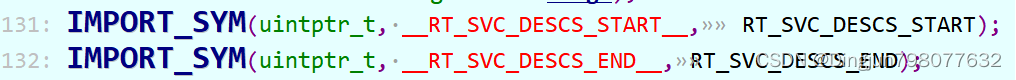

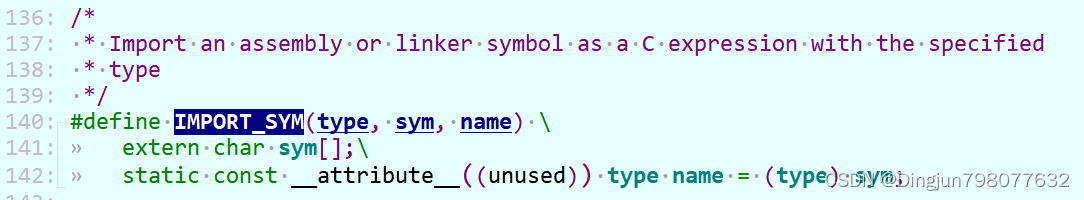

rt_svc_descs_indices is initialized in common/runtime_svc.c:

void __init runtime_svc_init(void)

{

int rc = 0;

uint8_t index, start_idx, end_idx;

rt_svc_desc_t *rt_svc_descs;

... ...

/* Initialise internal variables to invalid state */

(void)memset(rt_svc_descs_indices, -1, sizeof(rt_svc_descs_indices));

rt_svc_descs = (rt_svc_desc_t *) RT_SVC_DESCS_START;

for (index = 0U; index < RT_SVC_DECS_NUM; index++) {

rt_svc_desc_t *service = &rt_svc_descs[index];

... ...

if (service->init != NULL) {

rc = service->init();

if (rc != 0) {

ERROR("Error initializing runtime service %s\n",

service->name);

continue;

}

}

/*

* Fill the indices corresponding to the start and end

* owning entity numbers with the index of the

* descriptor which will handle the SMCs for this owning

* entity range.

*/

start_idx = (uint8_t)get_unique_oen(service->start_oen,

service->call_type);

end_idx = (uint8_t)get_unique_oen(service->end_oen,

service->call_type);

assert(start_idx <= end_idx);

assert(end_idx < MAX_RT_SVCS);

for (; start_idx <= end_idx; start_idx++)

rt_svc_descs_indices[start_idx] = index;

}

}

RT_SVC_DESCS_START above is the start address of __section("rt_svc_descs"), the section that holds all the rt_svc_desc_t structures.
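The index-filling loop of runtime_svc_init can be modeled with the following C sketch. The type svc_range_model_t and the function names are invented for the example, and the descriptor is reduced to the three fields that the loop reads. It assumes call_type 1 means a fast call, in line with the type bit of the function_id.

```c
#include <stdint.h>
#include <string.h>

#define MAX_RT_SVCS 128u

/* Reduced descriptor: the OEN range and call type of one service. */
typedef struct {
    uint8_t start_oen;
    uint8_t end_oen;
    uint8_t call_type;  /* assumed: 1 = fast call, 0 = yielding call */
} svc_range_model_t;

/* Same combination as in the SMC handler: type becomes bit 6. */
unsigned unique_oen_model(unsigned oen, unsigned call_type)
{
    return (oen & 0x3fu) | ((call_type & 0x1u) << 6);
}

/* Mark every slot invalid, then record which descriptor owns which slots. */
void fill_indices(uint8_t indices[MAX_RT_SVCS],
                  const svc_range_model_t *svcs, uint8_t count)
{
    memset(indices, 0xff, MAX_RT_SVCS);

    for (uint8_t i = 0; i < count; i++) {
        unsigned start = unique_oen_model(svcs[i].start_oen, svcs[i].call_type);
        unsigned end   = unique_oen_model(svcs[i].end_oen, svcs[i].call_type);

        for (unsigned j = start; j <= end && j < MAX_RT_SVCS; j++)
            indices[j] = i;
    }
}
```

With a second descriptor covering OEN 4 to 4 as a fast call, slot 68 ends up holding 1, which is exactly the slot that function_id 0xC4000003 selects.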

As can be seen, all fast SMCs with OEN 4 are handled by std_svc_smc_handler.

static uintptr_t std_svc_smc_handler(uint32_t smc_fid,

u_register_t x1,

u_register_t x2,

u_register_t x3,

u_register_t x4,

void *cookie,

void *handle,

u_register_t flags)

{

if (((smc_fid >> FUNCID_CC_SHIFT) & FUNCID_CC_MASK) == SMC_32) {

/* 32-bit SMC function, clear top parameter bits */

x1 &= UINT32_MAX;

x2 &= UINT32_MAX;

x3 &= UINT32_MAX;

x4 &= UINT32_MAX;

}

/*

* Dispatch PSCI calls to PSCI SMC handler and return its return

* value

*/

if (is_psci_fid(smc_fid)) {

uint64_t ret;

#if ENABLE_RUNTIME_INSTRUMENTATION

/*

* Flush cache line so that even if CPU power down happens

* the timestamp update is reflected in memory.

*/

PMF_WRITE_TIMESTAMP(rt_instr_svc,

RT_INSTR_ENTER_PSCI,

PMF_CACHE_MAINT,

get_cpu_data(cpu_data_pmf_ts[CPU_DATA_PMF_TS0_IDX]));

#endif

ret = psci_smc_handler(smc_fid, x1, x2, x3, x4,

cookie, handle, flags);

#if ENABLE_RUNTIME_INSTRUMENTATION

PMF_CAPTURE_TIMESTAMP(rt_instr_svc,

RT_INSTR_EXIT_PSCI,

PMF_NO_CACHE_MAINT);

#endif

SMC_RET1(handle, ret);

}

... ...

}

std_svc_smc_handler forwards PSCI calls to psci_smc_handler:

u_register_t psci_smc_handler(uint32_t smc_fid,

u_register_t x1,

u_register_t x2,

u_register_t x3,

u_register_t x4,

void *cookie,

void *handle,

u_register_t flags)

{

u_register_t ret;

if (is_caller_secure(flags))

return (u_register_t)SMC_UNK;

/* Check the fid against the capabilities */

if ((psci_caps & define_psci_cap(smc_fid)) == 0U)

return (u_register_t)SMC_UNK;

if (((smc_fid >> FUNCID_CC_SHIFT) & FUNCID_CC_MASK) == SMC_32) {

/* 32-bit PSCI function, clear top parameter bits */

... ...

} else {

/* 64-bit PSCI function */

switch (smc_fid) {

case PSCI_CPU_SUSPEND_AARCH64:

ret = (u_register_t)

psci_cpu_suspend((unsigned int)x1, x2, x3);

break;

case PSCI_CPU_ON_AARCH64:

ret = (u_register_t)psci_cpu_on(x1, x2, x3);

break;

case PSCI_AFFINITY_INFO_AARCH64:

ret = (u_register_t)

psci_affinity_info(x1, (unsigned int)x2);

break;

case PSCI_MIG_AARCH64:

ret = (u_register_t)psci_migrate(x1);

break;

case PSCI_MIG_INFO_UP_CPU_AARCH64:

ret = psci_migrate_info_up_cpu();

break;

case PSCI_NODE_HW_STATE_AARCH64:

ret = (u_register_t)psci_node_hw_state(

x1, (unsigned int) x2);

break;

case PSCI_SYSTEM_SUSPEND_AARCH64:

ret = (u_register_t)psci_system_suspend(x1, x2);

break;

#if ENABLE_PSCI_STAT

case PSCI_STAT_RESIDENCY_AARCH64:

ret = psci_stat_residency(x1, (unsigned int) x2);

break;

case PSCI_STAT_COUNT_AARCH64:

ret = psci_stat_count(x1, (unsigned int) x2);

break;

#endif

case PSCI_MEM_CHK_RANGE_AARCH64:

ret = psci_mem_chk_range(x1, x2);

break;

case PSCI_SYSTEM_RESET2_AARCH64:

/* We should never return from psci_system_reset2() */

ret = psci_system_reset2((uint32_t) x1, x2);

break;

default:

WARN("Unimplemented PSCI Call: 0x%x\n", smc_fid);

ret = (u_register_t)SMC_UNK;

break;

}

}

return ret;

}

For a CPU_ON request issued during a PSCI boot, execution clearly takes this branch:

case PSCI_CPU_ON_AARCH64:

ret = (u_register_t)psci_cpu_on(x1, x2, x3);

int psci_cpu_on(u_register_t target_cpu,

uintptr_t entrypoint,

u_register_t context_id)

{

int rc;

entry_point_info_t ep;

/* Determine if the cpu exists of not */

rc = psci_validate_mpidr(target_cpu);

if (rc != PSCI_E_SUCCESS)

return PSCI_E_INVALID_PARAMS;

/* Validate the entry point and get the entry_point_info */

rc = psci_validate_entry_point(&ep, entrypoint, context_id);

if (rc != PSCI_E_SUCCESS)

return rc;

/*

* To turn this cpu on, specify which power

* levels need to be turned on

*/

return psci_cpu_on_start(target_cpu, &ep);

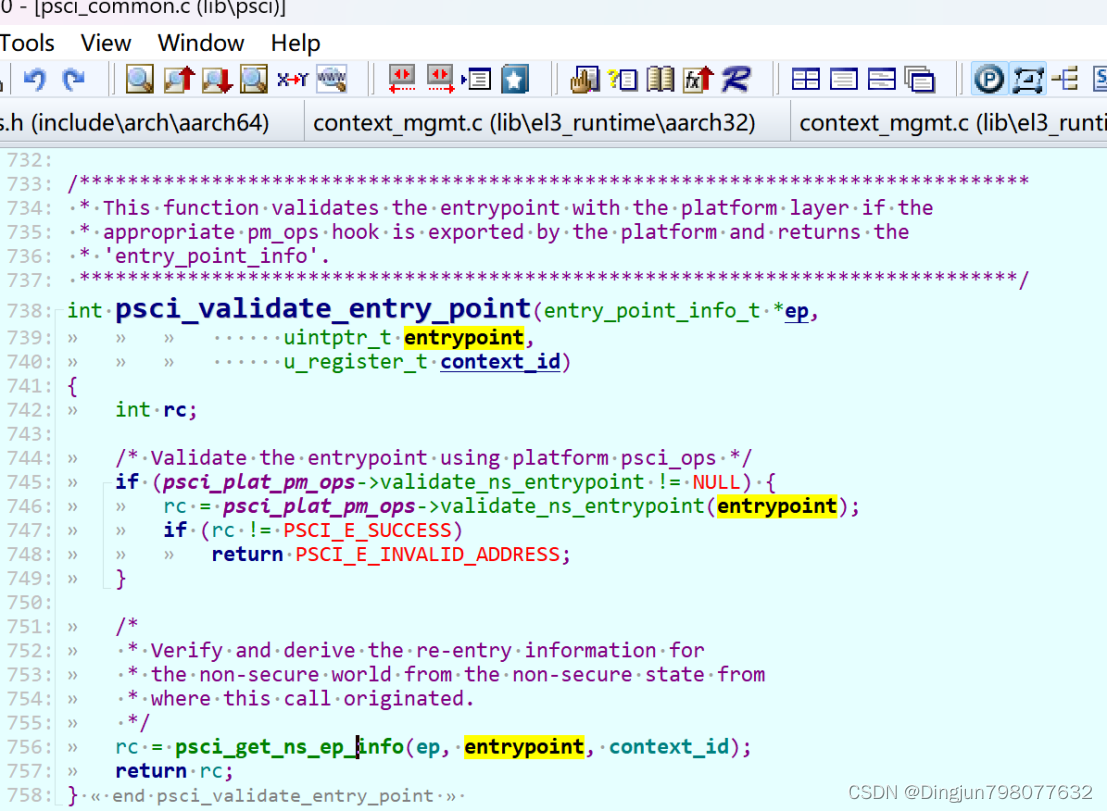

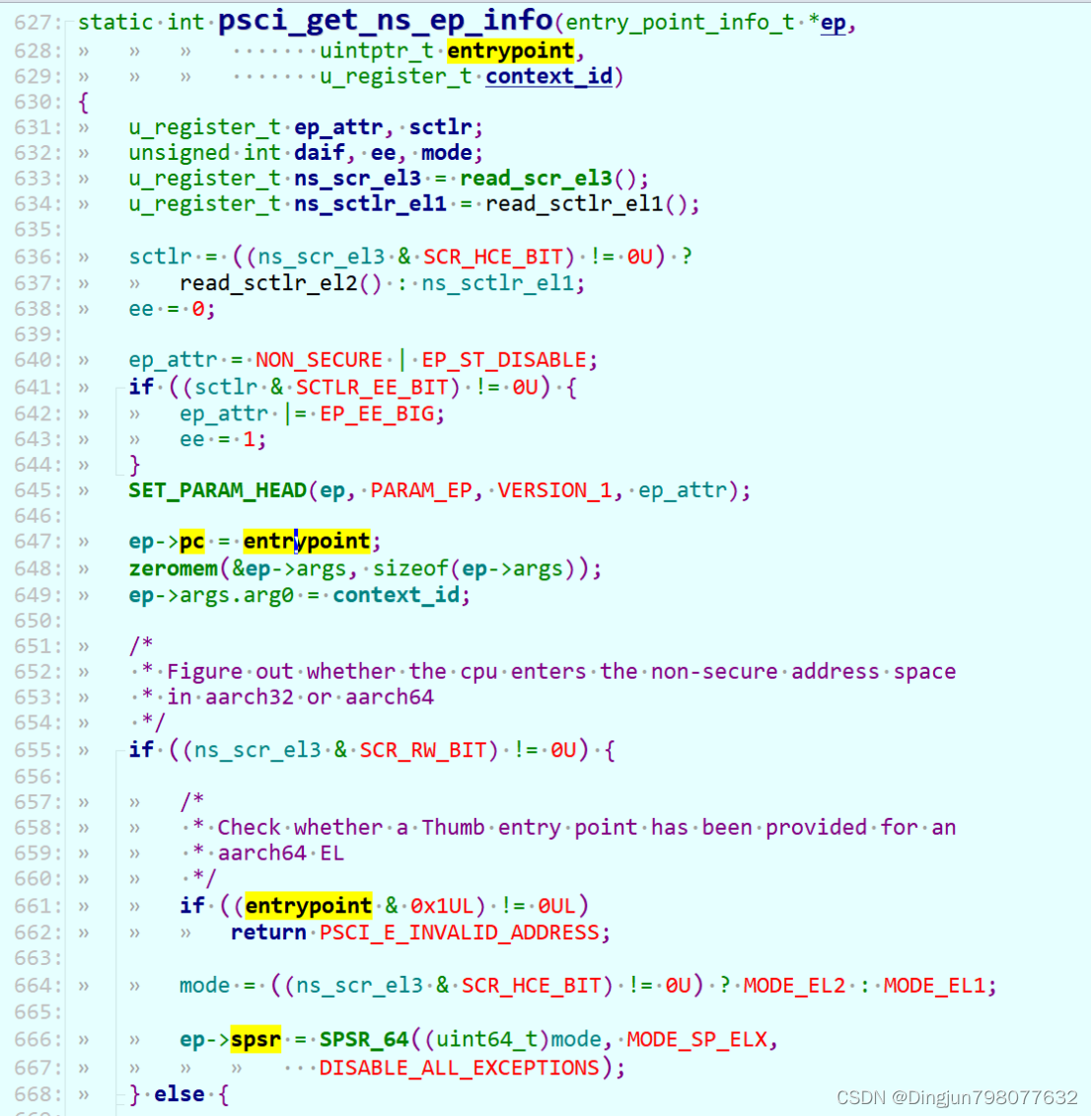

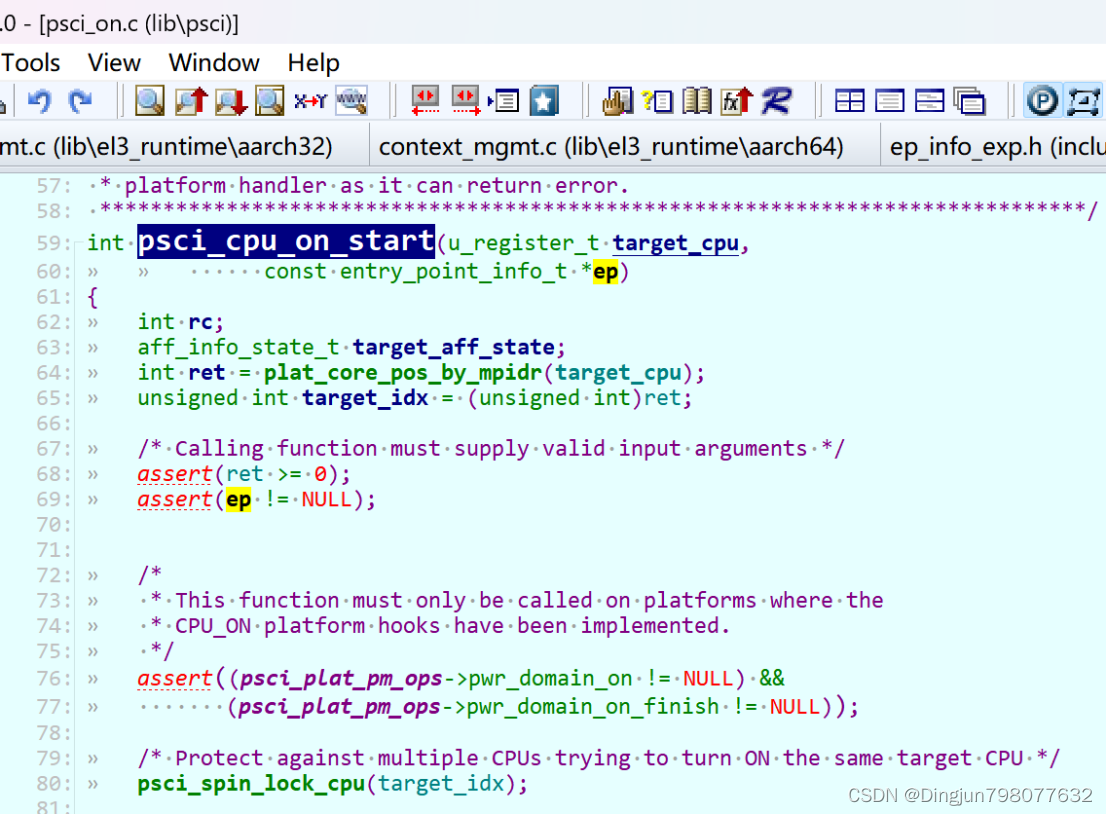

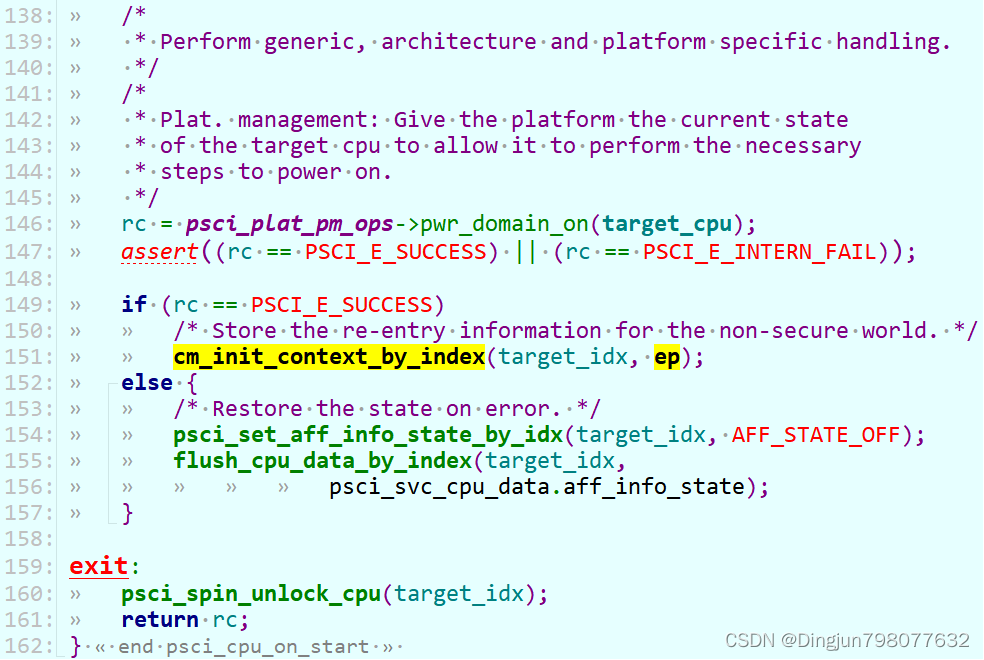

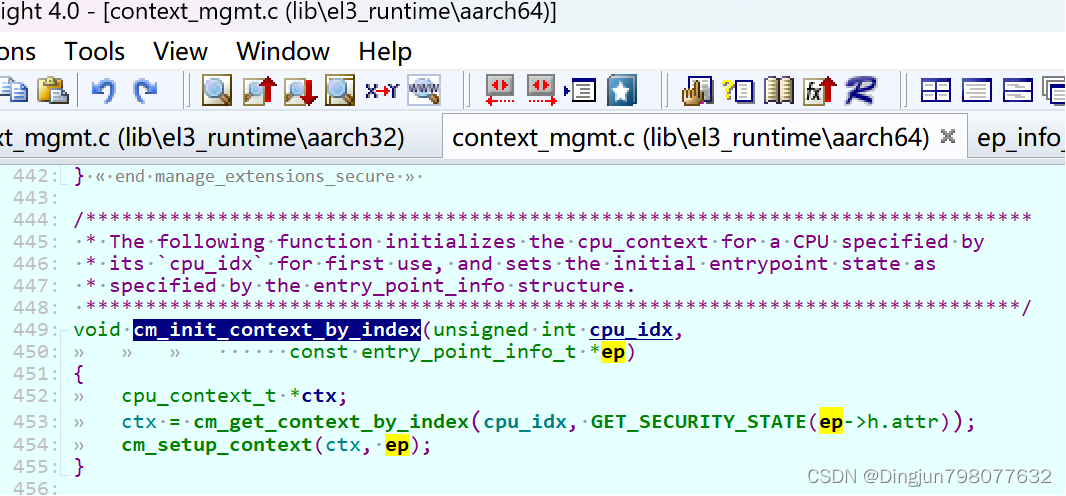

}接下来的流程大致如下

->psci_cpu_on() //lib/psci/psci_main.c

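->psci_validate_entry_point() // validates the entry address and stores the entry point in a structure ep

->psci_cpu_on_start(target_cpu, &ep) // ep holds the entry address

->psci_plat_pm_ops->pwr_domain_on(target_cpu)

->qemu_pwr_domain_on // powers the core on (platform implementation, here the QEMU one)

/* Store the re-entry information for the non-secure world. */

->cm_init_context_by_index() // Key point: finds the cpu context (cpu_context_t) by CPU index. It holds register values that are written back to the corresponding registers on exception return, after which eret returns to EL1.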

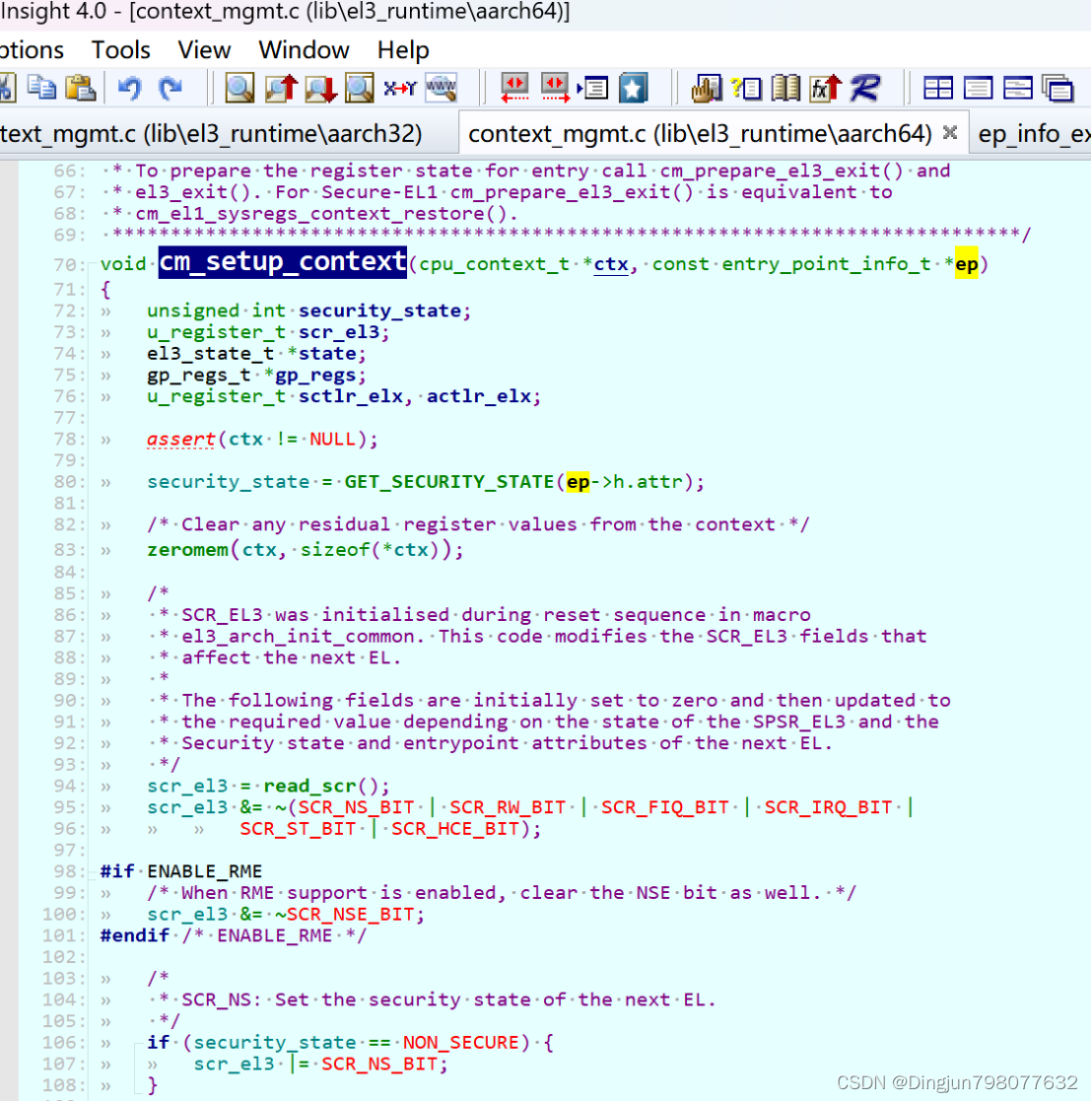

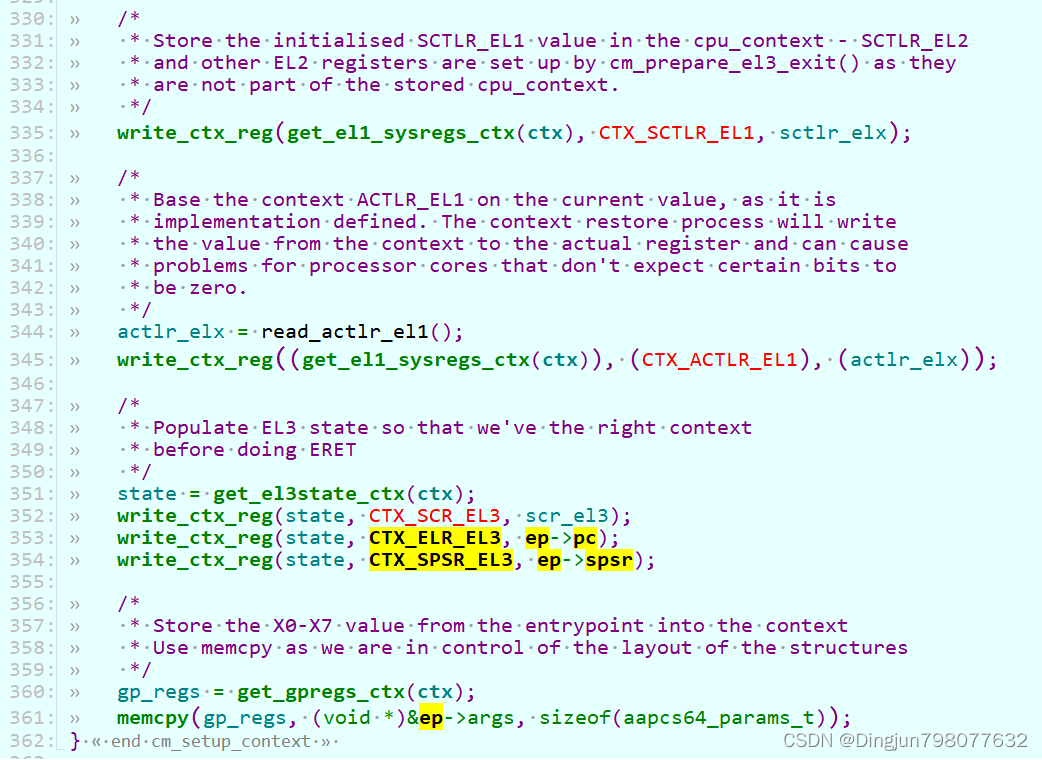

->cm_setup_context() // sets up the cpu context

-> write_ctx_reg(state, CTX_SCR_EL3, scr_el3); // lib/el3_runtime/aarch64/context_mgmt.c

write_ctx_reg(state, CTX_ELR_EL3, ep->pc); // Note: execution resumes at this address on exception return, which completes the CPU bring-up

write_ctx_reg(state, CTX_SPSR_EL3, ep->spsr);

psci_cpu_on calls psci_validate_entry_point and psci_cpu_on_start.

psci_validate_entry_point calls psci_get_ns_ep_info, which stores the entry_point that Linux passed in register x2 of the SMC call (the address at which the secondary CPU enters Linux) in ep->pc and also generates ep->spsr.
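As a rough illustration of what "generating ep->spsr" involves, the sketch below builds an AArch64 SPSR value for the non-secure entry point. It is a model under stated assumptions rather than the ATF code: ns_entry_model_t and make_ns_entry are hypothetical names, the choice between EL1 and EL2 is reduced to a flag supplied by the caller, and all DAIF exceptions are assumed to be masked on entry. The bit positions (mode in bits 3:2, stack pointer select in bit 0, DAIF in bits 9:6) come from the AArch64 SPSR layout.

```c
#include <stdint.h>

#define MODE_EL1        0x1u
#define MODE_EL2        0x2u
#define MODE_SP_ELX     0x1u  /* use SP_ELx rather than SP_EL0 */
#define DAIF_ALL_MASKED 0xfu  /* D, A, I and F all masked */

/* Hypothetical, reduced view of the entry point information. */
typedef struct {
    uint64_t pc;    /* where the target CPU starts in the normal world */
    uint32_t spsr;  /* processor state to restore on ERET */
    uint64_t arg0;  /* context_id, handed to the target CPU in x0 */
} ns_entry_model_t;

/* Pack exception level, stack pointer select and DAIF mask into an SPSR. */
uint32_t spsr_64(unsigned el, unsigned sp, unsigned daif)
{
    return (el << 2) | sp | (daif << 6);
}

ns_entry_model_t make_ns_entry(uint64_t entrypoint, uint64_t context_id,
                               int ns_uses_el2)
{
    ns_entry_model_t ep;

    ep.pc   = entrypoint;
    ep.arg0 = context_id;
    ep.spsr = spsr_64(ns_uses_el2 ? MODE_EL2 : MODE_EL1,
                      MODE_SP_ELX, DAIF_ALL_MASKED);
    return ep;
}
```

Under these assumptions an EL1 entry yields 0x3c5 (EL1h with interrupts masked) and an EL2 entry yields 0x3c9 (EL2h).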

把ep->pc、 ep->spsr保存到contex后,return后回到smc_handler64,接着调用el3_exit, el3_exit最终会调用eret回到el1,也就是Linxu内核中。

把ep->pc、 ep->spsr保存到contex后,return后回到smc_handler64,接着调用el3_exit, el3_exit最终会调用eret回到el1,也就是Linxu内核中。

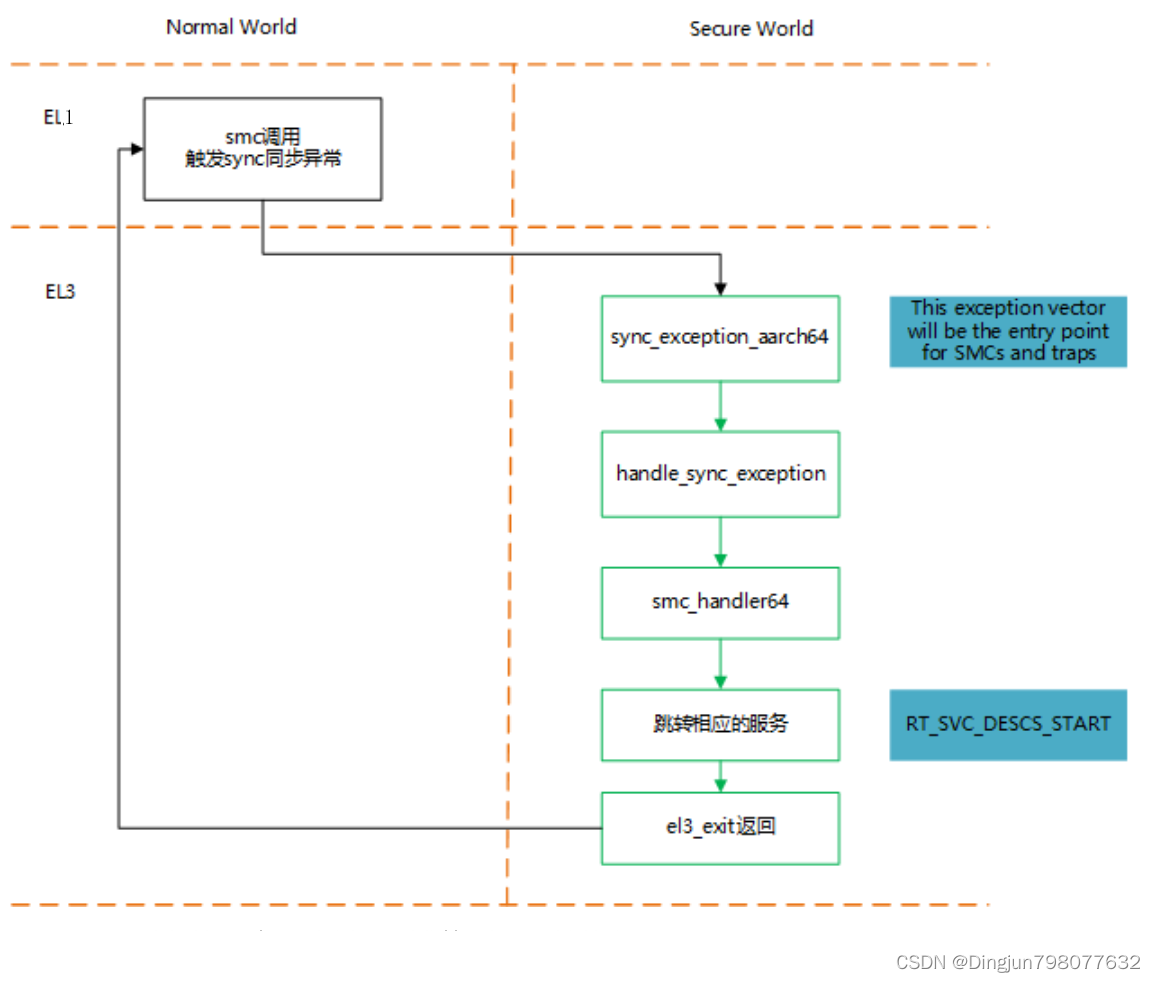

这里给出一张SMC调用的大致流程图如下:

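5. How the secondary CPU is handled during PSCI boot

It may look as if the flow above already completes the secondary CPU's startup, but it does not. Everything so far runs on the primary CPU: it enters EL3 through an SMC to request the core power-on service, ATF responds by powering on the secondary CPU through the platform's power-on operation and prepares some of the secondary CPU's register values, such as scr_el3, spsr_el3 and elr_el3. The primary CPU then restores its own state and executes eret to return to EL1 (the Linux kernel). In short, psci_cpu_on powers the core on and sets the register values to be used after the exception return (for example el1 -> el3 -> el1). The key point is that ep->pc is written to the CTX_ELR_EL3 offset of the target CPU's cpu_context structure, and the secondary CPU will fetch and execute from that address once it leaves EL3.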
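The handoff between the two CPUs can be pictured with a small single-threaded C model. It is only a sketch: the array ns_context stands in for the per-CPU cpu_context structures, and model_cpu_on / model_warm_boot_exit are hypothetical names for the primary CPU's CPU_ON path and the secondary CPU's exit from EL3. The value -2 mirrors the PSCI "invalid parameters" return code.

```c
#include <stdint.h>

#define MODEL_CPU_COUNT 4u
#define MODEL_E_SUCCESS 0
#define MODEL_E_INVALID_PARAMS (-2)

/* Reduced EL3 state kept per CPU for the normal world. */
typedef struct {
    uint64_t elr_el3;   /* address ERET will branch to */
    uint64_t spsr_el3;  /* processor state ERET will restore */
} el3_state_model_t;

static el3_state_model_t ns_context[MODEL_CPU_COUNT];

/* Runs on the primary CPU while it handles the CPU_ON SMC. */
int model_cpu_on(unsigned target, uint64_t pc, uint64_t spsr)
{
    if (target >= MODEL_CPU_COUNT)
        return MODEL_E_INVALID_PARAMS;

    ns_context[target].elr_el3  = pc;    /* like CTX_ELR_EL3 = ep->pc */
    ns_context[target].spsr_el3 = spsr;  /* like CTX_SPSR_EL3 = ep->spsr */
    return MODEL_E_SUCCESS;
}

/* Runs later on the secondary CPU: where its own ERET would land. */
uint64_t model_warm_boot_exit(unsigned self)
{
    return ns_context[self].elr_el3;
}
```

The point of the model is that the writer and the reader are different CPUs: the primary CPU fills in the slot for the target, and the target consumes it only when it reaches the end of its own warm boot path.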

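After being powered on, the secondary CPU starts executing at bl31_warm_entrypoint. This address is set up in plat_setup_psci_ops (each platform has its own boot address register, and writing it determines the address of the first instruction executed after power-on).

The code of bl31_warm_entrypoint is shown below. In el3_exit, the data saved earlier in the cpu_context structure is written to scr_el3, spsr_el3 and elr_el3, and finally the eret instruction takes the CPU into the Linux kernel.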

func bl31_warm_entrypoint

#if ENABLE_RUNTIME_INSTRUMENTATION

/*

* This timestamp update happens with cache off. The next

* timestamp collection will need to do cache maintenance prior

* to timestamp update.

*/

pmf_calc_timestamp_addr rt_instr_svc, RT_INSTR_EXIT_HW_LOW_PWR

mrs x1, cntpct_el0

str x1, [x0]

#endif

/*

* On the warm boot path, most of the EL3 initialisations performed by

* 'el3_entrypoint_common' must be skipped:

*

* - Only when the platform bypasses the BL1/BL31 entrypoint by

* programming the reset address do we need to initialise SCTLR_EL3.

* In other cases, we assume this has been taken care by the

* entrypoint code.

*

* - No need to determine the type of boot, we know it is a warm boot.

*

* - Do not try to distinguish between primary and secondary CPUs, this

* notion only exists for a cold boot.

*

* - No need to initialise the memory or the C runtime environment,

* it has been done once and for all on the cold boot path.

*/

el3_entrypoint_common \

_init_sctlr=PROGRAMMABLE_RESET_ADDRESS \

_warm_boot_mailbox=0 \

_secondary_cold_boot=0 \

_init_memory=0 \

_init_c_runtime=0 \

_exception_vectors=runtime_exceptions \

_pie_fixup_size=0

/*

* We're about to enable MMU and participate in PSCI state coordination.

*

* The PSCI implementation invokes platform routines that enable CPUs to

* participate in coherency. On a system where CPUs are not

* cache-coherent without appropriate platform specific programming,

* having caches enabled until such time might lead to coherency issues

* (resulting from stale data getting speculatively fetched, among

* others). Therefore we keep data caches disabled even after enabling

* the MMU for such platforms.

*

* On systems with hardware-assisted coherency, or on single cluster

* platforms, such platform specific programming is not required to

* enter coherency (as CPUs already are); and there's no reason to have

* caches disabled either.

*/

#if HW_ASSISTED_COHERENCY || WARMBOOT_ENABLE_DCACHE_EARLY

mov x0, xzr

#else

mov x0, #DISABLE_DCACHE

#endif

bl bl31_plat_enable_mmu

#if ENABLE_RME

/*

* At warm boot GPT data structures have already been initialized in RAM

* but the sysregs for this CPU need to be initialized. Note that the GPT

* accesses are controlled attributes in GPCCR and do not depend on the

* SCR_EL3.C bit.

*/

bl gpt_enable

cbz x0, 1f

no_ret plat_panic_handler

1:

#endif

#if ENABLE_PAUTH

/* --------------------------------------------------------------------

* Program APIAKey_EL1 and enable pointer authentication

* --------------------------------------------------------------------

*/

bl pauth_init_enable_el3

#endif /* ENABLE_PAUTH */

bl psci_warmboot_entrypoint

#if ENABLE_RUNTIME_INSTRUMENTATION

pmf_calc_timestamp_addr rt_instr_svc, RT_INSTR_EXIT_PSCI

mov x19, x0

/*

* Invalidate before updating timestamp to ensure previous timestamp

* updates on the same cache line with caches disabled are properly

* seen by the same core. Without the cache invalidate, the core might

* write into a stale cache line.

*/

mov x1, #PMF_TS_SIZE

mov x20, x30

bl inv_dcache_range

mov x30, x20

mrs x0, cntpct_el0

str x0, [x19]

#endif

b el3_exit

endfunc bl31_warm_entrypoint
