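Go gRPC: Server-Side Source Code Analysis

The previous article covered how gRPC is implemented. The next step is to read the source of a gRPC server and client. This article covers the server side, starting with a sample server.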

package main

import (

"context"

"fmt"

"log"

pb "mygrpc/pb"

"net"

"google.golang.org/grpc"

)

type Server struct {

}

// Implement the SayHello method of the Greets service

func (s *Server) SayHello(ctx context.Context, in *pb.HelloRequest) (*pb.HelloReply, error) {

log.Println(in.Name, in.Message)

return &pb.HelloReply{Name: "婷婷", Message: "不回来了"}, nil

}

func main() {

// Network type plus IP and port

lis, err := net.Listen("tcp", ":8002")

if err != nil {

fmt.Println(err)

return

}

// Create a gRPC server

server := grpc.NewServer()

// Register the service, i.e. register the SayHello implementation

pb.RegisterGreetsServer(server, &Server{})

// Register the reflection service

//reflection.Register(server)

// Start serving

err = server.Serve(lis)

if err != nil {

fmt.Println(err)

return

}

}

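The first step is the call shown below: it opens a TCP listener on port 8002. For TCP this amounts to socket -> bind -> listen, after which the server is ready to accept new connections.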

net.Listen("tcp", ":8002")

NewServer

Next comes

server := grpc.NewServer()

This one is also fairly simple. Here is the source:

// NewServer creates a gRPC server which has no service registered and has not

// started to accept requests yet.

func NewServer(opt ...ServerOption) *Server {

opts := defaultServerOptions

for _, o := range opt {

o.apply(&opts)

}

s := &Server{

lis: make(map[net.Listener]bool),

opts: opts,

conns: make(map[string]map[transport.ServerTransport]bool),

services: make(map[string]*serviceInfo),

quit: grpcsync.NewEvent(),

done: grpcsync.NewEvent(),

czData: new(channelzData),

}

chainUnaryServerInterceptors(s)

chainStreamServerInterceptors(s)

s.cv = sync.NewCond(&s.mu)

if EnableTracing {

_, file, line, _ := runtime.Caller(1)

s.events = trace.NewEventLog("grpc.Server", fmt.Sprintf("%s:%d", file, line))

}

if s.opts.numServerWorkers > 0 {

s.initServerWorkers()

}

if channelz.IsOn() {

s.channelzID = channelz.RegisterServer(&channelzServer{s}, "")

}

return s

}

When no extra options are passed, NewServer essentially just creates a Server struct. Let's look at that struct.

// Server is a gRPC server to serve RPC requests.

type Server struct {

opts serverOptions

mu sync.Mutex // guards following

lis map[net.Listener]bool

// conns contains all active server transports. It is a map keyed on a

// listener address with the value being the set of active transports

// belonging to that listener.

conns map[string]map[transport.ServerTransport]bool

serve bool

drain bool

cv *sync.Cond // signaled when connections close for GracefulStop

services map[string]*serviceInfo // service name -> service info

events trace.EventLog

quit *grpcsync.Event

done *grpcsync.Event

channelzRemoveOnce sync.Once

serveWG sync.WaitGroup // counts active Serve goroutines for GracefulStop

channelzID int64 // channelz unique identification number

czData *channelzData

serverWorkerChannels []chan *serverWorkerData

}

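The fields to note here are:

1. lis: its keys are the listeners the server is serving on (in this example, the TCP listener returned by net.Listen), not individual connections.

2. services: maps a service name to its serviceInfo, which is described as follows: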

// serviceInfo wraps information about a service. It is very similar to

// ServiceDesc and is constructed from it for internal purposes.

type serviceInfo struct {

// Contains the implementation for the methods in this service.

serviceImpl interface{}

methods map[string]*MethodDesc

streams map[string]*StreamDesc

mdata interface{}

}

MethodDesc and StreamDesc correspond to unary calls and streaming calls respectively. Since MethodDesc is the more commonly used one, let's look at its definition.

type methodHandler func(srv interface{}, ctx context.Context, dec func(interface{}) error, interceptor UnaryServerInterceptor) (interface{}, error)

// MethodDesc represents an RPC service's method specification.

type MethodDesc struct {

MethodName string

Handler methodHandler

}

// UnaryServerInfo consists of various information about a unary RPC on

// server side. All per-rpc information may be mutated by the interceptor.

type UnaryServerInfo struct {

// Server is the service implementation the user provides. This is read-only.

Server interface{}

// FullMethod is the full RPC method string, i.e., /package.service/method.

FullMethod string

}

// UnaryHandler defines the handler invoked by UnaryServerInterceptor to complete the normal

// execution of a unary RPC.

//

// If a UnaryHandler returns an error, it should either be produced by the

// status package, or be one of the context errors. Otherwise, gRPC will use

// codes.Unknown as the status code and err.Error() as the status message of the

// RPC.

type UnaryHandler func(ctx context.Context, req interface{}) (interface{}, error)

// UnaryServerInterceptor provides a hook to intercept the execution of a unary RPC on the server. info

// contains all the information of this RPC the interceptor can operate on. And handler is the wrapper

// of the service method implementation. It is the responsibility of the interceptor to invoke handler

// to complete the RPC.

type UnaryServerInterceptor func(ctx context.Context, req interface{}, info *UnaryServerInfo, handler UnaryHandler) (resp interface{}, err error)

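As you can see, several function types are declared here (methodHandler, UnaryHandler, UnaryServerInterceptor). They play a role similar to interfaces: any function with a matching signature can be plugged in.

Next, look at

pb.RegisterGreetsServer(server, &Server{})

Here server is the one created by grpc.NewServer() above, and &Server{} is the value that implements SayHello and therefore satisfies the GreetsServer interface. Next comes RegisterGreetsServer.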

RegisterGreetsServer

This is the function that registers the implementation with the server. Its implementation:

func RegisterGreetsServer(s *grpc.Server, srv GreetsServer) {

s.RegisterService(&_Greets_serviceDesc, srv)

}

It simply calls the server's RegisterService method with _Greets_serviceDesc and srv. srv is the implementation passed in from outside; the interesting part is the _Greets_serviceDesc variable:

var _Greets_serviceDesc = grpc.ServiceDesc{

ServiceName: "proto.Greets",

HandlerType: (*GreetsServer)(nil),

Methods: []grpc.MethodDesc{

{

MethodName: "SayHello",

Handler: _Greets_SayHello_Handler,

},

},

Streams: []grpc.StreamDesc{},

Metadata: "helloworld.proto",

}

Now look at _Greets_SayHello_Handler. When no interceptor is configured, it ends up calling srv.(GreetsServer).SayHello directly.

func _Greets_SayHello_Handler(srv interface{}, ctx context.Context, dec func(interface{}) error, interceptor grpc.UnaryServerInterceptor) (interface{}, error) {

in := new(HelloRequest)

if err := dec(in); err != nil {

return nil, err

}

if interceptor == nil {

return srv.(GreetsServer).SayHello(ctx, in)

}

info := &grpc.UnaryServerInfo{

Server: srv,

FullMethod: "/proto.Greets/SayHello",

}

handler := func(ctx context.Context, req interface{}) (interface{}, error) {

return srv.(GreetsServer).SayHello(ctx, req.(*HelloRequest))

}

return interceptor(ctx, in, info, handler)

}

RegisterService

// RegisterService registers a service and its implementation to the gRPC

// server. It is called from the IDL generated code. This must be called before

// invoking Serve. If ss is non-nil (for legacy code), its type is checked to

// ensure it implements sd.HandlerType.

func (s *Server) RegisterService(sd *ServiceDesc, ss interface{}) {

if ss != nil {

ht := reflect.TypeOf(sd.HandlerType).Elem()

st := reflect.TypeOf(ss)

if !st.Implements(ht) {

logger.Fatalf("grpc: Server.RegisterService found the handler of type %v that does not satisfy %v", st, ht)

}

}

s.register(sd, ss)

}

RegisterService uses reflection to check that ss implements the interface described by sd.HandlerType, and then calls register.
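The reflection check relies on passing a nil pointer to the interface type, as in HandlerType: (*GreetsServer)(nil). A minimal sketch of the same check using only the reflect package follows; Greeter, goodImpl, badImpl and implements are hypothetical names chosen for the illustration.

```go
package main

import (
	"fmt"
	"reflect"
)

// Greeter plays the role of the generated GreetsServer interface.
type Greeter interface {
	SayHello(name string) string
}

// goodImpl satisfies Greeter through a pointer receiver.
type goodImpl struct{}

func (*goodImpl) SayHello(name string) string { return "hello " + name }

// badImpl has no methods and therefore does not satisfy Greeter.
type badImpl struct{}

// implements reports whether impl satisfies the interface that handlerType
// points to. handlerType is a nil pointer to the interface type, for example
// (*Greeter)(nil); Elem() turns *Greeter into the interface type Greeter.
func implements(handlerType, impl interface{}) bool {
	ht := reflect.TypeOf(handlerType).Elem()
	st := reflect.TypeOf(impl)
	return st.Implements(ht)
}

func main() {
	fmt.Println(implements((*Greeter)(nil), &goodImpl{}))
	fmt.Println(implements((*Greeter)(nil), &badImpl{}))
}
```

A nil interface value carries no type information, which is why the descriptor stores a typed nil pointer rather than a nil GreetsServer.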

register

func (s *Server) register(sd *ServiceDesc, ss interface{}) {

s.mu.Lock()

defer s.mu.Unlock()

s.printf("RegisterService(%q)", sd.ServiceName)

if s.serve {

logger.Fatalf("grpc: Server.RegisterService after Server.Serve for %q", sd.ServiceName)

}

if _, ok := s.services[sd.ServiceName]; ok {

logger.Fatalf("grpc: Server.RegisterService found duplicate service registration for %q", sd.ServiceName)

}

info := &serviceInfo{

serviceImpl: ss,

methods: make(map[string]*MethodDesc),

streams: make(map[string]*StreamDesc),

mdata: sd.Metadata,

}

for i := range sd.Methods {

d := &sd.Methods[i]

info.methods[d.MethodName] = d

}

for i := range sd.Streams {

d := &sd.Streams[i]

info.streams[d.StreamName] = d

}

s.services[sd.ServiceName] = info

}

This mainly builds a serviceInfo struct and stores it in the Server's services map, keyed by the service name, which here is proto.Greets.
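To make the two-level map concrete, here is a small sketch of a registry keyed by service name and then by method name. The lookup step, which splits a path such as /proto.Greets/SayHello, is an assumption about how the map is consulted later; that code is not shown in this article. All type and function names below are hypothetical.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

// handler is a simplified stand-in for a method handler.
type handler func(req string) string

// serviceInfo holds the methods of one service, keyed by method name.
type serviceInfo struct {
	methods map[string]handler
}

// registry maps a service name to its serviceInfo.
type registry struct {
	services map[string]*serviceInfo
}

// register stores a service and rejects duplicate names.
func (r *registry) register(service string, methods map[string]handler) error {
	if _, ok := r.services[service]; ok {
		return fmt.Errorf("duplicate service registration for %q", service)
	}
	r.services[service] = &serviceInfo{methods: methods}
	return nil
}

// lookup splits "/service/method" at the last slash and walks both maps.
func (r *registry) lookup(fullMethod string) (handler, error) {
	name := strings.TrimPrefix(fullMethod, "/")
	pos := strings.LastIndex(name, "/")
	if pos == -1 {
		return nil, errors.New("malformed method name")
	}
	svc, ok := r.services[name[:pos]]
	if !ok {
		return nil, fmt.Errorf("unknown service %q", name[:pos])
	}
	h, ok := svc.methods[name[pos+1:]]
	if !ok {
		return nil, fmt.Errorf("unknown method %q", name[pos+1:])
	}
	return h, nil
}

func main() {
	r := &registry{services: make(map[string]*serviceInfo)}
	_ = r.register("proto.Greets", map[string]handler{
		"SayHello": func(req string) string { return "hello " + req },
	})
	h, err := r.lookup("/proto.Greets/SayHello")
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println(h("gopher"))
}
```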

server.Serve

// Serve accepts incoming connections on the listener lis, creating a new

// ServerTransport and service goroutine for each. The service goroutines

// read gRPC requests and then call the registered handlers to reply to them.

// Serve returns when lis.Accept fails with fatal errors. lis will be closed when

// this method returns.

// Serve will return a non-nil error unless Stop or GracefulStop is called.

func (s *Server) Serve(lis net.Listener) error {

s.mu.Lock()

s.printf("serving")

s.serve = true

if s.lis == nil {

// Serve called after Stop or GracefulStop.

s.mu.Unlock()

lis.Close()

return ErrServerStopped

}

s.serveWG.Add(1)

defer func() {

s.serveWG.Done()

if s.quit.HasFired() {

// Stop or GracefulStop called; block until done and return nil.

<-s.done.Done()

}

}()

ls := &listenSocket{Listener: lis}

s.lis[ls] = true

if channelz.IsOn() {

ls.channelzID = channelz.RegisterListenSocket(ls, s.channelzID, lis.Addr().String())

}

s.mu.Unlock()

defer func() {

s.mu.Lock()

if s.lis != nil && s.lis[ls] {

ls.Close()

delete(s.lis, ls)

}

s.mu.Unlock()

}()

var tempDelay time.Duration // how long to sleep on accept failure

for {

// Accept a new connection

rawConn, err := lis.Accept()

// Error handling for Accept omitted

tempDelay = 0

// Start a new goroutine to deal with rawConn so we don't stall this Accept

// loop goroutine.

//

// Make sure we account for the goroutine so GracefulStop doesn't nil out

// s.conns before this conn can be added.

s.serveWG.Add(1)

go func() {

// Handle the new connection

s.handleRawConn(lis.Addr().String(), rawConn)

s.serveWG.Done()

}()

}

}
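The core shape of Serve, an accept loop that hands every connection to its own goroutine and tracks those goroutines with a WaitGroup, can be sketched with the standard library alone. This is a simplified illustration rather than grpc's implementation: serve and echoOnce are hypothetical helpers, and the handler just echoes one line.

```go
package main

import (
	"bufio"
	"fmt"
	"net"
	"sync"
)

// serve accepts connections until the listener is closed. Each connection is
// handled in its own goroutine so a slow handler never stalls the accept loop.
func serve(lis net.Listener, handle func(net.Conn)) {
	var wg sync.WaitGroup
	for {
		conn, err := lis.Accept()
		if err != nil {
			break // listener closed or fatal accept error
		}
		wg.Add(1)
		go func() {
			defer wg.Done()
			defer conn.Close()
			handle(conn)
		}()
	}
	wg.Wait() // wait for in-flight handlers before returning
}

// echoOnce starts a server on an ephemeral loopback port, sends one line,
// and returns the echoed reply.
func echoOnce(msg string) (string, error) {
	lis, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		return "", err
	}
	done := make(chan struct{})
	go func() {
		defer close(done)
		serve(lis, func(c net.Conn) {
			line, _ := bufio.NewReader(c).ReadString('\n')
			fmt.Fprint(c, line)
		})
	}()
	conn, err := net.Dial("tcp", lis.Addr().String())
	if err != nil {
		lis.Close()
		<-done
		return "", err
	}
	fmt.Fprintln(conn, msg)
	reply, err := bufio.NewReader(conn).ReadString('\n')
	conn.Close()
	lis.Close() // makes Accept fail, which ends the loop in serve
	<-done
	return reply, err
}

func main() {
	reply, err := echoOnce("ping")
	fmt.Printf("%q %v\n", reply, err)
}
```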

After a new connection is accepted, Serve starts a goroutine to handle it; the work is done by handleRawConn:

// handleRawConn forks a goroutine to handle a just-accepted connection that

// has not had any I/O performed on it yet.

func (s *Server) handleRawConn(lisAddr string, rawConn net.Conn) {

if s.quit.HasFired() {

rawConn.Close()

return

}

rawConn.SetDeadline(time.Now().Add(s.opts.connectionTimeout))

// Finish handshaking (HTTP2)

// Create the server transport st

st := s.newHTTP2Transport(rawConn)

rawConn.SetDeadline(time.Time{})

if st == nil {

return

}

if !s.addConn(lisAddr, st) {

return

}

go func() {

// Serve the streams on this transport

s.serveStreams(st)

s.removeConn(lisAddr, st)

}()

}

First, look at how the HTTP/2 transport is created, i.e. the newHTTP2Transport method.

newHTTP2Transport

// newHTTP2Transport sets up a http/2 transport (using the

// gRPC http2 server transport in transport/http2_server.go).

func (s *Server) newHTTP2Transport(c net.Conn) transport.ServerTransport {

config := &transport.ServerConfig{

MaxStreams: s.opts.maxConcurrentStreams,

ConnectionTimeout: s.opts.connectionTimeout,

Credentials: s.opts.creds,

InTapHandle: s.opts.inTapHandle,

StatsHandler: s.opts.statsHandler,

KeepaliveParams: s.opts.keepaliveParams,

KeepalivePolicy: s.opts.keepalivePolicy,

InitialWindowSize: s.opts.initialWindowSize,

InitialConnWindowSize: s.opts.initialConnWindowSize,

WriteBufferSize: s.opts.writeBufferSize,

ReadBufferSize: s.opts.readBufferSize,

ChannelzParentID: s.channelzID,

MaxHeaderListSize: s.opts.maxHeaderListSize,

HeaderTableSize: s.opts.headerTableSize,

}

st, err := transport.NewServerTransport(c, config)

// Error handling omitted

return st

}

As you can see, this ends by calling transport.NewServerTransport:

// NewServerTransport creates a http2 transport with conn and configuration

// options from config.

//

// It returns a non-nil transport and a nil error on success. On failure, it

// returns a nil transport and a non-nil error. For a special case where the

// underlying conn gets closed before the client preface could be read, it

// returns a nil transport and a nil error.

func NewServerTransport(conn net.Conn, config *ServerConfig) (_ ServerTransport, err error) {

var authInfo credentials.AuthInfo

rawConn := conn

// Credentials handling (if configured) is omitted here

writeBufSize := config.WriteBufferSize

readBufSize := config.ReadBufferSize

maxHeaderListSize := defaultServerMaxHeaderListSize

if config.MaxHeaderListSize != nil {

maxHeaderListSize = *config.MaxHeaderListSize

}

// Create the framer used to send and receive HTTP/2 frames

framer := newFramer(conn, writeBufSize, readBufSize, maxHeaderListSize)

// Send initial settings as connection preface to client.

isettings := []http2.Setting{{

ID: http2.SettingMaxFrameSize,

Val: http2MaxFrameLen,

}}

// Initialize the settings

// Write the SETTINGS frame through the framer

if err := framer.fr.WriteSettings(isettings...); err != nil {

return nil, connectionErrorf(false, err, "transport: %v", err)

}

// Flow-control setup

if delta := uint32(icwz - defaultWindowSize); delta > 0 {

if err := framer.fr.WriteWindowUpdate(0, delta); err != nil {

return nil, connectionErrorf(false, err, "transport: %v", err)

}

}

// Initialize http2Server

done := make(chan struct{})

t := &http2Server{

ctx: setConnection(context.Background(), rawConn),

done: done,

conn: conn,

remoteAddr: conn.RemoteAddr(),

localAddr: conn.LocalAddr(),

authInfo: authInfo,

framer: framer,

readerDone: make(chan struct{}),

writerDone: make(chan struct{}),

maxStreams: maxStreams,

inTapHandle: config.InTapHandle,

fc: &trInFlow{limit: uint32(icwz)},

state: reachable,

activeStreams: make(map[uint32]*Stream),

stats: config.StatsHandler,

kp: kp,

idle: time.Now(),

kep: kep,

initialWindowSize: iwz,

czData: new(channelzData),

bufferPool: newBufferPool(),

}

// Initialize controlBuf

t.controlBuf = newControlBuffer(t.done)

if dynamicWindow {

t.bdpEst = &bdpEstimator{

bdp: initialWindowSize,

updateFlowControl: t.updateFlowControl,

}

}

if t.stats != nil {

t.ctx = t.stats.TagConn(t.ctx, &stats.ConnTagInfo{

RemoteAddr: t.remoteAddr,

LocalAddr: t.localAddr,

})

connBegin := &stats.ConnBegin{}

t.stats.HandleConn(t.ctx, connBegin)

}

if channelz.IsOn() {

t.channelzID = channelz.RegisterNormalSocket(t, config.ChannelzParentID, fmt.Sprintf("%s -> %s", t.remoteAddr, t.localAddr))

}

t.connectionID = atomic.AddUint64(&serverConnectionCounter, 1)

// Flush the buffered frames to the client

t.framer.writer.Flush()

defer func() {

if err != nil {

t.Close()

}

}()

// Read the client's preface and its initial SETTINGS frame

// Check the validity of client preface.

preface := make([]byte, len(clientPreface))

if _, err := io.ReadFull(t.conn, preface); err != nil {

// In deployments where a gRPC server runs behind a cloud load balancer

// which performs regular TCP level health checks, the connection is

// closed immediately by the latter. Returning io.EOF here allows the

// grpc server implementation to recognize this scenario and suppress

// logging to reduce spam.

if err == io.EOF {

return nil, io.EOF

}

return nil, connectionErrorf(false, err, "transport: http2Server.HandleStreams failed to receive the preface from client: %v", err)

}

if !bytes.Equal(preface, clientPreface) {

return nil, connectionErrorf(false, nil, "transport: http2Server.HandleStreams received bogus greeting from client: %q", preface)

}

frame, err := t.framer.fr.ReadFrame()

if err == io.EOF || err == io.ErrUnexpectedEOF {

return nil, err

}

if err != nil {

return nil, connectionErrorf(false, err, "transport: http2Server.HandleStreams failed to read initial settings frame: %v", err)

}

atomic.StoreInt64(&t.lastRead, time.Now().UnixNano())

sf, ok := frame.(*http2.SettingsFrame)

if !ok {

return nil, connectionErrorf(false, nil, "transport: http2Server.HandleStreams saw invalid preface type %T from client", frame)

}

t.handleSettings(sf)

go func() {

t.loopy = newLoopyWriter(serverSide, t.framer, t.controlBuf, t.bdpEst)

t.loopy.ssGoAwayHandler = t.outgoingGoAwayHandler

if err := t.loopy.run(); err != nil {

if logger.V(logLevel) {

logger.Errorf("transport: loopyWriter.run returning. Err: %v", err)

}

}

t.conn.Close()

t.controlBuf.finish()

close(t.writerDone)

}()

go t.keepalive()

return t, nil

}

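NewServerTransport does three main things:

1. It sends the initial SETTINGS frame through the framer. This follows standard HTTP/2 behavior: both sides exchange initial frames to agree on the parameters used afterwards, somewhat like the TCP three-way handshake.

2. It initializes the http2Server struct.

3. It starts a goroutine that creates a loopyWriter with newLoopyWriter and calls its run method, covered in the next section.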

newLoopyWriter run

// run should be run in a separate goroutine.

// It reads control frames from controlBuf and processes them by:

// 1. Updating loopy's internal state, or/and

// 2. Writing out HTTP2 frames on the wire.

//

// Loopy keeps all active streams with data to send in a linked-list.

// All streams in the activeStreams linked-list must have both:

// 1. Data to send, and

// 2. Stream level flow control quota available.

//

// In each iteration of run loop, other than processing the incoming control

// frame, loopy calls processData, which processes one node from the activeStreams linked-list.

// This results in writing of HTTP2 frames into an underlying write buffer.

// When there's no more control frames to read from controlBuf, loopy flushes the write buffer.

// As an optimization, to increase the batch size for each flush, loopy yields the processor, once

// if the batch size is too low to give stream goroutines a chance to fill it up.

func (l *loopyWriter) run() (err error) {

defer func() {

if err == ErrConnClosing {

// Don't log ErrConnClosing as error since it happens

// 1. When the connection is closed by some other known issue.

// 2. User closed the connection.

// 3. A graceful close of connection.

if logger.V(logLevel) {

logger.Infof("transport: loopyWriter.run returning. %v", err)

}

err = nil

}

}()

for {

// As described above, take a cbItem from the control buffer's linked list

it, err := l.cbuf.get(true)

if err != nil {

return err

}

// handle contains the item-specific logic

if err = l.handle(it); err != nil {

return err

}

// Process pending data

if _, err = l.processData(); err != nil {

return err

}

gosched := true

hasdata:

for {

it, err := l.cbuf.get(false)

if err != nil {

return err

}

if it != nil {

if err = l.handle(it); err != nil {

return err

}

if _, err = l.processData(); err != nil {

return err

}

continue hasdata

}

isEmpty, err := l.processData()

if err != nil {

return err

}

if !isEmpty {

continue hasdata

}

if gosched {

gosched = false

if l.framer.writer.offset < minBatchSize {

runtime.Gosched()

continue hasdata

}

}

l.framer.writer.Flush()

break hasdata

}

}

}

The key piece here is the handle method.

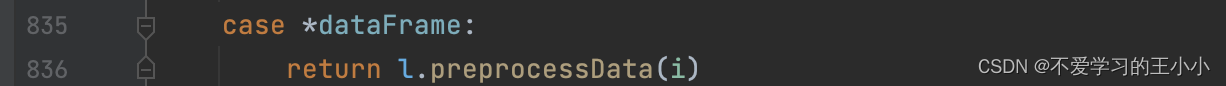

func (l *loopyWriter) handle(i interface{}) error {

switch i := i.(type) {

case *incomingWindowUpdate:

return l.incomingWindowUpdateHandler(i)

case *outgoingWindowUpdate:

return l.outgoingWindowUpdateHandler(i)

case *incomingSettings:

return l.incomingSettingsHandler(i)

case *outgoingSettings:

return l.outgoingSettingsHandler(i)

case *headerFrame:

return l.headerHandler(i)

case *registerStream:

return l.registerStreamHandler(i)

case *cleanupStream:

return l.cleanupStreamHandler(i)

case *earlyAbortStream:

return l.earlyAbortStreamHandler(i)

case *incomingGoAway:

return l.incomingGoAwayHandler(i)

case *dataFrame:

return l.preprocessData(i)

case *ping:

return l.pingHandler(i)

case *goAway:

return l.goAwayHandler(i)

case *outFlowControlSizeRequest:

return l.outFlowControlSizeRequestHandler(i)

default:

return fmt.Errorf("transport: unknown control message type %T", i)

}

}

Now look at a couple of concrete handlers, for example those for outgoingWindowUpdate and incomingSettings:

func (l *loopyWriter) outgoingWindowUpdateHandler(w *outgoingWindowUpdate) error {

return l.framer.fr.WriteWindowUpdate(w.streamID, w.increment)

}

func (l *loopyWriter) incomingSettingsHandler(s *incomingSettings) error {

if err := l.applySettings(s.ss); err != nil {

return err

}

return l.framer.fr.WriteSettingsAck()

}

As you can see, these handlers simply write frames through the framer.

That completes newHTTP2Transport. Next, look at serveStreams:

serveStreams

func (s *Server) serveStreams(st transport.ServerTransport) {

defer st.Close()

var wg sync.WaitGroup

var roundRobinCounter uint32

st.HandleStreams(func(stream *transport.Stream) {

wg.Add(1)

if s.opts.numServerWorkers > 0 {

data := &serverWorkerData{st: st, wg: &wg, stream: stream}

select {

case s.serverWorkerChannels[atomic.AddUint32(&roundRobinCounter, 1)%s.opts.numServerWorkers] <- data:

default:

// If all stream workers are busy, fallback to the default code path.

go func() {

s.handleStream(st, stream, s.traceInfo(st, stream))

wg.Done()

}()

}

} else {

go func() {

defer wg.Done()

s.handleStream(st, stream, s.traceInfo(st, stream))

}()

}

}, func(ctx context.Context, method string) context.Context {

if !EnableTracing {

return ctx

}

tr := trace.New("grpc.Recv."+methodFamily(method), method)

return trace.NewContext(ctx, tr)

})

wg.Wait()

}

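serveStreams calls HandleStreams on st, which is the http2Server, and passes in a callback. In the normal path (no server workers configured), that callback starts a goroutine that calls handleStream.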

HandleStreams

// HandleStreams receives incoming streams using the given handler. This is

// typically run in a separate goroutine.

// traceCtx attaches trace to ctx and returns the new context.

func (t *http2Server) HandleStreams(handle func(*Stream), traceCtx func(context.Context, string) context.Context) {

defer close(t.readerDone)

for {

t.controlBuf.throttle()

frame, err := t.framer.fr.ReadFrame()

atomic.StoreInt64(&t.lastRead, time.Now().UnixNano())

if err != nil {

if se, ok := err.(http2.StreamError); ok {

if logger.V(logLevel) {

logger.Warningf("transport: http2Server.HandleStreams encountered http2.StreamError: %v", se)

}

t.mu.Lock()

s := t.activeStreams[se.StreamID]

t.mu.Unlock()

if s != nil {

t.closeStream(s, true, se.Code, false)

} else {

t.controlBuf.put(&cleanupStream{

streamID: se.StreamID,

rst: true,

rstCode: se.Code,

onWrite: func() {},

})

}

continue

}

if err == io.EOF || err == io.ErrUnexpectedEOF {

t.Close()

return

}

if logger.V(logLevel) {

logger.Warningf("transport: http2Server.HandleStreams failed to read frame: %v", err)

}

t.Close()

return

}

switch frame := frame.(type) {

case *http2.MetaHeadersFrame:

if t.operateHeaders(frame, handle, traceCtx) {

t.Close()

break

}

case *http2.DataFrame:

t.handleData(frame)

case *http2.RSTStreamFrame:

t.handleRSTStream(frame)

case *http2.SettingsFrame:

t.handleSettings(frame)

case *http2.PingFrame:

t.handlePing(frame)

case *http2.WindowUpdateFrame:

t.handleWindowUpdate(frame)

case *http2.GoAwayFrame:

// TODO: Handle GoAway from the client appropriately.

default:

if logger.V(logLevel) {

logger.Errorf("transport: http2Server.HandleStreams found unhandled frame type %v.", frame)

}

}

}

}

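HandleStreams is clearly the reading side, while the loopy writer seen earlier is the writing side. The frame types of interest are http2.MetaHeadersFrame and http2.DataFrame.

An http2.MetaHeadersFrame is passed to operateHeaders, and an http2.DataFrame is passed to handleData.

First, operateHeaders: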

// operateHeader takes action on the decoded headers.

func (t *http2Server) operateHeaders(frame *http2.MetaHeadersFrame, handle func(*Stream), traceCtx func(context.Context, string) context.Context) (fatal bool) {

// Acquire max stream ID lock for entire duration

t.maxStreamMu.Lock()

defer t.maxStreamMu.Unlock()

// Get the stream ID

streamID := frame.Header().StreamID

t.maxStreamID = streamID

buf := newRecvBuffer()

s := &Stream{

id: streamID,

st: t,

buf: buf,

fc: &inFlow{limit: uint32(t.initialWindowSize)},

}

var (

// If a gRPC Response-Headers has already been received, then it means

// that the peer is speaking gRPC and we are in gRPC mode.

isGRPC = false

mdata = make(map[string][]string)

httpMethod string

// headerError is set if an error is encountered while parsing the headers

headerError bool

timeoutSet bool

timeout time.Duration

)

// Read the header fields

for _, hf := range frame.Fields {

switch hf.Name {

case "content-type":

contentSubtype, validContentType := grpcutil.ContentSubtype(hf.Value)

if !validContentType {

break

}

mdata[hf.Name] = append(mdata[hf.Name], hf.Value)

s.contentSubtype = contentSubtype

isGRPC = true

case "grpc-encoding":

s.recvCompress = hf.Value

case ":method":

httpMethod = hf.Value

case ":path":

s.method = hf.Value

case "grpc-timeout":

timeoutSet = true

var err error

if timeout, err = decodeTimeout(hf.Value); err != nil {

headerError = true

}

// "Transports must consider requests containing the Connection header

// as malformed." - A41

case "connection":

if logger.V(logLevel) {

logger.Errorf("transport: http2Server.operateHeaders parsed a :connection header which makes a request malformed as per the HTTP/2 spec")

}

headerError = true

default:

if isReservedHeader(hf.Name) && !isWhitelistedHeader(hf.Name) {

break

}

v, err := decodeMetadataHeader(hf.Name, hf.Value)

if err != nil {

headerError = true

logger.Warningf("Failed to decode metadata header (%q, %q): %v", hf.Name, hf.Value, err)

break

}

mdata[hf.Name] = append(mdata[hf.Name], v)

}

}

// Error checks omitted

// Check whether this frame ends the stream

if frame.StreamEnded() {

// s is just created by the caller. No lock needed.

s.state = streamReadDone

}

if timeoutSet {

s.ctx, s.cancel = context.WithTimeout(t.ctx, timeout)

} else {

s.ctx, s.cancel = context.WithCancel(t.ctx)

}

pr := &peer.Peer{

Addr: t.remoteAddr,

}

// Attach Auth info if there is any.

if t.authInfo != nil {

pr.AuthInfo = t.authInfo

}

s.ctx = peer.NewContext(s.ctx, pr)

// Attach the received metadata to the context.

if len(mdata) > 0 {

s.ctx = metadata.NewIncomingContext(s.ctx, mdata)

if statsTags := mdata["grpc-tags-bin"]; len(statsTags) > 0 {

s.ctx = stats.SetIncomingTags(s.ctx, []byte(statsTags[len(statsTags)-1]))

}

if statsTrace := mdata["grpc-trace-bin"]; len(statsTrace) > 0 {

s.ctx = stats.SetIncomingTrace(s.ctx, []byte(statsTrace[len(statsTrace)-1]))

}

}

t.mu.Lock()

t.activeStreams[streamID] = s

if len(t.activeStreams) == 1 {

t.idle = time.Time{}

}

t.mu.Unlock()

if channelz.IsOn() {

atomic.AddInt64(&t.czData.streamsStarted, 1)

atomic.StoreInt64(&t.czData.lastStreamCreatedTime, time.Now().UnixNano())

}

s.requestRead = func(n int) {

t.adjustWindow(s, uint32(n))

}

s.ctx = traceCtx(s.ctx, s.method)

if t.stats != nil {

s.ctx = t.stats.TagRPC(s.ctx, &stats.RPCTagInfo{FullMethodName: s.method})

inHeader := &stats.InHeader{

FullMethod: s.method,

RemoteAddr: t.remoteAddr,

LocalAddr: t.localAddr,

Compression: s.recvCompress,

WireLength: int(frame.Header().Length),

Header: metadata.MD(mdata).Copy(),

}

t.stats.HandleRPC(s.ctx, inHeader)

}

s.ctxDone = s.ctx.Done()

s.wq = newWriteQuota(defaultWriteQuota, s.ctxDone)

s.trReader = &transportReader{

reader: &recvBufferReader{

ctx: s.ctx,

ctxDone: s.ctxDone,

recv: s.buf,

freeBuffer: t.bufferPool.put,

},

windowHandler: func(n int) {

t.updateWindow(s, uint32(n))

},

}

// Register the stream with loopy.

t.controlBuf.put(&registerStream{

streamID: s.id,

wq: s.wq,

})

handle(s)

return false

}

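The main logic here reads values from the headers and sets the corresponding fields on the stream. It then puts a registerStream item into controlBuf and calls handle, which is the callback passed in earlier and leads to the Server's handleStream method. For a unary RPC, handleStream ends up calling processUnaryRPC: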

func (s *Server) processUnaryRPC(t transport.ServerTransport, stream *transport.Stream, info *serviceInfo, md *MethodDesc, trInfo *traceInfo) (err error) {

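// Error checks omitted

// Compression handling omitted because none is configured

// Check for and receive the peer's message (omitted)

// Handler here is the _Greets_SayHello_Handler registered earlier, which calls SayHello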

ctx := NewContextWithServerTransportStream(stream.Context(), stream)

reply, appErr := md.Handler(info.serviceImpl, ctx, df, s.opts.unaryInt)

// Send the response

s.sendResponse(t, stream, reply, cp, opts, comp)

// TODO: Should we be logging if writing status failed here, like above?

// Should the logging be in WriteStatus? Should we ignore the WriteStatus

// error or allow the stats handler to see it?

err = t.WriteStatus(stream, statusOK)

if binlog != nil {

binlog.Log(&binarylog.ServerTrailer{

Trailer: stream.Trailer(),

Err: appErr,

})

}

return err

}

sendResponse serializes the message via encode, which ends up in the protobuf codec's Marshal method.

func (s *Server) sendResponse(t transport.ServerTransport, stream *transport.Stream, msg interface{}, cp Compressor, opts *transport.Options, comp encoding.Compressor) error {

// Serialize

data, err := encode(s.getCodec(stream.ContentSubtype()), msg)

if err != nil {

channelz.Error(logger, s.channelzID, "grpc: server failed to encode response: ", err)

return err

}

// Compress

compData, err := compress(data, cp, comp)

if err != nil {

channelz.Error(logger, s.channelzID, "grpc: server failed to compress response: ", err)

return err

}

// Build the message header and payload, then write them to the stream

hdr, payload := msgHeader(data, compData)

// TODO(dfawley): should we be checking len(data) instead?

if len(payload) > s.opts.maxSendMessageSize {

return status.Errorf(codes.ResourceExhausted, "grpc: trying to send message larger than max (%d vs. %d)", len(payload), s.opts.maxSendMessageSize)

}

err = t.Write(stream, hdr, payload, opts)

if err == nil && s.opts.statsHandler != nil {

s.opts.statsHandler.HandleRPC(stream.Context(), outPayload(false, msg, data, payload, time.Now()))

}

return err

}

The codec that encode relies on is shown in the following code:

func init() {

encoding.RegisterCodec(codec{})

}

// codec is a Codec implementation with protobuf. It is the default codec for gRPC.

type codec struct{}

func (codec) Marshal(v interface{}) ([]byte, error) {

vv, ok := v.(proto.Message)

if !ok {

return nil, fmt.Errorf("failed to marshal, message is %T, want proto.Message", v)

}

return proto.Marshal(vv)

}

func (codec) Unmarshal(data []byte, v interface{}) error {

vv, ok := v.(proto.Message)

if !ok {

return fmt.Errorf("failed to unmarshal, message is %T, want proto.Message", v)

}

return proto.Unmarshal(data, vv)

}

func (codec) Name() string {

return Name

}
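After Marshal, sendResponse calls msgHeader to build the header that precedes each message. In the gRPC wire format that header is five bytes: a one-byte compressed flag followed by a four-byte big-endian length. The sketch below builds and parses such a frame; frameMessage and parseMessage are hypothetical helpers that return one contiguous buffer, whereas the code above keeps hdr and payload separate.

```go
package main

import (
	"encoding/binary"
	"errors"
	"fmt"
)

// headerLen is the size of the length prefix: flag byte plus uint32 length.
const headerLen = 5

// frameMessage prepends the 5-byte header to an already serialized payload.
func frameMessage(payload []byte, compressed bool) []byte {
	buf := make([]byte, headerLen+len(payload))
	if compressed {
		buf[0] = 1
	}
	binary.BigEndian.PutUint32(buf[1:headerLen], uint32(len(payload)))
	copy(buf[headerLen:], payload)
	return buf
}

// parseMessage validates the header and returns the payload it describes.
func parseMessage(buf []byte) (payload []byte, compressed bool, err error) {
	if len(buf) < headerLen {
		return nil, false, errors.New("short header")
	}
	n := binary.BigEndian.Uint32(buf[1:headerLen])
	if uint64(len(buf)-headerLen) < uint64(n) {
		return nil, false, errors.New("truncated message")
	}
	return buf[headerLen : headerLen+int(n)], buf[0] == 1, nil
}

func main() {
	framed := frameMessage([]byte("hi"), false)
	fmt.Printf("% x\n", framed)
	payload, compressed, err := parseMessage(framed)
	fmt.Printf("%s %v %v\n", payload, compressed, err)
}
```

The explicit length is what allows several messages to be sent back to back on one HTTP/2 stream, which streaming RPCs depend on.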

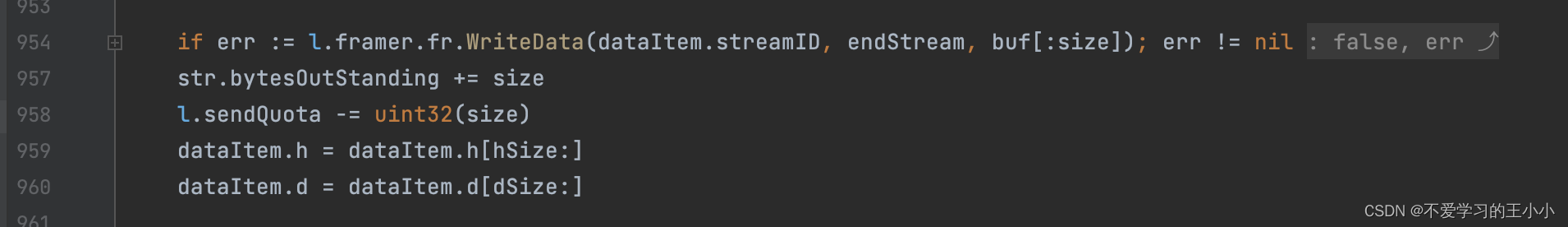

Now look at the Write call, which is http2Server's Write method.

// Write converts the data into HTTP2 data frame and sends it out. Non-nil error

// is returns if it fails (e.g., framing error, transport error).

func (t *http2Server) Write(s *Stream, hdr []byte, data []byte, opts *Options) error {

if !s.isHeaderSent() { // Headers haven't been written yet.

if err := t.WriteHeader(s, nil); err != nil {

if _, ok := err.(ConnectionError); ok {

return err

}

// TODO(mmukhi, dfawley): Make sure this is the right code to return.

return status.Errorf(codes.Internal, "transport: %v", err)

}

} else {

// Writing headers checks for this condition.

if s.getState() == streamDone {

// TODO(mmukhi, dfawley): Should the server write also return io.EOF?

s.cancel()

select {

case <-t.done:

return ErrConnClosing

default:

}

return ContextErr(s.ctx.Err())

}

}

df := &dataFrame{

streamID: s.id,

h: hdr,

d: data,

onEachWrite: t.setResetPingStrikes,

}

if err := s.wq.get(int32(len(hdr) + len(data))); err != nil {

select {

case <-t.done:

return ErrConnClosing

default:

}

return ContextErr(s.ctx.Err())

}

return t.controlBuf.put(df)

}

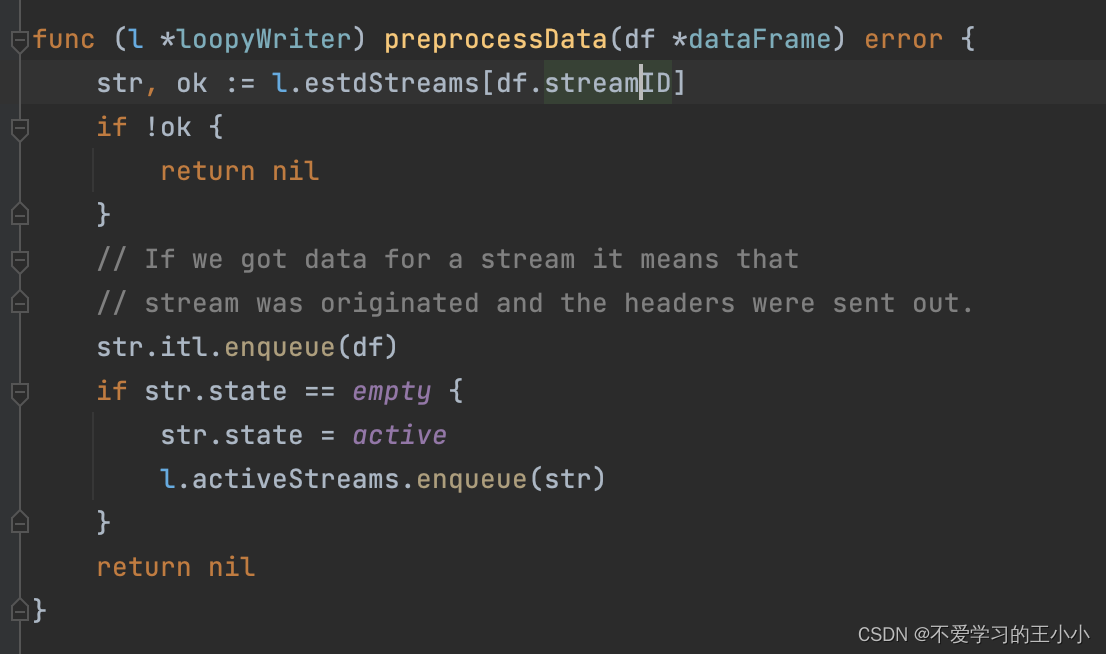

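Write puts a dataFrame into controlBuf. In loopyWriter's handle method, a dataFrame is routed to preprocessData.

The data is then written out as frames in loopyWriter's processData; the remaining logic is not covered here. That closes the loop from receiving a request to sending the response.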
