Translated from: https://www.behaviortree.dev

Remapping ports between Trees and SubTrees

In the CrossDoor example we saw that a SubTree looks like a single leaf Node from the point of view of its parent (MainTree in the example).

Furthermore, to avoid name clashes in very large trees, each tree and subtree uses its own instance of the Blackboard.

For this reason, we need to explicitly connect the ports of a tree to those of its subtrees.

Once again, you won’t need to modify your C++ implementation, since this remapping is done entirely in the XML definition.
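
To make the idea of separate blackboards connected by explicit remapping more concrete, here is a minimal standalone sketch. It does not use the library: `ToyBlackboard`, `addRemapping`, `set` and `get` are hypothetical names invented for this illustration, and values are stored as plain strings for simplicity. It only assumes that a remapped key in a subtree's blackboard is forwarded to an entry of the parent's blackboard.

```cpp
#include <map>
#include <string>

// Hypothetical toy type for illustration only; this is NOT the BT::Blackboard API.
class ToyBlackboard {
public:
    explicit ToyBlackboard(ToyBlackboard* parent = nullptr) : parent_(parent) {}

    // Declare that key `internal` of this blackboard is an alias of
    // key `external` in the parent blackboard.
    void addRemapping(const std::string& internal, const std::string& external) {
        remap_[internal] = external;
    }

    // Write a value; remapped keys are forwarded to the parent.
    void set(const std::string& key, const std::string& value) {
        auto it = remap_.find(key);
        if (parent_ != nullptr && it != remap_.end()) {
            parent_->set(it->second, value);
            return;
        }
        entries_[key] = value;
    }

    // Read a value into `out`; returns false if the entry does not exist.
    bool get(const std::string& key, std::string& out) const {
        auto it = remap_.find(key);
        if (parent_ != nullptr && it != remap_.end()) {
            return parent_->get(it->second, out);
        }
        auto entry = entries_.find(key);
        if (entry == entries_.end()) {
            return false;
        }
        out = entry->second;
        return true;
    }

private:
    ToyBlackboard* parent_;                       // nullptr for the root tree
    std::map<std::string, std::string> remap_;    // internal key -> external key
    std::map<std::string, std::string> entries_;  // entries stored locally
};
```

In this sketch, a key that is not remapped stays local to the blackboard where it was written, which is what prevents name clashes between a tree and its subtrees.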

Example

Let’s consider this Behavior Tree.

    <root main_tree_to_execute = "MainTree">

        <BehaviorTree ID="MainTree">
            <Sequence name="main_sequence">
                <SetBlackboard output_key="move_goal" value="1;2;3" />
                <SubTree ID="MoveRobot" target="move_goal" output="move_result" />
                <SaySomething message="{move_result}"/>
            </Sequence>
        </BehaviorTree>

        <BehaviorTree ID="MoveRobot">
            <Fallback name="move_robot_main">
                <SequenceStar>
                    <MoveBase goal="{target}"/>
                    <SetBlackboard output_key="output" value="mission accomplished" />
                </SequenceStar>
                <ForceFailure>
                    <SetBlackboard output_key="output" value="mission failed" />
                </ForceFailure>
            </Fallback>
        </BehaviorTree>

    </root>

You may notice that:

- We have a MainTree that includes a subtree called MoveRobot.
- We want to “connect” (i.e. “remap”) ports inside the MoveRobot subtree with other ports in the MainTree.
- This is done in the XML, where the words internal/external refer respectively to a subtree and its parent. In the example above, the attributes of the SubTree tag carry the remapping: the attribute name (target, output) is the internal port of MoveRobot, and its value (move_goal, move_result) is the external entry in MainTree.
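
The internal/external pairing described in the list above can be sketched as a small lookup table built from the attributes of the SubTree tag. This is only an illustration under an assumption: it treats every attribute except `ID` as a remapping, which is how the example reads, while the real XML parser may handle other attributes specially. `StringMap` and `remappingFromAttributes` are hypothetical names, not part of the library.

```cpp
#include <map>
#include <string>

using StringMap = std::map<std::string, std::string>;

// Hypothetical helper: build an "internal port -> external entry" table
// from the attributes of a SubTree tag.
// Assumption: every attribute other than "ID" describes a remapping.
StringMap remappingFromAttributes(const StringMap& attributes) {
    StringMap remapping;
    for (const auto& attribute : attributes) {
        if (attribute.first == "ID") {
            continue;  // "ID" selects the subtree; it is not a port
        }
        // attribute name = internal port, attribute value = external entry
        remapping[attribute.first] = attribute.second;
    }
    return remapping;
}
```

For the SubTree tag of the example, the attributes `ID="MoveRobot"`, `target="move_goal"` and `output="move_result"` would yield two pairs: `target` maps to `move_goal` and `output` maps to `move_result`.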

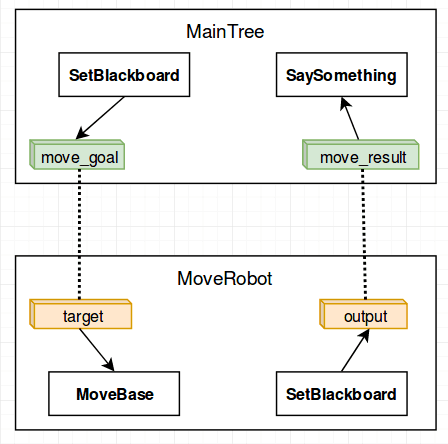

The following image shows remapping between these two different trees. [下图显示了这两个不同树之间的重新映射。]

Note that this diagram represents the dataflow and the entries in the respective blackboard, not the relationship in terms of Behavior Trees. [请注意,此图表示相应黑板上的数据流和条目,而不是行为树方面的关系。]

In terms of C++, we don’t need to do much. For debugging purposes, we can print some information about the current state of a blackboard with the method debugMessage().

    #include <chrono>
    #include <iostream>
    #include "behaviortree_cpp_v3/bt_factory.h"

    using namespace BT;

    // xml_text, SaySomething and MoveBaseAction are assumed to be defined
    // elsewhere (they are not shown in this section).

    int main()
    {
        BT::BehaviorTreeFactory factory;

        factory.registerNodeType<SaySomething>("SaySomething");
        factory.registerNodeType<MoveBaseAction>("MoveBase");

        auto tree = factory.createTreeFromText(xml_text);

        NodeStatus status = NodeStatus::RUNNING;
        // Keep on ticking until you get either a SUCCESS or FAILURE state
        while( status == NodeStatus::RUNNING)
        {
            status = tree.tickRoot();
            // IMPORTANT: add sleep to avoid busy loops.
            // You should use Tree::sleep(). Don't be afraid to run this at 1 kHz.
            tree.sleep( std::chrono::milliseconds(1) );
        }

        // Let's visualize some information about the current state of the blackboards:
        // index 0 is the blackboard of MainTree, index 1 the one of MoveRobot.
        std::cout << "--------------" << std::endl;
        tree.blackboard_stack[0]->debugMessage();
        std::cout << "--------------" << std::endl;
        tree.blackboard_stack[1]->debugMessage();
        std::cout << "--------------" << std::endl;

        return 0;
    }

    /* Expected output:

        [ MoveBase: STARTED ]. goal: x=1 y=2.0 theta=3.00
        [ MoveBase: FINISHED ]
        Robot says: mission accomplished
        --------------
        move_result (std::string) -> full
        move_goal (Pose2D) -> full
        --------------
        output (std::string) -> remapped to parent [move_result]
        target (Pose2D) -> remapped to parent [move_goal]
        --------------
    */
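
In the expected output, move_goal is reported as a Pose2D even though the XML sets it from the string "1;2;3". The conversion code is not shown in this section, so the following is only a standalone sketch of the idea: it splits a semicolon-separated string into three numbers. The `Pose2D` layout (x, y, theta) is inferred from the printed goal, and `parsePose2D` is a hypothetical helper name, not a library function.

```cpp
#include <sstream>
#include <stdexcept>
#include <string>
#include <vector>

// Field layout assumed from the "goal: x=... y=... theta=..." line in the output.
struct Pose2D {
    double x;
    double y;
    double theta;
};

// Hypothetical helper: parse "x;y;theta" into a Pose2D.
// Throws std::invalid_argument if a field is not a number or if the
// number of fields is not exactly three.
Pose2D parsePose2D(const std::string& text) {
    std::vector<double> parts;
    std::stringstream stream(text);
    std::string field;
    while (std::getline(stream, field, ';')) {
        parts.push_back(std::stod(field));  // std::stod throws on non-numeric input
    }
    if (parts.size() != 3) {
        throw std::invalid_argument("expected three ';'-separated numbers");
    }
    return Pose2D{parts[0], parts[1], parts[2]};
}
```

With this sketch, `parsePose2D("1;2;3")` gives x = 1, y = 2 and theta = 3, matching the values that MoveBase prints when it starts.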
