Deep Learning Image Classification (Recognition): Model Training Tricks

Background and dataset: https://www.kaggle.com/competitions/plant-seedlings-classification/overview

https://www.kaggle.com/code/tadday/plant-classifier-pytorch

Common problem 1: class imbalance

Common problem 2: features of different classes are coupled (they overlap)
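One common way to soften class imbalance is to weight each class inversely to its frequency. The sketch below is an illustration only, not something the scripts in this post do: the helper name `inverse_frequency_weights` and the toy labels are assumptions made for the example.

```python
import numpy as np

def inverse_frequency_weights(labels, num_classes):
    """Return one weight per class, proportional to 1 / class frequency."""
    counts = np.bincount(labels, minlength=num_classes).astype(np.float64)
    total = counts.sum()
    counts[counts == 0] = 1.0  # avoid division by zero for empty classes
    # Normalised so that a perfectly balanced dataset gives weight 1.0 per class
    return total / (num_classes * counts)

# Toy, imbalanced label vector (hypothetical data)
labels = np.array([0, 0, 0, 0, 0, 0, 1, 1, 1, 2])
class_weights = inverse_frequency_weights(labels, num_classes=3)
print(class_weights)
```

Weights like these can be converted to a float tensor and passed to `torch.nn.CrossEntropyLoss(weight=...)`, so that mistakes on rare classes cost more.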

Dropout

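Dropout literally means "dropping out". To keep a neural network from overfitting, each hidden-layer unit is disabled with some probability p in every training iteration (in both the forward and the backward pass), which improves the model's ability to generalize. Note that p is the drop probability; the keep probability (keep-prob) is 1 - p.

Dropout is a regularization technique. The drop probability used most often in practice is 0.5 (0.1 or 0.05 are also used).

Dropout is applied only during training; it must be switched off for validation and testing.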
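The behaviour described above can be sketched in a few lines of NumPy. This is the "inverted dropout" variant, which rescales the surviving activations by 1 / (1 - p) during training so that nothing has to change at test time. The function name `dropout_forward` is made up for this illustration.

```python
import numpy as np

def dropout_forward(x, p=0.5, training=True, rng=None):
    """Inverted dropout: zero each element with probability p, rescale the rest."""
    if not training or p == 0.0:
        return x  # evaluation: every unit stays active
    rng = np.random.default_rng() if rng is None else rng
    keep_mask = rng.random(x.shape) >= p   # True where the unit is kept
    return x * keep_mask / (1.0 - p)       # rescale so the expected value is unchanged

x = np.ones((4, 1000))
y_train = dropout_forward(x, p=0.4, training=True, rng=np.random.default_rng(0))
y_eval = dropout_forward(x, p=0.4, training=False)

print("fraction dropped:", (y_train == 0).mean())
print("mean activation in training:", y_train.mean())
```

Because of the rescaling, the mean activation stays close to 1.0 in training mode, and the evaluation output is simply the input.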

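Difference between model.train() and model.eval()

model.train() and model.eval() differ mainly in how Batch Normalization and Dropout layers behave.

If the model contains BN (Batch Normalization) or Dropout layers, call model.eval() before testing. For BN, model.eval() makes the layer use the running mean and variance accumulated over the training data instead of per-batch statistics, so these statistics stay fixed during testing. For Dropout, model.eval() keeps all network connections active, i.e. no units are randomly dropped.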
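A small experiment makes the difference visible. The sketch below uses a toy network (an assumption for illustration, unrelated to the ResNet used later): in training mode two forward passes over the same input differ, while in evaluation mode they are identical.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
net = nn.Sequential(nn.Linear(8, 8), nn.BatchNorm1d(8), nn.Dropout(0.5))
x = torch.randn(16, 8)

net.train()
out_a = net(x)
out_b = net(x)  # a new dropout mask is drawn; BN normalizes with batch statistics

net.eval()
with torch.no_grad():
    eval_a = net(x)
    eval_b = net(x)  # no dropout; BN normalizes with its running statistics

print("train passes equal:", torch.equal(out_a, out_b))
print("eval passes equal:", torch.equal(eval_a, eval_b))
```

Note that model.eval() does not disable gradient tracking; that is what `torch.no_grad()` or `torch.inference_mode()` is for.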

# ============================ Imports ============================

from torchvision import datasets,transforms,models

from torch.utils.data import random_split,Dataset,DataLoader

import numpy as np

import pandas as pd

from PIL import Image

import matplotlib.pyplot as plt

import seaborn as sns

import torch

import torchvision

import os

import copy

from sklearn.model_selection import train_test_split

from torchvision.utils import make_grid

from mpl_toolkits.axes_grid1 import ImageGrid

import time

# ============================ Helper functions ============================

def find_classes(fulldir):
    # Every sub-folder name is a class name
    classes = os.listdir(fulldir)
    classes.sort()
    # class name -> index
    class_to_idx = dict(zip(classes, range(len(classes))))
    # index -> class name
    idx_to_class = {v: k for k, v in class_to_idx.items()}
    train = []
    for i, label in idx_to_class.items():
        path = fulldir + "/" + label
        for file in os.listdir(path):
            train.append([f'{label}/{file}', label, i])
    # Columns: relative image path, class name, class index
    df = pd.DataFrame(train, columns=["file", "class", "class_index"])
    return classes, class_to_idx, idx_to_class, df

root_dir = "./plant-seedlings-classification/train"
classes, class_to_idx, idx_to_class, df = find_classes(root_dir)
num_classes = len(classes)

# ============================ step 0/5 Parameters ============================

batch_size = 256
num_workers = 0
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# ============================ step 1/5 Data ============================

class PlantDataset(Dataset):
    def __init__(self, dataframe, root_dir, transform=None):
        self.transform = transform
        self.df = dataframe
        self.root_dir = root_dir

    def __len__(self):
        return len(self.df)

    def __getitem__(self, idx):
        # Column 0 holds the relative file path, column 2 the class index
        fullpath = os.path.join(self.root_dir, self.df.iloc[idx, 0])
        image = Image.open(fullpath).convert('RGB')
        if self.transform:
            image = self.transform(image)
        return image, int(self.df.iloc[idx, 2])

# Training-data preprocessing and augmentation
train_transform = transforms.Compose([
    transforms.RandomRotation(180),
    transforms.RandomAffine(degrees=0, translate=(0.3, 0.3)),
    # transforms.CenterCrop(384),
    transforms.Resize((324, 324)),
    transforms.RandomHorizontalFlip(),
    transforms.RandomVerticalFlip(),
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
])

# Validation-data preprocessing (no random augmentation)
val_transform = transforms.Compose([
    # transforms.CenterCrop(384),
    transforms.Resize((324, 324)),
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
])

# Stratified 80/20 split into training and validation sets
X_train, X_val = train_test_split(df, test_size=0.2, random_state=42, stratify=df['class_index'])

# Dataset and DataLoader for the training set
train_dataset = PlantDataset(X_train, root_dir, train_transform)
train_loader = DataLoader(train_dataset, batch_size=batch_size, num_workers=num_workers, shuffle=True, drop_last=True)

# Dataset and DataLoader for the validation set
# Note: drop_last=True discards the final incomplete validation batch
val_dataset = PlantDataset(X_val, root_dir, val_transform)
val_loader = DataLoader(val_dataset, batch_size=batch_size, num_workers=num_workers, drop_last=True)

# ============================ step 2/5 Model ============================

model = models.resnet50(weights=models.ResNet50_Weights.DEFAULT)

# Freeze the pretrained parameters.
# With requires_grad = False, backpropagation still runs normally, but these weights and biases are no longer updated.
# Usage: set requires_grad to False on the parameters to freeze; optionally filter the parameters when
# creating the optimizer so that only those with requires_grad=True are passed in.
for param in model.parameters():
    param.requires_grad = False  # fix the feature extractor and train only the classifier, which shortens training

# ResNet's final fc layer classifies the 1000 ImageNet classes; for a custom dataset (say, 10 classes) it must be replaced.
# in_features is the input size of a linear layer: for fc = nn.Linear(512, 10), fc.in_features is 512
# (for resnet50 it is 2048).
num_ftrs = model.fc.in_features

# Replace the fc layer; the new layers are trainable by default
model.fc = torch.nn.Sequential(
    torch.nn.Linear(num_ftrs, 256),
    torch.nn.ReLU(),
    torch.nn.Dropout(0.4),
    torch.nn.Linear(256, num_classes)
)

# ============================ step 3/5 Loss function ============================

# Cross-entropy loss
criterion = torch.nn.CrossEntropyLoss()

# ============================ step 4/5 Optimizer ============================

optimizer = torch.optim.Adam(model.parameters(), lr=0.005)

# Learning-rate schedule: multiply the learning rate by 0.7 every 10 scheduler steps
exp_lr_scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.7)

# ============================ step 5/5 Training ============================

def train_model(model, criterion, optimizer, scheduler, num_epochs=10, device=device):
    model.to(device)
    # Deep-copy the current weights as the best-so-far snapshot
    best_model_wts = copy.deepcopy(model.state_dict())
    # Best validation accuracy seen so far
    best_acc = 0.0
    for epoch in range(num_epochs):
        for phase in ["train", "val"]:
            if phase == "train":
                # Training mode: Dropout is active and BN uses batch statistics
                model.train()
                # Reset the accumulated loss and accuracy
                train_loss = 0.0
                train_acc = 0
                for index, (image, label) in enumerate(train_loader):
                    image = image.to(device)
                    label = label.to(device)
                    # Forward pass
                    y_pred = model(image)
                    # Compute the loss
                    loss = criterion(y_pred, label)
                    # Print the current loss

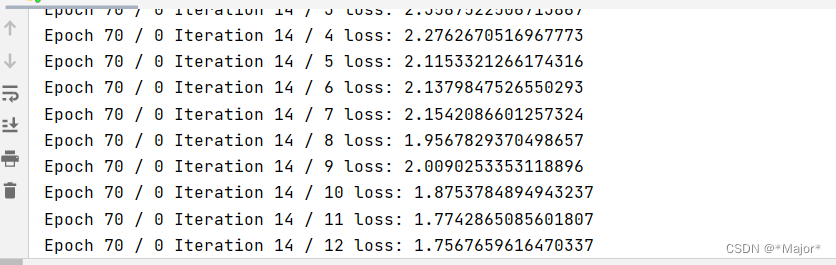

                    print(f"Epoch {epoch + 1}/{num_epochs} Iteration {index + 1}/{len(train_loader)} loss: {loss.item()}")
                    # Accumulate the loss
                    train_loss += loss.item()
                    # Zero the gradients
                    optimizer.zero_grad()
                    # Backward pass
                    loss.backward()
                    # Update the parameters
                    optimizer.step()
                    # Predicted class: argmax of the logits (softmax would not change it)
                    y_pred_class = torch.argmax(y_pred, dim=1)
                    # Accumulate the per-batch accuracy
                    train_acc += (y_pred_class == label).sum().item() / len(y_pred)
                # Step the learning-rate schedule once per epoch
                scheduler.step()
                # Average loss and accuracy over the batches
                train_loss /= len(train_loader)
                train_acc /= len(train_loader)
                print("train_loss:", train_loss, "train_acc", train_acc)
            else:
                # Evaluation mode: Dropout is disabled and BN uses its running statistics
                model.eval()
                test_loss, test_acc = 0, 0
                # torch.inference_mode disables gradient tracking and speeds up inference
                with torch.inference_mode():
                    for image, label in val_loader:
                        image = image.to(device)
                        label = label.to(device)
                        # Forward pass
                        test_pred_logits = model(image)
                        # Compute and accumulate the loss
                        loss = criterion(test_pred_logits, label)
                        test_loss += loss.item()
                        # argmax returns the index of the largest value along the given dimension
                        test_pred_labels = test_pred_logits.argmax(dim=1)
                        test_acc += (test_pred_labels == label).sum().item() / len(test_pred_labels)
                # Average validation loss and accuracy
                test_loss = test_loss / len(val_loader)
                test_acc = test_acc / len(val_loader)
                # Keep the weights whenever the validation accuracy improves
                if test_acc > best_acc:
                    best_acc = test_acc
                    best_model_wts = copy.deepcopy(model.state_dict())
                    # load_state_dict() does not return the model, so save the model object itself
                    torch.save(model, "best_model.pt")
        # Summary for the current epoch
        print(
            f"Epoch: {epoch + 1} | "
            f"train_loss: {train_loss:.4f} | "
            f"train_acc: {train_acc:.4f} | "
            f"test_loss: {test_loss:.4f} | "
            f"test_acc: {test_acc:.4f}"
        )
    # After training, restore the best weights and return the model
    model.load_state_dict(best_model_wts)
    return model

# Train the model and get the best version
model_ft = train_model(model, criterion, optimizer, exp_lr_scheduler, num_epochs=70)

# Save the best model
torch.save(model_ft, "best_model.pt")
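The comments in the model step mention passing only parameters with requires_grad=True to the optimizer, while the script itself hands over `model.parameters()`. That also works, because frozen parameters never receive gradients, but filtering makes the intent explicit. The sketch below shows the filtering together with saving only the `state_dict`, a commonly recommended alternative to pickling the whole model. The tiny `backbone` and `head` modules are stand-ins invented for the example so that it runs without downloading pretrained weights.

```python
import io
import torch
import torch.nn as nn

# Hypothetical stand-in for a pretrained feature extractor
backbone = nn.Sequential(nn.Linear(16, 8), nn.ReLU())
for param in backbone.parameters():
    param.requires_grad = False  # freeze the feature extractor

head = nn.Linear(8, 12)  # new classifier head, trainable by default
model = nn.Sequential(backbone, head)

# Pass only the trainable parameters to the optimizer
trainable = [p for p in model.parameters() if p.requires_grad]
optimizer = torch.optim.Adam(trainable, lr=0.005)

# Save and reload the weights only (an in-memory buffer stands in for a file)
buffer = io.BytesIO()
torch.save(model.state_dict(), buffer)
buffer.seek(0)
model.load_state_dict(torch.load(buffer))

print("trainable tensors:", len(trainable))
```

To reload weights saved this way, the model architecture has to be rebuilt in code first and then filled with `load_state_dict`.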

## Training trick 1: data augmentation (with albumentations or transforms)

import albumentations as A
from albumentations.pytorch import ToTensorV2

class PlantDataset(Dataset):
    def __init__(self, dataframe, root_dir, transform=None):
        self.transform = transform
        self.df = dataframe
        self.root_dir = root_dir

    def __len__(self):
        return len(self.df)

    def __getitem__(self, idx):
        fullpath = os.path.join(self.root_dir, self.df.iloc[idx, 0])
        image = Image.open(fullpath).convert('RGB')
        # albumentations expects an RGB NumPy array; float32 keeps the 0-255 range
        # that A.Normalize rescales by default
        image = np.asarray(image).astype(np.float32)
        if self.transform:
            # Option 1: torchvision transforms
            # image = self.transform(image)
            # Option 2: albumentations
            image = self.transform(image=image)['image']
        return image, int(self.df.iloc[idx, 2])

# Training-data preprocessing
# Option 1: torchvision transforms
# train_transform = transforms.Compose([
#     transforms.RandomRotation(180),
#     transforms.RandomAffine(degrees=0, translate=(0.3, 0.3)),
#     # transforms.CenterCrop(384),
#     transforms.Resize((324, 324)),
#     transforms.RandomHorizontalFlip(),
#     transforms.RandomVerticalFlip(),
#     transforms.ToTensor(),
#     transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
# ])

# Option 2: albumentations (uncomment the random transforms to enable augmentation)
train_transform = A.Compose([
    A.Resize(324, 324),
    # A.RandomRotate90(),
    # A.RandomCrop(256, 256),
    # A.HorizontalFlip(p=0.5),
    # A.VerticalFlip(p=0.5),
    A.Normalize(mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225)),
    ToTensorV2(),
])

# Validation-data preprocessing
# Option 1: torchvision transforms
# val_transform = transforms.Compose([
#     # transforms.CenterCrop(384),
#     transforms.Resize((324, 324)),
#     transforms.ToTensor(),
#     transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
# ])

# Option 2: albumentations
val_transform = A.Compose([
    A.Resize(324, 324),
    # A.RandomRotate90(),
    # A.RandomCrop(256, 256),
    # A.HorizontalFlip(p=0.5),
    # A.VerticalFlip(p=0.5),
    A.Normalize(mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225)),
    ToTensorV2(),
])

Training trick 2: change the model

# ============================ Imports ============================

from torchvision import transforms,models

from torch.utils.data import Dataset,DataLoader

import pandas as pd

from PIL import Image

import torch

import os

import copy

from sklearn.model_selection import train_test_split

from sklearn.model_selection import StratifiedKFold

import albumentations as A

from albumentations.pytorch import ToTensorV2

import cv2

import numpy as np

import torch.nn as nn

'''
EDA (observations about the data):
1. Image sizes are inconsistent
2. All images are square
'''

# ============================ Helper functions ============================

def find_classes(fulldir):
    # Every sub-folder name is a class name
    classes = os.listdir(fulldir)
    classes.sort()
    # class name -> index
    class_to_idx = dict(zip(classes, range(len(classes))))
    # index -> class name
    idx_to_class = {v: k for k, v in class_to_idx.items()}
    train = []
    for i, label in idx_to_class.items():
        path = fulldir + "/" + label
        for file in os.listdir(path):
            train.append([f'{label}/{file}', label, i])
    # Columns: image path, class name, class index
    df = pd.DataFrame(train, columns=["file", "class", "class_index"])
    return classes, class_to_idx, idx_to_class, df

# ============================ step 0/5 Parameters ============================

# Number of epochs (the train_model call at the end passes num_epochs=70 explicitly)
num_epoch = 10
# Batch size
batch_size = 24
# Number of DataLoader worker processes (0 loads data in the main process)
num_workers = 0
# Device
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
# Path to the training set
root_dir = "./plant-seedlings-classification/train"
# Class list, lookup tables and the file DataFrame
classes, class_to_idx, idx_to_class, df = find_classes(root_dir)
# Number of classes
num_classes = len(classes)

# ============================ step 1/5 Data ============================

class PlantDataset(Dataset):
    def __init__(self, dataframe, root_dir, transform=None):
        self.transform = transform
        self.df = dataframe
        self.root_dir = root_dir

    def __len__(self):
        return len(self.df)

    def __getitem__(self, idx):
        fullpath = os.path.join(self.root_dir, self.df.iloc[idx, 0])
        image = Image.open(fullpath).convert('RGB')
        # albumentations expects an RGB NumPy array; float32 keeps the 0-255 range
        # that A.Normalize rescales by default
        image = np.asarray(image).astype(np.float32)
        if self.transform:
            # Option 1: torchvision transforms
            # image = self.transform(image)
            # Option 2: albumentations
            image = self.transform(image=image)['image']
        return image, int(self.df.iloc[idx, 2])

# Training-data preprocessing
# Option 1: torchvision transforms
# train_transform = transforms.Compose([
#     transforms.RandomRotation(180),
#     transforms.RandomAffine(degrees=0, translate=(0.3, 0.3)),
#     # transforms.CenterCrop(384),
#     transforms.Resize((324, 324)),
#     transforms.RandomHorizontalFlip(),
#     transforms.RandomVerticalFlip(),
#     transforms.ToTensor(),
#     transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
# ])

# Option 2: albumentations
train_transform = A.Compose([
    A.Resize(324, 324),
    # A.RandomRotate90(),
    # A.RandomCrop(256, 256),
    # A.HorizontalFlip(p=0.5),
    # A.VerticalFlip(p=0.5),
    A.Normalize(mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225)),
    ToTensorV2(),
])

# Validation-data preprocessing
# Option 1: torchvision transforms
# val_transform = transforms.Compose([
#     # transforms.CenterCrop(384),
#     transforms.Resize((324, 324)),
#     transforms.ToTensor(),
#     transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
# ])

# Option 2: albumentations
val_transform = A.Compose([
    A.Resize(324, 324),
    # A.RandomRotate90(),
    # A.RandomCrop(256, 256),
    # A.HorizontalFlip(p=0.5),
    # A.VerticalFlip(p=0.5),
    A.Normalize(mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225)),
    ToTensorV2(),
])

# Stratified split into training and validation sets
X_train, X_val = train_test_split(df, test_size=0.2, random_state=42, stratify=df['class_index'])

# Dataset and DataLoader for the training set
train_dataset = PlantDataset(X_train, root_dir, train_transform)
train_loader = DataLoader(train_dataset, batch_size=batch_size, num_workers=num_workers, shuffle=True, drop_last=True)

# Dataset and DataLoader for the validation set
val_dataset = PlantDataset(X_val, root_dir, val_transform)
val_loader = DataLoader(val_dataset, batch_size=batch_size, num_workers=num_workers, drop_last=True)

# ============================ step 2/5 Model ============================

'''
Model family 1: resnet
'''
model = models.resnet50(weights=models.ResNet50_Weights.DEFAULT)

# Freeze the pretrained parameters.
# With requires_grad = False, backpropagation still runs normally, but these weights and biases are no longer updated.
# Usage: set requires_grad to False on the parameters to freeze; optionally filter the parameters when
# creating the optimizer so that only those with requires_grad=True are passed in.
for param in model.parameters():
    param.requires_grad = False

# ResNet's final fc layer classifies the 1000 ImageNet classes; for a custom dataset (say, 10 classes) it must be replaced.
# in_features is the input size of a linear layer: for fc = nn.Linear(512, 10), fc.in_features is 512
num_ftrs = model.fc.in_features

# Replace the fc layer
model.fc = torch.nn.Sequential(
    torch.nn.Linear(num_ftrs, 256),
    torch.nn.ReLU(),
    torch.nn.Dropout(0.4),
    torch.nn.Linear(256, num_classes)
)

'''
Model family 2: efficientnet
(this assignment overrides the ResNet model above; keep only the block you want to train)
'''
# model = models.efficientnet_b2(True)
model = models.efficientnet_b2(weights=models.EfficientNet_B2_Weights.DEFAULT)

# Freeze the pretrained parameters
for param in model.parameters():
    param.requires_grad = False

# model.avgpool = nn.AdaptiveAvgPool2d(1)
# EfficientNet-B2 feeds 1408 features into its classifier
model.classifier = nn.Sequential(
    torch.nn.Linear(1408, 256),
    torch.nn.ReLU(),
    torch.nn.Dropout(0.4),
    torch.nn.Linear(256, num_classes)
)

model = model.to(device)

# ============================ step 3/5 Loss function ============================

# Cross-entropy loss
criterion = torch.nn.CrossEntropyLoss()

# ============================ step 4/5 Optimizer ============================

optimizer = torch.optim.Adam(model.parameters(), lr=0.005)

# Learning-rate schedule
exp_lr_scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.7)

# ============================ step 5/5 Training ============================

def train_model(model, criterion, optimizer, scheduler, num_epochs=10, device=device):
    model.to(device)
    # Snapshot of the best weights seen so far
    best_model_wts = copy.deepcopy(model.state_dict())
    best_acc = 0.0
    for epoch in range(num_epochs):
        for phase in ["train", "val"]:
            if phase == "train":
                # Training mode: Dropout is active and BN uses batch statistics
                model.train()
                train_loss = 0.0
                train_acc = 0
                for index, (image, label) in enumerate(train_loader):
                    image = image.to(device)
                    label = label.to(device)
                    # Forward pass and loss
                    y_pred = model(image)
                    loss = criterion(y_pred, label)
                    print(f"Epoch {epoch + 1}/{num_epochs} Iteration {index + 1}/{len(train_loader)} loss: {loss.item()}")
                    train_loss += loss.item()
                    # Zero the gradients, backpropagate, update the parameters
                    optimizer.zero_grad()
                    loss.backward()
                    optimizer.step()
                    # Predicted class: argmax of the logits
                    y_pred_class = torch.argmax(y_pred, dim=1)
                    train_acc += (y_pred_class == label).sum().item() / len(y_pred)
                # Step the learning-rate schedule once per epoch
                scheduler.step()
                # Average loss and accuracy over the batches
                train_loss /= len(train_loader)
                train_acc /= len(train_loader)
                print("train_loss:", train_loss, "train_acc", train_acc)
            else:
                # Evaluation mode: Dropout is disabled and BN uses its running statistics
                model.eval()
                test_loss, test_acc = 0, 0
                # torch.inference_mode disables gradient tracking and speeds up inference
                with torch.inference_mode():
                    for image, label in val_loader:
                        image = image.to(device)
                        label = label.to(device)
                        test_pred_logits = model(image)
                        loss = criterion(test_pred_logits, label)
                        test_loss += loss.item()
                        # argmax returns the index of the largest value along the given dimension
                        test_pred_labels = test_pred_logits.argmax(dim=1)
                        test_acc += (test_pred_labels == label).sum().item() / len(test_pred_labels)
                # Average validation loss and accuracy
                test_loss = test_loss / len(val_loader)
                test_acc = test_acc / len(val_loader)
                # Keep and save the weights whenever the validation accuracy improves
                if test_acc > best_acc:
                    best_acc = test_acc
                    best_model_wts = copy.deepcopy(model.state_dict())
                    torch.save(model, "best_model.pt")
        # Summary for the current epoch
        print(
            f"Epoch: {epoch + 1} | "
            f"train_loss: {train_loss:.4f} | "
            f"train_acc: {train_acc:.4f} | "
            f"test_loss: {test_loss:.4f} | "
            f"test_acc: {test_acc:.4f}"
        )
    # After training, restore the best weights and return the model
    model.load_state_dict(best_model_wts)
    return model

# Train the model and get the best version
model_ft = train_model(model, criterion, optimizer, exp_lr_scheduler, num_epochs=70)

# Save the best model
torch.save(model_ft, "best_model.pt")
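The scripts configure `StepLR(step_size=10, gamma=0.7)` on top of a base learning rate of 0.005. Assuming the scheduler is stepped once per epoch, the learning rate in use during a given epoch follows a simple closed form, sketched here in plain Python (the helper name `step_lr` is invented for the illustration).

```python
def step_lr(base_lr, epoch, step_size=10, gamma=0.7):
    """Learning rate during `epoch` (0-based) when StepLR is stepped once per epoch."""
    return base_lr * gamma ** (epoch // step_size)

# Learning rate at a few points of a 70-epoch run
schedule = {epoch: step_lr(0.005, epoch) for epoch in (0, 9, 10, 20, 69)}
for epoch, lr in schedule.items():
    print(f"epoch {epoch:2d}: lr = {lr:.6f}")
```

Over 70 epochs the rate decays six times, ending near 0.0006. Stepping the scheduler once per batch instead would shrink it far faster.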

Training trick 3: five-fold training
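Five-fold training relies on stratified splits, which keep the class proportions of every validation fold close to those of the full dataset. The toy example below illustrates this with made-up labels. The `shuffle=True` and `random_state=42` arguments are choices made for the example and are not used by the script that follows.

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold

# Toy imbalanced labels: 50% class 0, 30% class 1, 20% class 2
labels = np.array([0] * 50 + [1] * 30 + [2] * 20)
features = np.zeros((len(labels), 1))  # placeholder; only the labels drive the split

skf = StratifiedKFold(n_splits=5, shuffle=True, random_state=42)
fold_ratios = []
for fold, (train_idx, val_idx) in enumerate(skf.split(features, labels)):
    counts = np.bincount(labels[val_idx], minlength=3)
    fold_ratios.append(counts / counts.sum())
    print(f"fold {fold}: validation class counts = {counts}")
```

Each sample lands in exactly one validation fold, so the five trained models together have validated on the whole training set.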

# ============================ Imports ============================

from torchvision import transforms,models

from torch.utils.data import Dataset,DataLoader

import pandas as pd

from PIL import Image

import torch

import os

import copy

from sklearn.model_selection import StratifiedKFold

import albumentations as A

from albumentations.pytorch import ToTensorV2

import cv2

import numpy as np

import torch.nn as nn

'''
EDA (observations about the data):
1. Image sizes are inconsistent
2. All images are square
'''

# ============================ Helper functions ============================

def find_classes(fulldir):
    # Every sub-folder name is a class name
    classes = os.listdir(fulldir)
    classes.sort()
    # class name -> index
    class_to_idx = dict(zip(classes, range(len(classes))))
    # index -> class name
    idx_to_class = {v: k for k, v in class_to_idx.items()}
    train = []
    for i, label in idx_to_class.items():
        path = fulldir + "/" + label
        for file in os.listdir(path):
            train.append([f'{label}/{file}', label, i])
    # Columns 0, 1, 2: image path, class name, class index
    df = pd.DataFrame(train)
    return classes, class_to_idx, idx_to_class, df

# ============================ step 0/5 Parameters ============================

# Number of epochs
num_epoch = 1
# Batch size
batch_size = 64
# Number of DataLoader worker processes (0 loads data in the main process)
num_workers = 0
# Device
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
# Path to the training set
root_dir = "./plant-seedlings-classification/train"
# Class list, lookup tables and the file DataFrame
classes, class_to_idx, idx_to_class, df = find_classes(root_dir)
# Number of classes
num_classes = len(classes)

'''
StratifiedKFold comes from sklearn.
It splits the data by stratified sampling: the class proportions in each validation fold
match those of the original dataset, which is why the labels must be passed in when splitting.
'''
kf = StratifiedKFold(n_splits=5)
fold_idx = 0

# ============================ step 1/5 Data ============================

class PlantDataset(Dataset):
    def __init__(self, dataframe, root_dir, transform=None):
        self.transform = transform
        self.df = dataframe
        self.root_dir = root_dir

    def __len__(self):
        return len(self.df)

    def __getitem__(self, idx):
        fullpath = os.path.join(self.root_dir, self.df.iloc[idx, 0])
        image = Image.open(fullpath).convert('RGB')
        # albumentations expects an RGB NumPy array; float32 keeps the 0-255 range
        # that A.Normalize rescales by default
        image = np.asarray(image).astype(np.float32)
        if self.transform:
            # Option 1: torchvision transforms
            # image = self.transform(image)
            # Option 2: albumentations
            image = self.transform(image=image)['image']
        return image, int(self.df.iloc[idx, 2])

# Training-data preprocessing
# Option 1: torchvision transforms
# train_transform = transforms.Compose([
#     transforms.RandomRotation(180),
#     transforms.RandomAffine(degrees=0, translate=(0.3, 0.3)),
#     # transforms.CenterCrop(384),
#     transforms.Resize((324, 324)),
#     transforms.RandomHorizontalFlip(),
#     transforms.RandomVerticalFlip(),
#     transforms.ToTensor(),
#     transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
# ])

# Option 2: albumentations
train_transform = A.Compose([
    A.Resize(324, 324),
    # A.RandomRotate90(),
    # A.RandomCrop(256, 256),
    # A.HorizontalFlip(p=0.5),
    # A.VerticalFlip(p=0.5),
    A.Normalize(mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225)),
    ToTensorV2(),
])

# Validation-data preprocessing
# Option 1: torchvision transforms
# val_transform = transforms.Compose([
#     # transforms.CenterCrop(384),
#     transforms.Resize((324, 324)),
#     transforms.ToTensor(),
#     transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
# ])

# Option 2: albumentations
val_transform = A.Compose([
    A.Resize(324, 324),
    # A.RandomRotate90(),
    # A.RandomCrop(256, 256),
    # A.HorizontalFlip(p=0.5),
    # A.VerticalFlip(p=0.5),
    A.Normalize(mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225)),
    ToTensorV2(),
])

# ============================ step 5/5 Training ============================

# train_loader and val_loader are the globals created for the current fold in the loop below
def train_model(model, criterion, optimizer, scheduler, num_epochs=10, device=device, fold_idx=1):
    model.to(device)
    # Snapshot of the best weights seen so far
    best_model_wts = copy.deepcopy(model.state_dict())
    best_acc = 0.0
    for epoch in range(num_epochs):
        for phase in ["train", "val"]:
            if phase == "train":
                # Training mode: Dropout is active and BN uses batch statistics
                model.train()
                train_loss = 0.0
                train_acc = 0
                for index, (image, label) in enumerate(train_loader):
                    image = image.to(device)
                    label = label.to(device)
                    # Forward pass and loss
                    y_pred = model(image)
                    loss = criterion(y_pred, label)
                    print(f"Epoch {epoch + 1}/{num_epochs} Iteration {index + 1}/{len(train_loader)} loss: {loss.item()}")
                    train_loss += loss.item()
                    # Zero the gradients, backpropagate, update the parameters
                    optimizer.zero_grad()
                    loss.backward()
                    optimizer.step()
                    # Predicted class: argmax of the logits
                    y_pred_class = torch.argmax(y_pred, dim=1)
                    train_acc += (y_pred_class == label).sum().item() / len(y_pred)
                # Step the learning-rate schedule once per epoch
                scheduler.step()
                # Average loss and accuracy over the batches
                train_loss /= len(train_loader)
                train_acc /= len(train_loader)
                print("train_loss:", train_loss, "train_acc", train_acc)
            else:
                # Evaluation mode: Dropout is disabled and BN uses its running statistics
                model.eval()
                test_loss, test_acc = 0, 0
                # torch.inference_mode disables gradient tracking and speeds up inference
                with torch.inference_mode():
                    for image, label in val_loader:
                        image = image.to(device)
                        label = label.to(device)
                        test_pred_logits = model(image)
                        loss = criterion(test_pred_logits, label)
                        test_loss += loss.item()
                        # argmax returns the index of the largest value along the given dimension
                        test_pred_labels = test_pred_logits.argmax(dim=1)
                        test_acc += (test_pred_labels == label).sum().item() / len(test_pred_labels)
                # Average validation loss and accuracy
                test_loss = test_loss / len(val_loader)
                test_acc = test_acc / len(val_loader)
                # Keep the weights and save one checkpoint per fold whenever the validation accuracy improves
                if test_acc > best_acc:
                    best_acc = test_acc
                    best_model_wts = copy.deepcopy(model.state_dict())
                    torch.save(model, "best_model_fold_" + str(fold_idx) + ".pt")
        # Summary for the current epoch
        print(
            f"Epoch: {epoch + 1} | "
            f"train_loss: {train_loss:.4f} | "
            f"train_acc: {train_acc:.4f} | "
            f"test_loss: {test_loss:.4f} | "
            f"test_acc: {test_acc:.4f}"
        )
    # After training, restore the best weights and return the model
    model.load_state_dict(best_model_wts)
    return model

# Column 0 holds the file paths and column 2 the class indices used for stratification
for train_idx, val_idx in kf.split(df[0], df[2]):
    # print(train_idx, val_idx)
    X_train = df.iloc[train_idx]
    X_val = df.iloc[val_idx]

    # Dataset and DataLoader for the training set of this fold
    train_dataset = PlantDataset(X_train, root_dir, train_transform)
    train_loader = DataLoader(train_dataset, batch_size=batch_size, num_workers=num_workers, shuffle=True,
                              drop_last=True)

    # Dataset and DataLoader for the validation set of this fold
    val_dataset = PlantDataset(X_val, root_dir, val_transform)
    val_loader = DataLoader(val_dataset, batch_size=batch_size, num_workers=num_workers, drop_last=True)

    # ============================ step 2/5 Model ============================
    '''
    Model family: efficientnet (a fresh model is created for every fold)
    '''
    # model = models.efficientnet_b2(True)
    model = models.efficientnet_b2(weights=models.EfficientNet_B2_Weights.DEFAULT)

    # Freeze the pretrained parameters
    for param in model.parameters():
        param.requires_grad = False

    # model.avgpool = nn.AdaptiveAvgPool2d(1)
    model.classifier = nn.Sequential(
        torch.nn.Linear(1408, 256),
        torch.nn.ReLU(),
        torch.nn.Dropout(0.4),
        torch.nn.Linear(256, num_classes)
    )
    model = model.to(device)

    # ============================ step 3/5 Loss function ============================
    # Cross-entropy loss
    criterion = torch.nn.CrossEntropyLoss()

    # ============================ step 4/5 Optimizer ============================
    optimizer = torch.optim.Adam(model.parameters(), lr=0.005)
    # Learning-rate schedule
    exp_lr_scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.7)

    # Train this fold
    model_ft = train_model(model, criterion, optimizer, exp_lr_scheduler, num_epochs=num_epoch, fold_idx=fold_idx)

    # Move on to the next fold
    fold_idx += 1

Sample code for submitting results to Kaggle

class TestPlant(Dataset):
    def __init__(self, rootdir, transform=None):
        self.transform = transform
        self.rootdir = rootdir
        self.image_files = os.listdir(self.rootdir)

    def __len__(self):
        return len(self.image_files)

    def __getitem__(self, idx):
        fullpath = os.path.join(self.rootdir, self.image_files[idx])
        image = Image.open(fullpath).convert('RGB')
        # albumentations expects an RGB NumPy array
        image = np.asarray(image).astype(np.float32)
        if self.transform:
            # Option 1: torchvision transforms
            # image = self.transform(image)
            # Option 2: albumentations
            image = self.transform(image=image)['image']
        # The test set has no labels, so return the file name instead
        return image, self.image_files[idx]

test_dataset = TestPlant(rootdir="/kaggle/input/plant-seedlings-classification/test", transform=val_transform)
# shuffle=False keeps the images in the same order as the file listing
test_loader = DataLoader(test_dataset, batch_size=batch_size, num_workers=num_workers, shuffle=False)
test_filenames = os.listdir("/kaggle/input/plant-seedlings-classification/test")

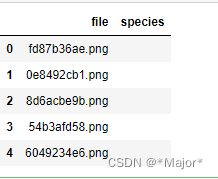

submission = pd.DataFrame(test_filenames, columns=["file"])
submission["species"] = ""
submission.head()

def test(submission, test_loader, model, device=device):
    model.to(device)
    with torch.no_grad():
        for image, file_names in test_loader:
            image = image.to(device)
            y_pred = model(image)
            y_pred_labels = y_pred.argmax(dim=1)
            y_pred_labels = y_pred_labels.cpu().numpy()
            # Relies on the batch files appearing in the same order as in `submission`
            submission.loc[submission["file"].isin(file_names), "species"] = y_pred_labels

# The checkpoint is a whole pickled model. Recent PyTorch releases default to weights_only=True,
# in which case loading it requires torch.load(path, weights_only=False)
model = torch.load("/kaggle/working/best_model.pt")
model.eval()
test(submission, test_loader, model)

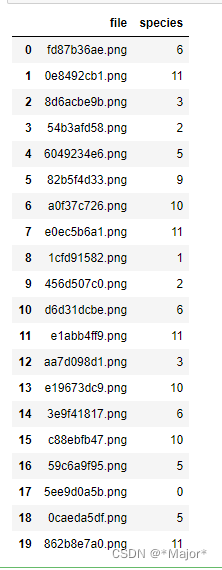

submission.head(20)

# Map the predicted class indices back to class names
submission = submission.replace({"species": idx_to_class})

submission.to_csv("/kaggle/working/submission.csv", index=False)
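The `isin`-based assignment in `test` works because the loader is not shuffled and `os.listdir` returns the files in the same order both times. A variant that does not depend on that ordering is to collect file names and predictions side by side and build the DataFrame at the end. The sketch below uses made-up batches, and the helper name `build_submission` is an assumption for the illustration.

```python
import pandas as pd

def build_submission(batches, idx_to_class):
    """Build a submission table from (file_names, predicted_indices) batches."""
    files, species = [], []
    for file_names, pred_indices in batches:
        files.extend(file_names)
        # Map every predicted index to its class name right away
        species.extend(idx_to_class[int(i)] for i in pred_indices)
    return pd.DataFrame({"file": files, "species": species})

# Toy data standing in for the real loader output
toy_idx_to_class = {0: "Black-grass", 1: "Charlock"}
toy_batches = [(["a.png", "b.png"], [1, 0]), (["c.png"], [1])]
submission_demo = build_submission(toy_batches, toy_idx_to_class)
print(submission_demo)
```

Each prediction stays attached to the file name it came from, whatever order the batches arrive in.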

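Compare different data augmentation methods (MixUp vs. CutMix)

Compare how different pretrained models affect the final accuracy

Split the dataset with cross-validation and ensemble the predictions on the test set

CutMix_MixUp_Raw_5F_Eff_Train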

from torch.utils.data import Dataset, DataLoader

import pandas as pd

from PIL import Image

import torch

import os

from torchvision import transforms

import time

import numpy as np

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

batch_size = 16

num_workers = 0

def find_classes(fulldir):
    # Every sub-folder name is a class name
    classes = os.listdir(fulldir)
    classes.sort()
    class_to_idx = dict(zip(classes, range(len(classes))))
    idx_to_class = {v: k for k, v in class_to_idx.items()}
    train = []
    for i, label in idx_to_class.items():
        path = fulldir + "/" + label
        for file in os.listdir(path):
            train.append([f'{label}/{file}', label, i])
    df = pd.DataFrame(train, columns=["file", "class", "class_index"])
    return classes, class_to_idx, idx_to_class, df

# Path to the training set
root_dir = "./plant-seedlings-classification/train"

# Class list, lookup tables and the file DataFrame
classes, class_to_idx, idx_to_class, df = find_classes(root_dir)

class TestPlant(Dataset):
    def __init__(self, rootdir, transform=None):
        self.transform = transform
        self.rootdir = rootdir
        self.image_files = os.listdir(self.rootdir)

    def __len__(self):
        return len(self.image_files)

    def __getitem__(self, idx):
        fullpath = os.path.join(self.rootdir, self.image_files[idx])
        image = Image.open(fullpath).convert('RGB')
        if self.transform:
            image = self.transform(image)
        # The test set has no labels, so return the file name instead
        return image, self.image_files[idx]

# Validation/test preprocessing
val_transform = transforms.Compose([
    # transforms.CenterCrop(384),
    transforms.Resize((324, 324)),
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
])

test_dataset = TestPlant(rootdir="./plant-seedlings-classification/test", transform=val_transform)

# shuffle is off so that images are read in the same order every time
test_loader = DataLoader(test_dataset, batch_size=batch_size, num_workers=num_workers, shuffle=False)

test_filenames = os.listdir("./plant-seedlings-classification/test")
submission = pd.DataFrame(test_filenames, columns=["file"])
submission["species"] = ""
submission.head()

def test(submission, test_loader, model, device=device):
    model.to(device)
    with torch.no_grad():
        for image, file_names in test_loader:
            image = image.to(device)
            y_pred = model(image)
            y_pred_labels = y_pred.argmax(dim=1)
            y_pred_labels = y_pred_labels.cpu().numpy()
            submission.loc[submission["file"].isin(file_names), "species"] = y_pred_labels

'''
TTA: Test Time Augmentation

Definition: data augmentation applied at test time.
Method: augment the original test data in several different ways, then average the predictions to get the final result.
Effect: can further improve the accuracy of the final result.

With TTA, one image is turned into several versions through different transforms
(for example different crops or scales); the model predicts every version and the
averaged output is used as the final result, which makes predictions more stable and accurate.
'''
def predict(test_loader, model, tta=10):
    # Evaluation mode
    model.eval()
    # Accumulated TTA predictions of this single model
    test_pred_tta = None
    # Repeat the prediction `tta` times
    for _ in range(tta):
        print("tta time")
        test_pred = []
        with torch.no_grad():
            for image, file_names in test_loader:
                image = image.to(device)
                # Logits for one batch, shape 16 x 12
                output = model(image)
                output = output.cpu().numpy()
                test_pred.append(output)
        # Stack the batch outputs vertically, shape 794 x 12
        test_pred = np.vstack(test_pred)
        if test_pred_tta is None:
            test_pred_tta = test_pred
        else:
            # Element-wise sum, shape stays 794 x 12
            test_pred_tta += test_pred
    return test_pred_tta

'''
Predict with every fold model and combine the results
'''
# Accumulated prediction over all models
test_pred = None

for model_path in ['best_model_fold_0.pt', 'best_model_fold_1.pt', 'best_model_fold_2.pt', 'best_model_fold_3.pt', 'best_model_fold_4.pt']:
    print("Loading model:", model_path)
    # Load a whole pickled model (recent PyTorch releases may require weights_only=False here)
    model = torch.load(model_path)
    if test_pred is None:
        # shape: 794 x 12
        test_pred = predict(test_loader, model, 5)
    else:
        # shape: 794 x 12 (element-wise sum)
        test_pred += predict(test_loader, model, 5)

# File names of all test images
test_filenames = os.listdir("./plant-seedlings-classification/test")

# Initialize the DataFrame
submission = pd.DataFrame(test_filenames, columns=["file"])

# Predicted class index
submission["species"] = np.argmax(test_pred, 1)

# Convert the predicted index to the class name
submission = submission.replace({"species": idx_to_class})

# Save as submission.csv
submission.to_csv("submission.csv", index=False)
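One caveat about the TTA loop in `predict`: the test loader uses `val_transform`, which only resizes, converts and normalizes. Every pass therefore sees identical inputs and returns identical outputs, so repeating it adds nothing. TTA only helps when the passes see different views of each image. The NumPy sketch below illustrates the idea with deterministic flips and averaged softmax probabilities. `toy_model` is a made-up stand-in for a trained network, and the function names are assumptions for the example.

```python
import numpy as np

def softmax(logits):
    """Row-wise softmax with the usual max-subtraction for numerical stability."""
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def predict_tta(model_fn, images):
    """Average class probabilities over three views; images has shape (N, C, H, W)."""
    views = [
        images,                  # original
        images[:, :, :, ::-1],   # horizontal flip
        images[:, :, ::-1, :],   # vertical flip
    ]
    probs = [softmax(model_fn(view)) for view in views]
    return np.mean(probs, axis=0)

def toy_model(images):
    # Hypothetical "network": two logits from the mean of the left and right image halves
    half = images.shape[3] // 2
    left = images[:, :, :, :half].mean(axis=(1, 2, 3))
    right = images[:, :, :, half:].mean(axis=(1, 2, 3))
    return np.stack([left, right], axis=1)

rng = np.random.default_rng(0)
batch = rng.random((4, 3, 8, 8))
tta_probs = predict_tta(toy_model, batch)
print(tta_probs)
```

Averaging probabilities rather than summing raw logits also keeps models with different logit scales comparable when several fold models are ensembled.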

# ============================ Imports ============================

from torchvision import transforms,models

from torch.utils.data import Dataset,DataLoader

import pandas as pd

from PIL import Image

import torch

import os

import copy

from sklearn.model_selection import StratifiedKFold

import albumentations as A

from albumentations.pytorch import ToTensorV2

import cv2

import numpy as np

import torch.nn as nn

'''

ETA:

1.图像大一不一致

2.图像都为正方形

'''

# ============================ 辅助函数 ============================

def find_classes(fulldir):

# 获取所有文件夹名称

classes = os.listdir(fulldir)

classes.sort()

# 类名-id 字典

class_to_idx = dict(zip(classes, range(len(classes))))

# id-类名 字典

idx_to_class = {v: k for k, v in class_to_idx.items()}

train = []

for i, label in idx_to_class.items():

path = fulldir + "/" + label

for file in os.listdir(path):

train.append([f'{label}/{file}', label, i])

# 图像路径 标签 标签id

df = pd.DataFrame(train)

return classes, class_to_idx, idx_to_class, df

# ============================ step 0/5 参数设置 ============================

# 训练轮次

num_epoch = 1

# 批大小

batch_size = 64

# 多线程读取数据

num_workers = 0

# 设备

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# 训练集路径

root_dir = "./plant-seedlings-classification/train"

# 获取相关信息

classes, class_to_idx, idx_to_class, df = find_classes(root_dir)

# 类别个数

num_classes = len(classes)

'''

StratifiedKFold函数是从sklearn模块中导出的函数,

StratifiedKFold函数采用分层划分的方法(分层随机抽样思想),验证集中不同类别占比与原始样本的比例保持一致,

故StratifiedKFold在做划分的时候需要传入标签特征。

'''

kf = StratifiedKFold(n_splits=5)

fold_idx = 0
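To make the idea of a stratified split concrete, here is a rough pure-Python sketch that deals the indices of each class round-robin into the folds. It is not scikit-learn's implementation; the function name `stratified_folds` and the toy labels are assumptions for illustration only.

```python
from collections import Counter, defaultdict


def stratified_folds(labels, n_splits):
    """Assign every sample index to one fold so class ratios stay roughly equal."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    folds = [[] for _ in range(n_splits)]
    for indices in by_class.values():
        # Deal the samples of one class into the folds like playing cards.
        for position, idx in enumerate(indices):
            folds[position % n_splits].append(idx)
    return folds


# Toy imbalanced dataset (hypothetical): 10 samples of "a", 5 of "b".
labels = ["a"] * 10 + ["b"] * 5
folds = stratified_folds(labels, n_splits=5)
fold_counts = [Counter(labels[i] for i in fold) for fold in folds]
```

In this toy case every fold receives two "a" samples and one "b" sample, the same 2:1 ratio as the full set, which is the property the note above describes.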

# ============================ step 1/5 Data ============================

'''
CutMix data augmentation
'''

def rand_bbox(size, lam):
    W = size[2]
    H = size[3]
    cut_rat = np.sqrt(1. - lam)
    # np.int was removed in NumPy 1.24; the built-in int is used instead
    cut_w = int(W * cut_rat)
    cut_h = int(H * cut_rat)
    # Sample the patch center uniformly
    cx = np.random.randint(W)
    cy = np.random.randint(H)
    # Clip the patch to the image borders
    bbx1 = np.clip(cx - cut_w // 2, 0, W)
    bby1 = np.clip(cy - cut_h // 2, 0, H)
    bbx2 = np.clip(cx + cut_w // 2, 0, W)
    bby2 = np.clip(cy + cut_h // 2, 0, H)
    return bbx1, bby1, bbx2, bby2

def cutmix(data, targets, alpha):
    # Sample lam from Beta(alpha, alpha); if alpha <= 0, set lam to 1
    if alpha > 0:
        lam = np.random.beta(alpha, alpha)
    else:
        lam = 1
    # Random permutation of the batch indices
    indices = torch.randperm(data.size(0))
    # Images in the permuted order (a copy of the batch)
    shuffled_data = data[indices]
    # Labels in the permuted order
    shuffled_targets = targets[indices]
    # Sample the patch to paste
    bbx1, bby1, bbx2, bby2 = rand_bbox(data.size(), lam)
    # Paste the patch from the permuted batch (note: data is modified in place)
    data[:, :, bbx1:bbx2, bby1:bby2] = shuffled_data[:, :, bbx1:bbx2, bby1:bby2]
    # Adjust lam so that it exactly matches the pixel ratio
    lam = 1 - ((bbx2 - bbx1) * (bby2 - bby1) / (data.size()[-1] * data.size()[-2]))
    return data, targets, shuffled_targets, lam
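The reason `cutmix` recomputes `lam` after sampling the box is that clipping at the image border can shrink the pasted patch. The following sketch reproduces the box arithmetic of `rand_bbox` with a fixed patch center so that the effect is visible; the helper names `cut_box` and `adjusted_lam` and the 100x100 image size are assumptions for illustration.

```python
import math


def cut_box(width, height, lam, cx, cy):
    """Clipped CutMix box (x1, y1, x2, y2) for a patch centered at (cx, cy)."""
    cut_ratio = math.sqrt(1.0 - lam)
    cut_w = int(width * cut_ratio)
    cut_h = int(height * cut_ratio)

    def clip(value, upper):
        return max(0, min(value, upper))

    return (clip(cx - cut_w // 2, width), clip(cy - cut_h // 2, height),
            clip(cx + cut_w // 2, width), clip(cy + cut_h // 2, height))


def adjusted_lam(box, width, height):
    """Fraction of the image that still belongs to the original sample."""
    x1, y1, x2, y2 = box
    return 1.0 - (x2 - x1) * (y2 - y1) / (width * height)


# Patch fully inside the image: the sampled lam is preserved.
inner = cut_box(100, 100, lam=0.75, cx=50, cy=50)
lam_inner = adjusted_lam(inner, 100, 100)

# Patch centered on a corner: three quarters of it fall outside and are clipped.
corner = cut_box(100, 100, lam=0.75, cx=0, cy=0)
lam_corner = adjusted_lam(corner, 100, 100)
```

With the patch on the corner only a 25x25 region is replaced, so the loss weight of the original label rises from 0.75 to 0.9375; using the unadjusted value would overstate the contribution of the pasted image.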

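'''
MixUp data augmentation
'''

def mixup(data, targets, alpha=1.0):
    # Sample lam from Beta(alpha, alpha); if alpha <= 0, set lam to 1
    if alpha > 0:
        lam = np.random.beta(alpha, alpha)
    else:
        lam = 1
    # Random index permutation, shape (batch_size,), e.g. (24,)
    indices = torch.randperm(data.size(0))  # data.size(0) is the batch size
    # Images in the permuted order, e.g. shape (24, 3, 324, 324)
    shuffled_data = data[indices]
    # Labels in the permuted order, e.g. shape (24,)
    shuffled_targets = targets[indices]
    # Blend the two sets of images
    mix_data = data * lam + shuffled_data * (1 - lam)
    # Return the blended images, both label sets, and the blend weight
    return mix_data, targets, shuffled_targets, lam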
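As a small worked example of the blend in `mixup`, the sketch below mixes two tiny "images" stored as flat lists and builds the soft label that the blend implies. The helper names `mixup_pair` and `soft_label` and the pixel values are hypothetical; the real function operates on whole tensor batches.

```python
def mixup_pair(image_a, image_b, lam):
    """Pixel-wise convex combination of two images of equal size."""
    return [lam * a + (1.0 - lam) * b for a, b in zip(image_a, image_b)]


def soft_label(label_a, label_b, lam, num_classes):
    """Label distribution implied by the blend: lam on label_a, 1 - lam on label_b."""
    target = [0.0] * num_classes
    target[label_a] += lam
    target[label_b] += 1.0 - lam
    return target


# Hypothetical two-pixel images and labels.
mixed = mixup_pair([0.0, 1.0], [1.0, 0.0], lam=0.25)
target = soft_label(0, 2, lam=0.25, num_classes=3)
```

Here `mixed` is `[0.75, 0.25]` and `target` is `[0.25, 0.0, 0.75]`: the image is 25% sample A and 75% sample B, and the label weights follow the same proportions.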

class PlantDataset(Dataset):
    def __init__(self, dataframe, root_dir, transform=None):
        self.transform = transform
        self.df = dataframe
        self.root_dir = root_dir

    def __len__(self):
        return len(self.df)

    def __getitem__(self, idx):
        # Column 0 holds the relative image path
        fullpath = os.path.join(self.root_dir, self.df.iloc[idx][0])
        image = Image.open(fullpath).convert('RGB')
        # RGB -> BGR -> float32 -> RGB; the net result is a float32 RGB array
        image = cv2.cvtColor(np.asarray(image), cv2.COLOR_RGB2BGR)
        image = image.astype(np.float32)
        image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
        if self.transform:
            # Option 1: torchvision transforms
            # image = self.transform(image)
            # Option 2: albumentations
            image = self.transform(image=image)['image']
        # Column 2 holds the label id
        return image, self.df.iloc[idx][2]

# Training data preprocessing
# Option 1: torchvision transforms
# train_transform = transforms.Compose([
#     transforms.RandomRotation(180),
#     transforms.RandomAffine(degrees=0, translate=(0.3, 0.3)),
#     # transforms.CenterCrop(384),
#     transforms.Resize((324, 324)),
#     transforms.RandomHorizontalFlip(),
#     transforms.RandomVerticalFlip(),
#     transforms.ToTensor(),
#     transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
# ])

# Option 2: albumentations
train_transform = A.Compose([
    A.Resize(324, 324),
    # A.RandomRotate90(),
    # A.RandomCrop(256, 256),
    # A.HorizontalFlip(p=0.5),
    # A.VerticalFlip(p=0.5),
    A.Normalize(mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225)),
    ToTensorV2(),
])

# Validation data preprocessing
# Option 1: torchvision transforms
# val_transform = transforms.Compose([
#     # transforms.CenterCrop(384),
#     transforms.Resize((324, 324)),
#     transforms.ToTensor(),
#     transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
# ])

# Option 2: albumentations
val_transform = A.Compose([
    A.Resize(324, 324),
    # A.RandomRotate90(),
    # A.RandomCrop(256, 256),
    # A.HorizontalFlip(p=0.5),
    # A.VerticalFlip(p=0.5),
    A.Normalize(mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225)),
    ToTensorV2(),
])

# ============================ step 5/5 Training ============================

def mixup_criterion(criterion, pred, y_a, y_b, lam):
    # Weighted sum of the losses against both label sets
    return lam * criterion(pred, y_a) + (1 - lam) * criterion(pred, y_b)

def cutmix_criterion(criterion, output, target_a, target_b, lam):
    # Same weighting as MixUp; lam is the area ratio kept from the original image
    return criterion(output, target_a) * lam + criterion(output, target_b) * (1. - lam)
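Both criterion helpers compute the same quantity: the loss against the first label weighted by `lam` plus the loss against the second label weighted by `1 - lam`. A single-sample sketch in plain Python shows the behavior; the hand-written `cross_entropy` is a stand-in for `torch.nn.CrossEntropyLoss` on one example, and the logits are made-up values.

```python
import math


def cross_entropy(logits, target):
    """Negative log-softmax of the target class for one sample."""
    peak = max(logits)  # subtract the maximum for numerical stability
    log_sum = peak + math.log(sum(math.exp(v - peak) for v in logits))
    return log_sum - logits[target]


def mixed_loss(logits, y_a, y_b, lam):
    """lam * CE(logits, y_a) + (1 - lam) * CE(logits, y_b)."""
    return lam * cross_entropy(logits, y_a) + (1.0 - lam) * cross_entropy(logits, y_b)


logits = [2.0, 0.5, -1.0]  # hypothetical model output for three classes

# lam = 1 means no mixing, so the result is the ordinary cross-entropy.
loss_unmixed = mixed_loss(logits, 0, 2, lam=1.0)

# With lam = 0.3 most of the weight goes to class 2, which the model rates low.
loss_mixed = mixed_loss(logits, 0, 2, lam=0.3)
```

Because the model in this toy case favors class 0, shifting weight toward class 2 raises the loss, which is the pressure that pushes predictions toward the blended label.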

'''
Model training (single fold)
'''

def train_model(model, criterion, optimizer, scheduler, num_epochs=10, device=device, fold_idx=1):
    # since = time.time()
    model.to(device)
    # Deep-copy the initial weights
    best_model_wts = copy.deepcopy(model.state_dict())
    # Best validation accuracy so far
    best_acc = 0.0
    # Training loop
    for epoch in range(num_epochs):
        for phase in ["train", "val"]:
            if phase == "train":
                # Training mode: Dropout is active and BatchNorm uses batch statistics
                model.train()
                # Running loss and accuracy
                train_loss = 0.0
                train_acc = 0
                for index, (image, label) in enumerate(train_loader):
                    # Move images and labels to the device
                    image = image.to(device)
                    label = label.to(device)
                    # A single random draw decides the augmentation for this batch
                    probability = np.random.rand()
                    if probability < 0.3:
                        # MixUp augmentation
                        inputs, targets_a, targets_b, lam = mixup(image, label, alpha=1.0)
                        # Forward pass
                        outputs = model(inputs)
                        # Loss
                        loss = mixup_criterion(criterion, outputs, targets_a, targets_b, lam)
                    elif probability < 0.6:
                        # CutMix augmentation
                        inputs, targets_a, targets_b, lam = cutmix(image, label, alpha=1.0)
                        # Forward pass
                        outputs = model(inputs)
                        # Loss
                        loss = cutmix_criterion(criterion, outputs, targets_a, targets_b, lam)
                    else:
                        # Original images: forward pass and loss
                        outputs = model(image)
                        loss = criterion(outputs, label)
                    # Print the current loss
                    print("Epoch", epoch + 1, "/", num_epochs, "Iteration", index + 1, "/", len(train_loader), "loss:", loss.item())
                    # Accumulate the loss
                    train_loss += loss.item()
                    # Zero the gradients
                    optimizer.zero_grad()
                    # Backward pass
                    loss.backward()
                    # Update the model parameters
                    optimizer.step()
                    # Predicted classes
                    y_pred_class = torch.argmax(torch.softmax(outputs, dim=1), dim=1)
                    # Accuracy against the original labels (approximate for mixed batches)
                    train_acc += (y_pred_class == label).sum().item() / len(outputs)
                # Step the learning-rate scheduler once per epoch
                scheduler.step()
                # Average loss and accuracy over the epoch
                train_loss /= len(train_loader)
                train_acc /= len(train_loader)
                print("train_loss:", train_loss, "train_acc", train_acc)
            else:
                # Evaluation mode: Dropout is disabled and BatchNorm uses its running statistics
                model.eval()
                # Validation loss and accuracy
                test_loss, test_acc = 0, 0
                # torch.inference_mode disables gradient tracking, which speeds up inference
                with torch.inference_mode():
                    for image, label in val_loader:
                        image = image.to(device)
                        label = label.to(device)
                        # Forward pass
                        test_pred_logits = model(image)
                        # Loss
                        loss = criterion(test_pred_logits, label)
                        test_loss += loss.item()
                        # argmax returns the index of the largest value along the given dimension
                        test_pred_labels = test_pred_logits.argmax(dim=1)
                        # Batch accuracy
                        test_acc += ((test_pred_labels == label).sum().item() / len(test_pred_labels))
                # Average validation loss and accuracy
                test_loss = test_loss / len(val_loader)
                test_acc = test_acc / len(val_loader)
                # Keep the weights whenever the validation accuracy improves
                if test_acc > best_acc:
                    best_acc = test_acc
                    best_model_wts = copy.deepcopy(model.state_dict())
                    # Save the best model so far
                    torch.save(model, "best_model_fold_" + str(fold_idx) + ".pt")
                # Print the summary for this epoch
                print(
                    f"Epoch: {epoch + 1} | "
                    f"train_loss: {train_loss:.4f} | "
                    f"train_acc: {train_acc:.4f} | "
                    f"test_loss: {test_loss:.4f} | "
                    f"test_acc: {test_acc:.4f}"
                )
    # After training, restore and return the best model
    model.load_state_dict(best_model_wts)
    return model

'''
Five-fold training (kf.split(training images, labels))
'''

for train_idx, val_idx in kf.split(df[0], df[2]):
    # print(train_idx, val_idx)
    X_train = df.iloc[train_idx]
    X_val = df.iloc[val_idx]

    # Build the training Dataset and DataLoader
    train_dataset = PlantDataset(X_train, root_dir, train_transform)
    train_loader = DataLoader(train_dataset, batch_size=batch_size, num_workers=num_workers, shuffle=True,
                              drop_last=True)

    # Build the validation Dataset and DataLoader (no drop_last, so every validation sample is scored)
    val_dataset = PlantDataset(X_val, root_dir, val_transform)
    val_loader = DataLoader(val_dataset, batch_size=batch_size, num_workers=num_workers)

    # ============================ step 2/5 Model ============================
    # Model family: EfficientNet
    # model = models.efficientnet_b2(True)
    model = models.efficientnet_b2(weights=models.EfficientNet_B2_Weights.DEFAULT)

    # Freeze the pretrained parameters
    for param in model.parameters():
        param.requires_grad = False

    # Replace the classifier head; the new layers are trainable
    # model.avgpool = nn.AdaptiveAvgPool2d(1)
    model.classifier = nn.Sequential(
        torch.nn.Linear(1408, 256),
        torch.nn.ReLU(),
        torch.nn.Dropout(0.4),
        torch.nn.Linear(256, num_classes)
    )
    model = model.to(device)

    # ============================ step 3/5 Loss function ============================
    # Cross-entropy loss
    criterion = torch.nn.CrossEntropyLoss()

    # ============================ step 4/5 Optimizer ============================
    # Optimizer
    optimizer = torch.optim.Adam(model.parameters(), lr=0.005)

    # Learning-rate schedule: multiply the learning rate by 0.7 every 10 scheduler steps
    exp_lr_scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.7)

    # Train this fold
    model_ft = train_model(model, criterion, optimizer, exp_lr_scheduler, num_epochs=num_epoch, fold_idx=fold_idx)

    # Move on to the next fold
    fold_idx += 1

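'''
Testing options
1. Fuse all trained KFold models (voting, or merging the predicted values)
2. Retrain the best configuration on the full dataset, then predict
3. Hyperparameter tuning and validation
'''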
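Option 1 mentions voting as one way to fuse the fold models. A minimal hard-voting sketch, assuming each fold model has already produced one predicted class index per test image, could look like this; the function name `majority_vote` and the toy predictions are hypothetical.

```python
from collections import Counter


def majority_vote(fold_predictions):
    """Pick, for every image, the class predicted by the most fold models.

    fold_predictions is a list with one entry per fold; each entry is a list
    of predicted class indices, one per image. On a tie, the class seen first
    (the one from the earliest fold) wins.
    """
    voted = []
    for labels in zip(*fold_predictions):
        voted.append(Counter(labels).most_common(1)[0][0])
    return voted


# Hypothetical predictions from three fold models for three images.
fold_predictions = [
    [0, 1, 2],
    [0, 2, 2],
    [1, 2, 2],
]
final_labels = majority_vote(fold_predictions)
```

Hard voting discards the confidence of each model; merging the predicted values, the other variant named in option 1, keeps that information by combining scores before taking the argmax.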

Fusing predictions from different models

Ids = test_data.index.tolist()

# Weights of the two models
ratio_1 = 0.65
ratio_2 = 0.35

# Merge the predictions of the two models
def pred_ensemble(p1, p2):
    # Weighted sum of the two models' predicted probabilities
    p = p1*ratio_1 + p2*ratio_2  # + p3*w3
    return p.argmax()

# Iterate over the image IDs and the probabilities from both models
for img_id, p1, p2 in zip(Ids, Probs_1, Probs_2):
    # Merge the two predictions with pred_ensemble and write the class name into the submission table
    test_data.loc[img_id, 'species'] = idx_to_species[pred_ensemble(p1, p2)]
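To see what the 0.65/0.35 weighting does to a single image, the same computation can be written with plain lists. This is only an illustrative sketch: the function name `weighted_ensemble` and the probability vectors are made up, and the weights simply reuse the values of `ratio_1` and `ratio_2`.

```python
def weighted_ensemble(probs_1, probs_2, w1=0.65, w2=0.35):
    """Blend two probability vectors and return the index of the winning class."""
    blended = [w1 * a + w2 * b for a, b in zip(probs_1, probs_2)]
    return max(range(len(blended)), key=blended.__getitem__)


# Model 1 slightly prefers class 0, model 2 strongly prefers class 1.
disagree = weighted_ensemble([0.5, 0.4, 0.1], [0.1, 0.8, 0.1])

# Model 1 is very confident in class 0, so its larger weight decides.
confident = weighted_ensemble([0.9, 0.1, 0.0], [0.2, 0.8, 0.0])
```

In the first case the lower-weighted model still wins because it is much more confident; in the second the higher-weighted model prevails. The weights are typically chosen from each model's validation score.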
