2017_MobileNetV2_Google:

Figures:

Network description:

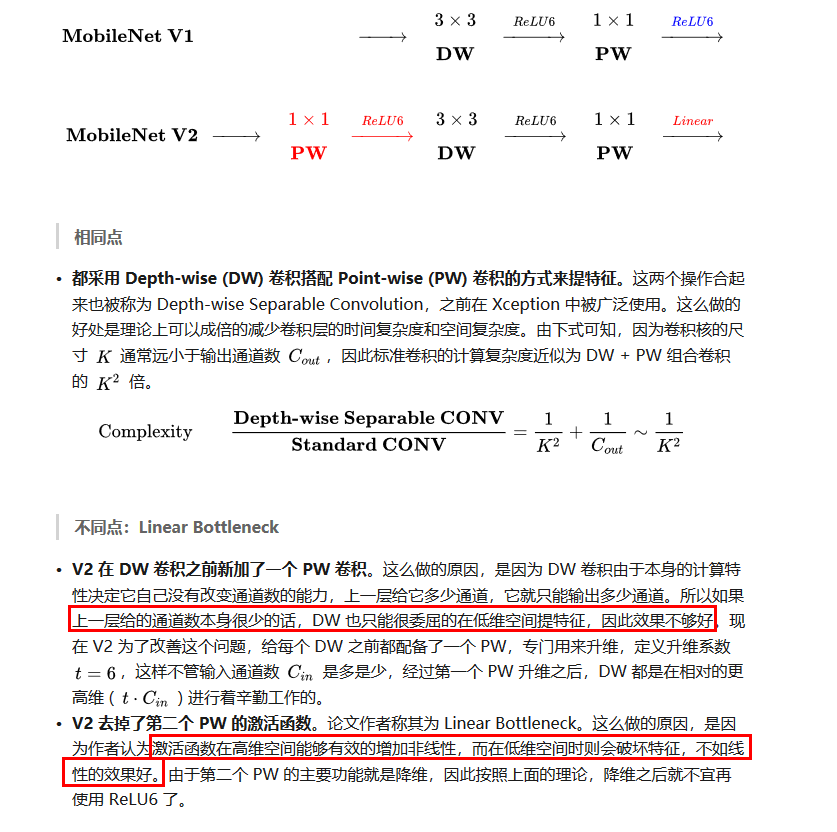

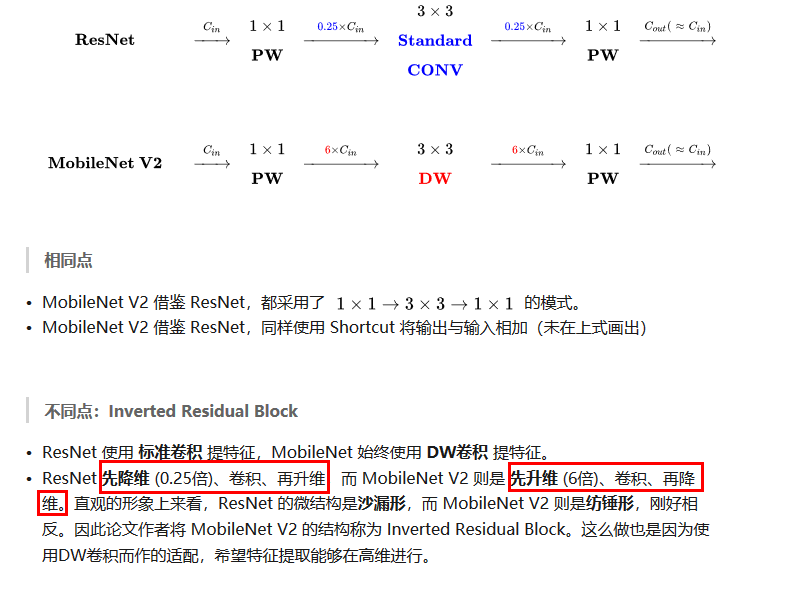

MobileNet V2 introduces the inverted residual with linear bottleneck block.
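The "linear bottleneck" part rests on the intuition that ReLU destroys information when it is applied in a low-dimensional space, but much less so after the data has been expanded into many dimensions. The toy sketch below is loosely modeled on the paper's spiral illustration; the random expansion matrix `T`, the spiral, and the "recoverable" criterion (a point counts as recoverable if the coordinates that survive ReLU still pin down its 2-D position) are our own assumptions, not taken from the post.

```python
import numpy as np


def recoverable_fraction(n_dims, n_points=500, seed=0):
    """Embed a 2-D spiral into n_dims with a random matrix, apply ReLU,
    and return the fraction of points still uniquely determined afterwards."""
    rng = np.random.default_rng(seed)
    theta = np.linspace(1.0, 4 * np.pi + 1.0, n_points)  # start at 1 to avoid the origin
    X = np.stack([theta * np.cos(theta), theta * np.sin(theta)])  # shape (2, N)
    T = rng.standard_normal((n_dims, 2))  # random expansion 2 -> n_dims
    Y = np.maximum(T @ X, 0.0)  # ReLU in the expanded space
    recoverable = 0
    for i in range(n_points):
        active = Y[:, i] > 0  # coordinates that ReLU left untouched (y_k = t_k . x)
        # x is pinned down exactly when the active rows of T span R^2
        if active.sum() >= 2 and np.linalg.matrix_rank(T[active]) == 2:
            recoverable += 1
    return recoverable / n_points


for n in (2, 3, 5, 15, 30):
    print(n, recoverable_fraction(n))
```

With only 2 dimensions, at most about half of the spiral survives ReLU intact; after expanding to 30 dimensions essentially every point does, which is why MobileNetV2 applies ReLU6 only in the expanded layer and keeps the narrow projection linear.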

Expand (1x1 conv) -> extract features (3x3 depthwise conv) -> project/compress (1x1 conv)
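The cost of these three steps is easy to count by hand. A minimal sketch for a stride-1 block, ignoring BatchNorm and biases (the function name is ours); the multiply-add count it produces matches the h · w · d' · t(d' + k² + d'') expression given in the MobileNetV2 paper for this block:

```python
def inverted_residual_cost(h, w, d_in, d_out, t, k=3):
    """Weights and multiply-adds of one stride-1 inverted residual block:
    1x1 expand -> k x k depthwise -> 1x1 project (BN and biases ignored)."""
    d_mid = t * d_in  # expanded width
    expand = d_in * d_mid  # 1x1 conv: d_in -> t * d_in
    depthwise = k * k * d_mid  # one k x k filter per expanded channel
    project = d_mid * d_out  # 1x1 conv: t * d_in -> d_out
    params = expand + depthwise + project  # = t * d_in * (d_in + k*k + d_out)
    mult_adds = h * w * params  # stride 1: each weight is used once per output pixel
    return params, mult_adds


print(inverted_residual_cost(56, 56, 24, 24, t=6))  # (8208, 25740288)
```

The depthwise step is the cheap one (1296 of the 8208 weights here); most of the cost sits in the two 1x1 convolutions.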

Features and advantages:

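(1) It uses residual connections but inverts the usual bottleneck: each block first expands the channel count and then projects it back down, and the shortcut connects the thin bottleneck layers. This improves gradient propagation and significantly reduces the memory needed during inference.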

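(2) The ReLU after the narrow layer (low dimension, i.e. few channels) is removed, which preserves feature diversity and strengthens the network's representational power (Linear Bottlenecks).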

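(3) The network is fully convolutional, so the model can accept input images of different sizes. It uses the ReLU6 activation (output capped at 6), which makes the model more robust under low-precision computation.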

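(4) The MobileNetV2 building block is shown in the figures above. When downsampling is needed, use a stride-2 convolution in the depthwise (DWise) step. Small networks use a small expansion factor and larger networks a somewhat larger one; values of 5 to 10 are recommended, and the paper uses t = 6.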
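Item (4) and the residual rule can be made concrete by tracking tensor shapes through one block. A small sketch, assuming 'same' padding (so a stride-2 depthwise conv halves height and width, rounding up); `bottleneck_shapes` is a hypothetical helper:

```python
import math


def bottleneck_shapes(h, w, c_in, c_out, t, stride):
    """Trace (height, width, channels) through one bottleneck block."""
    expanded = (h, w, c_in * t)  # 1x1 expand by factor t
    h_out, w_out = math.ceil(h / stride), math.ceil(w / stride)
    depthwise = (h_out, w_out, c_in * t)  # 3x3 depthwise, stride 1 or 2
    projected = (h_out, w_out, c_out)  # 1x1 linear projection
    use_residual = stride == 1 and c_in == c_out  # the add needs identical shapes
    return expanded, depthwise, projected, use_residual


print(bottleneck_shapes(112, 112, 16, 24, t=6, stride=2))
# ((112, 112, 96), (56, 56, 96), (56, 56, 24), False)
print(bottleneck_shapes(56, 56, 24, 24, t=6, stride=1))
# ((56, 56, 144), (56, 56, 144), (56, 56, 24), True)
```

A downsampling block can never carry a shortcut, because its output no longer has the input's spatial size.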

Code:

Keras implementation:

```python
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers, models
from tensorflow.keras.layers import (Activation, BatchNormalization, Conv2D, Dense,
                                     DepthwiseConv2D, Dropout, GlobalAveragePooling2D,
                                     Input, ZeroPadding2D)


def relu6(x):
    # ReLU capped at 6: min(max(x, 0), 6)
    return keras.activations.relu(x, max_value=6.0)
```
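The low-precision argument for ReLU6 is easiest to see with a fixed output range: because the activation never exceeds 6, an 8-bit code can cover [0, 6] with a constant step of 6/255 ≈ 0.0235. A toy sketch, assuming a plain uniform uint8 quantizer with round-to-nearest (real quantization toolchains are more elaborate):

```python
def relu6(x):
    return min(max(x, 0.0), 6.0)


STEP = 6.0 / 255  # one uint8 step when the range is fixed to [0, 6]


def quantize(x):
    """Map a ReLU6 output to an integer code in 0..255."""
    return round(relu6(x) / STEP)


def dequantize(q):
    return q * STEP


for v in (-3.0, 0.5, 2.5, 10.0):
    print(v, relu6(v), quantize(v), dequantize(quantize(v)))
```

With an unbounded ReLU the range, and therefore the step size, would depend on the largest activation seen, so a few outliers could make the step much coarser for all other values.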

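```python
# Round a channel count to a multiple of `divisor` (8 here), never going below
# `min_value` and never removing more than 10% of the original value.
def make_divisible(v, divisor, min_value=None):
    if min_value is None:
        min_value = divisor
    # int(v + divisor / 2) // divisor * divisor rounds to the nearest multiple (// is floor division)
    new_v = max(min_value, int(v + divisor / 2) // divisor * divisor)
    # if rounding down dropped more than 10% of the channels, go up one step
    if new_v < 0.9 * v:
        new_v += divisor
    return new_v
```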
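`make_divisible` matters once the width multiplier `alpha` is not 1. The sketch below applies the same rounding rule (repeated so the snippet runs on its own) to the seven bottleneck output widths used in the model, 16 to 320; `stage_widths` is a hypothetical helper:

```python
def make_divisible(v, divisor=8, min_value=None):
    # same rule as make_divisible above
    if min_value is None:
        min_value = divisor
    new_v = max(min_value, int(v + divisor / 2) // divisor * divisor)
    if new_v < 0.9 * v:
        new_v += divisor
    return new_v


BOTTLENECK_WIDTHS = (16, 24, 32, 64, 96, 160, 320)  # stage output channels at alpha = 1


def stage_widths(alpha):
    return [make_divisible(c * alpha, 8) for c in BOTTLENECK_WIDTHS]


print(stage_widths(1.0))  # [16, 24, 32, 64, 96, 160, 320]
print(stage_widths(0.35))  # [8, 8, 16, 24, 32, 56, 112]
print(make_divisible(10, 8))  # 16: rounding 10 down to 8 would lose more than 10%
```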

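```python
# Explicit padding for a stride-2 'valid' convolution so that the output size
# matches TensorFlow's 'same' padding (extra row/column at bottom/right for even sizes).
def pad_size(inputs, kernel_size):
    input_size = inputs.shape[1:3]  # (height, width)
    if isinstance(kernel_size, int):
        kernel_size = (kernel_size, kernel_size)
    if input_size[0] is None:
        adjust = (1, 1)
    else:
        adjust = (1 - input_size[0] % 2, 1 - input_size[1] % 2)
    correct = (kernel_size[0] // 2, kernel_size[1] // 2)
    return ((correct[0] - adjust[0], correct[0]),
            (correct[1] - adjust[1], correct[1]))
```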
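Why the asymmetric padding? For a stride-2 convolution TensorFlow's 'same' mode computes `out = ceil(n / s)`, pads `total = max((out - 1) * s + k - n, 0)` pixels and puts the extra one at the bottom/right. The sketch below re-implements `pad_size` on a plain tuple (`pad_for`, `tf_same_pad_1d` and `valid_out` are hypothetical helpers) and checks that, for the 3x3 stride-2 case used here, `ZeroPadding2D(pad_size(...))` followed by a 'valid' conv reproduces 'same':

```python
import math


def pad_for(size, k=3):
    """Same arithmetic as pad_size above, on a plain (height, width) tuple."""
    adjust = (1 - size[0] % 2, 1 - size[1] % 2)
    correct = (k // 2, k // 2)
    return ((correct[0] - adjust[0], correct[0]), (correct[1] - adjust[1], correct[1]))


def tf_same_pad_1d(n, k=3, s=2):
    """(before, after) padding of TensorFlow's 'same' mode along one axis."""
    out = math.ceil(n / s)
    total = max((out - 1) * s + k - n, 0)
    return (total // 2, total - total // 2)


def valid_out(n, before, after, k=3, s=2):
    """Output length of a 'valid' convolution after explicit padding."""
    return (n + before + after - k) // s + 1


print(pad_for((224, 224)))  # ((0, 1), (0, 1)): pad only bottom and right
print(valid_out(224, 0, 1))  # 112
print(pad_for((7, 7)))  # ((1, 1), (1, 1)): odd sizes are padded symmetrically
```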

```python
# 1x1 conv + BN + ReLU6: the "expand" step
def conv_block(x, nb_filter, kernel=(1, 1), stride=(1, 1), name=None):
    x = Conv2D(nb_filter, kernel, strides=stride, padding='same', use_bias=False, name=name + '_expand')(x)
    x = BatchNormalization(axis=3, name=name + '_expand_BN')(x)
    x = Activation(relu6, name=name + '_expand_relu')(x)
    return x


# Inverted residual block: expand (1x1) -> depthwise (3x3) -> linear projection (1x1)
def depthwise_res_block(x, nb_filter, kernel, stride, t, alpha, resdiual=False, name=None):
    input_tensor = x
    exp_channels = x.shape[-1] * t  # expanded width = input channels * expansion factor t
    alpha_channels = make_divisible(int(nb_filter * alpha), 8)  # projected (bottleneck) width

    x = conv_block(x, exp_channels, (1, 1), (1, 1), name=name)

    if stride[0] == 2:
        # downsampling: explicit padding + 'valid' depthwise conv
        x = ZeroPadding2D(padding=pad_size(x, kernel), name=name + '_pad')(x)
    x = DepthwiseConv2D(kernel, padding='same' if stride[0] == 1 else 'valid', strides=stride,
                        depth_multiplier=1, use_bias=False, name=name + '_depthwise')(x)
    x = BatchNormalization(axis=3, name=name + '_depthwise_BN')(x)
    x = Activation(relu6, name=name + '_depthwise_relu')(x)

    # linear bottleneck: no activation after the projection
    x = Conv2D(alpha_channels, (1, 1), padding='same', use_bias=False, strides=(1, 1),
               name=name + '_project')(x)
    x = BatchNormalization(axis=3, name=name + '_project_BN')(x)

    # shortcut only for stride-1 blocks whose input and output widths match
    if resdiual:
        x = layers.add([x, input_tensor], name=name + '_add')
    return x
```
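To see what `depthwise_res_block` computes without the framework, here is a NumPy sketch of the same forward pass. It is an illustration under simplifying assumptions: BatchNorm is left out, weights are random, and the depthwise step pads one pixel on every side, so for stride 2 on even inputs it is offset by one pixel from TensorFlow's 'same' padding. All function names are hypothetical.

```python
import numpy as np


def relu6(x):
    return np.clip(x, 0.0, 6.0)


def depthwise3x3(x, w, stride=1):
    """x: (H, W, C); w: (3, 3, C), one 3x3 filter per channel; zero padding of 1."""
    xp = np.pad(x, ((1, 1), (1, 1), (0, 0)))
    H, W, C = x.shape
    Ho, Wo = (H - 1) // stride + 1, (W - 1) // stride + 1
    out = np.zeros((Ho, Wo, C))
    for i in range(Ho):
        for j in range(Wo):
            patch = xp[i * stride:i * stride + 3, j * stride:j * stride + 3, :]
            out[i, j] = (patch * w).sum(axis=(0, 1))  # channels never mix
    return out


def inverted_residual(x, w_expand, w_dw, w_project, stride=1):
    """BN-free sketch: expand -> ReLU6 -> depthwise -> ReLU6 -> linear projection."""
    h = relu6(x @ w_expand)  # a 1x1 conv is a matmul over the channel axis
    h = relu6(depthwise3x3(h, w_dw, stride))
    y = h @ w_project  # linear bottleneck: no activation here
    if stride == 1 and x.shape[-1] == y.shape[-1]:
        y = y + x  # residual connection
    return y


rng = np.random.default_rng(0)
t = 6
x = rng.standard_normal((8, 8, 4))
w_expand = rng.standard_normal((4, 4 * t))
w_dw = rng.standard_normal((3, 3, 4 * t))
w_project = rng.standard_normal((4 * t, 4))
print(inverted_residual(x, w_expand, w_dw, w_project).shape)  # (8, 8, 4)
print(inverted_residual(x, w_expand, w_dw, w_project, stride=2).shape)  # (4, 4, 4)
```

Because nothing follows the projection, the block output keeps negative values, which is exactly what the linear bottleneck is meant to preserve.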

```python
def MovblieNetV2(nb_classes, alpha=1., dropout=0):
    img_input = Input(shape=(224, 224, 3))

    # stem: 3x3 conv with stride 2 (explicit padding + 'valid')
    first_filter = make_divisible(32 * alpha, 8)
    x = ZeroPadding2D(padding=pad_size(img_input, 3), name='Conv1_pad')(img_input)
    x = Conv2D(first_filter, (3, 3), strides=(2, 2), padding='valid', use_bias=False, name='Conv1')(x)
    x = BatchNormalization(axis=3, name='bn_Conv1')(x)
    x = Activation(relu6, name='Conv1_relu')(x)

    # first bottleneck (t = 1): no expansion, only depthwise + linear projection
    x = DepthwiseConv2D((3, 3), padding='same', strides=(1, 1), depth_multiplier=1,
                        use_bias=False, name='expanded_conv_depthwise')(x)
    x = BatchNormalization(axis=3, name='expanded_conv_depthwise_BN')(x)
    x = Activation(relu6, name='expanded_conv_depthwise_relu')(x)
    x = Conv2D(make_divisible(16 * alpha, 8), (1, 1), padding='same', use_bias=False,
               strides=(1, 1), name='expanded_conv_project')(x)
    x = BatchNormalization(axis=3, name='expanded_conv_project_BN')(x)

    # inverted residual blocks: (x, out_channels, kernel, stride, t, alpha, residual)
    x = depthwise_res_block(x, 24, (3, 3), (2, 2), 6, alpha, resdiual=False, name='block_1')
    x = depthwise_res_block(x, 24, (3, 3), (1, 1), 6, alpha, resdiual=True, name='block_2')
    x = depthwise_res_block(x, 32, (3, 3), (2, 2), 6, alpha, resdiual=False, name='block_3')
    x = depthwise_res_block(x, 32, (3, 3), (1, 1), 6, alpha, resdiual=True, name='block_4')
    x = depthwise_res_block(x, 32, (3, 3), (1, 1), 6, alpha, resdiual=True, name='block_5')
    x = depthwise_res_block(x, 64, (3, 3), (2, 2), 6, alpha, resdiual=False, name='block_6')
    x = depthwise_res_block(x, 64, (3, 3), (1, 1), 6, alpha, resdiual=True, name='block_7')
    x = depthwise_res_block(x, 64, (3, 3), (1, 1), 6, alpha, resdiual=True, name='block_8')
    x = depthwise_res_block(x, 64, (3, 3), (1, 1), 6, alpha, resdiual=True, name='block_9')
    x = depthwise_res_block(x, 96, (3, 3), (1, 1), 6, alpha, resdiual=False, name='block_10')
    x = depthwise_res_block(x, 96, (3, 3), (1, 1), 6, alpha, resdiual=True, name='block_11')
    x = depthwise_res_block(x, 96, (3, 3), (1, 1), 6, alpha, resdiual=True, name='block_12')
    x = depthwise_res_block(x, 160, (3, 3), (2, 2), 6, alpha, resdiual=False, name='block_13')
    x = depthwise_res_block(x, 160, (3, 3), (1, 1), 6, alpha, resdiual=True, name='block_14')
    x = depthwise_res_block(x, 160, (3, 3), (1, 1), 6, alpha, resdiual=True, name='block_15')
    x = depthwise_res_block(x, 320, (3, 3), (1, 1), 6, alpha, resdiual=False, name='block_16')

    # final 1x1 conv, widened only when alpha > 1
    if alpha > 1.0:
        last_filter = make_divisible(1280 * alpha, 8)
    else:
        last_filter = 1280
    x = Conv2D(last_filter, (1, 1), strides=(1, 1), use_bias=False, name='Conv_1')(x)
    x = BatchNormalization(axis=3, name='Conv_1_bn')(x)
    x = Activation(relu6, name='out_relu')(x)

    # classifier head: global average pooling, optional dropout, softmax
    x = GlobalAveragePooling2D()(x)
    if dropout > 0:
        x = Dropout(dropout)(x)
    x = Dense(nb_classes, activation='softmax', use_bias=True, name='Logits')(x)

    model = models.Model(img_input, x, name='MobileNetV2')
    return model
```
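The sixteen hand-written `depthwise_res_block` calls follow a compact (t, c, n, s) table: expansion factor, output channels, number of repeats, and stride of the first repeat. A sketch that regenerates the block list from such a table and checks it against the calls above; `CFG` and `expand_cfg` are illustrative names, and the table values are read off the calls in `MovblieNetV2`:

```python
# (t, c, n, s) for block_1 ... block_16; the t = 1 bottleneck is the separate expanded_conv stage
CFG = [(6, 24, 2, 2), (6, 32, 3, 2), (6, 64, 4, 2), (6, 96, 3, 1), (6, 160, 3, 2), (6, 320, 1, 1)]


def expand_cfg(cfg, c_in=16, size=112):
    """Return (block_id, out_channels, stride, residual, output_size) per block."""
    blocks = []
    for t, c, n, s in cfg:
        for i in range(n):
            stride = s if i == 0 else 1  # only the first block of a stage downsamples
            size = -(-size // stride)  # ceil division, 'same'-style output size
            residual = stride == 1 and c_in == c
            blocks.append((len(blocks) + 1, c, stride, residual, size))
            c_in = c
    return blocks


for block in expand_cfg(CFG):
    print(block)
```

The stride-2 blocks come out as 1, 3, 6 and 13, the residual ones as 2, 4, 5, 7, 8, 9, 11, 12, 14 and 15, and the last feature map is 7x7, matching the `resdiual` flags written above.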

```python
import os

from tensorflow.keras.applications.mobilenet_v2 import preprocess_input, decode_predictions


def prepocess(x):
    x = tf.io.read_file(x)
    x = tf.image.decode_jpeg(x, channels=3)
    x = tf.image.resize(x, [224, 224])
    x = tf.expand_dims(x, 0)  # add a batch dimension: (1, 224, 224, 3)
    # preprocess_input is equivalent to (x / 255 - 0.5) * 2, i.e. it scales pixels to [-1, 1]
    x = preprocess_input(x)
    return x


def main():
    model = MovblieNetV2(1000, 1.0, 0.2)
    model.summary()

    weight_path = r'C:\Users\jackey\.keras\models\mobilenet_v2_weights_tf_dim_ordering_tf_kernels_1.0_224.h5'
    if os.path.exists(weight_path):
        model.load_weights(weight_path)

    img = prepocess('Car.jpg')
    predictions = model.predict(img)
    print('Predicted:', decode_predictions(predictions, top=3)[0])


if __name__ == '__main__':
    main()
```
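Keras's `mobilenet_v2.preprocess_input` uses the 'tf' preprocessing mode, which divides by 127.5 and subtracts 1. A dependency-free check that this is the same as the `(x / 255 - 0.5) * 2` form noted in the code (both function names are ours):

```python
def scale_to_minus1_1(x):
    # 'tf' mode: x / 127.5 - 1 maps [0, 255] onto [-1, 1]
    return x / 127.5 - 1.0


def scale_two_step(x):
    # the two-step form: (x / 255 - 0.5) * 2
    return (x / 255.0 - 0.5) * 2.0


for p in (0.0, 63.75, 127.5, 255.0):
    print(p, scale_to_minus1_1(p), scale_two_step(p))
```

If you feed images through a different pipeline, the pretrained weights still expect this [-1, 1] range, not [0, 1] or ImageNet mean/std normalization.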

PyTorch implementation (adapted for CIFAR10, 32x32 inputs):

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class Block(nn.Module):
    '''expand + depthwise + pointwise'''

    def __init__(self, in_planes, out_planes, expansion, stride):
        super(Block, self).__init__()
        self.stride = stride
        planes = expansion * in_planes
        # 1x1 expansion
        self.conv1 = nn.Conv2d(in_planes, planes, kernel_size=1, stride=1, padding=0, bias=False)
        self.bn1 = nn.BatchNorm2d(planes)
        # 3x3 depthwise conv (groups == channels); stride 2 here performs the downsampling
        self.conv2 = nn.Conv2d(planes, planes, kernel_size=3, stride=stride, padding=1,
                               groups=planes, bias=False)
        self.bn2 = nn.BatchNorm2d(planes)
        # 1x1 linear projection (no activation afterwards)
        self.conv3 = nn.Conv2d(planes, out_planes, kernel_size=1, stride=1, padding=0, bias=False)
        self.bn3 = nn.BatchNorm2d(out_planes)
        # identity shortcut only when resolution and width are unchanged (as in the Keras version)
        self.use_residual = stride == 1 and in_planes == out_planes

    def forward(self, x):
        out = F.relu6(self.bn1(self.conv1(x)))
        out = F.relu6(self.bn2(self.conv2(out)))
        out = self.bn3(self.conv3(out))
        return out + x if self.use_residual else out
```
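`groups=planes` is what turns `conv2` into a depthwise convolution. PyTorch stores a `nn.Conv2d` weight with shape `(out_channels, in_channels // groups, kH, kW)`; the dependency-free sketch below reproduces that rule (`conv2d_weight_shape` and `numel` are hypothetical helpers) for the 144-channel middle layer of `Block(24, 24, 6, 1)`:

```python
def conv2d_weight_shape(in_channels, out_channels, kernel_size, groups=1):
    """Weight shape of a 2-D convolution with square kernels and channel groups."""
    assert in_channels % groups == 0 and out_channels % groups == 0
    return (out_channels, in_channels // groups, kernel_size, kernel_size)


def numel(shape):
    n = 1
    for d in shape:
        n *= d
    return n


planes = 6 * 24  # expansion * in_planes
dw = conv2d_weight_shape(planes, planes, 3, groups=planes)  # depthwise conv2 in Block
full = conv2d_weight_shape(planes, planes, 3)  # same layer without groups
print(dw, numel(dw))  # (144, 1, 3, 3) 1296
print(full, numel(full))  # (144, 144, 3, 3) 186624
```

Each output channel sees only one input channel, which cuts this layer's weights by a factor of 144.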

```python
class MobileNetV2(nn.Module):
    # (expansion, out_planes, num_blocks, stride)
    cfg = [(1, 16, 1, 1),
           (6, 24, 2, 1),  # NOTE: change stride 2 -> 1 for CIFAR10
           (6, 32, 3, 2),
           (6, 64, 4, 2),
           (6, 96, 3, 1),
           (6, 160, 3, 2),
           (6, 320, 1, 1)]

    def __init__(self, num_classes=10):
        super(MobileNetV2, self).__init__()
        # NOTE: change conv1 stride 2 -> 1 for CIFAR10
        self.conv1 = nn.Conv2d(3, 32, kernel_size=3, stride=1, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(32)
        self.layers = self._make_layers(in_planes=32)
        self.conv2 = nn.Conv2d(320, 1280, kernel_size=1, stride=1, padding=0, bias=False)
        self.bn2 = nn.BatchNorm2d(1280)
        self.linear = nn.Linear(1280, num_classes)

    def _make_layers(self, in_planes):
        layers = []
        for expansion, out_planes, num_blocks, stride in self.cfg:
            # only the first block of each stage may downsample
            strides = [stride] + [1] * (num_blocks - 1)
            for s in strides:
                layers.append(Block(in_planes, out_planes, expansion, s))
                in_planes = out_planes
        return nn.Sequential(*layers)

    def forward(self, x):
        out = F.relu6(self.bn1(self.conv1(x)))
        out = self.layers(out)
        out = F.relu6(self.bn2(self.conv2(out)))
        # global average pooling over whatever spatial size remains (4x4 for 32x32 CIFAR10 inputs)
        out = F.adaptive_avg_pool2d(out, 1)
        out = out.view(out.size(0), -1)
        out = self.linear(out)
        return out
```
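The two CIFAR10 notes (conv1 stride and the second stage's stride changed from 2 to 1) reduce the total downsampling from 32x to 8x, so a 32x32 image ends as a 4x4 feature map, whereas the ImageNet setting turns 224x224 into 7x7. A small trace; `IMAGENET_CFG` is our reconstruction with the stride-2 values restored, based only on those notes:

```python
def final_feature_size(size, conv1_stride, cfg):
    """Spatial size after conv1 and all blocks (3x3 convs with padding=1)."""
    size = (size - 1) // conv1_stride + 1  # conv1: k=3, padding=1
    for _, _, _, stride in cfg:
        size = (size - 1) // stride + 1  # only the first block of a stage strides
    return size


CIFAR_CFG = [(1, 16, 1, 1), (6, 24, 2, 1), (6, 32, 3, 2), (6, 64, 4, 2),
             (6, 96, 3, 1), (6, 160, 3, 2), (6, 320, 1, 1)]
IMAGENET_CFG = [(1, 16, 1, 1), (6, 24, 2, 2), (6, 32, 3, 2), (6, 64, 4, 2),
                (6, 96, 3, 1), (6, 160, 3, 2), (6, 320, 1, 1)]

print(final_feature_size(32, 1, CIFAR_CFG))  # 4
print(final_feature_size(224, 2, IMAGENET_CFG))  # 7
```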

```python
def test():
    net = MobileNetV2()
    x = torch.randn(2, 3, 32, 32)
    y = net(x)
    print(y.size())  # torch.Size([2, 10])


if __name__ == '__main__':
    test()
```
