目录

1.赛题理解

Tip:心电图心跳信号多分类预测挑战赛。

2016年6月,国务院办公厅印发《国务院办公厅关于促进和规范健康医疗大数据应用发展的指导意见》,文件指出健康医疗大数据应用发展将带来健康医疗模式的深刻变化,有利于提升健康医疗服务效率和质量。

赛题以心电图数据为背景,要求选手根据心电图感应数据预测心跳信号,其中心跳信号对应正常病例以及受不同心律不齐和心肌梗塞影响的病例,这是一个多分类的问题。通过这道赛题来引导大家了解医疗大数据的应用,帮助竞赛新人进行自我练习、自我提高。

赛题链接:https://tianchi.aliyun.com/competition/entrance/531883/introduction

1.1赛题概况

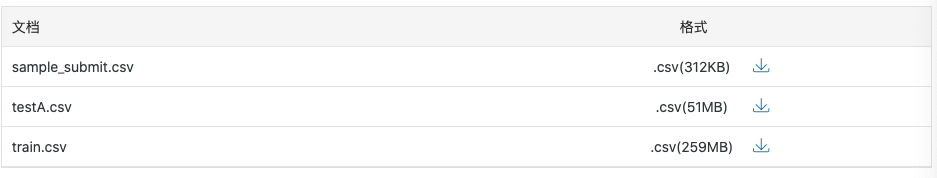

比赛要求参赛选手根据给定的数据集,建立模型,预测不同的心跳信号。赛题以预测心电图心跳信号类别为任务,数据集报名后可见并可下载,该该数据来自某平台心电图数据记录,总数据量超过20万,主要为1列心跳信号序列数据,其中每个样本的信号序列采样频次一致,长度相等。为了保证比赛的公平性,将会从中抽取10万条作为训练集,2万条作为测试集A,2万条作为测试集B,同时会对心跳信号类别(label)信息进行脱敏。

通过这道赛题来引导大家走进医疗大数据的世界,主要针对于于竞赛新人进行自我练习,自我提高。

1.2数据概况

一般而言,对于数据在比赛界面都有对应的数据概况介绍(匿名特征除外),说明列的性质特征。了解列的性质会有助于我们对于数据的理解和后续分析。

Tip:匿名特征,就是未告知数据列所属的性质的特征列。

train.csv

- id 为心跳信号分配的唯一标识

- heartbeat_signals 心跳信号序列(数据之间采用“,”进行分隔)

- label 心跳信号类别(0、1、2、3)

testA.csv

- id 心跳信号分配的唯一标识

- heartbeat_signals 心跳信号序列(数据之间采用“,”进行分隔)

##1.3预测指标

选手需提交4种不同心跳信号预测的概率,选手提交结果与实际心跳类型结果进行对比,求预测的概率与真实值差值的绝对值。

具体计算公式如下:

总共有n个病例,针对某一个信号,若真实值为[y1,y2,y3,y4],模型预测概率值为[a1,a2,a3,a4],那么该模型的评价指标abs-sum为

a b s − s u m = ∑ j = 1 n ∑ i = 1 4 ∣ y i − a i ∣ {abs-sum={\mathop{ \sum }\limits_{

{j=1}}^{

{n}}{

{\mathop{ \sum }\limits_{

{i=1}}^{

{4}}{

{ \left| {y\mathop{

{}}\nolimits_{

{i}}-a\mathop{

{}}\nolimits_{

{i}}} \right| }}}}}} abs−sum=j=1∑ni=1∑4∣yi−ai∣

例如,某心跳信号类别为1,通过编码转成[0,1,0,0],预测不同心跳信号概率为[0.1,0.7,0.1,0.1],那么这个信号预测结果的abs-sum为

a b s − s u m = ∣ 0.1 − 0 ∣ + ∣ 0.7 − 1 ∣ + ∣ 0.1 − 0 ∣ + ∣ 0.1 − 0 ∣ = 0.6 {abs-sum={ \left| {0.1-0} \right| }+{ \left| {0.7-1} \right| }+{ \left| {0.1-0} \right| }+{ \left| {0.1-0} \right| }=0.6} abs−sum=∣0.1−0∣+∣0.7−1∣+∣0.1−0∣+∣0.1−0∣=0.6

多分类算法常见的评估指标如下:

其实多分类的评价指标的计算方式与二分类完全一样,只不过我们计算的是针对于每一类来说的召回率、精确度、准确率和 F1分数。

1、混淆矩阵(Confuse Matrix)

- (1)若一个实例是正类,并且被预测为正类,即为真正类TP(True Positive )

- (2)若一个实例是正类,但是被预测为负类,即为假负类FN(False Negative )

- (3)若一个实例是负类,但是被预测为正类,即为假正类FP(False Positive )

- (4)若一个实例是负类,并且被预测为负类,即为真负类TN(True Negative )

第一个字母T/F,表示预测的正确与否;第二个字母P/N,表示预测的结果为正例或者负例。如TP就表示预测对了,预测的结果是正例,那它的意思就是把正例预测为了正例。

2.准确率(Accuracy)

准确率是常用的一个评价指标,但是不适合样本不均衡的情况,医疗数据大部分都是样本不均衡数据。

A c c u r a c y = C o r r e c t T o t a l A c c u r a c y = T P + T N T P + T N + F P + F N Accuracy=\frac{Correct}{Total}\\ Accuracy = \frac{TP + TN}{TP + TN + FP + FN} Accuracy=TotalCorrectAccuracy=TP+TN+FP+FNTP+TN

3、精确率(Precision)也叫查准率简写为P

精确率(Precision)是针对预测结果而言的,其含义是在被所有预测为正的样本中实际为正样本的概率在被所有预测为正的样本中实际为正样本的概率,精确率和准确率看上去有些类似,但是是两个完全不同的概念。精确率代表对正样本结果中的预测准确程度,准确率则代表整体的预测准确程度,包括正样本和负样本。

P r e c i s i o n = T P T P + F P Precision = \frac{TP}{TP + FP} Precision=TP+FPTP

4.召回率(Recall) 也叫查全率 简写为R

召回率(Recall)是针对原样本而言的,其含义是在实际为正的样本中被预测为正样本的概率。

R e c a l l = T P T P + F N Recall = \frac{TP}{TP + FN} Recall=TP+FN<

心电图信号多分类预测

心电图信号多分类预测

最低0.47元/天 解锁文章

最低0.47元/天 解锁文章

3058

3058

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?