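1. Environment setup

1.1 Ollama setup

Download Ollama from the official Ollama website and install it. During installation, add the install path to the PATH environment variable.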

1.2 Loading a model with Ollama

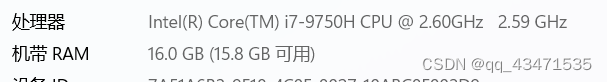

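The Ollama website lists the available large models. Running ollama run followed by the model name downloads the model, for example ollama run qwen:7b; once the download finishes, you can chat with the model straight away. Choose a model size that matches your machine's performance; the example here runs on 16 GB of RAM.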
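Besides the interactive prompt, a downloaded model can also be queried from Python through the local HTTP endpoint that Ollama serves. The following is a minimal sketch, assuming the server is running at its default address http://localhost:11434 and that qwen:7b has already been downloaded; build_payload and ask_ollama are hypothetical helper names, not part of Ollama.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # assumed default local endpoint


def build_payload(prompt, model="qwen:7b"):
    """Build the JSON body for a single non-streaming generation request."""
    return {"model": model, "prompt": prompt, "stream": False}


def ask_ollama(prompt, model="qwen:7b", url=OLLAMA_URL):
    """Send the prompt to a running Ollama server and return the generated text."""
    body = json.dumps(build_payload(prompt, model)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))["response"]


# Example (requires a running Ollama server):
# print(ask_ollama("Introduce yourself in one sentence."))
```

Setting "stream" to False asks for one complete JSON reply, which keeps the sketch short; streaming output would need the response to be read line by line.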

1.3 Set up the Python environment, install LangChain, and download VS Code
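Once the packages are installed, a quick check that the modules imported by the scripts in this article can be found may save a failed run later. This is a minimal sketch: the module list is an assumption collected from the imports used in this article (typically installed with pip as langchain, langchain-community, faiss-cpu, pdfplumber, PyMuPDF, python-docx and paddleocr), and missing_modules is a hypothetical helper name.

```python
import importlib.util

# Top-level module names as they are imported in the scripts of this article
REQUIRED_MODULES = [
    "langchain",
    "langchain_community",
    "faiss",
    "pdfplumber",
    "fitz",
    "docx",
    "paddleocr",
]


def missing_modules(names):
    """Return the module names that cannot be found in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


# Example:
# print("Missing modules:", missing_modules(REQUIRED_MODULES))
```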

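2. PDF document processing

2.1 Recognizing the text of a scanned PDF and saving it to Word

Loading a scanned PDF into the large-model pipeline currently raises errors, so the text of the scanned file has to be recognized first and converted into a PDF whose text can be read.

The code is below. Install the required packages first with pip install followed by the package name: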

import os

from docx import Document
from docx.enum.text import WD_PARAGRAPH_ALIGNMENT
from docx.shared import Cm, Pt
from paddleocr import PaddleOCR
from PIL import Image, ImageEnhance, ImageFilter

# Initialize the OCR model. The expression 'ch' or 'en' always evaluates to 'ch',
# so 'ch' is passed directly; that model handles mixed Chinese and English text.
ocr = PaddleOCR(use_gpu=True, lang='ch')


def enhance_and_process_image(image_path):
    """Enhance an image in place (contrast boost plus denoising) before OCR."""
    try:
        with Image.open(image_path) as im:
            # Image enhancement (tune the parameters as needed)
            enhancer = ImageEnhance.Contrast(im)
            im = enhancer.enhance(1.5)  # increase contrast
            # enhancer = ImageEnhance.Brightness(im)
            # im = enhancer.enhance(1.2)  # brighten
            im = im.filter(ImageFilter.MedianFilter(size=3))  # reduce noise
            im.save(image_path)
    except FileNotFoundError:
        print(f"File not found: {image_path}")
    except Exception as e:
        print(f"Unexpected error while processing {image_path}: {e}")


def img_deal(image_path):
    """Run OCR on one image and return a list of (bounding box, text) pairs."""
    try:
        result = ocr.ocr(image_path)
        if not result:
            print(f"No text detected in image: {image_path}")
            return []  # return an empty list so callers never iterate over None
        all_data = []
        for page_items in result:
            if not page_items:  # a page without detected text may come back as None
                continue
            for region in page_items:
                raw_bbox, (text, _) = region
                all_data.append((raw_bbox, text))
        return all_data
    except Exception as e:
        print(f"An error occurred processing image {image_path}: {e}")
        return []  # also return an empty list on failure


def coord_to_indent(coord, scale_factor=1000):
    """Convert an OCR coordinate to a Word left indent of x / scale_factor centimetres."""
    x, _ = coord
    # Only x is used (left indent); y could be used for vertical spacing if needed
    return Cm(x / scale_factor)


def process_and_save_images_to_word(image_paths, output_word_path):
    """OCR every image and write the recognized text to one Word document."""
    doc = Document()
    temp_output_path = f"{output_word_path}_temp.docx"
    for image_path in image_paths:
        print(image_path)
        # enhance_and_process_image(image_path)  # optionally enhance the image first
        recognized_data = img_deal(image_path)
        if recognized_data:  # only write when something was recognized
            for bbox, text in recognized_data:
                paragraph = doc.add_paragraph(style='Normal')
                paragraph.paragraph_format.left_indent = coord_to_indent(bbox[0])
                paragraph.alignment = WD_PARAGRAPH_ALIGNMENT.LEFT
                run = paragraph.add_run(text)
                run.font.size = Pt(12)
            doc.add_page_break()  # one page break after each image
        # Save after every image so that progress survives an interruption
        doc.save(temp_output_path)
    # Final save; os.replace also overwrites an existing output file
    doc.save(temp_output_path)
    os.replace(temp_output_path, output_word_path)
    print(f"Text with position saved to Word document at: {output_word_path}")


if __name__ == "__main__":
    pdf_file_path = 'F:/ai/output'
    image_folder_path = "F:/ai/output/images/"
    image_paths = sorted(
        os.path.join(image_folder_path, img)
        for img in os.listdir(image_folder_path)
        if img.lower().endswith(('.png', '.jpg', '.jpeg'))
    )
    output_word_path = os.path.splitext(pdf_file_path)[0] + '.docx'
    process_and_save_images_to_word(image_paths, output_word_path)
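The script above reads page images from image_folder_path but does not show how they were produced from the scanned PDF. One way is to render each page with PyMuPDF. The following is a minimal sketch, assuming a PyMuPDF version in which Page.get_pixmap accepts a dpi argument; pdf_to_images and page_image_name are hypothetical helper names, and the dpi value of 200 is an arbitrary starting point.

```python
import os


def page_image_name(index, total):
    """Zero-padded file name so that lexical sorting matches page order."""
    width = len(str(total))
    return f"page_{index + 1:0{width}d}.png"


def pdf_to_images(pdf_path, image_dir, dpi=200):
    """Render every page of pdf_path to a PNG file in image_dir."""
    import fitz  # PyMuPDF; imported here so the naming helper works without it

    os.makedirs(image_dir, exist_ok=True)
    paths = []
    with fitz.open(pdf_path) as doc:
        for index, page in enumerate(doc):
            pix = page.get_pixmap(dpi=dpi)  # rasterize the page
            path = os.path.join(image_dir, page_image_name(index, doc.page_count))
            pix.save(path)
            paths.append(path)
    return paths
```

The zero padding matters because the OCR script sorts file names as strings: without it, a name such as page_10.png would sort before page_2.png and the pages would end up in the wrong order in the Word file.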

2.2 Converting the Word document to PDF

Open the Word file and save it as a PDF, or convert it directly in code.
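For the conversion in code, one option is to call LibreOffice in headless mode from Python. This is a minimal sketch under the assumption that LibreOffice is installed and its soffice executable is on PATH; build_convert_command and docx_to_pdf are hypothetical helper names.

```python
import os
import subprocess


def build_convert_command(docx_path, output_dir, soffice="soffice"):
    """Build the LibreOffice command line that converts one document to PDF."""
    return [soffice, "--headless", "--convert-to", "pdf",
            "--outdir", output_dir, docx_path]


def docx_to_pdf(docx_path, output_dir):
    """Convert docx_path to PDF in output_dir and return the expected PDF path."""
    os.makedirs(output_dir, exist_ok=True)
    subprocess.run(build_convert_command(docx_path, output_dir), check=True)
    base_name = os.path.splitext(os.path.basename(docx_path))[0]
    return os.path.join(output_dir, base_name + ".pdf")


# Example (requires LibreOffice):
# print(docx_to_pdf("F:/ai/output.docx", "F:/ai/pdf"))
```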

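2.3 Splitting PDFs so that they can be loaded in parallel for training

Large files are split into smaller files, which can then be processed in parallel to speed up training. The code is as follows: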

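import os

import fitz  # PyMuPDF


def split_pdf(input_dir, output_dir, max_pages=20):
    """
    Split every PDF in input_dir into files of at most max_pages pages,
    keeping the original document name in each output file name.

    :param input_dir: directory containing the input PDF files.
    :param output_dir: directory for the output PDF files.
    :param max_pages: maximum number of pages per output file (default 20).
    """
    for file_name in os.listdir(input_dir):
        if not file_name.lower().endswith('.pdf'):
            continue  # skip anything that is not a PDF
        file = os.path.join(input_dir, file_name)
        base_name = os.path.splitext(file_name)[0]  # file name without extension
        # Open the PDF file
        doc = fitz.open(file)
        total_pages = doc.page_count
        # Split the PDF
        for i in range(0, total_pages, max_pages):
            # End of the current batch, never past the last page
            end_page = min(i + max_pages, total_pages)
            # New empty PDF that receives the pages of this batch
            new_doc = fitz.open()
            # Copy the whole batch at once; from_page and to_page are zero-based and inclusive
            new_doc.insert_pdf(doc, from_page=i, to_page=end_page - 1)
            # Output file name that contains the original document name
            output_filename = f"{base_name}_split_{i+1}-{end_page}.pdf"
            output_path = os.path.join(output_dir, output_filename)
            new_doc.save(output_path)
            new_doc.close()
        doc.close()


# Example usage
input_pdf_path = r'F:\ai\DB\文件\原文件2'
output_directory = r'F:\ai\DB\文件\文件2'
# Make sure the output directory exists
os.makedirs(output_directory, exist_ok=True)
split_pdf(input_pdf_path, output_directory)

3. Training on the PDF files and saving the result

Here "training" means embedding the document chunks and building a FAISS vector index. The code is below. Its advantage is that it can not only train on new documents and save the result, but also load a previously trained database and merge the two before saving: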

import os
import time
from concurrent.futures import ProcessPoolExecutor

from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain_community.document_loaders import PDFPlumberLoader
from langchain_community.embeddings import OllamaEmbeddings
from langchain_community.vectorstores import FAISS


def process_pdf(file_path):
    """Load a single PDF file and return its content split into chunks."""
    loader = PDFPlumberLoader(file_path)
    data = loader.load()
    text_splitter = RecursiveCharacterTextSplitter(chunk_size=256, chunk_overlap=128)
    return text_splitter.split_documents(data)


def batch_process_pdfs(paths, embeddings):
    """Process the PDFs in batches of at most 2 parallel files and build a FAISS database."""
    all_docs = []
    failed_files = []  # paths of the files that could not be processed
    batch_size = 2  # number of files handled at the same time
    print(len(paths))
    # Process the files batch by batch
    for i in range(0, len(paths), batch_size):
        current_batch_paths = paths[i:i + batch_size]
        try:
            with ProcessPoolExecutor(max_workers=batch_size) as executor:
                # Process the files of this batch in parallel and catch per-file errors
                futures = {executor.submit(process_pdf, path): path
                           for path in current_batch_paths}
                for future, path in futures.items():
                    try:
                        # result() blocks until this file is done
                        all_docs.extend(future.result())
                    except Exception as e:
                        print(f"Error while processing file {path}: {e}")
                        failed_files.append(path)
        except Exception as e:
            print(f"Unexpected error during processing: {e}")
            return None
    print(f"Files that failed: {failed_files}")
    # Build the database only after every file has been processed
    if not all_docs:
        print("No document was processed successfully; the database cannot be built.")
        return None
    print(f"Processing finished: {len(all_docs)} chunks in total.")
    db = FAISS.from_documents(all_docs, embeddings)
    print("Database built successfully")
    # save_local creates a directory with this name (index.faiss and index.pkl inside)
    db.save_local("faiss_index.pkl")
    return db


def save_db_async(db, path):
    """Save the database to path (despite the name, this runs synchronously)."""
    try:
        if db is None:
            print("db is not initialized properly.")
        else:
            db.save_local(path)
    except Exception as e:
        print(f"Error while saving the database: {e}")


def load_existing_db(file_path, embeddings):
    """Load a previously saved FAISS database, or return None if there is none."""
    if not os.path.exists(os.path.join(file_path, "index.faiss")):
        print("No existing database found; a new one will be created.")
        return None
    try:
        return FAISS.load_local(file_path, embeddings=embeddings,
                                allow_dangerous_deserialization=True)
    except Exception as e:
        print(f"Error while loading the existing database: {e}")
        return None


def merge_dbs(old_db_data, new_db):
    """Merge the new database into the old one, if an old one exists."""
    if old_db_data is not None:
        old_db_data.merge_from(new_db)
        db = old_db_data
        print(f"Merged database now holds {len(db.docstore._dict)} chunks")
    else:
        db = new_db
    return db


def main():
    start_time = time.time()
    path = r"F:\ai\DB\文件\文件2"
    pdf_files = [os.path.join(path, filename)
                 for filename in os.listdir(path) if filename.endswith(".pdf")]
    embeddings = OllamaEmbeddings(model="shaw/dmeta-embedding-zh:latest")
    # Load the old database if it exists
    path1 = r"F:\ai\DB\IMAGE"
    existing_db = load_existing_db(path1, embeddings)
    # Process all PDF files in batches
    vector_db = batch_process_pdfs(pdf_files, embeddings)
    if vector_db is None:
        print("Warning: a problem occurred while processing the documents; cannot continue.")
        return
    # Merge old and new data
    final_db = merge_dbs(existing_db, vector_db)
    save_db_async(final_db, path1)
    elapsed_time = time.time() - start_time
    print(f"Done, total time: {elapsed_time:.2f} s")
    # Kept from the original script: exit immediately (os._exit skips normal cleanup)
    os._exit(0)


if __name__ == '__main__':
    main()

4. Loading the trained model and chatting

4.1 Multi-turn conversation within a single session, with memory. The code is as follows:

# -*- coding: utf-8 -*-
import logging

from langchain.callbacks.base import BaseCallbackManager
from langchain.callbacks.streaming_stdout import StreamingStdOutCallbackHandler
from langchain.chains import RetrievalQA
from langchain.memory import ConversationBufferWindowMemory
from langchain_community.embeddings import OllamaEmbeddings
from langchain_community.llms import Ollama
from langchain_community.vectorstores import FAISS

# Configure logging
logging.basicConfig(level=logging.INFO)


def load_file(path):
    """Load the saved FAISS index and build the retrieval QA chain."""
    embeddings = OllamaEmbeddings(model="shaw/dmeta-embedding-zh:latest")
    db = FAISS.load_local(path, embeddings=embeddings,
                          allow_dangerous_deserialization=True)
    retriever = db.as_retriever(search_type="similarity", search_kwargs={"k": 24})
    callback_manager = BaseCallbackManager([StreamingStdOutCallbackHandler()])
    ollama_llm = Ollama(model="qwen:7b", temperature=0.2, top_p=0.9,
                        callback_manager=callback_manager)
    memory = ConversationBufferWindowMemory(memory_key="history", k=5,
                                            return_messages=True)
    # Pass the memory directly to the chain. Note: the chain's default prompt
    # does not reference {history}, so a custom prompt is likely needed for the
    # stored turns to influence the answers.
    qa_chain = RetrievalQA.from_chain_type(
        llm=ollama_llm,
        chain_type="stuff",
        retriever=retriever,
        memory=memory,
        verbose=True,
    )
    return qa_chain, retriever


def handle_user_query(qa, retriever, user_query: str):
    """Answer one question and print the sources of the retrieved documents."""
    try:
        # Retrieved separately so that the sources can be shown;
        # the chain performs its own retrieval internally.
        docs = retriever.invoke(user_query)
        answer = qa.invoke({"query": user_query})
        if not (isinstance(answer, dict) and 'result' in answer):
            print("Unexpected response type; check the return value of qa.invoke.")
            return answer
        # print(f"\nQuestion: {user_query}\nAnswer: {answer['result']}")
        # Check whether the answer quotes any retrieved chunk verbatim
        referenced = any(doc.page_content in answer['result'] for doc in docs)
        if not referenced:
            print("The answer is not directly based on the existing files.")
        else:
            print("\nReferences or related documents:")
            unique_sources = {doc.metadata.get('source', 'unknown') for doc in docs}
            for source in list(unique_sources)[:4]:  # show at most 4 sources
                print(f"Source: {source}")
        return answer
    except Exception as e:
        logging.error(f"Error handling user query: {e}")
        return None


if __name__ == "__main__":
    path = r"F:\ai\DB\IMAGE"
    qa_chain, retriever = load_file(path)
    if qa_chain and retriever:
        while True:
            user_query = input("Enter your question (type 'exit' to quit): ")
            if user_query.strip().lower() in ('exit', '退出'):
                break
            handle_user_query(qa_chain, retriever, user_query)
    else:
        logging.warning("Initialization failed. QA Chain or Retriever not properly loaded.")
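The test used above to decide whether an answer draws on the retrieved documents checks for a whole chunk appearing verbatim inside the answer, which a generated answer rarely does. A looser alternative is to measure how many short character n-grams of the answer also occur in a chunk; character n-grams need no word segmentation, which suits Chinese text. This is a minimal sketch only: char_ngrams, overlap_ratio and is_grounded are hypothetical helpers, and n=4 and threshold=0.3 are illustrative assumptions that would need tuning.

```python
def char_ngrams(text, n=4):
    """Return the set of character n-grams of text, ignoring whitespace."""
    compact = "".join(text.split())
    return {compact[i:i + n] for i in range(len(compact) - n + 1)}


def overlap_ratio(answer, chunk, n=4):
    """Fraction of the answer's n-grams that also occur in the chunk."""
    answer_grams = char_ngrams(answer, n)
    if not answer_grams:
        return 0.0
    return len(answer_grams & char_ngrams(chunk, n)) / len(answer_grams)


def is_grounded(answer, chunks, n=4, threshold=0.3):
    """True if at least one chunk shares enough n-grams with the answer."""
    return any(overlap_ratio(answer, chunk, n) >= threshold for chunk in chunks)


# Example with the objects used in the script:
# grounded = is_grounded(answer['result'], [doc.page_content for doc in docs])
```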

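4.2 Read a Word document, append auxiliary context to each line, run multi-turn Q&A, and write the questions and answers to a Word document. The code is as follows: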

# -*- coding: utf-8 -*-
import logging

from docx import Document
from langchain.callbacks.base import BaseCallbackManager
from langchain.callbacks.streaming_stdout import StreamingStdOutCallbackHandler
from langchain.chains import RetrievalQA
from langchain.memory import ConversationBufferWindowMemory
from langchain_community.embeddings import OllamaEmbeddings
from langchain_community.llms import Ollama
from langchain_community.vectorstores import FAISS

# Configure logging
logging.basicConfig(level=logging.INFO)


def read_docx_lines(file_path):
    """Read every non-empty line of text from a .docx file."""
    doc = Document(file_path)
    lines = []
    for para in doc.paragraphs:
        lines.extend(para.text.split('\n'))
    return [line.strip() for line in lines if line.strip()]


def save_answers_to_word(answers, output_path):
    """Save the questions and answers to a new Word document."""
    doc = Document()
    for question, answer in answers.items():
        print(answer)
        doc.add_paragraph(question)
        doc.add_paragraph(answer)
    doc.save(output_path)
    print(f"Questions and answers saved to: {output_path}")


def process_doc_and_query_ai(file_path, qa_chain, retriever):
    """Read the .docx file and query the AI line by line, appending extra context."""
    lines = read_docx_lines(file_path)
    answers = {}
    # Extra context appended to every question. The string is kept in Chinese as in
    # the original; it means: "Please answer with reference to the following: the
    # guiding principles for registration review of medical device usability engineering;"
    additional_info = (
        "请结合以下信息进行回答:结合医疗器械可用性工程注册审查指导原则;"
    )
    for line in lines:
        # Build a new query string from the original question plus the extra context
        full_query = f"{line} {additional_info}"
        answer = handle_user_query(qa_chain, retriever, full_query)
        if isinstance(answer, dict) and 'result' in answer:
            answers[line] = answer['result']
            # print(f"Question: {line}\nAnswer: {answer['result']}\n")
    return answers


def load_file(path):
    """Load the saved FAISS index and build the retrieval QA chain."""
    embeddings = OllamaEmbeddings(model="shaw/dmeta-embedding-zh:latest")
    db = FAISS.load_local(path, embeddings=embeddings,
                          allow_dangerous_deserialization=True)
    retriever = db.as_retriever(search_type="similarity", search_kwargs={"k": 24})
    callback_manager = BaseCallbackManager([StreamingStdOutCallbackHandler()])
    ollama_llm = Ollama(model="qwen:7b", temperature=0.2, top_p=0.9,
                        callback_manager=callback_manager)
    memory = ConversationBufferWindowMemory(memory_key="history", k=5,
                                            return_messages=True)
    # Pass the memory directly to the chain
    qa_chain = RetrievalQA.from_chain_type(
        llm=ollama_llm,
        chain_type="stuff",
        retriever=retriever,
        memory=memory,
        verbose=True,
    )
    return qa_chain, retriever


def handle_user_query(qa, retriever, user_query):
    """Handle one query and return the answer, printing the sources when available."""
    # Retrieved separately so that the sources can be shown;
    # the chain performs its own retrieval internally.
    docs = retriever.invoke(user_query)
    answer = qa.invoke({"query": user_query})
    if not (isinstance(answer, dict) and 'result' in answer):
        print("Unexpected response type; check the return value of qa.invoke.")
        return answer
    # Check whether the answer quotes any retrieved chunk verbatim
    referenced = any(doc.page_content in answer['result'] for doc in docs)
    if not referenced:
        print("The answer is not directly based on the existing files.")
    else:
        print("\nReferences or related documents:")
        unique_sources = {doc.metadata.get('source', 'unknown') for doc in docs}
        for source in list(unique_sources)[:4]:  # show at most 4 sources
            print(f"Source: {source}")
    return answer


if __name__ == "__main__":
    path = r"F:\ai\DB\inventor\db_faiss"
    # Initialize resources
    qa_chain, retriever = load_file(path)
    # Path of the .docx file with the questions
    docx_file_path = r"F:\ai\path\可用性模板文件.docx"
    processed_answers = process_doc_and_query_ai(docx_file_path, qa_chain, retriever)
    # Save the results to a new Word document
    output_docx_path = r"F:\ai\path\output.docx"
    save_answers_to_word(processed_answers, output_docx_path)
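Both chat scripts create a ConversationBufferWindowMemory with k=5, meaning that only the five most recent exchanges are kept. The idea can be pictured with a plain deque. This is an illustration of the windowing behaviour only, not LangChain's implementation, and WindowMemory is a hypothetical class name.

```python
from collections import deque


class WindowMemory:
    """Keep only the k most recent (question, answer) exchanges."""

    def __init__(self, k=5):
        self.turns = deque(maxlen=k)  # older turns are dropped automatically

    def save(self, question, answer):
        """Record one exchange."""
        self.turns.append((question, answer))

    def history(self):
        """Return the remembered exchanges, oldest first."""
        return list(self.turns)


# Example:
# memory = WindowMemory(k=5)
# memory.save("question 1", "answer 1")
# print(memory.history())
```

A fixed window keeps the prompt length bounded in a long session, at the cost of forgetting anything said more than k turns ago.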
