Reinforcement Learning with Code

This note records how the author begin to learn RL. Both theoretical understanding and code practice are presented. Many material are referenced such as ZhaoShiyu’s Mathematical Foundation of Reinforcement Learning, .

文章目录

Chapter 4. Value Iteration and Policy Iteration

Value iteration and policy iteration have a common name called dynamic programming. Dynamic programming is model-based algorithm, which is the simplest RL algorithm. Its helpful to us to understand the model-free algorithm.

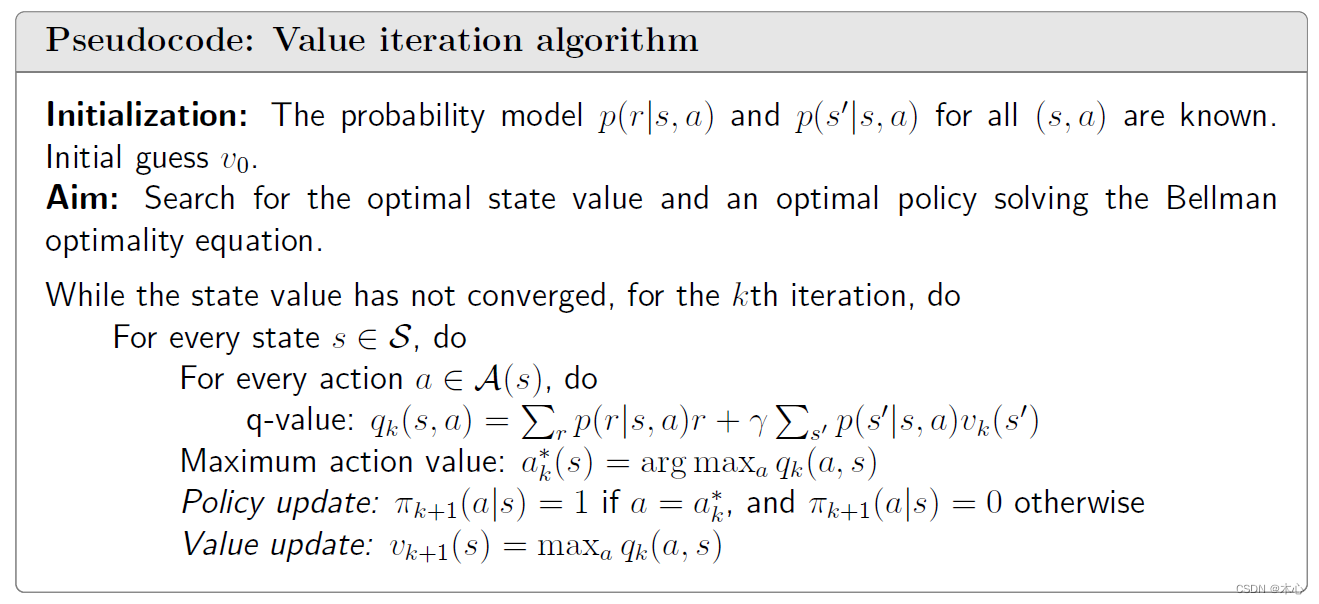

4.1 Value iteration

Value iteration is solving the Bellman optimal equation directly.

Matrix-vector form:

The value iteration is exactly the algorithm suggested by the contraction mapping in chapter 3. Value iteration concludes two parts. First, in every iteration is called policy update.

π

k

+

1

=

arg

max

π

k

(

r

π

k

+

γ

P

π

v

k

)

\pi_{k+1} = \arg \textcolor{red}{\max_{\pi_k}(r_{\pi_k} + \gamma P_\pi v_k)}

πk+1=argπkmax(rπk+γPπvk)

Second, the step is value update. Mathematically, it is to substitute

π

k

+

1

\pi_{k+1}

πk+1 and do the following operation:

v k + 1 = r π k + 1 + γ P π k + 1 v k v_{k+1} = r_{\pi_{k+1}} + \gamma P_{\pi_{k+1}}v_k vk+1=rπk+1+γPπk+1vk

The above algorithm is matrix-vector form which is useful to understand the core idea. The above equation is iterative which means we calculate v k + 1 v_{k+1} vk+1 is only one step calculation.

There is a misunderstanding that we doesn’t use Bellman equation to calculate state value v k + 1 v_{k+1} vk+1 directly. Instead, when we use greedy policy update strategy the state value v k + 1 v_{k+1} vk+1 is actually the maximum action value max a q k ( s , a ) \max_a q_k(s,a) maxaqk(s,a).

The value iteration includes solving the Bellman optimal equation part as show in red.

Elementwise form:

First, the elementwise form policy update is

π k + 1 = arg max π k ( r π k + γ P π v k ) (matrx-vector form) → π k + 1 ( s ) = arg max π k ∑ a π k ( a ∣ s ) ( ∑ r p ( r ∣ s , a ) r + ∑ s ′ p ( s ′ ∣ s , a ) v k ( s ′ ) ) ⏟ q π k ( s , a ) (elementwise form) → π k + 1 ( s ) = arg max π k ∑ a π k ( a ∣ s ) q π k ( s , a ) → π k + 1 ( s ) = ∑ a π k ( a ∣ s ) arg max a k q π k ( s , a ) \begin{aligned} \pi_{k+1} & = \arg \max_{\pi_k}(r_{\pi_k} + \gamma P_\pi v_k) \quad \text{(matrx-vector form)}\\ \to \pi_{k+1}(s) & = \arg \max_{\pi_k} \sum_a \pi_k(a|s) \underbrace{ \Big(\sum_r p(r|s,a)r + \sum_{s^\prime} p(s^\prime|s,a) v_k(s^\prime) \Big)}_{q_{\pi_k}(s,a)} \quad \text{(elementwise form)}\\ \to \pi_{k+1}(s) & = \arg \max_{\pi_k} \sum_a \pi_k(a|s) q_{\pi_k}(s,a) \\ \to \pi_{k+1}(s) & = \sum_a \pi_k(a|s) \arg \max_{a_k}q_{\pi_k}(s,a) \end{aligned} πk+1→πk+1(s)→πk+1(s)→πk+1(s)=argπkmax(rπk+γPπvk)(matrx-vector form)=argπkmaxa∑πk(a∣s)qπk(s,a) (r∑p(r∣s,a)r+s′∑p(s′∣s,a)vk(s′))(elementwise form)=argπkmaxa∑πk(a∣s)qπk(s,a)=a∑πk(a∣s)argakmaxqπk(s,a)

Then use the greedy policy update algorithm

π k + 1 ( a ∣ s ) = { 1 a = a k ∗ ( s ) 0 a ≠ a k ∗ ( s ) \pi_{k+1}(a|s) = \left \{ \begin{aligned} & 1 & a=a_k^*(s) \\ & 0 & a\ne a_k^*(s) \end{aligned} \right. πk+1(a∣s)={10a=ak∗(s)a=ak∗(s)

where a k ∗ ( s ) = arg max a q k ( a , s ) a_k^*(s) = \arg\max_a q_k(a,s) ak∗(s)=argmaxaqk(a,s).

Second, the elementwise form value update is

v k + 1 = r π k + 1 + γ P π k + 1 v k (matrx-vector form) → v k + 1 ( s ) = ∑ a π k + 1 ( a ∣ s ) ( ∑ r p ( r ∣ s , a ) r + γ ∑ s ′ p ( s ′ ∣ s , a ) v k ( s ′ ) ) ⏟ q k ( s , a ) (elementwise form) \begin{aligned} v_{k+1} & = r_{\pi_{k+1}} + \gamma P_{\pi_{k+1}}v_k \quad \text{(matrx-vector form)}\\ \to v_{k+1}(s) & = \sum_a \pi_{k+1}(a|s) \underbrace{ \Big(\sum_r p(r|s,a)r + \gamma \sum_{s^\prime}p(s^\prime|s,a)v_k(s^\prime) \Big)}_{q_k(s,a)} \quad \text{(elementwise form)} \end{aligned} vk+1→vk+1(s)=rπk+1+γPπk+1vk(matrx-vector form)=a∑πk+1(a∣s)qk(s,a) (r∑p(r∣s,a)r+γs′∑p(s′∣s,a)vk(s′))(elementwise form)

Since π k + 1 \pi_{k+1} πk+1 is a greedy policy, the above equation is simply

v k + 1 ( s ) = max a q k ( s , a ) v_{k+1}(s) = \max_a q_k(s,a) vk+1(s)=amaxqk(s,a)

Pesudocode:

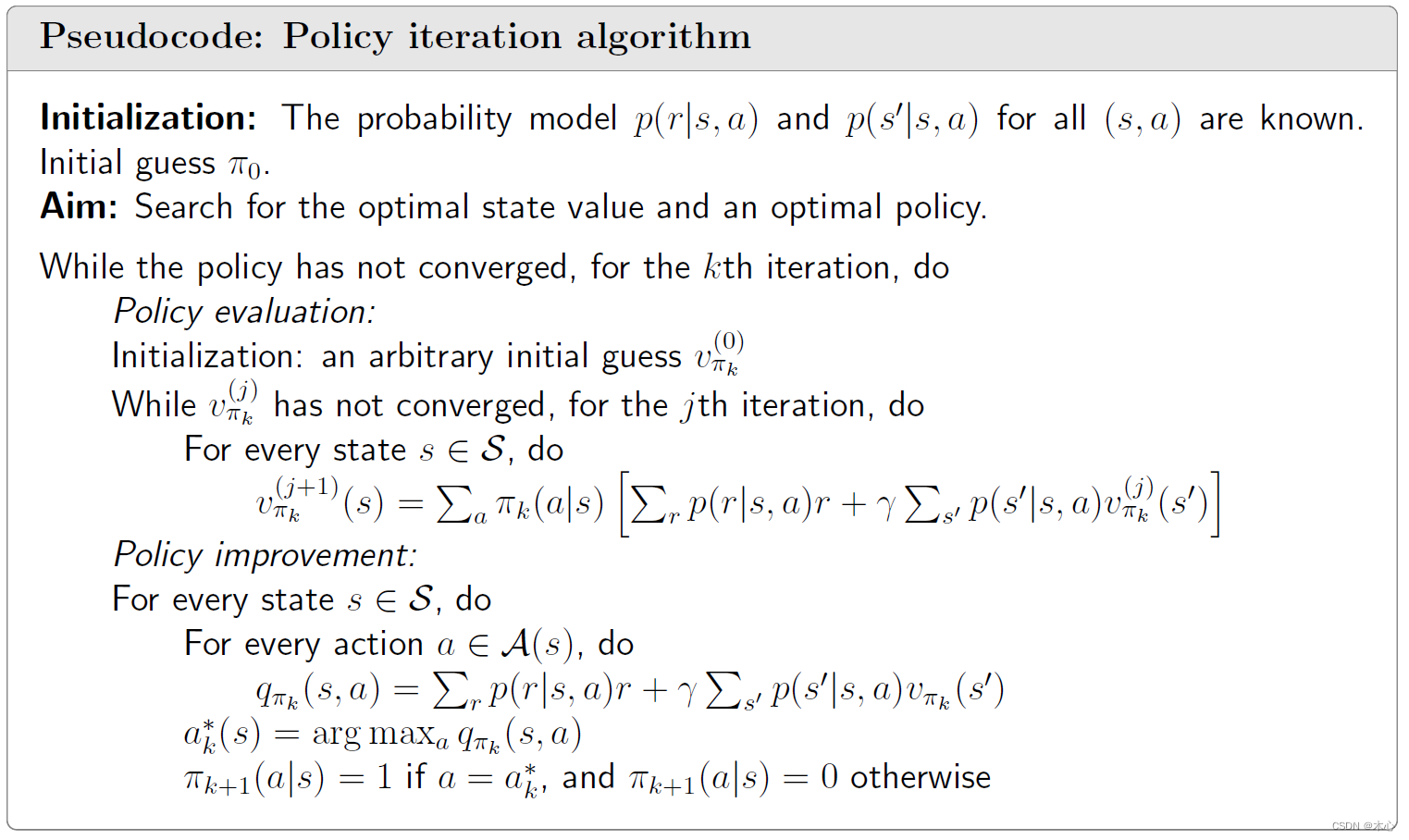

4.2 Policy iteration

The policy iteration is not an algorithm directly solving the Bellman optimality equation. However, it has an intimate relationship to value iteration. The policy iteration includes two parts policy evaluation and policy improvement.

Policy evaluation:

The first step is policy evaluation. Mathematically, it is to sovle Bellman equation of π k \pi_k πk:

v π k = r π k + γ P π k v π k v_{\pi_k} = r_{\pi_k} + \gamma P_{\pi_k}v_{\pi_k} vπk=rπk+γPπkvπk

This is the matrix-vector form of the Bellman equation, where r π k r_{\pi_k} rπk and P π k P_{\pi_k} Pπk are known. Here, v π k v_{\pi_k} vπk is the state value to be solved.

How to calculate state value v π k v_{\pi_k} vπk is important. In section 2.4 we have already introduced how to solve Bellman equation to get state value v π k v_{\pi_k} vπk as following

v π k ( j + 1 ) = r π k + γ P π k v π k ( j ) , j = 0 , 1 , 2 , … \textcolor{blue}{v_{\pi_k}^{(j+1)} = r_{\pi_k} + \gamma P_{\pi_k}v_{\pi_k}^{(j)}}, \quad j=0,1,2,\dots vπk(j+1)=rπk+γPπkvπk(j),j=0,1,2,…

Its clear that policy iteration is directly calculate the state value using the above equation, which means the calculation is infity step calculation.

Policy improvement:

The second step is to imporve the policy. How to do that? Once v π k v_{\pi_k} vπk is calculated in the first step, a new and improved policy could be obtained as

π k + 1 = arg max π ( r π + γ P π v π k ) \pi_{k+1} = \arg \max_\pi (r_\pi + \gamma P_\pi v_{\pi_k}) πk+1=argπmax(rπ+γPπvπk)

Elementwise form:

The policy evaluation step is to solve v π k v_{\pi_k} vπk from the Bellman equation dirctly.

v

π

k

(

j

+

1

)

=

r

π

k

+

γ

P

π

k

v

π

k

(

j

)

(matrix-vector form)

→

v

π

k

(

j

+

1

)

(

s

)

=

∑

a

π

k

(

a

∣

s

)

(

∑

r

p

(

r

∣

s

,

a

)

r

+

∑

s

′

p

(

s

′

∣

s

,

a

)

v

π

k

(

j

)

(

s

′

)

)

(elementwise form)

\begin{aligned} v_{\pi_k}^{(j+1)} & = r_{\pi_k} + \gamma P_{\pi_k} v_{\pi_k}^{(j)} \quad \text{(matrix-vector form)} \\ \to v_{\pi_k}^{(j+1)}(s) & = \sum_a \pi_k(a|s) \Big(\sum_r p(r|s,a)r + \sum_{s^\prime} p(s^\prime|s,a) v_{\pi_k}^{(j)} (s^\prime) \Big) \quad \text{(elementwise form)} \end{aligned}

vπk(j+1)→vπk(j+1)(s)=rπk+γPπkvπk(j)(matrix-vector form)=a∑πk(a∣s)(r∑p(r∣s,a)r+s′∑p(s′∣s,a)vπk(j)(s′))(elementwise form)

where

j

=

0

,

1

,

2

,

…

j=0,1,2,\dots

j=0,1,2,…

The policy improvement step is to solve π k + 1 = arg max π ( r π + γ P π k v π k ) \pi_{k+1}=\arg \max_\pi (r_\pi + \gamma P_{\pi_k}v_{\pi_k}) πk+1=argmaxπ(rπ+γPπkvπk).

π k + 1 = arg max π k ( r π k + γ P π k v π k ) (matrix-vector form) → π k + 1 ( s ) = arg max π k ∑ a π ( a ∣ s ) ( ∑ r p ( r ∣ s , a ) r + ∑ s ′ p ( s ′ ∣ s , a ) v π k ( s ′ ) ) ⏟ q π k ( s , a ) (elementwise form) → π k + 1 ( s ) = ∑ a π ( a ∣ s ) arg max a q π k ( s , a ) \begin{aligned} \pi_{k+1} & =\arg \max_{\pi_k} (r_{\pi_k} + \gamma P_{\pi_k}v_{\pi_k}) \quad \text{(matrix-vector form)} \\ \to \pi_{k+1}(s) & = \arg \max_{\pi_k} \sum_a \pi(a|s) \underbrace{ \Big(\sum_r p(r|s,a)r + \sum_{s^\prime} p(s^\prime|s,a) v_{\pi_k} (s^\prime) \Big)}_{q_{\pi_k}(s,a)} \quad \text{(elementwise form)} \\ \to \pi_{k+1}(s) & = \sum_a \pi(a|s) \arg \max_a q_{\pi_k}(s,a) \end{aligned} πk+1→πk+1(s)→πk+1(s)=argπkmax(rπk+γPπkvπk)(matrix-vector form)=argπkmaxa∑π(a∣s)qπk(s,a) (r∑p(r∣s,a)r+s′∑p(s′∣s,a)vπk(s′))(elementwise form)=a∑π(a∣s)argamaxqπk(s,a)

Let a k ∗ ( s ) = arg max a q π k ( s , a ) a^*_k(s) = \arg \max_a q_{\pi_k}(s,a) ak∗(s)=argmaxaqπk(s,a). The greedy optimal policy is

π k + 1 ( a ∣ s ) = { 1 a = a k ∗ ( s ) 0 a ≠ a k ∗ ( s ) \pi_{k+1}(a|s) = \left \{ \begin{aligned} & 1 & a=a_k^*(s) \\ & 0 & a\ne a_k^*(s) \end{aligned} \right. πk+1(a∣s)={10a=ak∗(s)a=ak∗(s)

Pesudocode:

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?