Reinforcement Learning with Code

This note records how the author begin to learn RL. Both theoretical understanding and code practice are presented. Many material are referenced such as ZhaoShiyu’s Mathematical Foundation of Reinforcement Learning, .

文章目录

Chapter 5. Monte Carlo Learning

What is Monte Carlo estimation? Monte Carlo estimation refers to a broad class of techniques that use stochastic samples to solve approximation problems using the Law of Large Numbers.

ChatGpt tells us that “Monte Carlo estimation is a statistical method used to estimate unknown quantities or solve problems by generating random samples and using the law of large numbers to approximate the true value.”

5.1 Law of large numbers

(Law of Large Numbers) For a random variable

X

X

X. Suppose

{

x

i

}

i

=

1

n

\{x_i\}_{i=1}^n

{xi}i=1n are some independent and indentically distribution (iid) samples. Let

x

ˉ

=

1

n

∑

i

=

1

n

x

i

\bar{x}=\frac{1}{n}\sum_{i=1}^n x_i

xˉ=n1∑i=1nxi be the average of the samples. Then,

E

[

x

ˉ

]

=

E

[

X

]

v

a

r

[

x

ˉ

]

=

1

n

v

a

r

[

X

]

\mathbb{E}[\bar{x}] = \mathbb{E}[X] \\ \mathrm{var}[\bar{x}] = \frac{1}{n} \mathrm{var}[X]

E[xˉ]=E[X]var[xˉ]=n1var[X]

The above two equations indicate that

x

ˉ

\bar{x}

xˉ is an unbiased estimate of

E

[

X

]

\mathbb{E}[X]

E[X] and its variance decreases to zero as

n

n

n increases to infinity.

Proof, First, E [ x ˉ ] = E [ 1 n ∑ i = 1 n x i ] = 1 n ∑ i = 1 n E [ x i ] = 1 n n E [ X ] = E [ X ] \mathbb{E}[\bar{x}] = \mathbb{E}[\frac{1}{n}\sum_{i=1}^n x_i]=\frac{1}{n}\sum_{i=1}^n\mathbb{E}[x_i]=\frac{1}{n}n\mathbb{E}[X]=\mathbb{E}[X] E[xˉ]=E[n1∑i=1nxi]=n1∑i=1nE[xi]=n1nE[X]=E[X], where the last equability is because the samples are independent and indentically distribution (iid) (that is, E [ x i ] = E [ X ] \mathbb{E}[x_i]=\mathbb{E}[X] E[xi]=E[X]).

Second, v a r [ x ˉ ] = v a r [ 1 n ∑ i = 1 n x i ] = 1 n 2 ∑ i = 1 n v a r [ x i ] = 1 n 2 n ∗ v a r [ X ] = 1 n v a r [ X ] \mathrm{var}[\bar{x}]=\mathrm{var}[\frac{1}{n}\sum_{i=1}^n x_i]=\frac{1}{n^2}\sum_{i=1}^n\mathrm{var}[x_i]=\frac{1}{n^2}n*\mathrm{var}[X]=\frac{1}{n}\mathrm{var}[X] var[xˉ]=var[n1∑i=1nxi]=n21∑i=1nvar[xi]=n21n∗var[X]=n1var[X], where the second equality is because the samples are independent and indentically distribution (iid) (that is, v a r [ x i ] = v a r [ X ] \mathrm{var}[x_i]=\mathrm{var}[X] var[xi]=var[X])

5.2 Simple example

Consider a problem that we need to calculate the expectation E [ X ] \mathbb{E}[X] E[X] of random variable X X X which takes value in the finite set X \mathcal{X} X. There are two ways.

First, model-based approach, we can use the definition of expectation that

E

[

X

]

=

∑

x

∈

X

p

(

x

)

x

\mathbb{E}[X] = \sum_{x\in\mathcal{X}} p(x)x

E[X]=x∈X∑p(x)x

Second, model-free approach, if the probability of distribution is unknown we can use the Law of Large Numbers to estimate the expectation.

E

[

X

]

≈

x

ˉ

=

1

n

∑

i

=

1

n

x

i

\mathbb{E}[X]\approx \bar{x} = \frac{1}{n} \sum_{i=1}^n x_i

E[X]≈xˉ=n1i=1∑nxi

The example gives us an intutition that: When the system model is available, the expectation can be calculated based on the model.

When the model is unavailable, the expectation can be estimated approximately using stochastic samples.

5.3 Monte Carlo Basic

Review policy iteration:

The simplest Monte Carlo learning also called Monte Carlo Basic (MC Basic) just replaces the policy iteration model-based part by MC estimation. Recall the matrix-vector form of policy iteration

v π k ( j + 1 ) = r π k + γ P π k v π k ( j ) (policy evaluation) π k + 1 = arg max π k ( r π k + γ P π k v π k ) (policy improvement) \begin{aligned} v_{\pi_k}^{(j+1)} & = r_{\pi_k} + \gamma P_{\pi_k} v_{\pi_k}^{(j)} \quad \text{(policy evaluation)} \\ \pi_{k+1} & = \arg \max_{\pi_k}(r_{\pi_k} + \gamma P_{\pi_k}v_{\pi_k}) \quad \text{(policy improvement)} \end{aligned} vπk(j+1)πk+1=rπk+γPπkvπk(j)(policy evaluation)=argπkmax(rπk+γPπkvπk)(policy improvement)

The elementwise form of policy iteration is

v π k ( j + 1 ) ( s ) = ∑ a π k ( a ∣ s ) ( ∑ r p ( r ∣ s , a ) r + ∑ s ′ p ( s ′ ∣ s , a ) v π k ( j ) ( s ′ ) ) (policy evaluation) π k + 1 ( s ) = arg max π k ∑ a π ( a ∣ s ) ( ∑ r p ( r ∣ s , a ) r + ∑ s ′ p ( s ′ ∣ s , a ) v π k ( s ′ ) ) ⏟ q π k ( s , a ) (policy improvement) → π k + 1 ( s ) = ∑ a π ( a ∣ s ) arg max a q π k ( s , a ) \begin{aligned} v_{\pi_k}^{(j+1)}(s) & = \sum_a \pi_k(a|s) \Big(\sum_r p(r|s,a)r + \sum_{s^\prime} p(s^\prime|s,a) v_{\pi_k}^{(j)} (s^\prime) \Big) \quad \text{(policy evaluation)} \\ \pi_{k+1}(s) & = \arg \max_{\pi_k} \sum_a \pi(a|s) \underbrace{ \Big(\sum_r p(r|s,a)r + \sum_{s^\prime} p(s^\prime|s,a) v_{\pi_k} (s^\prime) \Big)}_{q_{\pi_k}(s,a)} \quad \text{(policy improvement)} \\ \to \pi_{k+1}(s) & = \sum_a \pi(a|s) \arg \max_a q_{\pi_k}(s,a) \end{aligned} vπk(j+1)(s)πk+1(s)→πk+1(s)=a∑πk(a∣s)(r∑p(r∣s,a)r+s′∑p(s′∣s,a)vπk(j)(s′))(policy evaluation)=argπkmaxa∑π(a∣s)qπk(s,a) (r∑p(r∣s,a)r+s′∑p(s′∣s,a)vπk(s′))(policy improvement)=a∑π(a∣s)argamaxqπk(s,a)

Its obvious that the core is to calculate the action values by usting

q

π

k

(

s

,

a

)

=

∑

r

p

(

r

∣

s

,

a

)

r

+

∑

s

′

p

(

s

′

∣

s

,

a

)

v

π

k

(

s

′

)

q_{\pi_k}(s,a) = \sum_r p(r|s,a)r + \sum_{s^\prime}p(s^\prime|s,a)v_{\pi_k}(s^\prime)

qπk(s,a)=r∑p(r∣s,a)r+s′∑p(s′∣s,a)vπk(s′)

However, the above equation needs the model of the environment (that

p

(

r

∣

s

,

a

)

p(r|s,a)

p(r∣s,a) and

p

(

s

′

∣

s

,

a

)

p(s^\prime|s,a)

p(s′∣s,a) are required). We can replace this part by Monte Carlo estimation. Recall the definition of action value refer to section 2.5.

q

π

(

s

,

a

)

≜

E

[

G

t

∣

S

t

=

s

,

A

t

=

a

]

q_\pi(s,a) \triangleq \mathbb{E}[G_t|S_t=s, A_t=a]

qπ(s,a)≜E[Gt∣St=s,At=a]

Action value can be interpreted as average return along trajectory generated by policy

π

\pi

π after taking a specific action

a

a

a.

Convert to model free:

Suppose there are

n

n

n episodes sampling starting at state

s

s

s and takeing action

a

a

a, and then denote the

i

i

i th episode return as

g

(

i

)

(

s

,

a

)

\textcolor{blue}{g^{(i)}(s,a)}

g(i)(s,a). Then using the idea of Monte Carlo estimation and Law of Large Numbers, the action values can be approxiamte as

q

π

(

s

,

a

)

≜

E

[

G

t

∣

S

t

=

s

,

A

t

=

a

]

≈

1

n

∑

j

=

1

n

g

(

j

)

(

s

,

a

)

q_\pi(s,a) \triangleq \mathbb{E}[G_t|S_t=s, A_t=a] \textcolor{blue}{\approx \frac{1}{n} \sum_{j=1}^n g^{(j)}(s,a)}

qπ(s,a)≜E[Gt∣St=s,At=a]≈n1j=1∑ng(j)(s,a)

Suppose the number of episodes

n

n

n is sufficiently large. So, the above equation is the unbiased estimation of action value

q

π

(

s

,

a

)

q_\pi(s,a)

qπ(s,a).

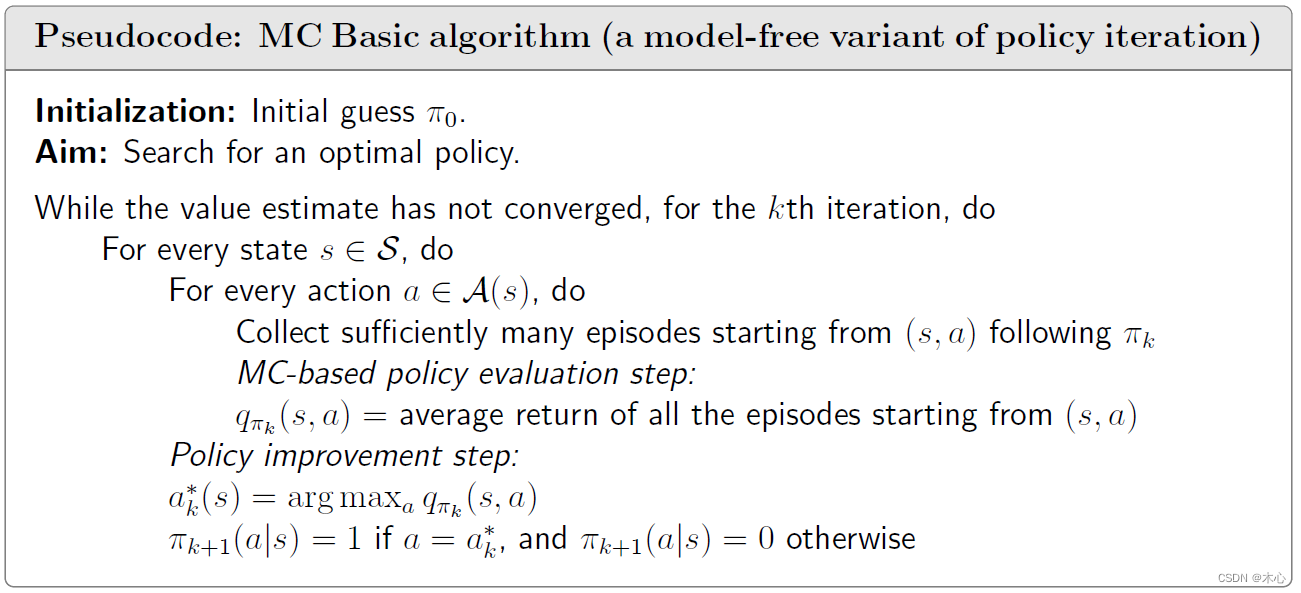

Pesudocode:

Here are some questions. Why does the MC Basic algorithm estimate action values instead of state values? That is because state value cannot be used to improve policies directly. Even if we are given state values, we still need to calculate action values from these state value using q π k ( s , a ) = ∑ r p ( r ∣ s , a ) r + ∑ s ′ p ( s ′ ∣ s , a ) v π k ( s ′ ) q_{\pi_k}(s,a) = \sum_r p(r|s,a)r + \sum_{s^\prime}p(s^\prime|s,a)v_{\pi_k}(s^\prime) qπk(s,a)=∑rp(r∣s,a)r+∑s′p(s′∣s,a)vπk(s′). But this calculation requires the system model. Therefore, when models are not available, we should directly estimate action values.

5.4 Monte Carlo Exploring Starts

Some concepts:

-

Vist: a state-action pair appears in the episode, it is called a vist of the state-action pair. Such as

s 1 → a 2 s 2 → a 4 s 1 → a 2 s 2 → a 3 s 5 → a 1 3 ⋯ \textcolor{blue}{s_1 \xrightarrow{a_2}} s_2 \xrightarrow{a_4} \textcolor{blue}{s_1 \xrightarrow{a_2}} s_2 \xrightarrow{a_3} s_5 \xrightarrow{a_13}\cdots s1a2s2a4s1a2s2a3s5a13⋯

( s 1 , a 2 ) (s_1,a_2) (s1,a2) is called a visit. -

First visit: In the above trajectory ( s 1 , a 2 ) (s_1,a_2) (s1,a2) is visited twice. If we only count the first-time visit, such kind of strategy is called first-visit.

-

Every visit: If every time state-action pair is visited and the rest of the episode is used to estimate its action value, such a strategy is called every-visit.

For example,

s 1 → a 2 s 2 → a 4 s 1 → a 2 s 2 → a 3 s 5 → a 1 3 ⋯ [ origianl episode ] s 2 → a 4 s 1 → a 2 s 2 → a 3 s 5 → a 1 3 ⋯ [ episode starting from ( s 2 , a 4 ) ] s 1 → a 2 s 2 → a 3 s 5 → a 1 3 ⋯ [ episode starting from ( s 1 , a 2 ) ] s 2 → a 3 s 5 → a 1 3 ⋯ [ episode starting from ( s 2 , a 3 ) ] s 5 → a 1 3 ⋯ [ episode starting from ( s 2 , a 4 ) ] \begin{aligned} s_1 \xrightarrow{a_2} s_2 \xrightarrow{a_4} s_1 \xrightarrow{a_2} s_2 \xrightarrow{a_3} s_5 \xrightarrow{a_13}\cdots & \quad [\textcolor{blue}{\text{origianl episode}}] \\ s_2 \xrightarrow{a_4} s_1 \xrightarrow{a_2} s_2 \xrightarrow{a_3} s_5 \xrightarrow{a_13}\cdots & \quad [\text{episode starting from }(s_2,a_4)] \\ s_1 \xrightarrow{a_2} s_2 \xrightarrow{a_3} s_5 \xrightarrow{a_13}\cdots & \quad [\textcolor{red}{\text{episode starting from }(s_1,a_2)}] \\ s_2 \xrightarrow{a_3} s_5 \xrightarrow{a_13}\cdots & \quad [\text{episode starting from }(s_2,a_3)] \\ s_5 \xrightarrow{a_13}\cdots & \quad [\text{episode starting from }(s_2,a_4)] \end{aligned} s1a2s2a4s1a2s2a3s5a13⋯s2a4s1a2s2a3s5a13⋯s1a2s2a3s5a13⋯s2a3s5a13⋯s5a13⋯[origianl episode][episode starting from (s2,a4)][episode starting from (s1,a2)][episode starting from (s2,a3)][episode starting from (s2,a4)]

Suppose we need to estimate the action value q π ( s 1 , a 2 ) q_\pi(s_1,a_2) qπ(s1,a2). The first-visit only counts the first visit of ( s 1 , a 2 ) (s_1,a_2) (s1,a2). So we need a huge numbers of episodes starting from ( s 1 , a 2 ) (s_1,a_2) (s1,a2). As the blue one episode in the above equations. The every-visit counts every time the visit of ( s 1 , a 2 ) (s_1,a_2) (s1,a2). Hence, we can use the blue one and the red one to estimate the action value q π ( s 1 , a 2 ) q_\pi(s_1,a_2) qπ(s1,a2). In this way, the samples in the episode are utilized more sufficiently.

Using sample more efficiently:

From the example, we are informed that if an episode is sufficiently long so that it can visit all the state-action pairs many times, then this single episode is sufficient to estimate all the action values by using the every-visit strategy. However, one single episode is pretty ideal result. Because the samples obtained by the every-visit is relevant due to the trajectory starting from the second visit is merely a subset of the trajectory starting from the first. Nevertheless, if the two visits are far away from each other, which means there is a siginificant non-overlap portion, the relevance would not be strong. Moreover, the relevance can be further suppressed due to the discount rate. Therefore, when there are few episodes and each episode is very long, the every-visit strategy is a good option.

Using estimation more efficiently:

The first startegy in MC Basic, in the policy evaluation step, to collect all the episodes starting from the same state-action pair and then approximate the action value using the average return of these episodes. The drawback of the strategy is that the agent must wait until all episodes have been collected.

The second strategy, which can overcome the drawback, is to use the return of a single episode to approximate the corresponding action value (the idea of stochastic estimation). In this way, we can improve the policy in an episode-by-episode fasion.

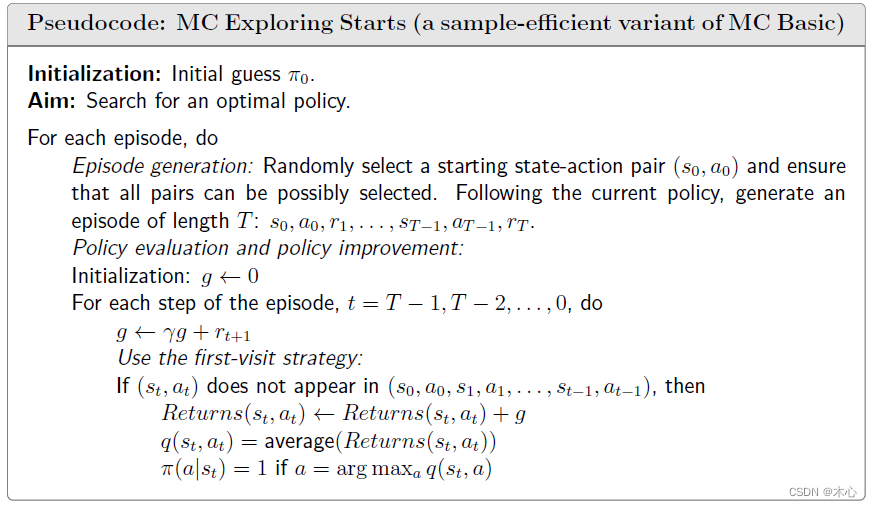

Pesudocode:

MC Exploring Starts algorithm compared to MC Basic, the sample usage and estimation update are more efficient.

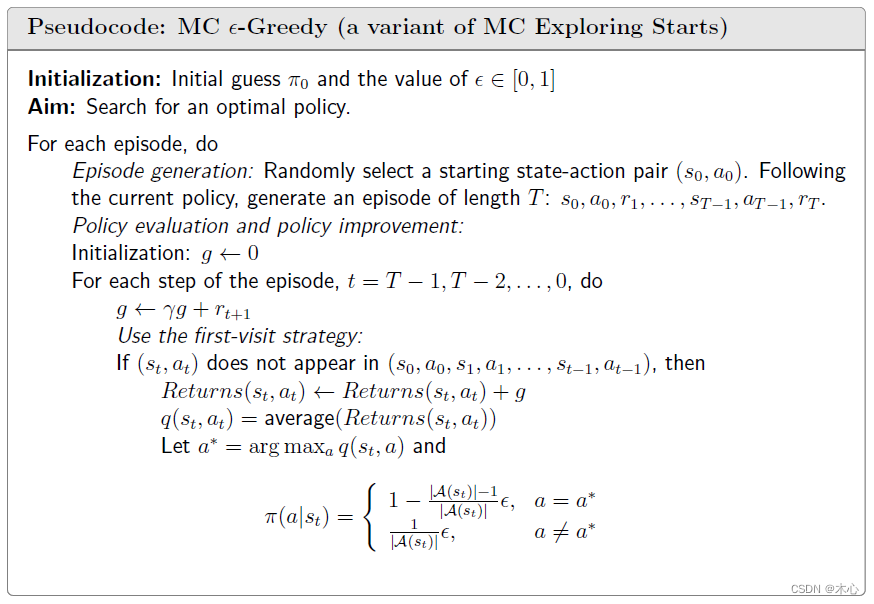

5.5 Monte Carlo ϵ \epsilon ϵ-Greedy

Why is exploring starts important? In theory, exploring starts is necessary to find optimal policies. Only if every state-action pair is well explored, can we select optimal policies. However, in practice, exploring starts is difficult to achieve. Because its difficult to collect episodes starting from every state-action pair.

We can use soft policy to remove the requirement of exploring starts. There are many soft policies. The most common one is

ϵ

\textcolor{blue}{\epsilon}

ϵ-greedy. The

ϵ

\epsilon

ϵ-greedy has the form of

π

(

a

∣

s

)

=

{

1

−

ϵ

∣

A

(

s

)

∣

(

∣

A

(

s

)

∣

−

1

)

,

for the geedy action

ϵ

∣

A

(

s

)

∣

,

for the other

∣

A

(

s

)

∣

−

1

actions

\pi(a|s) = \left \{ \begin{aligned} 1 - \frac{\epsilon}{|\mathcal{A}(s)|}(|\mathcal{A(s)}|-1), & \quad \text{for the geedy action} \\ \frac{\epsilon}{|\mathcal{A}(s)|}, & \quad \text{for the other } |\mathcal{A}(s)|-1 \text{ actions} \end{aligned} \right.

π(a∣s)=⎩

⎨

⎧1−∣A(s)∣ϵ(∣A(s)∣−1),∣A(s)∣ϵ,for the geedy actionfor the other ∣A(s)∣−1 actions

where

∣

A

(

s

)

∣

|\mathcal{A}(s) |

∣A(s)∣ denotes the number of actions associated with

s

s

s. It is worth noting taht the probability to take the greedy action is always greater than other action, because

1

−

ϵ

∣

A

(

s

)

∣

(

∣

A

(

s

)

∣

−

1

)

=

1

−

ϵ

+

ϵ

∣

A

(

s

)

∣

≥

ϵ

∣

A

(

s

)

∣

1 - \frac{\epsilon}{|\mathcal{A}(s)|}(|\mathcal{A(s)}|-1) = 1 - \epsilon + \frac{\epsilon}{|\mathcal{A}(s)|} \ge \frac{\epsilon}{|\mathcal{A}(s)|}

1−∣A(s)∣ϵ(∣A(s)∣−1)=1−ϵ+∣A(s)∣ϵ≥∣A(s)∣ϵ

for any

ϵ

∈

[

0

,

1

]

\epsilon\in[0,1]

ϵ∈[0,1], when

ϵ

=

0

\epsilon=0

ϵ=0 the

ϵ

\epsilon

ϵ-greedy becomes greedy policy.

Pesudocode:

941

941

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?