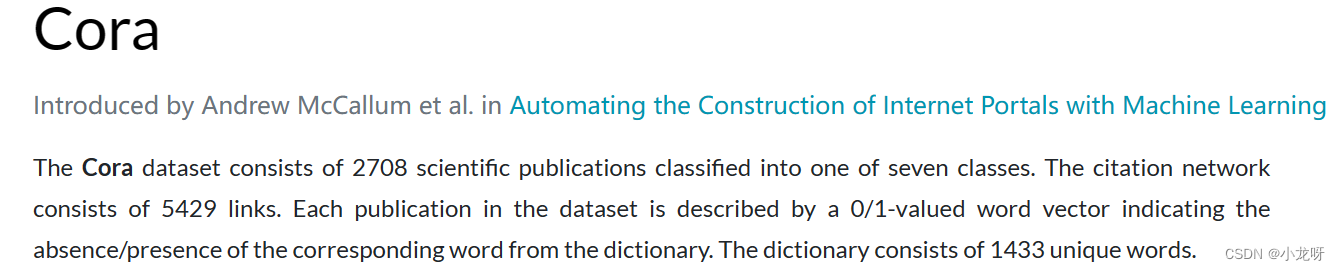

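I. Dataset

The Cora dataset contains 2708 scientific publications divided into seven classes. Each publication is a node in a citation graph and is described by a 1433-dimensional feature vector. Only 20 nodes per class carry training labels, and the task is to predict the class of every node.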
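To make the label scarcity concrete, the short calculation below works out how many training nodes there are and what fraction of the graph they cover. It only uses the figures quoted above (2708 nodes, seven classes, 20 labeled nodes per class); the variable names are illustrative.

```python
# Figures taken from the dataset description above.
num_nodes = 2708
num_classes = 7
labeled_per_class = 20

# Total number of labeled training nodes and their share of the graph.
num_train = num_classes * labeled_per_class
label_rate = num_train / num_nodes

print(f'Training nodes: {num_train}')
print(f'Label rate: {label_rate:.2%}')
```

Roughly 5% of the nodes are labeled, which is why this is usually described as a semi-supervised node classification task.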

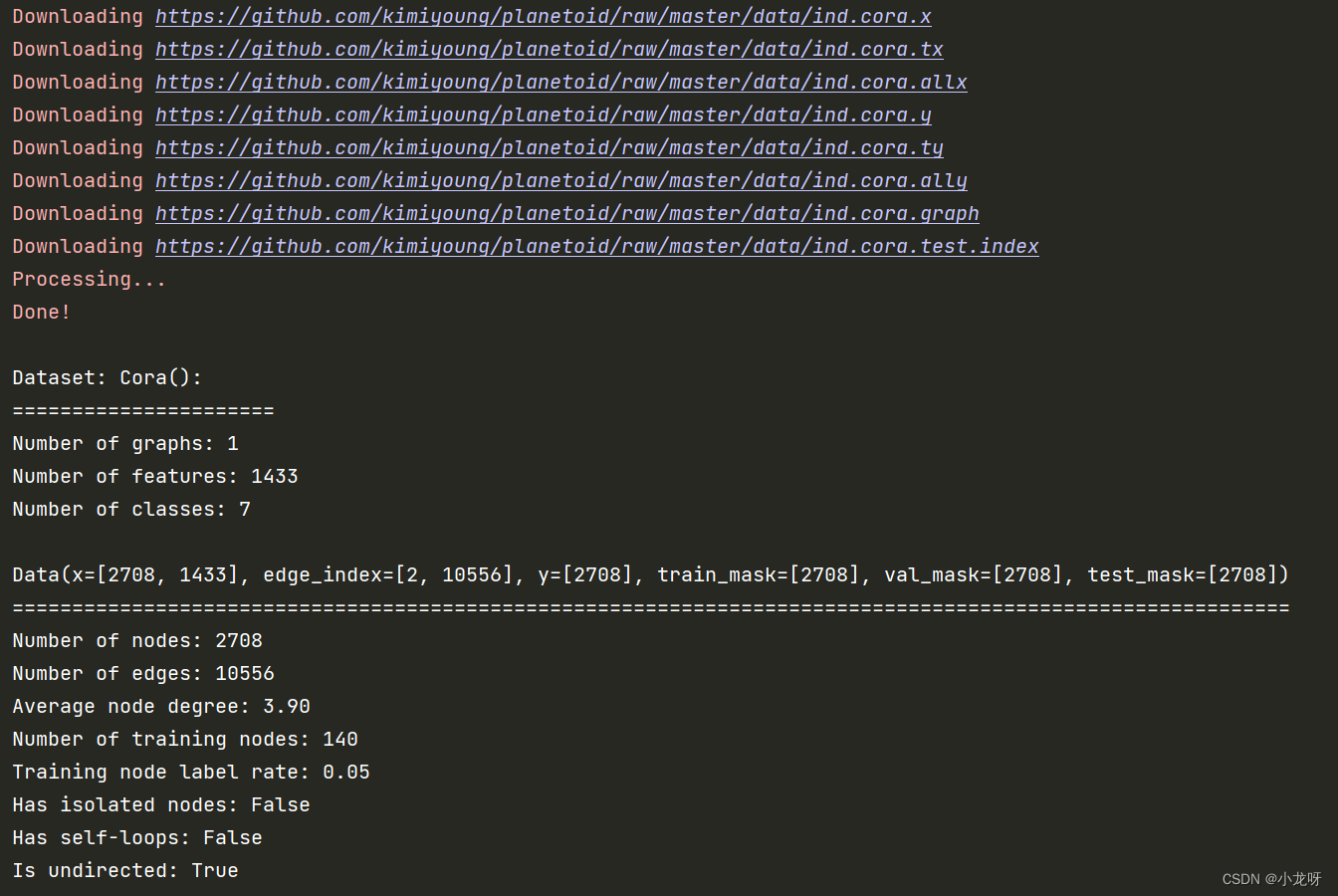

    from torch_geometric.datasets import Planetoid  # used to download the dataset
    from torch_geometric.transforms import NormalizeFeatures

    dataset = Planetoid(root='', name='Cora', transform=NormalizeFeatures())  # preprocessing transform

    print()
    print(f'Dataset: {dataset}:')
    print('======================')
    print(f'Number of graphs: {len(dataset)}')
    print(f'Number of features: {dataset.num_features}')
    print(f'Number of classes: {dataset.num_classes}')

    data = dataset[0]  # Get the first graph object.

    print()
    print(data)
    print('===========================================================================================================')

    # Gather some statistics about the graph.
    print(f'Number of nodes: {data.num_nodes}')
    print(f'Number of edges: {data.num_edges}')
    print(f'Average node degree: {data.num_edges / data.num_nodes:.2f}')
    print(f'Number of training nodes: {data.train_mask.sum()}')
    print(f'Training node label rate: {int(data.train_mask.sum()) / data.num_nodes:.2f}')
    print(f'Has isolated nodes: {data.has_isolated_nodes()}')
    print(f'Has self-loops: {data.has_self_loops()}')
    print(f'Is undirected: {data.is_undirected()}')

train_mask, val_mask, and test_mask are boolean masks that mark which nodes belong to the training, validation, and test sets, respectively.

II. Multi-layer Perceptron Network
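As a rough illustration of how such a mask is used, the sketch below selects the labels of the training nodes from a toy graph. The six labels and the mask values are made up for the example, and plain Python lists stand in for tensors; with PyTorch tensors the same selection is written as data.y[data.train_mask], as in the training code of this post.

```python
# Hypothetical class ids for six nodes and a hypothetical training mask.
labels = [3, 0, 6, 1, 3, 2]
train_mask = [True, False, True, False, False, True]

# Keep only the labels whose mask entry is True.
train_labels = [y for y, keep in zip(labels, train_mask) if keep]

# Summing booleans counts the selected nodes, like train_mask.sum().
num_train = sum(train_mask)

print(train_labels)
print(num_train)
```

The loss is computed on the selected entries only, while the forward pass still runs over all nodes.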

    import torch
    from torch.nn import Linear
    import torch.nn.functional as F
    from torch_geometric.datasets import Planetoid  # used to download the dataset
    from torch_geometric.transforms import NormalizeFeatures

    dataset = Planetoid(root='', name='Cora', transform=NormalizeFeatures())  # preprocessing transform
    data = dataset[0]

    class MLP(torch.nn.Module):
        def __init__(self, hidden_channels):
            super().__init__()
            torch.manual_seed(1234567)
            self.lin1 = Linear(dataset.num_features, hidden_channels)
            self.lin2 = Linear(hidden_channels, dataset.num_classes)

        def forward(self, x):
            x = self.lin1(x)
            x = x.relu()
            x = F.dropout(x, p=0.5, training=self.training)
            x = self.lin2(x)
            return x

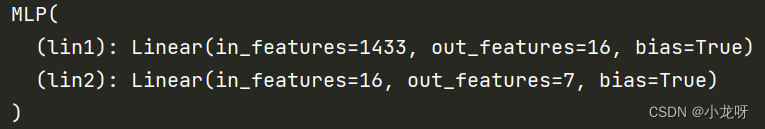

    model = MLP(hidden_channels=16)
    print(model)

    criterion = torch.nn.CrossEntropyLoss()  # Define loss criterion.
    optimizer = torch.optim.Adam(model.parameters(), lr=0.01, weight_decay=5e-4)  # Define optimizer.

    def train():
        model.train()
        optimizer.zero_grad()  # Clear gradients.
        out = model(data.x)  # Perform a single forward pass.
        loss = criterion(out[data.train_mask], data.y[data.train_mask])  # Compute the loss solely based on the training nodes.
        loss.backward()  # Derive gradients.
        optimizer.step()  # Update parameters based on gradients.
        return loss

    def test():
        model.eval()
        out = model(data.x)
        pred = out.argmax(dim=1)  # Use the class with highest probability.
        test_correct = pred[data.test_mask] == data.y[data.test_mask]  # Check against ground-truth labels.
        test_acc = int(test_correct.sum()) / int(data.test_mask.sum())  # Derive ratio of correct predictions.
        return test_acc

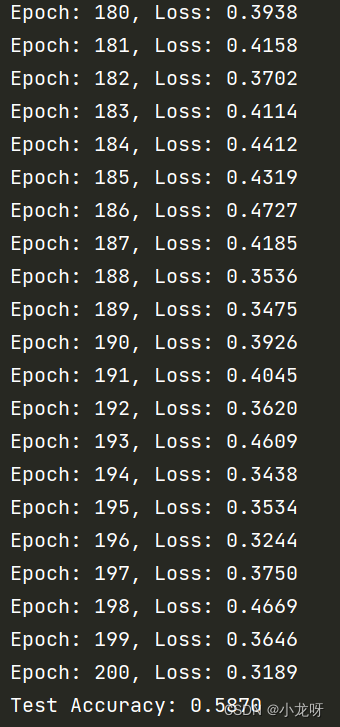

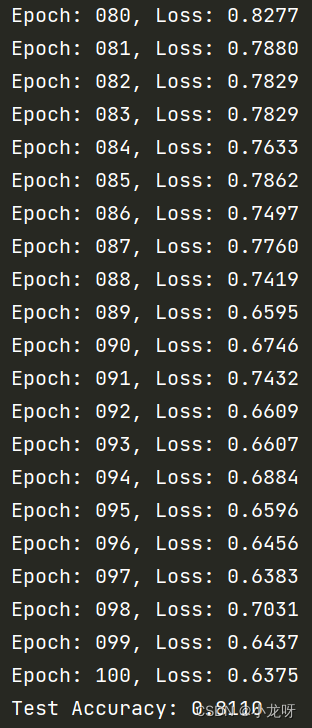

    for epoch in range(1, 201):
        loss = train()
        print(f'Epoch: {epoch:03d}, Loss: {loss:.4f}')

    test_acc = test()
    print(f'Test Accuracy: {test_acc:.4f}')

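Output (partial screenshot):

The model takes a 1433-dimensional input, passes it through a fully connected hidden layer with 16 units, and outputs a 7-dimensional tensor with one score per class.

III. Graph Neural Network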
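The layer sizes above also determine how many parameters the MLP learns. The sketch below counts them by hand, assuming each fully connected layer has a weight matrix and a bias vector (the default for torch.nn.Linear); linear_params is a helper introduced only for this illustration.

```python
def linear_params(in_features, out_features):
    """Weights plus biases of one fully connected layer."""
    return in_features * out_features + out_features

lin1 = linear_params(1433, 16)  # input features -> hidden layer
lin2 = linear_params(16, 7)     # hidden layer -> class scores
total = lin1 + lin2

print(f'lin1: {lin1}, lin2: {lin2}, total: {total}')
```

Almost all of the parameters sit in the first layer, because the input is far wider than the hidden layer.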

    import torch
    import torch.nn.functional as F
    from torch_geometric.nn import GCNConv
    from torch_geometric.datasets import Planetoid  # used to download the dataset
    from torch_geometric.transforms import NormalizeFeatures

    # Visualization
    import matplotlib.pyplot as plt
    from sklearn.manifold import TSNE

    def visualize(h, color):
        # Project the node embeddings to 2D with t-SNE and plot them colored by class.
        z = TSNE(n_components=2).fit_transform(h.detach().cpu().numpy())
        plt.figure(figsize=(10,10))
        plt.xticks([])
        plt.yticks([])
        plt.scatter(z[:, 0], z[:, 1], s=70, c=color, cmap="Set2")
        plt.show()

    dataset = Planetoid(root='', name='Cora', transform=NormalizeFeatures())  # preprocessing transform
    data = dataset[0]

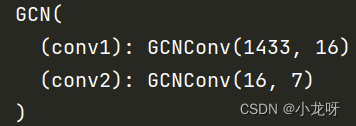

    class GCN(torch.nn.Module):
        def __init__(self, hidden_channels):
            super().__init__()
            torch.manual_seed(1234567)
            self.conv1 = GCNConv(dataset.num_features, hidden_channels)
            self.conv2 = GCNConv(hidden_channels, dataset.num_classes)

        def forward(self, x, edge_index):
            x = self.conv1(x, edge_index)
            x = x.relu()
            x = F.dropout(x, p=0.5, training=self.training)
            x = self.conv2(x, edge_index)
            return x

    model = GCN(hidden_channels=16)
    print(model)

    # Visualize the output of the untrained model.
    model.eval()
    out = model(data.x, data.edge_index)
    visualize(out, color=data.y)

    optimizer = torch.optim.Adam(model.parameters(), lr=0.01, weight_decay=5e-4)
    criterion = torch.nn.CrossEntropyLoss()

    def train():
        model.train()
        optimizer.zero_grad()
        out = model(data.x, data.edge_index)
        loss = criterion(out[data.train_mask], data.y[data.train_mask])
        loss.backward()
        optimizer.step()
        return loss

    def test():
        model.eval()
        out = model(data.x, data.edge_index)
        pred = out.argmax(dim=1)
        test_correct = pred[data.test_mask] == data.y[data.test_mask]
        test_acc = int(test_correct.sum()) / int(data.test_mask.sum())
        return test_acc

    for epoch in range(1, 101):
        loss = train()
        print(f'Epoch: {epoch:03d}, Loss: {loss:.4f}')

    test_acc = test()
    print(f'Test Accuracy: {test_acc:.4f}')

    # Visualize the output of the trained model.
    model.eval()
    out = model(data.x, data.edge_index)
    visualize(out, color=data.y)

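Output (partial screenshot):

The model takes a 1433-dimensional input, passes it through a graph convolutional layer with 16 hidden channels, and outputs a 7-dimensional tensor with one score per class.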
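What separates the graph convolutional layer from the fully connected layer of the MLP is that each node also aggregates the features of its neighbors. The sketch below shows only that aggregation step on a made-up three-node path graph, assuming the usual GCN rule with added self-loops and symmetric degree normalization (the default behavior of GCNConv). The learnable weight matrix and bias are left out, and gcn_propagate is a hypothetical helper written for this example, not part of any library.

```python
import math

def gcn_propagate(edges, num_nodes, features):
    """Aggregate features with weights 1 / sqrt(deg_i * deg_j), self-loops included."""
    # Build neighbor sets for an undirected graph and add a self-loop per node.
    neighbors = {i: {i} for i in range(num_nodes)}
    for u, v in edges:
        neighbors[u].add(v)
        neighbors[v].add(u)

    # Degree counted after adding self-loops.
    deg = [len(neighbors[i]) for i in range(num_nodes)]

    dim = len(features[0])
    out = [[0.0] * dim for _ in range(num_nodes)]
    for i in range(num_nodes):
        for j in neighbors[i]:
            weight = 1.0 / math.sqrt(deg[i] * deg[j])
            for k in range(dim):
                out[i][k] += weight * features[j][k]
    return out

# Toy path graph 0 - 1 - 2 where only node 0 has a non-zero feature.
edges = [(0, 1), (1, 2)]
features = [[1.0], [0.0], [0.0]]
propagated = gcn_propagate(edges, 3, features)
print(propagated)
```

After one step the feature of node 0 has reached its neighbor node 1 but not node 2, which is two hops away. Stacking two such layers, as the GCN class in this post does, lets each node see its two-hop neighborhood.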

2D visualization before training:

2D visualization after training:
