Preface

Gold-YOLO GitHub URL: https://github.com/huawei-noah/Efficient-Computing/tree/master/Detection/Gold-YOLO

RepGDNeck GitHub URL: https://github.com/huawei-noah/Efficient-Computing/blob/master/Detection/Gold-YOLO/gold_yolo/reppan.py

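The RepGDNeck class is the neck of the Gold-YOLO s and n models and lives in Gold-YOLO/gold_yolo/reppan.py.

Below, the RepGDNeck source code is read alongside the Gold-YOLO paper to explain how this neck class works.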

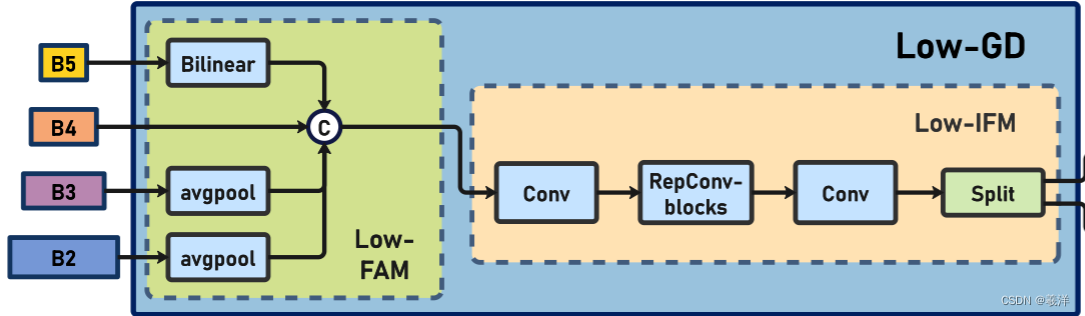

1. Low-GD

Low-GD produces the low-level global feature.

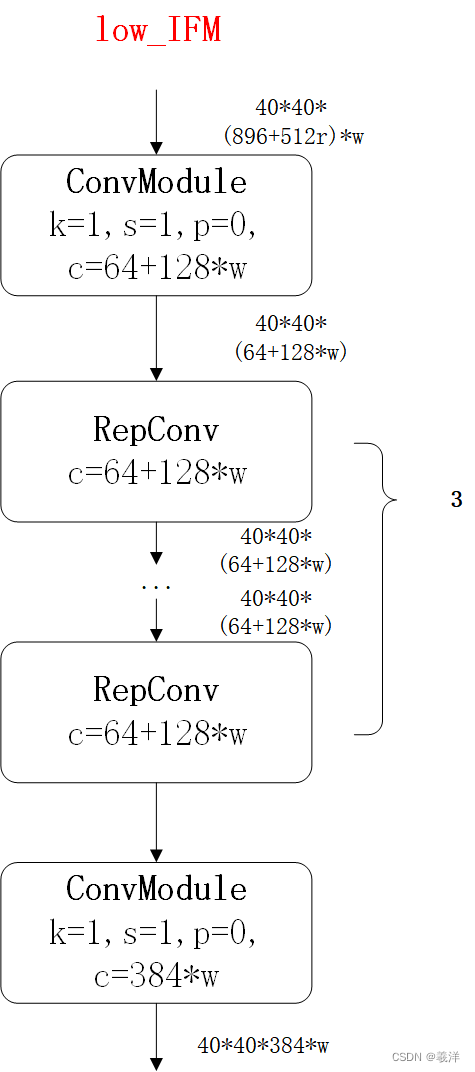

Corresponding code in __init__:

self.low_FAM = SimFusion_4in()

self.low_IFM = nn.Sequential(

Conv(extra_cfg.fusion_in, extra_cfg.embed_dim_p, kernel_size=1, stride=1, padding=0),

*[block(extra_cfg.embed_dim_p, extra_cfg.embed_dim_p) for _ in range(extra_cfg.fuse_block_num)],

Conv(extra_cfg.embed_dim_p, sum(extra_cfg.trans_channels[0:2]), kernel_size=1, stride=1, padding=0),

)

Corresponding code in forward:

(c2, c3, c4, c5) = input

# Low-GD: obtain the global feature

## use conv fusion global info

low_align_feat = self.low_FAM(input)

low_fuse_feat = self.low_IFM(low_align_feat)

low_global_info = low_fuse_feat.split(self.trans_channels[0:2], dim=1)
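To make the tensor bookkeeping in these three forward lines concrete, here is a minimal shape-only sketch in plain Python. The channel counts, spatial sizes and trans_channels values are illustrative assumptions rather than values read from a Gold-YOLO config, and low_gd_shapes is a hypothetical helper, not part of the repository.

```python
def low_gd_shapes(in_shapes, trans_channels):
    """Trace (C, H, W) shapes through Low-FAM, Low-IFM and the final split.

    in_shapes: (C, H, W) tuples for c2, c3, c4, c5.
    trans_channels: channel counts; the first two belong to Low-GD.
    """
    # Low-FAM: every input is resized to c4's spatial size, then the
    # channels are concatenated, so the channel counts simply add up.
    _, h4, w4 = in_shapes[2]
    fam = (sum(c for c, _, _ in in_shapes), h4, w4)

    # Low-IFM: the last 1x1 conv outputs sum(trans_channels[0:2]) channels.
    ifm = (sum(trans_channels[0:2]), h4, w4)

    # split(..., dim=1): one chunk per entry of trans_channels[0:2].
    chunks = [(c, h4, w4) for c in trans_channels[0:2]]
    return fam, ifm, chunks


# Hypothetical 640x640 input with strides 4/8/16/32 (assumed values).
shapes = [(32, 160, 160), (64, 80, 80), (128, 40, 40), (256, 20, 20)]
fam, ifm, chunks = low_gd_shapes(shapes, trans_channels=[128, 64, 128, 256])
print(fam)     # (480, 40, 40)
print(ifm)     # (192, 40, 40)
print(chunks)  # [(128, 40, 40), (64, 40, 40)]
```

The two chunks play the role of low_global_info[0] and low_global_info[1], which the forward code later injects into p4 and p3 respectively.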

Corresponding part of the paper:

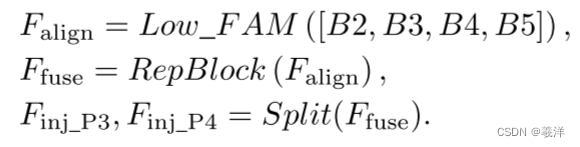

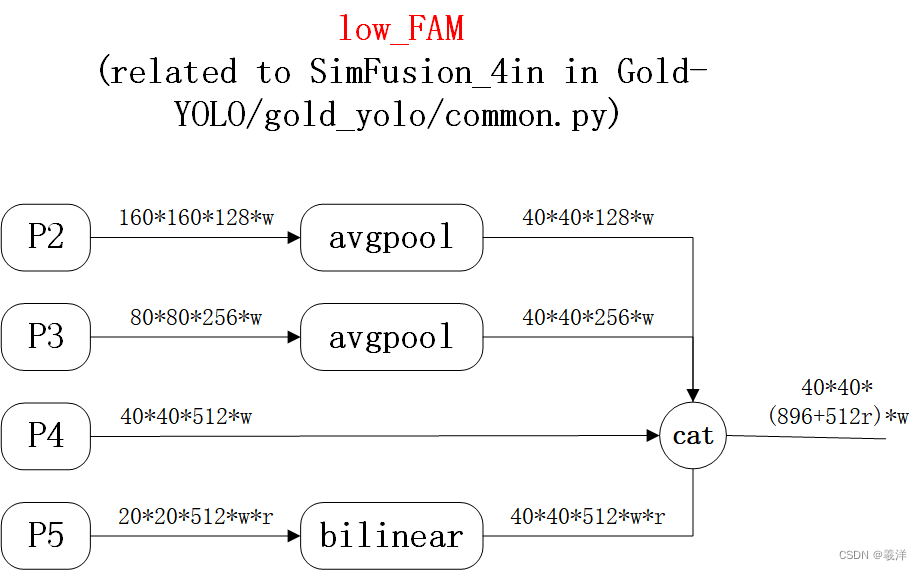

Low-GD consists of two main parts:

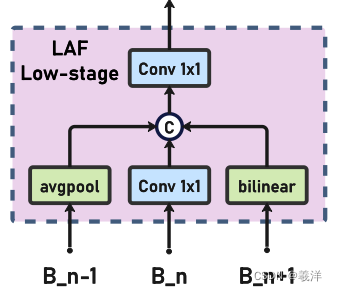

(1) Low-FAM

Low-FAM (low-stage feature alignment module): aligns the low-level features to a common size.

The target alignment size is chosen based on two conflicting considerations: (1) To retain more low-level information, larger feature sizes are preferable; however, (2) as the feature size increases, the computational latency of subsequent blocks also increases. To control the latency in the neck part, it is necessary to maintain a smaller feature size.

(Larger feature maps carry more low-level information, but they also increase computational latency.)

Therefore, we choose the RB4 as the target size of feature alignment to achieve a balance between speed and accuracy.

(RB4 corresponds to P4 in the figure below, so the input features are aligned to the P4 size.)

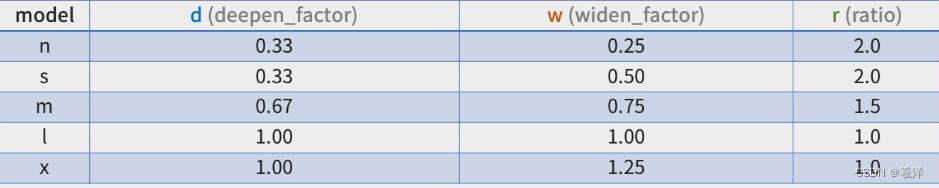

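Note: the figure is drawn against the YOLOv8 backbone, which is why the w and r parameters appear.

YOLOv8 network structure diagram: click here

Low-FAM in short: resize feature maps of different sizes to a single size, then concatenate them along the channel dimension.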
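The "resize, then concat" idea can be shown on tiny single-channel grids. This is a toy sketch in plain Python, not the SimFusion_4in implementation: avg_pool2d and upsample_nearest are hypothetical helpers, and nearest-neighbour upsampling is a simplification chosen here for readability (the original module may use a different interpolation).

```python
def avg_pool2d(grid, factor):
    """Downsample a 2D list by averaging non-overlapping factor x factor windows."""
    h, w = len(grid), len(grid[0])
    out = []
    for i in range(0, h, factor):
        row = []
        for j in range(0, w, factor):
            window = [grid[a][b]
                      for a in range(i, i + factor)
                      for b in range(j, j + factor)]
            row.append(sum(window) / len(window))
        out.append(row)
    return out


def upsample_nearest(grid, factor):
    """Upsample a 2D list by repeating each value factor x factor times."""
    return [[v for v in row for _ in range(factor)]
            for row in grid for _ in range(factor)]


# One channel per level; the 2x2 map plays the role of the alignment target.
large = [[1, 1, 3, 3], [1, 1, 3, 3], [5, 5, 7, 7], [5, 5, 7, 7]]  # 4x4
target = [[10, 20], [30, 40]]                                      # 2x2
small = [[9]]                                                      # 1x1

# "Concat on the channel dimension" = stack the aligned maps as channels.
aligned = [avg_pool2d(large, 2), target, upsample_nearest(small, 2)]
print(aligned)
# [[[1.0, 3.0], [5.0, 7.0]], [[10, 20], [30, 40]], [[9, 9], [9, 9]]]
```

Every map ends up 2x2, so stacking them is well defined; the spatial size of the result is that of the target level and the channel count is the sum over the inputs.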

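(2) Low-IFM

Low-IFM (low-stage information fusion module): fuses the aligned low-level features.

Low-IFM in short: up to this point the aligned features have only been concatenated along the channel dimension, so they may not blend well with one another. Passing them through a few Conv-BN style blocks strengthens them and yields a more representative low-level global feature.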

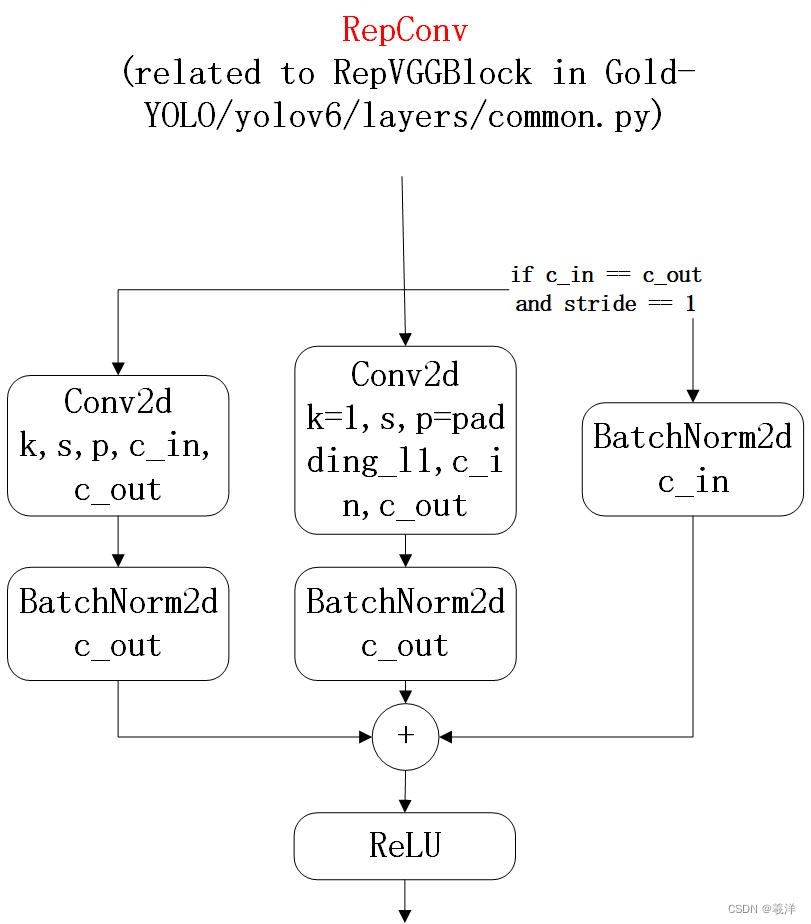

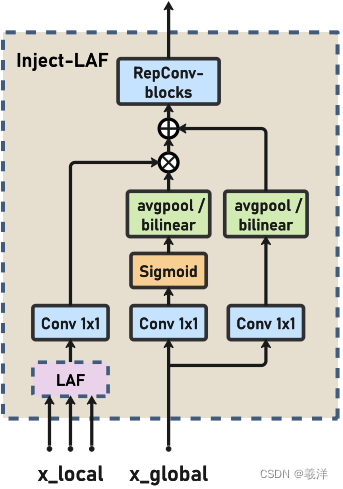

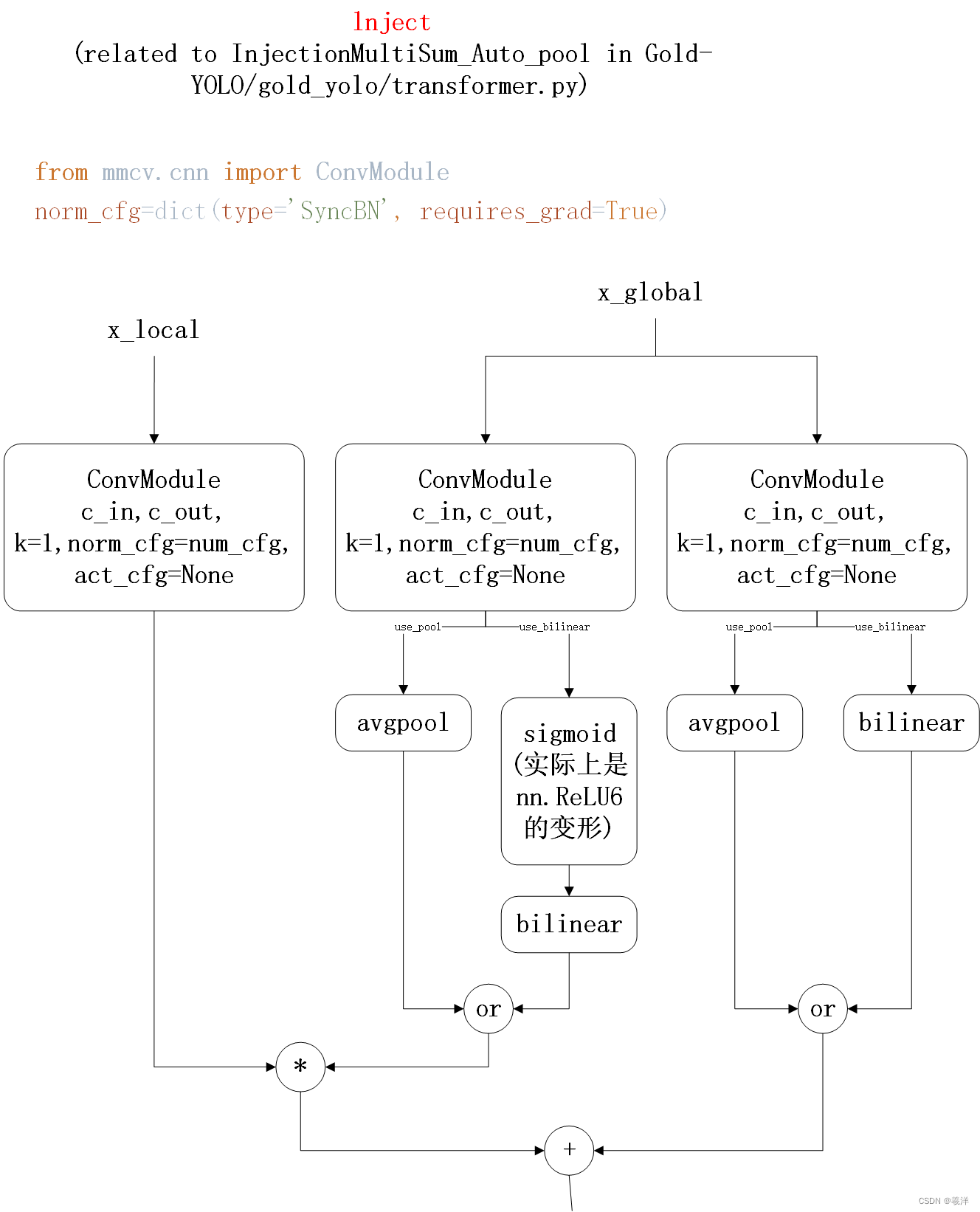

2. Low-stage Inject-LAF

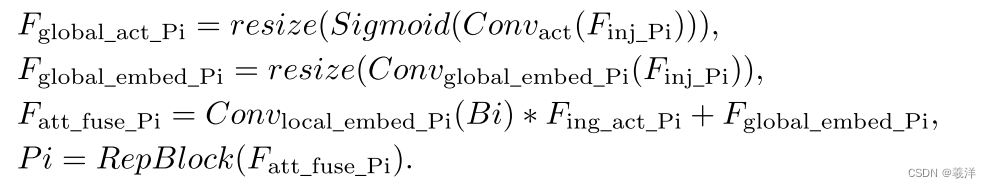

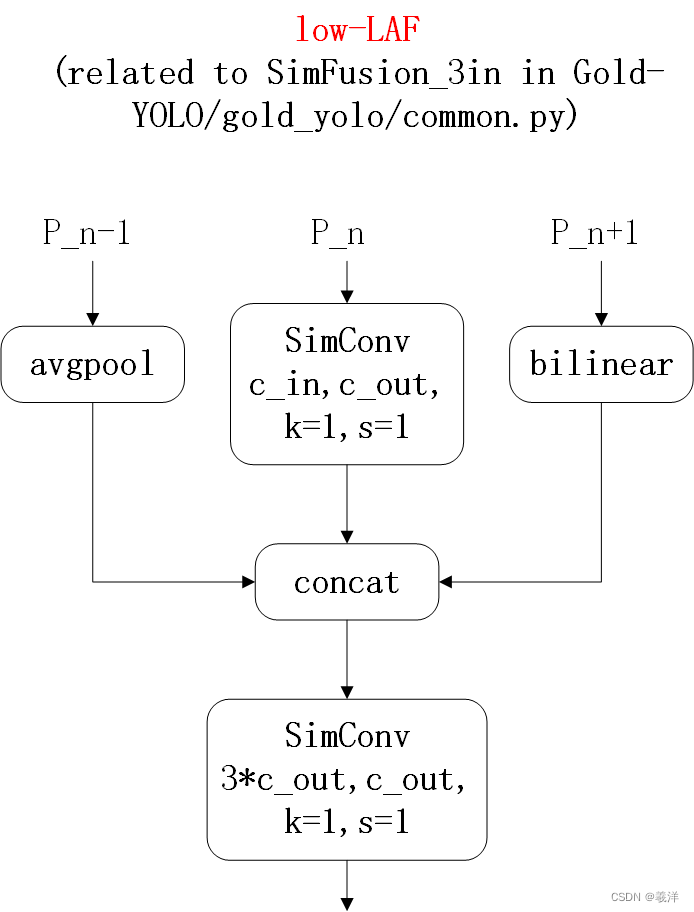

LAF (lightweight adjacent layer fusion) has a structure similar to the FAM described earlier, except that it adds SimConv layers to produce the locally fused feature.

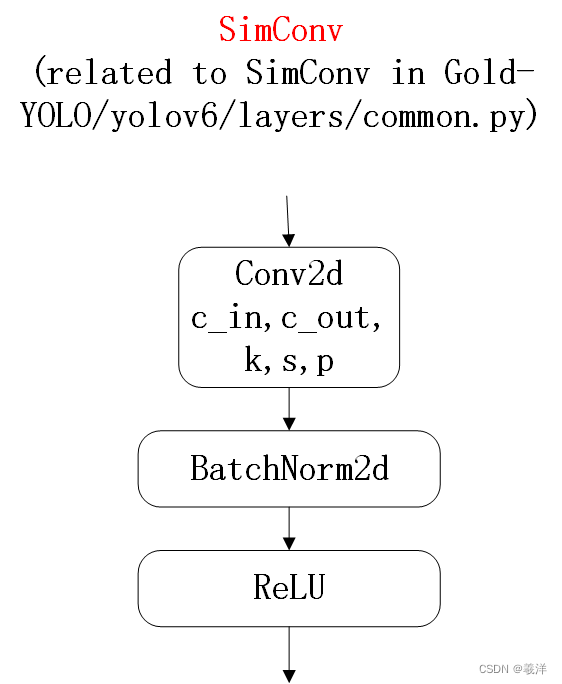

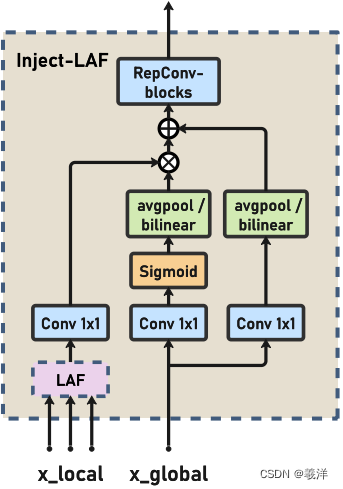

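The Inject module performs feature injection: it combines the global feature from the GD module with the local feature from LAF.

Corresponding code in __init__: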

self.reduce_layer_c5 = SimConv(

in_channels=channels_list[4], # 1024

out_channels=channels_list[5], # 512

kernel_size=1,

stride=1

)

self.LAF_p4 = SimFusion_3in(

in_channel_list=[channels_list[3], channels_list[3]], # 512, 256

out_channels=channels_list[5], # 256

)

self.Inject_p4 = InjectionMultiSum_Auto_pool(channels_list[5], channels_list[5], norm_cfg=extra_cfg.norm_cfg,

activations=nn.ReLU6)

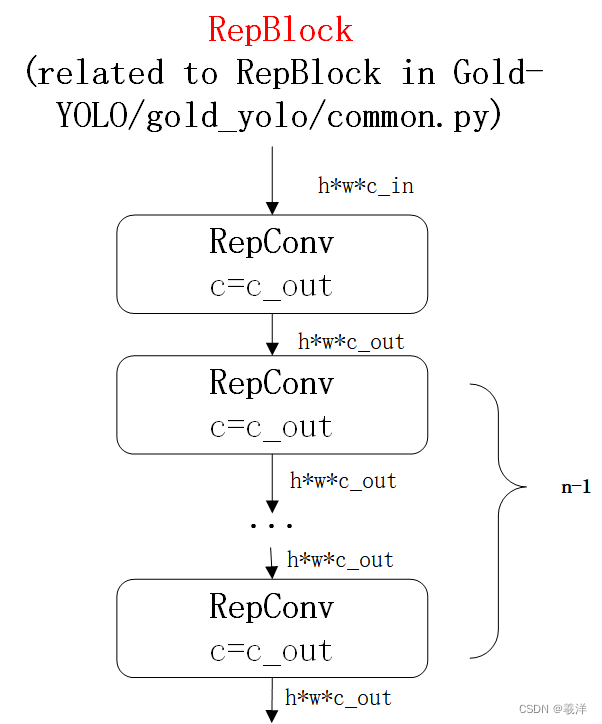

self.Rep_p4 = RepBlock(

in_channels=channels_list[5], # 256

out_channels=channels_list[5], # 256

n=num_repeats[5],

block=block

)

self.reduce_layer_p4 = SimConv(

in_channels=channels_list[5], # 256

out_channels=channels_list[6], # 128

kernel_size=1,

stride=1

)

self.LAF_p3 = SimFusion_3in(

in_channel_list=[channels_list[5], channels_list[5]], # 512, 256

out_channels=channels_list[6], # 256

)

self.Inject_p3 = InjectionMultiSum_Auto_pool(channels_list[6], channels_list[6], norm_cfg=extra_cfg.norm_cfg,

activations=nn.ReLU6)

self.Rep_p3 = RepBlock(

in_channels=channels_list[6], # 128

out_channels=channels_list[6], # 128

n=num_repeats[6],

block=block

)

Corresponding code in forward:

## inject low-level global info to p4

c5_half = self.reduce_layer_c5(c5)

p4_adjacent_info = self.LAF_p4([c3, c4, c5_half])

p4 = self.Inject_p4(p4_adjacent_info, low_global_info[0])

p4 = self.Rep_p4(p4)

## inject low-level global info to p3

p4_half = self.reduce_layer_p4(p4)

p3_adjacent_info = self.LAF_p3([c2, c3, p4_half])

p3 = self.Inject_p3(p3_adjacent_info, low_global_info[1])

p3 = self.Rep_p3(p3)

Corresponding part of the paper:

(1) Low-LAF

To achieve a balance between speed and accuracy, we designed two LAF models: LAF low-level model and LAF high-level model, which are respectively used for low-level injection (merging features from adjacent two layers) and high-level injection (merging features from adjacent one layer).

(That is, Low-LAF fuses features from the two adjacent layers.)

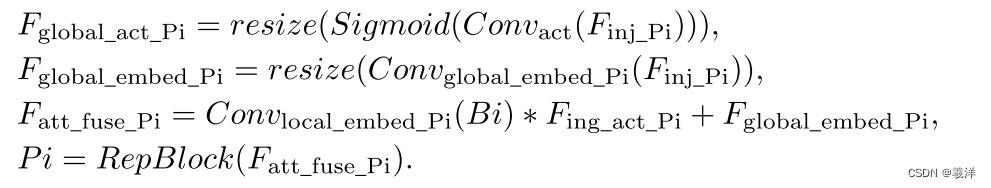
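As a shape-level illustration of "merging features from adjacent two layers", the sketch below decides how each of the three inputs must be resized to match the middle level. It is written in plain Python under stated assumptions: laf_low_plan is a hypothetical helper, the example shapes are made up, and any per-branch convolutions inside the real SimFusion_3in are ignored.

```python
def laf_low_plan(shapes, out_channels):
    """Plan a three-input adjacent-layer fusion on (C, H, W) shapes.

    shapes is ordered fine -> coarse; the middle entry sets the target size.
    Returns the resize op per input and the fused output shape.
    """
    _, target_h, target_w = shapes[1]
    ops = []
    for name, (_, h, _) in zip(("finer", "current", "coarser"), shapes):
        if h > target_h:
            ops.append((name, "downsample"))
        elif h < target_h:
            ops.append((name, "upsample"))
        else:
            ops.append((name, "keep"))
    # After concatenation a fusion conv maps the result to out_channels.
    return ops, (out_channels, target_h, target_w)


# Assumed shapes for [c3, c4, c5_half] with a 640x640 input.
ops, fused = laf_low_plan([(64, 80, 80), (128, 40, 40), (128, 20, 20)],
                          out_channels=128)
print(ops)    # [('finer', 'downsample'), ('current', 'keep'), ('coarser', 'upsample')]
print(fused)  # (128, 40, 40)
```

The same plan applies to the p3 branch, where the inputs are [c2, c3, p4_half] and c3 sets the target size.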

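(2) Inject+RepBlock

Note: in the paper the Inject module is followed by a RepBlock. The two are discussed separately here; see the Inject-LAF figure in the paper for details.

The main job of this module is to fuse the global feature with the local feature.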

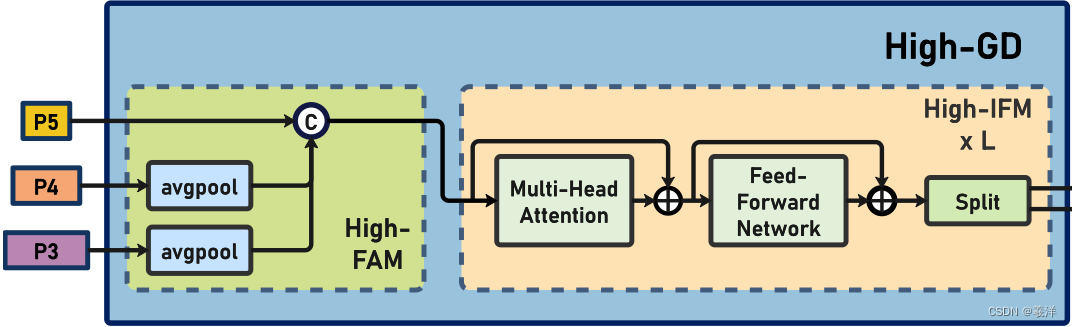
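One way to picture this fusion is as a gate: a branch of the global feature scales the local feature, and a second global branch is added on top. The sketch below works on plain Python lists and is only an illustration of that gating idea. It assumes a hard-sigmoid gate and omits the embedding convolutions and the resizing step; hard_sigmoid and inject are hypothetical names, not the repository's InjectionMultiSum_Auto_pool.

```python
def hard_sigmoid(x):
    """Piecewise-linear approximation of the sigmoid: relu6(x + 3) / 6."""
    return min(max(x + 3.0, 0.0), 6.0) / 6.0


def inject(local_feat, global_gate, global_feat):
    """Fuse per-position values: the gate scales the local feature,
    then the global feature is added."""
    return [l * hard_sigmoid(a) + g
            for l, a, g in zip(local_feat, global_gate, global_feat)]


local_feat = [2.0, 2.0, 2.0]
global_gate = [-3.0, 0.0, 3.0]   # pre-activation gate values (assumed)
global_feat = [0.5, 0.5, 0.5]
print(inject(local_feat, global_gate, global_feat))  # [0.5, 1.5, 2.5]
```

A gate of 0 suppresses the local value entirely, a gate of 1 passes it through unchanged, and the additive global term is present in both cases.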

3. High-GD

High-GD produces the high-level global information.

Corresponding code in __init__:

self.high_FAM = PyramidPoolAgg(stride=extra_cfg.c2t_stride, pool_mode=extra_cfg.pool_mode)

dpr = [x.item() for x in torch.linspace(0, extra_cfg.drop_path_rate, extra_cfg.depths)]

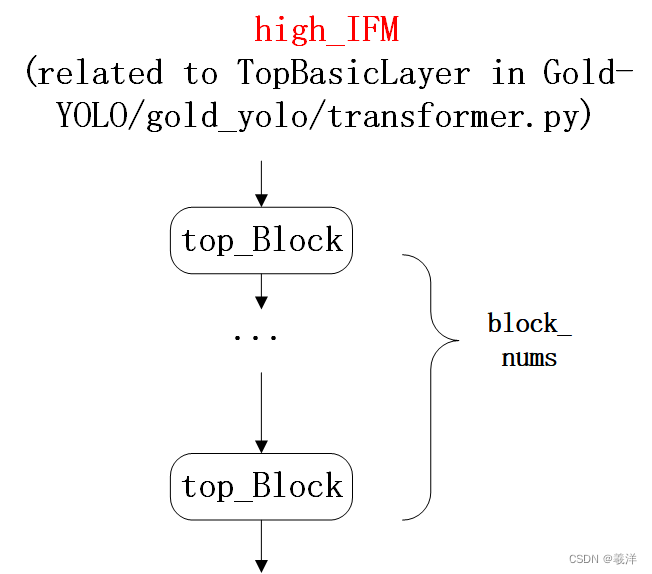

self.high_IFM = TopBasicLayer(

block_num=extra_cfg.depths,

embedding_dim=extra_cfg.embed_dim_n,

key_dim=extra_cfg.key_dim,

num_heads=extra_cfg.num_heads,

mlp_ratio=extra_cfg.mlp_ratios,

attn_ratio=extra_cfg.attn_ratios,

drop=0, attn_drop=0,

drop_path=dpr,

norm_cfg=extra_cfg.norm_cfg

)

self.conv_1x1_n = nn.Conv2d(extra_cfg.embed_dim_n, sum(extra_cfg.trans_channels[2:4]), 1, 1, 0)
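The dpr line in this snippet builds one drop-path rate per block, growing linearly from 0 to extra_cfg.drop_path_rate. A minimal plain-Python sketch of that schedule follows; the linspace helper is a stand-in written for this example, and the values 0.1 and 3 are assumptions rather than the repository's configuration.

```python
def linspace(start, stop, num):
    """Evenly spaced values from start to stop inclusive (like torch.linspace)."""
    if num == 1:
        return [start]
    step = (stop - start) / (num - 1)
    return [start + i * step for i in range(num)]


# Assumed values for illustration: drop_path_rate=0.1, depths=3.
dpr = linspace(0.0, 0.1, 3)
print(dpr)  # [0.0, 0.05, 0.1]
```

Because the list is passed as drop_path=dpr, block i receives rate dpr[i], so the first block never drops its residual path and the last block uses the full configured rate.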

Corresponding code in forward:

# High-GD

## use transformer fusion global info

high_align_feat = self.high_FAM([p3, p4, c5])

high_fuse_feat = self.high_IFM(high_align_feat)

high_fuse_feat = self.conv_1x1_n(high_fuse_feat)

high_global_info = high_fuse_feat.split(self.trans_channels[2:4], dim=1)

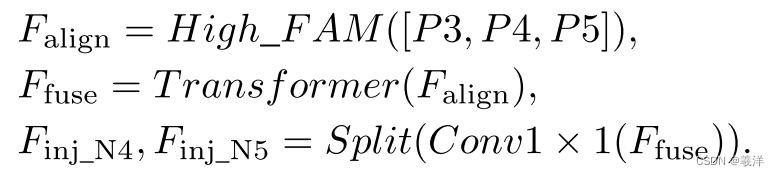

Corresponding part of the paper:

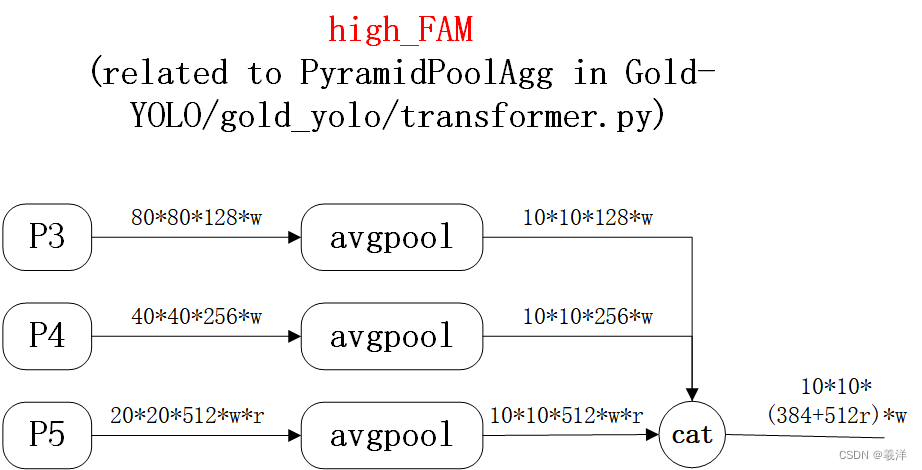

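(1) High_FAM

The high-level FAM aligns features in much the same way as the low-level one.

This figure may raise a question: why does the feature map become 10*10 rather than 20*20?

The source code explains it: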

H = (H - 1) // self.stride + 1

W = (W - 1) // self.stride + 1

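Here H and W are the spatial dimensions of the P5 feature map, and stride is passed in as 2 (extra_cfg.c2t_stride in /Gold-YOLO/configs/gold-yolo-n.py). For a 20*20 map this gives (20 - 1) // 2 + 1 = 10.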
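The expression (H - 1) // stride + 1 is integer ceiling division, which also covers sizes that are not multiples of the stride. A small plain-Python check is sketched below; pooled_size is a hypothetical helper name, and the 20x20 size assumes a 640x640 input with a stride-32 P5 map.

```python
import math


def pooled_size(size, stride):
    """Target side length given by the formula (size - 1) // stride + 1."""
    return (size - 1) // stride + 1


# Assuming a 640x640 input, the stride-32 map is 20x20.
print(pooled_size(20, 2))  # 10
print(pooled_size(21, 2))  # 11

# For positive integers the formula equals ceil(size / stride).
assert all(pooled_size(n, s) == math.ceil(n / s)
           for n in range(1, 200) for s in range(1, 8))
```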

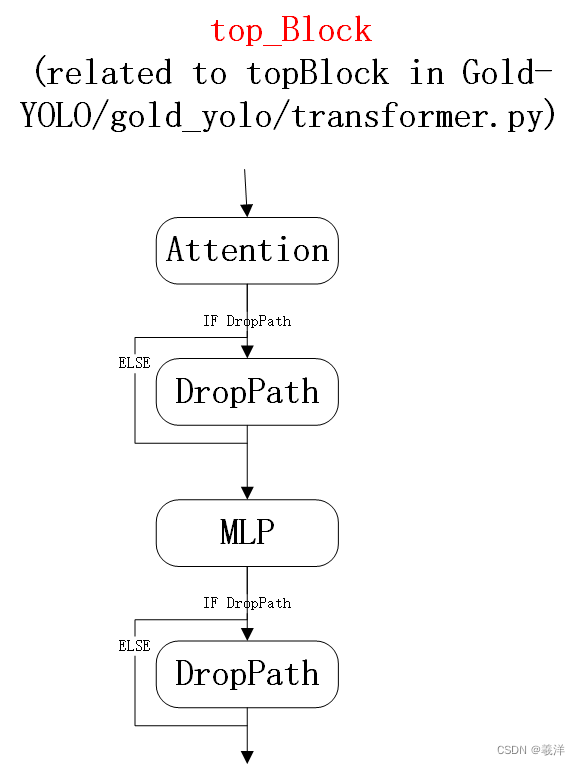

(2) High_IFM

High_IFM = block_nums * (Attention + MLP); in other words, it is a stack of block_nums (Attention + MLP) blocks.

4. High-stage Inject-LAF

Corresponding code in __init__:

self.LAF_n4 = AdvPoolFusion()

self.Inject_n4 = InjectionMultiSum_Auto_pool(channels_list[8], channels_list[8],

norm_cfg=extra_cfg.norm_cfg, activations=nn.ReLU6)

self.Rep_n4 = RepBlock(

in_channels=channels_list[6] + channels_list[7], # 128 + 128

out_channels=channels_list[8], # 256

n=num_repeats[7],

block=block

)

self.LAF_n5 = AdvPoolFusion()

self.Inject_n5 = InjectionMultiSum_Auto_pool(channels_list[10], channels_list[10],

norm_cfg=extra_cfg.norm_cfg, activations=nn.ReLU6)

self.Rep_n5 = RepBlock(

in_channels=channels_list[5] + channels_list[9], # 256 + 256

out_channels=channels_list[10], # 512

n=num_repeats[8],

block=block

)

Corresponding code in forward:

## inject low-level global info to n4

n4_adjacent_info = self.LAF_n4(p3, p4_half)

n4 = self.Inject_n4(n4_adjacent_info, high_global_info[0])

n4 = self.Rep_n4(n4)

## inject low-level global info to n5

n5_adjacent_info = self.LAF_n5(n4, c5_half)

n5 = self.Inject_n5(n5_adjacent_info, high_global_info[1])

n5 = self.Rep_n5(n5)

Corresponding part of the paper:

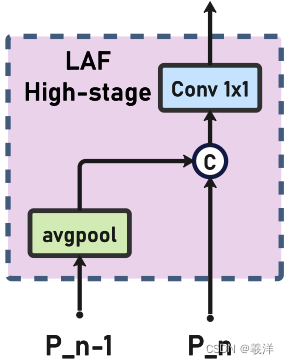

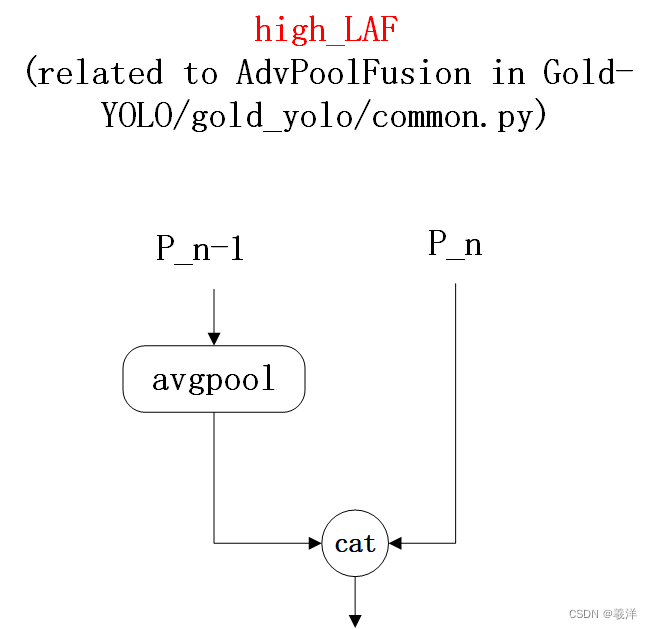

(1) High-LAF

To achieve a balance between speed and accuracy, we designed two LAF models: LAF low-level model and LAF high-level model, which are respectively used for low-level injection (merging features from adjacent two layers) and high-level injection (merging features from adjacent one layer).

(That is, High-LAF fuses features from only one adjacent layer.)
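For the two-input case the shape arithmetic is short enough to check by hand. The plain-Python sketch below assumes that the higher-resolution input is pooled down to the other input's size before channel concatenation; laf_high_shape is a hypothetical helper and the shapes are illustrative.

```python
def laf_high_shape(x1, x2):
    """Two-input fusion on (C, H, W) shapes: pool x1 down to x2's
    spatial size, then concatenate along the channel dimension."""
    (c1, h1, w1), (c2, h2, w2) = x1, x2
    assert h1 >= h2 and w1 >= w2, "x1 is expected to be the higher-resolution map"
    return (c1 + c2, h2, w2)


# Assumed shapes for p3 and p4_half.
print(laf_high_shape((128, 80, 80), (128, 40, 40)))  # (256, 40, 40)
```

The summed channel count lines up with the "# 128 + 128" comment on Rep_n4 in the __init__ snippet above.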

(2) Inject+RepBlock

Same as the low-level Inject+RepBlock.

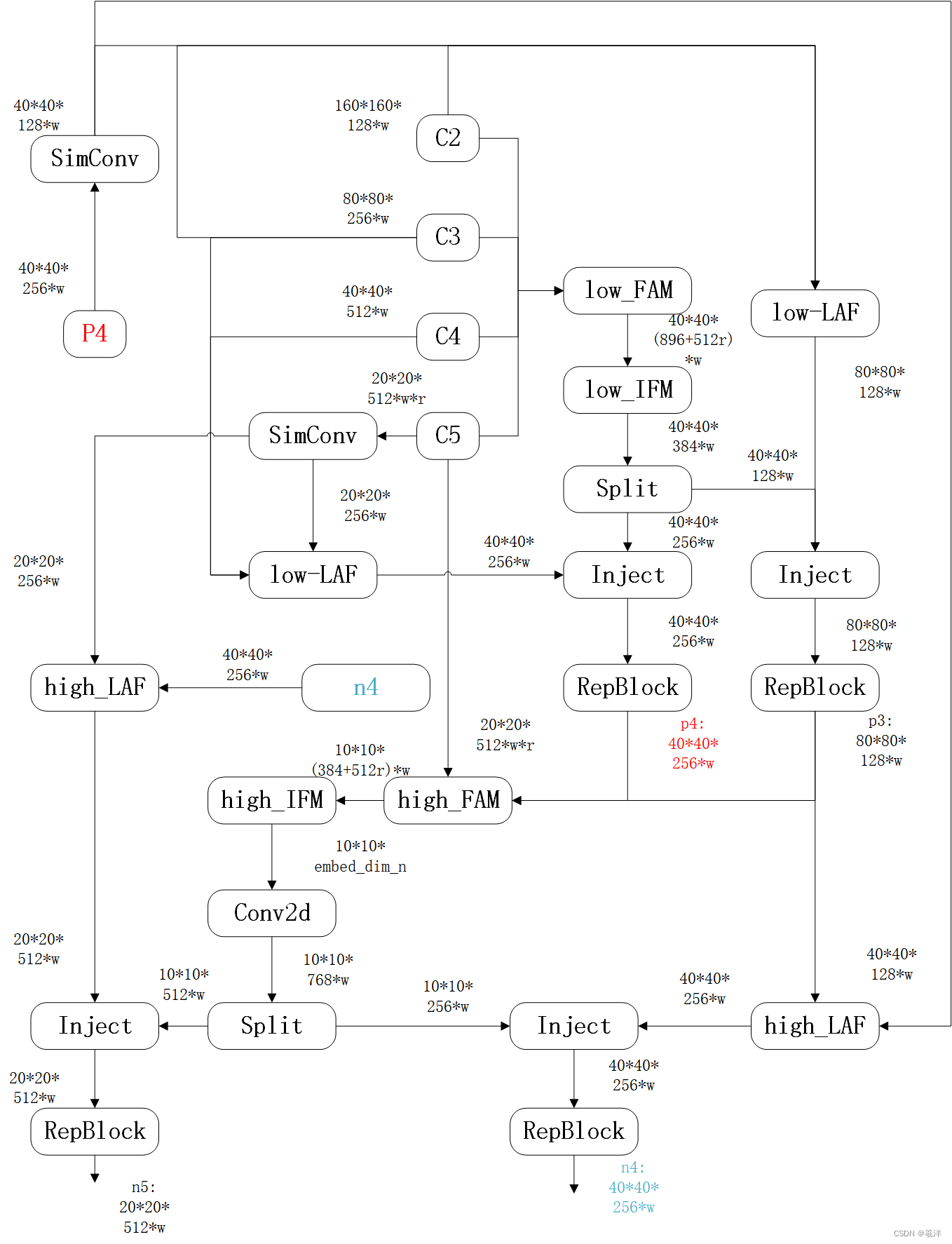

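5. RepGDNeck overview diagram

Questions

If you have any questions, leave a comment or send me a private message, and I will reply as soon as I see it.

My knowledge is limited and mistakes are inevitable, so please point out anything that looks wrong. Thank you.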

Related resources

(1) The figures were drawn in Visio; click here to download.

References

[1] Gold-YOLO: Efficient Object Detector via Gather-and-Distribute Mechanism

[2] MMYOLO: YOLOV8 原理和实现全解析 (A Complete Analysis of YOLOv8 Principles and Implementation)
