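The learning material comes from Bilibili. Typing the code in by hand, bit by bit, was my first hands-on experience training a deep learning model, and it still feels pretty magical!!

The idea is to download the training data (MNIST) and train a model on it. This post mainly records the code and comments I wrote at the time, for later review.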

####################################### net.py ########################################

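import torch
from torch import nn


# Define the network model (a reproduction of LeNet-5)
class MyLeNet5(nn.Module):
    # Initialize the network layers
    def __init__(self):
        super(MyLeNet5, self).__init__()
        # Convolution layer C1: the input is single-channel, so in_channels=1, with 6 output channels.
        # A 5x5 kernel is the conventional LeNet-5 choice; padding=2 keeps the 28x28 size,
        # since (28 + 2*2 - 5) / 1 + 1 = 28.
        self.c1 = nn.Conv2d(in_channels=1, out_channels=6, kernel_size=5, padding=2)
        # Activation function
        self.Sigmoid = nn.Sigmoid()
        # Average pooling layer S2. Note: pooling does not change the number of channels,
        # but it does shrink the spatial size of the feature map (here 28x28 -> 14x14).
        self.s2 = nn.AvgPool2d(kernel_size=2, stride=2)  # 2x2 window, stride 2
        # Convolution layer C3: 14x14 -> 10x10
        self.c3 = nn.Conv2d(in_channels=6, out_channels=16, kernel_size=5)
        # Pooling layer S4: 10x10 -> 5x5
        self.s4 = nn.AvgPool2d(kernel_size=2, stride=2)
        # Convolution layer C5: 5x5 -> 1x1 with 120 channels
        self.c5 = nn.Conv2d(in_channels=16, out_channels=120, kernel_size=5)
        # Flatten layer: (N, 120, 1, 1) -> (N, 120)
        self.flatten = nn.Flatten()
        # Fully connected layer F6
        self.f6 = nn.Linear(120, 84)
        # Output layer: 84 inputs, 10 outputs (one per digit class)
        self.output = nn.Linear(84, 10)

    def forward(self, x):
        # Apply the Sigmoid activation after C1
        x = self.Sigmoid(self.c1(x))
        # Pooling layer
        x = self.s2(x)
        # Same pattern for C3 and S4
        x = self.Sigmoid(self.c3(x))
        x = self.s4(x)
        x = self.c5(x)
        x = self.flatten(x)
        x = self.f6(x)
        # Raw logits; nn.CrossEntropyLoss applies softmax internally during training
        x = self.output(x)
        return x


if __name__ == "__main__":
    # Generate a random input (batch size 1, 1 channel, 28x28) and instantiate the model
    x = torch.rand([1, 1, 28, 28])
    model = MyLeNet5()
    y = model(x)
    print(y.shape)  # torch.Size([1, 10])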
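To see why `padding=2` is needed and why the first fully connected layer takes exactly 120 inputs, here is a small pure-Python sketch (no PyTorch required) that applies the usual output-size formula, out = (in + 2 × padding − kernel) / stride + 1, to every layer of `MyLeNet5` and also counts its learnable parameters. The helpers `conv_out`, `conv_params`, `linear_params` and `trace_lenet5` are hypothetical names made up for this illustration.

```python
# Hand-trace feature-map sizes and parameter counts for MyLeNet5.
# Hypothetical helpers for illustration only; they do not call PyTorch.

def conv_out(size, kernel, stride=1, padding=0):
    """Side length after a square convolution or pooling window."""
    return (size + 2 * padding - kernel) // stride + 1


def conv_params(in_ch, out_ch, kernel):
    """Conv weights (out_ch * in_ch * k * k) plus one bias per output channel."""
    return out_ch * in_ch * kernel * kernel + out_ch


def linear_params(in_features, out_features):
    """Linear weight matrix plus bias vector."""
    return in_features * out_features + out_features


def trace_lenet5(size=28):
    """Return (layer name, channels, side length) after each conv/pool layer."""
    shapes = []
    size = conv_out(size, kernel=5, padding=2)  # C1
    shapes.append(("c1", 6, size))
    size = conv_out(size, kernel=2, stride=2)   # S2
    shapes.append(("s2", 6, size))
    size = conv_out(size, kernel=5)             # C3
    shapes.append(("c3", 16, size))
    size = conv_out(size, kernel=2, stride=2)   # S4
    shapes.append(("s4", 16, size))
    size = conv_out(size, kernel=5)             # C5
    shapes.append(("c5", 120, size))
    return shapes


# Pooling and Sigmoid layers have no parameters, so only conv and linear layers count
total = (conv_params(1, 6, 5) + conv_params(6, 16, 5) + conv_params(16, 120, 5)
         + linear_params(120, 84) + linear_params(84, 10))

for name, channels, side in trace_lenet5():
    print(f"{name}: {channels} x {side} x {side}")
print("total parameters:", total)
```

C5 ends at 1×1, so flattening yields 120 values, matching `nn.Linear(120, 84)`. The parameter total should agree with `sum(p.numel() for p in MyLeNet5().parameters())`.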

######################################## test.py ########################################

import torch

from net import MyLeNet5

from torch.autograd import Variable

from torchvision import datasets, transforms

from torchvision.transforms import ToPILImage

# 将数据转化为tensor格式(数据是矩阵格式,要进行转化为tensor格式)

data_transform = transforms.Compose([

transforms.ToTensor()

])

# 加载训练数据集

train_dataset = datasets.MNIST(root='./data', train=True, transform=data_transform, download=True)

train_dataloader = torch.utils.data.DataLoader(dataset=train_dataset, batch_size=16, shuffle=True)

# 加载测试数据集

test_dataset = datasets.MNIST(root='./data', train=False, transform=data_transform, download=True)

test_dataloader = torch.utils.data.DataLoader(dataset=test_dataset, batch_size=16, shuffle=True)

# 如果有显卡,转到GPU

device = "cuda" if torch.cuda.is_available() else 'cpu'

# 调用net里面定义的模型,将模型数据转到GPU

model = MyLeNet5().to(device)

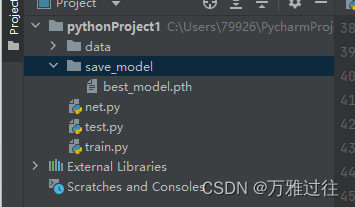

model.load_state_dict(torch.load("C:/Users/79926/PycharmProjects/pythonProject1/save_model/best_model.pth"))

# 获取结果

classes = [

"0",

"1",

"2",

"3",

"4",

"5",

"6",

"7",

"8",

"9",

]

# 把tensor转化为图片,方便可视化

show = ToPILImage()

# 进入验证

for i in range(5):

X, y = test_dataset[i][0], test_dataset[i][1]

show(X).show()

# 这里会显示出5张图片

X = Variable(torch.unsqueeze(X, dim=0).float(), requires_grad=False).to(device)

with torch.no_grad():

pred = model(X)

predicted, actual = classes[torch.argmax(pred[0])], classes[y]

print(f'predicted:"{predicted}",actual:"{actual}"')
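In test.py the predicted digit is just the index of the largest of the 10 output values. Those values are raw logits, so if you also want a confidence score you can pass them through softmax; softmax is monotonic, so it never changes which index wins. A pure-Python sketch of that idea, where the `softmax`/`argmax` helpers are hypothetical and the logits are made-up numbers, not real model output:

```python
import math


def softmax(logits):
    # Subtract the max before exponentiating for numerical stability
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def argmax(values):
    # Index of the largest value
    return max(range(len(values)), key=lambda i: values[i])


classes = [str(d) for d in range(10)]
# Illustrative logits for a single image (made up for this example)
logits = [-1.2, 0.3, 0.1, 2.0, -0.5, 0.4, -2.1, 7.5, 0.2, 1.1]
probs = softmax(logits)

best = argmax(logits)
print(f'predicted:"{classes[best]}", confidence: {probs[best]:.3f}')
```

In the PyTorch script itself, the equivalent would be `torch.softmax(pred, dim=1)` applied to the model output.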

######################################## train.py ########################################

import os

import torch
from torch import nn
from torch.optim import lr_scheduler
from torchvision import datasets, transforms

from net import MyLeNet5

# Convert the data to tensors: ToTensor turns each 28x28 PIL image into a
# 1x28x28 float tensor with values scaled to [0, 1]
data_transform = transforms.Compose([
    transforms.ToTensor()
])

# Load the training dataset
train_dataset = datasets.MNIST(root='./data', train=True, transform=data_transform, download=True)
train_dataloader = torch.utils.data.DataLoader(dataset=train_dataset, batch_size=16, shuffle=True)

# Load the test dataset (used as the validation set below)
test_dataset = datasets.MNIST(root='./data', train=False, transform=data_transform, download=True)
test_dataloader = torch.utils.data.DataLoader(dataset=test_dataset, batch_size=16, shuffle=True)

# Use the GPU if one is available
device = "cuda" if torch.cuda.is_available() else 'cpu'

# Instantiate the model defined in net.py and move it to the GPU (or CPU)
model = MyLeNet5().to(device)

# Loss function: cross-entropy
loss_fn = nn.CrossEntropyLoss()

# Optimizer: stochastic gradient descent with momentum
optimizer = torch.optim.SGD(model.parameters(), lr=1e-3, momentum=0.9)

# Multiply the learning rate by 0.1 every 10 epochs. Named `scheduler` so it does not
# shadow the imported lr_scheduler module; it only takes effect when scheduler.step() is called
scheduler = lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.1)


# Training function: one pass over the training set
def train(dataloader, model, loss_fn, optimizer):
    # val() switches the model to eval mode, so switch back to training mode each epoch
    model.train()
    loss, current, n = 0.0, 0.0, 0
    for batch, (X, y) in enumerate(dataloader):
        # Forward pass
        X, y = X.to(device), y.to(device)
        output = model(X)
        # Compute the loss (used for backpropagation)
        cur_loss = loss_fn(output, y)
        _, pred = torch.max(output, dim=1)
        # Accuracy of this batch (accumulated over the epoch)
        cur_acc = torch.sum(y == pred) / output.shape[0]

        # Backward pass and parameter update
        optimizer.zero_grad()
        cur_loss.backward()
        optimizer.step()

        loss += cur_loss.item()
        current += cur_acc.item()
        n = n + 1
    print(f"train_loss: {loss / n}")
    print(f"train_acc: {current / n}")


# Validation function: evaluate without updating any weights
def val(dataloader, model, loss_fn):
    model.eval()
    loss, current, n = 0.0, 0.0, 0
    with torch.no_grad():
        for batch, (X, y) in enumerate(dataloader):
            # Forward pass
            X, y = X.to(device), y.to(device)
            output = model(X)
            # Compute the loss (for monitoring only; no backpropagation here)
            cur_loss = loss_fn(output, y)
            _, pred = torch.max(output, dim=1)
            cur_acc = torch.sum(y == pred) / output.shape[0]
            loss += cur_loss.item()
            current += cur_acc.item()
            n = n + 1
    print(f"val_loss: {loss / n}")
    print(f"val_acc: {current / n}")
    return current / n


# Start training
epoch = 50
min_acc = 0  # best validation accuracy seen so far
for t in range(epoch):
    print(f'epoch{t + 1}\n--------------')
    train(train_dataloader, model, loss_fn, optimizer)
    a = val(test_dataloader, model, loss_fn)
    # Advance the learning-rate schedule once per epoch
    scheduler.step()
    # Save the weights of the best model so far
    if a > min_acc:
        folder = 'save_model'
        os.makedirs(folder, exist_ok=True)
        min_acc = a
        print('save best model')
        torch.save(model.state_dict(), os.path.join(folder, 'best_model.pth'))
print('Done!')
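`StepLR` only changes the learning rate when its `step()` method is called, typically once per epoch. With `step_size=10` and `gamma=0.1`, the rate in effect during epoch t (counting from 0) is lr₀ × gamma^(t // step_size). A pure-Python sketch of that schedule for this script's 50 epochs; `step_lr` is a hypothetical helper that mirrors the formula rather than calling PyTorch:

```python
def step_lr(base_lr, epoch, step_size=10, gamma=0.1):
    # Learning rate in effect during `epoch` (0-based) under a StepLR-style schedule
    return base_lr * gamma ** (epoch // step_size)


schedule = [step_lr(1e-3, t) for t in range(50)]

# Print the rate at the epochs where it changes (epochs shown 1-based, like train.py)
for t in (0, 9, 10, 19, 20, 49):
    print(f"epoch {t + 1}: lr = {schedule[t]:.0e}")
```

Without any `step()` call, the rate would simply stay at 1e-3 for all 50 epochs. In PyTorch, `scheduler.get_last_lr()` returns the value actually in use.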

P.S. Video by this Bilibili uploader (very well explained):
