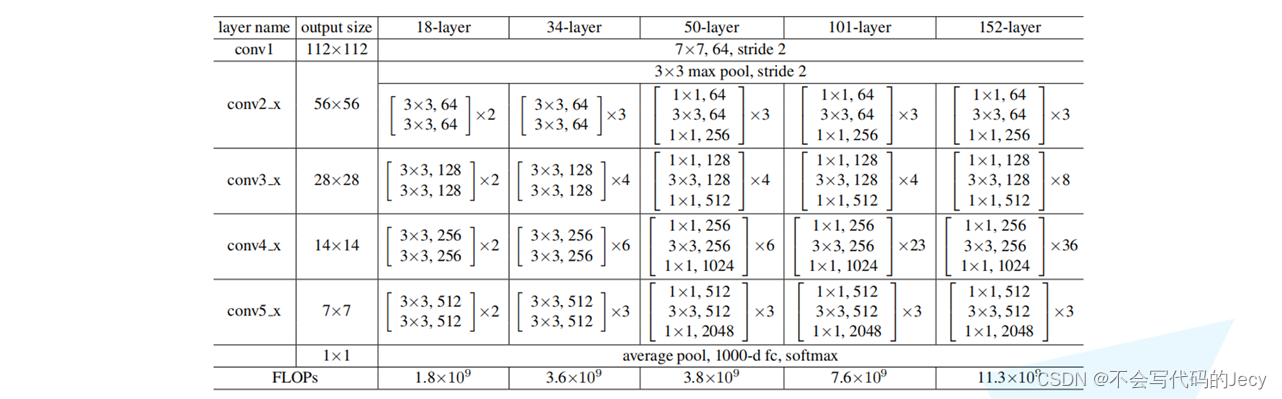

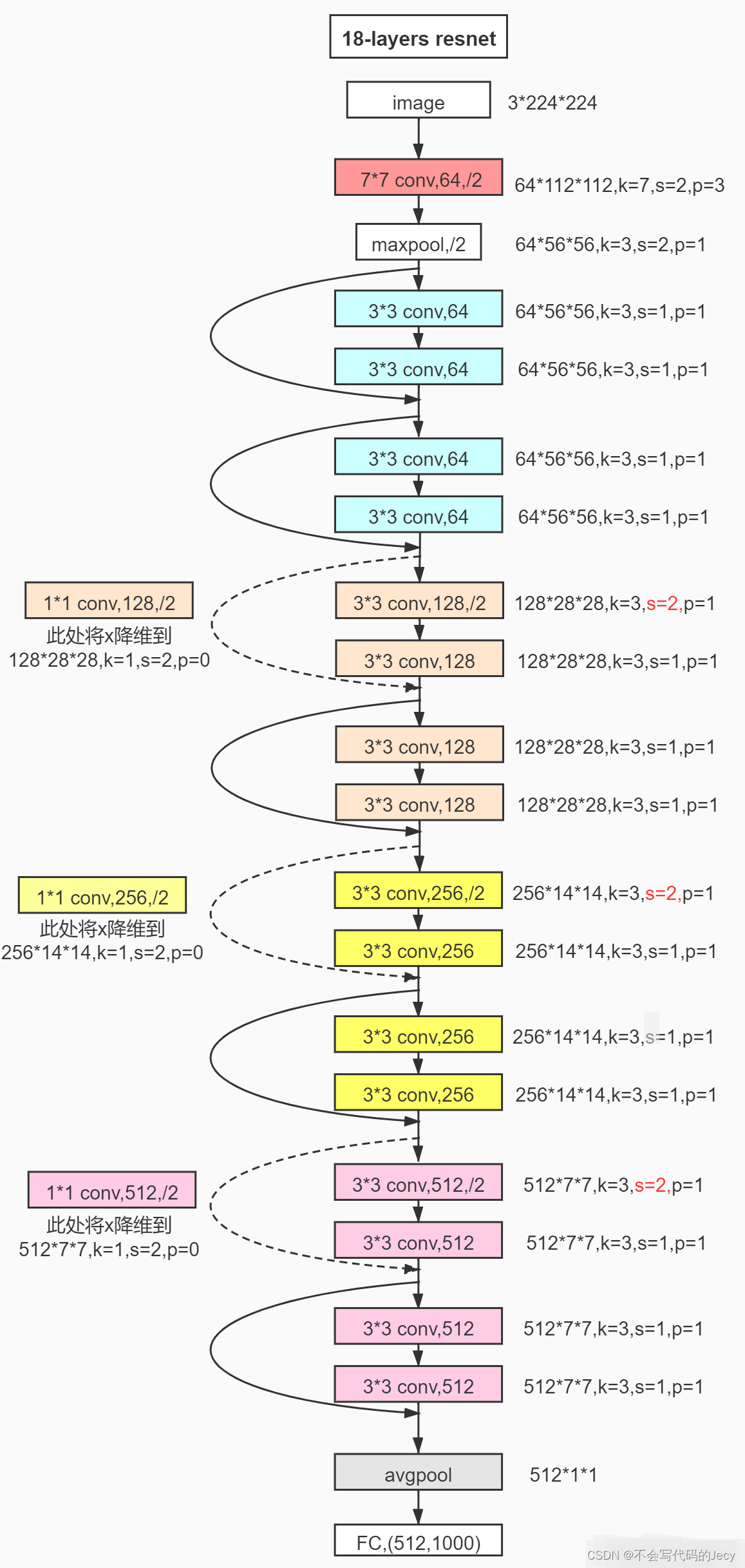

Residual structure

Model

Model code

```python
import torch
from torch import nn


# Residual block used by the 18- and 34-layer networks
class BasicBlock(nn.Module):
    expansion = 1

    def __init__(self, in_channel, out_channel, stride=1, downsample=None):
        super(BasicBlock, self).__init__()
        self.conv1 = nn.Conv2d(in_channels=in_channel, out_channels=out_channel,
                               kernel_size=3, stride=stride, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(out_channel)
        self.relu = nn.ReLU()
        self.conv2 = nn.Conv2d(in_channels=out_channel, out_channels=out_channel,
                               kernel_size=3, stride=1, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(out_channel)
        self.downsample = downsample

    def forward(self, x):
        identity = x
        # Project the shortcut when the shape changes (stride or channel count)
        if self.downsample is not None:
            identity = self.downsample(x)

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)  # second BN is applied before the shortcut is added

        out += identity
        out = self.relu(out)
        return out
```

```python
# Residual block used by networks with 50 or more layers
class Bottleneck(nn.Module):
    expansion = 4

    # out_channel is the number of output channels of the first conv layer;
    # the block as a whole outputs out_channel * expansion channels
    def __init__(self, in_channel, out_channel, stride=1, downsample=None):
        super(Bottleneck, self).__init__()
        self.conv1 = nn.Conv2d(in_channels=in_channel, out_channels=out_channel,
                               kernel_size=1, stride=1, bias=False)
        self.bn1 = nn.BatchNorm2d(out_channel)
        self.conv2 = nn.Conv2d(in_channels=out_channel, out_channels=out_channel,
                               kernel_size=3, stride=stride, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(out_channel)
        self.conv3 = nn.Conv2d(in_channels=out_channel, out_channels=out_channel * self.expansion,
                               kernel_size=1, stride=1, bias=False)
        self.bn3 = nn.BatchNorm2d(out_channel * self.expansion)
        self.relu = nn.ReLU(inplace=True)
        self.downsample = downsample

    def forward(self, x):
        identity = x
        if self.downsample is not None:
            identity = self.downsample(x)

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)
        out = self.relu(out)

        out = self.conv3(out)
        out = self.bn3(out)

        out += identity
        out = self.relu(out)
        return out
```
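To see why the deeper variants use a bottleneck block, it can help to count convolution weights. The snippet below is only a rough sketch and not part of the original code: `conv_params` is a hypothetical helper, and the 256-channel input with a 64-channel middle is an assumed example (64 × `expansion` = 256, as in the first bottleneck stage).

```python
def conv_params(in_ch, out_ch, kernel_size):
    """Number of weights in a bias-free 2D convolution."""
    return in_ch * out_ch * kernel_size * kernel_size


# Two 3x3 convolutions applied directly at 256 channels (BasicBlock-style)
basic_style = 2 * conv_params(256, 256, 3)

# Bottleneck: 1x1 reduce 256 -> 64, 3x3 at 64 channels, 1x1 expand 64 -> 256
bottleneck = (conv_params(256, 64, 1)
              + conv_params(64, 64, 3)
              + conv_params(64, 256, 1))

print(basic_style)  # 1179648
print(bottleneck)   # 69632
```

Under these assumptions the bottleneck needs roughly 17 times fewer convolution weights for the same input and output width, which is what makes 50+ layer networks affordable.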

```python
class ResNet(nn.Module):

    def __init__(self, block, blocks_num, num_classes=1000, include_top=True):
        super(ResNet, self).__init__()
        self.include_top = include_top
        self.in_channel = 64

        # Stem: 7x7 conv followed by 3x3 max pooling
        self.conv1 = nn.Conv2d(3, self.in_channel, kernel_size=7, stride=2, padding=3, bias=False)
        self.bn1 = nn.BatchNorm2d(self.in_channel)
        self.relu = nn.ReLU(inplace=True)
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)

        # Four residual stages
        self.layer1 = self._make_layer(block, 64, blocks_num[0])
        self.layer2 = self._make_layer(block, 128, blocks_num[1], stride=2)
        self.layer3 = self._make_layer(block, 256, blocks_num[2], stride=2)
        self.layer4 = self._make_layer(block, 512, blocks_num[3], stride=2)

        if self.include_top:
            self.avgpool = nn.AdaptiveAvgPool2d((1, 1))  # output size = (1, 1)
            self.fc = nn.Linear(512 * block.expansion, num_classes)

        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')

    def _make_layer(self, block, channel, block_num, stride=1):
        downsample = None
        # The shortcut needs a 1x1 projection whenever the first block of a
        # stage changes the spatial size or the channel count
        if stride != 1 or self.in_channel != channel * block.expansion:
            downsample = nn.Sequential(
                nn.Conv2d(self.in_channel, channel * block.expansion,
                          kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(channel * block.expansion)
            )

        layers = []
        layers.append(block(self.in_channel, channel, downsample=downsample, stride=stride))
        self.in_channel = channel * block.expansion

        for _ in range(1, block_num):
            layers.append(block(self.in_channel, channel))

        return nn.Sequential(*layers)

    def forward(self, x):
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)
        x = self.maxpool(x)

        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)

        if self.include_top:
            x = self.avgpool(x)
            x = torch.flatten(x, 1)
            x = self.fc(x)

        return x
```

```python
def resnet34(num_classes=1000, include_top=True):
    return ResNet(BasicBlock, [3, 4, 6, 3], num_classes=num_classes, include_top=include_top)


def resnet101(num_classes=1000, include_top=True):
    return ResNet(Bottleneck, [3, 4, 23, 3], num_classes=num_classes, include_top=include_top)
```
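The block lists `[3,4,6,3]` and `[3,4,23,3]` explain the names `resnet34` and `resnet101`, and the strides explain the feature-map sizes. The following is a small plain-Python sketch of that arithmetic, assuming a 224×224 input (the crop size used in the training script). `count_layers` and `conv_out` are hypothetical helpers, and the count follows the usual convention of ignoring the 1x1 shortcut projections.

```python
def count_layers(convs_per_block, blocks_num):
    """Stem conv + convs inside all residual blocks + final fully connected layer."""
    return 1 + convs_per_block * sum(blocks_num) + 1


def conv_out(size, kernel, stride, padding):
    """Output side length of a conv or pooling layer (floor division)."""
    return (size + 2 * padding - kernel) // stride + 1


resnet34_layers = count_layers(2, [3, 4, 6, 3])     # BasicBlock has 2 convs
resnet101_layers = count_layers(3, [3, 4, 23, 3])   # Bottleneck has 3 convs
print(resnet34_layers, resnet101_layers)  # 34 101

size = 224                        # assumed input side length
size = conv_out(size, 7, 2, 3)    # conv1: 7x7, stride 2, padding 3
size = conv_out(size, 3, 2, 1)    # maxpool: 3x3, stride 2, padding 1
stage_sizes = [size]              # layer1 keeps stride 1
for _ in range(3):                # layer2..layer4 start with a stride-2 3x3 conv
    size = conv_out(size, 3, 2, 1)
    stage_sizes.append(size)
print(stage_sizes)  # [56, 28, 14, 7]
```

The final 7×7 map is what `AdaptiveAvgPool2d((1,1))` reduces to a single vector before the fully connected layer.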

Training code (resnet34)

```python
import json

import torch
from torch import nn
import torchvision
from torch.utils.data import DataLoader

from ResNetModule import resnet34

# Fall back to the CPU when no GPU is available
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
print(device)

data_transform = {
    "train": torchvision.transforms.Compose([
        torchvision.transforms.RandomResizedCrop(224),
        torchvision.transforms.RandomHorizontalFlip(),
        torchvision.transforms.ToTensor(),
        torchvision.transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
    ]),
    "val": torchvision.transforms.Compose([
        torchvision.transforms.Resize(256),
        torchvision.transforms.CenterCrop(224),
        torchvision.transforms.ToTensor(),
        torchvision.transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
    ])
}
```

```python
# Raw string so the backslashes in the Windows path are not treated as escapes
image_path = r"D:\pycharm project\噼里啪啦pytroch\flower_data"

# Load the training dataset
train_dataset = torchvision.datasets.ImageFolder(image_path + "/train", transform=data_transform["train"])
train_num = len(train_dataset)

# Save the index -> class-name mapping for use at prediction time
flower_list = train_dataset.class_to_idx
cla_dict = dict((val, key) for key, val in flower_list.items())
json_str = json.dumps(cla_dict, indent=4)
with open('class_indices.json', 'w') as json_file:
    json_file.write(json_str)

batch_size = 16
train_loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True, num_workers=0, drop_last=False)

# Load the validation dataset (no shuffling needed for evaluation)
validate_dataset = torchvision.datasets.ImageFolder(root=image_path + "/val", transform=data_transform["val"])
val_num = len(validate_dataset)
validate_loader = DataLoader(validate_dataset, batch_size=batch_size, shuffle=False, num_workers=0, drop_last=False)
```

```python
# Instantiate the model
net = resnet34()

# Load pretrained weights (transfer learning)
model_weight_path = r"D:\pycharm project\噼里啪啦pytroch\resnet34-pre.pth"
missing_keys, unexpected_keys = net.load_state_dict(
    torch.load(model_weight_path, map_location="cpu"), strict=False)

# Replace the 1000-class head with a 5-class head
inchannel = net.fc.in_features
net.fc = nn.Linear(inchannel, 5)
net.to(device)

loss_function = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(net.parameters(), lr=0.0001)

best_acc = 0.0
# Path where the best model is saved
save_path = 'resnet34.pth'
```

```python
for epoch in range(3):
    # train
    net.train()
    running_loss = 0.0
    for data in train_loader:
        images, labels = data
        optimizer.zero_grad()
        outputs = net(images.to(device))
        loss = loss_function(outputs, labels.to(device))
        loss.backward()
        optimizer.step()
        running_loss += loss.item()

    # validate
    net.eval()
    acc = 0.0
    with torch.no_grad():
        for data_test in validate_loader:
            test_images, test_labels = data_test
            outputs = net(test_images.to(device))
            predict = torch.max(outputs, dim=1)[1]
            acc += (predict == test_labels.to(device)).sum().item()

    accuracy_test = acc / val_num
    # Keep only the weights with the best validation accuracy so far
    if accuracy_test > best_acc:
        best_acc = accuracy_test
        torch.save(net.state_dict(), save_path)

    print("epoch={0} accuracy={1}, training loss={2}".format(epoch + 1, accuracy_test, running_loss))

print("Training finished")
```
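The training script writes the index-to-class mapping to `class_indices.json`. One detail worth knowing when reading it back: JSON object keys are always strings, so a predicted integer index has to be converted with `str()` before the lookup. The sketch below illustrates this in memory. The class names are made up for the example, since the actual folder names in `flower_data` are not shown here.

```python
import json

# Hypothetical mapping, shaped like ImageFolder's class_to_idx attribute
class_to_idx = {"daisy": 0, "roses": 1, "tulips": 2}

# Same inversion as in the training script: index -> class name
cla_dict = {idx: name for name, idx in class_to_idx.items()}
json_str = json.dumps(cla_dict, indent=4)

# Reading it back: the integer keys have become strings
loaded = json.loads(json_str)
predicted_index = 2  # e.g. the argmax of the network output
label = loaded[str(predicted_index)]
print(label)  # tulips
```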

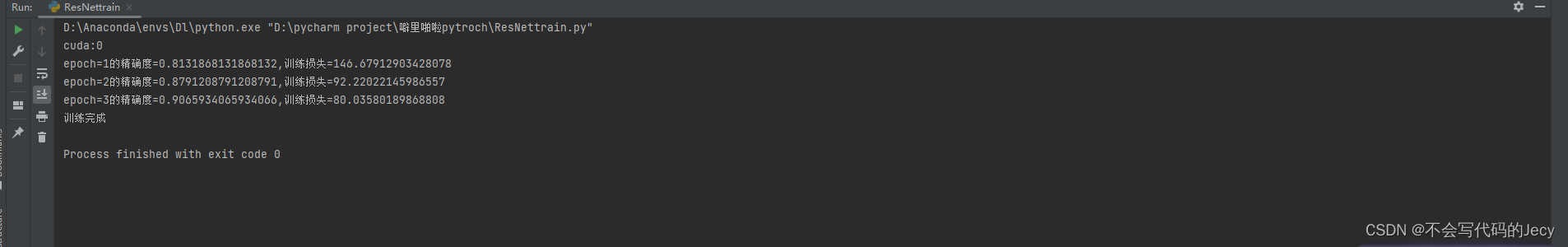

Run results

