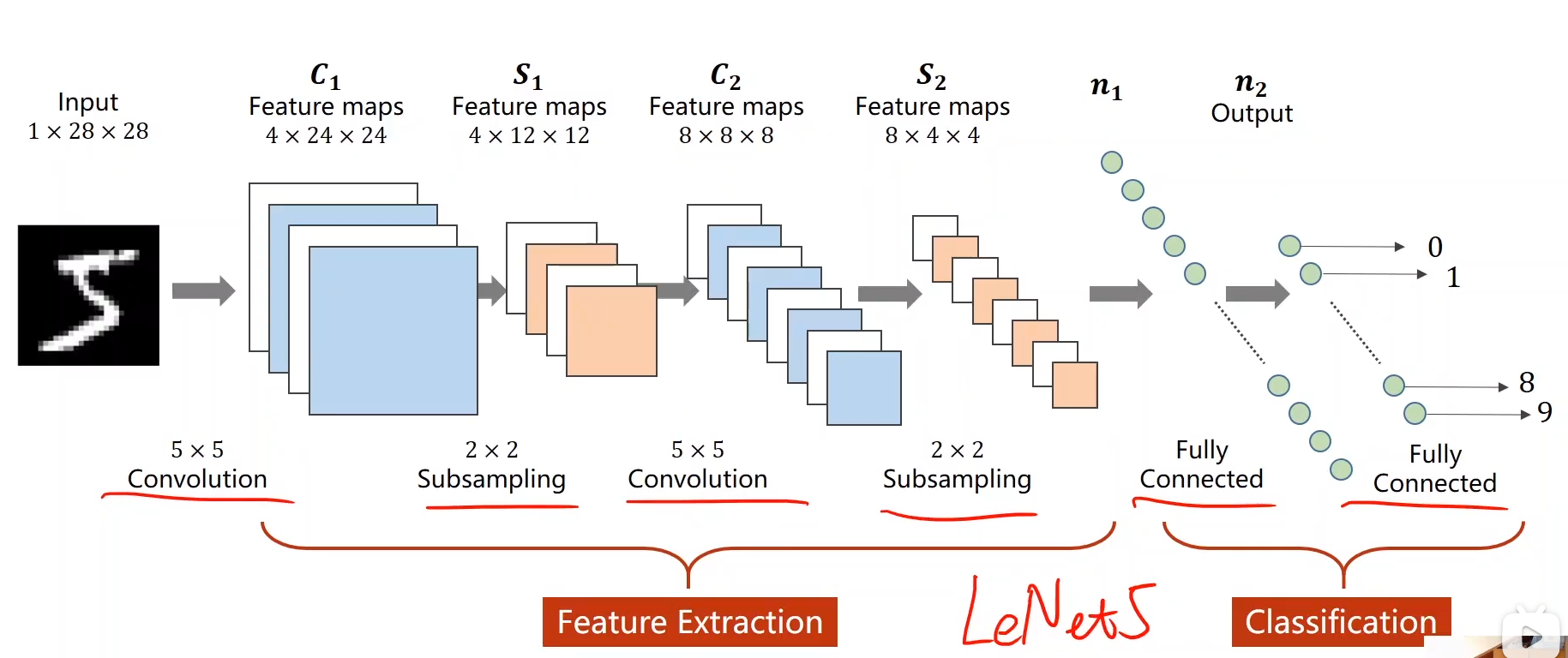

基本的CNN 串行网络结构

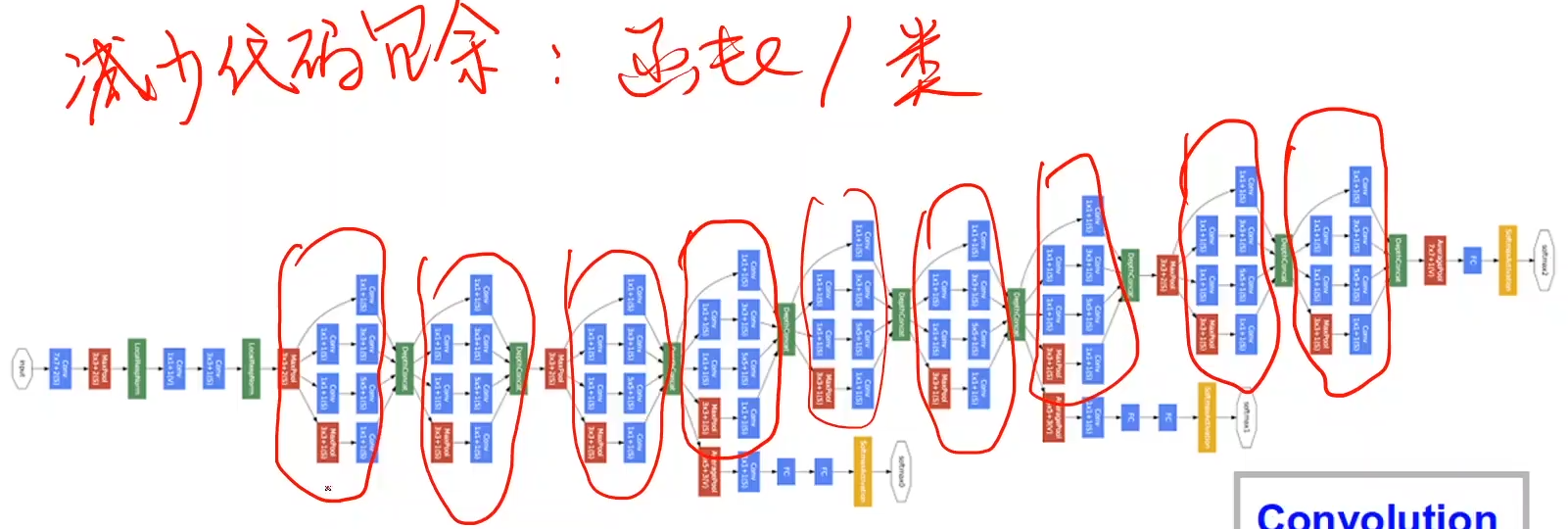

更为复杂的 CNN 网络结构-- GoogleNet

如何实现?

首先找到相同的网络结构,将其封装为一个类。

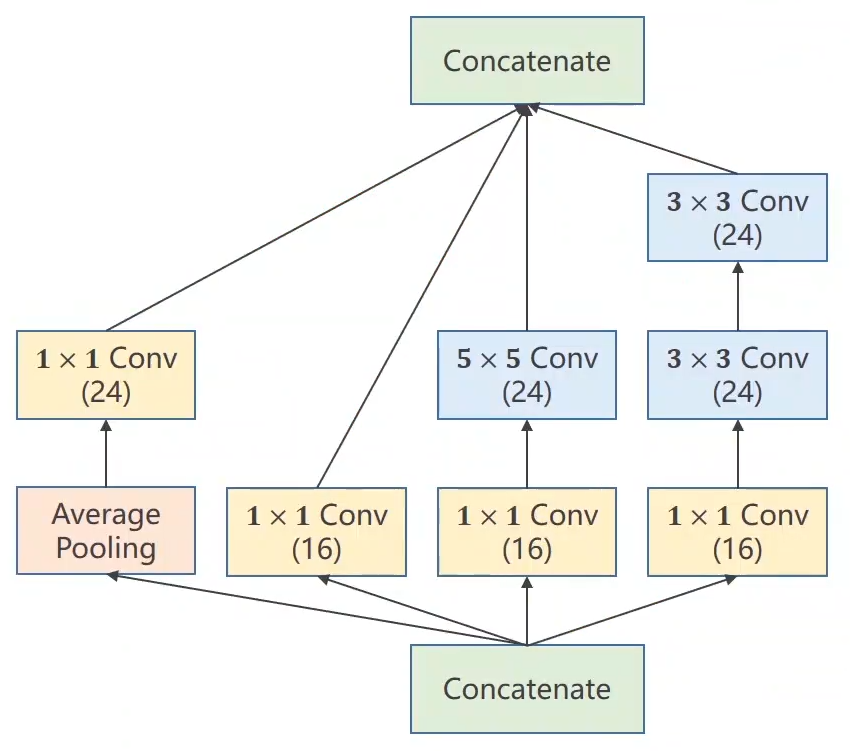

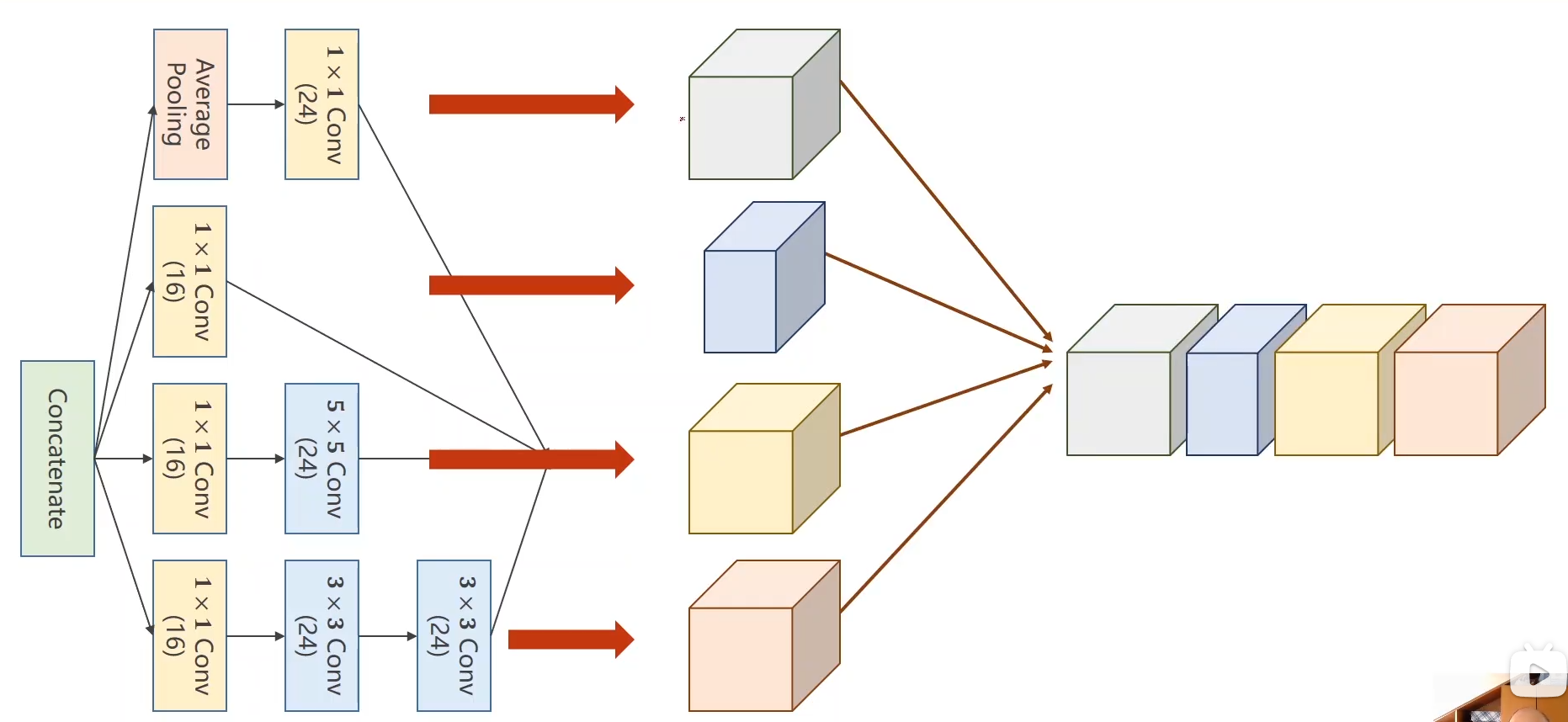

上述红圈部分的模型称之为 Inception Module 其结构如下

其思想是:我们不知道卷积核的大小究竟为3 * 3还是5 * 5 更好,索性都用上,然后在梯度更新中,比较好的卷积核尺寸会被给予更高的权重;

这四个路径出来的张量 w 和 h 必须保持一致,通道可以不同

1 * 1 的卷积核可以改变 输入的通道数,并且减少运算量

concatenate 表示张量拼接

实现块

class InceptionA(torch.nn.Module):

def __init__(self,in_channels):

super(InceptionA, self).__init__()

self.branch1x1 = torch.nn.Conv2d(in_channels,16,kernel_size=1)

self.branch5x5_1 = torch.nn.Conv2d(in_channels,16,kernel_size=1)

self.branch5x5_2 = torch.nn.Conv2d(16,24,kernel_size=5,padding=2)

self.branch3x3_1 = torch.nn.Conv2d(in_channels,16,kernel_size=1)

self.branch3x3_2 = torch.nn.Conv2d(16,24,kernel_size=3,padding=1)

self.branch3x3_3 = torch.nn.Conv2d(24,24,kernel_size=3,padding=1)

self.branch_pool = torch.nn.Conv2d(in_channels,24,kernel_size=1)

def forward(self,x):

branch1x1 = self.branch1x1(x)

branch5x5 = self.branch5x5_1(x)

branch5x5 = self.branch5x5_2(branch5x5)

branch3x3 = self.branch3x3_1(x)

branch3x3 = self.branch3x3_2(branch3x3)

branch3x3 = self.branch3x3_3(branch3x3)

branch_pool = F.avg_pool2d(x,kernel_size = 3,stride = 1,padding=1)

branch_pool = self.branch_pool(branch_pool)

outputs = [branch1x1,branch5x5,branch3x3,branch_pool]

# 张量为 (b,c,w,h) ,下标从0开始,故dim=1 按照通道维度来拼接

return torch.cat(outputs,dim=1)

所有代码

import numpy as np

import torch.nn.functional as F

import torch.optim as optim

from torchvision import datasets

from torchvision import transforms

from torch.utils.data import DataLoader

import torch

import matplotlib.pyplot as plt

transform = transforms.Compose([

transforms.ToTensor(), #将图像转为tensor向量即每一行叠加起来,会丧失空间结构,且取值为0-1

transforms.Normalize((0.1307,),(0.3081,)) #第一个是均值,第二个是标准差,需要提前算出,这两个参数都是mnist的

])

batch_size = 64

train_dataset = datasets.MNIST(root='../dataset/mnist',

train = True,

download=False,

transform=transform

)

train_loader = DataLoader(train_dataset,

shuffle=True,

batch_size=batch_size)

test_dataset = datasets.MNIST(root='../dataset/mnist',

train = False,

download=False,

transform=transform

)

test_loader = DataLoader(test_dataset,

shuffle=False,

batch_size=batch_size)

class InceptionA(torch.nn.Module):

#经过InceptionA ,数据通道变为 88 ,输入的宽高与输出的宽高保持一致

def __init__(self,in_channels):

super(InceptionA, self).__init__()

self.branch1x1 = torch.nn.Conv2d(in_channels,16,kernel_size=1)

self.branch5x5_1 = torch.nn.Conv2d(in_channels,16,kernel_size=1)

self.branch5x5_2 = torch.nn.Conv2d(16,24,kernel_size=5,padding=2)

self.branch3x3_1 = torch.nn.Conv2d(in_channels,16,kernel_size=1)

self.branch3x3_2 = torch.nn.Conv2d(16,24,kernel_size=3,padding=1)

self.branch3x3_3 = torch.nn.Conv2d(24,24,kernel_size=3,padding=1)

self.branch_pool = torch.nn.Conv2d(in_channels,24,kernel_size=1)

def forward(self,x):

branch1x1 = self.branch1x1(x)

branch5x5 = self.branch5x5_1(x)

branch5x5 = self.branch5x5_2(branch5x5)

branch3x3 = self.branch3x3_1(x)

branch3x3 = self.branch3x3_2(branch3x3)

branch3x3 = self.branch3x3_3(branch3x3)

branch_pool = F.avg_pool2d(x,kernel_size = 3,stride = 1,padding=1)

branch_pool = self.branch_pool(branch_pool)

outputs = [branch1x1,branch5x5,branch3x3,branch_pool]

# 张量为 (b,c,w,h) ,下标从0开始,故dim=1 按照通道维度来拼接

return torch.cat(outputs,dim=1)

class Net(torch.nn.Module):

def __init__(self):

super(Net, self).__init__()

self.conv1 = torch.nn.Conv2d(1,10,kernel_size=5)

self.conv2 = torch.nn.Conv2d(88,20,kernel_size=5)

self.incep1 = InceptionA(in_channels=10)

self.incep2 = InceptionA(in_channels=20)

self.mp = torch.nn.MaxPool2d(2)

self.fc = torch.nn.Linear(1408,10)

def forward(self,x):

in_size = x.size(0)

x = F.relu(self.mp(self.conv1(x)))

x = self.incep1(x)

x = F.relu(self.mp(self.conv2(x)))

x = self.incep2(x)

x = x.view(in_size,-1)

x = self.fc(x)

return x

model = Net()

criterion = torch.nn.CrossEntropyLoss()

optimizer = optim.SGD(model.parameters(),lr=0.01,momentum=0.5)

#使用 GPU 加速

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

model.to(device)

def train(epoch):

running_loss = 0.0

# batch_idx 的范围是从 0-937 共938个 因为 batch为64,共60000个数据,所以输入矩阵为 (64*N)

for batch_idx,data in enumerate(train_loader,0):

x ,y = data

x,y = x.to(device),y.to(device) #装入GPU

optimizer.zero_grad()

y_pred = model(x)

loss = criterion(y_pred,y) #计算交叉熵损失

loss.backward()

optimizer.step()

running_loss += loss.item()

if batch_idx%300 == 299:

print("[%d,%5d] loss:%.3f"%(epoch+1,batch_idx+1,running_loss/300))

running_loss = 0.0

def test():

correct = 0

total = 0

with torch.no_grad():

for data in test_loader:

x,y = data

x,y = x.to(device),y.to(device)

y_pred = model(x)

_,predicted = torch.max(y_pred.data,dim=1)

total += y.size(0)

correct += (predicted==y).sum().item()

print('accuracy on test set:%d%% [%d/%d]'%(100*correct/total,correct,total))

accuracy_list.append(100*correct/total)

if __name__ == '__main__':

accuracy_list = []

for epoch in range(10):

train(epoch)

test()

plt.plot(np.linspace(1,10,10),accuracy_list)

plt.xlabel('epoch')

plt.ylabel('accuracy')

plt.show()

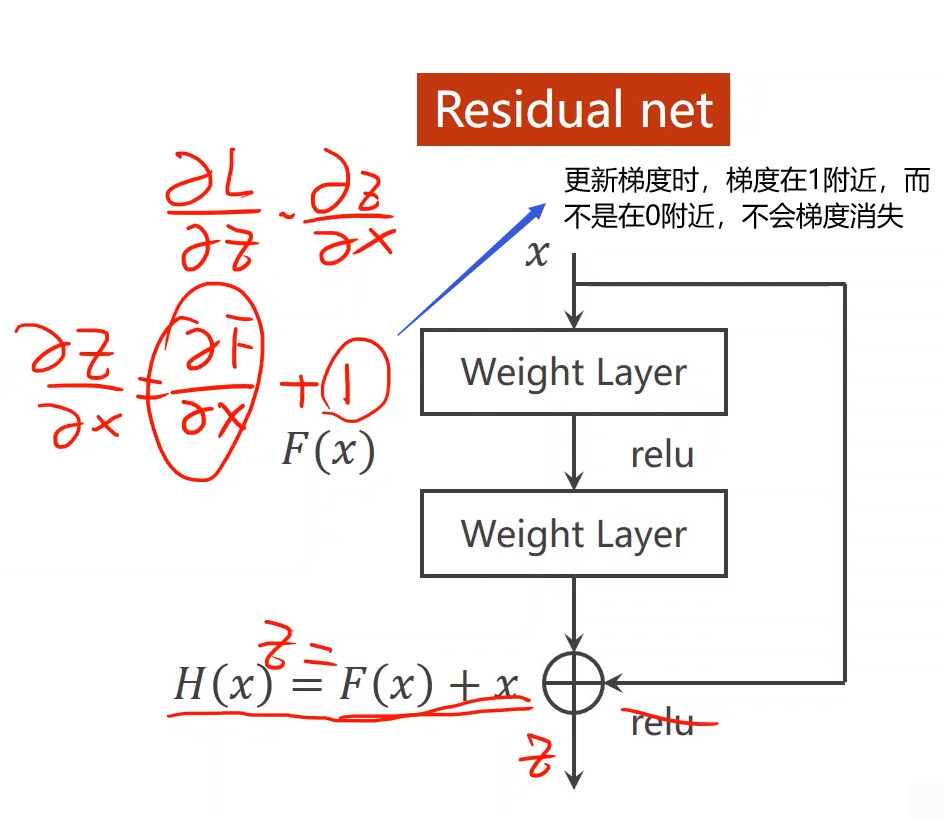

梯度消失

网络变得复杂但是性能却降低了。原因:假如更新到后面,梯度都小于1,那么根据链式法则,把这些梯度小于1的值乘起来会趋于0,w=w-ag,权重就得不到更新。

如何解决?

增加一个跳连接——残差网络

F(x) + x , 表示张量相加,通道,宽度,高度都得一样

#残差网络

class ResidualBlock(torch.nn.Module):

def __init__(self,channels):

super(ResidualBlock, self).__init__()

self.channels = channels

self.conv1 = torch.nn.Conv2d(channels,channels,kernel_size=3,padding=1)

self.conv2 = torch.nn.Conv2d(channels,channels,kernel_size=3,padding=1)

def forward(self,x):

y = F.relu(self.conv1(x))

y = self.conv2(y)

y = F.relu(x+y)

return y

网络结构

class Net2(torch.nn.Module):

def __init__(self):

super(Net2, self).__init__()

self.conv1 = torch.nn.Conv2d(1,16,kernel_size=5)

self.conv2 = torch.nn.Conv2d(16,32,kernel_size=5)

self.mp = torch.nn.MaxPool2d(kernel_size=2)

self.rblock1 = ResidualBlock(16)

self.rblock2 = ResidualBlock(32)

self.fc = torch.nn.Linear(512,10)

def forward(self,x):

in_size = x.size(0)

x = self.mp(F.relu(self.conv1(x)))

x = self.rblock1(x)

x = self.mp(F.relu(self.conv2(x)))

x = self.rblock2(x)

x = x.view(in_size,-1)

x = self.fc(x)

return x

2834

2834

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?