Environment: Anaconda, Jupyter Notebook, Spyder

Package used: TensorFlow

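I. Convolutional Neural Networks (CNN)

Convolution

Build a convolution kernel (typically c×1×1 or c×3×3, where c is the number of channels in the input image) and slide it across the image. At each position the kernel computes a weighted sum of the pixels it covers, and the kernel weights are what training adjusts.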
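To make the sliding-window idea concrete, here is a minimal plain-Python sketch of a single-channel convolution with stride 1 and no padding. The function name `conv2d_single_channel` and the sample numbers are made up for illustration; this is not how TensorFlow implements `Conv2D`, only the same arithmetic on a tiny input.

```python
def conv2d_single_channel(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding) and return the weighted sums."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the patch whose top-left corner is (i, j)
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# Hypothetical 4x4 image and a 3x3 kernel that responds to left-to-right changes
image = [
    [1, 2, 3, 0],
    [4, 5, 6, 1],
    [7, 8, 9, 2],
    [0, 1, 2, 3],
]
kernel = [
    [1, 0, -1],
    [1, 0, -1],
    [1, 0, -1],
]

feature_map = conv2d_single_channel(image, kernel)
print(feature_map)  # [[-6, 12], [-6, 8]]
```

A 4×4 input with a 3×3 kernel gives a 2×2 output, which is why the layers in the experiment below use padding='same' to keep the spatial size unchanged.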

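Pooling

Main purposes: reducing feature dimensionality, helping to prevent overfitting, and downsampling.

Max pooling: takes the maximum value of each region as the value of the corresponding output cell.

Avg pooling: takes the average value of each region as the value of the corresponding output cell.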
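The two pooling variants can be sketched in a few lines of plain Python. The helper `pool2d` and the sample feature map are hypothetical; the sketch assumes a 2×2 window with stride 2 and no padding.

```python
def pool2d(feature_map, size=2, stride=2, mode="max"):
    """Pool non-padded windows of `size` x `size`, moving `stride` cells at a time."""
    pooled = []
    for i in range(0, len(feature_map) - size + 1, stride):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, stride):
            window = [feature_map[i + di][j + dj]
                      for di in range(size) for dj in range(size)]
            if mode == "max":
                row.append(max(window))
            else:
                row.append(sum(window) / len(window))
        pooled.append(row)
    return pooled


# Hypothetical 4x4 feature map
fm = [
    [1, 3, 2, 4],
    [5, 6, 1, 2],
    [7, 2, 9, 0],
    [1, 4, 3, 8],
]

max_pooled = pool2d(fm, mode="max")
avg_pooled = pool2d(fm, mode="avg")
print(max_pooled)  # [[6, 4], [7, 9]]
print(avg_pooled)  # [[3.75, 2.25], [3.5, 5.0]]
```

Either way the 4×4 map shrinks to 2×2, which is the downsampling effect described above.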

II. VGG13 Experiment

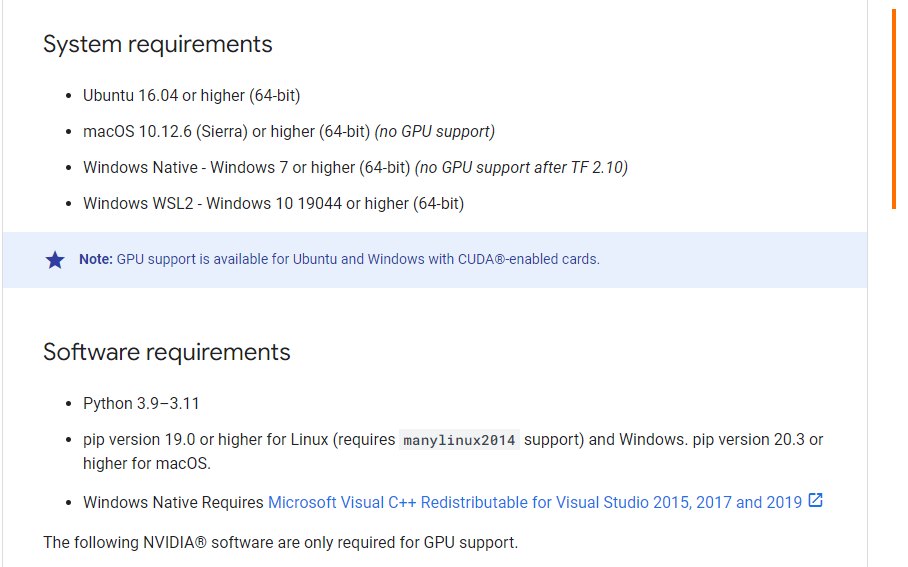

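Installing TensorFlow

Install the TensorFlow package before running the experiment; see the official documentation for the installation requirements. I used Python 3.9 + TensorFlow 2.4.1 + NumPy 1.19.5.

Environment setup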

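import os

# Hide TensorFlow's C++ INFO and WARNING logs.
# This must be set before tensorflow is imported to take effect.
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

import tensorflow as tf
from tensorflow.keras import layers, optimizers, datasets, Sequential

# Fix the random seed so runs are repeatable
tf.random.set_seed(2345)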

Building the convolutional layers

conv_layers = [
    # unit 1
    # 64 output channels, 2×2 kernel, zero padding at the borders ('same'), ReLU activation
    # (the original VGG architecture uses 3×3 kernels; this experiment uses 2×2)
    layers.Conv2D(64, kernel_size=[2, 2], padding='same', activation=tf.nn.relu),
    layers.Conv2D(64, kernel_size=[2, 2], padding='same', activation=tf.nn.relu),
    # 2×2 pooling window, stride 2, zero padding at the borders
    layers.MaxPool2D(pool_size=[2, 2], strides=2, padding='same'),

    # unit 2
    layers.Conv2D(128, kernel_size=[2, 2], padding='same', activation=tf.nn.relu),
    layers.Conv2D(128, kernel_size=[2, 2], padding='same', activation=tf.nn.relu),
    layers.MaxPool2D(pool_size=[2, 2], strides=2, padding='same'),

    # unit 3
    layers.Conv2D(256, kernel_size=[2, 2], padding='same', activation=tf.nn.relu),
    layers.Conv2D(256, kernel_size=[2, 2], padding='same', activation=tf.nn.relu),
    layers.MaxPool2D(pool_size=[2, 2], strides=2, padding='same'),

    # unit 4
    layers.Conv2D(512, kernel_size=[2, 2], padding='same', activation=tf.nn.relu),
    layers.Conv2D(512, kernel_size=[2, 2], padding='same', activation=tf.nn.relu),
    layers.MaxPool2D(pool_size=[2, 2], strides=2, padding='same'),

    # unit 5
    layers.Conv2D(512, kernel_size=[2, 2], padding='same', activation=tf.nn.relu),
    layers.Conv2D(512, kernel_size=[2, 2], padding='same', activation=tf.nn.relu),
    layers.MaxPool2D(pool_size=[2, 2], strides=2, padding='same')
]
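It helps to trace how the spatial size changes through the five units. With padding='same', the output size along each spatial axis is ceil(input / stride), so the stride-1 convolutions keep the size and each stride-2 pooling halves it. The sketch below is plain Python bookkeeping (the function names are mine, not part of Keras) for a 32×32 input:

```python
import math


def same_padding_output_size(size, stride):
    """Spatial output size of a layer that uses padding='same'."""
    return math.ceil(size / stride)


def trace_vgg_shapes(input_size=32, channels=(64, 128, 256, 512, 512)):
    """Return (height, width, channels) after each of the five units."""
    shapes = []
    size = input_size
    for out_channels in channels:
        # Two stride-1 'same' convolutions leave the spatial size unchanged
        size = same_padding_output_size(size, 1)
        size = same_padding_output_size(size, 1)
        # The stride-2 'same' max pooling halves it (rounding up)
        size = same_padding_output_size(size, 2)
        shapes.append((size, size, out_channels))
    return shapes


shapes = trace_vgg_shapes()
for unit, shape in enumerate(shapes, start=1):
    print('unit', unit, shape)
# unit 5 ends at (1, 1, 512)
```

The final (1, 1, 512) feature map is why a 512-wide fully connected input is enough after flattening.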

Loading and preprocessing the CIFAR-100 dataset

# Preprocessing function
def preprocess(x, y):
    # Cast the input to float and scale it to the [0, 1] range
    x = tf.cast(x, dtype=tf.float32) / 255
    # Cast the labels to int32
    y = tf.cast(y, dtype=tf.int32)
    return x, y

# Load the CIFAR-100 dataset
(x, y), (x_test, y_test) = datasets.cifar100.load_data()
# The labels have shape [N, 1]; squeezing axis 1 is equivalent to y[:, 0]
y = tf.squeeze(y, axis=1)
y_test = tf.squeeze(y_test, axis=1)
print('raw data shapes:', x.shape, y.shape, x_test.shape, y_test.shape)

# Wrap the training set in a Dataset
train_db = tf.data.Dataset.from_tensor_slices((x, y))
# Shuffle (buffer of 100 samples), preprocess, and group into batches of 64
train_db = train_db.shuffle(100).map(preprocess).batch(64)

test_db = tf.data.Dataset.from_tensor_slices((x_test, y_test))
test_db = test_db.map(preprocess).batch(64)

sample = next(iter(train_db))
print('sample:', sample[0].shape, sample[1].shape,
      tf.reduce_min(sample[0]), tf.reduce_max(sample[0]))
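Batching with a size of 64 determines how many steps one epoch takes. As a rough check, assuming the standard CIFAR split of 50,000 training and 10,000 test images, the arithmetic looks like this (`batch_plan` is a hypothetical helper, not a TensorFlow function):

```python
import math


def batch_plan(num_samples, batch_size):
    """Return (number of batches, size of the last batch) when no samples are dropped."""
    num_batches = math.ceil(num_samples / batch_size)
    last_batch = num_samples - (num_batches - 1) * batch_size
    return num_batches, last_batch


train_batches, train_last = batch_plan(50_000, 64)
test_batches, test_last = batch_plan(10_000, 64)
print(train_batches, train_last)  # 782 16
print(test_batches, test_last)    # 157 16

# Logging every 100 steps therefore prints at steps 0, 100, ..., 700
logged_steps = list(range(0, train_batches, 100))
print(len(logged_steps))  # 8
```

The last batch is smaller than 64, which is why the code reshapes with -1 and counts samples with x.shape[0] instead of assuming a fixed batch size.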

Defining the run function

def run():
    # Convolutional part
    # conv_net maps the input [b, 32, 32, 3] to the output [b, 1, 1, 512]
    conv_net = Sequential(conv_layers)
    conv_net.build(input_shape=[None, 32, 32, 3])

    # Fully connected part
    fc_net = Sequential([
        layers.Dense(256, activation=tf.nn.relu),
        layers.Dense(128, activation=tf.nn.relu),
        layers.Dense(100, activation=None),
    ])
    fc_net.build(input_shape=[None, 512])

    # trainable_variables holds the weight matrices and the bias vectors
    variables = conv_net.trainable_variables + fc_net.trainable_variables
    # Adam optimizer with a learning rate of 0.0001
    optimizer = optimizers.Adam(learning_rate=1e-4)

    for epoch in range(50):
        for step, (x, y) in enumerate(train_db):
            with tf.GradientTape() as tape:
                # [b, 32, 32, 3] => [b, 1, 1, 512]
                out = conv_net(x)
                # Flatten to [b, 512]
                out = tf.reshape(out, [-1, 512])
                # [b, 512] => [b, 100]
                logits = fc_net(out)
                # One-hot encode the labels.
                # For example, with y = [0, 2, 1], tf.one_hot(y, depth=5) gives
                #   [[1, 0, 0, 0, 0],   # for the 0 in y
                #    [0, 0, 1, 0, 0],   # for the 2 in y
                #    [0, 1, 0, 0, 0]]   # for the 1 in y
                # Here the labels become 100-class one-hot vectors.
                y_onehot = tf.one_hot(y, depth=100)
                # Loss
                loss = tf.losses.categorical_crossentropy(y_onehot, logits, from_logits=True)
                loss = tf.reduce_mean(loss)

            grads = tape.gradient(loss, variables)
            # Apply the gradient update
            optimizer.apply_gradients(zip(grads, variables))

            # Log the loss every 100 steps
            if step % 100 == 0:
                print(epoch, step, 'loss:', float(loss))

        # Evaluate on the test set at the end of each epoch
        total_num = 0
        total_correct = 0
        for x, y in test_db:
            out = conv_net(x)
            out = tf.reshape(out, [-1, 512])
            logits = fc_net(out)
            prob = tf.nn.softmax(logits, axis=1)
            pred = tf.argmax(prob, axis=1)
            pred = tf.cast(pred, dtype=tf.int32)
            correct = tf.cast(tf.equal(pred, y), dtype=tf.int32)
            correct = tf.reduce_sum(correct)
            total_num += x.shape[0]
            total_correct += int(correct)

        acc = total_correct / total_num
        print(epoch, 'acc:', acc)
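The loss used above combines one-hot labels with a softmax cross-entropy computed directly from logits. As a sanity check on what the printed loss values mean, here is a minimal plain-Python sketch of the same formula. The helper names are mine, and this is only the textbook definition, not TensorFlow's actual implementation.

```python
import math


def one_hot(label, depth):
    """Vector of length `depth` with a 1.0 at index `label`."""
    return [1.0 if i == label else 0.0 for i in range(depth)]


def log_softmax(logits):
    """Numerically stable log(softmax(logits))."""
    peak = max(logits)
    log_sum = math.log(sum(math.exp(v - peak) for v in logits))
    return [v - peak - log_sum for v in logits]


def cross_entropy_from_logits(one_hot_label, logits):
    """Cross-entropy between a one-hot target and softmax(logits)."""
    return -sum(t * lp for t, lp in zip(one_hot_label, log_softmax(logits)))


# The one-hot example from the comments: y = [0, 2, 1], depth = 5
encoded = [one_hot(label, 5) for label in [0, 2, 1]]
print(encoded)

# A model that outputs identical logits for all 100 classes has loss ln(100)
uniform_loss = cross_entropy_from_logits(one_hot(7, 100), [0.0] * 100)
print(round(uniform_loss, 3))  # 4.605
```

So a loss near 4.6 in the first steps of the 100-class run is about what an untrained network should give, and the value should fall from there.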

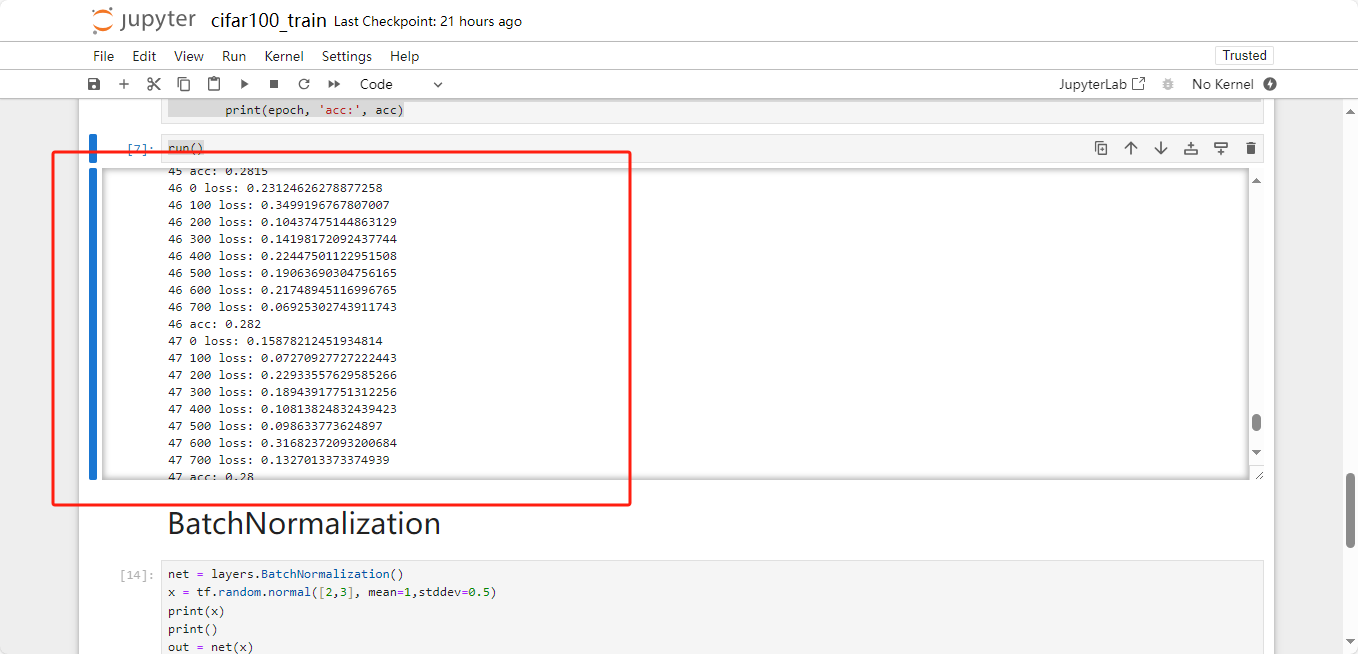

Results

run()

The accuracy climbs gradually from epoch to epoch.

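III. ResNet Experiment

How ResNet works: once a plain network becomes deep enough (around 22 layers), adding more layers does not necessarily improve performance. ResNet adds a shortcut (skip) connection around each block, either only in the deeper part of the network or from the very beginning, so that a block outputs F(x) + x. If the extra layers help, training keeps their contribution F(x); if they hurt, F(x) can be pushed toward zero and the block is effectively short-circuited, passing its input straight through.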
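The shortcut idea fits in a few lines of plain Python. In this sketch `residual_block` and the two toy branches are hypothetical stand-ins for the convolutional branch F(x), and the final ReLU that a real block applies is left out to keep the point visible:

```python
def residual_block(x, residual_fn):
    """Return F(x) + x, where residual_fn plays the role of the learned branch F."""
    fx = residual_fn(x)
    return [f + xi for f, xi in zip(fx, x)]


def zero_branch(values):
    """A branch that has learned nothing useful: F(x) = 0."""
    return [0.0 for _ in values]


def scaled_branch(values):
    """A branch that contributes a correction: F(x) = 0.5 * x."""
    return [0.5 * v for v in values]


x = [1.0, -2.0, 3.0]
short_circuited = residual_block(x, zero_branch)
refined = residual_block(x, scaled_branch)
print(short_circuited)  # [1.0, -2.0, 3.0]
print(refined)          # [1.5, -3.0, 4.5]
```

When the branch outputs zeros the block behaves like an identity mapping, so stacking it cannot make the network worse than the shallower version in principle.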

basic block

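Two convolution units together are called a basic block.

A convolution unit consists of a convolution, normalization, and an activation function.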

class BasicBlock(layers.Layer):
    """
    * filter_num: number of output channels
    * stride: stride of the first convolution
    """

    def __init__(self, filter_num, stride=1):
        super(BasicBlock, self).__init__()
        # A stride greater than 1 downsamples
        self.conv1 = layers.Conv2D(filter_num, (3, 3), strides=stride, padding='same')
        self.bn1 = layers.BatchNormalization()
        self.relu = layers.Activation('relu')
        # The second convolution unit does not downsample
        self.conv2 = layers.Conv2D(filter_num, (3, 3), strides=1, padding='same')
        self.bn2 = layers.BatchNormalization()
        # Shortcut branch: when the stride is not 1, the input must be downsampled to match
        if stride != 1:
            self.downsample = Sequential()
            self.downsample.add(layers.Conv2D(filter_num, (1, 1), strides=stride))
        else:
            self.downsample = lambda x: x

    def call(self, inputs, training=None):
        # Convolution
        out = self.conv1(inputs)
        # Batch normalization (the training flag selects batch or moving statistics)
        out = self.bn1(out, training=training)
        # Activation
        out = self.relu(out)
        out = self.conv2(out)
        out = self.bn2(out, training=training)
        identity = self.downsample(inputs)
        output = layers.add([out, identity])
        # This activation could also be placed right after bn2
        output = tf.nn.relu(output)
        return output
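The add in the block only works if the main branch and the shortcut produce the same spatial size. The main branch uses padding='same' (output size ceil(n / stride)), while the 1×1 shortcut convolution uses the default 'valid' padding (output size floor((n - k) / stride) + 1). A quick plain-Python check, with helper names and input sizes chosen only for illustration, suggests that the two agree for a 1×1 kernel:

```python
import math


def conv_same_size(size, stride):
    """Output size with padding='same'."""
    return math.ceil(size / stride)


def conv_valid_size(size, kernel, stride):
    """Output size with padding='valid'."""
    return (size - kernel) // stride + 1


matches = []
for size in (32, 30, 15, 8):
    # Main branch: 3x3 'same' conv with stride 2, then 3x3 'same' conv with stride 1
    main = conv_same_size(conv_same_size(size, 2), 1)
    # Shortcut: 1x1 'valid' conv with stride 2
    shortcut = conv_valid_size(size, 1, 2)
    matches.append((size, main, shortcut))
    print(size, main, shortcut)
```

Both branches halve the size (rounding up) for even and odd inputs alike, so the element-wise add lines up.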

ResNet

Several basic blocks stacked together make up a ResNet.

class ResNet(tf.keras.Model):
    """
    * layer_dims: number of basic blocks in each of the four stages, e.g. [2, 2, 2, 2]
    * num_classes: number of output classes
    """

    def __init__(self, layer_dims, num_classes=10):
        super(ResNet, self).__init__()
        # strides=(1, 1) means a stride of 1 along both width and height
        self.stem = Sequential([layers.Conv2D(64, (3, 3), strides=(1, 1)),
                                layers.BatchNormalization(),
                                layers.Activation('relu'),
                                layers.MaxPool2D(pool_size=(2, 2), strides=(1, 1), padding='same')
                                ])
        self.layer1 = self.build_res_block(64, layer_dims[0])
        self.layer2 = self.build_res_block(128, layer_dims[1], stride=2)
        self.layer3 = self.build_res_block(256, layer_dims[2], stride=2)
        self.layer4 = self.build_res_block(512, layer_dims[3], stride=2)
        # Average [b, h, w, 512] over h and w to get [b, 512]
        self.avgpool = layers.GlobalAveragePooling2D()
        self.fc = layers.Dense(num_classes)

    def call(self, inputs, training=None):
        out = self.stem(inputs, training=training)
        out = self.layer1(out, training=training)
        out = self.layer2(out, training=training)
        out = self.layer3(out, training=training)
        out = self.layer4(out, training=training)
        # [b, num_channels]
        out = self.avgpool(out)
        # [b, num_classes]
        out = self.fc(out)
        return out

    def build_res_block(self, filter_num, blocks, stride=1):
        res_blocks = Sequential()
        # Only the first basic block of a stage may downsample
        res_blocks.add(BasicBlock(filter_num, stride))
        for _ in range(1, blocks):
            res_blocks.add(BasicBlock(filter_num, stride=1))
        return res_blocks
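To see what each stage does to a 32×32 input, the shapes can be traced by hand. The sketch below is plain-Python bookkeeping under the settings used in this class (3×3 stem convolution with the default 'valid' padding, stride-1 'same' pooling, then stages with strides 1, 2, 2, 2); `trace_resnet_shapes` is a hypothetical helper, not part of Keras.

```python
import math


def trace_resnet_shapes(input_size=32,
                        stage_channels=(64, 128, 256, 512),
                        stage_strides=(1, 2, 2, 2)):
    """Return (name, spatial size, channels) after the stem and each stage."""
    trace = []
    # Stem: 3x3 convolution, stride 1, 'valid' padding shrinks the map by 2
    size = (input_size - 3) // 1 + 1
    # Stem max pooling uses stride 1 with 'same' padding, so the size is unchanged
    size = math.ceil(size / 1)
    trace.append(('stem', size, 64))
    for index, (channels, stride) in enumerate(zip(stage_channels, stage_strides), start=1):
        # Only the first block of a stage applies the stride ('same' padding)
        size = math.ceil(size / stride)
        trace.append(('layer%d' % index, size, channels))
    return trace


trace = trace_resnet_shapes()
for name, size, channels in trace:
    print(name, (size, size, channels))
```

After layer4 the map is 4×4×512; global average pooling collapses it to a 512-dimensional vector, and the dense layer maps that to num_classes logits.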

Wrapper functions to simplify building a ResNet

def resnet18():
    return ResNet([2, 2, 2, 2])

def resnet34():
    return ResNet([3, 4, 6, 3])
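The names 18 and 34 come from counting the weighted layers: one stem convolution, two convolutions per basic block, and one final dense layer (the 1×1 shortcut convolutions are conventionally not counted). A small check, with a helper name of my own choosing:

```python
def count_weighted_layers(layer_dims):
    """Stem conv + 2 convs per basic block + final dense layer."""
    convs_in_blocks = 2 * sum(layer_dims)
    return 1 + convs_in_blocks + 1


depth_18 = count_weighted_layers([2, 2, 2, 2])
depth_34 = count_weighted_layers([3, 4, 6, 3])
print(depth_18, depth_34)  # 18 34
```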

Loading and preprocessing the CIFAR-10 dataset

# Preprocessing function
def preprocess(x, y):
    # Cast the input to float and scale it to the [-1, 1] range
    x = 2 * tf.cast(x, dtype=tf.float32) / 255 - 1
    # Cast the labels to int32
    y = tf.cast(y, dtype=tf.int32)
    return x, y

# Load the CIFAR-10 dataset
(x, y), (x_test, y_test) = datasets.cifar10.load_data()
# The labels have shape [N, 1]; squeezing axis 1 is equivalent to y[:, 0]
y = tf.squeeze(y, axis=1)
y_test = tf.squeeze(y_test, axis=1)
print('raw data shapes:', x.shape, y.shape, x_test.shape, y_test.shape)

# Wrap the training set in a Dataset
train_db = tf.data.Dataset.from_tensor_slices((x, y))
# Shuffle (buffer of 100 samples), preprocess, and group into batches of 64
train_db = train_db.shuffle(100).map(preprocess).batch(64)

test_db = tf.data.Dataset.from_tensor_slices((x_test, y_test))
test_db = test_db.map(preprocess).batch(64)

sample = next(iter(train_db))
print('sample:', sample[0].shape, sample[1].shape,
      tf.reduce_min(sample[0]), tf.reduce_max(sample[0]))

# Free these explicitly because my server only has 2 GB of memory, haha
del x, y, x_test, y_test, sample
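The only difference from the earlier preprocessing is the target range: [-1, 1] here instead of [0, 1]. A tiny plain-Python comparison of the two mappings on single pixel values (the function names are mine) makes the end points explicit:

```python
def scale_to_unit(pixel):
    """Map a pixel in 0..255 to [0, 1], as in the VGG13 experiment."""
    return pixel / 255


def scale_to_symmetric(pixel):
    """Map a pixel in 0..255 to [-1, 1], as in the ResNet experiment."""
    return 2 * pixel / 255 - 1


for pixel in (0, 255):
    print(pixel, scale_to_unit(pixel), scale_to_symmetric(pixel))
# 0 maps to 0.0 and -1.0; 255 maps to 1.0 and 1.0
```

This is also what the printed reduce_min and reduce_max of the sample batch should roughly reflect: values close to -1 and 1.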

Run function

def run():
    # Build the ResNet18 network
    model = resnet18()
    model.build(input_shape=(None, 32, 32, 3))
    # Adam optimizer with a learning rate of 0.0001
    optimizer = optimizers.Adam(learning_rate=1e-4)

    for epoch in range(100):
        for step, (x, y) in enumerate(train_db):
            with tf.GradientTape() as tape:
                # [b, 32, 32, 3] => [b, 10]
                # training=True so BatchNormalization uses batch statistics
                # and updates its moving averages
                logits = model(x, training=True)
                # One-hot encode the labels.
                # For example, with y = [0, 2, 1], tf.one_hot(y, depth=5) gives
                #   [[1, 0, 0, 0, 0],   # for the 0 in y
                #    [0, 0, 1, 0, 0],   # for the 2 in y
                #    [0, 1, 0, 0, 0]]   # for the 1 in y
                # Here the labels become 10-class one-hot vectors.
                y_onehot = tf.one_hot(y, depth=10)
                # Loss
                loss = tf.losses.categorical_crossentropy(y_onehot, logits, from_logits=True)
                loss = tf.reduce_mean(loss)

            grads = tape.gradient(loss, model.trainable_variables)
            # Apply the gradient update
            optimizer.apply_gradients(zip(grads, model.trainable_variables))

            # Log the loss every 100 steps
            if step % 100 == 0:
                print(epoch, step, 'loss:', float(loss))

        # Evaluate on the test set at the end of each epoch
        total_num = 0
        total_correct = 0
        for x, y in test_db:
            # training=False so BatchNormalization uses its moving statistics
            logits = model(x, training=False)
            prob = tf.nn.softmax(logits, axis=1)
            pred = tf.argmax(prob, axis=1)
            pred = tf.cast(pred, dtype=tf.int32)
            correct = tf.cast(tf.equal(pred, y), dtype=tf.int32)
            correct = tf.reduce_sum(correct)
            total_num += x.shape[0]
            total_correct += int(correct)

        acc = total_correct / total_num
        print(epoch, 'acc:', acc)
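The evaluation loop takes a softmax, then an argmax, then counts matches. Since softmax preserves the ordering of the logits, the predicted class is the same with or without it; the softmax only matters if you want probabilities. The plain-Python sketch below, with made-up logits and labels, illustrates both points:

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def argmax(values):
    """Index of the largest value."""
    return max(range(len(values)), key=lambda i: values[i])


def accuracy(batch_logits, labels):
    """Fraction of rows whose highest-scoring class equals the label."""
    correct = sum(1 for logits, label in zip(batch_logits, labels)
                  if argmax(logits) == label)
    return correct / len(labels)


# Hypothetical logits for a batch of 4 samples and 3 classes
batch_logits = [
    [2.0, 0.5, -1.0],
    [0.1, 0.2, 3.0],
    [1.0, 4.0, 0.0],
    [0.3, 0.2, 0.1],
]
labels = [0, 2, 0, 0]

same_prediction = all(argmax(softmax(row)) == argmax(row) for row in batch_logits)
acc = accuracy(batch_logits, labels)
print(same_prediction, acc)  # True 0.75
```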

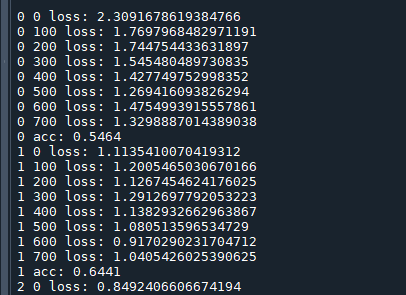

Results

run()

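This article walks through building a CNN (VGG13) and a ResNet model with TensorFlow in Python and training them on the CIFAR-10/100 datasets, covering the full workflow from environment setup to model training.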
