I. Code Flow

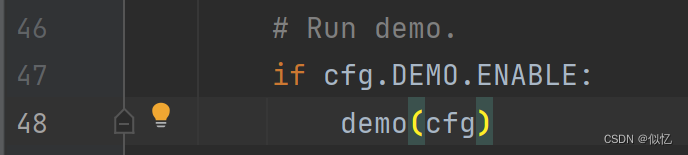

1 Main Program Entry

run_net.py

If DEMO.ENABLE is True in the config file, the program enters demo mode.

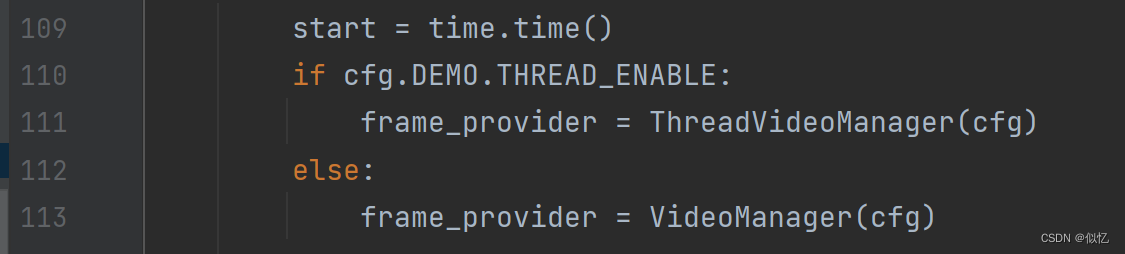

2 Creating the Frame Provider

demo_net.py

Running the demo first creates the frame provider (line 113).

3 Frame Provider Initialization

demo_loader.py

VideoManager is initialized at line 17:

```python
def __init__(self, cfg):
    """
    Args:
        cfg (CfgNode): configs. Details can be found in
            slowfast/config/defaults.py
    """
    assert (
        cfg.DEMO.WEBCAM > -1 or cfg.DEMO.INPUT_VIDEO != ""
    ), "Must specify a data source as input."
    # Input source: either a webcam or an input video.
    self.source = (
        cfg.DEMO.WEBCAM if cfg.DEMO.WEBCAM > -1 else cfg.DEMO.INPUT_VIDEO
    )
    # Display width
    self.display_width = cfg.DEMO.DISPLAY_WIDTH
    # Display height
    self.display_height = cfg.DEMO.DISPLAY_HEIGHT
    # cv2.VideoCapture reads the video (or webcam) frame by frame.
    self.cap = cv2.VideoCapture(self.source)
    # Use the configured size if both dimensions are set;
    # otherwise take the size of the input.
    if self.display_width > 0 and self.display_height > 0:
        self.cap.set(cv2.CAP_PROP_FRAME_WIDTH, self.display_width)
        self.cap.set(cv2.CAP_PROP_FRAME_HEIGHT, self.display_height)
    else:
        self.display_width = int(self.cap.get(cv2.CAP_PROP_FRAME_WIDTH))
        self.display_height = int(self.cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
    # Check whether the video can be opened.
    if not self.cap.isOpened():
        raise IOError("Video {} cannot be opened".format(self.source))
    self.output_file = None
    # Output FPS: if OUTPUT_FPS is -1 in cfg, match the input video's FPS.
    if cfg.DEMO.OUTPUT_FPS == -1:
        self.output_fps = self.cap.get(cv2.CAP_PROP_FPS)
    else:
        self.output_fps = cfg.DEMO.OUTPUT_FPS
    if cfg.DEMO.OUTPUT_FILE != "":
        self.output_file = self.get_output_file(
            cfg.DEMO.OUTPUT_FILE, fps=self.output_fps
        )
    self.id = -1
    self.buffer = []
    # Number of overlapping frames between 2 consecutive clips. Increase it
    # for more frequent action predictions. The number of overlapping frames
    # cannot exceed half of the sequence length.
    self.buffer_size = cfg.DEMO.BUFFER_SIZE
    # Frame sequence length: 32 * 2 = 64 frames
    self.seq_length = cfg.DATA.NUM_FRAMES * cfg.DATA.SAMPLING_RATE
    self.test_crop_size = cfg.DATA.TEST_CROP_SIZE
    # Visualization range: [keyframe_idx - CLIP_VIS_SIZE, keyframe_idx + CLIP_VIS_SIZE]
    self.clip_vis_size = cfg.DEMO.CLIP_VIS_SIZE
```
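The size and FPS rules in `__init__` come down to two fallbacks: use the configured value when it is set, otherwise take the value from the source. Below is a minimal stdlib sketch. The helper names are hypothetical, and the `src_*` arguments stand in for what `cap.get(...)` would return.

```python
def resolve_display_size(cfg_width, cfg_height, src_width, src_height):
    # The configured size applies only when both dimensions are positive.
    if cfg_width > 0 and cfg_height > 0:
        return cfg_width, cfg_height
    return int(src_width), int(src_height)


def resolve_output_fps(cfg_fps, src_fps):
    # -1 means "same FPS as the input video".
    return src_fps if cfg_fps == -1 else cfg_fps


def clip_length(num_frames, sampling_rate):
    # seq_length = NUM_FRAMES * SAMPLING_RATE
    return num_frames * sampling_rate


print(resolve_display_size(-1, -1, 640.0, 480.0), resolve_output_fps(-1, 25.0), clip_length(32, 2))
```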

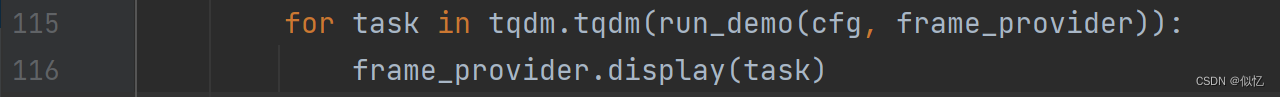

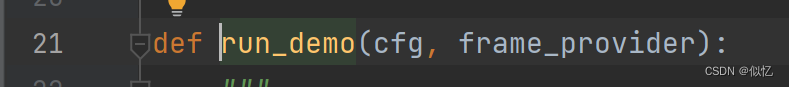

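4 Entering the Demo

demo_net.py

With frame_provider initialized, control moves to the run_demo function in the same file, which iterates over the provider to obtain tasks. run_demo begins as follows:

```python
# Set the NumPy random seed.
np.random.seed(cfg.RNG_SEED)
# Set the seed for PyTorch's CPU random number generator so results can be reproduced.
torch.manual_seed(cfg.RNG_SEED)
# Setup logging format.
logging.setup_logging(cfg.OUTPUT_DIR)
# Print config.
logger.info("Run demo with config:")
logger.info(cfg)
# Common-class setting (only used when a label file is given)
common_classes = (
    cfg.DEMO.COMMON_CLASS_NAMES
    if len(cfg.DEMO.LABEL_FILE_PATH) != 0
    else None
)
```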

4.1 Configuring the Video Visualizer

```python
video_vis = VideoVisualizer(
    num_classes=cfg.MODEL.NUM_CLASSES,
    class_names_path=cfg.DEMO.LABEL_FILE_PATH,
    top_k=cfg.TENSORBOARD.MODEL_VIS.TOPK_PREDS,
    thres=cfg.DEMO.COMMON_CLASS_THRES,
    lower_thres=cfg.DEMO.UNCOMMON_CLASS_THRES,
    common_class_names=common_classes,
    colormap=cfg.TENSORBOARD.MODEL_VIS.COLORMAP,
    mode=cfg.DEMO.VIS_MODE,
)
```

This enters the initializer of VideoVisualizer, the class that visualizes video results:

video_visualizer.py, line 349

```python
assert mode in ["top-k", "thres"], "Mode {} is not supported.".format(
    mode
)
# How results are selected for display: threshold or top-k
self.mode = mode
# Number of classes (4 here)
self.num_classes = num_classes
# Class names (4 of them)
self.class_names, _, _ = get_class_names(class_names_path, None, None)
self.top_k = top_k
self.thres = thres
# Threshold for uncommon classes
self.lower_thres = lower_thres
# Compute the threshold array for all classes from self.thres and self.lower_thres.
if mode == "thres":
    self._get_thres_array(common_class_names=common_class_names)
# Colormap
self.color_map = plt.get_cmap(colormap)
```
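In threshold mode, each class score is compared against a per-class threshold built from `thres` and `lower_thres`. Below is a minimal stdlib sketch. It assumes, as the comment above suggests, that common classes receive `thres` and all other classes receive `lower_thres`, and that every class counts as common when no list is given. `build_thres_array` and the class names are hypothetical and are not SlowFast's own method or labels.

```python
def build_thres_array(class_names, thres, lower_thres, common_class_names=None):
    """Hypothetical per-class threshold array.

    Classes in common_class_names get `thres`; all others get `lower_thres`.
    With no common list, every class is treated as common (an assumption).
    """
    if common_class_names is None:
        return [thres] * len(class_names)
    common = set(common_class_names)
    return [thres if name in common else lower_thres for name in class_names]


names = ["stand", "sit", "squat", "fall"]  # hypothetical 4-class label set
print(build_thres_array(names, 0.7, 0.3, ["stand", "sit"]))
```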

4.2 Multiprocessing Setup

demo_net.py, line 56

`n_workers=cfg.DEMO.NUM_VIS_INSTANCE` (2 in this configuration) sets the number of processes used for video visualization.

This leads to async_predictor.py, where AsyncVis is initialized (line 154):

```python
def __init__(self, video_vis, n_workers=None):
    """
    Args:
        cfg (CfgNode): configs. Details can be found in
            slowfast/config/defaults.py
        n_workers (Optional[int]): number of CPUs for running video visualizer.
            If not given, use all CPUs.
    """
    # Number of worker processes; defaults to the total number of CPUs.
    num_workers = mp.cpu_count() if n_workers is None else n_workers
    # Create the task and result queues.
    self.task_queue = mp.Queue()
    self.result_queue = mp.Queue()
    self.get_indices_ls = []
    # List of worker processes
    self.procs = []
    self.result_data = {}
    self.put_id = -1
    # Add AsyncVis visualization workers to the process list.
    # video_vis is the video visualizer configured earlier.
    for _ in range(max(num_workers, 1)):
        self.procs.append(
            AsyncVis._VisWorker(
                video_vis, self.task_queue, self.result_queue
            )
        )
```

This enters the initializer of the AsyncVis visualization worker (line 126):

```python
class _VisWorker(mp.Process):
    def __init__(self, video_vis, task_queue, result_queue):
        """
        Visualization Worker for AsyncVis.
        Args:
            video_vis (VideoVisualizer object): object with tools for visualization.
            task_queue (mp.Queue): a shared queue for incoming task for visualization.
            result_queue (mp.Queue): a shared queue for visualized results.
        """
        self.video_vis = video_vis
        self.task_queue = task_queue
        self.result_queue = result_queue
        # Call the parent class (mp.Process) constructor.
        super().__init__()
```

Once the workers have been added to the list, each one is started (line 178):

```python
for p in self.procs:
    p.start()
```

Each started process executes its run method (line 141):

```python
def run(self):
    """
    Run visualization asynchronously.
    """
    while True:
        task = self.task_queue.get()
        if isinstance(task, _StopToken):
            break
        # (the rest of the loop is shown in section 4.8)
```
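The AsyncVis workers use a producer/consumer pattern. Tasks go into a shared queue, and each worker loops until it receives a stop token. The sketch below reproduces that loop with `threading` and `queue.Queue` in place of `mp.Process`/`mp.Queue`, so it runs in a single process. `StopToken` and `fake_draw` are hypothetical stand-ins for `_StopToken` and `draw_predictions`.

```python
import queue
import threading


class StopToken:
    """Stand-in for _StopToken: tells a worker to leave its loop."""


def fake_draw(task):
    # Placeholder for draw_predictions: just tags the task as drawn.
    return "drawn-{}".format(task)


def worker(task_queue, result_queue):
    # Same shape as _VisWorker.run: block on get(), stop on the token.
    while True:
        task = task_queue.get()
        if isinstance(task, StopToken):
            break
        result_queue.put(fake_draw(task))


def run_workers(tasks, n_workers=2):
    task_queue, result_queue = queue.Queue(), queue.Queue()
    workers = [
        threading.Thread(target=worker, args=(task_queue, result_queue))
        for _ in range(max(n_workers, 1))
    ]
    for w in workers:
        w.start()
    for t in tasks:
        task_queue.put(t)
    # One stop token per worker so that every loop terminates.
    for _ in workers:
        task_queue.put(StopToken())
    for w in workers:
        w.join()
    results = []
    while not result_queue.empty():
        results.append(result_queue.get())
    return results


print(sorted(run_workers([1, 2, 3])))
```

With several workers, results can come back out of order. This is presumably why AsyncVis records task ids in `get_indices_ls`.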

4.3 Model Creation

demo_net.py creates the model at line 58. This leads to predictor.py, line 119: a synchronous action prediction and visualization pipeline built on AsyncVis.

```python
def __init__(self, cfg, async_vis=None, gpu_id=None):
    """
    Args:
        cfg (CfgNode): configs. Details can be found in
            slowfast/config/defaults.py
        async_vis (AsyncVis object): asynchronous visualizer.
        gpu_id (Optional[int]): GPU id.
    """
    # Action predictor for action recognition
    self.predictor = Predictor(cfg=cfg, gpu_id=gpu_id)
    self.async_vis = async_vis
```

This enters the Predictor class (line 20):

```python
def __init__(self, cfg, gpu_id=None):
    """
    Args:
        cfg (CfgNode): configs. Details can be found in
            slowfast/config/defaults.py
        gpu_id (Optional[int]): GPU id.
    """
    # Select the GPU.
    if cfg.NUM_GPUS:
        self.gpu_id = (
            torch.cuda.current_device() if gpu_id is None else gpu_id
        )
    # Build the video model and print model statistics.
    self.model = build_model(cfg, gpu_id=gpu_id)
```

The model is built in build.py (line 25):

```python
def build_model(cfg, gpu_id=None):
    """
    Builds the video model.
    Args:
        cfg (configs): configs that contains the hyper-parameters to build the
            backbone. Details can be seen in slowfast/config/defaults.py.
        gpu_id (Optional[int]): specify the gpu index to build model.
    """
    if torch.cuda.is_available():
        assert (
            cfg.NUM_GPUS <= torch.cuda.device_count()
        ), "Cannot use more GPU devices than available"
    else:
        assert (
            cfg.NUM_GPUS == 0
        ), "Cuda is not available. Please set `NUM_GPUS: 0 for running on CPUs."

    # Construct the model.
    name = cfg.MODEL.MODEL_NAME
    model = MODEL_REGISTRY.get(name)(cfg)

    if cfg.BN.NORM_TYPE == "sync_batchnorm_apex":
        try:
            import apex
        except ImportError:
            raise ImportError("APEX is required for this model, please install")

        logger.info("Converting BN layers to Apex SyncBN")
        process_group = apex.parallel.create_syncbn_process_group(
            group_size=cfg.BN.NUM_SYNC_DEVICES
        )
        model = apex.parallel.convert_syncbn_model(
            model, process_group=process_group
        )

    if cfg.NUM_GPUS:
        if gpu_id is None:
            # Determine the GPU used by the current process
            cur_device = torch.cuda.current_device()
        else:
            cur_device = gpu_id
        # Transfer the model to the current GPU device
        model = model.cuda(device=cur_device)
    # Use multi-process data parallel model in the multi-gpu setting
    if cfg.NUM_GPUS > 1:
        # Make model replica operate on the current device
        model = torch.nn.parallel.DistributedDataParallel(
            module=model,
            device_ids=[cur_device],
            output_device=cur_device,
            find_unused_parameters=True
            if cfg.MODEL.DETACH_FINAL_FC
            or cfg.MODEL.MODEL_NAME == "ContrastiveModel"
            else False,
        )
        if cfg.MODEL.FP16_ALLREDUCE:
            model.register_comm_hook(
                state=None, hook=comm_hooks_default.fp16_compress_hook
            )
    return model
```
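`MODEL_REGISTRY.get(name)(cfg)` looks up a model class by its name string and then instantiates it with the config. Below is a minimal sketch of that registry pattern using a plain dict. The `Registry` class is a hypothetical, simplified stand-in, not the implementation SlowFast actually uses, and `SlowFast` here is a toy class.

```python
class Registry:
    """Hypothetical, simplified name -> class registry."""

    def __init__(self, name):
        self._name = name
        self._obj_map = {}

    def register(self):
        # Used as a class decorator: stores the class under its __name__.
        def deco(cls):
            if cls.__name__ in self._obj_map:
                raise KeyError("{} already registered in {}".format(cls.__name__, self._name))
            self._obj_map[cls.__name__] = cls
            return cls

        return deco

    def get(self, name):
        if name not in self._obj_map:
            raise KeyError("No object named '{}' in registry '{}'".format(name, self._name))
        return self._obj_map[name]


MODEL_REGISTRY = Registry("MODEL")


@MODEL_REGISTRY.register()
class SlowFast:
    # Toy model class: only records the config it was built with.
    def __init__(self, cfg):
        self.cfg = cfg


model = MODEL_REGISTRY.get("SlowFast")({"MODEL_NAME": "SlowFast"})
print(type(model).__name__)
```

Because of this indirection, changing `MODEL.MODEL_NAME` in the config is enough to switch architectures without touching `build_model`.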

The built model is returned to predictor.py (line 39):

```python
# Switch the model to evaluation mode.
self.model.eval()
self.cfg = cfg
# Initialize the Detectron2Predictor.
if cfg.DETECTION.ENABLE:
    self.object_detector = Detectron2Predictor(cfg, gpu_id=self.gpu_id)
```

4.4 Detectron2Predictor Initialization

predictor.py, line 158

```python
class Detectron2Predictor:
    """
    Wrapper around Detectron2 to return the required predicted bounding boxes as a ndarray.
    """

    def __init__(self, cfg, gpu_id=None):
        """
        Args:
            cfg (CfgNode): configs. Details can be found in
                slowfast/config/defaults.py
            gpu_id (Optional[int]): GPU id.
        """
        self.cfg = get_cfg()
        self.cfg.merge_from_file(
            model_zoo.get_config_file(cfg.DEMO.DETECTRON2_CFG)
        )
        # Detection score threshold
        self.cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST = cfg.DEMO.DETECTRON2_THRESH
        # Detector weights
        self.cfg.MODEL.WEIGHTS = cfg.DEMO.DETECTRON2_WEIGHTS
        # Input channel order, "BGR" or "RGB" (BGR is OpenCV's default order)
        self.cfg.INPUT.FORMAT = cfg.DEMO.INPUT_FORMAT
        if cfg.NUM_GPUS and gpu_id is None:
            gpu_id = torch.cuda.current_device()
        self.cfg.MODEL.DEVICE = (
            "cuda:{}".format(gpu_id) if cfg.NUM_GPUS > 0 else "cpu"
        )
        logger.info("Initialized Detectron2 Object Detection Model.")
        # Detectron2's DefaultPredictor, built from this config
        self.predictor = DefaultPredictor(self.cfg)
```

After configuration, control returns to predictor.py (line 39):

```python
logger.info("Start loading model weights.")
# Load the model weights.
cu.load_test_checkpoint(cfg, self.model)
logger.info("Finish loading model weights")
```

4.5 Initialization Complete: Detection Begins

```python
# Total number of frames per clip
seq_len = cfg.DATA.NUM_FRAMES * cfg.DATA.SAMPLING_RATE
# The buffer size cannot exceed half of the sequence length.
assert (
    cfg.DEMO.BUFFER_SIZE <= seq_len // 2
), "Buffer size cannot be greater than half of sequence length."
num_task = 0
# Start reading frames.
frame_provider.start()
```

This enters demo_loader.py (line 140):

```python
def start(self):
    # `return self` returns the instance itself, which allows method chaining.
    return self
```

The provider is then iterated over (demo_net.py, line 72):

```python
for able_to_read, task in frame_provider:
```

This enters demo_loader.py (line 140):

```python
def __iter__(self):
    # Return the iterator object itself.
    return self

# Return the next element of the container.
def __next__(self):
    """
    Read and return the required number of frames for 1 clip.
    Returns:
        was_read (bool): False if not enough frames to return.
        task (TaskInfo object): object contains metadata for the current clips.
    """
    # self.id starts at -1, so the first clip gets id 0.
    self.id += 1
    # Create the task-info object.
    task = TaskInfo()

    task.img_height = self.display_height
    task.img_width = self.display_width
    task.crop_size = self.test_crop_size
    # Visualization range
    task.clip_vis_size = self.clip_vis_size

    frames = []
    if len(self.buffer) != 0:
        frames = self.buffer
    was_read = True
    # self.cap is a cv2.VideoCapture; read() grabs the next frame.
    while was_read and len(frames) < self.seq_length:
        was_read, frame = self.cap.read()
        frames.append(frame)
    if was_read and self.buffer_size != 0:
        self.buffer = frames[-self.buffer_size :]

    task.add_frames(self.id, frames)
    # Number of buffered frames depends on the task id (none for the first clip).
    task.num_buffer_frames = 0 if self.id == 0 else self.buffer_size

    # Return whether frames could be read, plus the TaskInfo object,
    # which carries the crop size and other metadata.
    return was_read, task
```
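The buffering in `__next__` is easier to follow without OpenCV. The sketch below replaces `cv2.VideoCapture.read()` with an iterator over placeholder frames (an assumption made for illustration) and keeps the same rules:

- each clip holds `seq_length` frames;
- the last `buffer_size` frames are carried into the next clip;
- `num_buffer_frames` is 0 only for the first clip.

It also checks the `BUFFER_SIZE <= seq_len // 2` constraint asserted in run_demo.

```python
def read_clips(source, seq_length, buffer_size):
    """Yield (was_read, frames, num_buffer_frames), mimicking
    VideoManager.__next__ with an iterator in place of cv2.VideoCapture."""
    assert buffer_size <= seq_length // 2, "Buffer size cannot be greater than half of sequence length."
    it = iter(source)
    buffer = []
    clip_id = -1
    while True:
        clip_id += 1
        frames = list(buffer)  # start from the frames carried over
        was_read = True
        while was_read and len(frames) < seq_length:
            frame = next(it, None)  # None plays the role of a failed read
            was_read = frame is not None
            frames.append(frame)
        if was_read and buffer_size != 0:
            buffer = frames[-buffer_size:]
        yield was_read, frames, 0 if clip_id == 0 else buffer_size
        if not was_read:
            return


for was_read, frames, n_buf in read_clips(range(1, 11), seq_length=4, buffer_size=2):
    print(was_read, frames, n_buf)
```

The last tuple has `was_read` set to False. That is what makes `if not able_to_read: break` end the loop in run_demo.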

Back in demo_net.py (line 72):

```python
if not able_to_read:
    break
if task is None:
    time.sleep(0.02)
    continue
# Count the tasks.
num_task += 1
model.put(task)
```

This enters model.put (predictor.py, line 148):

```python
def put(self, task):
    """
    Make prediction and put the results in `async_vis` task queue.
    Args:
        task (TaskInfo object): task object that contain
            the necessary information for action prediction. (e.g. frames, boxes)
    """
    task = self.predictor(task)
```

4.6 Bounding-Box Detection

This enters predictor.py (line 60):

```python
def __call__(self, task):
    """
    Returns the prediction results for the current task.
    Args:
        task (TaskInfo object): task object that contain
            the necessary information for action prediction. (e.g. frames, boxes)
    Returns:
        task (TaskInfo object): the same task info object but filled with
            prediction values (a tensor) and the corresponding boxes for
            action detection task.
    """
    if self.cfg.DETECTION.ENABLE:
        task = self.object_detector(task)
```

This enters the detection step (predictor.py, line 187).

**This part can be modified, for example to add detection labels.**

```python
def __call__(self, task):
    """
    Return bounding boxes predictions as a tensor.
    Args:
        task (TaskInfo object): task object that contain
            the necessary information for action prediction. (e.g. frames)
    Returns:
        task (TaskInfo object): the same task info object but filled with
            prediction values (a tensor) and the corresponding boxes for
            action detection task.
    """
    # Pick the middle frame (index 32 for a 64-frame clip).
    middle_frame = task.frames[len(task.frames) // 2]
    # Run the detector: box coordinates, confidence scores and classes.
    outputs = self.predictor(middle_frame)
    # Get only human instances.
    # Class 0 is "person" in COCO, so the mask is True for person detections.
    mask = outputs["instances"].pred_classes == 0
    # Boxes of the detected persons
    pred_boxes = outputs["instances"].pred_boxes.tensor[mask]
    # Store the boxes on the task as its bboxes attribute.
    # Any other attribute we need can be added to TaskInfo the same way.
    task.add_bboxes(pred_boxes)
    return task
```
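The detector step keeps only the class-0 (person) detections on the middle frame. Below is a stdlib sketch of the same selection. It uses plain lists in place of Detectron2's `Instances` tensors, an assumption made for illustration.

```python
def middle_frame_index(num_frames):
    # Same rule as task.frames[len(task.frames) // 2]
    return num_frames // 2


def person_boxes(pred_classes, boxes, person_class=0):
    """Keep the boxes whose predicted class is person_class (0 = person in COCO)."""
    return [box for cls, box in zip(pred_classes, boxes) if cls == person_class]


classes = [0, 2, 0]  # person, car, person
boxes = [[10, 20, 50, 120], [60, 60, 200, 150], [210, 30, 260, 140]]
print(middle_frame_index(64))
print(person_boxes(classes, boxes))
```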

With the boxes detected, control returns to predictor.py (line 62):

```python
# Get the task results.
if self.cfg.DETECTION.ENABLE:
    task = self.object_detector(task)

# Extract all frames and the detected boxes.
frames, bboxes = task.frames, task.bboxes
if bboxes is not None:
    # Rescale the boxes to match the resized frames.
    bboxes = cv2_transform.scale_boxes(
        self.cfg.DATA.TEST_CROP_SIZE,
        bboxes,
        task.img_height,
        task.img_width,
    )
if self.cfg.DEMO.INPUT_FORMAT == "BGR":
    frames = [
        cv2.cvtColor(frame, cv2.COLOR_BGR2RGB) for frame in frames
    ]
# Resize the frames.
frames = [
    cv2_transform.scale(self.cfg.DATA.TEST_CROP_SIZE, frame)
    for frame in frames
]
inputs = process_cv2_inputs(frames, self.cfg)
```
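`cv2_transform.scale` and `scale_boxes` resize the frames and boxes to `TEST_CROP_SIZE`. Below is a stdlib sketch of that step. It assumes the rule is: resize so the shorter side equals `size`, keep the aspect ratio, and multiply box coordinates by the same factor. `scaled_size` and `scale_box` are hypothetical helpers, not the library functions.

```python
import math


def scaled_size(size, height, width):
    """Return (new_height, new_width) with the shorter side equal to size."""
    if width < height:
        return int(math.floor(height / width * size)), size
    return size, int(math.floor(width / height * size))


def scale_box(box, size, height, width):
    """Scale [x1, y1, x2, y2] by the factor applied to the longer side."""
    new_height, new_width = scaled_size(size, height, width)
    factor = new_height / height if width < height else new_width / width
    return [c * factor for c in box]


print(scaled_size(256, 480, 640))
print(scale_box([0, 0, 640, 480], 256, 480, 640))
```

For a 480 × 640 frame the result is 256 × 341. Because of the floor, the full-frame box comes out 255.75 pixels tall rather than exactly 256.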

Then on to the next stage.

4.7 Action Prediction

This enters utils.py (line 303):

```python
def process_cv2_inputs(frames, cfg):
    """
    Normalize and prepare inputs as a list of tensors. Each tensor
    correspond to a unique pathway.
    Args:
        frames (list of array): list of input images (correspond to one clip) in range [0, 255].
        cfg (CfgNode): configs. Details can be found in
            slowfast/config/defaults.py
    """
    # Scale pixel values to [0, 1].
    inputs = torch.from_numpy(np.array(frames)).float() / 255
    # Normalize with the dataset mean and standard deviation.
    inputs = tensor_normalize(inputs, cfg.DATA.MEAN, cfg.DATA.STD)
    # T H W C -> C T H W.
    inputs = inputs.permute(3, 0, 1, 2)
    # Sample frames for num_frames specified:
    # indices of the frames to keep, evenly spaced over the clip.
    index = torch.linspace(0, inputs.shape[1] - 1, cfg.DATA.NUM_FRAMES).long()
    # Keep only the sampled frames.
    inputs = torch.index_select(inputs, 1, index)
    inputs = pack_pathway_output(cfg, inputs)
```
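The two numeric steps in `process_cv2_inputs`, pixel normalization and evenly spaced temporal sampling, can be checked by hand. This sketch works on plain Python numbers. `linspace_indices` imitates `torch.linspace(...).long()`, which truncates toward zero, although float32 rounding in PyTorch could in principle differ at exact boundaries. The mean and std values are example values only.

```python
def normalize_pixel(value, mean, std):
    # value / 255 maps [0, 255] to [0, 1]; then standardize.
    return (value / 255.0 - mean) / std


def linspace_indices(length, num):
    """Indices like torch.linspace(0, length - 1, num).long()."""
    if num == 1:
        return [0]
    # Multiply before dividing so exact endpoints stay exact.
    return [int(i * (length - 1) / (num - 1)) for i in range(num)]


print(normalize_pixel(255, 0.45, 0.225))
idx = linspace_indices(64, 32)
print(len(idx), idx[:4], idx[-2:])
```

With 64 raw frames and `NUM_FRAMES = 32`, the sampler keeps roughly every second frame, from index 0 up to index 63.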

This enters pack_pathway_output:

```python
def pack_pathway_output(cfg, frames):
    """
    Prepare output as a list of tensors. Each tensor corresponding to a
    unique pathway.
    Args:
        frames (tensor): frames of images sampled from the video. The
            dimension is `channel` x `num frames` x `height` x `width`.
    Returns:
        frame_list (list): list of tensors with the dimension of
            `channel` x `num frames` x `height` x `width`.
    """
    if cfg.DATA.REVERSE_INPUT_CHANNEL:
        frames = frames[[2, 1, 0], :, :, :]
    # Check whether the model is single-pathway or two-pathway.
    if cfg.MODEL.ARCH in cfg.MODEL.SINGLE_PATHWAY_ARCH:
        frame_list = [frames]
    elif cfg.MODEL.ARCH in cfg.MODEL.MULTI_PATHWAY_ARCH:
        fast_pathway = frames
        # Perform temporal sampling from the fast pathway
        # to get the slow pathway's input frames.
        slow_pathway = torch.index_select(
            frames,
            1,
            torch.linspace(
                0, frames.shape[1] - 1, frames.shape[1] // cfg.SLOWFAST.ALPHA
            ).long(),
        )
        frame_list = [slow_pathway, fast_pathway]
    else:
        raise NotImplementedError(
            "Model arch {} is not in {}".format(
                cfg.MODEL.ARCH,
                cfg.MODEL.SINGLE_PATHWAY_ARCH + cfg.MODEL.MULTI_PATHWAY_ARCH,
            )
        )
    return frame_list
```
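For two-pathway models, the slow pathway is a temporal subsample of the fast pathway: `frames.shape[1] // SLOWFAST.ALPHA` evenly spaced frames. With 32 fast frames and an assumed `ALPHA = 8` (used here only as an example value), the slow pathway keeps 4 frames. That matches the (3, 4, 256, 312) slow-pathway shape noted in process_cv2_inputs. Below is a stdlib sketch that works on frame indices instead of tensors.

```python
def slow_fast_indices(num_fast_frames, alpha):
    """Return (slow, fast) frame indices, mimicking pack_pathway_output."""
    fast = list(range(num_fast_frames))
    num_slow = num_fast_frames // alpha
    if num_slow == 1:
        slow = [0]
    else:
        # Same spacing as torch.linspace(0, T - 1, T // alpha).long()
        slow = [int(i * (num_fast_frames - 1) / (num_slow - 1)) for i in range(num_slow)]
    return slow, fast


slow, fast = slow_fast_indices(32, 8)
print(slow, len(fast))
```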

Back in process_cv2_inputs:

```python
inputs = pack_pathway_output(cfg, inputs)
# Add a batch dimension, e.g. (3, 4, 256, 312) -> (1, 3, 4, 256, 312)
inputs = [inp.unsqueeze(0) for inp in inputs]
return inputs
```

The processed inputs are returned to predictor.py (line 81):

```python
inputs = process_cv2_inputs(frames, self.cfg)
if bboxes is not None:
    # Build a box-index column of shape [num_boxes, 1], filled with 0.0.
    index_pad = torch.full(
        size=(bboxes.shape[0], 1),
        fill_value=float(0),
        device=bboxes.device,
    )

    # Pad frame index for each box: prepend the index column on the left,
    # e.g. (2, 4) -> (2, 5).
    bboxes = torch.cat([index_pad, bboxes], axis=1)
if self.cfg.NUM_GPUS > 0:
    # Transfer the data to the current GPU device.
    if isinstance(inputs, (list,)):
        for i in range(len(inputs)):
            inputs[i] = inputs[i].cuda(
                device=torch.device(self.gpu_id), non_blocking=True
            )
    else:
        inputs = inputs.cuda(
            device=torch.device(self.gpu_id), non_blocking=True
        )
if self.cfg.DETECTION.ENABLE and not bboxes.shape[0]:
    # No person detected: return empty predictions.
    preds = torch.tensor([])
else:
    # Run the SlowFast network. It does not compute boxes itself;
    # it uses the given boxes to produce action predictions.
    preds = self.model(inputs, bboxes)

if self.cfg.NUM_GPUS:
    preds = preds.cpu()
    if bboxes is not None:
        # detach() removes the tensor from the autograd graph.
        bboxes = bboxes.detach().cpu()

preds = preds.detach()
# Attach the prediction confidence matrix to the task.
task.add_action_preds(preds)
# Drop the index column; the box coordinates themselves are unchanged.
if bboxes is not None:
    task.add_bboxes(bboxes[:, 1:])

return task
```
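Prepending the zero column turns each (x1, y1, x2, y2) box into (batch_index, x1, y1, x2, y2). This is the layout RoIAlign-style operators expect. The demo feeds a single clip at a time, so every index is 0, and after prediction `bboxes[:, 1:]` strips the column again. Below is a list-based sketch of both steps.

```python
def pad_box_index(boxes, batch_index=0.0):
    # (N, 4) -> (N, 5): prepend the clip's index within the batch.
    return [[float(batch_index)] + list(box) for box in boxes]


def strip_box_index(padded):
    # (N, 5) -> (N, 4): equivalent of bboxes[:, 1:]
    return [row[1:] for row in padded]


boxes = [[10.0, 20.0, 50.0, 120.0], [210.0, 30.0, 260.0, 140.0]]
padded = pad_box_index(boxes)
print(padded[0])
print(strip_box_index(padded) == boxes)
```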

With the boxes and predictions in hand, control returns to predictor.py (line 141):

```python
# Record the task id.
self.async_vis.get_indices_ls.append(task.id)
# Hand the task to AsyncVis for visualization.
self.async_vis.put(task)
```

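4.8 Drawing Functions

Execution continues in async_predictor.py (line 141), where a worker's run loop takes the task from the queue:

```python
def run(self):
    """
    Run visualization asynchronously.
    """
    while True:
        task = self.task_queue.get()
        if isinstance(task, _StopToken):
            break
        # video_vis holds the thresholds and drawing tools configured earlier.
        frames = draw_predictions(task, self.video_vis)
```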

This jumps to the drawing function (line 276):

```python
def draw_predictions(task, video_vis):
    """
    Draw prediction for the given task.
    Args:
        task (TaskInfo object): task object that contain
            the necessary information for visualization. (e.g. frames, preds)
            All attributes must lie on CPU devices.
        video_vis (VideoVisualizer object): the video visualizer object.
    """
    # Get the results from the task.
    boxes = task.bboxes
    frames = task.frames
    preds = task.action_preds
    if boxes is not None:
        img_width = task.img_width
        img_height = task.img_height
        if boxes.device != torch.device("cpu"):
            boxes = boxes.cpu()
        # Map the boxes back to the original frame size.
        boxes = cv2_transform.revert_scaled_boxes(
            task.crop_size, boxes, img_height, img_width
        )

    # Keyframe index, shifted to account for the buffered frames
    keyframe_idx = len(frames) // 2 - task.num_buffer_frames
    # Range of frames to draw on
    draw_range = [
        keyframe_idx - task.clip_vis_size,
        keyframe_idx + task.clip_vis_size,
    ]
    # Buffered frames carried over from the previous clip
    buffer = frames[: task.num_buffer_frames]
    # Remaining frames of this clip
    frames = frames[task.num_buffer_frames :]
    if boxes is not None:
        if len(boxes) != 0:
            frames = video_vis.draw_clip_range(
                frames,
                preds,
                boxes,
                keyframe_idx=keyframe_idx,
                draw_range=draw_range,
            )
```
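The keyframe index and drawing window come down to simple integer arithmetic. Take 64 frames, 16 buffered frames and an assumed `CLIP_VIS_SIZE` of 10 (example values). The keyframe lands at index 16 of the non-buffered frames, and drawing covers indices 6 to 26. The sketch below also applies the clamp that `draw_clip_range` performs on the left edge.

```python
def draw_window(num_frames, num_buffer_frames, clip_vis_size):
    """Return (keyframe_idx, [start, end]) as computed in draw_predictions,
    with the left edge clamped to 0 as draw_clip_range does."""
    keyframe_idx = num_frames // 2 - num_buffer_frames
    start = max(0, keyframe_idx - clip_vis_size)
    end = keyframe_idx + clip_vis_size
    return keyframe_idx, [start, end]


print(draw_window(64, 16, 10))
print(draw_window(64, 0, 40))
```

The right edge is not clamped. Python slicing simply stops at the last frame, so an `end` beyond the clip is harmless.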

This enters the main box-drawing function (video_visualizer.py, line 514):

```python
def draw_clip_range(
    self,
    frames,
    preds,
    bboxes=None,
    text_alpha=0.5,
    ground_truth=False,
    keyframe_idx=None,
    draw_range=None,
    repeat_frame=1,
):
    """
    Draw predicted labels or ground truth classes to clip. Draw bounding boxes to clip
    if bboxes is provided. Boxes will gradually fade in and out the clip, centered around
    the clip's central frame, within the provided `draw_range`.
    Args:
        frames (array-like): video data in the shape (T, H, W, C).
        preds (tensor): a tensor of shape (num_boxes, num_classes) that contains all of the confidence scores
            of the model. For recognition task or for ground_truth labels, input shape can be (num_classes,).
        bboxes (Optional[tensor]): shape (num_boxes, 4) that contains the coordinates of the bounding boxes.
        text_alpha (float): transparency level of the box wrapped around text labels.
        ground_truth (bool): whether the provided bounding boxes are ground-truth.
        keyframe_idx (int): the index of keyframe in the clip.
        draw_range (Optional[list[int]]): only draw frames in range [start_idx, end_idx] inclusively in the clip.
            If None, draw on the entire clip.
        repeat_frame (int): repeat each frame in draw_range for `repeat_frame` time for slow-motion effect.
    """
    if draw_range is None:
        draw_range = [0, len(frames) - 1]
    if draw_range is not None:
        draw_range[0] = max(0, draw_range[0])
        # Frames outside the drawing range (left and right)
        left_frames = frames[: draw_range[0]]
        right_frames = frames[draw_range[1] + 1 :]
        # Frames inside the drawing range
        draw_frames = frames[draw_range[0] : draw_range[1] + 1]
    if keyframe_idx is None:
        keyframe_idx = len(frames) // 2

    img_ls = (
        list(left_frames)
        + self.draw_clip(
            draw_frames,
            preds,
            bboxes=bboxes,
            text_alpha=text_alpha,
            ground_truth=ground_truth,
            keyframe_idx=keyframe_idx - draw_range[0],
            repeat_frame=repeat_frame,
        )
        + list(right_frames)
    )
```

This enters draw_clip (line 568):

```python
def draw_clip(
    self,
    frames,
    preds,
    bboxes=None,
    text_alpha=0.5,
    ground_truth=False,
    keyframe_idx=None,
    repeat_frame=1,
):
    """
    Draw predicted labels or ground truth classes to clip. Draw bounding boxes to clip
    if bboxes is provided. Boxes will gradually fade in and out the clip, centered around
    the clip's central frame.
    Args:
        frames (array-like): video data in the shape (T, H, W, C).
        preds (tensor): a tensor of shape (num_boxes, num_classes) that contains all of the confidence scores
            of the model. For recognition task or for ground_truth labels, input shape can be (num_classes,).
        bboxes (Optional[tensor]): shape (num_boxes, 4) that contains the coordinates of the bounding boxes.
        text_alpha (float): transparency level of the box wrapped around text labels.
        ground_truth (bool): whether the provided bounding boxes are ground-truth.
        keyframe_idx (int): the index of keyframe in the clip.
        repeat_frame (int): repeat each frame in draw_range for `repeat_frame` time for slow-motion effect.
    """
    assert repeat_frame >= 1, "`repeat_frame` must be a positive integer."

    # Frame index sequence, with each index repeated repeat_frame times
    repeated_seq = range(0, len(frames))
    repeated_seq = list(
        itertools.chain.from_iterable(
            itertools.repeat(x, repeat_frame) for x in repeated_seq
        )
    )

    # Convert the video data to uint8 with values in [0, 255].
    frames, adjusted = self._adjust_frames_type(frames)
    if keyframe_idx is None:
        half_left = len(repeated_seq) // 2
        half_right = (len(repeated_seq) + 1) // 2
    else:
        mid = int((keyframe_idx / len(frames)) * len(repeated_seq))
        half_left = mid
        half_right = len(repeated_seq) - mid

    # Alpha values that ramp 0 -> 1 -> 0, used to fade the boxes in and out
    alpha_ls = np.concatenate(
        [
            np.linspace(0, 1, num=half_left),
            np.linspace(1, 0, num=half_right),
        ]
    )
    # Transparency level of the box wrapped around the text labels
    text_alpha = text_alpha
    frames = frames[repeated_seq]
    img_ls = []
    for alpha, frame in zip(alpha_ls, frames):
        draw_img = self.draw_one_frame(
            frame,
            preds,
            bboxes,
            alpha=alpha,
            text_alpha=text_alpha,
            ground_truth=ground_truth,
        )
```
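The fade-in/fade-out weights can be reproduced without NumPy. `linspace` below mirrors `np.linspace(start, stop, num)` for the endpoint-inclusive case. The rest follows `draw_clip`: each frame index is repeated `repeat_frame` times, and the ramp peaks at the scaled keyframe position.

```python
import itertools


def linspace(start, stop, num):
    # Endpoint-inclusive, like np.linspace(start, stop, num=num).
    if num <= 0:
        return []
    if num == 1:
        return [float(start)]
    return [start + (stop - start) * i / (num - 1) for i in range(num)]


def fade_alphas(num_frames, keyframe_idx=None, repeat_frame=1):
    repeated_seq = list(
        itertools.chain.from_iterable(
            itertools.repeat(x, repeat_frame) for x in range(num_frames)
        )
    )
    if keyframe_idx is None:
        half_left = len(repeated_seq) // 2
        half_right = (len(repeated_seq) + 1) // 2
    else:
        mid = int((keyframe_idx / num_frames) * len(repeated_seq))
        half_left, half_right = mid, len(repeated_seq) - mid
    return repeated_seq, linspace(0, 1, half_left) + linspace(1, 0, half_right)


seq, alphas = fade_alphas(4, keyframe_idx=2)
print(seq, alphas)
```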

This enters the per-frame drawing function (line 404), which is called once per frame with the fading alpha:

```python
def draw_one_frame(
    self,
    frame,
    preds,
    bboxes=None,
    alpha=0.5,
    text_alpha=0.7,
    ground_truth=False,
):
    """
    Draw labels and bounding boxes for one image. By default, predicted labels are drawn in
    the top left corner of the image or corresponding bounding boxes. For ground truth labels
    (setting True for ground_truth flag), labels will be drawn in the bottom left corner.
    Args:
        frame (array-like): a tensor or numpy array of shape (H, W, C), where H and W correspond to
            the height and width of the image respectively. C is the number of
            color channels. The image is required to be in RGB format since that
            is a requirement of the Matplotlib library. The image is also expected
            to be in the range [0, 255].
        preds (tensor or list): If ground_truth is False, provide a float tensor of shape (num_boxes, num_classes)
            that contains all of the confidence scores of the model.
            For recognition task, input shape can be (num_classes,). To plot true label (ground_truth is True),
            preds is a list contains int32 of the shape (num_boxes, true_class_ids) or (true_class_ids,).
        bboxes (Optional[tensor]): shape (num_boxes, 4) that contains the coordinates of the bounding boxes.
        alpha (Optional[float]): transparency level of the bounding boxes.
        text_alpha (Optional[float]): transparency level of the box wrapped around text labels.
        ground_truth (bool): whether the provided bounding boxes are ground-truth.
    """
    if isinstance(preds, torch.Tensor):
        if preds.ndim == 1:
            preds = preds.unsqueeze(0)
        n_instances = preds.shape[0]
    elif isinstance(preds, list):
        n_instances = len(preds)
    else:
        logger.error("Unsupported type of prediction input.")
        return

    if ground_truth:
        top_scores, top_classes = [None] * n_instances, preds
    # Pair the confidence scores with their classes.
    elif self.mode == "top-k":
        top_scores, top_classes = torch.topk(preds, k=self.top_k)
        top_scores, top_classes = top_scores.tolist(), top_classes.tolist()
    elif self.mode == "thres":
        top_scores, top_classes = [], []
        for pred in preds:
            mask = pred >= self.thres
            top_scores.append(pred[mask].tolist())
            top_class = torch.squeeze(torch.nonzero(mask), dim=-1).tolist()
            top_classes.append(top_class)

    # Create labels top k predicted classes with their scores.
    text_labels = []
    for i in range(n_instances):
        text_labels.append(
            _create_text_labels(
                top_classes[i],
                top_scores[i],
                self.class_names,
                ground_truth=ground_truth,
            )
        )
```
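Both selection modes can be sketched on one box's score list:

- `top_k` mirrors what `torch.topk` returns, with the highest scores first.
- `above_thres` mirrors the mask plus `torch.nonzero` path, returning classes in index order.

This simplified stdlib version handles neither ties nor per-class threshold arrays. The last lines format the results the way `_create_text_labels` does, using hypothetical class names.

```python
def top_k(scores, k):
    # Indices of the k highest scores, highest first (like torch.topk).
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    return [scores[i] for i in order], order


def above_thres(scores, thres):
    # Keep every class whose score reaches the threshold, in class order.
    classes = [i for i, s in enumerate(scores) if s >= thres]
    return [scores[i] for i in classes], classes


scores = [0.10, 0.85, 0.40, 0.62]
print(top_k(scores, 2))
print(above_thres(scores, 0.4))

names = ["stand", "sit", "squat", "fall"]  # hypothetical class names
s, c = above_thres(scores, 0.4)
print(["[{:.2f}] {}".format(v, names[i]) for v, i in zip(s, c)])
```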

This enters _create_text_labels (line 18):

```python
def _create_text_labels(classes, scores, class_names, ground_truth=False):
    """
    Create text labels.
    Args:
        classes (list[int]): a list of class ids for each example.
        scores (list[float] or None): list of scores for each example.
        class_names (list[str]): a list of class names, ordered by their ids.
        ground_truth (bool): whether the labels are ground truth.
    Returns:
        labels (list[str]): formatted text labels.
    """
    try:
        labels = [class_names[i] for i in classes]
    except IndexError:
        logger.error("Class indices get out of range: {}".format(classes))
        return None

    if ground_truth:
        labels = ["[{}] {}".format("GT", label) for label in labels]
    elif scores is not None:
        assert len(classes) == len(scores)
        # Build labels such as "[0.90] squat".
        labels = [
            "[{:.2f}] {}".format(s, label) for s, label in zip(scores, labels)
        ]
    return labels
```

With the labels created, control returns to the caller (line 467):

```python
for i in range(n_instances):
    text_labels.append(
        _create_text_labels(
            top_classes[i],
            top_scores[i],
            self.class_names,
            ground_truth=ground_truth,
        )
    )
# ImgVisualizer builds on detectron2's visualization tools.
frame_visualizer = ImgVisualizer(frame, meta=None)
# Font size: grows with the image size, clamped to [5, 9].
font_size = min(
    max(np.sqrt(frame.shape[0] * frame.shape[1]) // 35, 5), 9
)
top_corner = not ground_truth
if bboxes is not None:
    assert len(preds) == len(
        bboxes
    ), "Encounter {} predictions and {} bounding boxes".format(
        len(preds), len(bboxes)
    )
    for i, box in enumerate(bboxes):
        text = text_labels[i]
        pred_class = top_classes[i]
        colors = [self._get_color(pred) for pred in pred_class]

        box_color = "r" if ground_truth else "g"
        line_style = "--" if ground_truth else "-."
        # Draw the box with the internal helper.
        frame_visualizer.draw_box(
            box,
            alpha=alpha,
            edge_color=box_color,
            line_style=line_style,
        )
        frame_visualizer.draw_multiple_text(
            text,
            box,
            top_corner=top_corner,
            font_size=font_size,
            box_facecolors=colors,
            alpha=text_alpha,
        )
```
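The font-size rule scales with sqrt(H × W), the geometric mean of the frame's height and width, and clamps the result to [5, 9]. Worked by hand for a 480 × 640 frame: sqrt(307200) ≈ 554.3, and 554.3 // 35 = 15, which is clamped to 9. Below is a stdlib version of the same formula, together with the per-label box height used later in `draw_multiple_text`.

```python
import math


def label_font_size(height, width):
    # min(max(sqrt(H * W) // 35, 5), 9), as in draw_one_frame
    return min(max(math.sqrt(height * width) // 35, 5), 9)


def text_box_width(font_size):
    # Vertical space reserved per label in draw_multiple_text
    return font_size + font_size // 2


print(label_font_size(480, 640), label_font_size(100, 100), label_font_size(250, 250))
print(text_box_width(9))
```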

This enters the label-drawing function (line 109):

```python
def draw_multiple_text(
    self,
    text_ls,
    box_coordinate,
    *,
    top_corner=True,
    font_size=None,
    color="w",
    box_facecolors="black",
    alpha=0.5,
):
    """
    Draw a list of text labels for some bounding box on the image.
    Args:
        text_ls (list of strings): a list of text labels.
        box_coordinate (tensor): shape (4,). The (x_left, y_top, x_right, y_bottom)
            coordinates of the box.
        top_corner (bool): If True, draw the text labels at (x_left, y_top) of the box.
            Else, draw labels at (x_left, y_bottom).
        font_size (Optional[int]): font of the text. If not provided, a font size
            proportional to the image width is calculated and used.
        color (str): color of the text. Refer to `matplotlib.colors` for full list
            of formats that are accepted.
        box_facecolors (str): colors of the box wrapped around the text. Refer to
            `matplotlib.colors` for full list of formats that are accepted.
        alpha (float): transparency level of the box.
    """
    if not isinstance(box_facecolors, list):
        box_facecolors = [box_facecolors] * len(text_ls)
    assert len(box_facecolors) == len(
        text_ls
    ), "Number of colors provided is not equal to the number of text labels."
    if not font_size:
        font_size = self._default_font_size
    text_box_width = font_size + font_size // 2
    # If the texts does not fit in the assigned location,
    # we split the text and draw it in another place.
    if top_corner:
        num_text_split = self._align_y_top(
            box_coordinate, len(text_ls), text_box_width
        )
        y_corner = 1
```

This enters _align_y_top:

```python
def _align_y_top(self, box_coordinate, num_text, textbox_width):
    """
    Calculate the number of text labels to plot on top of the box
    without going out of frames.
    Args:
        box_coordinate (array-like): shape (4,). The (x_left, y_top, x_right, y_bottom) coordinates of the box.
        num_text (int): the number of text labels to plot.
        textbox_width (float): the width of the box wrapped around text label.
    """
    dist_to_top = box_coordinate[1]
    num_text_top = dist_to_top // textbox_width

    if isinstance(num_text_top, torch.Tensor):
        num_text_top = int(num_text_top.item())

    return min(num_text, num_text_top)
```

Having computed how many labels fit above the box, control returns to line 146:

```python
if top_corner:
    num_text_split = self._align_y_top(
        box_coordinate, len(text_ls), text_box_width
    )
    y_corner = 1
else:
    num_text_split = len(text_ls) - self._align_y_bottom(
        box_coordinate, len(text_ls), text_box_width
    )
    y_corner = 3

# Pair each label in text_ls with its color from box_facecolors as tuples,
# then sort the pairs by label text in descending order.
text_color_sorted = sorted(
    zip(text_ls, box_facecolors), key=lambda x: x[0], reverse=True
)
# Unzip the sorted pairs back into two lists.
if len(text_color_sorted) != 0:
    text_ls, box_facecolors = zip(*text_color_sorted)
else:
    text_ls, box_facecolors = [], []
text_ls, box_facecolors = list(text_ls), list(box_facecolors)
self.draw_multiple_text_upward(
    # Take the first num_text_split labels, then reverse their order.
    text_ls[:num_text_split][::-1],
    box_coordinate,
    y_corner=y_corner,
    font_size=font_size,
    color=color,
    box_facecolors=box_facecolors[:num_text_split][::-1],
    alpha=alpha,
)
```
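Together, `_align_y_top`, the descending sort and the split decide which labels go above the box. Every predicted label starts with a fixed-width score such as "[0.90]". Assuming all scores lie in [0, 1] and are formatted with two decimals, sorting the strings in descending order therefore puts the highest-scoring labels first. Below is a stdlib sketch of the top-corner case; it omits the colors that the original sorts alongside the labels.

```python
def split_labels(labels, y_top, font_size):
    """Return (upward, downward) label lists for the top-corner case."""
    text_box_width = font_size + font_size // 2
    # _align_y_top: how many labels fit between the box top and the image top
    num_text_split = min(len(labels), int(y_top // text_box_width))
    ordered = sorted(labels, reverse=True)
    upward = ordered[:num_text_split][::-1]  # drawn bottom-up from the box edge
    downward = ordered[num_text_split:]
    return upward, downward


labels = ["[0.50] sit", "[0.90] stand", "[0.70] squat"]
print(split_labels(labels, y_top=30, font_size=9))
```

The upward list is drawn starting at the box edge and moving up, so "[0.90] stand" ends up on top of the stack. The label that does not fit is passed to `draw_multiple_text_downward`.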

This enters draw_multiple_text_upward (line 184):

```python
def draw_multiple_text_upward(
    self,
    text_ls,
    box_coordinate,
    *,
    y_corner=1,
    font_size=None,
    color="w",
    box_facecolors="black",
    alpha=0.5,
):
    """
    Draw a list of text labels for some bounding box on the image in upward direction.
    The next text label will be on top of the previous one.
    Args:
        text_ls (list of strings): a list of text labels.
        box_coordinate (tensor): shape (4,). The (x_left, y_top, x_right, y_bottom)
            coordinates of the box.
        y_corner (int): Value of either 1 or 3. Indicate the index of the y-coordinate of
            the box to draw labels around.
        font_size (Optional[int]): font of the text. If not provided, a font size
            proportional to the image width is calculated and used.
        color (str): color of the text. Refer to `matplotlib.colors` for full list
            of formats that are accepted.
        box_facecolors (str or list of strs): colors of the box wrapped around the text. Refer to
            `matplotlib.colors` for full list of formats that are accepted.
        alpha (float): transparency level of the box.
    """
    if not isinstance(box_facecolors, list):
        box_facecolors = [box_facecolors] * len(text_ls)
    assert len(box_facecolors) == len(
        text_ls
    ), "Number of colors provided is not equal to the number of text labels."

    assert y_corner in [1, 3], "Y_corner must be either 1 or 3"
    if not font_size:
        font_size = self._default_font_size

    # Pick an x coordinate from the box so the labels stay inside the frame.
    # By default the left x coordinate is used and the text is left-aligned;
    # if the box is too close to the right edge of the image, the right
    # x coordinate is used and the text is right-aligned.
    # The result tells us whether labels start from the left or the right side.
    x, horizontal_alignment = self._align_x_coordinate(box_coordinate)
    # y coordinate of the box's top (y_corner=1) or bottom (y_corner=3) edge
    y = box_coordinate[y_corner].item()
    # Draw the labels.
    for i, text in enumerate(text_ls):
        self.draw_text(
            text,
            (x, y),
            font_size=font_size,
            color=color,
            horizontal_alignment=horizontal_alignment,
            vertical_alignment="bottom",
            box_facecolor=box_facecolors[i],
            alpha=alpha,
        )
```

This enters draw_text (line 61):

```python
def draw_text(
    self,
    text,
    position,
    *,
    font_size=None,
    color="w",
    horizontal_alignment="center",
    vertical_alignment="bottom",
    box_facecolor="black",
    alpha=0.5,
):
    """
    Draw text at the specified position.
    Args:
        text (str): the text to draw on image.
        position (list of 2 ints): the x,y coordinate to place the text.
        font_size (Optional[int]): font of the text. If not provided, a font size
            proportional to the image width is calculated and used.
        color (str): color of the text. Refer to `matplotlib.colors` for full list
            of formats that are accepted.
        horizontal_alignment (str): see `matplotlib.text.Text`.
        vertical_alignment (str): see `matplotlib.text.Text`.
        box_facecolor (str): color of the box wrapped around the text. Refer to
            `matplotlib.colors` for full list of formats that are accepted.
        alpha (float): transparency level of the box.
    """
    if not font_size:
        font_size = self._default_font_size
    x, y = position
    # The actual drawing call (matplotlib's Axes.text)
    self.output.ax.text(
        x,
        y,
        text,
        size=font_size * self.output.scale,
        family="monospace",
        bbox={
            "facecolor": box_facecolor,
            "alpha": alpha,
            "pad": 0.7,
            "edgecolor": "none",
        },
        verticalalignment=vertical_alignment,
        horizontalalignment=horizontal_alignment,
        color=color,
        zorder=10,
    )
```

Back at line 235:

```python
for i, text in enumerate(text_ls):
    self.draw_text(
        text,
        (x, y),
        font_size=font_size,
        color=color,
        horizontal_alignment=horizontal_alignment,
        vertical_alignment="bottom",
        box_facecolor=box_facecolors[i],
        alpha=alpha,
    )
    # Move up by one text-box height for the next label.
    y -= font_size + font_size // 2
```
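Each label is drawn at the current `y`, and then `y` moves up by one text-box height. The positions therefore form a decreasing sequence in image coordinates, where y grows downward. The sketch below lists the y coordinates produced for n labels.

```python
def upward_positions(y_start, num_labels, font_size):
    # y coordinate of each label, as in draw_multiple_text_upward
    step = font_size + font_size // 2
    positions = []
    y = y_start
    for _ in range(num_labels):
        positions.append(y)
        y -= step
    return positions


print(upward_positions(100, 3, 9))
```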

Back at line 174:

```python
# Draw the labels upward from the box edge;
# each subsequent label is placed on top of the previous one.
self.draw_multiple_text_upward(
    text_ls[:num_text_split][::-1],
    box_coordinate,
    y_corner=y_corner,
    font_size=font_size,
    color=color,
    box_facecolors=box_facecolors[:num_text_split][::-1],
    alpha=alpha,
)
# Draw the remaining labels downward; if there are none, nothing is drawn.
self.draw_multiple_text_downward(
    text_ls[num_text_split:],
    box_coordinate,
    y_corner=y_corner,
    font_size=font_size,
    color=color,
    box_facecolors=box_facecolors[num_text_split:],
    alpha=alpha,
)
```

That completes the main drawing path. The rest of the program repeats this process in a loop for each clip.
