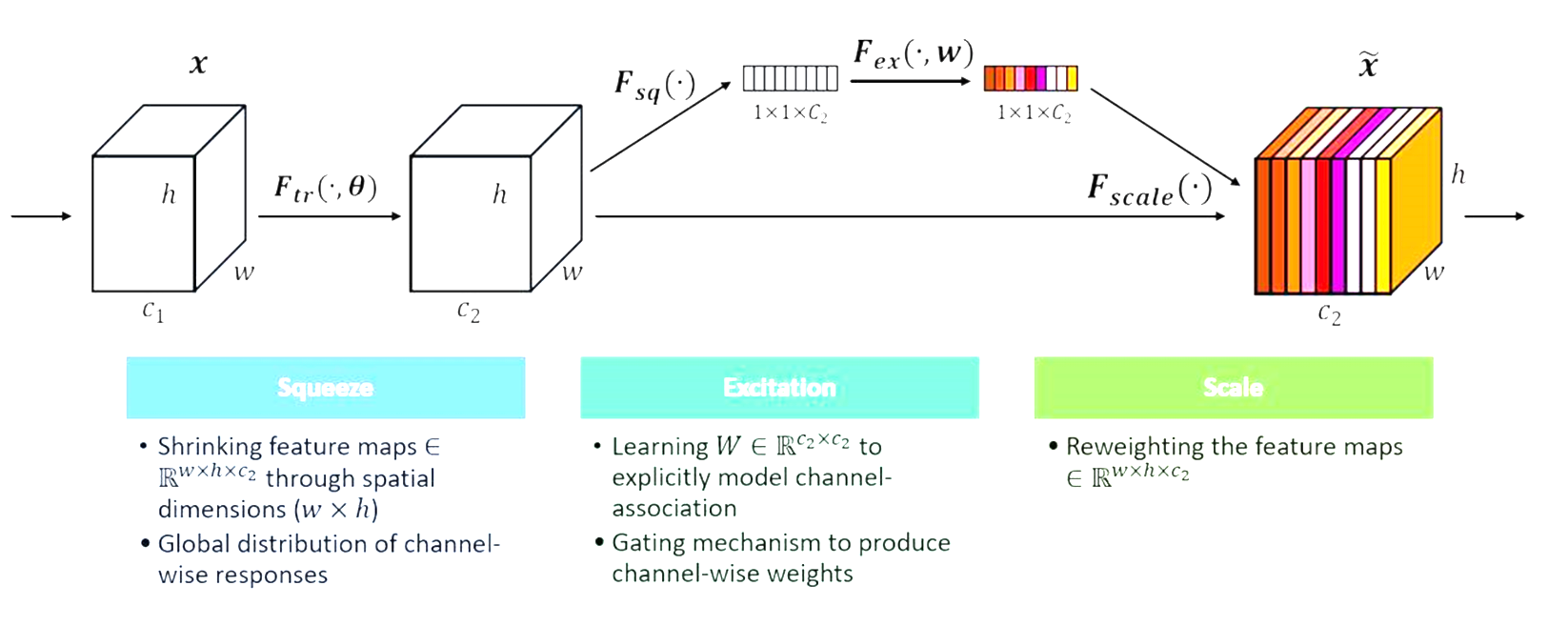

1 SENet

Note: the code below covers SE implementations for both 1D and 2D convolutions.

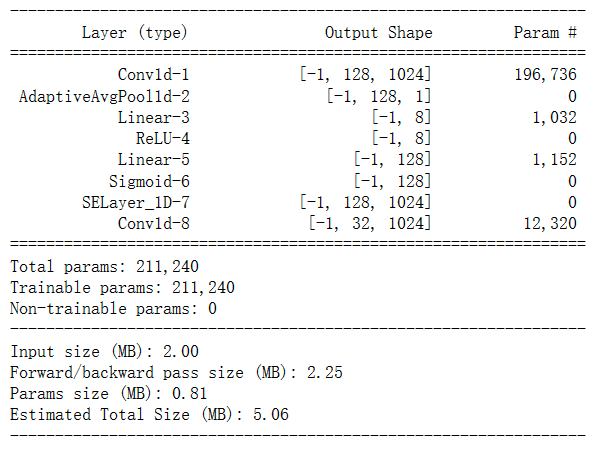
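The SELayer classes below implement the three steps of an SE block. Here they are written out for an input with C channels. The notation loosely follows the SE paper, with bias terms omitted, δ = ReLU, σ = sigmoid, and r = the `reduction` argument.

```latex
% Squeeze: per-channel global average (1D input of length L, or 2D input of size H x W)
z_c = \frac{1}{L}\sum_{i=1}^{L} u_c(i) \quad \text{(1D)}, \qquad
z_c = \frac{1}{HW}\sum_{i=1}^{H}\sum_{j=1}^{W} u_c(i,j) \quad \text{(2D)}

% Excitation: bottleneck MLP with a sigmoid gate
s = \sigma\big(W_2\,\delta(W_1 z)\big), \qquad
W_1 \in \mathbb{R}^{\frac{C}{r}\times C},\quad W_2 \in \mathbb{R}^{C\times\frac{C}{r}}

% Scale: reweight each channel
\tilde{x}_c = s_c \cdot u_c
```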

1.1 Implementation for 1D convolutions: code

```python
import torch
import torch.nn as nn


class SELayer_1D(nn.Module):
    """Constructs an SE module for 1D feature maps.

    Args:
        channel: Number of channels of the input feature map
        reduction: Reduction ratio of the bottleneck in the excitation step
    """

    def __init__(self, channel, reduction=16):
        super(SELayer_1D, self).__init__()
        # Adaptive average pooling: reduces each channel's feature map to length 1
        self.avg_pool = nn.AdaptiveAvgPool1d(1)
        self.fc = nn.Sequential(
            nn.Linear(channel, channel // reduction),  # first linear layer: channel -> channel // reduction
            nn.ReLU(inplace=True),                      # ReLU activation
            nn.Linear(channel // reduction, channel),  # second linear layer: channel // reduction -> channel
            nn.Sigmoid(),                               # Sigmoid maps the channel weights into (0, 1)
        )

    def forward(self, x):
        b, c, _ = x.size()  # b: batch size, c: number of channels, _: length
        y = self.avg_pool(x).view(b, c)  # average-pool each channel -> (batch size, channel)
        y = self.fc(y).view(b, c, 1)     # FC layers + activations, reshaped to (batch size, channel, 1)
        return x * y.expand_as(x)         # rescale the input channel-wise and return the result
```
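Below is a minimal shape walk-through of the forward pass above. It uses standalone tensors with the same channel count and reduction as the Model_1d example. The batch size of 2 and length of 1024 are arbitrary choices. It also shows that `expand_as` is optional, because `y` of shape (b, c, 1) already broadcasts against `x` of shape (b, c, l).

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

b, c, l, reduction = 2, 128, 1024, 16  # illustrative sizes matching Model_1d
x = torch.randn(b, c, l)

# Squeeze: average over the length dimension -> (b, c)
z = nn.AdaptiveAvgPool1d(1)(x).view(b, c)

# Excitation: bottleneck MLP with a sigmoid gate -> (b, c), reshaped to (b, c, 1)
fc = nn.Sequential(
    nn.Linear(c, c // reduction),
    nn.ReLU(inplace=True),
    nn.Linear(c // reduction, c),
    nn.Sigmoid(),
)
s = fc(z).view(b, c, 1)

# Scale: broadcasting stretches the trailing size-1 dimension across the length axis,
# so the explicit expand_as gives the same result
out_expand = x * s.expand_as(x)
out_broadcast = x * s

print(z.shape, s.shape, out_broadcast.shape)
# torch.Size([2, 128]) torch.Size([2, 128, 1]) torch.Size([2, 128, 1024])
```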

```python
import torch
import torch.nn as nn
from torchsummary import summary


class Model_1d(nn.Module):
    def __init__(self):
        super(Model_1d, self).__init__()
        self.conv = nn.Conv1d(512, 128, kernel_size=3, padding=1)
        self.se = SELayer_1D(128)  # SE block on the 128-channel output of self.conv
        self.conv1 = nn.Conv1d(128, 32, kernel_size=3, padding=1)

    def forward(self, x):
        x = self.conv(x)
        x = self.se(x)
        x = self.conv1(x)
        return x


model = Model_1d()
# input_size excludes the batch dimension: (channels, length); the model lives on the CPU
summary(model, (512, 1024), device="cpu")
```
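You can cross-check the per-layer parameter counts that torchsummary reports by recomputing them by hand. This sketch uses hypothetical helper functions (`conv1d_params`, `se_params`) and checks them against PyTorch's own modules. It also shows how few parameters the SE block adds compared with the convolutions around it.

```python
import torch.nn as nn


def conv1d_params(c_in: int, c_out: int, k: int) -> int:
    """Weights (c_out * c_in * k) plus one bias per output channel."""
    return c_out * c_in * k + c_out


def se_params(c: int, reduction: int) -> int:
    """Two nn.Linear layers, c -> c // r and c // r -> c, both with bias."""
    h = c // reduction
    return (c * h + h) + (h * c + c)


def count(m: nn.Module) -> int:
    return sum(p.numel() for p in m.parameters())


# Layer sizes copied from Model_1d above
conv = conv1d_params(512, 128, 3)   # 196,736
se = se_params(128, 16)             # 2,184
conv1 = conv1d_params(128, 32, 3)   # 12,320
total = conv + se + conv1

# The closed-form counts agree with PyTorch's own modules
assert conv == count(nn.Conv1d(512, 128, kernel_size=3, padding=1))
assert se == count(nn.Sequential(nn.Linear(128, 8), nn.ReLU(), nn.Linear(8, 128), nn.Sigmoid()))
assert conv1 == count(nn.Conv1d(128, 32, kernel_size=3, padding=1))

print(f"{total:,}")  # 211,240
```

The SE block accounts for about 1% of the total here.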

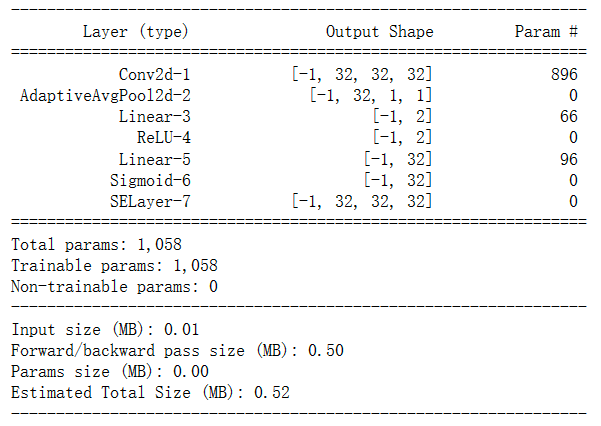

1.2 Implementation for 2D convolutions: code

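```python
import torch
import torch.nn as nn


class SELayer(nn.Module):
    """SE module for 2D feature maps of shape (batch, channels, height, width)."""

    def __init__(self, in_planes, reduction=16):
        super(SELayer, self).__init__()
        self.avg_pool = nn.AdaptiveAvgPool2d(1)  # squeeze: global average over H x W
        self.fc = nn.Sequential(                 # excitation: bottleneck MLP + sigmoid gate
            nn.Linear(in_planes, in_planes // reduction),
            nn.ReLU(inplace=True),
            nn.Linear(in_planes // reduction, in_planes),
            nn.Sigmoid(),
        )

    def forward(self, x):
        b, c, _, _ = x.size()
        y = self.avg_pool(x).view(b, c)   # (b, c)
        y = self.fc(y).view(b, c, 1, 1)   # (b, c, 1, 1) channel weights
        return x * y.expand_as(x)         # scale: reweight every channel
```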
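The 2D layer differs from SELayer_1D only in how it pools and reshapes. Averaging over H x W is the same as averaging over a single axis of length H*W. The sketch below checks this numerically on a random tensor with illustrative sizes, using one shared excitation MLP for both paths.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
b, c, h, w = 4, 32, 8, 8
x = torch.randn(b, c, h, w)

# Squeeze in 2D: global average over H x W -> (b, c)
pooled_2d = nn.AdaptiveAvgPool2d(1)(x).view(b, c)
# Same statistic via the 1D pool after merging H and W into one axis
pooled_1d = nn.AdaptiveAvgPool1d(1)(x.flatten(2)).view(b, c)

# Shared excitation MLP (same shape as SELayer(32) with reduction=16)
fc = nn.Sequential(nn.Linear(c, c // 16), nn.ReLU(), nn.Linear(c // 16, c), nn.Sigmoid())
gate = fc(pooled_2d)

out_2d = x * gate.view(b, c, 1, 1)                       # SELayer-style scaling
out_1d = (x.flatten(2) * gate.view(b, c, 1)).view_as(x)  # SELayer_1D-style scaling, reshaped back

print(torch.allclose(pooled_2d, pooled_1d, atol=1e-6), torch.allclose(out_2d, out_1d))
# True True
```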

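```python
import torch.nn as nn
from torchsummary import summary


class ConvNet(nn.Module):
    def __init__(self):
        super(ConvNet, self).__init__()
        self.conv = nn.Conv2d(3, 32, kernel_size=3, stride=1, padding=1)
        self.selayer = SELayer(32)  # SE block on the 32 output channels

    def forward(self, x):
        x = self.conv(x)
        x = self.selayer(x)
        return x


model = ConvNet()
# input_size excludes the batch dimension: (channels, height, width)
summary(model, (3, 32, 32), device="cpu")
```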
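With `in_planes=32` and the default `reduction=16`, the SE bottleneck in ConvNet has only 32 // 16 = 2 hidden units. For fewer than 16 channels, the integer division would give 0. It is therefore worth choosing `reduction` with the channel count in mind. The sketch below rebuilds the same layers to show the bottleneck width and the parameter counts you can compare against the summary.

```python
import torch.nn as nn

in_planes, reduction = 32, 16
hidden = in_planes // reduction  # width of the SE bottleneck: only 2 units here

conv = nn.Conv2d(3, 32, kernel_size=3, stride=1, padding=1)
se_fc = nn.Sequential(
    nn.Linear(in_planes, hidden), nn.ReLU(inplace=True),
    nn.Linear(hidden, in_planes), nn.Sigmoid(),
)


def n_params(m: nn.Module) -> int:
    return sum(p.numel() for p in m.parameters())


print(hidden, n_params(conv), n_params(se_fc), n_params(conv) + n_params(se_fc))
# 2 896 162 1058
```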

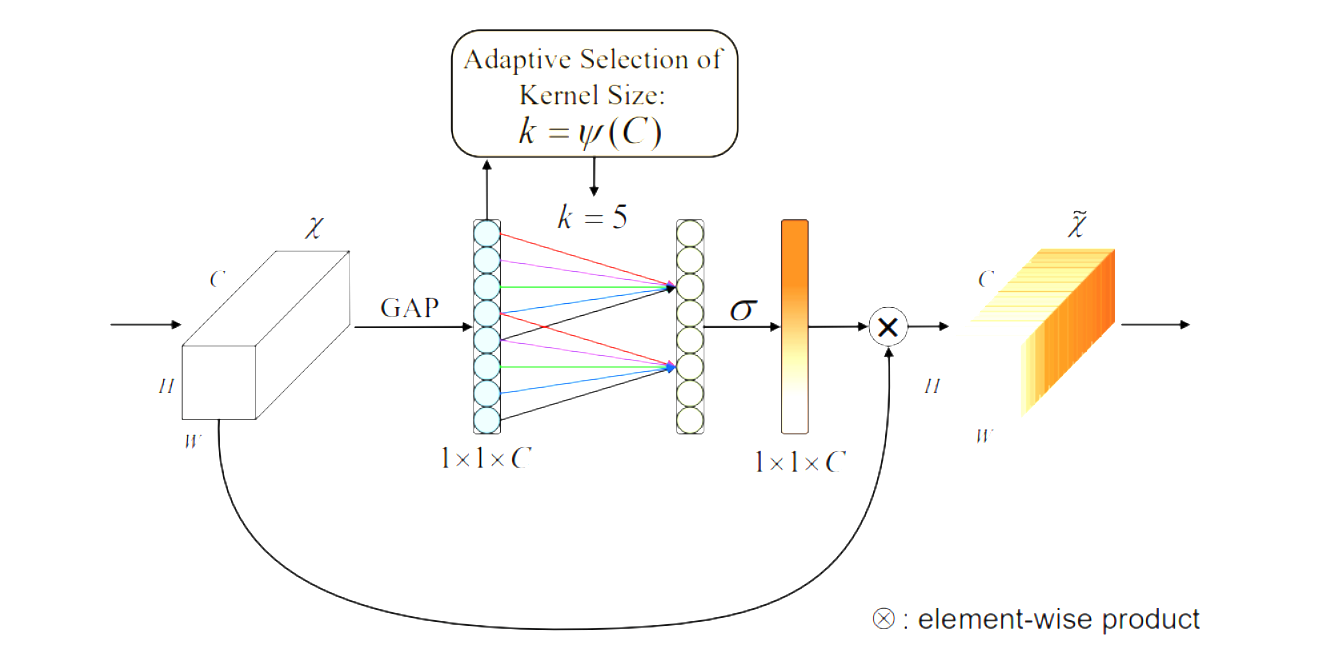

2 ECANet

Note: the code below covers ECA implementations for both 1D and 2D convolutions.
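For reference, the ECA-Net paper (Wang et al., 2020) drops SE's fully connected bottleneck. Instead, it computes the channel weights with a single 1D convolution of kernel size k over the pooled channel descriptor y, and derives k from the channel count C. The paper uses γ = 2 and b = 1, and |t|_odd denotes the nearest odd number to t.

```latex
% Global average pooling per channel (2D case)
y_c = \frac{1}{HW}\sum_{i=1}^{H}\sum_{j=1}^{W} x_c(i,j)

% Channel weights from a 1D convolution across neighbouring channels
\omega = \sigma\big(\mathrm{C1D}_k(y)\big)

% Adaptive kernel size
k = \psi(C) = \left|\frac{\log_2 C}{\gamma} + \frac{b}{\gamma}\right|_{\text{odd}}, \qquad \gamma = 2,\; b = 1
```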

2.1 Implementation for 1D convolutions: code

import torch

import torch.nn as nn

class ECALayer1D(nn.Module):

def __init__(self, in_planes, reduction=16):

"""

ECALayer1D初始化方法

参数:

- in_planes: 输入的特征图的通道数

- reduction: 比率

"""

super(ECALayer1D, self).__init__()

# 平均池化层,取每一通道的平均值

self.avg_pool = nn.AdaptiveAvgPool1d(1)

# 全连接层,用于计算权重

self.fc = nn.Sequential(

# 第一层线性层,将特征图的通道数除以reduction

nn.Linear(in_planes, in_planes // reduction),

# ReLU激活函数

nn.ReLU(inplace=True),

# 第二层线性层,将输出的结果乘以in_planes

nn.Linear(in_planes // reduction, in_planes),

)

def forward(self, x):

"""

前向传播函数

参数:

- x: 输入的特征图

"""

# 获取batch_size,通道数和长度

b, c, l = x.size()

# 对特征图进行平均池化,并将结果reshape成(batch_size,通道数)的形式

y = self.avg_pool(x).view(b, c)

# 计算权重

y = self.fc(y).view(b, c, 1)

# 对权重进行ReLU激活,权重<0的位置赋值为0

y = torch.where(y >= 0, y, torch.zeros_like(y))

# 将权重扩展到与特征图相同的大小并乘以特征图

return x * y.expand_as(x)import torch

import torch.nn as nn

class Model_1d(nn.Module):

def __init__(self):

super(Model_1d, self).__init__()

self.conv = nn.Conv1d(512, 128, kernel_size=3, padding=1)

self.eca = ECALayer1D(128)

self.conv1 = nn.Conv1d(128, 32, kernel_size=3, padding=1)

def forward(self, x):

x = self.conv(x)

x = self.eca(x)

x = self.conv1(x)

return xfrom torchsummary import summary

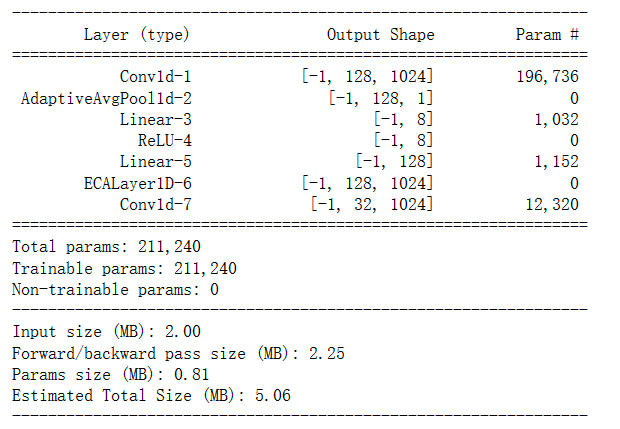

model = Model_1d()

summary(model,(512, 1024))
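As written, `ECALayer1D` above keeps SE's two-layer bottleneck (including the `reduction` ratio) and replaces the sigmoid with a ReLU-style clamp. Its weights are therefore not bounded to (0, 1), and the layer is closer to an SE variant than to the module described in the ECA-Net paper.

Below is a minimal sketch of the paper's formulation for (batch, channels, length) inputs. The names `ECALayer1DPaper` and `eca_kernel_size` are hypothetical. γ = 2 and b = 1 follow the paper. `eca_kernel_size` rounds t down and bumps even values up by one, which is one common way to code |t|_odd.

```python
import math

import torch
import torch.nn as nn


def eca_kernel_size(channels: int, gamma: int = 2, b: int = 1) -> int:
    """Adaptive kernel size from log2(C)/gamma + b/gamma, forced to be odd."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1


class ECALayer1DPaper(nn.Module):
    """Hypothetical name: ECA attention for (batch, channels, length) inputs."""

    def __init__(self, channels: int, gamma: int = 2, b: int = 1):
        super().__init__()
        k = eca_kernel_size(channels, gamma, b)
        self.avg_pool = nn.AdaptiveAvgPool1d(1)
        # One shared 1D conv slides over the channel axis: no dimensionality reduction
        self.conv = nn.Conv1d(1, 1, kernel_size=k, padding=(k - 1) // 2, bias=False)
        self.sigmoid = nn.Sigmoid()

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        y = self.avg_pool(x)                  # (b, c, 1)
        y = self.conv(y.transpose(1, 2))      # treat channels as a sequence: (b, 1, c)
        y = self.sigmoid(y.transpose(1, 2))   # back to (b, c, 1), weights in (0, 1)
        return x * y                          # broadcast over the length axis


layer = ECALayer1DPaper(128)
x = torch.randn(2, 128, 1024)
out = layer(x)
print(out.shape, layer.conv.kernel_size, sum(p.numel() for p in layer.parameters()))
# torch.Size([2, 128, 1024]) (5,) 5
```

With C = 128, the formula gives k = 5. The whole attention step therefore has 5 weights, versus 2,184 for the two linear layers in `ECALayer1D(128)`.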

2.2 Implementation for 2D convolutions: code

import torch

import torch.nn as nn

class ECALayer(nn.Module):

def __init__(self, in_planes, reduction=16):

super(ECALayer, self).__init__()

self.avg_pool = nn.AdaptiveAvgPool2d(1)

self.fc = nn.Sequential(

nn.Linear(in_planes, in_planes // reduction),

nn.ReLU(inplace=True),

nn.Linear(in_planes // reduction, in_planes),

)

def forward(self, x):

b, c, _, _ = x.size()

y = self.avg_pool(x).view(b, c)

y = self.fc(y).view(b, c, 1, 1)

y = torch.where(y >= 0, y, torch.zeros_like(y))

return x * y.expand_as(x)class ConvNet(nn.Module):

def __init__(self):

super(ConvNet, self).__init__()

self.conv = nn.Conv2d(3, 32, kernel_size=3, stride=1, padding=1)

self.ecalayer = ECALayer(32)

def forward(self, x):

x = self.conv(x)

x = self.ecalayer(x)

return xfrom torchsummary import summary

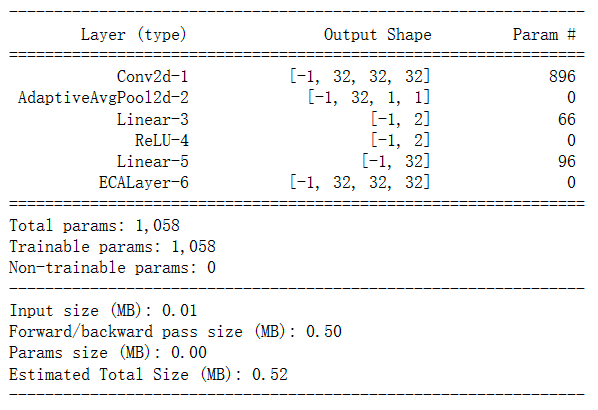

model = ConvNet()

summary(model,(3,32,32))
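For comparison, here is a 2D ECA block in the style of the commonly circulated reference code. It applies global average pooling, then a bias-free Conv1d of kernel size k over the channel axis, then a sigmoid gate. The class name `ECALayer2DSketch` is hypothetical. `k_size=3` is fixed here as an assumption; for 32 channels, the adaptive formula with γ = 2, b = 1 also yields 3.

```python
import torch
import torch.nn as nn


class ECALayer2DSketch(nn.Module):
    """Hypothetical name: ECA attention for (batch, channels, height, width) inputs."""

    def __init__(self, k_size: int = 3):  # fixed kernel size, an assumption
        super().__init__()
        self.avg_pool = nn.AdaptiveAvgPool2d(1)
        self.conv = nn.Conv1d(1, 1, kernel_size=k_size, padding=(k_size - 1) // 2, bias=False)
        self.sigmoid = nn.Sigmoid()

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        y = self.avg_pool(x)                              # (b, c, 1, 1)
        y = y.squeeze(-1).transpose(-1, -2)               # (b, 1, c): channels as a sequence
        y = self.conv(y).transpose(-1, -2).unsqueeze(-1)  # back to (b, c, 1, 1)
        return x * self.sigmoid(y)                        # gate each channel


conv = nn.Conv2d(3, 32, kernel_size=3, stride=1, padding=1)
eca = ECALayer2DSketch(k_size=3)
x = torch.randn(1, 3, 32, 32)
out = eca(conv(x))
print(out.shape, sum(p.numel() for p in eca.parameters()))
# torch.Size([1, 32, 32, 32]) 3
```

The gate costs k weights regardless of the channel count (3 here). The two linear layers in `ECALayer(32)` above cost 162.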
