白话TensorFlow +实战系列(五)

实战MNIST

这篇文章主要用全连接神经网络来实现MNIST手写数字识别的问题。首先介绍下MNIST数据集。

1)MNIST数据集

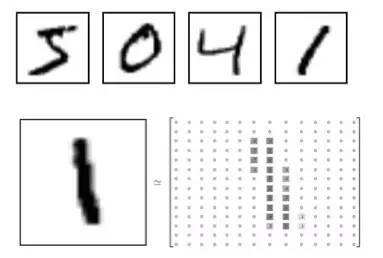

MNIST数据集是一个非常有名的手写数字识别数据集,它包含了60000张图片作为训练集,10000张图片为测试集,每张图为一个手写的0~9数字。如图:

其中每张图的大小均为28*28,这里大小指的的是像素。例如数字1所对应的像素矩阵为:

而我们要做的就是教会电脑识别每个手写数字。这个数据集非常经典,常作为学习神经网络的入门教材,一如每个程序员的第一个程序都是“helloword!”一样。

2)数据处理

数据集下载下来后有四个文件,分别为训练集图片,训练集答案,测试集图片,测试集答案。TensorFlow提供了一个类来处理MNIST数据,这个类会自动的将MNIST数据分为训练集,验证集与测试集,并且这些数据都是可以直接喂给神经网络作为输入用的。示例代码如下:

其中input_data.read_data_sets会自动将数据集进行处理,one_hot = True用独热方式表示,意思是每个数字由one_hot方式表,例如数字0 = [1,0,0,0,0,0,0,0,0,0],1 = [0,1,0,0,0,0,0,0,0,0]。运行结果如下:

接下来就用一个全连接神经网络来识别数字。

3)全连接神经网络

首先定义超参数与参数,没啥好解释的,代码如下:

import tensorflow as tf

from tensorflow.examples.tutorials.mnist import input_data

batch_size = 100

learning_rate = 0.8

trainig_step = 30000

n_input = 784

n_hidden = 500

n_labels = 10接着定义网络的结构,构建的网络只有一个隐藏层,隐藏层节点为500。代码如下:

def inference(x_input):

with tf.variable_scope("hidden"):

weights = tf.get_variable("weights", [n_input, n_hidden], initializer = tf.random_normal_initializer(stddev = 0.1))

biases = tf.get_variable("biases", [n_hidden], initializer = tf.constant_initializer(0.0))

hidden = tf.nn.relu(tf.matmul(x_input, weights) + biases)

with tf.variable_scope("out"):

weights = tf.get_variable("weights", [n_hidden, n_labels], initializer = tf.random_normal_initializer(stddev = 0.1))

biases = tf.get_variable("biases", [n_labels], initializer = tf.constant_initializer(0.0))

output = tf.matmul(hidden, weights) + biases

return output在输出层中,output并没有用到relu函数,因为在之后的softmax层中也是非线性激励,所以可以不用。

接着定义训练过程,代码如下:

def train(mnist):

x = tf.placeholder("float", [None, n_input])

y = tf.placeholder("float", [None, n_labels])

pred = inference(x)

#计算损失函数

cross_entropy = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits = pred, labels = y))

#定义优化器

optimizer = tf.train.GradientDescentOptimizer(learning_rate = learning_rate).minimize(cross_entropy)

#定义准确率计算

correct_pred = tf.equal(tf.argmax(pred, 1), tf.argmax(y, 1))

accuracy = tf.reduce_mean(tf.cast(correct_pred, tf.float32))

init = tf.global_variables_initializer()

with tf.Session() as sess:

sess.run(init)

#定义验证集与测试集

validate_data = {x: mnist.validation.images, y: mnist.validation.labels}

test_data = {x: mnist.test.images, y: mnist.test.labels}

for i in range(trainig_step):

#xs,ys为每个batch_size的训练数据与对应的标签

xs, ys = mnist.train.next_batch(batch_size)

_, loss = sess.run([optimizer, cross_entropy], feed_dict={x: xs, y:ys})

#每1000次训练打印一次损失值与验证准确率

if i % 1000 == 0:

validate_accuracy = sess.run(accuracy, feed_dict=validate_data)

print("after %d training steps, the loss is %g, the validation accuracy is %g" % (i, loss, validate_accuracy))

print("the training is finish!")

#最终的测试准确率

acc = sess.run(accuracy, feed_dict=test_data)

print("the test accuarcy is:", acc)其中每一步的函数作用可以参考我的第二篇博客: 罗斯基白话:TensorFlow+实战系列(二)从零构建传统神经网络

里面有详细的解释。

完整代码如下:

import tensorflow as tf

from tensorflow.examples.tutorials.mnist import input_data

batch_size = 100

learning_rate = 0.8

trainig_step = 30000

n_input = 784

n_hidden = 500

n_labels = 10

def inference(x_input):

with tf.variable_scope("hidden"):

weights = tf.get_variable("weights", [n_input, n_hidden], initializer = tf.random_normal_initializer(stddev = 0.1))

biases = tf.get_variable("biases", [n_hidden], initializer = tf.constant_initializer(0.0))

hidden = tf.nn.relu(tf.matmul(x_input, weights) + biases)

with tf.variable_scope("out"):

weights = tf.get_variable("weights", [n_hidden, n_labels], initializer = tf.random_normal_initializer(stddev = 0.1))

biases = tf.get_variable("biases", [n_labels], initializer = tf.constant_initializer(0.0))

output = tf.matmul(hidden, weights) + biases

return output

def train(mnist):

x = tf.placeholder("float", [None, n_input])

y = tf.placeholder("float", [None, n_labels])

pred = inference(x)

#计算损失函数

cross_entropy = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits = pred, labels = y))

#定义优化器

optimizer = tf.train.GradientDescentOptimizer(learning_rate = learning_rate).minimize(cross_entropy)

#定义准确率计算

correct_pred = tf.equal(tf.argmax(pred, 1), tf.argmax(y, 1))

accuracy = tf.reduce_mean(tf.cast(correct_pred, tf.float32))

init = tf.global_variables_initializer()

with tf.Session() as sess:

sess.run(init)

#定义验证集与测试集

validate_data = {x: mnist.validation.images, y: mnist.validation.labels}

test_data = {x: mnist.test.images, y: mnist.test.labels}

for i in range(trainig_step):

#xs,ys为每个batch_size的训练数据与对应的标签

xs, ys = mnist.train.next_batch(batch_size)

_, loss = sess.run([optimizer, cross_entropy], feed_dict={x: xs, y:ys})

#每1000次训练打印一次损失值与验证准确率

if i % 1000 == 0:

validate_accuracy = sess.run(accuracy, feed_dict=validate_data)

print("after %d training steps, the loss is %g, the validation accuracy is %g" % (i, loss, validate_accuracy))

print("the training is finish!")

#最终的测试准确率

acc = sess.run(accuracy, feed_dict=test_data)

print("the test accuarcy is:", acc)

def main(argv = None):

mnist = input_data.read_data_sets("/tensorflow/mnst_data", one_hot=True)

train(mnist)

if __name__ == "__main__":

tf.app.run()

最后执行的结果如图:

可以看到最终的准确率能达到98.19%,看来效果还是很不错的。嘿嘿。

1万+

1万+

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?