Hadamard product

Main article: Hadamard product (matrices)

For two matrices of the same dimensions, there is the Hadamard product, also known as the element-wise product, pointwise product, entrywise product and the Schur product.[24] For two matrices A and B of the same dimensions, the Hadamard product A ○ B is a matrix of the same dimensions, the i, j element of A is multiplied with the i, j element of B, that is:

displayed fully:

This operation is identical to multiplying many ordinary numbers (mn of them) all at once; thus the Hadamard product is commutative, associative and distributive over entrywise addition. It is also a principal submatrix of the Kronecker product. It appears in lossy compression algorithms such as JPEG.

Frobenius product

The Frobenius inner product, sometimes denoted A : B, is the component-wise inner product of two matrices as though they are vectors. It is also the sum of the entries of the Hadamard product. Explicitly,

where “tr” denotes the trace of a matrix and vec denotes vectorization. This inner product induces the Frobenius norm.

A:B=B:A A : B = B : A

A:B=AT:BT=BT:AT A : B = A T : B T = B T : A T

A:BC=BTA:C A : B C = B T A : C

A:BC=ACT:B A : B C = A C T : B

A:(B+C)=A:B+A:C A : ( B + C ) = A : B + A : C

∇(A:B)=∇A:B+A:∇B

∇

(

A

:

B

)

=

∇

A

:

B

+

A

:

∇

B

(证明如下:

)

另外,关于导数

证明一下

同理

其中 求导和最后的合并都是elementwise的

对于二阶导数的求法, 这里只推导matrix cookbook上的一个公式(110), 其他的类似

求导的方法,相办法把每个

X

X

单独写在

:

:

的一边, 为了区别两个, 我把它们分别表示为

X1

X

1

,

X2

X

2

跟frobenius norm的关系

如果f(X)为矩阵X的函数,其结果为一标量,则

为什么有转置呢?因为对矩阵或者向量求导,求出来的结果需要转置

The derivative of a scalar y function of a matrix X of independent variables, with respect to the matrix X, is given (in numerator layout notation) by

Notice that the indexing of the gradient with respect to X is transposed as compared with the indexing of X.

(引自于 https://en.wikipedia.org/wiki/Matrix_calculus)

所以为了求

A:B

A

:

B

对

x

x

的导数只需要将

化成

则

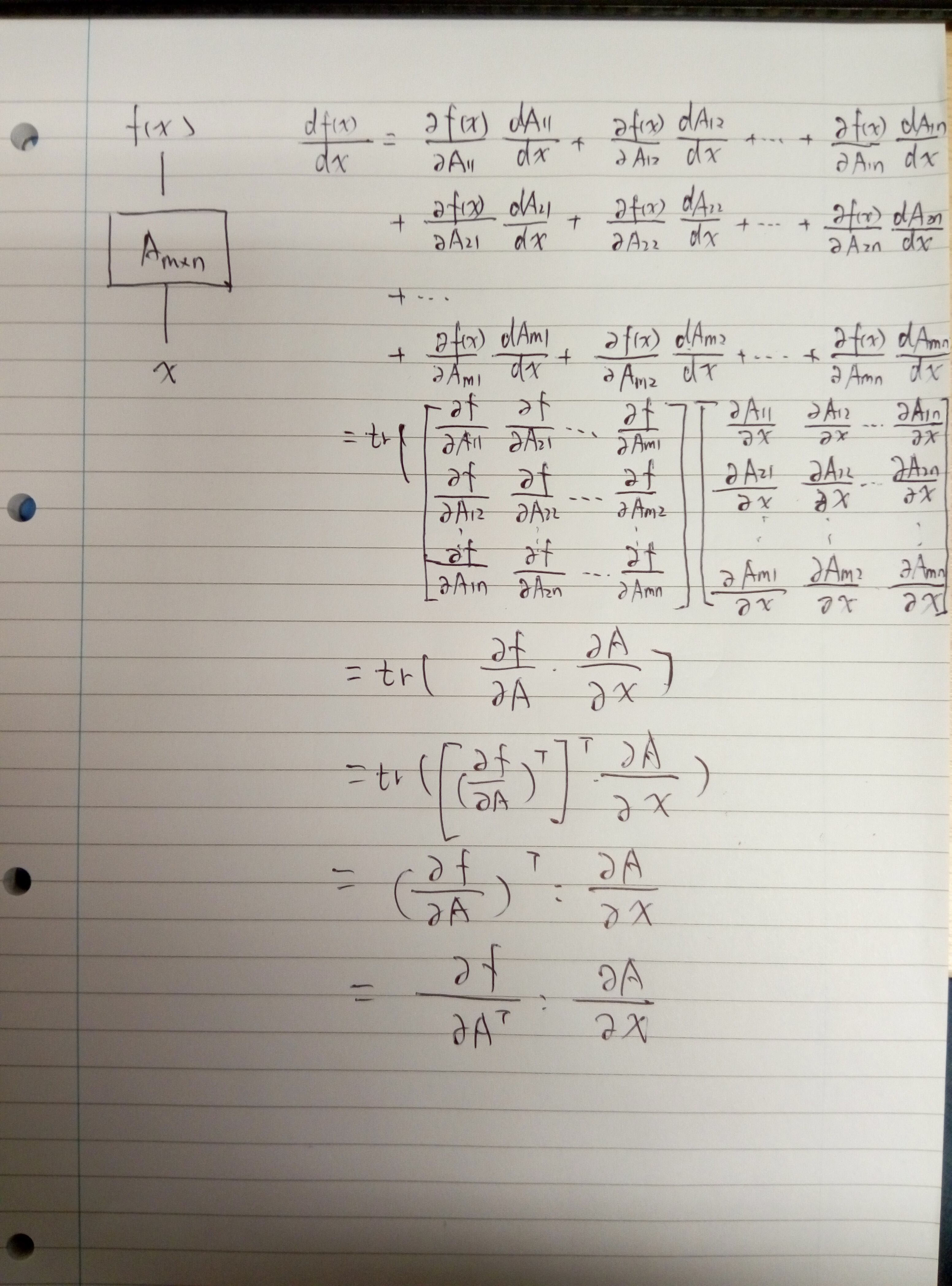

最后让我们看个例子,f(x)为x的函数,f(x)和x都为标量,但是中间有个 Am∗n A m ∗ n 的矩阵作为他们的连接关系,求 df(x)dx d f ( x ) d x

后来参照了一本介绍tensor的书

在30页提到了 ⋅⋅ ⋅ ⋅ 运算符

我想我们这里可以表示成

这里说下我自己对 ⋅⋅ ⋅ ⋅ 运算符的理解

24, Aug, 2018 update

注意 ∂f(x)∂A ∂ f ( x ) ∂ A 的尺寸与A的大小应该一致

392

392

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?