Original: https://hackernoon.com/how-tensorflows-tf-image-resize-stole-60-days-of-my-life-aba5eb093f35

How Tensorflow’s tf.image.resize stole 60 days of my life

This is a short warning to all TensorFlow users working with visual content. In short: don't use any tf.image.resize functions!

I was rewriting the codebase of our neural network image upscaling service, Let's Enhance, to make it ready for the bigger and faster models and the API we are working on. As we work with image generation (super-resolution, deblurring, etc.), we rely on typical image processing libraries like OpenCV and PIL. I had always suspected that it would make sense to use TensorFlow's image processing capabilities; in theory, they should be faster. So I decided to stick to native TensorFlow image preprocessing and dataset-building tools, using dataset.map() to keep all operations in tensors throughout my code.

The problem was quite awful: my new and shiny super-resolution code could not reproduce any state-of-the-art network, or even the results of my own code from 4 months earlier. The ugliest part was that the super-resolution results were sometimes pretty good. The network was working, although it did not reach the target PSNR and sometimes produced strange visual artifacts, like doubling of thin lines.

Let the debugging begin

What initially looked like a minor bug became 60 days of struggle and sleepless nights. My faulty logic was simple: there must be something wrong with the network definition or the training process. Data preprocessing was definitely fine, since I was getting meaningful results and had visual control over the image processing in TensorBoard.

I tweaked everything I could find. I defined the network using Keras, Slim, and raw TF, and nothing helped. I looked for changes across TF 1.3, 1.4, and 1.5, different CUDA versions, and padding behaviors. I am ashamed even to tell you about my latest suspicions, which involved defects in GPU RAM and statics. I was tweaking perceptual losses and style losses, looking for a cause. And each iteration took days of retraining before it produced a meaningful result…

Yesterday I found The Bug while looking at TensorBoard. I had an almost subliminal feeling that something was wrong with the image. I disregarded the network output and simply overlaid the target image and the input image (that is, the downscaled target image) in Photoshop. Here's what I got.

It looks strange: some kind of displacement is happening here. This goes totally against any logic and just can't be true! My code is dead simple. Read image, crop image, resize image. All in TensorFlow.

Anyway, RTFM. tf.image.resize_bicubic has a parameter, align_corners. How in the world would you want to downscale an image and not have the corners aligned? You can! This function has had very weird behavior that has been known for a long time; read this thread. They can't fix it because doing so could break lots of old code and pre-trained networks.

"Our tf.image.resize_area function isn't even reflection equivariant. It would be lovely to fix this, but I'd be worried about breaking old models."
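To make the corner-alignment question concrete, here is a minimal one-dimensional sketch in plain Python. It is not TensorFlow's implementation. The three coordinate mappings are my understanding of the conventions involved: a "legacy" mapping with no half-pixel offset (assumed to correspond to `align_corners=False` in the TF 1.x resize ops), an endpoint-aligned mapping (assumed to correspond to `align_corners=True`), and the pixel-center mapping commonly attributed to libraries such as OpenCV and PIL. The names `source_coord` and `resize_linear_1d` are hypothetical helpers introduced only for this illustration.

```python
def source_coord(dst_index, in_size, out_size, mode):
    """Map an output pixel index to a (fractional) input coordinate."""
    if mode == "legacy":
        # Assumed align_corners=False behavior: plain scaling, no half-pixel offset.
        return dst_index * in_size / out_size
    if mode == "align_corners":
        # Assumed align_corners=True behavior: first and last samples coincide.
        return dst_index * (in_size - 1) / (out_size - 1) if out_size > 1 else 0.0
    if mode == "half_pixel":
        # Pixel-center convention: output centers map onto input centers.
        return (dst_index + 0.5) * in_size / out_size - 0.5
    raise ValueError("unknown mode: " + mode)


def resize_linear_1d(signal, out_size, mode):
    """Resample a 1-D signal with linear interpolation under the given mapping."""
    in_size = len(signal)
    out = []
    for i in range(out_size):
        x = source_coord(i, in_size, out_size, mode)
        x = min(max(x, 0.0), in_size - 1.0)  # clamp to the valid range
        lo = int(x)
        hi = min(lo + 1, in_size - 1)
        frac = x - lo
        out.append((1.0 - frac) * signal[lo] + frac * signal[hi])
    return out


# A ramp makes the sampling position visible: each output value equals
# the input coordinate it was sampled from.
ramp = [float(v) for v in range(8)]
for mode in ("legacy", "align_corners", "half_pixel"):
    print(mode, resize_linear_1d(ramp, 4, mode))
```

On this 2x downscale of an 8-sample ramp, the legacy mapping samples at 0, 2, 4, 6, while the pixel-center mapping samples at 0.5, 2.5, 4.5, 6.5. The legacy output is therefore offset by half an input pixel toward the origin, and under these assumptions the offset grows with the scale factor. That is the kind of misalignment between input and target that a super-resolution network would then be trained to reproduce.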

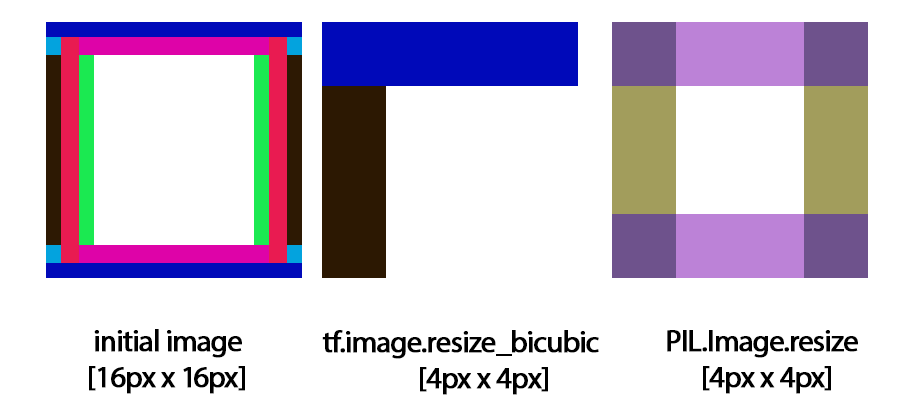

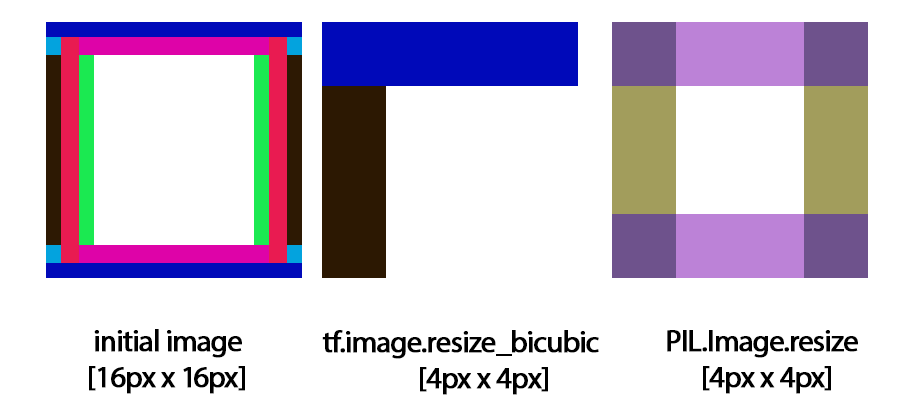

This code actually displaces your image by one pixel to the left and top. The thread suggests that even the interpolation is broken in TensorFlow. It's 2018, people. Here are the actual downscaling results with TF.

Stick to SciPy/OpenCV/NumPy/PIL, whichever you prefer, for image processing. The moment I changed it, my network worked like a charm (the next day, actually, when I saw the training results).


```python
print("PSNR between cv2 rc and org:", cal_psnr(img_rc_cv2, img_org_cv2))
print("PSNR between misc rc and org:", cal_psnr(img_rc_misc, img_org_misc))
print("PSNR between tf rc and org:", cal_psnr(img_rc_tf, img_org_tf))

# out
# PSNR between cv2 rc and org: 33.32955362820551
# PSNR between misc rc and org: 33.12080493358994
# PSNR between tf rc and org: 26.823069
```
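The comparison above calls a `cal_psnr` helper that is not shown. As a rough sketch of what such a helper might compute, the snippet below implements the standard PSNR definition, 10 * log10(MAX^2 / MSE), in plain Python. The function name `psnr`, the flat-sequence input, and the default peak value of 255 (8-bit images) are assumptions made for this illustration, not the original helper.

```python
import math


def psnr(reference, test, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized pixel sequences."""
    if len(reference) != len(test):
        raise ValueError("inputs must have the same number of pixels")
    if len(reference) == 0:
        raise ValueError("inputs must not be empty")
    # Mean squared error over all pixels.
    mse = sum((a - b) ** 2 for a, b in zip(reference, test)) / len(reference)
    if mse == 0:
        return math.inf  # identical signals
    return 10.0 * math.log10(max_value ** 2 / mse)


# Toy example: a sharp edge and a copy displaced by one sample.
edge = [0, 0, 0, 255, 255, 255]
shifted = [0, 0, 255, 255, 255, 255]
print("identical:", psnr(edge, edge))
print("shifted by one sample:", psnr(edge, shifted))
```

With image arrays, the same function could be applied to flattened pixel values. Even a one-sample displacement of a sharp edge lowers the score noticeably, which is in line with the gap between the TF result and the other two libraries reported above.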
