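Preface

- My graduation project is a music style transfer system built on CycleGAN, which needs a large number of music files to train the network. MIDI, the most widely used storage format for computer-arranged music, is easy to collect, and mature Python libraries exist for processing it.

- The dataset most commonly used in this research area is The Lakh MIDI Dataset, which contains over 100,000 MIDI files. The quantity is sufficient, but in my own tests the quality fell short of my expectations, mainly because a single song often maps to several MIDI files and the metadata is sparse relative to the data. These two problems made the dataset awkward to use, so I decided to build my own. Below I describe how I crawled the Free Midi Files Download site and built the dataset. It involves basic web scraping and simple operations with PyMongo, the Python API for MongoDB.

- If you have questions about using Session and Cookies in a crawler, this article may also serve as a reference. If you are not interested in the crawling details, scroll to the resource link at the bottom and download the dataset from Baidu Netdisk. Please cite this article's link when you use it. I hope it helps!

- The source code for the steps below is at josephding23/Free-Midi-Library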

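Implementation

Writing the crawler

The crawler code is in Free-Midi-Library/src/midi_scratch.py

While searching for MIDI resources I browsed many sites. Of these, Free Midi Files Download was the best in both the number of resources and the way they are organized: it covers 17 genres, and rock alone has 9,866 MIDI files. Because the crawl is structured, the metadata of every file (genre, artist, song title) is stored completely in MongoDB, which is convenient for later training and testing.

The process has three crawling stages, plus one preparatory step between the first and the second:

- Crawl the genre pages to get each genre's artist names and links, and add them to the database. The following function does this and is fairly simple: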

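from bs4 import BeautifulSoup

def free_midi_get_genres():
    # get_genre_collection, get_genres and get_html_text are helpers defined elsewhere in the repository
    genres_collection = get_genre_collection()
    for genre in get_genres():
        # Skip genres that are already stored (Collection.count() was removed in PyMongo 4)
        if genres_collection.count_documents({'Name': genre}) != 0:
            continue
        url = 'https://freemidi.org/genre-' + genre
        text = get_html_text(url)
        soup = BeautifulSoup(text, 'html.parser')
        urls = []
        performers = []
        for item in soup.find_all(name='div', attrs={'class': 'genre-link-text'}):
            try:
                href = item.a['href']
                name = item.text
                urls.append(href)
                performers.append(name)
            except (AttributeError, TypeError, KeyError):
                # This div has no usable <a href=...>, so skip it
                pass
        # Field names match the ones free_midi_get_performers() reads
        genres_collection.insert_one({
            'Name': genre,
            'PerformersNum': len(urls),
            'Performers': performers,
            'PerformersUrls': urls,
            'Finished': False
        })
        print(genre, len(urls))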
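
The extraction step above can be tried without network access. The following is a minimal sketch that uses only the standard library's `html.parser` instead of BeautifulSoup; the sample markup, artist names and links are hypothetical and only mimic the `genre-link-text` structure the selector expects.

```python
from html.parser import HTMLParser


class GenreLinkParser(HTMLParser):
    """Collect (name, href) pairs from <div class="genre-link-text"><a href=...>."""

    def __init__(self):
        super().__init__()
        self.performers = []          # list of (name, href) tuples
        self._in_target_div = False   # inside a genre-link-text div?
        self._current_href = None     # href of the <a> being read, if any
        self._text_parts = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        classes = (attrs.get('class') or '').split()
        if tag == 'div' and 'genre-link-text' in classes:
            self._in_target_div = True
        elif tag == 'a' and self._in_target_div and 'href' in attrs:
            self._current_href = attrs['href']
            self._text_parts = []

    def handle_data(self, data):
        # Only keep text that sits inside a link we are tracking
        if self._current_href is not None:
            self._text_parts.append(data)

    def handle_endtag(self, tag):
        if tag == 'a' and self._current_href is not None:
            name = ''.join(self._text_parts).strip()
            self.performers.append((name, self._current_href))
            self._current_href = None
        elif tag == 'div':
            self._in_target_div = False


# Hypothetical markup shaped like the page structure assumed above
SAMPLE_HTML = """
<div class="genre-link-text"><a href="artist-1-example-band">Example Band</a></div>
<div class="other"><a href="ignored">Ignored</a></div>
<div class="genre-link-text">No link here</div>
<div class="genre-link-text"><a href="artist-2-another-act"> Another Act </a></div>
"""

parser = GenreLinkParser()
parser.feed(SAMPLE_HTML)
performers = parser.performers
print(performers)
```

Divs without a link are skipped, which is the case the `try`/`except` in the crawler guards against.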

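- Build the performer collection and add every artist's information to it. This step involves no crawling; it prepares for the crawling that follows: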

def free_midi_get_performers():
    root_url = 'https://freemidi.org/'
    genres_collection = get_genre_collection()
    performers_collection = get_performer_collection()
    # Only process genres that have not been expanded into performers yet
    for genre in genres_collection.find({'Finished': False}):
        genre_name = genre['Name']
        performers = genre['Performers']
        performer_urls = genre['PerformersUrls']
        num = genre['PerformersNum']
        for index in range(num):
            name = performers[index]
            url = root_url + performer_urls[index]
            print(name, url)
            performers_collection.insert_one({
                'Name': name,
                'Url': url,
                'Genre': genre_name,
                'Finished': False
            })
        # Mark the genre as done so that a rerun skips it
        genres_collection.update_one(
            {'_id': genre['_id']},
            {'$set': {'Finished': True}})
        # count_documents() replaces Collection.count(), which was removed in PyMongo 4
        print('Progress: {:.2%}\n'.format(
            genres_collection.count_documents({'Finished': True}) / genres_collection.count_documents({})))
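
The flattening step can also be written as a pure function, which makes it easy to check without a database. This is a sketch under the field names used above; `performer_docs` and the sample genre are hypothetical. It uses `urllib.parse.urljoin` rather than string concatenation, so a link that already starts with `/` does not produce a double slash.

```python
from urllib.parse import urljoin

ROOT_URL = 'https://freemidi.org/'


def performer_docs(genre_doc, root_url=ROOT_URL):
    """Turn one genre document into a list of performer documents."""
    docs = []
    for name, href in zip(genre_doc['Performers'], genre_doc['PerformersUrls']):
        docs.append({
            'Name': name.strip(),
            'Url': urljoin(root_url, href),  # handles both 'x' and '/x'
            'Genre': genre_doc['Name'],
            'Finished': False,
        })
    return docs


# Hypothetical genre document with one relative and one root-relative link
sample_genre = {
    'Name': 'rock',
    'Performers': ['Example Band', 'Another Act'],
    'PerformersUrls': ['artist-1-example-band', '/artist-2-another-act'],
}

docs = performer_docs(sample_genre)
for doc in docs:
    print(doc['Name'], doc['Url'])
```

The resulting list could then be passed to PyMongo's `insert_many` in a single call instead of one `insert_one` per artist.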

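- Crawl each artist's page and add all of the artist's songs to a new MIDI collection. Note that this step has to get past the site's anti-crawler checks by editing the request headers, above all the Cookie setting. I won't go into the details here; if anything is unclear, leave a comment below.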

import time
from random import uniform

from bs4 import BeautifulSoup

def get_free_midi_songs_and_add_performers_info():
    root_url = 'https://freemidi.org/'
    midi_collection = get_midi_collection()
    performer_collection = get_performer_collection()
    # Failed requests leave 'Finished' False, so loop until every performer is done
    while performer_collection.count_documents({'Finished': False}) != 0:
        for performer in performer_collection.find({'Finished': False}):
            num = 0
            performer_url = performer['Url']
            performer_name = performer['Name']
            genre = performer['Genre']
            try:
                # Request headers; cookie_str is a Cookie string copied from a browser session and defined elsewhere
                params = {
                    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.88 Safari/537.36',
                    'Cookie': cookie_str,
                    # Genre page URL, built the same way as in free_midi_get_genres()
                    'Referer': root_url + 'genre-' + genre,
                    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9',
                    'Accept-Encoding': 'gzip, deflate, br',
                    'Accept-Language': 'zh-CN,zh;q=0.9,en;q=0.8',
                    'Connection': 'keep-alive'
                }
                text = get_html_text(performer_url, params)
                if text == '':
                    print('connection error')
                    continue
                soup = BeautifulSoup(text, 'html.parser')
                for item in soup.find_all(name='div', attrs={'itemprop': 'tracks'}):
                    try:
                        download_url = root_url + item.span.a['href']
                        # Normalize the name first so the duplicate check matches what is stored
                        name = item.span.text.replace('\n', '')
                        if midi_collection.count_documents({'Genre': genre, 'Name': name}) == 0:
                            midi_collection.insert_one({
                                'Name': name,
                                'DownloadPage': download_url,
                                'Performer': performer_name,
                                'PerformerUrl': performer_url,
                                'Genre': genre,
                                'Downloaded': False
                            })
                        num = num + 1
                    except (AttributeError, TypeError, KeyError):
                        # Track entry without a usable link; skip it
                        pass
                if num != 0:
                    performer_collection.update_one(
                        {'_id': performer['_id']},
                        {'$set': {'Finished': True, 'Num': num}}
                    )
                    print('Performer ' + performer_name + ' finished.')
                    print('Progress: {:.2%}\n'.format(
                        performer_collection.count_documents({'Finished': True}) / performer_collection.count_documents({})))
                # Random pause between requests
                time.sleep(uniform(1, 1.6))
            except Exception:
                print('Error connecting.')
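
Since the Cookie header is the key part of this step, here is a small sketch of converting between the raw `Cookie` string copied from a browser's developer tools and a dictionary, using only the standard library. The cookie names and values are made up for illustration, and the two helper functions are hypothetical, not part of the repository.

```python
from http.cookies import SimpleCookie


def cookie_header_to_dict(cookie_str):
    """Parse a raw 'Cookie' header value into a {name: value} dict."""
    cookie = SimpleCookie()
    cookie.load(cookie_str)
    return {key: morsel.value for key, morsel in cookie.items()}


def dict_to_cookie_header(cookies):
    """Build a 'Cookie' header value from a {name: value} dict."""
    return '; '.join(f'{name}={value}' for name, value in cookies.items())


# Hypothetical cookie string, as it might be copied from the browser
raw = 'PHPSESSID=abc123; theme=dark'

cookies = cookie_header_to_dict(raw)
header_value = dict_to_cookie_header(cookies)
print(cookies)
print(header_value)
```

With the `requests` library, a dictionary like this can be passed through the `cookies=` argument of a request instead of writing the `Cookie` header by hand.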

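- The last step is downloading the MIDI files. It is the most complex step, and the one where I had the hardest battle of wits with the site's anti-crawler mechanism. The site does not expose a direct download link for each MIDI file. Instead it gives a getter link, and the file is only returned in the response to a GET request on that link. Setting cookies through the request headers is no longer enough here, so I used a Session to keep the cookies consistent across requests. This imitates a real visitor more closely and makes the crawler harder for the anti-crawler checks to detect. The method is not foolproof: often the download only starts after several failed loops, and entries that could never be downloaded had to be removed from the database.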

import os
import re
import time
import traceback
from random import uniform

import requests
from bs4 import BeautifulSoup

def download_free_midi():
    root_url = 'https://freemidi.org/'
    root_path = 'E:/free_MIDI'
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.88 Safari/537.36',
        'Sec-Fetch-Mode': 'navigate',
        'Sec-Fetch-Site': 'same-origin',
        'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9',
        'Accept-Encoding': 'gzip, deflate, br',
        'Accept-Language': 'zh-CN,zh;q=0.9,en;q=0.8',
        'Connection': 'keep-alive'
    }
    rstr = r'[\\/:*?"<>|\r\n\t]+'  # characters that are not allowed in Windows file names
    midi_collection = get_midi_collection()
    # The Session keeps its own cookie jar: cookies set by one response
    # are sent automatically with the following requests
    session = requests.Session()
    # verify=False is used below, so silence the resulting warnings
    requests.packages.urllib3.disable_warnings()
    session.headers.update(headers)
    while midi_collection.count_documents({'Downloaded': False}) != 0:
        for midi in midi_collection.find({'Downloaded': False}, no_cursor_timeout=True):
            performer_link = midi['PerformerUrl']
            download_link = midi['DownloadPage']
            genre = midi['Genre']
            try:
                # Step 1: open the download page as if coming from the artist page
                session.headers.update({'Referer': performer_link})
                r = session.get(download_link, verify=False, timeout=20)
                if r.status_code != 200:
                    print('connection error ' + str(r.status_code))
                soup = BeautifulSoup(r.text, 'html.parser')
                r.close()
                link_tag = soup.find(name='a', attrs={'id': 'downloadmidi'})
                if link_tag is None:
                    print('Found no download link')
                    continue
                getter_link = root_url + link_tag['href']
                print(getter_link)
                # Step 2: request the getter link as if clicked on the download page
                session.headers.update({'Referer': download_link})
                genre_dir = root_path + '/' + genre
                os.makedirs(genre_dir, exist_ok=True)
                name = re.sub(rstr, '', midi['Name']).strip()
                performer = re.sub(rstr, '', midi['Performer']).strip()
                file_name = name + ' - ' + performer + '.mid'
                path = genre_dir + '/' + file_name
                with session.get(getter_link, allow_redirects=True, verify=False, timeout=20) as r:
                    if r.history:
                        print('Request was redirected')
                        for resp in r.history:
                            print(resp.url)
                        print('Final: ' + str(r.url))
                    r.raise_for_status()
                    content = r.content
                # Write only after the request succeeded, so failures leave no empty file
                with open(path, 'wb') as output:
                    output.write(content)
                time.sleep(uniform(2, 3))
                # is_valid_midi is a helper defined elsewhere in the repository
                if is_valid_midi(path):
                    print(file_name + ' downloaded')
                    midi_collection.update_one(
                        {'_id': midi['_id']},
                        {'$set': {'Downloaded': True, 'GetterLink': getter_link}}
                    )
                    print('Progress: {:.2%}\n'.format(
                        midi_collection.count_documents({'Downloaded': True}) / midi_collection.count_documents({})))
                else:
                    print('Cannot successfully download midi.')
                    os.remove(path)
            except Exception:
                print(traceback.format_exc())
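
The download function relies on an `is_valid_midi` helper that is not shown in this article. When the anti-crawler check rejects a request, the response is typically an HTML page rather than a MIDI file, so a cheap check is to look at the file header. The sketch below is one possible way to do that, not the repository's implementation: `looks_like_midi` is a hypothetical name, and it only tests the 14-byte `MThd` header chunk defined by the Standard MIDI File format.

```python
import struct


def looks_like_midi(data):
    """Return True if the bytes start with a plausible Standard MIDI File header."""
    if len(data) < 14:
        return False
    # Big-endian: 4-byte chunk id, 4-byte header length, then format,
    # number of tracks and time division as 2-byte integers
    chunk_id, header_len, fmt, ntracks, _division = struct.unpack('>4sIHHH', data[:14])
    return (chunk_id == b'MThd'
            and header_len >= 6
            and fmt in (0, 1, 2)
            and ntracks >= 1)


# A hand-built header: format 1, two tracks, 480 ticks per quarter note
good = b'MThd' + struct.pack('>IHHH', 6, 1, 2, 480) + b'MTrk'
# What a blocked request might return instead of a MIDI file
bad = b'<!DOCTYPE html><html><body>Access denied</body></html>'

print(looks_like_midi(good), looks_like_midi(bad))
```

To check a file on disk, read its first bytes with `open(path, 'rb').read(14)` and pass them to the function. A full parse with a MIDI library is stricter, but the header check already filters out HTML error pages.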

文件名哈希化

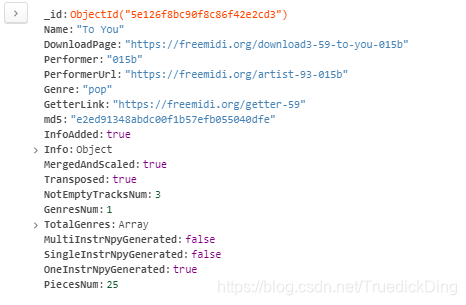

爬取到的MIDI文件夹结构如下,其中每个子文件夹代表不同的风格:

每个子文件夹内就包含该风格的所有MIDI文件:

为了方便处理,我把所有的文件名通过md5算法加密了,并将对应的哈希码保存在数据表,可以通过简单的find语句来查找,哈希化代码保存在 Free-Midi-Library/src/md5_reorganize.py/
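
As an illustration of the renaming step, here is a minimal sketch using `hashlib`. It assumes the hash is computed from the "song title - artist" string; the repository may hash something else, so treat the input format and the `hashed_file_name` helper as assumptions.

```python
import hashlib


def hashed_file_name(song_name, performer, ext='.mid'):
    """Return the MD5 hex digest of 'song - performer' plus the extension."""
    original = f'{song_name} - {performer}'
    digest = hashlib.md5(original.encode('utf-8')).hexdigest()
    return digest + ext


# Hypothetical song and artist
file_name = hashed_file_name('Example Song', 'Example Band')
print(file_name)

# The digest (without the extension) is what would be stored in the 'md5' field
md5_value = file_name[:-len('.mid')]
```

MD5 is used here only to get short, uniform, filesystem-safe names. It is a hash rather than encryption, so the original name cannot be recovered from it and has to be kept in the database.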

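Unifying tempo and key

To improve training, I adjusted every MIDI file to 120 BPM and transposed it to the key of C. The code for these two operations is in src/unify_tempo.py and src/transpose_tone.py.

- Transposing to C

Key function: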

import os
import traceback

import pretty_midi
from music21 import converter, interval, key

def transpose_to_c():
    root_dir = 'E:/free_midi_library/'
    transpose_root_dir = 'E:/transposed_midi/'
    midi_collection = get_midi_collection()
    for midi in midi_collection.find({'Transposed': False}, no_cursor_timeout=True):
        original_path = os.path.join(root_dir, midi['Genre'], midi['md5'] + '.mid')
        os.makedirs(os.path.join(transpose_root_dir, midi['Genre']), exist_ok=True)
        transposed_path = os.path.join(transpose_root_dir, midi['Genre'], midi['md5'] + '.mid')
        try:
            # Estimate the key of the piece with music21
            original_stream = converter.parse(original_path)
            estimate_key = original_stream.analyze('key')
            estimate_tone = estimate_key.tonic
            c_tone = key.Key('C', 'major').tonic
            # Signed distance in semitones from the estimated tonic to C
            semitones = interval.Interval(estimate_tone, c_tone).semitones
            # Shift every pitched note with pretty_midi; drum tracks are left alone
            mid = pretty_midi.PrettyMIDI(original_path)
            for instr in mid.instruments:
                if not instr.is_drum:
                    for note in instr.notes:
                        # MIDI pitches run from 0 to 127; a note that would leave the range keeps its pitch
                        if 0 <= note.pitch + semitones <= 127:
                            note.pitch += semitones
            mid.write(transposed_path)
            midi_collection.update_one({'_id': midi['_id']}, {'$set': {'Transposed': True}})
            print('Progress: {:.2%}\n'.format(
                midi_collection.count_documents({'Transposed': True}) / midi_collection.count_documents({})))
        except Exception:
            print(traceback.format_exc())

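This function first estimates the key of the MIDI file with music21 (the file is parsed with music21.converter and the key comes from the stream's analyze('key') method). It then shifts every non-drum note by the interval between the estimated tonic and C, so a piece in a major key ends up in C major and a piece in a minor key ends up in C minor.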
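
The arithmetic behind the shift can be shown without music21 or pretty_midi. The sketch below is a dependency-free illustration, not the method used in the repository: it maps a tonic name to a pitch class and picks the shortest shift to C (between -5 and +6 semitones), which may differ in direction from the interval music21 computes. All function names are hypothetical.

```python
NATURAL_PITCH_CLASSES = {'C': 0, 'D': 2, 'E': 4, 'F': 5, 'G': 7, 'A': 9, 'B': 11}


def pitch_class(tonic):
    """Map a tonic name to 0..11. Flats may be written 'b' or, as in music21, '-'."""
    pc = NATURAL_PITCH_CLASSES[tonic[0].upper()]
    for accidental in tonic[1:]:
        if accidental == '#':
            pc += 1
        elif accidental in ('-', 'b'):
            pc -= 1
        else:
            raise ValueError(f'unknown accidental: {accidental!r}')
    return pc % 12


def semitones_to_c(tonic):
    """Smallest shift (in semitones) that moves the tonic onto C."""
    shift = (-pitch_class(tonic)) % 12
    return shift - 12 if shift > 6 else shift


def transpose_pitches(pitches, semitones):
    """Shift MIDI pitches; a note that would leave 0..127 keeps its pitch."""
    return [p + semitones if 0 <= p + semitones <= 127 else p for p in pitches]


shift_from_g = semitones_to_c('G')    # G is 7 above C, so move up 5
shift_from_d = semitones_to_c('D')    # D is 2 above C, so move down 2
shifted = transpose_pitches([60, 127, 1], shift_from_g)
print(shift_from_g, shift_from_d, shifted)
```

Leaving out-of-range notes unchanged mirrors the range check in the function above. Dropping such notes or moving them by an octave are alternative policies.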

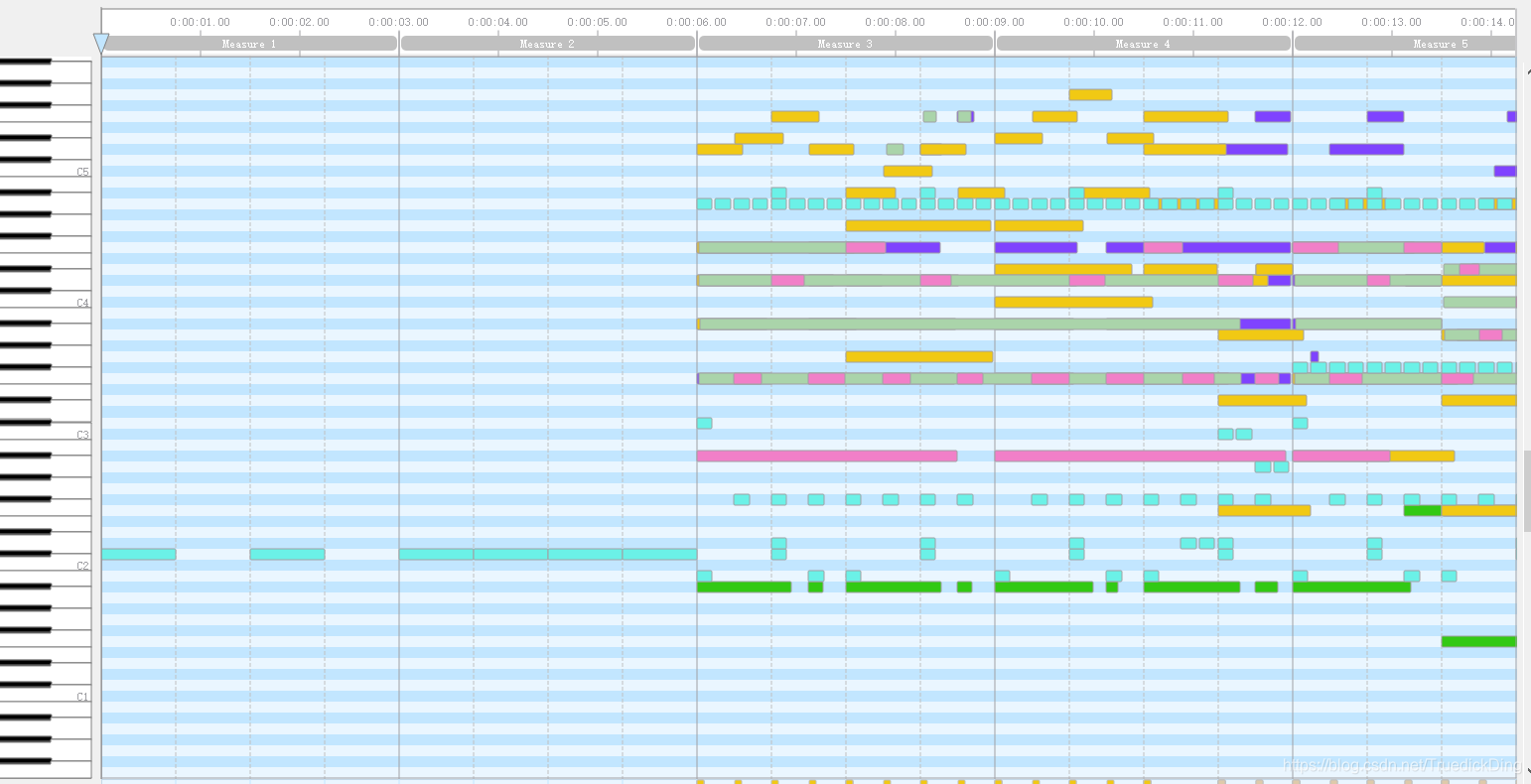

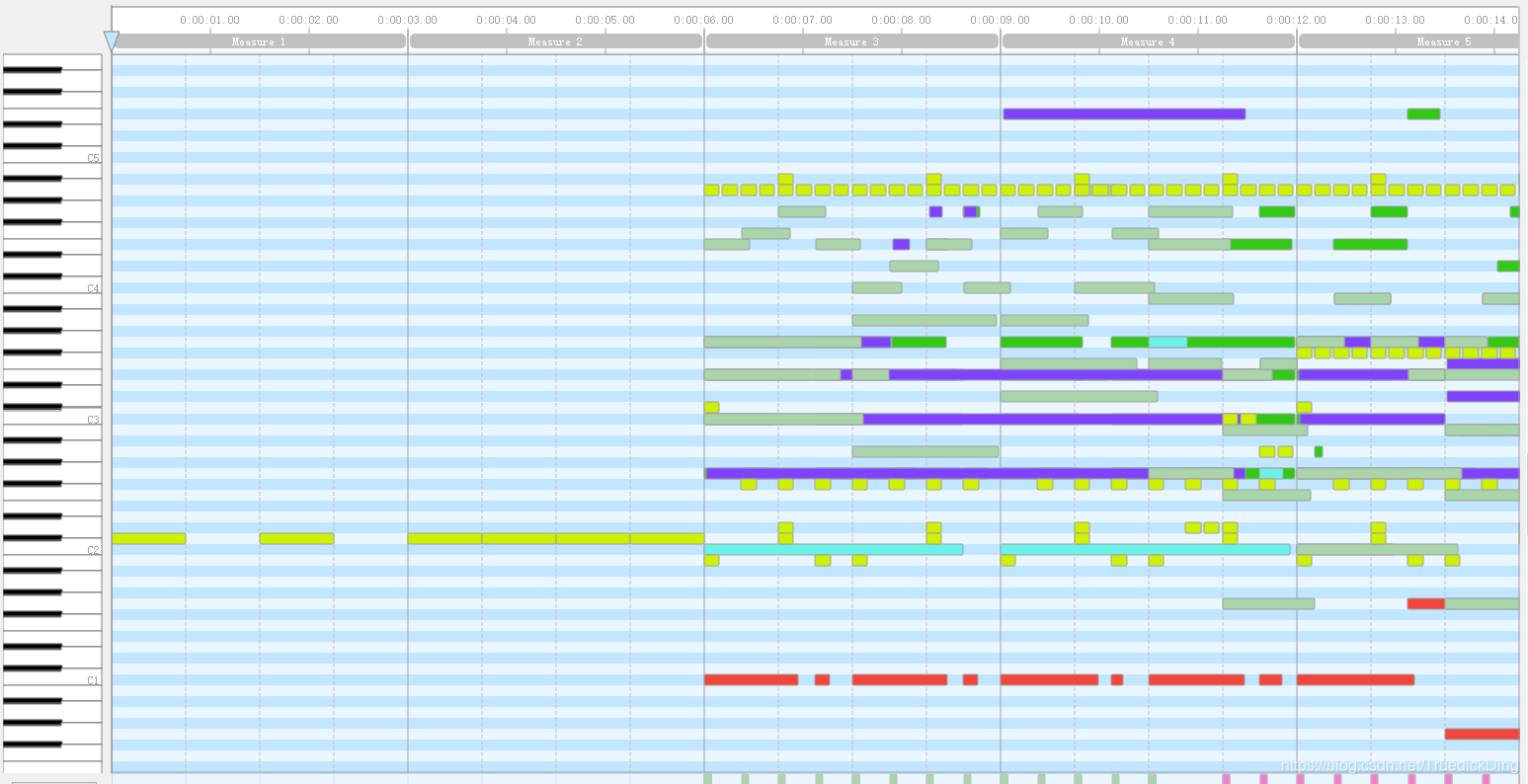

Example

Before transposition:

After transposition:

- Unifying the tempo (BPM)

Key function:

import os
import traceback

import pretty_midi

def tempo_unify_and_merge():
    # get_tempo and get_merged_from_pm are helpers defined elsewhere in the repository
    midi_collection = get_midi_collection()
    root_dir = 'E:/transposed_midi/'
    merged_root_dir = 'E:/merged_midi/'
    for midi in midi_collection.find({'MergedAndScaled': False}, no_cursor_timeout=True):
        original_path = os.path.join(root_dir, midi['Genre'], midi['md5'] + '.mid')
        try:
            original_tempo = get_tempo(original_path)[0]
            # A faster source tempo means note times must be stretched to reach 120 BPM
            changed_rate = original_tempo / 120
            os.makedirs(os.path.join(merged_root_dir, midi['Genre']), exist_ok=True)
            pm = pretty_midi.PrettyMIDI(original_path)
            for instr in pm.instruments:
                for note in instr.notes:
                    # pretty_midi stores note times in seconds
                    note.start *= changed_rate
                    note.end *= changed_rate
            merged_path = os.path.join(merged_root_dir, midi['Genre'], midi['md5'] + '.mid')
            merged = get_merged_from_pm(pm)
            merged.write(merged_path)
            midi_collection.update_one({'_id': midi['_id']}, {'$set': {'MergedAndScaled': True}})
            print('Progress: {:.2%}\n'.format(
                midi_collection.count_documents({'MergedAndScaled': True}) / midi_collection.count_documents({})))
        except Exception:
            # Report the failure instead of silently skipping the file
            print(traceback.format_exc())

This function uses the MIDI operations provided by the pretty_midi library: it scales the start and end time of every note by the ratio of the source file's BPM to 120 BPM.
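
The scaling factor follows from how beats map to seconds: a note on beat b at tempo T starts at b × 60 / T seconds, and at 120 BPM it should start at b × 60 / 120 seconds, so every time is multiplied by T / 120. A small worked sketch with plain tuples instead of pretty_midi notes (the function name is hypothetical):

```python
def scale_to_target_tempo(notes, original_bpm, target_bpm=120.0):
    """Rescale (start, end) times in seconds so the piece plays at target_bpm."""
    rate = original_bpm / target_bpm
    return [(start * rate, end * rate) for start, end in notes]


# At 60 BPM one beat lasts 1 s: a note on the second beat spans 1.0 s to 2.0 s.
# At 120 BPM the same beat lasts 0.5 s, so the note should span 0.5 s to 1.0 s.
slow = scale_to_target_tempo([(1.0, 2.0)], original_bpm=60)

# At 240 BPM one beat lasts 0.25 s, so times are stretched by a factor of 2.
fast = scale_to_target_tempo([(0.25, 0.5)], original_bpm=240)

print(slow, fast)
```

This assumes a single constant tempo per file, as the function above does by taking only the first tempo value.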

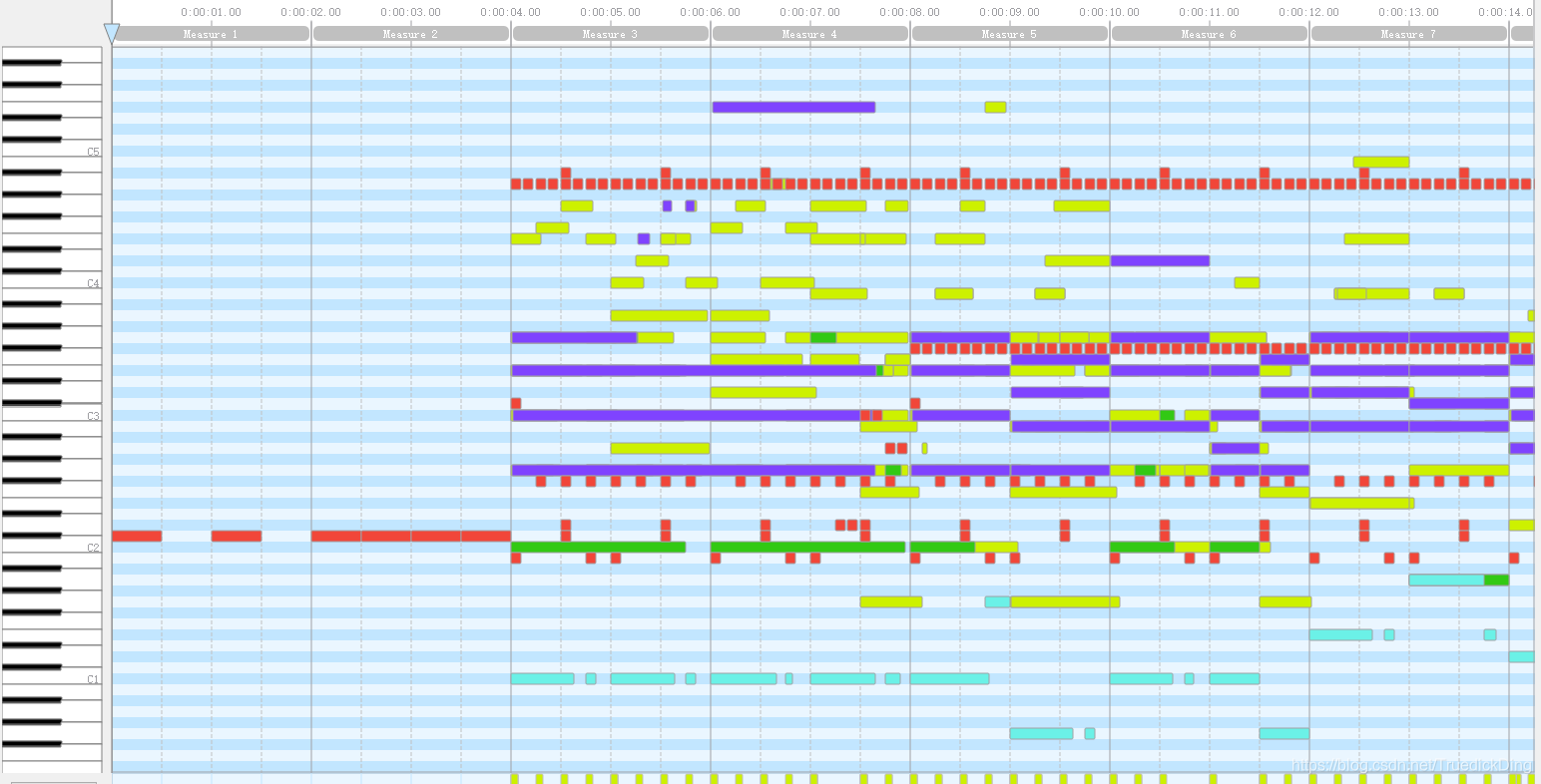

Example:

After unifying the tempo:

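Download link

Baidu Cloud download link, extraction code: fm8f

Contents:

- unhashed: MIDI files with their original names, in the format "song title - artist"

- raw_midi: the same files with only the file names replaced by their MD5 hashes

- transposed_midi: the MIDI files after transposition to C

- merged_midi: the files after transposition and with the tempo set to 120 BPM

- meta: JSON files exported from MongoDB, split into three collections (genre, performers and midi), which can be imported into MongoDB with the mongoimport command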
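
If you only need the metadata and do not want to run MongoDB, the exported JSON can also be read directly. The sketch below assumes the files follow one of the two layouts mongoexport can produce (one JSON document per line, or a single JSON array) and that the documents carry the `Genre` and `md5` fields used earlier. The sample records are invented and `load_exported_docs` is a hypothetical helper.

```python
import json


def load_exported_docs(text):
    """Parse exported documents from a JSON array or from one document per line."""
    text = text.strip()
    if text.startswith('['):
        return json.loads(text)
    return [json.loads(line) for line in text.splitlines() if line.strip()]


# Invented sample in the one-document-per-line layout
sample = '''
{"_id": {"$oid": "5e0000000000000000000001"}, "Name": "Song A", "Genre": "rock", "md5": "aaa"}
{"_id": {"$oid": "5e0000000000000000000002"}, "Name": "Song B", "Genre": "jazz", "md5": "bbb"}
'''

docs = load_exported_docs(sample)
# File names (without extension) of every rock song
rock_files = [doc['md5'] for doc in docs if doc['Genre'] == 'rock']
print(rock_files)
```

For a real file, pass the result of `open(path, encoding='utf-8').read()` to `load_exported_docs`.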
