Environment

OS: Windows 10

GPU: NVIDIA RTX 4070 Ti

CUDA: 11.8

Python version: 3.8

Project setup

1. Clone the source code from GitHub

git clone https://github.com/tinyvision/DAMO-YOLO.git

2. Install the dependencies

cd DAMO-YOLO/

conda create -n DAMO-YOLO python=3.8 -y

conda activate DAMO-YOLO

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118
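Before going further, it can be worth checking that the CUDA 11.8 wheel was actually picked up and that PyTorch sees the GPU. The helper below (`torch_cuda_summary` is my own hypothetical name, not part of DAMO-YOLO) is a minimal sketch that only uses `torch.__version__`, `torch.version.cuda`, `torch.cuda.is_available()` and `torch.cuda.get_device_name()`, and it reports `torch_installed: False` instead of crashing when torch is missing.

```python
import importlib.util


def torch_cuda_summary():
    """Report the installed PyTorch build and whether CUDA is usable."""
    # find_spec returns None when the package is not installed at all
    if importlib.util.find_spec("torch") is None:
        return {"torch_installed": False}
    import torch

    info = {
        "torch_installed": True,
        "torch_version": torch.__version__,
        # CUDA version the wheel was built against, e.g. "11.8"; None for CPU-only builds
        "cuda_build": torch.version.cuda,
        "cuda_available": torch.cuda.is_available(),
    }
    if info["cuda_available"]:
        info["device_name"] = torch.cuda.get_device_name(0)
    return info


if __name__ == "__main__":
    print(torch_cuda_summary())
```

With the cu118 wheel, `cuda_build` should read `'11.8'`; `None` means a CPU-only build was installed and training will not use the GPU.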

# The README installs the dependencies directly from requirements.txt

pip install -r requirements.txt

# Alternatively, you can use:

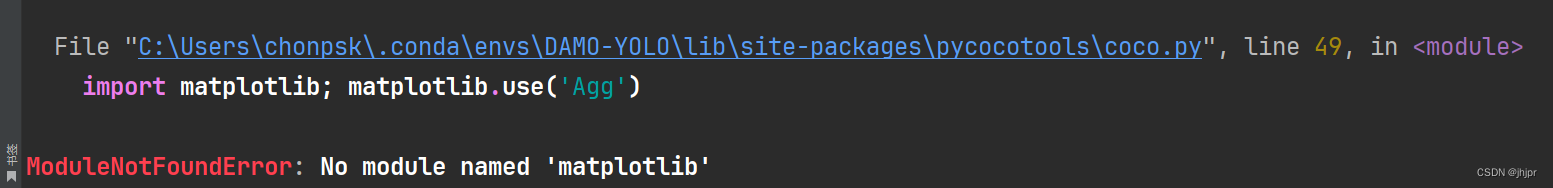

python setup.py install

Although I had installed the dependencies with pip, running the code still failed because the matplotlib library was missing.

So I installed matplotlib as well:


pip install matplotlib

3. Modify the source code that configures the training data

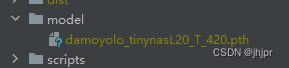

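Create a new folder named model in the project root and put the pretrained weights downloaded from GitHub into it (the finetune_path below points to this folder).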

Under the datasets folder, create the following layout for the images and labels:

datasets
|
|--Custom_coco
    |
    |--annotations
    |   |
    |   |--train_coco_format.json
    |   |--val_coco_format.json
    |
    |--images
        |
        |--train
        |   |--image001.jpg...
        |
        |--val
            |--image

Modify configs/damoyolo_tinynasL20_T.py:

# Add the following inside the __init__ method of the Config class:

self.train.no_aug_epochs = 16

self.train.warmup_epochs = 5

----------above-------------------

self.train.finetune_path = 'E:\\proj\\lineCheck\\DAMO-YOLO\\model\\damoyolo_tinynasL20_T_420.pth'

self.train.total_epochs = 10

----------below-------------------

# augment

self.train.augment.transform.image_max_range = (500, 500)

self.train.augment.mosaic_mixup.mixup_prob = 0.15

...

ZeroHead = {

'name': 'ZeroHead',

'num_classes': 1,  # change this to the number of classes in your dataset

'in_channels': [64, 128, 256],

'stacked_convs': 0,

'reg_max': 16,

'act': 'silu',

'nms_conf_thre': 0.05,

'nms_iou_thre': 0.7,

'legacy': False,

}

self.model.head = ZeroHead

self.dataset.class_names = ['qx']  # the names of your label classes

Next, modify damo/config/paths_catalog.py:

# In DatasetCatalog, change the dataset paths in DATASETS so they match the dataset layout set up above

DATA_DIR = 'datasets'  # dataset root

DATASETS = {

'coco_2017_train': {  # training set

'img_dir': 'Custom_coco/images/train',  # image folder, relative to the root

'ann_file': 'Custom_coco/annotations/train_coco_format.json'  # annotation file, relative to the root

},

'coco_2017_val': {  # validation set

'img_dir': 'Custom_coco/images/val',

'ann_file': 'Custom_coco/annotations/val_coco_format.json'

},

'coco_2017_test_dev': {  # test set

'img_dir': 'Custom_coco/images/val',

'ann_file': 'Custom_coco/annotations/val_coco_format.json'

},

Move train.py from the tools folder to the project root, and change the distributed backend from nccl to gloo, because NCCL is only supported on Linux:

torch.distributed.init_process_group(backend='gloo', init_method='env://')

4. Start training

# Training command given in the official README:

python -m torch.distributed.launch --nproc_per_node=8 tools/train.py -f configs/damoyolo_tinynasL20_T.py

# With a single GPU, and with train.py moved to the project root because the damo package could not be found, the command becomes:

python -m torch.distributed.launch --nproc_per_node=1 train.py -f configs/damoyolo_tinynasL20_T.py

# That then raised a command-line argument error, so add --use_env as the message requires:

python -m torch.distributed.launch --nproc_per_node=1 --use_env train.py -f configs/damoyolo_tinynasL20_T.py

Some pitfalls

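At first it complained that the damo package could not be found: move train.py to the project root.

Then matplotlib was not installed: pip install matplotlib

After that: error: unrecognized argument: --local-rank=0. Add --use_env before train.py in the launch command.

Finally, the NumPy version was too new, so np.float was no longer available (the alias was deprecated in NumPy 1.20 and removed in 1.24). Either install a compatible NumPy version or edit cocoeval.py in pycocotools: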

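# This is a last resort: it edits the library source directly, and I don't know what side effects it may have, but it works

# Changed because training failed with an error saying a float is not accepted (np.round returns a float, but np.linspace needs an integer sample count)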

self.iouThrs = np.linspace(.5, 0.95, int(np.round((0.95 - .5) / .05)) + 1, endpoint=True)

self.recThrs = np.linspace(.0, 1.00, int(np.round((1.00 - .0) / .01)) + 1, endpoint=True)

# Changed because np.float is no longer available

tp_sum = np.cumsum(tps, axis=1).astype(dtype=np.float64)

fp_sum = np.cumsum(fps, axis=1).astype(dtype=np.float64)
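Most of these pitfalls can be caught before starting an overnight run. The following is a hypothetical preflight sketch: the module list and the helper names are my own assumptions, not part of DAMO-YOLO. It uses `importlib.util.find_spec` to detect missing packages and reads the NumPy version through `importlib.metadata` (available since Python 3.8) without importing NumPy, relying on the fact that the `np.float` alias was removed in NumPy 1.24.

```python
import importlib.util
import re
from importlib import metadata

# Hypothetical list of top-level modules the train/eval/demo scripts need
REQUIRED_MODULES = ["torch", "torchvision", "numpy", "cv2", "matplotlib", "pycocotools"]


def missing_modules(names):
    """Return the names that cannot be found on the current sys.path."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def numpy_has_float_alias(version):
    """True if this NumPy version still provides np.float (removed in 1.24)."""
    match = re.match(r"(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    return (int(match.group(1)), int(match.group(2))) < (1, 24)


def preflight():
    """Collect human-readable problems instead of failing mid-training."""
    problems = [f"missing module: {name}" for name in missing_modules(REQUIRED_MODULES)]
    try:
        np_version = metadata.version("numpy")
    except metadata.PackageNotFoundError:
        np_version = None  # not installed; find_spec reports it as missing too
    if np_version is not None and not numpy_has_float_alias(np_version):
        problems.append(
            f"numpy {np_version} has no np.float; older pycocotools code using it will fail"
        )
    return problems


if __name__ == "__main__":
    for problem in preflight() or ["no problems found"]:
        print(problem)
```

`find_spec` searches `sys.path`, whose first entry is the directory of the script being run rather than the current working directory; that is most likely also why train.py could not import damo from tools/ but could after being moved to the project root.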

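Scripts for converting txt labels to COCO format are easy to find online. I used

this one, written by an experienced developer (many thanks):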

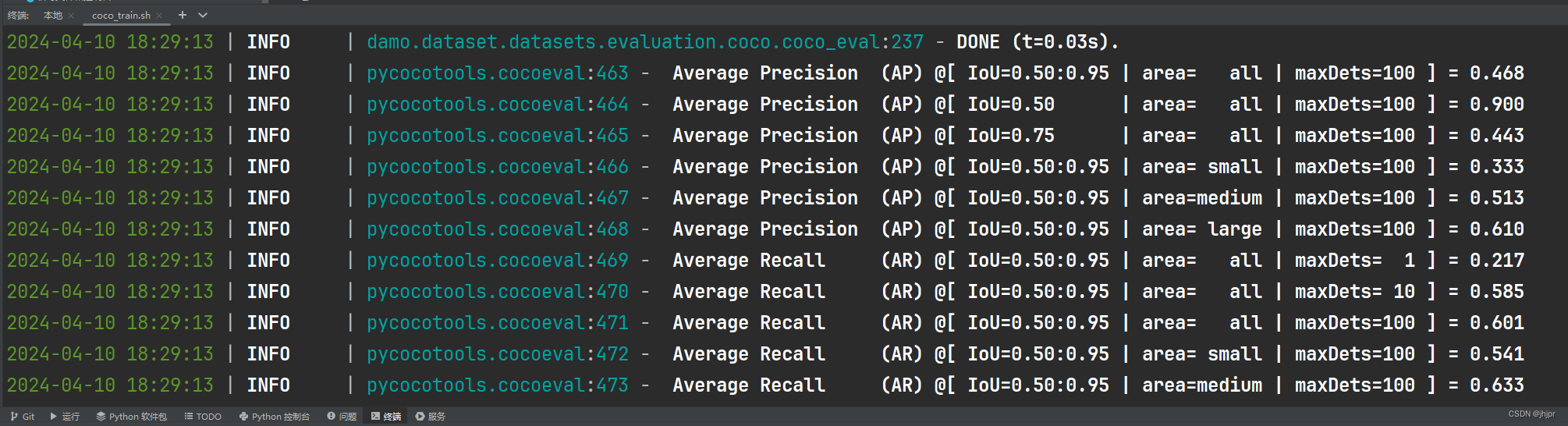
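I used someone else's converter, so the following is only an illustrative sketch of what such a script does, not the one I used. It assumes YOLO-style txt labels (one `class cx cy w h` line per box, normalized to [0, 1]) and that each image's width and height are already known; `yolo_to_coco` and its arguments are hypothetical. COCO stores boxes as `[x_min, y_min, width, height]` in pixels.

```python
import json


def yolo_to_coco(samples, class_names):
    """Build a COCO-style dict from YOLO-style txt labels (illustrative sketch).

    samples: list of (file_name, width, height, label_text) tuples, where each
    non-empty line of label_text is "class cx cy w h", normalized to [0, 1].
    """
    images, annotations = [], []
    ann_id = 1
    for image_id, (file_name, width, height, label_text) in enumerate(samples, start=1):
        images.append({"id": image_id, "file_name": file_name,
                       "width": width, "height": height})
        for line in label_text.splitlines():
            if not line.strip():
                continue  # skip blank lines
            cls, cx, cy, w, h = line.split()
            box_w, box_h = float(w) * width, float(h) * height
            x_min = float(cx) * width - box_w / 2
            y_min = float(cy) * height - box_h / 2
            annotations.append({
                "id": ann_id,
                "image_id": image_id,
                # Assumption: category ids start at 1 as in the official COCO files,
                # while YOLO class indices start at 0
                "category_id": int(cls) + 1,
                "bbox": [x_min, y_min, box_w, box_h],  # COCO: [x, y, width, height]
                "area": box_w * box_h,
                "iscrowd": 0,
            })
            ann_id += 1
    categories = [{"id": i + 1, "name": name} for i, name in enumerate(class_names)]
    return {"images": images, "annotations": annotations, "categories": categories}


if __name__ == "__main__":
    # Hypothetical image: 640x480 with one box centred in the image
    coco = yolo_to_coco([("example.jpg", 640, 480, "0 0.5 0.5 0.25 0.5")], ["qx"])
    print(json.dumps(coco["annotations"][0]["bbox"]))  # [240.0, 120.0, 160.0, 240.0]
```

The number of entries in `categories` should match `num_classes` in the ZeroHead config and the length of `self.dataset.class_names` (1 and `['qx']` in this post).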

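Finally, training ran:

Evaluation + inference

After training overnight I finally had a .pth weight file, and then tried evaluation and inference. I ran into a few more pitfalls, recorded below...

Evaluation

According to the README, evaluation is done with eval.py. It has the same problem as train.py: the damo package cannot be found. Move it to the project root and add --use_env to the command.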

python -m torch.distributed.launch --nproc_per_node=1 --use_env eval.py -f configs/damoyolo_tinynasL20_T.py -c workdirs/damoyolo_tinynasL20_T/epoch_300_ckpt.pth --fuse

Everything went smoothly and I got results, but they are not great; I still need to keep tuning the hyperparameters...

(DAMO-YOLO) PS E:\proj\DAMO-YOLO> python -m torch.distributed.launch --nproc_per_node=1 --use_env eval.py -f configs/damoyolo_tinynasL20_T.py -c workdirs/damoyolo_tinynasL20_T/epoch_300_ckpt.pth --fuse

[2024-04-11 09:49:49,208] torch.distributed.elastic.multiprocessing.redirects: [WARNING] NOTE: Redirects are currently not supported in Windows or MacOs.

C:\Users\chonpsk\.conda\envs\DAMO-YOLO\lib\site-packages\torch\distributed\launch.py:183: FutureWarning: The module torch.distributed.launch is deprecated

and will be removed in future. Use torchrun.

Note that --use-env is set by default in torchrun.

If your script expects `--local-rank` argument to be set, please

change it to read from `os.environ['LOCAL_RANK']` instead. See

https://pytorch.org/docs/stable/distributed.html#launch-utility for

further instructions

warnings.warn(

[W socket.cpp:697] [c10d] The client socket has failed to connect to [kubernetes.docker.internal]:29500 (system error: 10049 - The requested address is not valid in its context.).

[W socket.cpp:697] [c10d] The client socket has failed to connect to [kubernetes.docker.internal]:29500 (system error: 10049 - The requested address is not valid in its context.).

2024-04-11 09:49:51 | INFO | __main__:93 - Args: Namespace(ckpt='workdirs/damoyolo_tinynasL20_T/epoch_300_ckpt.pth', conf=None, config_file='configs/damoyolo_tinynasL20_T.py', fuse=True, local_rank=0, nms=None, opts=[], seed=None, test=False, tsize=None)

2024-04-11 09:49:51 | INFO | __main__:102 - loading checkpoint from workdirs/damoyolo_tinynasL20_T/epoch_300_ckpt.pth

2024-04-11 09:49:51 | INFO | __main__:110 - loaded checkpoint done.

2024-04-11 09:49:54 | INFO | __main__:117 - Model Summary: backbone's params(M): 2.17, flops(G): 7.97, latency(ms): 1.125

neck's params(M): 5.71, flops(G): 8.24, latency(ms): 3.125

head's params(M): 0.28, flops(G): 0.89, latency(ms): 1.125

total latency(ms): 5.640, total flops(G): 17.10, total params(M): 8.16

2024-04-11 09:49:54 | INFO | __main__:122 - Fusing model...

2024-04-11 09:49:54 | INFO | torchvision.datasets.coco:36 - loading annotations into memory...

2024-04-11 09:49:54 | INFO | torchvision.datasets.coco:36 - Done (t=0.00s)

2024-04-11 09:49:54 | INFO | pycocotools.coco:89 - creating index...

2024-04-11 09:49:54 | INFO | pycocotools.coco:89 - index created!

2024-04-11 09:49:54 | INFO | damo.apis.detector_inference:75 - Start evaluation on coco_2017_val dataset(160 images).

100%|##############################################################################################################################################| 2/2 [00:09<00:00, 4.70s/it]

2024-04-11 09:50:04 | INFO | damo.apis.detector_inference:87 - Total run time: 0:00:09.420176 (0.058876097202301025 s / img per device, on 1 devices)

2024-04-11 09:50:04 | INFO | damo.apis.detector_inference:92 - Model inference time: 0:00:00.856720 (0.005354499816894532 s / img per device, on 1 devices)

2024-04-11 09:50:04 | INFO | damo.dataset.datasets.evaluation.coco.coco_eval:42 - Preparing results for COCO format

2024-04-11 09:50:04 | INFO | damo.dataset.datasets.evaluation.coco.coco_eval:45 - Preparing bbox results

2024-04-11 09:50:04 | INFO | damo.dataset.datasets.evaluation.coco.coco_eval:49 - Evaluating predictions

2024-04-11 09:50:04 | INFO | damo.dataset.datasets.evaluation.coco.coco_eval:231 - Loading and preparing results...

2024-04-11 09:50:04 | INFO | damo.dataset.datasets.evaluation.coco.coco_eval:231 - DONE (t=0.01s)

2024-04-11 09:50:04 | INFO | pycocotools.coco:362 - creating index...

2024-04-11 09:50:04 | INFO | pycocotools.coco:362 - index created!

2024-04-11 09:50:04 | INFO | damo.dataset.datasets.evaluation.coco.coco_eval:236 - Running per image evaluation...

2024-04-11 09:50:04 | INFO | damo.dataset.datasets.evaluation.coco.coco_eval:236 - Evaluate annotation type *bbox*

2024-04-11 09:50:04 | INFO | damo.dataset.datasets.evaluation.coco.coco_eval:236 - DONE (t=0.10s).

2024-04-11 09:50:04 | INFO | damo.dataset.datasets.evaluation.coco.coco_eval:237 - Accumulating evaluation results...

2024-04-11 09:50:04 | INFO | damo.dataset.datasets.evaluation.coco.coco_eval:237 - DONE (t=0.02s).

2024-04-11 09:50:04 | INFO | pycocotools.cocoeval:463 - Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.473

2024-04-11 09:50:04 | INFO | pycocotools.cocoeval:464 - Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.895

2024-04-11 09:50:04 | INFO | pycocotools.cocoeval:465 - Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.409

2024-04-11 09:50:04 | INFO | pycocotools.cocoeval:466 - Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.340

2024-04-11 09:50:04 | INFO | pycocotools.cocoeval:467 - Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.503

2024-04-11 09:50:04 | INFO | pycocotools.cocoeval:468 - Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.621

2024-04-11 09:50:04 | INFO | pycocotools.cocoeval:469 - Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.215

2024-04-11 09:50:04 | INFO | pycocotools.cocoeval:470 - Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.584

2024-04-11 09:50:04 | INFO | pycocotools.cocoeval:472 - Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.544

2024-04-11 09:50:04 | INFO | pycocotools.cocoeval:473 - Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.611

2024-04-11 09:50:04 | INFO | damo.dataset.datasets.evaluation.coco.coco_eval:59 - OrderedDict([('bbox', OrderedDict([('AP', 0.4730805870212621), ('AP50', 0.895090459391299), ('AP75', 0.4091130859655121), ('APs', 0.3403641442326747), ('APm', 0.5026130249143416), ('APl', 0.6209136920203059)]))])
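For reading these numbers: COCO AP@[IoU=0.50:0.95] averages precision over ten IoU thresholds (0.50, 0.55, ..., 0.95). Here AP@0.50 is 0.895 while AP@0.75 is only 0.409, which suggests most targets are found but many boxes are not tightly localized. Below is a minimal pure-Python sketch of IoU for boxes in `(x0, y0, x1, y1)` form (the corner layout `vis` reads from each box) and of the threshold grid; the helper names are my own, and the matching rule is a simplification of what pycocotools does.

```python
def iou_xyxy(a, b):
    """Intersection over union of two boxes given as (x0, y0, x1, y1)."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# The ten thresholds behind "IoU=0.50:0.95": 0.50, 0.55, ..., 0.95
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def matches_per_threshold(pred, gt):
    """Whether pred would roughly count as a hit for gt at each COCO threshold."""
    iou = iou_xyxy(pred, gt)
    return {t: iou >= t for t in IOU_THRESHOLDS}


if __name__ == "__main__":
    gt = (0, 0, 10, 10)
    loose = (0, 0, 10, 7)  # IoU = 70 / 100 = 0.7
    hits = matches_per_threshold(loose, gt)
    print(sum(hits.values()), "of", len(hits))  # 5 of 10
```

A box like `loose` counts toward AP50 but not AP75, which is the pattern the log above shows.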

Inference

Inference hit a small snag: at runtime it suddenly complained that the NumPy array was marked read-only and could not be modified.
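OpenCV drawing functions such as `cv2.rectangle` write into the image in place, so they reject a NumPy array whose `writeable` flag is False. I did not trace where demo.py's image becomes read-only; the sketch below only reproduces the condition with `np.frombuffer` (which returns a read-only view over an immutable `bytes` object) and shows a helper, `ensure_writable` (hypothetical, not part of DAMO-YOLO), that copies only when needed.

```python
import numpy as np


def ensure_writable(img):
    """Return img unchanged if it can be drawn on in place, else a writable copy."""
    if img.flags.writeable:
        return img
    # np.copy allocates a new array that owns its data and is writable
    return np.copy(img)


if __name__ == "__main__":
    # np.frombuffer over an immutable bytes object gives a read-only array;
    # reshape returns a view that stays read-only
    readonly = np.frombuffer(bytes(2 * 2 * 3), dtype=np.uint8).reshape(2, 2, 3)
    print(readonly.flags.writeable)  # False
    try:
        readonly[0, 0] = 255
    except ValueError as exc:
        print("in-place write failed:", exc)
    img = ensure_writable(readonly)
    img[0, 0] = 255  # drawing on the copy is fine
    print(img.flags.writeable)  # True
```

Copying only when needed keeps the in-place behaviour for ordinary arrays; the visualize.py fix further down always copies, which is simpler and costs one image-sized allocation per call.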

2024-04-11 10:13:31.168 | ERROR | __main__:<module>:356 - An error has been caught in function '<module>', process 'MainProcess' (980), thread 'MainThread' (23684):

Traceback (most recent call last):

> File "demo.py", line 356, in <module>

main()

└ <function main at 0x000001C91010F820>

File "demo.py", line 321, in main

vis_res = infer_engine.visualize(origin_img, bboxes, scores, cls_inds, conf=args.conf, save_name=os.path.basename(args.path), save_result=args.save_result)

│ │ │ │ │ │ │ │ │ │ │ │ │ │ └ True

│ │ │ │ │ │ │ │ │ │ │ │ │ └ Namespace(camid=0, conf=0.6, config_file='./configs/damoyolo_tinynasL20_T.py', device='cuda', end2end=False, engine='workdirs...

│ │ │ │ │ │ │ │ │ │ │ │ └ 'datasets/Custom_coco/images/val/66_crop_0.jpg'

│ │ │ │ │ │ │ │ │ │ │ └ Namespace(camid=0, conf=0.6, config_file='./configs/damoyolo_tinynasL20_T.py', device='cuda', end2end=False, engine='workdirs...

│ │ │ │ │ │ │ │ │ │ └ <function basename at 0x000001C95AD39EE0>

│ │ │ │ │ │ │ │ │ └ <module 'ntpath' from 'C:\\Users\\chonpsk\\.conda\\envs\\DAMO-YOLO\\lib\\ntpath.py'>

│ │ │ │ │ │ │ │ └ <module 'os' from 'C:\\Users\\chonpsk\\.conda\\envs\\DAMO-YOLO\\lib\\os.py'>

│ │ │ │ │ │ │ └ 0.6

│ │ │ │ │ │ └ Namespace(camid=0, conf=0.6, config_file='./configs/damoyolo_tinynasL20_T.py', device='cuda', end2end=False, engine='workdirs...

│ │ │ │ │ └ tensor([0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0.,

│ │ │ │ │ 0., 0., 0., 0...

│ │ │ │ └ tensor([0.6785, 0.6641, 0.6581, 0.6514, 0.6470, 0.6399, 0.5757, 0.5683, 0.5609,

│ │ │ │ 0.5585, 0.5564, 0.5532, 0.5524, 0.521...

│ │ │ └ tensor([[309.0302, 229.5414, 322.8981, 261.2090],

│ │ │ [267.1926, 28.5143, 280.6399, 46.0551],

│ │ │ [307.3100, 135.57...

│ │ └ array([[[255, 255, 255],

│ │ [255, 255, 255],

│ │ [255, 255, 255],

│ │ ...,

│ │ [255, 255, 255],

│ │ [255...

│ └ <function Infer.visualize at 0x000001C91010F700>

└ <__main__.Infer object at 0x000001C95AE46550>

File "demo.py", line 249, in visualize

vis_img = vis(image, bboxes, scores, cls_inds, conf, self.class_names)

│ │ │ │ │ │ │ └ ['qx']

│ │ │ │ │ │ └ <__main__.Infer object at 0x000001C95AE46550>

│ │ │ │ │ └ 0.6

│ │ │ │ └ tensor([0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0.,

│ │ │ │ 0., 0., 0., 0...

│ │ │ └ tensor([0.6785, 0.6641, 0.6581, 0.6514, 0.6470, 0.6399, 0.5757, 0.5683, 0.5609,

│ │ │ 0.5585, 0.5564, 0.5532, 0.5524, 0.521...

│ │ └ tensor([[309.0302, 229.5414, 322.8981, 261.2090],

│ │ [267.1926, 28.5143, 280.6399, 46.0551],

│ │ [307.3100, 135.57...

│ └ array([[[255, 255, 255],

│ [255, 255, 255],

│ [255, 255, 255],

│ ...,

│ [255, 255, 255],

│ [255...

└ <function vis at 0x000001C910064DC0>

File "E:\proj\DAMO-YOLO\damo\utils\visualize.py", line 30, in vis

cv2.rectangle(img, (x0, y0), (x1, y1), color, 2)

│ │ │ │ │ │ │ └ [0, 113, 188]

│ │ │ │ │ │ └ 261

│ │ │ │ │ └ 322

│ │ │ │ └ 229

│ │ │ └ 309

│ │ └ array([[[255, 255, 255],

│ │ [255, 255, 255],

│ │ [255, 255, 255],

│ │ ...,

│ │ [255, 255, 255],

│ │ [255...

│ └ <built-in function rectangle>

└ <module 'cv2' from 'C:\\Users\\chonpsk\\.conda\\envs\\DAMO-YOLO\\lib\\site-packages\\cv2\\__init__.py'>

cv2.error: OpenCV(4.9.0) :-1: error: (-5:Bad argument) in function 'rectangle'

> Overload resolution failed:

> - img marked as output argument, but provided NumPy array marked as readonly

> - Expected Ptr<cv::UMat> for argument 'img'

> - img marked as output argument, but provided NumPy array marked as readonly

> - Expected Ptr<cv::UMat> for argument 'img'

So I modified the source code further:

# damo/utils/visualize.py
# (cv2, numpy as np and _COLORS are already available at module level in this file)
def vis(img, boxes, scores, cls_ids, conf=0.5, class_names=None):
    # The traceback shows img is read-only here, so the original calls that drew
    # directly on img are commented out and the drawing is done on a copy instead
    img_copy = np.copy(img)
    for i in range(len(boxes)):
        box = boxes[i]
        cls_id = int(cls_ids[i])
        score = scores[i]
        if score < conf:
            continue
        x0 = int(box[0])
        y0 = int(box[1])
        x1 = int(box[2])
        y1 = int(box[3])

        color = (_COLORS[cls_id] * 255).astype(np.uint8).tolist()
        text = '{}:{:.1f}%'.format(class_names[cls_id], score * 100)
        txt_color = (0, 0, 0) if np.mean(_COLORS[cls_id]) > 0.5 else (255, 255, 255)
        font = cv2.FONT_HERSHEY_SIMPLEX

        txt_size = cv2.getTextSize(text, font, 0.4, 1)[0]
        # Change 1
        cv2.rectangle(img_copy, (x0, y0), (x1, y1), color, 2)
        # cv2.rectangle(img, (x0, y0), (x1, y1), color, 2)

        txt_bk_color = (_COLORS[cls_id] * 255 * 0.7).astype(np.uint8).tolist()
        # Change 2
        # cv2.rectangle(img, (x0, y0 + 1),
        #               (x0 + txt_size[0] + 1, y0 + int(1.5 * txt_size[1])),
        #               txt_bk_color, -1)
        cv2.rectangle(img_copy, (x0, y0 + 1),
                      (x0 + txt_size[0] + 1, y0 + int(1.5 * txt_size[1])),
                      txt_bk_color, -1)
        # Change 3
        # cv2.putText(img,
        #             text, (x0, y0 + txt_size[1]),
        #             font,
        #             0.4,
        #             txt_color,
        #             thickness=1)
        cv2.putText(img_copy,
                    text, (x0, y0 + txt_size[1]),
                    font,
                    0.4,
                    txt_color,
                    thickness=1)

    # Finally, return the modified copy instead of img
    # return img
    return img_copy

Run it (same procedure as above: move the script to the project root):

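The README says inference supports images, videos and webcams; here I use an image. -f selects the model config and --engine loads the corresponding weight file. Set conf to 0.6 (only detections with a confidence above 60% are kept; the rest are discarded), the image size to 640 640, use CUDA for acceleration, and give the image with --path. Only certain image sizes are accepted here; others raise a tensor concatenation error. Note that 640 is not a power of two, so the constraint is more likely that each side must be a multiple of the network's largest stride (probably 32).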

python demo.py image -f ./configs/damoyolo_tinynasL20_T.py --engine workdirs/damoyolo_tinynasL20_T/epoch_300_ckpt.pth --conf 0.6 --infer_size 640 640 --device cuda --path datasets/Custom_coco/images/val/199_crop_3.jpg
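If other `--infer_size` values hit the concatenation error below, one hedged workaround is to round each side to a multiple of the network's largest stride before passing it in. The stride of 32 is my assumption (three output levels with strides 8, 16 and 32 is typical for this kind of detector), not something I verified in the DAMO-YOLO source, and `snap_to_stride` is a hypothetical helper.

```python
import math


def snap_to_stride(size, stride=32, round_up=True):
    """Round an image side to a multiple of `stride` (32 is an assumed max stride)."""
    if size <= 0 or stride <= 0:
        raise ValueError("size and stride must be positive")
    if round_up:
        return math.ceil(size / stride) * stride
    # Round down, but never below a single stride
    return max(stride, (size // stride) * stride)


if __name__ == "__main__":
    for side in (640, 1000, 500):
        print(side, "->", snap_to_stride(side))
```

For example, 1000 would become 1024 (or 992 with `round_up=False`), while 640 is already a multiple of 32 and stays unchanged.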

As for the concatenation error, I didn't investigate further; I'm leaving the error message here for now. How can it be 63? What's going on?

2024-04-11 09:59:15.302 | ERROR | __main__:<module>:356 - An error has been caught in function '<module>', process 'MainProcess' (12708), thread 'MainThread' (25264):

Traceback (most recent call last):

> File "demo.py", line 356, in <module>

main()

└ <function main at 0x00000204F6D40820>

File "demo.py", line 320, in main

bboxes, scores, cls_inds = infer_engine.forward(origin_img)

│ │ └ array([[[255, 255, 255],

│ │ [255, 255, 255],

│ │ [255, 255, 255],

│ │ ...,

│ │ [255, 255, 255],

│ │ [255...

│ └ <function Infer.forward at 0x00000204F6D40670>

└ <__main__.Infer object at 0x00000204C1D46550>

File "demo.py", line 234, in forward

output = self.model(image)

│ │ └ <damo.structures.image_list.ImageList object at 0x00000204F6F42DF0>

│ └ Detector(

│ (backbone): TinyNAS(

│ (block_list): ModuleList(

│ (0): Focus(

│ (conv): ConvBNAct(

│ (conv):...

└ <__main__.Infer object at 0x00000204C1D46550>

File "C:\Users\chonpsk\.conda\envs\DAMO-YOLO\lib\site-packages\torch\nn\modules\module.py", line 1511, in _wrapped_call_impl

return self._call_impl(*args, **kwargs)

│ │ │ └ {}

│ │ └ (<damo.structures.image_list.ImageList object at 0x00000204F6F42DF0>,)

│ └ <function Module._call_impl at 0x00000204EC534EE0>

└ Detector(

(backbone): TinyNAS(

(block_list): ModuleList(

(0): Focus(

(conv): ConvBNAct(

(conv):...

File "C:\Users\chonpsk\.conda\envs\DAMO-YOLO\lib\site-packages\torch\nn\modules\module.py", line 1520, in _call_impl

return forward_call(*args, **kwargs)

│ │ └ {}

│ └ (<damo.structures.image_list.ImageList object at 0x00000204F6F42DF0>,)

└ <bound method Detector.forward of Detector(

(backbone): TinyNAS(

(block_list): ModuleList(

(0): Focus(

(c...

File "E:\proj\lineCheck\DAMO-YOLO\damo\detectors\detector.py", line 56, in forward

fpn_outs = self.neck(feature_outs)

│ └ [tensor([[[[0.3254, 0.0000, 0.1727, ..., 0.1379, 0.0000, 2.4748],

│ [0.7851, 0.0000, 0.0000, ..., 0.0000, 0.0000, 2...

└ Detector(

(backbone): TinyNAS(

(block_list): ModuleList(

(0): Focus(

(conv): ConvBNAct(

(conv):...

File "C:\Users\chonpsk\.conda\envs\DAMO-YOLO\lib\site-packages\torch\nn\modules\module.py", line 1511, in _wrapped_call_impl

return self._call_impl(*args, **kwargs)

│ │ │ └ {}

│ │ └ ([tensor([[[[0.3254, 0.0000, 0.1727, ..., 0.1379, 0.0000, 2.4748],

│ │ [0.7851, 0.0000, 0.0000, ..., 0.0000, 0.0000, ...

│ └ <function Module._call_impl at 0x00000204EC534EE0>

└ GiraffeNeckV2(

(upsample): Upsample(scale_factor=2.0, mode='nearest')

(bu_conv13): ConvBNAct(

(conv): Conv2d(192, 192...

File "C:\Users\chonpsk\.conda\envs\DAMO-YOLO\lib\site-packages\torch\nn\modules\module.py", line 1520, in _call_impl

return forward_call(*args, **kwargs)

│ │ └ {}

│ └ ([tensor([[[[0.3254, 0.0000, 0.1727, ..., 0.1379, 0.0000, 2.4748],

│ [0.7851, 0.0000, 0.0000, ..., 0.0000, 0.0000, ...

└ <bound method GiraffeNeckV2.forward of GiraffeNeckV2(

(upsample): Upsample(scale_factor=2.0, mode='nearest')

(bu_conv13):...

File "E:\proj\lineCheck\DAMO-YOLO\damo\base_models\necks\giraffe_fpn_btn.py", line 113, in forward

x5 = torch.cat([x2, x45], 1)

│ │ │ └ tensor([[[[1.4854, 1.4854, 1.4719, ..., 0.6666, 0.1078, 0.1078],

│ │ │ [1.4854, 1.4854, 1.4719, ..., 0.6666, 0.1078, 0....

│ │ └ tensor([[[[0.3254, 0.0000, 0.1727, ..., 0.1379, 0.0000, 2.4748],

│ │ [0.7851, 0.0000, 0.0000, ..., 0.0000, 0.0000, 2....

│ └ <built-in method cat of type object at 0x00007FFAACDD2D50>

└ <module 'torch' from 'C:\\Users\\chonpsk\\.conda\\envs\\DAMO-YOLO\\lib\\site-packages\\torch\\__init__.py'>

RuntimeError: Sizes of tensors must match except in dimension 1. Expected size 63 but got size 64 for tensor number 1 in the list.
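The numbers fit an input side that is not divisible by 32. The sketch below is pure arithmetic under assumptions of my own: each downsampling step is a 3×3, stride-2, padding-1 convolution, whose output size is floor((n + 2 - 3) / 2) + 1 = ceil(n / 2); the neck's `Upsample(scale_factor=2.0, mode='nearest')` (visible in the traceback) doubles the size; and `x45` in `torch.cat([x2, x45], 1)` is an upsampled coarser map. For an illustrative side of 1000 pixels, the stride-16 map is 63 wide, the stride-32 map is ceil(63 / 2) = 32, and upsampling that gives 64, which cannot be concatenated with 63. The helper names are hypothetical.

```python
import math


def pyramid_sizes(side, num_halvings=5):
    """Side length after each stride-2 step, assuming out = ceil(in / 2)."""
    sizes = [side]
    for _ in range(num_halvings):
        sizes.append(math.ceil(sizes[-1] / 2))
    return sizes  # sizes[k] is the map at stride 2**k


def upsample_mismatches(side, num_halvings=5):
    """(stride, finer size, 2x upsampled coarser size) wherever the two differ."""
    sizes = pyramid_sizes(side, num_halvings)
    return [
        (2 ** k, sizes[k], 2 * sizes[k + 1])
        for k in range(num_halvings)
        if 2 * sizes[k + 1] != sizes[k]
    ]


if __name__ == "__main__":
    for side in (640, 1000):
        print(side, pyramid_sizes(side), upsample_mismatches(side))
```

When every side is a multiple of 32, each halving is exact and `upsample_mismatches` returns an empty list, which is consistent with 640×640 working.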
