Compute Express Link or CXL What it is and Examples

One of the biggest topics coming to the server industry in 2022 will be Compute Express Link or CXL. We started covering CXL when it was first announced in 2019. The project went from an Intel effort to a broad industry consortium and is simply where the industry will head as we enter the 2022 PCIe Gen5 era. Still, there are many who do not necessarily understand CXL, so we are going to try explaining CXL using an easy visual example: limes.

A Video Version

Since we are going to be talking about CXL for years to come, we have a video version of this piece that you can find here.

As always, we suggest opening this in a YouTube tab/ window for a better viewing experience.

The Quick “What is CXL?” Answer

Compute Express Link is a cache-coherent link meant to help systems, especially those with accelerators, operate more efficiently. CXL sits atop the PCIe Gen5 link infrastructure. There can be PCIe-only Gen5 devices, but many PCIe Gen5 (or PCIe 5.0) devices will also support the ability to run lanes in either PCIe or CXL modes. In some ways, this is analogous to how AMD EPYC CPUs can run their I/O lanes as either PCIe or as Infinity Fabric. Instead of being a vendor-specific implementation, CXL is going to be a broad industry standard. The key advantage of CXL is that it allows load/stores to happen to memory that is directly attached to different endpoints, which is what we are going to get into next.

Stephen Van Doren CXL Interconnect PCIe Gen5 And Gen6

We are going to focus a lot on memory here. Memory is a key driver for CXL: hyper-scalers realized that almost every device these days has onboard memory, that memory is a large cost driver, and that it is often stranded because the device it is attached to under-utilizes it. At a higher level, CXL is a tool for systems to efficiently share and utilize memory across a number of devices. We are discussing these in the context of servers today, but there is no reason it cannot be applied beyond servers.

Why is CXL not used in the PCIe Gen3/ Gen4 era?

There are a few reasons that we are waiting until PCIe 5.0 for CXL. The first is simply timing. CXL was introduced in 2019, which means late 2021 and early 2022 are really the first generations of chips in which we expect to see support. These things take time.

A key enabler though is the rapid adoption of PCIe Gen5. It took the industry around seven years to transition from PCIe Gen3 to Gen4. The PCIe Gen5 transition is occurring about three years after Gen4, and Gen5 effectively doubles the bandwidth of Gen4. Since CXL sits atop PCIe Gen5, we generally see a controller with a 32GT/s x16 link, but CXL can also support bifurcation to x8 and x4. There are also x2 and x1 link widths available in what is called “Degraded” mode. It is important to remember that a PCIe Gen5 x4 link has enough bandwidth to handle a 100GbE link, so even that starting level represents a large amount of bandwidth. It is akin to the PCIe Gen3 x16 link that Intel still used as a top-end connectivity option in Q1 2021.
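To make the bandwidth comparison concrete, here is a rough back-of-the-envelope sketch. It uses the published per-lane transfer rates and the 128b/130b line encoding shared by PCIe Gen3 through Gen5, and it ignores packet and protocol overhead, so real-world throughput will be somewhat lower. The function and variable names are our own for illustration.

```python
# Approximate per-direction PCIe link bandwidth.
# Per-lane transfer rates (GT/s) are the published PCIe figures.
# Packet/protocol overhead is ignored, so these are upper bounds.
RATES_GT_S = {3: 8.0, 4: 16.0, 5: 32.0}
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b encoding (Gen3 through Gen5)


def link_gbps(gen: int, lanes: int) -> float:
    """Line rate in Gbit/s for one direction of a PCIe link, after encoding."""
    return RATES_GT_S[gen] * lanes * ENCODING_EFFICIENCY


for gen, lanes in [(3, 16), (4, 16), (5, 16), (5, 8), (5, 4)]:
    gbps = link_gbps(gen, lanes)
    print(f"Gen{gen} x{lanes:<2}: {gbps:6.1f} Gbit/s ({gbps / 8:5.1f} GB/s)")
```

Under these assumptions a Gen5 x4 link and a Gen3 x16 link come out to the same figure, and both sit above the 100 Gbit/s needed for a 100GbE port.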

Hot Chips 32 Intel Agilex R Tile PCIe Gen5 And CXL

The additional bandwidth means that PCIe will have enough bandwidth to handle a new class of use cases, specifically for those involving remote memory load/stores that previous generations were not fast enough to handle.

For those wondering why this did not happen sooner, there are three main reasons. First, we needed the speed of PCIe Gen5. Second, we needed CXL to be created. Third, it then takes time for industry adoption to occur. Now that we have all three, CXL is the future.

Stephen Van Doren CXL Interconnect Protocol Asymmetry

Compute Express Link Protocol Trifecta

CXL uses three main protocols:

- CXL.io is the protocol used for initialization, link-up, device discovery and enumeration, and register access. It provides a non-coherent load/store interface for I/O devices and is similar to PCIe Gen5. Support for CXL.io is mandatory for all CXL devices.

- CXL.cache is the protocol that defines interactions between a Host (usually a CPU) and a Device (such as a CXL memory module or accelerator). This allows attached CXL Devices to cache Host memory with low latency. Think of this as a GPU directly caching data stored in the CPU’s memory.

- CXL.memory / CXL.mem is the protocol that provides a Host processor (usually a CPU) with direct access to Device-attached memory using load/store commands. Think of this as the CPU using a dedicated storage-class memory Device or using the memory found on a GPU/accelerator Device.

CXL.io is mandatory to get an endpoint on CXL, but from there we can have CXL.io and any of the three combinations of CXL.cache and/or CXL.mem. The CXL folks use Type 1 (CXL.io + CXL.cache), Type 2 (all three), and Type 3 (CXL.io + CXL.mem) as examples.

One can think of Type 1 as an accelerator, like a NIC, that accesses the host CPU’s memory directly. Type 2 is a case where we have an accelerator, like a GPU with memory, where the GPU can access host memory while the host CPU can access GPU memory. One can think of Type 3 as a memory module whose primary purpose is to expose the CXL memory device’s media to the host CPU.
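The mapping from protocol combinations to the three example device types can be written down in a few lines. This is only an illustrative sketch: `CxlProtocol` and `device_type` are names we made up, not part of any CXL software stack.

```python
from enum import Flag, auto


class CxlProtocol(Flag):
    """The three CXL protocols (hypothetical names for this illustration)."""
    IO = auto()
    CACHE = auto()
    MEM = auto()


def device_type(protocols: CxlProtocol) -> str:
    """Map a set of supported protocols to the Type 1/2/3 examples."""
    if not protocols & CxlProtocol.IO:
        raise ValueError("CXL.io is mandatory for every CXL device")
    has_cache = bool(protocols & CxlProtocol.CACHE)
    has_mem = bool(protocols & CxlProtocol.MEM)
    if has_cache and has_mem:
        return "Type 2"  # e.g. a GPU with its own memory
    if has_cache:
        return "Type 1"  # e.g. a NIC caching host memory
    if has_mem:
        return "Type 3"  # e.g. a memory expansion module
    return "CXL.io only"


print(device_type(CxlProtocol.IO | CxlProtocol.CACHE))
print(device_type(CxlProtocol.IO | CxlProtocol.CACHE | CxlProtocol.MEM))
print(device_type(CxlProtocol.IO | CxlProtocol.MEM))
```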

CXL 1.1 v. 2.0

Most of the early 2022 devices we will see will utilize CXL 1.1. These are still primarily host CPU managed topologies where CXL is used within a system. CXL 2.0 is where we get really interesting use cases. With CXL 2.0 we get CXL switching. CXL switching and pooling allows multiple hosts and multiple devices to be connected to a switch. Devices can then be assigned to different hosts either as complete devices or as logical devices. CXL 2.0 is where we will start to see the game-changing deployment scenarios.
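As a very rough mental model of pooling, one can picture a memory device behind a switch that is handed to hosts either whole or in logical slices. The sketch below is a toy bookkeeping model only. The class name, the GB-granular slices, and the host names are all our assumptions, and it says nothing about how a real CXL switch or fabric manager is implemented.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class PooledMemoryDevice:
    """Toy model of a pooled memory device (hypothetical, for illustration)."""
    name: str
    capacity_gb: int
    allocations: Dict[str, int] = field(default_factory=dict)  # host -> GB

    @property
    def free_gb(self) -> int:
        return self.capacity_gb - sum(self.allocations.values())

    def assign(self, host: str, size_gb: Optional[int] = None) -> int:
        """Assign the whole device (size_gb=None) or a logical slice to a host."""
        size = self.capacity_gb if size_gb is None else size_gb
        if size <= 0 or size > self.free_gb:
            raise ValueError(f"cannot assign {size} GB, only {self.free_gb} GB free")
        self.allocations[host] = self.allocations.get(host, 0) + size
        return size


# One expander carved into logical slices for two hosts.
shared = PooledMemoryDevice("expander0", capacity_gb=512)
shared.assign("host-a", 128)
shared.assign("host-b", 256)
print(shared.allocations, "free:", shared.free_gb)

# A second expander assigned to a single host as a complete device.
dedicated = PooledMemoryDevice("expander1", capacity_gb=256)
dedicated.assign("host-a")
print(dedicated.allocations, "free:", dedicated.free_gb)
```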

CXL 2.0 Switching Pooling

Beyond CXL 2.0 switching and pooling, we get additional security layers to the protocol.

CXL 2.0 Security

While CXL 1.0/ 1.1 will be big to start the initiative, CXL 2.0 is where we start to see truly game-changing functionality shifts. This is primarily because we get to start changing how server architectures and deployments happen. CXL 1.1 is necessary, and there are use cases especially with accelerators and GPUs. CXL 2.0 though is where hopefully we have enough software maturity that server designs change forever.

Next, we are going to discuss some examples and use our tacos and limes to help those who have difficulty visualizing some common types of CXL usage from slides.

Three Compute Express Link (CXL) Examples… Using Tacos and Limes

So let us get to tacos and limes, and why these use cases matter. This is going to be a very high-level look and is not perfect, but hopefully, this helps folks visualize some of the high-level concepts.

CXL 1.0 And 1.1 Usages

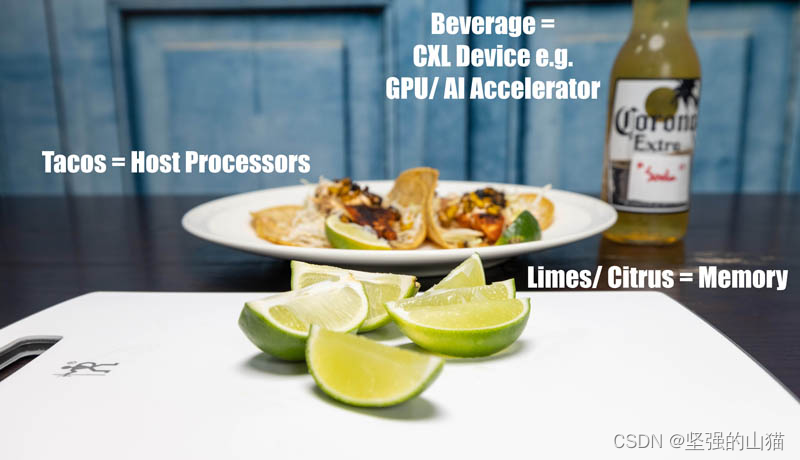

In our example, limes are going to represent memory. Main dishes are going to be represented by wonderful Rubio’s Blackened Mahi Mahi Tacos (also known as my dinner so it did not go to waste.) Accelerators are going to be represented by beverages.

CXL Taco And Lime Example Two Tacos Plate Of Limes Soda Setup

In our first example, perhaps we received one lime on our two Rubio’s blackened Mahi Mahi tacos.

CXL Taco And Lime Example Two Tacos One Lime One Soda

This is not uncommon. Most who have experienced Rubio’s tacos have had one “under-limed” event. Indeed, even the advertisements posted on store windows illustrate that this may happen showing one lime with three tacos.

Rubios Store Window Advertisement 3 Tacos 1 Lime

This shows the resource inefficiencies with provisioning memory (limes.) Our first example though discusses one possible action. Perhaps today is not a lime taco day, but it is a lime beverage day. Here, we can access the lime juice (memory) from the host processors (tacos) and use it for our beverage. That is the idea behind CXL.cache and the Type 1 device example.

CXL Lime Being Accessed Via CXL.cache Beverage

In our second example, our processors (tacos) came from Rubio’s with only one lime for two tacos. This is an example of where the host processor attached memory was insufficient. We can utilize the lime juice from the dish of limes in the center (CXL memory expansion module.) This is an example of CXL.mem and the Type 3 device.

CXL Taco And Lime Example Plate Of Limes

In theory, especially once we get to CXL 2.0 with switching and pooling, the memory we access does not have to be DDR5. We could (again theoretically) have CXL devices that use SCM like Intel Optane, GDDR6X, or other technologies. Cost and demand will naturally impact availability but that is why this is so important.

CXL Taco And Lime Example Taco With Citrus Variety 2

In this use case, we can have multiple types of memory, attach them to a single system, and then access entire memory devices, or use portions of devices for different purposes. CXL 2.0 has a hot plug functionality that will help make this happen. Since we are already seeing devices come out for this use case, eventually we expect this will be important. It also allows more system memory capacity than traditional DIMM form factors allow.

In our third example, let us get a bit crazier and assume we wanted to use limes from our beverages (accelerators like GPUs) on the tacos (processors) and some of the lime juice from our tacos for our beverages. This is an example of a Type 2 device.

CXL Taco And Lime Example Many Tacos Many Sodas Citrus Variety

Again, where things start to get really fun is when, instead of our standard DDR5 memory limes, we want 3D XPoint/Intel Optane persistent memory or storage class memory (SCM.) We can package that SCM media (lemons) into something that looks like what we used for limes, and now have access to a Type 3 device with lemons instead of limes, or a mix of lemons and limes. Instead of a GPU and a CPU each needing their own SCM, in the CXL 2.0 world both can access the SCM or even a large pool of connected memory.

While this is not a perfect example by any means, hopefully, the photo above helps show where this is going. Just like in virtualization, the ability for a GPU to access host system or Type-3 connected device memory means that we can have more efficient use of resources and system design. We do not need a GPU/ AI accelerator with Optane memory that is separate from what we have for the host CPUs. That would be cost-prohibitive and inflexible. CXL adds this flexibility to systems. CXL helps control costs while also adding flexibility to architectures which is analogous to what virtualization did for the industry years ago.

Future Enablement Example Beyond Simple Provisioning

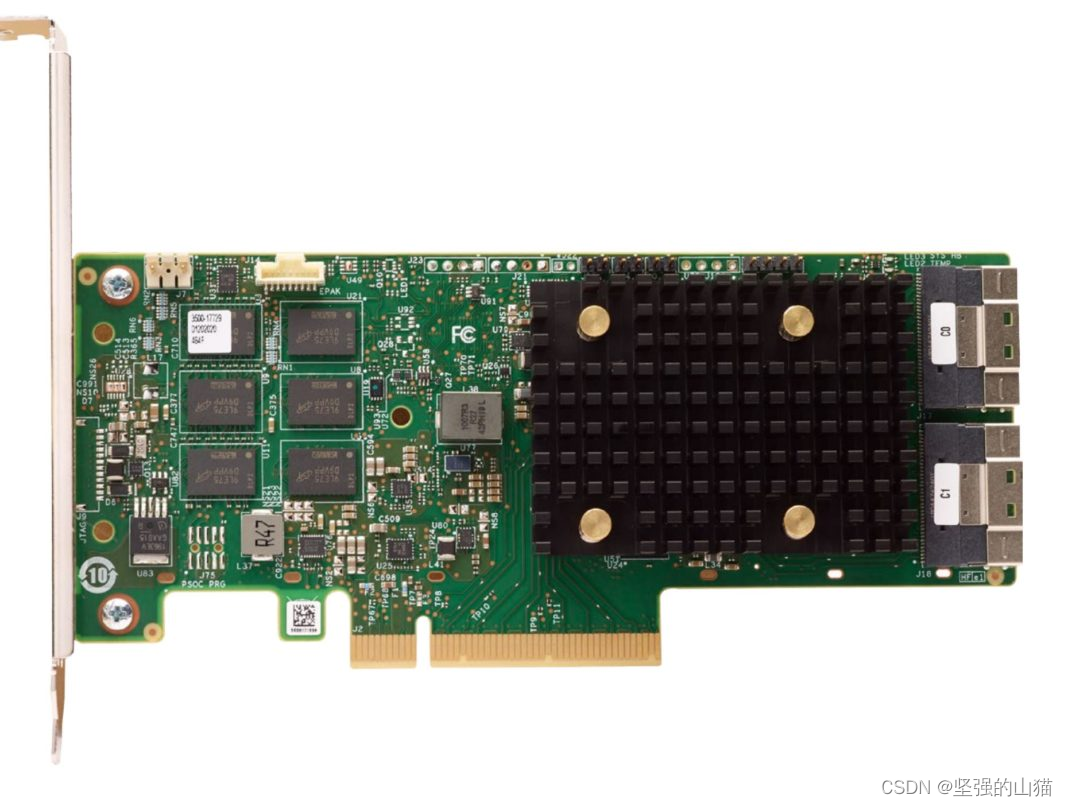

Provisioning is important, as is efficient resource utilization, but let us go one step further and imagine how this changes architecture. Although they are less common in large-scale deployments today, many enterprises still use hardware RAID controllers.

Broadcom 9500 RAID Controller

Those generally operate with a few key components and functions:

- There is a SoC that controls data movement, parity calculations, caching, and storage/ retrieval.

- Onboard DRAM acts as a write cache and often a small read-cache.

- A battery/capacitor FRU that allows writes cached in the onboard DRAM to flush safely even if power is lost.

- Flash storage for the onboard DRAM to flush to in the event of a power loss.

- Storage controllers to manage disks.

- SSDs either for primary storage or managed as cache devices attached to the RAID controller.

- Often those SSDs have their own power-loss protected write caches on each device.

Now imagine the future. DRAM-less NAND SSDs and hard drives are managed at a higher level. This can remove a few GB of NAND per SSD. Instead of the power-loss protection on the NAND SSDs and on the RAID controller, along with the flash to write that power-loss flush event data, we can use a CXL-based SCM such as Optane Persistent Memory and write directly to that device as the write cache for the system. A co-processor such as a DPU can then manage flushing that write cache from SCM to local or network storage. Instead of being a few GB on the RAID card, the cache could potentially be 1TB of host memory (although this is basically not needed since we have CXL) or CXL DDR5, accessed directly by the host processor instead of going through the RAID controller.
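The write path described above can be sketched as a simple write-back cache: the host's writes are acknowledged once they land in the persistent cache, and a separate agent drains them to backing storage later. This is a toy model under our own assumptions. The class name, the block-granular dictionary, and the idea that `flush` is called by something like a DPU are illustrative, not a description of any shipping product.

```python
from collections import OrderedDict
from typing import Dict


class PersistentWriteCache:
    """Toy write-back cache standing in for CXL-attached SCM (hypothetical)."""

    def __init__(self, capacity_blocks: int) -> None:
        self.capacity_blocks = capacity_blocks
        self.dirty = OrderedDict()  # block address -> data, oldest first

    def write(self, lba: int, data: bytes) -> bool:
        """Host write. Returns True once the data is held in the cache."""
        if lba not in self.dirty and len(self.dirty) >= self.capacity_blocks:
            return False  # cache full: the caller must wait for a flush
        self.dirty[lba] = data
        self.dirty.move_to_end(lba)  # a rewritten block becomes the newest
        return True

    def flush(self, backing: Dict[int, bytes], max_blocks: int) -> int:
        """Drain up to max_blocks of the oldest dirty blocks to backing storage."""
        flushed = 0
        while self.dirty and flushed < max_blocks:
            lba, data = self.dirty.popitem(last=False)
            backing[lba] = data
            flushed += 1
        return flushed


cache = PersistentWriteCache(capacity_blocks=2)
storage: Dict[int, bytes] = {}  # stands in for local or network storage
cache.write(1, b"a")
cache.write(2, b"b")
print("accepted while full:", cache.write(3, b"c"))
print("blocks flushed:", cache.flush(storage, max_blocks=1))
print("accepted after flush:", cache.write(3, b"c"))
```

The point of the sketch is the separation of roles: the host only ever talks to the cache, and whatever performs the flush can run on a different schedule and on different hardware.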

This type of model makes it practical to start thinking about how to replace traditional storage arrays with higher-performance local resources in servers, using scale-out storage as a back-end. This will be absolutely transformational to the industry and is just one example that we have heard several companies are working on. Normally the examples are of memory expanders and GPUs/AI accelerators just for larger and more diverse pools of memory, so I wanted to give something that is a bit different to illustrate why CXL can be transformational. Other more straightforward examples usually use shelves of memory, compute, and accelerators that are composable based on what is needed for a given instance type.

Final Words

The key to CXL is simply that the rigid constraints of server architecture will start to go away as CXL is adopted. This is extremely important as servers continue to scale up since this requires more efficient resource utilization. In a few years, we will look back on today’s servers and view them as a legacy operating model much as we did dedicated servers for many applications after virtualization (and later containers.) For years, this concept has been discussed, but we are effectively less than a year from it being implemented in products beyond foundational elements such as Astera Labs Aries CXL Retimers.

CXL Taco And Lime Example Many Tacos Many Sodas Citrus Variety

Hopefully, this guide helped you learn a bit about what CXL is and what it is generally trying to accomplish. There is much more to CXL in terms of technical depth, but we wanted to ensure our readers have a base level of understanding. Tacos and Limes were not perfect, but we have been looking at the same diagrams for years and there are folks who still are not aware of CXL’s impact. We are talking about this in terms of early CXL in the PCIe Gen5 era, but there is a roadmap to PCIe Gen6 and beyond. We have already discussed a bit about how this model scales to a fabric such as Gen-Z to enable larger-scale deployments using a somewhat analogous solution.

We will, of course, have more on CXL in the future, but we wanted to provide this basic level-set around what CXL is.

