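This continues the previous post. That one dealt with images free of impurities; this one deals with images that contain them: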

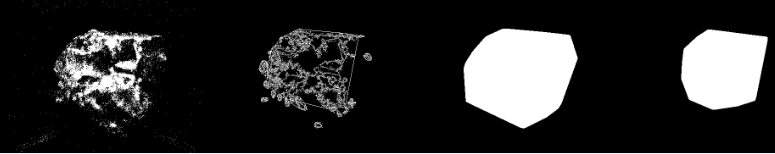

The task is to find contours in a large batch of images like this:

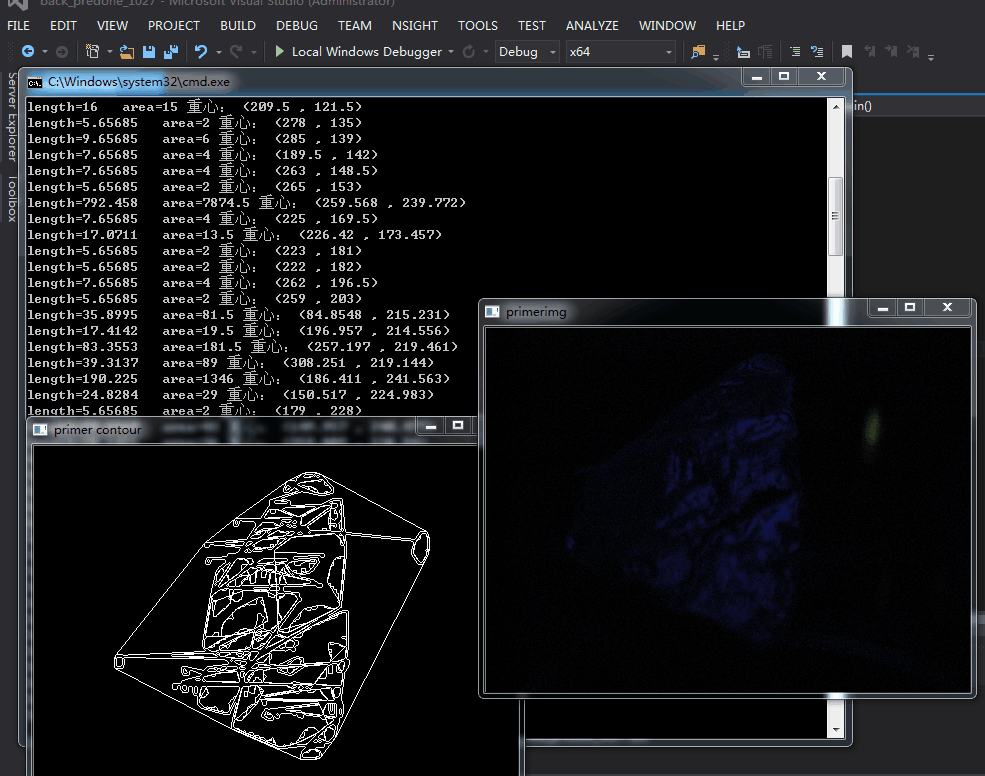

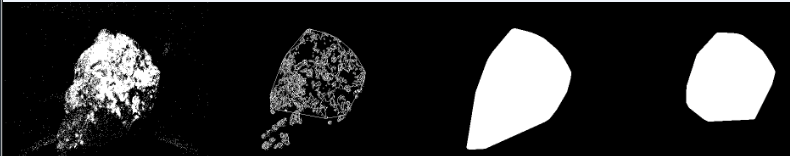

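My approach: the OpenCV calls from the previous post can return several convex hulls, so I compute the center (centroid) of each small hull. If a standalone hull sits where light-leak spots or chute artifacts are known to appear, I classify it as light-leak or chute noise, set that contour to NULL so it is not drawn, and move on to the next one.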
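To make the rule concrete, here is a minimal sketch in plain C++ without OpenCV. It assumes each blob is given as a closed polygon and computes the centroid with the shoelace formula, which is the same quantity as m10/m00 and m01/m00. The names `polygonStats`, `NoiseRule` and `isNoise` are made up for this illustration; the window values in the usage note are simply the "center light" numbers from the listing further down.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Pt { double x, y; };

struct BlobStats { double cx, cy, area, perimeter; };

// Area, perimeter and centroid (m10/m00, m01/m00) of a closed polygon,
// computed with the shoelace formula.
BlobStats polygonStats(const std::vector<Pt>& poly) {
    double twiceArea = 0, sx = 0, sy = 0, perimeter = 0;
    const std::size_t n = poly.size();
    for (std::size_t i = 0; i < n; ++i) {
        const Pt& p = poly[i];
        const Pt& q = poly[(i + 1) % n];
        const double cross = p.x * q.y - q.x * p.y;
        twiceArea += cross;
        sx += (p.x + q.x) * cross;
        sy += (p.y + q.y) * cross;
        perimeter += std::hypot(q.x - p.x, q.y - p.y);
    }
    BlobStats s{0.0, 0.0, std::fabs(twiceArea) / 2.0, perimeter};
    if (twiceArea != 0) {  // degenerate blobs keep the centroid (0, 0)
        s.cx = sx / (3.0 * twiceArea);
        s.cy = sy / (3.0 * twiceArea);
    }
    return s;
}

// One rejection window (hypothetical type): a centroid box plus upper
// bounds on area and perimeter, so large real objects are never dropped.
struct NoiseRule { double xMin, xMax, yMin, yMax, maxArea, maxPerimeter; };

bool isNoise(const BlobStats& s, const std::vector<NoiseRule>& rules) {
    for (const NoiseRule& r : rules) {
        if (s.cx > r.xMin && s.cx < r.xMax && s.cy > r.yMin && s.cy < r.yMax &&
            s.area < r.maxArea && s.perimeter < r.maxPerimeter)
            return true;
    }
    return false;
}
```

With the rule `{236, 243, 171, 197, 141, 71}`, a 6x6 spot centered at (239, 183) is rejected, while an 80x70 object whose centroid falls in the same window is kept because its area and perimeter are too large.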

Finding the centroid of a hull works as follows:

#include<opencv2/opencv.hpp>

#include<opencv2/imgproc/imgproc.hpp>

#include<opencv2/highgui/highgui.hpp>

#include <afxwin.h>

#include<vector>

using namespace std;

using namespace cv;

void mouseHandler(int event, int x, int y, int flags, void *p)

{

IplImage *img0, *img1;

img0 = (IplImage*)p;

img1 = cvCloneImage(img0);

CvFont font;

uchar *ptr;

char label[20];

cvInitFont(&font, CV_FONT_HERSHEY_PLAIN, 0.8, 0.8, 0, 1, 8);

if (event == CV_EVENT_LBUTTONDOWN)

{

ptr = cvPtr2D(img1, y, x, NULL);

sprintf(label, "(%d, %d, %d)", ptr[0], ptr[1], ptr[2]);

cvRectangle(img1, cvPoint(x, y - 12), cvPoint(x + 100, y + 4), CV_RGB(255, 0, 0), CV_FILLED, 8, 0);

cvPutText(img1, label, cvPoint(x, y), &font, CV_RGB(255, 255, 255));

cvShowImage("src", img1);

}

}

Mat mypredone(IplImage* srcipl, vector<Mat> bgrimgs)

{

// find contours

Mat img(srcipl, 0);

split(img, bgrimgs);

Mat bimg(img.size(), CV_8UC1, cv::Scalar(0));

bgrimgs[0].copyTo(bimg);

threshold(bimg, bimg, 12, 255, CV_THRESH_BINARY/*|CV_THRESH_OTSU*/);

Mat element(4, 4, CV_8U, cv::Scalar(1));

morphologyEx(bimg, bimg, cv::MORPH_OPEN, element);

//for (int i = 0; i < 20; i++)

//{

// uchar* data = bimg.ptr<uchar>(i);

// for (int j = 0; j < bimg.size().width; j++)

// data[j] = 0;

//}

//for (int i = bimg.size().height - 19; i < bimg.size().height; i++)

//{

// uchar* data = bimg.ptr<uchar>(i);

// for (int j = 0; j < bimg.size().width; j++)

// data[j] = 0;

//}

return bimg;

}

Mat my_contour(IplImage* dst, IplImage bimgipl)

{

cvZero(dst);

CvMemStorage *storage = cvCreateMemStorage();

CvSeq *contour = NULL, *hull = NULL;

vector<CvPoint> allpoints;

CvContourScanner scanner = cvStartFindContours(&bimgipl, storage);

// original contour finding

while ((contour = cvFindNextContour(scanner)) != NULL)

{

cvDrawContours(dst, contour, cv::Scalar(255), cv::Scalar(255), 0);

hull = cvConvexHull2(contour, 0, CV_CLOCKWISE, 0);

CvPoint pt0 = **(CvPoint**)cvGetSeqElem(hull, hull->total - 1);

allpoints.push_back(pt0);

for (int i = 0; i < hull->total; ++i)

{

CvPoint pt1 = **(CvPoint**)cvGetSeqElem(hull, i);

allpoints.push_back(pt1);

cvLine(dst, pt0, pt1, cv::Scalar(255));

pt0 = pt1;

}

}

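// The pass above is the first search and may yield several hulls. Below, the vertices of every hull are stored in a container so that I can connect them with lines and search once more (the final pass).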

Mat dstmat(dst, 0);

std::vector<std::vector<cv::Point>> myown_contours;

cv::findContours(dstmat, myown_contours, CV_RETR_EXTERNAL, CV_CHAIN_APPROX_NONE);

//cout << myown_contours.size() << endl;

if (myown_contours.size() > 1)

{

for (int i = 0; i < allpoints.size() - 1; i++)

{

CvPoint firstdot = allpoints[i];

CvPoint secdot = allpoints[i + 1];

cvLine(dst, firstdot, secdot, cv::Scalar(255), 2);

}

CvContourScanner scanner2 = cvStartFindContours(dst, storage);

while ((contour = cvFindNextContour(scanner2)) != NULL)

{

cvDrawContours(dst, contour, cv::Scalar(255), cv::Scalar(255), 0);

hull = cvConvexHull2(contour, 0, CV_CLOCKWISE, 0);

CvPoint pt0 = **(CvPoint**)cvGetSeqElem(hull, hull->total - 1);

for (int i = 0; i < hull->total; ++i)

{

CvPoint pt1 = **(CvPoint**)cvGetSeqElem(hull, i);

cvLine(dst, pt0, pt1, cv::Scalar(255));

pt0 = pt1;

}

}

}

return dst; // return the outline only, convenient for testing my idea of removing light-leak and chute noise

//Mat bimgdst(dst, 0);

//std::vector<std::vector<cv::Point>> contours;

//cv::findContours(bimgdst, contours, CV_RETR_EXTERNAL, CV_CHAIN_APPROX_NONE);

//Mat contoursimg(bimgdst.size(), CV_8UC1, cv::Scalar(0));

//drawContours(contoursimg, contours, -1, Scalar(255), CV_FILLED);

//return contoursimg;

}

Mat my_contourforback(IplImage* dst, IplImage bimgipl)

{

cvZero(dst);

CvMemStorage *storage = cvCreateMemStorage();

CvSeq *contour = NULL, *hull = NULL;

vector<CvPoint> allpoints;

CvContourScanner scanner = cvStartFindContours(&bimgipl, storage);

while ((contour = cvFindNextContour(scanner)) != NULL)

{

// idea: remove the central light-leak spots

CvMoments moments; // stack object; the original malloc'ed one per contour and never freed it

cvMoments(contour, &moments, 1);

double moment10 = cvGetSpatialMoment(&moments, 1, 0);

double moment01 = cvGetSpatialMoment(&moments, 0, 1);

double area = cvGetSpatialMoment(&moments, 0, 0);

double posX = moment10 / area;

double posY = moment01 / area;

double mylenth = cvArcLength(contour);

double myarea = cvContourArea(contour);

//cout << "length=" << mylenth << " area=" << myarea << " 重心: " << "(" << posX << " , " << posY << ")" << " " << endl;

//center light

if (posX > double(236.0) && posX<double(243.0) && posY>double(171.0) && posY<double(197.0) && myarea<double(141) && mylenth < double(71))

{

cvSubstituteContour(scanner, NULL);

continue;

}

//right light

if (posX > double(384.0) && posX<double(395.0) && posY>double(90.0) && posY<double(113.0) && myarea<double(316) && mylenth < double(90))

{

cvSubstituteContour(scanner, NULL);

continue;

}

//below

if (posX >160.0 && posX<331.0 && posY>295.0 && posY < 362.0 && myarea < 170 && mylenth < 100)

{

cvSubstituteContour(scanner, NULL);

continue;

}

if (posX >212.0 && posX<251.0 && posY>248.0 && posY < 269.0 && myarea < 21 && mylenth < 20)

{

cvSubstituteContour(scanner, NULL);

continue;

}

if (posX >256.0 && posX<271.0 && posY>296.0 && posY < 299.5 && myarea < 25 && mylenth < 20)

{

cvSubstituteContour(scanner, NULL);

continue;

}

if (posX >210.0 && posX<215.0 && posY>350.0 && posY < 358.0 && myarea < 250 && mylenth < 100)

{

cvSubstituteContour(scanner, NULL);

continue;

}

// special

if (myarea == 0)

{

cvSubstituteContour(scanner, NULL);

continue;

}

///

cvDrawContours(dst, contour, cv::Scalar(255), cv::Scalar(255), 0);

hull = cvConvexHull2(contour, 0, CV_CLOCKWISE, 0);

CvPoint pt0 = **(CvPoint**)cvGetSeqElem(hull, hull->total - 1);

allpoints.push_back(pt0);

for (int i = 0; i < hull->total; ++i)

{

CvPoint pt1 = **(CvPoint**)cvGetSeqElem(hull, i);

allpoints.push_back(pt1);

cvLine(dst, pt0, pt1, cv::Scalar(255));

pt0 = pt1;

}

}

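// The pass above is the first search and may yield several hulls. Below, the vertices of every hull are stored in a container so that I can connect them with lines and search once more (the final pass).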

Mat dstmat(dst, 0);

std::vector<std::vector<cv::Point>> myown_contours;

cv::findContours(dstmat, myown_contours, CV_RETR_EXTERNAL, CV_CHAIN_APPROX_NONE);

//cout << myown_contours.size() << endl;

if (myown_contours.size() > 1)

{

for (int i = 0; i < allpoints.size() - 1; i++)

{

CvPoint firstdot = allpoints[i];

CvPoint secdot = allpoints[i + 1];

cvLine(dst, firstdot, secdot, cv::Scalar(255), 2);

}

CvContourScanner scanner2 = cvStartFindContours(dst, storage);

while ((contour = cvFindNextContour(scanner2)) != NULL)

{

cvDrawContours(dst, contour, cv::Scalar(255), cv::Scalar(255), 0);

hull = cvConvexHull2(contour, 0, CV_CLOCKWISE, 0);

CvPoint pt0 = **(CvPoint**)cvGetSeqElem(hull, hull->total - 1);

for (int i = 0; i < hull->total; ++i)

{

CvPoint pt1 = **(CvPoint**)cvGetSeqElem(hull, i);

cvLine(dst, pt0, pt1, cv::Scalar(255));

pt0 = pt1;

}

}

}

return dst; // return the outline only, convenient for testing my idea of removing light-leak and chute noise

Mat bimgdst(dst, 0);

std::vector<std::vector<cv::Point>> contours;

cv::findContours(bimgdst, contours, CV_RETR_EXTERNAL, CV_CHAIN_APPROX_NONE);

Mat contoursimg(bimgdst.size(), CV_8UC1, cv::Scalar(0));

drawContours(contoursimg, contours, -1, Scalar(255), CV_FILLED);

///imshow("myresult", contoursimg);

return contoursimg;

}

void ImageMerge(IplImage* pImageA, IplImage* pImageB, IplImage*& pImageRes)

{

assert(pImageA != NULL && pImageB != NULL);

assert(pImageA->depth == pImageB->depth && pImageA->nChannels == pImageB->nChannels);

if (pImageRes != NULL)

{

cvReleaseImage(&pImageRes);

pImageRes = NULL;

}

CvSize size;

size.width = pImageA->width + pImageB->width + 10;

size.height = (pImageA->height > pImageB->height) ? pImageA->height : pImageB->height;

pImageRes = cvCreateImage(size, pImageA->depth, pImageA->nChannels);

CvRect rect = cvRect(0, 0, pImageA->width, pImageA->height);

cvSetImageROI(pImageRes, rect);

cvRepeat(pImageA, pImageRes);

cvResetImageROI(pImageRes);

rect = cvRect(pImageA->width + 0, 0, pImageB->width, pImageB->height);

cvSetImageROI(pImageRes, rect);

cvRepeat(pImageB, pImageRes);

cvResetImageROI(pImageRes);

}

char filename[100];

char filename1[100];

char filename2[100];

char primercontour[100];

char newcontour[100];

int main()

{

TickMeter tm;

tm.start();

/* contour finding with the central light-leak spots removed, batch version (many images)

for (int i = 55124; i <= 56460; i++)

{

sprintf(filename, "待处理背面图\\%d.bmp", i);

//sprintf(primercontour, "所有图原来算法轮廓\\%d.bmp", i);

sprintf(newcontour, "所有图新算法轮廓\\%d.bmp", i);

IplImage* src_ipl = cvLoadImage(filename);

//cvShowImage("primerimg", src_ipl);

vector<Mat> bgrimgs;

Mat bimg = mypredone(src_ipl, bgrimgs);

// original contour finding

//IplImage mybimgipl = bimg;

//IplImage *mydst0 = cvCreateImage(cvGetSize(src_ipl), 8, 1);

//Mat myresultprimer(mydst0, 0);

//myresultprimer = my_contour(mydst0, mybimgipl);

//imwrite(primercontour, myresultprimer);

//imshow("primer contour", myresultprimer);

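// Current contour: compute the center (centroid) of each small hull; a standalone hull located at a light-leak or chute position is classified as light-leak or chute noise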

IplImage *mydst1 = cvCreateImage(cvGetSize(src_ipl), 8, 1);

Mat myresultprimer1(mydst1, 0);

IplImage mybimgipl1 = bimg;

myresultprimer1 = my_contourforback(mydst1, mybimgipl1);

imwrite(newcontour, myresultprimer1);

}

*/

/*/for contrast

CvSize mysize = cvSize(484*5, 364);

for (int i = 1; i <= 1337; i++)

{

int newii = i + 55123;

sprintf(filename, "罗彬背面轮廓\\%d.bmp", i);

sprintf(newcontour, "所有图新算法轮廓\\%d.bmp", newii);

sprintf(primercontour, "背面轮廓对比最终\\%d.bmp", i);

IplImage* src_luobin = cvLoadImage(filename);

IplImage* src_me = cvLoadImage(newcontour);

IplImage* contrast = cvCreateImage(mysize, src_me->depth, src_me->nChannels);

cvZero(contrast);

ImageMerge(src_luobin, src_me, contrast);

cvSaveImage(primercontour, contrast);

}

*/

/*

CvSize mysize = cvSize(484*2, 364);

for (int i = 55124; i <= 56460; i++)

{

//int newii = i + 55123;

sprintf(filename, "待处理背面图\\%d.bmp", i);

sprintf(newcontour, "所有图原来算法轮廓\\%d.bmp", i);

sprintf(primercontour, "原图原轮廓\\%d.bmp", i);

IplImage* src_left = cvLoadImage(filename);

IplImage* src_right = cvLoadImage(newcontour);

IplImage* contrast = cvCreateImage(mysize, src_right->depth, src_right->nChannels);

cvZero(contrast);

ImageMerge(src_left, src_right, contrast);

cvSaveImage(primercontour, contrast);

}

*/

/* batch save

for (int i = 55124; i <= 56460; i++)

{

sprintf(filename, "所有待处理背面\\%d.bmp", i);

sprintf(filename1, "所有待处理背面\\%da.bmp", i);

sprintf(filename2, "所有待处理背面\\%db.bmp", i);

sprintf(primercontour, "所有图原来算法轮廓\\%d.bmp", i);

sprintf(newcontour, "所有图新算法轮廓\\%d.bmp", i);

IplImage* primer_contour = cvLoadImage(primercontour);

IplImage* new_contour = cvLoadImage(newcontour);

cvSaveImage(filename1, primer_contour);

cvSaveImage(filename2, new_contour);

}

*/

// contour finding with the central light-leak spots removed, single image

IplImage* src_ipl = cvLoadImage("55501.bmp");

cvShowImage("primerimg", src_ipl);

vector<Mat> bgrimgs;

Mat bimg = mypredone(src_ipl, bgrimgs);

//

IplImage mybimgipl = bimg;

IplImage *mydst0 = cvCreateImage(cvGetSize(src_ipl), 8, 1);

cvZero(mydst0);

Mat myresultprimer(mydst0, 0);

myresultprimer = my_contour(mydst0, mybimgipl);

imshow("primer contour", myresultprimer);

//

IplImage *mydst = cvCreateImage(cvGetSize(src_ipl), 8, 1);

cvZero(mydst);

IplImage mybimgipl1 = bimg;

CvMemStorage *storage = cvCreateMemStorage();

CvSeq *contour = NULL, *hull = NULL;

vector<CvPoint> allpoints;

CvContourScanner scanner = cvStartFindContours(&mybimgipl1, storage);

while ((contour = cvFindNextContour(scanner)) != NULL)

{

// idea: remove the central light-leak spots

CvMoments moments; // stack object; the original malloc'ed one per contour and never freed it

cvMoments(contour, &moments, 1);

double moment10 = cvGetSpatialMoment(&moments, 1, 0);

double moment01 = cvGetSpatialMoment(&moments, 0, 1);

double area = cvGetSpatialMoment(&moments, 0, 0);

double posX = moment10 / area;

double posY = moment01 / area;

double mylenth = cvArcLength(contour);

double myarea = cvContourArea(contour);

cout << "length=" << mylenth << " area=" << myarea << " centroid: " << "(" << posX << " , " << posY << ")" << " " << endl;

//center light

if (posX > double(236.0) && posX<double(243.0) && posY>double(171.0) && posY<double(197.0) && myarea<double(141) && mylenth < double(71))

{

cvSubstituteContour(scanner, NULL);

continue;

}

//right light

if (posX > double(384.0) && posX<double(395.0) && posY>double(90.0) && posY<double(113.0) && myarea<double(316) && mylenth < double(90))

{

cvSubstituteContour(scanner, NULL);

continue;

}

//below

if (posX >160.0 && posX<331.0 && posY>295.0 && posY < 362.0 && myarea < 170 && mylenth < 100)

{

cvSubstituteContour(scanner, NULL);

continue;

}

if (posX >212.0 && posX<251.0 && posY>248.0 && posY < 269.0 && myarea < 21 && mylenth < 20)

{

cvSubstituteContour(scanner, NULL);

continue;

}

if (posX >256.0 && posX<271.0 && posY>296.0 && posY < 299.5 && myarea < 25 && mylenth < 20)

{

cvSubstituteContour(scanner, NULL);

continue;

}

if (posX >210.0 && posX<215.0 && posY>350.0 && posY < 358.0 && myarea < 250 && mylenth < 100)

{

cvSubstituteContour(scanner, NULL);

continue;

}

// special

if (myarea ==0)

{

cvSubstituteContour(scanner, NULL);

continue;

}

///

cvDrawContours(mydst, contour, cv::Scalar(255), cv::Scalar(255), 0);

hull = cvConvexHull2(contour, 0, CV_CLOCKWISE, 0);

CvPoint pt0 = **(CvPoint**)cvGetSeqElem(hull, hull->total - 1);

allpoints.push_back(pt0);

for (int i = 0; i < hull->total; ++i)

{

CvPoint pt1 = **(CvPoint**)cvGetSeqElem(hull, i);

allpoints.push_back(pt1);

cvLine(mydst, pt0, pt1, cv::Scalar(255));

pt0 = pt1;

}

}

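// The pass above is the first search and may yield several hulls. Below, the vertices of every hull are stored in a container so that I can connect them with lines and search once more (the final pass).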

Mat dstmat(mydst, 0);

std::vector<std::vector<cv::Point>> myown_contours;

cv::findContours(dstmat, myown_contours, CV_RETR_EXTERNAL, CV_CHAIN_APPROX_NONE);

//cout << myown_contours.size() << endl;

if (myown_contours.size() > 1)

{

for (int i = 0; i < allpoints.size() - 1; i++)

{

CvPoint firstdot = allpoints[i];

CvPoint secdot = allpoints[i + 1];

cvLine(mydst, firstdot, secdot, cv::Scalar(255), 2);

}

CvContourScanner scanner2 = cvStartFindContours(mydst, storage);

while ((contour = cvFindNextContour(scanner2)) != NULL)

{

cvDrawContours(mydst, contour, cv::Scalar(255), cv::Scalar(255), 0);

hull = cvConvexHull2(contour, 0, CV_CLOCKWISE, 0);

CvPoint pt0 = **(CvPoint**)cvGetSeqElem(hull, hull->total - 1);

for (int i = 0; i < hull->total; ++i)

{

CvPoint pt1 = **(CvPoint**)cvGetSeqElem(hull, i);

cvLine(mydst, pt0, pt1, cv::Scalar(255));

pt0 = pt1;

}

}

}

cvShowImage("mycontour", mydst);

Mat bimgdst(mydst, 0);

std::vector<std::vector<cv::Point>> contours;

cv::findContours(bimgdst, contours, CV_RETR_EXTERNAL, CV_CHAIN_APPROX_NONE);

Mat contoursimg(bimgdst.size(), CV_8UC1, cv::Scalar(0));

drawContours(contoursimg, contours, -1, Scalar(255), CV_FILLED);

imshow("myresult", contoursimg);

waitKey(0);

// Removing the central light leak by subtracting its color does not work!

//assert(src);

//cvNamedWindow("src", 1);

//cvSetMouseCallback("src", mouseHandler, (void*)src);

//mouseHandler(0, 0, 0, 0, src);

//cvShowImage("src", src);

//cvWaitKey(0);

tm.stop();

cout << "count=" << tm.getCounter() << ",process time=" << tm.getTimeMilli() << endl;

}
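The second pass in the listing above (connect the stored hull vertices with lines, then search for a hull once more) aims at one hull that encloses all the partial hulls. As a rough sketch of that intent, and not what the listing actually calls, the same result can be had by taking a single convex hull over the union of all vertices. The code below uses Andrew's monotone chain in plain C++; `P`, `convexHull` and `mergeHulls` are illustrative names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

struct P { long long x, y; };

// Twice the signed area of triangle (o, a, b); > 0 means a left turn.
static long long cross(const P& o, const P& a, const P& b) {
    return (a.x - o.x) * (b.y - o.y) - (a.y - o.y) * (b.x - o.x);
}

// Andrew's monotone chain. Returns the hull vertices starting from the
// lexicographically smallest point, without repeating the first one.
std::vector<P> convexHull(std::vector<P> pts) {
    std::sort(pts.begin(), pts.end(), [](const P& a, const P& b) {
        return a.x < b.x || (a.x == b.x && a.y < b.y);
    });
    pts.erase(std::unique(pts.begin(), pts.end(), [](const P& a, const P& b) {
        return a.x == b.x && a.y == b.y;
    }), pts.end());
    if (pts.size() < 3) return pts;

    std::vector<P> h(2 * pts.size());
    std::size_t k = 0;
    for (std::size_t i = 0; i < pts.size(); ++i) {            // lower chain
        while (k >= 2 && cross(h[k - 2], h[k - 1], pts[i]) <= 0) --k;
        h[k++] = pts[i];
    }
    for (std::size_t i = pts.size() - 1, t = k + 1; i > 0; --i) {  // upper chain
        while (k >= t && cross(h[k - 2], h[k - 1], pts[i - 1]) <= 0) --k;
        h[k++] = pts[i - 1];
    }
    h.resize(k - 1);  // the last point equals the first one
    return h;
}

// Merge several partial hulls into one hull over all of their vertices.
std::vector<P> mergeHulls(const std::vector<std::vector<P>>& hulls) {
    std::vector<P> all;
    for (const auto& hull : hulls) all.insert(all.end(), hull.begin(), hull.end());
    return convexHull(all);
}
```

For two separate squares, `mergeHulls` drops the two inner corners and keeps the six outer vertices. Unlike the drawing-based second pass, this works on coordinates only, so it does not depend on line thickness or on the drawn lines actually connecting the blobs.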

Then run the batch version below:

#include<opencv2/opencv.hpp>

#include<opencv2/imgproc/imgproc.hpp>

#include<opencv2/highgui/highgui.hpp>

#include <afxwin.h>

#include<vector>

using namespace std;

using namespace cv;

void mouseHandler(int event, int x, int y, int flags, void *p)

{

IplImage *img0, *img1;

img0 = (IplImage*)p;

img1 = cvCloneImage(img0);

CvFont font;

uchar *ptr;

char label[20];

cvInitFont(&font, CV_FONT_HERSHEY_PLAIN, 0.8, 0.8, 0, 1, 8);

if (event == CV_EVENT_LBUTTONDOWN)

{

ptr = cvPtr2D(img1, y, x, NULL);

sprintf(label, "(%d, %d, %d)", ptr[0], ptr[1], ptr[2]);

cvRectangle(img1, cvPoint(x, y - 12), cvPoint(x + 100, y + 4), CV_RGB(255, 0, 0), CV_FILLED, 8, 0);

cvPutText(img1, label, cvPoint(x, y), &font, CV_RGB(255, 255, 255));

cvShowImage("src", img1);

}

}

Mat mypredone(IplImage* srcipl, vector<Mat> bgrimgs)

{

// find contours

Mat img(srcipl, 0);

split(img, bgrimgs);

Mat bimg(img.size(), CV_8UC1, cv::Scalar(0));

bgrimgs[0].copyTo(bimg);

threshold(bimg, bimg, 12, 255, CV_THRESH_BINARY/*|CV_THRESH_OTSU*/);

Mat element(4, 4, CV_8U, cv::Scalar(1));

morphologyEx(bimg, bimg, cv::MORPH_OPEN, element);

//for (int i = 0; i < 20; i++)

//{

// uchar* data = bimg.ptr<uchar>(i);

// for (int j = 0; j < bimg.size().width; j++)

// data[j] = 0;

//}

//for (int i = bimg.size().height - 19; i < bimg.size().height; i++)

//{

// uchar* data = bimg.ptr<uchar>(i);

// for (int j = 0; j < bimg.size().width; j++)

// data[j] = 0;

//}

return bimg;

}

Mat my_contour(IplImage* dst, IplImage bimgipl)

{

cvZero(dst);

CvMemStorage *storage = cvCreateMemStorage();

CvSeq *contour = NULL, *hull = NULL;

vector<CvPoint> allpoints;

CvContourScanner scanner = cvStartFindContours(&bimgipl, storage);

// original contour finding

while ((contour = cvFindNextContour(scanner)) != NULL)

{

cvDrawContours(dst, contour, cv::Scalar(255), cv::Scalar(255), 0);

hull = cvConvexHull2(contour, 0, CV_CLOCKWISE, 0);

CvPoint pt0 = **(CvPoint**)cvGetSeqElem(hull, hull->total - 1);

allpoints.push_back(pt0);

for (int i = 0; i < hull->total; ++i)

{

CvPoint pt1 = **(CvPoint**)cvGetSeqElem(hull, i);

allpoints.push_back(pt1);

cvLine(dst, pt0, pt1, cv::Scalar(255));

pt0 = pt1;

}

}

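// The pass above is the first search and may yield several hulls. Below, the vertices of every hull are stored in a container so that I can connect them with lines and search once more (the final pass).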

Mat dstmat(dst, 0);

std::vector<std::vector<cv::Point>> myown_contours;

cv::findContours(dstmat, myown_contours, CV_RETR_EXTERNAL, CV_CHAIN_APPROX_NONE);

//cout << myown_contours.size() << endl;

if (myown_contours.size() > 1)

{

for (int i = 0; i < allpoints.size() - 1; i++)

{

CvPoint firstdot = allpoints[i];

CvPoint secdot = allpoints[i + 1];

cvLine(dst, firstdot, secdot, cv::Scalar(255), 2);

}

CvContourScanner scanner2 = cvStartFindContours(dst, storage);

while ((contour = cvFindNextContour(scanner2)) != NULL)

{

cvDrawContours(dst, contour, cv::Scalar(255), cv::Scalar(255), 0);

hull = cvConvexHull2(contour, 0, CV_CLOCKWISE, 0);

CvPoint pt0 = **(CvPoint**)cvGetSeqElem(hull, hull->total - 1);

for (int i = 0; i < hull->total; ++i)

{

CvPoint pt1 = **(CvPoint**)cvGetSeqElem(hull, i);

cvLine(dst, pt0, pt1, cv::Scalar(255));

pt0 = pt1;

}

}

}

return dst; // return the outline only, convenient for testing my idea of removing light-leak and chute noise

//Mat bimgdst(dst, 0);

//std::vector<std::vector<cv::Point>> contours;

//cv::findContours(bimgdst, contours, CV_RETR_EXTERNAL, CV_CHAIN_APPROX_NONE);

//Mat contoursimg(bimgdst.size(), CV_8UC1, cv::Scalar(0));

//drawContours(contoursimg, contours, -1, Scalar(255), CV_FILLED);

//return contoursimg;

}

Mat my_contourforback(IplImage* dst, IplImage bimgipl)

{

cvZero(dst);

CvMemStorage *storage = cvCreateMemStorage();

CvSeq *contour = NULL, *hull = NULL;

vector<CvPoint> allpoints;

CvContourScanner scanner = cvStartFindContours(&bimgipl, storage);

while ((contour = cvFindNextContour(scanner)) != NULL)

{

// idea: remove the central light-leak spots

CvMoments moments; // stack object; the original malloc'ed one per contour and never freed it

cvMoments(contour, &moments, 1);

double moment10 = cvGetSpatialMoment(&moments, 1, 0);

double moment01 = cvGetSpatialMoment(&moments, 0, 1);

double area = cvGetSpatialMoment(&moments, 0, 0);

double posX = moment10 / area;

double posY = moment01 / area;

double mylenth = cvArcLength(contour);

double myarea = cvContourArea(contour);

//cout << "length=" << mylenth << " area=" << myarea << " 重心: " << "(" << posX << " , " << posY << ")" << " " << endl;

//center light

if (posX > double(236.0) && posX<double(243.0) && posY>double(171.0) && posY<double(197.0) && myarea<double(141) && mylenth < double(71))

{

cvSubstituteContour(scanner, NULL);

continue;

}

//right light

if (posX > double(384.0) && posX<double(395.0) && posY>double(90.0) && posY<double(113.0) && myarea<double(316) && mylenth < double(90))

{

cvSubstituteContour(scanner, NULL);

continue;

}

//below

if (posX >160.0 && posX<331.0 && posY>295.0 && posY < 362.0 && myarea < 170 && mylenth < 100)

{

cvSubstituteContour(scanner, NULL);

continue;

}

if (posX >212.0 && posX<251.0 && posY>248.0 && posY < 269.0 && myarea < 21 && mylenth < 20)

{

cvSubstituteContour(scanner, NULL);

continue;

}

if (posX >256.0 && posX<271.0 && posY>296.0 && posY < 299.5 && myarea < 25 && mylenth < 20)

{

cvSubstituteContour(scanner, NULL);

continue;

}

if (posX >210.0 && posX<215.0 && posY>350.0 && posY < 358.0 && myarea < 250 && mylenth < 100)

{

cvSubstituteContour(scanner, NULL);

continue;

}

// special

if (myarea == 0)

{

cvSubstituteContour(scanner, NULL);

continue;

}

///

cvDrawContours(dst, contour, cv::Scalar(255), cv::Scalar(255), 0);

hull = cvConvexHull2(contour, 0, CV_CLOCKWISE, 0);

CvPoint pt0 = **(CvPoint**)cvGetSeqElem(hull, hull->total - 1);

allpoints.push_back(pt0);

for (int i = 0; i < hull->total; ++i)

{

CvPoint pt1 = **(CvPoint**)cvGetSeqElem(hull, i);

allpoints.push_back(pt1);

cvLine(dst, pt0, pt1, cv::Scalar(255));

pt0 = pt1;

}

}

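// The pass above is the first search and may yield several hulls. Below, the vertices of every hull are stored in a container so that I can connect them with lines and search once more (the final pass).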

Mat dstmat(dst, 0);

std::vector<std::vector<cv::Point>> myown_contours;

cv::findContours(dstmat, myown_contours, CV_RETR_EXTERNAL, CV_CHAIN_APPROX_NONE);

//cout << myown_contours.size() << endl;

if (myown_contours.size() > 1)

{

for (int i = 0; i < allpoints.size() - 1; i++)

{

CvPoint firstdot = allpoints[i];

CvPoint secdot = allpoints[i + 1];

cvLine(dst, firstdot, secdot, cv::Scalar(255), 2);

}

CvContourScanner scanner2 = cvStartFindContours(dst, storage);

while ((contour = cvFindNextContour(scanner2)) != NULL)

{

cvDrawContours(dst, contour, cv::Scalar(255), cv::Scalar(255), 0);

hull = cvConvexHull2(contour, 0, CV_CLOCKWISE, 0);

CvPoint pt0 = **(CvPoint**)cvGetSeqElem(hull, hull->total - 1);

for (int i = 0; i < hull->total; ++i)

{

CvPoint pt1 = **(CvPoint**)cvGetSeqElem(hull, i);

cvLine(dst, pt0, pt1, cv::Scalar(255));

pt0 = pt1;

}

}

}

//return dst; // (disabled) outline-only return used while testing the light-leak and chute removal idea

Mat bimgdst(dst, 0);

std::vector<std::vector<cv::Point>> contours;

cv::findContours(bimgdst, contours, CV_RETR_EXTERNAL, CV_CHAIN_APPROX_NONE);

Mat contoursimg(bimgdst.size(), CV_8UC1, cv::Scalar(0));

drawContours(contoursimg, contours, -1, Scalar(255), CV_FILLED);

///imshow("myresult", contoursimg);

return contoursimg;

}

void ImageMerge(IplImage* pImageA, IplImage* pImageB, IplImage*& pImageRes)

{

assert(pImageA != NULL && pImageB != NULL);

assert(pImageA->depth == pImageB->depth && pImageA->nChannels == pImageB->nChannels);

if (pImageRes != NULL)

{

cvReleaseImage(&pImageRes);

pImageRes = NULL;

}

CvSize size;

size.width = pImageA->width + pImageB->width + 10;

size.height = (pImageA->height > pImageB->height) ? pImageA->height : pImageB->height;

pImageRes = cvCreateImage(size, pImageA->depth, pImageA->nChannels);

CvRect rect = cvRect(0, 0, pImageA->width, pImageA->height);

cvSetImageROI(pImageRes, rect);

cvRepeat(pImageA, pImageRes);

cvResetImageROI(pImageRes);

rect = cvRect(pImageA->width + 0, 0, pImageB->width, pImageB->height);

cvSetImageROI(pImageRes, rect);

cvRepeat(pImageB, pImageRes);

cvResetImageROI(pImageRes);

}

char filename[100];

char filename1[100];

char filename2[100];

char primercontour[100];

char newcontour[100];

int main()

{

// contour finding with the central light-leak spots removed, batch version (many images)

for (int i = 55124; i <= 56460; i++)

{

sprintf(filename, "待处理背面图\\%d.bmp", i);

//sprintf(primercontour, "所有图原来算法轮廓\\%d.bmp", i);

sprintf(newcontour, "所有图新算法轮廓\\%d.bmp", i);

IplImage* src_ipl = cvLoadImage(filename);

//cvShowImage("primerimg", src_ipl);

vector<Mat> bgrimgs;

Mat bimg = mypredone(src_ipl, bgrimgs);

// original contour finding

//IplImage mybimgipl = bimg;

//IplImage *mydst0 = cvCreateImage(cvGetSize(src_ipl), 8, 1);

//Mat myresultprimer(mydst0, 0);

//myresultprimer = my_contour(mydst0, mybimgipl);

//imwrite(primercontour, myresultprimer);

//imshow("primer contour", myresultprimer);

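// Current contour: compute the center (centroid) of each small hull; a standalone hull located at a light-leak or chute position is classified as light-leak or chute noise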

IplImage *mydst1 = cvCreateImage(cvGetSize(src_ipl), 8, 1);

Mat myresultprimer1(mydst1, 0);

IplImage mybimgipl1 = bimg;

myresultprimer1 = my_contourforback(mydst1, mybimgipl1);

imwrite(newcontour, myresultprimer1);

}

}

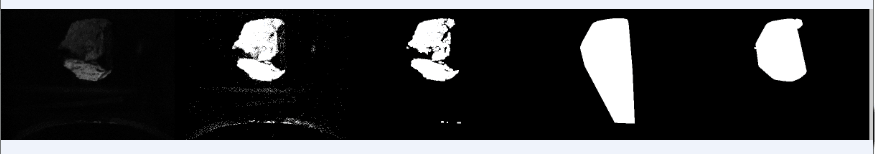

Then compare the previous post's results with this post's results:

// side-by-side comparison

CvSize mysize = cvSize(484*5, 364);

for (int i = 1; i <= 1337; i++)

{

int newii = i + 55123;

sprintf(filename, "罗彬背面轮廓\\%d.bmp", i);

sprintf(newcontour, "所有图新算法轮廓\\%d.bmp", newii);

sprintf(primercontour, "背面轮廓对比最终\\%d.bmp", i);

IplImage* src_luobin = cvLoadImage(filename);

IplImage* src_me = cvLoadImage(newcontour);

IplImage* contrast = cvCreateImage(mysize, src_me->depth, src_me->nChannels);

cvZero(contrast);

ImageMerge(src_luobin, src_me, contrast);

cvSaveImage(primercontour, contrast);

}

That gives the comparison for the impurity on the right!

Separately, here is a function I found via Baidu:

void ImageMerge(IplImage* pImageA, IplImage* pImageB, IplImage*& pImageRes)

{

assert(pImageA != NULL && pImageB != NULL);

assert(pImageA->depth == pImageB->depth && pImageA->nChannels == pImageB->nChannels);

if (pImageRes != NULL)

{

cvReleaseImage(&pImageRes);

pImageRes = NULL;

}

CvSize size;

size.width = pImageA->width + pImageB->width + 10;

size.height = (pImageA->height > pImageB->height) ? pImageA->height : pImageB->height;

pImageRes = cvCreateImage(size, pImageA->depth, pImageA->nChannels);

CvRect rect = cvRect(0, 0, pImageA->width, pImageA->height);

cvSetImageROI(pImageRes, rect);

cvRepeat(pImageA, pImageRes);

cvResetImageROI(pImageRes);

rect = cvRect(pImageA->width + 0, 0, pImageB->width, pImageB->height);

cvSetImageROI(pImageRes, rect);

cvRepeat(pImageB, pImageRes);

cvResetImageROI(pImageRes);

}

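It stitches the left and right images side by side for comparison. What I had originally wanted was to copy one image into an ROI of another image. My own first attempt did not use cvRepeat and I could not get it to work: without that function, all I managed was to extract the ROI of an image and display or save it, which is not the effect I wanted. I also tried copying the matrix header so that it points at the region, but that likewise only extracted the ROI for saving or display, again not what I wanted: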

int main()

{

IplImage* left = cvLoadImage("378.bmp");

IplImage* right = cvLoadImage("55501.bmp");

CvSize mysize = cvSize(484 * 5, 364);

IplImage* combine = cvCreateImage(mysize, left->depth, left->nChannels);

cvZero(combine);

// set the ROI on the result image combine

cvSetImageROI(combine, cvRect(1935, 0, right->width, right->height));

// copy right into that region (that is the intent)

cvCopy(right, combine);

// reset the ROI of combine

cvResetImageROI(combine);

cvShowImage("result", combine);

waitKey(0);

}

Yet the image did not appear to have been copied in?!

Then I tried saving the result instead of displaying it:

The saved file shows that the copy did happen after all?! Really odd.

int main()

{

IplImage* left = cvLoadImage("378.bmp");

IplImage* right = cvLoadImage("55501.bmp");

CvSize mysize = cvSize(484 * 5, 364);

IplImage* combine = cvCreateImage(mysize, left->depth, left->nChannels);

cvZero(combine);

// set the ROI on the result image combine

cvSetImageROI(combine, cvRect(1935, 0, right->width, right->height));

// copy right into that region (that is the intent)

cvCopy(right, combine);

// reset the ROI of combine

cvResetImageROI(combine);

cvSaveImage("right.bmp", combine);

cvSetImageROI(combine, cvRect(0, 0, left->width, left->height));

// copy left into that region (that is the intent)

cvCopy(left, combine);

// reset the ROI of combine

cvResetImageROI(combine);

cvSaveImage("rightandleft.bmp", combine);

waitKey(0);

}

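Here I did not use cvRepeat the way the function from Baidu does, and it still works. So why is the displayed result wrong (the copied image does not show up) while the saved result is correct?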
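Whatever the display issue turns out to be, copying into an ROI comes down to a row-by-row copy into a sub-rectangle of a larger buffer. The sketch below shows that idea in plain C++ on a single-channel image; it is an illustration under my own assumptions, not OpenCV's implementation, and `Gray`, `pasteInto` and `sideBySide` are hypothetical names. Note that it places the gap between the two images, whereas `ImageMerge` above adds 10 spare columns on the right.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

struct Gray {
    int width, height;
    std::vector<unsigned char> px;  // row-major, one byte per pixel
    Gray(int w, int h, unsigned char fill = 0)
        : width(w), height(h), px(static_cast<std::size_t>(w) * h, fill) {}
    unsigned char at(int x, int y) const {
        return px[static_cast<std::size_t>(y) * width + x];
    }
};

// Copy src into canvas with its top-left corner at (x0, y0).
// Returns false (and copies nothing) if src does not fit.
bool pasteInto(Gray& canvas, const Gray& src, int x0, int y0) {
    if (x0 < 0 || y0 < 0 || x0 + src.width > canvas.width ||
        y0 + src.height > canvas.height)
        return false;
    for (int y = 0; y < src.height; ++y) {
        auto from = src.px.begin() + static_cast<std::ptrdiff_t>(y) * src.width;
        auto to = canvas.px.begin() +
                  static_cast<std::ptrdiff_t>(y0 + y) * canvas.width + x0;
        std::copy(from, from + src.width, to);
    }
    return true;
}

// Place a and b next to each other on a black canvas, gap columns apart.
Gray sideBySide(const Gray& a, const Gray& b, int gap) {
    Gray out(a.width + gap + b.width, std::max(a.height, b.height));
    pasteInto(out, a, 0, 0);
    pasteInto(out, b, a.width + gap, 0);
    return out;
}
```

Checking the pasted pixels directly in memory, as the test-style usage `sideBySide(a, b, 1).at(3, 0)` does, separates "was it copied?" from "was it displayed?", which is the same distinction the save-versus-show experiment above uncovered.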

I also tried another approach:

int main()

{

cv::Mat leftmat = imread("378.bmp");

Mat rightmat = imread("55501.bmp");

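Mat combinemat(364, 484*5, CV_8UC3, cv::Scalar(0)); // 3 channels to match rightmat; with CV_8UC1, copyTo would reallocate the ROI header and leave combinemat untouched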

Mat combinematROI = combinemat(Rect(1935, 0, rightmat.cols, rightmat.rows));

// [3] load the mask (must be a grayscale image)

Mat mask = imread("55501.bmp", 0);

// [4] copy rightmat into the ROI through the mask

rightmat.copyTo(combinematROI, mask);

imshow("resultmat", combinemat);

waitKey(0);

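}

I suddenly realized that the size of an IplImage and of a Mat are specified in opposite orders: the Mat constructor takes rows first and then columns, whereas an IplImage size (cvSize) takes width first and then height. The centroid coordinates I printed earlier looked reversed to me for the same reason: they are column (x) first, then row (y)!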
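A tiny sketch of the two conventions, in plain C++ with made-up names (`Grid`, `Size`, `makeFromSize`), assuming row-major storage: matrix-style access is (row, col), point-style access is (x, y), and a width-by-height size has to be swapped when it is turned into rows and columns.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Grid {
    int rows, cols;
    std::vector<int> data;  // row-major storage
    Grid(int r, int c) : rows(r), cols(c), data(static_cast<std::size_t>(r) * c, 0) {}

    // Matrix convention: row (y) first, then column (x).
    int& atRowCol(int row, int col) {
        return data[static_cast<std::size_t>(row) * cols + col];
    }
    // Point convention: x (column) first, then y (row).
    int& atXY(int x, int y) { return atRowCol(y, x); }
};

// Size convention: width (columns) first, then height (rows).
struct Size { int width, height; };

Grid makeFromSize(Size s) { return Grid(s.height, s.width); }
```

For a 484x364 image, `makeFromSize({484, 364})` yields 364 rows and 484 columns, and a centroid printed as (239, 183) lives at row 183, column 239.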

Lately I have read two very sad novels, 《东宫》 and 《犹记惊鸿照影》.

Mat change_wg(Mat bimg){

for (int j = 0; j < bimg.rows; j++){

uchar* data = bimg.ptr<uchar>(j);

for (int i = 0; i < bimg.cols; i++){

if (data[i] == 255){

bool flag;

flag = area_ratio(bimg, j, i, 6, 0.17);

if (flag == false)

data[i] = 0;

}

else

continue;

}

}

return bimg;

}

char filename[100];

char primercontour[100];

char newcontour[100];

int main()

{

TickMeter tm;

tm.start();

for (int i = 55124; i <= 56460; i++)

{

sprintf(filename, "待处理背面图\\%d.bmp", i);

sprintf(newcontour, "背面轮廓1122\\%d.bmp", i);

IplImage* src_ipl = cvLoadImage(filename);

// Engineer Wei's contour-finding idea, improved (single image)

//IplImage* src_ipl = cvLoadImage("55507.bmp");

vector<Mat> bgrimgs;

Mat img(src_ipl, 0);

split(img, bgrimgs);

Mat bimg(img.size(), CV_8UC1, cv::Scalar(0));

bgrimgs[0].copyTo(bimg);

threshold(bimg, bimg, 12, 255, CV_THRESH_BINARY);

//imshow("thresh", bimg);

Mat bimg_done(img.size(), CV_8UC1, cv::Scalar(0));

bimg_done = change_wg(bimg);

//imshow("changewg", bimg_done);

IplImage *mydst0 = cvCreateImage(cvGetSize(src_ipl), 8, 1);

cvZero(mydst0);

Mat myresultprimer(mydst0, 0);

IplImage mybimgipl = bimg_done;

myresultprimer = my_contour(mydst0, mybimgipl);

IplImage *mydst = cvCreateImage(cvGetSize(src_ipl), 8, 1);

cvZero(mydst);

//int belownum = 0;

//bool belowflag = false;

Mat mylastimg;

mylastimg = wg_wd_contour(bimg_done, myresultprimer, bimg, mydst);

imwrite(newcontour, mylastimg);

}

}

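However, around the chute noise at the bottom, my own strict denoising conditions still throw away part of the contour found by the template method in some images:

That is a pity...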
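For reference, the density test behind `change_wg` (a window of half-size 6 and a ratio of 0.17 in the call above) can be sketched in plain C++ as follows. This is an illustration under my own assumptions: `Binary`, `denseEnough` and `removeSparse` are hypothetical names, and unlike `change_wg`, which edits the image in place, this version reads from the input and writes to a copy, so earlier deletions do not influence later pixels.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Binary = std::vector<std::vector<unsigned char>>;  // 0 or 255 per pixel

// True if at least minRatio of the (2*half) x (2*half) window around
// (row, col) is foreground. Pixels outside the image count as background.
bool denseEnough(const Binary& img, int row, int col, int half, double minRatio) {
    const int rows = static_cast<int>(img.size());
    const int cols = rows ? static_cast<int>(img[0].size()) : 0;
    int on = 0;
    for (int r = row - half; r < row + half; ++r) {
        if (r < 0 || r >= rows) continue;
        for (int c = col - half; c < col + half; ++c) {
            if (c < 0 || c >= cols) continue;
            if (img[r][c] != 0) ++on;
        }
    }
    return static_cast<double>(on) / (4.0 * half * half) >= minRatio;
}

// Clear every foreground pixel whose neighbourhood is too sparse.
Binary removeSparse(const Binary& img, int half, double minRatio) {
    Binary out = img;
    for (int r = 0; r < static_cast<int>(img.size()); ++r)
        for (int c = 0; c < static_cast<int>(img[r].size()); ++c)
            if (img[r][c] == 255 && !denseEnough(img, r, c, half, minRatio))
                out[r][c] = 0;
    return out;
}
```

With half-size 6 the window holds 144 pixels, so a ratio of 0.17 means at least 25 of them must be foreground. An isolated pixel is removed, while even the corner of a solid 8x8 block survives. Thin contour fragments near the bottom can fall below that count, which matches the loss described above.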

The version above is also rather slow; combining it with QuickHull shortens the run time:

#include <algorithm>

#include <cmath>

#include <vector>

#include <chrono>

#include <cstdio>

#include <random>

#include <vector>

#include<opencv2/opencv.hpp>

#include<opencv2/imgproc/imgproc.hpp>

#include<opencv2/highgui/highgui.hpp>

using namespace std;

using namespace cv;

struct myPoint {

int x;

int y;

bool operator<(const myPoint& pt) const {

if (x == pt.x)

{

return (y < pt.y);

}

return (x < pt.x);

}

};

// http://stackoverflow.com/questions/1560492/how-to-tell-whether-a-point-is-to-the-right-or-left-side-of-a-line

int SideOfLine(const myPoint &P1, const myPoint &P2, const myPoint &P3) {

return (P2.x - P1.x) * (P3.y - P1.y) - (P2.y - P1.y) * (P3.x - P1.x);

}

// https://en.wikipedia.org/wiki/Distance_from_a_point_to_a_line#Line_defined_by_two_points

float DistanceFromLine(const myPoint &P1, const myPoint &P2, const myPoint &P3) {

return (std::abs((P2.y - P1.y) * P3.x - (P2.x - P1.x) * P3.y + P2.x * P1.y - P2.y * P1.x)

/ std::sqrt((P2.y - P1.y) * (P2.y - P1.y) + (P2.x - P1.x) * (P2.x - P1.x)));

}

// http://stackoverflow.com/questions/13300904/determine-whether-point-lies-inside-triangle

bool myPointInTriangle(const myPoint &p, const myPoint &p1, const myPoint &p2, const myPoint &p3) {

// Barycentric coordinates. Convert the denominator to float first; with int / int the quotient is truncated and a, b are almost never strictly between 0 and 1.

const float denom = float((p2.y - p3.y) * (p1.x - p3.x) + (p3.x - p2.x) * (p1.y - p3.y));

float a = ((p2.y - p3.y) * (p.x - p3.x) + (p3.x - p2.x) * (p.y - p3.y)) / denom;

float b = ((p3.y - p1.y) * (p.x - p3.x) + (p1.x - p3.x) * (p.y - p3.y)) / denom;

float c = 1.0f - a - b;

return (0.0f < a && 0.0f < b && 0.0f < c);

}

// http://www.cse.yorku.ca/~aaw/Hang/quick_hull/Algorithm.html

void FindHull(const std::vector<myPoint> &Sk, const myPoint P, const myPoint Q, std::vector<myPoint> &hullmyPoints) {

if (Sk.size() == 0) return;

std::vector<myPoint> S0;

std::vector<myPoint> S1;

std::vector<myPoint> S2;

float furthestDistance = 0.0f;

myPoint C;

for (const auto &pt : Sk) {

float distance = DistanceFromLine(P, Q, pt);

if (distance > furthestDistance) {

furthestDistance = distance;

C = pt;

}

}

hullmyPoints.push_back(C);

for (const auto &pt : Sk) {

if (myPointInTriangle(pt, P, C, Q)) {

S0.push_back(pt);

}

else if (0 < SideOfLine(P, C, pt)) {

S1.push_back(pt);

}

else if (0 < SideOfLine(C, Q, pt)) {

S2.push_back(pt);

}

}

FindHull(S1, P, C, hullmyPoints);

FindHull(S2, C, Q, hullmyPoints);

}

// http://www.cse.yorku.ca/~aaw/Hang/quick_hull/Algorithm.html

void QuickHull(const std::vector<myPoint> &s, std::vector<myPoint> &hullmyPoints) {

myPoint A = s[0];

myPoint B = s[s.size() - 1];

hullmyPoints.push_back(A);

hullmyPoints.push_back(B);

std::vector<myPoint> S1;

std::vector<myPoint> S2;

for (auto it = s.begin() + 1; it != s.end() - 1; ++it) {

const myPoint pt = *it;

const int s1 = SideOfLine(A, B, pt);

const int s2 = SideOfLine(B, A, pt);

if (0 < s1) {

S1.push_back(pt);

}

else if (0 < s2) {

S2.push_back(pt);

}

}

FindHull(S1, A, B, hullmyPoints);

FindHull(S2, B, A, hullmyPoints);

}

Mat mypredone(IplImage* srcipl, vector<Mat> bgrimgs)

{

// Preprocessing: threshold the blue channel into a binary mask

Mat img(srcipl, 0);

split(img, bgrimgs);

Mat bimg(img.size(), CV_8UC1, cv::Scalar(0));

bgrimgs[0].copyTo(bimg);

threshold(bimg, bimg, 12, 255, CV_THRESH_BINARY);

return bimg;

}

bool area_ratio(Mat bimg, int myrow, int mycol, int size, float threshold){

int one = 0;

for (int j = myrow - size; j < myrow + size; j++){

if (j < 0)

continue;

if (j >= bimg.rows)

continue;

uchar* data = bimg.ptr<uchar>(j);

for (int i = mycol - size; i < mycol + size; i++){

if (i < 0)

continue;

if (i >= bimg.cols)

continue;

if (data[i] != 0)

++one;

}

}

float ratio = (float)one / (4 * size*size);

if (ratio >= threshold)

return true;

else

return false;

}

Mat change_wg(Mat bimg){

for (int j = 0; j < bimg.rows; j++){

uchar* data = bimg.ptr<uchar>(j);

for (int i = 0; i < bimg.cols; i++){

if (data[i] == 255){

bool flag;

flag = area_ratio(bimg, j, i, 6, 0.17);

if (flag == false)

data[i] = 0;

}

else

continue;

}

}

return bimg;

}

Mat wg_wd_contour(Mat bimg_done,Mat bimg, IplImage* mydst, int belownum = 0, bool belowflag = false){

IplImage mybimgipl1 = bimg;

CvMemStorage *storage = cvCreateMemStorage();

CvSeq *contour = NULL, *hull = NULL;

vector<CvPoint> allpoints;

vector<myPoint> myallpoints;

CvContourScanner scanner = cvStartFindContours(&mybimgipl1, storage);

while ((contour = cvFindNextContour(scanner)) != NULL)

{

// Moments live on the stack, so nothing needs freeing on the early 'continue' paths below.

CvMoments moments;

cvMoments(contour, &moments, 1);

double moment10 = cvGetSpatialMoment(&moments, 1, 0);

double moment01 = cvGetSpatialMoment(&moments, 0, 1);

double area = cvGetSpatialMoment(&moments, 0, 0);

double posX = moment10 / area;

double posY = moment01 / area;

double mylenth = cvArcLength(contour);

double myarea = cvContourArea(contour);

//center

if (posX > double(229.0) && posX<double(250.7) && posY>double(167.0) && posY<double(201.8) && myarea<328 && mylenth < 194)

{

cvSubstituteContour(scanner, NULL);

continue;

}

if (posX > double(308.0) && posX<double(321.5) && posY>double(167.0) && posY<double(183.5) && myarea<145 && mylenth < 82)

{

cvSubstituteContour(scanner, NULL);

continue;

}

//right light

if (posX > double(376.0) && posX<double(395.0) && posY>double(79.0) && posY<double(122.0) && myarea<double(396) && mylenth < double(185))

{

cvSubstituteContour(scanner, NULL);

continue;

}

//below

if (posX > 269 && posX < 278 && posY > 210.0 && posY < 225.0 && myarea < 22 && mylenth <50)

{

cvSubstituteContour(scanner, NULL);

continue;

}

if (posX > 251 && posX < 268 && posY > 290.0 && posY < 299.0 && mylenth <45 && myarea <37)

{

cvSubstituteContour(scanner, NULL);

continue;

}

if (posX > 258 && posX < 259 && posY > 241.0 && posY < 243.0 && mylenth <19 && myarea <17)

{

cvSubstituteContour(scanner, NULL);

continue;

}

if (posX > 250 && posX < 252 && posY > 236.0 && posY < 238.0 && mylenth <12 && myarea <2)

{

cvSubstituteContour(scanner, NULL);

continue;

}

if (posX > 249 && posX < 251 && posY > 258.0 && posY < 260.0 && mylenth <24 && myarea <21)

{

cvSubstituteContour(scanner, NULL);

continue;

}

if (posX > 233 && posX < 235 && posY > 255.0 && posY < 262.0 && mylenth <15 && myarea <8)

{

cvSubstituteContour(scanner, NULL);

continue;

}

if (posX > 244 && posX < 249 && posY > 246.0 && posY < 249.0 && mylenth <25 && myarea <30)

{

cvSubstituteContour(scanner, NULL);

continue;

}

if (posX > 247 && posX < 249 && posY > 266.0 && posY < 277.0 && mylenth <18 && myarea <9)

{

cvSubstituteContour(scanner, NULL);

continue;

}

if (posX > 161 && posX < 163 && posY > 294.0 && posY < 296.0 && mylenth <29 && myarea <22)

{

cvSubstituteContour(scanner, NULL);

continue;

}

if (posX > 341 && posX < 342 && posY > 354.0 && posY < 355.0 && myarea >18 && myarea <20)

{

cvSubstituteContour(scanner, NULL);

continue;

}

if (posY > 299.0 && posY < 329.0 && myarea < 377 && mylenth < 356)

{

belowflag = true;

//if (posX > 225 && posX < 256)

if ((posX > 185 && posX < 220) || (posX > 275 && posX < 322))

++belownum;

cvSubstituteContour(scanner, NULL);

continue;

}

if (belowflag == true && posY >= 322 && belownum >= 1 && belownum <15)

{

cvSubstituteContour(scanner, NULL);

//belowflag = false;

continue;

}

// special

if (myarea == 0)

{

cvSubstituteContour(scanner, NULL);

continue;

}

// Collect the convex-hull vertices of every contour that survived the filters above

hull = cvConvexHull2(contour, 0, CV_CLOCKWISE, 0);

CvPoint pt0 = **(CvPoint**)cvGetSeqElem(hull, hull->total - 1);

myPoint mypt0;

mypt0.x = (float)pt0.x;

mypt0.y = (float)pt0.y;

allpoints.push_back(pt0);

myallpoints.push_back(mypt0);

for (int i = 0; i < hull->total; ++i)

{

CvPoint pt1 = **(CvPoint**)cvGetSeqElem(hull, i);

allpoints.push_back(pt1);

myPoint mypt1;

mypt1.x = (float)pt1.x;

mypt1.y = (float)pt1.y;

myallpoints.push_back(mypt1);

}

}

std::sort(myallpoints.begin(), myallpoints.end());

std::vector<myPoint> myhullPoints;

QuickHull(myallpoints, myhullPoints);

CvSeq *hull2 = NULL;

CvSeq* ptseq = cvCreateSeq(CV_SEQ_KIND_GENERIC | CV_32SC2, sizeof(CvContour), sizeof(CvPoint), storage);

for (int i = 0; i < myhullPoints.size(); ++i)

{

myPoint pt1 = myhullPoints[i];

CvPoint myfinalpot1;

myfinalpot1.x = pt1.x;

myfinalpot1.y = pt1.y;

cvSeqPush(ptseq, &myfinalpot1);

}

hull2 = cvConvexHull2(ptseq, 0, CV_CLOCKWISE, 1);

IplImage *mydst3 = cvCreateImage(cvGetSize(&mybimgipl1), 8, 1);

cvZero(mydst3);

cvDrawContours(mydst3, hull2, CV_RGB(255, 255, 255), CV_RGB(255, 255, 255), -1, CV_FILLED, 8);

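// Deep-copy the result so the legacy image, scanner and storage can be released before returning.

Mat result(mydst3, true);

cvReleaseImage(&mydst3);

cvEndFindContours(&scanner);

cvReleaseMemStorage(&storage);

return result;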

}

char filename[100];

char newcontour[100];

int main()

{

TickMeter tm;

tm.start();

CvSize mysize = cvSize(484, 364);

IplImage *mydst = cvCreateImage(mysize, 8, 1);

for (int i = 55124; i <= 56460; i++){

sprintf(filename, "待处理背面图\\%d.bmp", i);

sprintf(newcontour, "背面轮廓1122\\%d.bmp", i);

IplImage* src_ipl = cvLoadImage(filename);

// Skip numbers in the range that have no readable image on disk.

if (src_ipl == NULL)

continue;

vector<Mat> bgrimgs;

Mat bimg = mypredone(src_ipl, bgrimgs);

Mat bimg_done(bimg.size(), CV_8UC1, cv::Scalar(0));

bimg_done = change_wg(bimg);

cvZero(mydst);

Mat mylastimg;

mylastimg = wg_wd_contour(bimg_done, bimg, mydst);

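imwrite(newcontour, mylastimg);

// bimg owns its own pixels (copyTo in mypredone), so the loaded image can be released here.

cvReleaseImage(&src_ipl);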

}

cvReleaseImage(&mydst);

tm.stop();

cout << "count=" << tm.getCounter() << ",process time=" << tm.getTimeMilli() << " ms" << endl;

}
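The chain of `if` blocks in `wg_wd_contour` repeats one test many times: a centroid window plus upper bounds on area and arc length. As a rough sketch of how those thresholds could live in a table instead, the snippet below uses plain C++ with no OpenCV dependency. `BlobRule`, `BlobStats`, `matchesRule`, `isKnownImpurity` and `exampleRules` are hypothetical names introduced here, and only the first three rules from the listing are copied in.

```cpp
#include <cassert>
#include <vector>

// Hypothetical rule: a centroid window plus upper bounds on area and arc length.
struct BlobRule {
    double minX, maxX, minY, maxY;
    double maxArea, maxLength;
};

// Hypothetical per-contour measurements (posX, posY, myarea, mylenth in the listing).
struct BlobStats {
    double cx, cy, area, length;
};

// True when the blob's centroid falls strictly inside the window
// and both its area and arc length are below the rule's limits.
inline bool matchesRule(const BlobStats &b, const BlobRule &r) {
    return b.cx > r.minX && b.cx < r.maxX &&
           b.cy > r.minY && b.cy < r.maxY &&
           b.area < r.maxArea && b.length < r.maxLength;
}

// True when any rule in the table matches the blob.
inline bool isKnownImpurity(const BlobStats &b, const std::vector<BlobRule> &rules) {
    assert(b.area >= 0.0 && b.length >= 0.0);
    for (const BlobRule &r : rules) {
        if (matchesRule(b, r)) return true;
    }
    return false;
}

// The first three rules of the listing, copied as data.
inline std::vector<BlobRule> exampleRules() {
    return {
        {229.0, 250.7, 167.0, 201.8, 328.0, 194.0},  // center
        {308.0, 321.5, 167.0, 183.5, 145.0, 82.0},   // center
        {376.0, 395.0, 79.0, 122.0, 396.0, 185.0},   // right light
    };
}
```

In the contour loop this would presumably be fed `posX`, `posY`, `myarea` and `mylenth`, with `cvSubstituteContour(scanner, NULL)` called on a match. The rule that bounds the area from both sides and the stateful `belowflag` logic do not fit this shape and would still need their own branches.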

I spent more than a day on this by myself before I finally understood it:
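The listing that follows classifies each pixel by looking its color up in a flat 256*256*256 table, indexed as `r + g*256 + b*256*256`, where entries listed in the CSV are cleared to 0 and everything else stays 255. The sketch below isolates just that indexing idea in plain C++ without OpenCV. `packRgb` and `RgbTable` are hypothetical names introduced for illustration, and the range assertion is an addition that the listing does not have.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Pack an (r, g, b) triple into one index, using the same layout as the listing:
// r + g * 256 + b * 256 * 256.
inline std::size_t packRgb(int r, int g, int b) {
    assert(r >= 0 && r < 256 && g >= 0 && g < 256 && b >= 0 && b < 256);
    return static_cast<std::size_t>(r)
         + static_cast<std::size_t>(g) * 256
         + static_cast<std::size_t>(b) * 256 * 256;
}

// Hypothetical wrapper: every color starts at 255, listed colors are cleared to 0.
class RgbTable {
public:
    RgbTable() : cells_(256u * 256u * 256u, 255) {}

    // Mark one color as "listed" (the listing does this for each CSV row).
    void clear(int r, int g, int b) { cells_[packRgb(r, g, b)] = 0; }

    // True for colors that were never cleared; the listing paints those pixels 255.
    bool isSet(int r, int g, int b) const { return cells_[packRgb(r, g, b)] > 0; }

private:
    std::vector<unsigned char> cells_;  // released automatically, no delete[] needed
};
```

Holding the table in a `std::vector` is one way to sidestep the manual `new[]`/`delete[]` bookkeeping. The lookup costs the same single index computation per pixel.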

#include<opencv2/opencv.hpp>

#include<opencv2/imgproc/imgproc.hpp>

#include<opencv2/highgui/highgui.hpp>

#include<iostream>

#include<fstream>

using namespace cv;

using namespace std;

Mat last_light_luobin(uchar* rgbarray,IplImage* testimg){

IplImage* dstimg = cvCreateImage(cvGetSize(testimg), IPL_DEPTH_8U, 1);

cvZero(dstimg);

int dststep = dstimg->widthStep / sizeof(uchar);

uchar* dstdata = (uchar*)dstimg->imageData;

int step = testimg->widthStep / sizeof(uchar);

uchar* data = (uchar*)testimg->imageData;

uchar tempb, tempg, tempr;

for (int i = 0; i < testimg->height; i++)

{

for (int j = 0; j < testimg->width; j++)

{

tempb = data[i*step + j*testimg->nChannels + 0];

tempg = data[i*step + j*testimg->nChannels + 1];

tempr = data[i*step + j*testimg->nChannels + 2];

int rgbpixels = tempr + tempg * 256 + tempb * 256 * 256;

uchar rgbelement = rgbarray[rgbpixels];

if (rgbelement > 0)

dstdata[i*dststep + j*dstimg->nChannels + 0] = 255;

}

}

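// Copy the pixels into the Mat so the IplImage can be released instead of leaking.

Mat dstopen(dstimg, true);

cvReleaseImage(&dstimg);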

Mat element(2, 2, CV_8U, cv::Scalar(1));

morphologyEx(dstopen, dstopen, cv::MORPH_OPEN, element);

return dstopen;

}

int main()

{

uchar* rgbarray = new uchar[256*256*256];

memset(rgbarray, 255, 256 * 256 * 256 * sizeof(uchar));

/* //三维动态矩阵

uchar*** arr_np3D = NULL;

arr_np3D = (uchar***)new uchar**[256];

for (int i = 0; i<256; i++)

{

arr_np3D[i] = (uchar**)new uchar*[256];

}

for (int i = 0; i<256; i++)

{

for (int j = 0; j<256; j++)

{

arr_np3D[i][j] = new uchar[256];

}

}

for (int i = 0; i < 256; i++)

for (int j = 0; j < 256; j++)

for (int k = 0; k < 256; k++)

arr_np3D[i][j][k] = 255;

*/

CvMLData mlData;

mlData.read_csv("rgb_id.csv");

cv::Mat csvimg = cv::Mat(mlData.get_values(), true);

int csvrows = csvimg.rows;

//CvMat img = csvimg;

for (int j = 0; j < csvrows; j++){

//float x = cvmGet(&img, j, 0);

//float y = cvmGet(&img, j, 1);

//float z = cvmGet(&img, j, 2);

float* pixeldata = csvimg.ptr<float>(j);

float x = pixeldata[0];

float y = pixeldata[1];

float z = pixeldata[2];

int newindex = x + y * 256 + z * 256 * 256;

rgbarray[newindex] = 0;

//arr_np3D[x][y][z] = 0;

}

//cout << "csvimg(csv) = " << "\n" << format(csvimg, "csv") << " , " << endl << endl;

TickMeter tm;

tm.start();

IplImage* testimg = cvLoadImage("2.bmp");

Mat dst;

dst=last_light_luobin(rgbarray, testimg);

imshow("dst", dst);

tm.stop();

cout << "count=" << tm.getCounter() << ",process time=" << tm.getTimeMilli() << endl;

cvWaitKey(0);

delete[] rgbarray;

//delete[] arr_np3D;

}

Oops, I got something wrong: the part that frees the multi-dimensional dynamic array.

That is still not right. It turns out it should be done the way http://blog.csdn.net/myj0513/article/details/6841726 describes.
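For reference, here is a minimal sketch of the usual C++ rule for an array built with nested `new[]`, as in the commented-out `arr_np3D` code: every `new[]` needs its own `delete[]`, released from the innermost level outward. I have not checked that this matches the linked post line for line. `alloc3D` and `free3D` are hypothetical helper names.

```cpp
#include <cassert>

// Allocate a d0 x d1 x d2 array with one new[] per level, filled with 'fill'.
inline unsigned char ***alloc3D(int d0, int d1, int d2, unsigned char fill) {
    assert(d0 > 0 && d1 > 0 && d2 > 0);
    unsigned char ***a = new unsigned char **[d0];
    for (int i = 0; i < d0; ++i) {
        a[i] = new unsigned char *[d1];
        for (int j = 0; j < d1; ++j) {
            a[i][j] = new unsigned char[d2];
            for (int k = 0; k < d2; ++k) a[i][j][k] = fill;
        }
    }
    return a;
}

// Free in the reverse order of allocation: rows first, then planes, then the top level.
// A single 'delete[] a' would release only the top-level pointer array.
inline void free3D(unsigned char ***a, int d0, int d1) {
    if (a == 0) return;
    for (int i = 0; i < d0; ++i) {
        for (int j = 0; j < d1; ++j) delete[] a[i][j];
        delete[] a[i];
    }
    delete[] a;
}
```

For the 256*256*256 case this means 65,793 separate allocations, which is one reason the flat `rgbarray` with a single `delete[]` is the simpler choice.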

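I'm in a bad mood!!! Lately too many couples around me have broken up, all for very practical reasons: the man goes off to another city to build his career, the woman, because he is busy, gradually becomes understanding and independent, and amid his constant "I'm busy" the two talk less and less, and the feeling slowly withers... so they break up... Who is right and who is wrong... I talked it over with colleagues at work and we could not reach a conclusion... I can understand the man, and I feel for the woman... Selfishly, I think the man is more in the wrong... because he gave up love and chose his career... Even if the girl leaves him, he has a solid material foundation and can go find the next girl. But what about her? She has used up what little youth she had left... At our age, 24, 25, 26, we are the least able to wait, the most prone to sudden changes, and the most afraid of parting... I believe neither of them had a change of heart. The feeling simply faded and vanished as the boy went off to work and stay busy. Watching love go barren is sad... Hearing all this, I feel a weight on my chest... It is winter, there should be more couples, and yet there is so much helplessness... My colleague, who is a man, thinks neither of them did anything wrong, but he is a little critical of the girl: he thinks she should have stuck it out with the boy through this period... I take the girl's side and think she has been wronged. If the feeling has faded and they just endure without improving anything, what is the point? If the feeling fades and the two do nothing to repair it and only hold on until he comes back, it cannot be good again. Either the man balances career and relationship, or the love dies like this and never comes back... Suddenly I really want to cry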
