伯特斯卡斯

Pre-training Language Models has taken over a majority of tasks in NLP. The 2017 paper, “Attention Is All You Need”, which proposed the Transformer architecture, changed the course of NLP. Based on that, several architectures like BERT, Open AI GPT evolved by leveraging self-supervised learning.

预训练语言模型已接管了NLP中的大部分任务。 2017年的论文《注意就是你所需要的一切》提出了Transformer架构,改变了NLP的发展方向。 在此基础上,通过利用自我监督式学习发展了BERT,Open AI GPT等几种架构。

In this article, we discuss BERT : Bidirectional Encoder Representations from Transformers; which was proposed by Google AI in the paper, “BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding”. This is one of the groundbreaking models that has achieved the state of the art in many downstream tasks and is widely used.

在这篇文章中,我们讨论了BERT: 乙 Ëidirectional ncoder在T ransformers [R对产权; 由Google AI在论文“ BERT:用于语言理解的深度双向变压器的预训练 ”中提出。 这是突破性的模型之一,已在许多下游任务中达到了最先进的水平,并得到了广泛使用。

总览 (Overview)

BERT leverages a fine-tuning based approach for applying pre-trained language models; i.e. a common architecture is trained for a relatively generic task, and then, it is fine-tuned on specific downstream tasks that are more or less similar to the pre-training task.

BERT利用基于微调的方法来应用预训练的语言模型; 即,为一个相对通用的任务训练了一个通用的体系结构,然后,在与预训练任务或多或少相似的特定下游任务上对其进行了微调。

To achieve this, the BERT paper proposes 2 pre-training tasks:

为此, BERT论文提出了2个预训练任务 :

Masked Language Modeling (MLM)

屏蔽语言建模(MLM)

Next Sentence Prediction (NSP)

下一句预测(NSP)

and fine-tuning on downstream tasks such as:

并对下游任务进行微调,例如:

Sequence Classification

序列分类

Named Entity Recognition (NER)

命名实体识别(NER)

Natural Language Inference (NLI) or Textual Entailment

自然语言推论(NLI)或文本蕴涵

Grounded Common Sense Inference

接地常识推理

Question Answering (QnA)

问答(QnA)

We will discuss these in-depth in the coming sections of this article.

我们将在本文的后续部分中深入讨论这些内容。

BERT架构 (BERT Architecture)

BERT’s model architecture is a multi-layer bidirectional Transformer encoder based on the original implementation in Vaswani et al.

BERT的模型架构是一种多层双向变压器编码器,基于Vaswani等人的原始实现。

— BERT纸

I have already covered the Transformer architecture in this post. Consider giving it a read if you are interested in knowing about the Transformer.

我已经在这篇文章中介绍了Transformer体系结构。 如果您有兴趣了解《变形金刚》,请考虑阅读。

To elaborate on the BERT-specific architecture, we will compare the encoder and the decoder of the Transformer:

为了详细说明特定于BERT的体系结构, 我们将比较Transformer的编码器和解码器 :

The Transformer Encoder is essentially a Bidirectional Self-Attentive Model, that uses all the tokens in a sequence to attend each token in that sequence

变压器编码器本质上是一个双向自关注模型,它使用序列中的所有令牌来参与该序列中的每个令牌

i.e. for a given word, the attention is computed using all the words in the sentence and not just the words preceding the given word in one of the left-to-right or right-to-left traversal order.

也就是说,对于给定的单词,注意力是使用句子中的所有单词而不是按照从左到右或从右到左的遍历顺序之一在给定单词之前的单词来计算的。

Mathematically:

数学上:

While the Transformer Decoder, is a Unidirectional Self-Attentive Model, that uses only the tokens preceding a given token in the sequence to attend that token

在变压器解码器中 , 是一个单向自我专注模型,仅使用序列中给定令牌之前的令牌来参与该令牌

i.e. for a given word, the attention is computed using only the words preceding the given word in that sentence according to the traversal order, left-to-right or right-to left.

也就是说,对于给定的单词,仅使用该句子中给定单词之前的单词根据从左到右或从右到左的遍历顺序来计算注意力。

Mathematically,

数学上

Therefore, the Transformer Encoder gives BERT its Bidirectional Nature, as it uses tokens from both the directions to attend a given token. We will elaborate on this even further when we’ll discuss the MLM task.

因此,由于Transformer Encoder使用两个方向的令牌来参与给定令牌,因此它赋予BERT双向属性。 当我们讨论MLM任务时,我们将进一步详细说明。

BERT表示 (BERT Representations)

Before moving on to the pre-training methodology, we’ll have a quick glance through the input/output representations used in BERT.

在继续进行预训练方法之前,我们将快速浏览一下BERT中使用的输入/输出表示形式。

[CLS]: This token is called as the ‘classification’ token. It is used at the beginning of a sequence.

[CLS]:此令牌称为“ 分类 ”令牌。 它在序列的开头使用。

[SEP]: This token indicates the separation of 2 sequences i.e. it acts as a delimiter.

[SEP]:此令牌指示2个序列的分离,即,它充当定界符。

[MASK]: Used to indicate masked token in the MLM task.

[MASK]:用于指示MLM任务中的掩码令牌。

Segment Embeddings are used to indicate the sequence to which a token belongs i.e. if there are multiple sequences separated by a [SEP] token in the input, then along with the Positional Embeddings(from Transformers), these are added to the original Word Embeddings for the Model to identify the sequence of the token.

段嵌入用于指示令牌所属的序列,即,如果输入中有多个由[SEP]令牌分隔的序列,则将其与位置嵌入(来自Transformers )一起添加到原始词嵌入中,以用于用于标识令牌序列的模型。

The tokens fed to the BERT model are tokenized using WordPiece embeddings. The working of this tokenization algorithm is out of scope for this discussion, however, it basically is a tokenization technique between purely character-level encoding and complete word-level encoding to increase the coverage for most of the words in the vocabulary with an appreciable reduction in the vocabulary size (i.e. most of the out of vocabulary or OOV words are covered). Check this blog out for WordPiece Embeddings.

使用WordPiece嵌入对提供给BERT模型的令牌进行令牌化。 这种标记化算法的工作超出了本讨论的范围,但是,它基本上是一种纯字符级编码和完整单词级编码之间的标记化技术,可以显着减少词汇量中大多数单词的覆盖范围在词汇量上(即涵盖了大部分词汇量或OOV单词)。 查看此博客以获取WordPiece嵌入。

BERT预训练 (BERT Pre-Training)

As mentioned previously, BERT is trained for 2 pre-training tasks:

如前所述,BERT受过2个预训练任务的训练:

1.屏蔽语言模型(MLM) (1. Masked Language Model (MLM))

In this task, 15% of the tokens from each sequence are randomly masked (replaced with the token [MASK]). The model is trained to predict these tokens using all the other tokens of the sequence.

在此任务中,每个序列的15%的令牌被随机屏蔽(用令牌[MASK]替换)。 训练模型以使用序列的所有其他标记来预测这些标记。

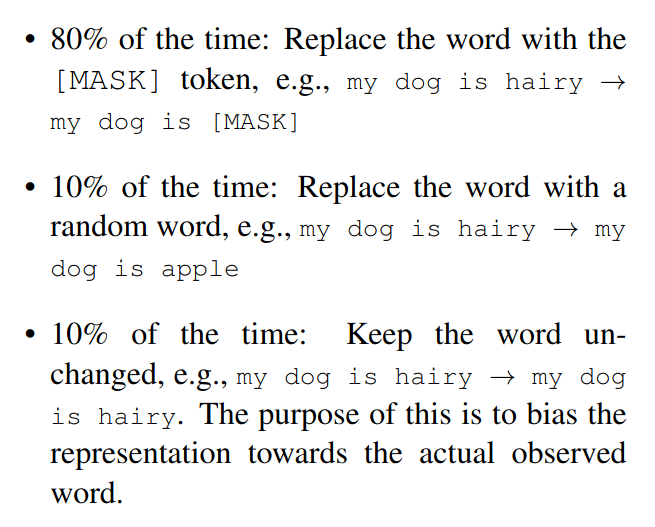

However, the fine-tuning task is in no way going to see the [MASK] token in its input. So, for the model to adapt these cases, 80% of the time, the 15% tokens are masked; 10% of the time, 15% tokens are replaced with random tokens; and 10% of the time, they are kept as it is i.e. untouched.

但是,微调任务绝不会在其输入中看到[MASK]令牌。 因此,为使模型适应这些情况,在80%的时间中,将屏蔽15%的令牌。 10%的时间中,有15%的令牌被随机令牌取代; 和10%的时间,它们保持不变,即保持不变。

This is a type of Denoising Autoencoding and is also known as Cloze Task.

2.下一句预测(NSP) (2. Next Sentence Prediction (NSP))

This task is somewhat similar to the Textual Entailment task. There are two input sequences (separated using [SEP] token, and Segment Embeddings are used). It is a binary classification task involving prediction to tell if the second sentence succeeds the first sentence in the corpus.

该任务有点类似于“文本蕴含”任务。 有两个输入序列(使用[SEP]令牌分隔,并使用段嵌入 )。 这是一个涉及预测的二元分类任务,用于判断语料库中第二句话是否在第一句话之后。

For this, 50% of the time, the next sentence is correctly used as the next sentence, and 50% of the time, a random sentence is taken from the corpus for training. This ensures that the model adapts to training on multiple sequences (for tasks like question answering and natural language inference).

为此,在50%的时间里,下一个句子正确地用作下一个句子,而在50%的时间里,从语料库中提取一个随机句子进行训练。 这样可以确保模型适合于在多个序列上进行训练(用于诸如回答问题和自然语言推理之类的任务)。

微调BERT (Fine-Tuning BERT)

BERT achieved the state of the art on 11 GLUE (General Language Understanding Evaluation) benchmark tasks. We will discuss modeling on a few of them in this section:

BERT在11个GLUE (通用语言理解评估)基准测试任务上达到了最新水平。 在本节中,我们将讨论其中的一些建模:

Sequence Classification: The pre-trained model is trained on a supervised dataset to predict the class of a given sequence. Since the output of the BERT (Transformer Encoder) model is the hidden state for all the tokens in the sequence, the output needs to be pooled to obtain only one label. The Classification token ([CLS] token) is used here. The output of this token is considered as the classifier pooled output and it is further put into a fully-connected classification layer for obtaining the labeled output.

序列分类:在有监督的数据集上训练预训练模型,以预测给定序列的类别。 由于BERT(变压器编码器)模型的输出是序列中所有令牌的隐藏状态 ,因此需要合并输出以仅获得一个标签。 这里使用了分类令牌([CLS]令牌)。 该令牌的输出被视为分类器池中的输出,并且将其进一步放入一个完全连接的分类层中,以获取标记的输出。

Named Entity Recognition (NER): The hidden state outputs are directly put into a classifier layer with the number of tags as the output units for each of the token. Then these logits are used to obtain the predicted class of each token using argmax.

命名实体识别(NER):隐藏状态输出直接以标签数量作为每个令牌的输出单位,放入分类器层。 然后,使用argmax将这些logit用于获得每个令牌的预测类。

Natural Language Inference (NLI) or Textual Entailment: We train BERT the same as in the NSP task, with both the sentences i.e. the text and the hypothesis separated using [SEP] token, and are identified using the Segment Embeddings. The [CLS] token is used to obtain the classification result as explained in the Sequence Classification part.

自然语言推论(NLI)或文本蕴涵:我们像在NSP任务中一样训练BERT,用[SEP]标记将句子(即文本和假设)分开,并使用分段嵌入进行识别。 [CLS]令牌用于获得分类结果,如序列分类部分所述。

Grounded Common Sense Inference: Given a sentence, the task is to choose the most plausible continuation among four choices. We take 4 input sequences, each containing the original sentence and the corresponding choice concatenated to it. Here too, we use the [CLS] token state and feed it to a fully-connected layer to obtain the scores for each of the choices which are then normalized using a softmax layer.

扎根的常识推断:给定一个句子,任务是在四个选择中选择最合理的延续。 我们采用4个输入序列,每个输入序列包含原始句子和与其相连的相应选择。 在这里,我们也使用[CLS]令牌状态并将其馈送到完全连接的层,以获取每个选择的得分,然后使用softmax层对其进行归一化。

Question Answering: A paragraph is used as one sequence and the question is used as another. There are 2 cases in this task:

问题解答:一个段落用作一个序列,而问题用作另一个序列。 此任务有2种情况:

In the first case, the answer is expected to be found within the paragraph. Here, we intend to find the Start and the End token of the answer from the paragraph. For this, we take the dot product of each token T_i and the start token S to obtain the probability of the token i to be the start of the answer. Similarly, we obtain the probability of the end token j. The score of a candidate span from position i to position j is defined as S.T_i + E.T_j, and the maximum scoring span where j ≥ i is used as a prediction.

在第一种情况下,答案应在本段中找到。 在这里,我们打算从该段落中找到答案的开始和结束标记。 为此,我们取每个令牌 T_i和起始令牌 S的点积 获得令牌i成为答案开始的概率 。 同样,我们获得结束标记j的概率。 从位置i向位置j的候选跨度的分数被定义为S.T_i + E.T_j,最大得分跨度其中j≥i被用作预测。

In the second case, we consider the possibility that there may be no short answer to the question present in the paragraph, which is a more realistic case. For this, we consider the probability scores for the start and end of the answer at the [CLS] token itself. We call this as s_null. We compare this s_null with the max score obtained at the best candidate span (i.e. the best score for the first case). If this obtained score is greater than s_null by a sufficient threshold τ, then we use the candidate best score as the answer. The threshold τ can be tuned to maximize the dev set F1-score.

在第二种情况下, 我们认为该段落中的问题可能没有简短的答案 ,这是一个更现实的情况。 为此,我们考虑[CLS]令牌本身的答案开头和结尾的概率得分。 我们称其为s_null。 我们将此s_null与在最佳候选跨度(即第一种情况的最佳分数)下获得的最大分数进行比较。 如果此获得的分数比s_null大一个足够的阈值τ ,则我们将候选最佳分数用作答案。 阈值τ可以被调整以最大化开发集F1-得分 。

结论 (Conclusion)

We took a deep dive into the BERT’s architecture, pretraining specification and fine-tuning specification.

我们深入研究了BERT的体系结构,预培训规范和微调规范。

The main takeaway from this post is that BERT is one of the most fundamental Transformer-based language models, and a similar pattern is followed for improvement of this architecture and tasks in the future models that have surpassed the state of the art.

这篇文章的主要结论是BERT是最基本的基于Transformer的语言模型之一,并且在以后的模型中已经采用了类似的模式来改进这种体系结构和任务,从而超越了现有技术。

The official BERT code is open source and can be found here.

官方的BERT代码是开源的,可以在这里找到。

HuggingFace Transformers is a repository that implements Transformer-based architectures and provides an API for these as well as the pre-trained weights to use.

HuggingFace Transformers是一个存储库,它实现了基于Transformer的体系结构,并提供了这些API以及要使用的预训练权重的API。

I am planning to cover the major Transformer-based Models that have achieved the state of the art in the coming posts. BERT being the most fundamental of these had to be the first. Stay Tuned:)

我计划在以后的文章中介绍已经达到最新技术水平的主要基于变压器的模型。 BERT是其中最基本的,必须是第一个。 敬请关注:)

翻译自: https://medium.com/swlh/bert-pre-training-of-transformers-for-language-understanding-5214fba4a9af

伯特斯卡斯

280

280

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?