Meta-Learning Algorithms Will Likely Change in the Future

To understand and study a new concept or idea, people generally draw on a single example from everyday life.

They learn new skills much faster and more productively than machines do, drawing on action, imagination, and explanation.

For instance, children who have seen butterflies and ants a few times can promptly recognize them. In the same way, teens who know how to handle a bike are likely to work out how to ride a motorcycle after only a limited demonstration. Machine learning algorithms, however, need hundreds of labeled examples to train on before they deliver similar accuracy.

Introduction

In computer science, meta-learning is a subfield of machine learning that can be defined as learning to learn. It applies automatic learning algorithms to metadata to understand how learning can become more flexible in solving problems, thereby improving the performance of an existing learning algorithm or learning the algorithm itself (Thrun & Pratt, 1998¹; Andrychowicz et al., 2016²).

This article studies the use of evolutionary algorithms and neural networks in the meta-learning process. It begins by explaining how the evolutionary algorithm process avoids overfitting, then introduces the concept of neural networks. Finally, it draws a conclusion about the relationship between evolutionary algorithms and neural networks that meta-learning creates.

Meta-learning algorithms generally help Artificial Intelligence (AI) systems learn effectively, adapt more robustly to shifts in their conditions, and generalize to more tasks. They can be used to optimize a model's architecture, its parameters, or some combination of the two. This article focuses on a particular meta-learning technique called neuro-evolution, that is, the use of an evolutionary algorithm to learn neural architectures.

Theory of Evolution

Charles Darwin, a naturalist, visited the Galapagos Islands during the voyage of H.M.S. Beagle (1831–1836) and noticed that some birds appeared to have evolved from a single ancestral flock. They shared common features but were distinguished by their distinct beak shapes, which stemmed from their unique DNA (Steinheimer, 2004³), as represented in Figure 1.

Steinheimer (2004) asserts that DNA is a blueprint that controls the replication of cells. Darwin's hypothesis about how each species diverged developed into the now widely accepted theory of natural selection. This process is algorithmic and can be simulated by creating evolutionary algorithms.

Additionally, evolutionary algorithms randomly mutate some members of a population in an attempt to find even fitter candidates. This process repeats for as many iterations as necessary; in this context, the iterations are called generations. These steps are all inspired by Darwin's theory of natural selection, and the algorithms belong to a broader class called evolutionary computation.
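The mutate-and-select loop described above can be sketched in a few lines. This is only a toy illustration, not code from any of the cited papers: the bit-string genomes, the `evolve` function, and the "count the ones" fitness are all assumptions chosen to keep the example small.

```python
import random

def evolve(fitness, genome_length=8, population_size=20, generations=50,
           mutation_rate=0.1, seed=0):
    """Toy evolutionary loop: keep the fitter half, then mutate copies of it."""
    rng = random.Random(seed)
    # Start from a random population of bit-string genomes.
    population = [[rng.randint(0, 1) for _ in range(genome_length)]
                  for _ in range(population_size)]
    for _ in range(generations):
        # Selection: the better-scoring half survives as parents.
        population.sort(key=fitness, reverse=True)
        parents = population[:population_size // 2]
        # Mutation: each child is a parent copy with some bits flipped at random.
        children = [[1 - gene if rng.random() < mutation_rate else gene
                     for gene in parent] for parent in parents]
        population = parents + children
    return max(population, key=fitness)

# Hypothetical fitness: the number of ones in the genome.
best = evolve(fitness=sum)
print(best, sum(best))
```

Because the parents are carried over unchanged, the best score in the population never decreases from one generation to the next.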

Evolution Strategies

Currently, neural networks can perform tasks that are challenging for humans, provided they are given large amounts of training data. Finding the optimal architectures for these networks is non-trivial and takes a lot of trial and error. In fact, researchers have worked hard on architectures that have progressively reached new levels of performance over the years.

Neuro-evolution approaches are being used by a well-known technology company, Uber, which started adopting Evolution Strategies (ES) to help improve the performance of its services and evaluated them on Atari games.

“even a very basic decades-old evolution strategy provides comparable results; thus, more modern evolution strategies should be considered as a potentially competitive approach to modern deep Reinforcement Learning (RL) algorithms” (Such et al., 2017⁴; Chrabaszcz, Loshchilov & Hutter, 2018⁵).


Figure 2 shows a test that Uber AI Labs conducted on several Atari games. The line chart compares standard evolution strategies with a variant that uses enhanced exploration. Tuning the hyperparameters appears to yield significantly higher rewards: the reward rises from 0 to more than 30.

Over the first 200 generations, the reward for both strategies climbs quickly to about 5 and then stays roughly level until just past generation 300. It then grows dramatically from about generation 320 until the final generation, 800.
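To make the idea of a basic evolution strategy concrete, here is a minimal sketch. It is not the setup used by Uber AI Labs or by Chrabaszcz et al.: the function names, the fixed noise scale `sigma`, the equal-weight averaging of the best candidates, and the toy quadratic reward (standing in for a game score) are all assumptions made for illustration.

```python
import random

def evolution_strategy(reward, dim=3, sigma=0.3, offspring=20, parents=5,
                       generations=100, seed=0):
    """Simplified ES: sample around a mean, then average the best samples."""
    rng = random.Random(seed)
    mean = [0.0] * dim
    for _ in range(generations):
        # Sample offspring by adding Gaussian noise to the current mean.
        candidates = [[m + sigma * rng.gauss(0.0, 1.0) for m in mean]
                      for _ in range(offspring)]
        # Keep the best-rewarded candidates and average them into the new mean.
        candidates.sort(key=reward, reverse=True)
        elite = candidates[:parents]
        mean = [sum(c[i] for c in elite) / parents for i in range(dim)]
    return mean

# Hypothetical reward: negative squared distance to a hidden target vector.
target = [1.0, -2.0, 0.5]

def toy_reward(params):
    return -sum((p - t) ** 2 for p, t in zip(params, target))

solution = evolution_strategy(toy_reward)
print([round(x, 2) for x in solution])
```

No gradients are computed anywhere: the search only needs to rank candidates by reward, which is why the same loop can in principle be pointed at a game score.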

Data Augmentation

In contemporary work, advances in deep learning models have been attributed mainly to the quantity and diversity of the data collected. Practically speaking, data augmentation significantly increases the diversity of the data available for training models without actually collecting new data. Common data augmentation techniques include cropping, padding, and horizontal flipping (Figure 3).

These techniques are frequently applied when training deep neural networks (Shorten & Khoshgoftaar, 2019⁶). Indeed, most neural network training strategies use only these basic types of data augmentation.
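The three basic operations named above (padding, cropping, and horizontal flipping) can be sketched on a tiny image stored as a list of rows. The helper names and the pad-then-random-crop recipe are assumptions for illustration; real pipelines would normally use an image library rather than nested lists.

```python
import random

def pad(image, size, fill=0):
    """Add `size` pixels of `fill` on every side of a 2D image (list of rows)."""
    width = len(image[0]) + 2 * size
    blank = [[fill] * width for _ in range(size)]
    middle = [[fill] * size + list(row) + [fill] * size for row in image]
    return blank + middle + [row[:] for row in blank]

def crop(image, top, left, height, width):
    """Cut out a `height` x `width` window whose corner is at (top, left)."""
    return [row[left:left + width] for row in image[top:top + height]]

def horizontal_flip(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]

def augment(image, rng, pad_size=1):
    """Pad, take a random crop of the original size, and flip half the time."""
    height, width = len(image), len(image[0])
    padded = pad(image, pad_size)
    top = rng.randint(0, 2 * pad_size)
    left = rng.randint(0, 2 * pad_size)
    out = crop(padded, top, left, height, width)
    return horizontal_flip(out) if rng.random() < 0.5 else out

image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
print(horizontal_flip(image))  # [[3, 2, 1], [6, 5, 4], [9, 8, 7]]
print(augment(image, random.Random(0)))
```

Each call to `augment` returns a slightly shifted or mirrored copy with the original dimensions, so one labeled image can yield several distinct training examples.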

“the best classification accuracy obtained by our models is not in the top 10 approaches using the CIFAR-10 dataset. This makes the proposed architecture ideal for embedded systems unlike the best performing approaches” (Çalik & Demirci, 2018⁷)


Despite this, image classification on CIFAR-10, a dataset named after the Canadian Institute for Advanced Research, has been tackled successfully with Convolutional Neural Networks (CNNs). Furthermore, Cubuk, Zoph, Mane, Vasudevan, and Le (2019)⁸ report state-of-the-art accuracy on datasets such as CIFAR-10 using AutoAugment, an innovative automated data augmentation method.

Li, Zhou, Chen, and Li (2017)⁹ observe that research in meta-learning has commonly concentrated on data and model designs, with exceptions such as meta-learned optimizers, for instance variants of Stochastic Gradient Descent (SGD), which arguably still fall under model optimization. Data augmentation is most easily understood in the context of image data, where it typically involves horizontal flips and small rotations or translations.

Discussion

Two intertwined processes interact here: inter-life learning and intra-life learning. Think of inter-life learning as a process of evolution through natural selection. In contrast, intra-life learning relates to how an animal learns during its own lifetime, for example, identifying objects, learning to communicate, and walking.

Furthermore, evolutionary algorithms can be viewed as inter-life learning, whereas neural networks can be thought of as intra-life learning. Together, evolutionary algorithms and neural networks are likely the main factors in achieving an optimized algorithm in deep reinforcement learning.

Evolutionary Algorithms

Bingham, Macke, and Miikkulainen (2020)¹⁰ emphasize four main features that evolutionary algorithms work with: the number of network layers, the number of neurons in each layer, the activation function, and the optimization algorithm.

The process starts by initializing multiple neural networks with random weights to generate a population. Next, the weights of each network are trained on an image dataset, and each network is benchmarked on how well it classifies test data. The classification accuracy on the test set then serves as the fitness function that decides which top-scoring networks become members of the next generation.

In addition, evolutionary algorithms filter out the lowest-performing networks and eliminate them. Finally, they retain a few of the lower-scoring networks, which may help the search escape a local maximum when optimizing the networks. These techniques are an evolutionary way of preventing overfitting.
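The selection step described above, keeping the top scorers plus a few lower-scoring networks, might look like the sketch below. Everything here is hypothetical: networks are represented only by a dictionary of the four features, and `stand_in_accuracy` is a placeholder for actually training each network and measuring its test accuracy.

```python
import random

def next_generation(population, fitness, keep_top=4, keep_lucky=2, rng=None):
    """Keep the top scorers plus a few randomly chosen lower scorers."""
    rng = rng or random.Random()
    ranked = sorted(population, key=fitness, reverse=True)
    top, rest = ranked[:keep_top], ranked[keep_top:]
    # A few lower-scoring networks survive to preserve diversity.
    lucky = rng.sample(rest, min(keep_lucky, len(rest)))
    return top + lucky

def random_network(rng):
    """Describe a network by the four evolvable features only."""
    return {
        "layers": rng.randint(1, 5),
        "neurons": rng.choice([16, 32, 64, 128]),
        "activation": rng.choice(["relu", "tanh", "sigmoid"]),
        "optimizer": rng.choice(["sgd", "adam", "rmsprop"]),
    }

def stand_in_accuracy(network):
    # Placeholder score; a real run would train the network and test it.
    return network["layers"] * network["neurons"]

rng = random.Random(0)
population = [random_network(rng) for _ in range(10)]
survivors = next_generation(population, stand_in_accuracy, rng=rng)
print(len(survivors))  # 6
```

The survivors would then be copied and varied to refill the population before the next round of training and scoring.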

Neural Networks

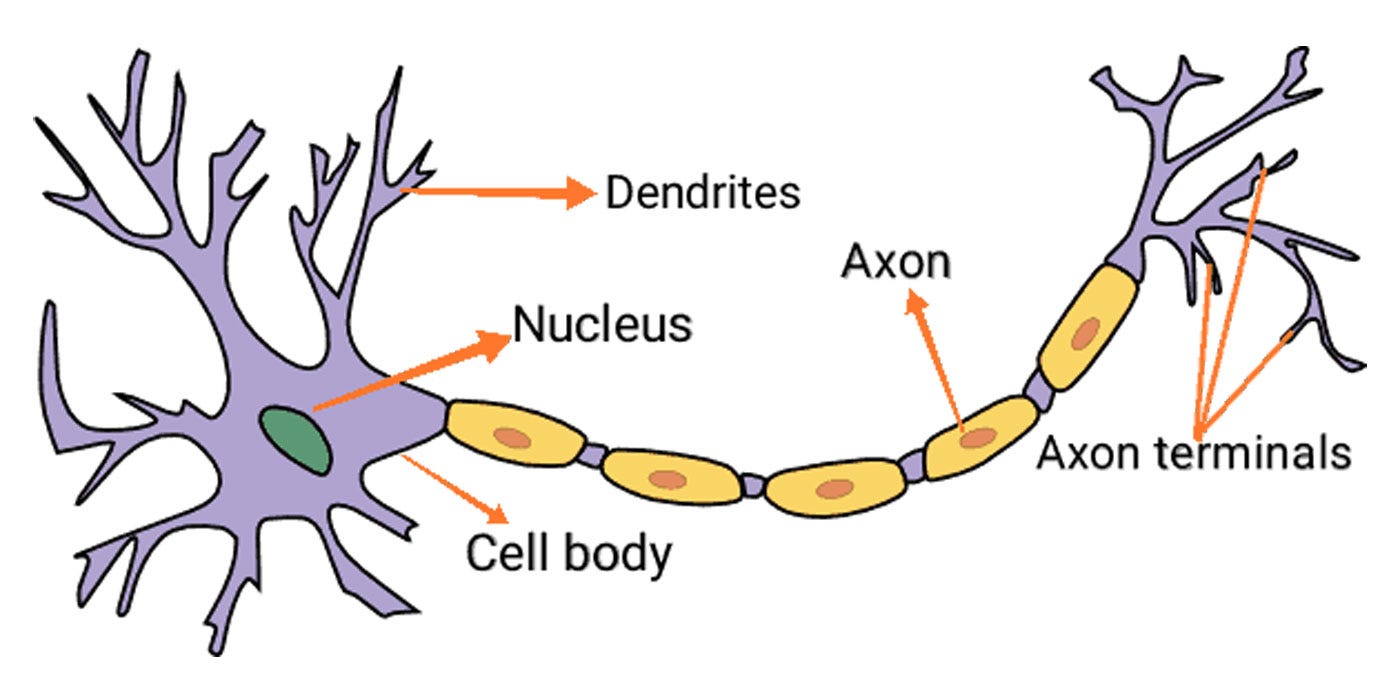

Neural networks are an attempt to simulate the information-processing abilities of the biological nervous system, as reflected in Figure 4. The human body consists of trillions of cells, and the cells of the nervous system, called neurons, are specialized to transmit messages or information through electrochemical means.

In simple terms, a neural network is a collection of connected input and output units in which each connection has an associated weight (Figure 5). In neuro-evolution, a new network is generated by blending a random selection of parameters from its parent networks. A child could, for instance, have the same number of layers as one parent and take the rest of its parameters from a different parent. This process mirrors how reproduction works in biology and helps the algorithm converge on an optimized network.
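The blending of parent parameters can be sketched as a crossover followed by an optional mutation. The parameter names, the `CHOICES` table, and the mutation rate are assumptions for illustration, not values taken from the cited work.

```python
import random

# Hypothetical search space: the allowed values for each parameter.
CHOICES = {
    "layers": [1, 2, 3, 4, 5],
    "neurons": [16, 32, 64, 128],
    "activation": ["relu", "tanh", "sigmoid"],
    "optimizer": ["sgd", "adam", "rmsprop"],
}

def crossover(parent_a, parent_b, rng):
    """Build a child by taking each parameter from one parent chosen at random."""
    return {key: rng.choice([parent_a[key], parent_b[key]]) for key in parent_a}

def mutate(network, choices, rng, rate=0.2):
    """With probability `rate`, replace a parameter with a random allowed value."""
    return {key: rng.choice(choices[key]) if rng.random() < rate else value
            for key, value in network.items()}

rng = random.Random(0)
mother = {"layers": 2, "neurons": 32, "activation": "relu", "optimizer": "sgd"}
father = {"layers": 5, "neurons": 128, "activation": "tanh", "optimizer": "adam"}
child = mutate(crossover(mother, father, rng), CHOICES, rng)
print(child)
```

Before mutation, every value in the child comes from one of its two parents, so a child can end up with the layer count of one parent and the optimizer of the other.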

As the parameter complexity of the network rises, evolutionary algorithms can produce exponential speedups. Krizhevsky, Sutskever, and Hinton (2017)¹¹ mentioned that this process requires large amounts of data and computing power, using hundreds of GPUs and TPUs for days. The population is initialized as 1,000 identical convolutional neural networks with no hidden layers. Then, through the evolutionary process, networks with higher accuracy are selected as parents, copied, and mutated to generate children, while the rest are eliminated.

In later work, the networks were built from a fixed stack of repeated modules called cells. The number of cells stayed the same, but the architecture of each cell mutated over time. The researchers also decided to use a specific form of regularization to improve network accuracy.

Instead of eliminating the lowest-scoring networks, they removed the oldest ones regardless of how well they scored. This ended up improving accuracy, with the networks trained from scratch. The technique selects for neural networks that remain accurate when they are retrained over the course of the evolutionary algorithm.
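Removing the oldest member instead of the worst one can be sketched with a queue. This is a toy illustration under stated assumptions: individuals are single numbers rather than network architectures, and `random_value`, `toy_mutate`, and `toy_fitness` are hypothetical stand-ins for sampling, mutating, and training a network.

```python
import collections
import random

def aging_evolution(random_individual, mutate, fitness, population_size=10,
                    sample_size=3, cycles=50, seed=0):
    """Evolve a population in which the oldest member dies each cycle."""
    rng = random.Random(seed)
    population = collections.deque(
        random_individual(rng) for _ in range(population_size))
    history = list(population)
    for _ in range(cycles):
        # Tournament: the best of a random sample becomes the parent.
        sample = rng.sample(list(population), sample_size)
        parent = max(sample, key=fitness)
        child = mutate(parent, rng)
        population.append(child)
        history.append(child)
        # Aging: remove the oldest individual, regardless of its score.
        population.popleft()
    return max(history, key=fitness)

def random_value(rng):
    return rng.uniform(-10.0, 10.0)

def toy_mutate(value, rng):
    return value + rng.gauss(0.0, 0.5)

def toy_fitness(value):
    # Hypothetical score that peaks at value == 3.
    return -(value - 3.0) ** 2

best = aging_evolution(random_value, toy_mutate, toy_fitness)
print(round(best, 2))
```

Because even a high scorer is eventually removed, a lineage persists only if its descendants keep scoring well, which is the selection pressure the paragraph above describes.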

Conclusion

Meta-learning is the process of learning to learn. In other words, one Artificial Intelligence (AI) optimizes one or more other AIs.

This article concludes that evolutionary algorithms borrow concepts from evolution and mimic mechanisms from Charles Darwin's theory, such as mutation and natural selection, to solve complicated problems.

Meanwhile, a meta-learning technique called neuro-evolution can use evolutionary algorithms to optimize individual neural networks. Although meta-learning is currently very fashionable, applying these algorithms to real-life problems remains particularly challenging.

However, with continually advancing computational power, dedicated machine learning hardware, and advances in meta-learning algorithms, these methods will likely become more reliable and trustworthy.

#1 Learning to Learn

#2 Learning to learn by gradient descent by gradient descent

#3 Charles Darwin's bird collection and ornithological knowledge during the voyage of H.M.S. "Beagle", 1831–1836

#4 Deep Neuroevolution: Genetic Algorithms Are a Competitive Alternative for Training Deep Neural Networks for Reinforcement Learning

#5 Back to Basics: Benchmarking Canonical Evolution Strategies for Playing Atari

#6 A survey on Image Data Augmentation for Deep Learning

#7 Cifar-10 Image Classification with Convolutional Neural Networks for Embedded Systems

#8 AutoAugment: Learning Augmentation Strategies From Data

#9 Meta-SGD: Learning to Learn Quickly for Few-Shot Learning

#10 Evolutionary Optimization of Deep Learning Activation Functions

#11 ImageNet classification with deep convolutional neural networks

Translated from: https://towardsdatascience.com/meta-learning-learning-to-learn-a0365a6a44f0
