aws课程

Dear readers, hope you are all doing well. I recently participated in an AWS DeepRacer tournament held by my organization (Deloitte) and today I am going to share my experience related to this fun and exciting event. For those who are unfamiliar with AWS DeepRacer, it is a virtual autonomous racing league where participants need to train a vehicle bot using reinforcement learning. The trained bots are deployed on a specific racing track and the bot with the lowest lap time wins. The bots are trained exclusively on AWS infrastructure using managed services like AWS SageMaker and AWS RoboMaker. Thus the DeepRacer service provides a fun and engaging way to get your hands dirty on concepts of reinforcement learning without getting bogged down with too much technicalities. Throughout this article, I will embed links to blogs which I came across during my research to explain related concepts. I found the blogs to be quite nicely written and they can serve as a good refresher to the experienced data scientist as well. However, feel free to skip them if you are already well-versed with these concepts. So without further ado, let’s dive right in.

亲爱的读者,希望你们一切都好。 我最近参加了由我的组织(Deloitte)举行的AWS DeepRacer锦标赛,今天我将分享与这个有趣而激动人心的活动相关的经验。 对于不熟悉AWS DeepRacer的人,这是一个虚拟的自动赛车联盟,参与者需要使用强化学习来训练车辆机器人。 受过训练的机器人被部署在特定的赛道上,单圈时间最少的机器人获胜。 这些机器人使用AWS SageMaker和AWS RoboMaker等托管服务专门针对AWS基础设施进行了培训。 因此,DeepRacer服务提供了一种有趣且引人入胜的方法,可让您轻松掌握强化学习的概念,而不会被过多的技术困扰。 在整篇文章中,我将嵌入指向我在研究过程中遇到的博客的链接,以解释相关概念。 我发现这些博客写得相当好,它们对于经验丰富的数据科学家也可以起到很好的提神作用。 但是,如果您已经熟悉这些概念,请随时跳过它们。 因此,事不宜迟,让我们直接深入。

First, let me show you my final model and how it performed. I will be referring to this video throughout my post so that it is easily relatable.

首先,让我向您展示我的最终模型及其性能。 我将在我的整个帖子中都引用此视频,以使其易于关联。

As you can see, the bot gets three trials to complete the lap. To be considered for evaluation, a trained bot needs to complete the lap atleast one out of three times. My bot completed the lap in the very first run while it was unable to do so in the second and third attempts. I achieved a best laptime of 12.945 seconds which gave me a position of #23 out of 58 participants, while the laptime of the bot that held the #1 position was 10.748 seconds i.e., a difference of +2.197 seconds only!!! So although this was a community race and not a professional competition, the level of competition was fierce and the best lap times achieved by the top 50 percentile were very much in line with actual competitions.

如您所见,该机器人进行了三轮试验以完成一圈。 要考虑进行评估,训练有素的机器人需要完成三圈中的至少一圈。 我的机器人在第一次跑步中完成了圈速,而在第二次和第三次尝试中却未能完成。 我获得了12.945秒的最佳单圈时间,这使我在58位参与者中排名第23,而排名第一的机器人的单圈时间为10.748秒,即相差仅+2.197秒! 因此,尽管这是一场社区竞赛,而不是职业竞赛,但是竞赛的水平很激烈,前50%的最佳单圈时间与实际比赛非常吻合。

This brings to my next question — how to build such a model? I went from zero to full deployment in literally 2 days, so this is definitely not rocket science. So let’s take it from the start.

这带来了我的下一个问题-如何建立这样的模型? 我几乎从两天的时间就从零部署到了全面部署,因此这绝对不是火箭科学。 因此,让我们从头开始。

Firstly, the entire DeepRacer competition is based on reinforcement learning. Details of reinforcement learning can be found here and here. Simply put, a reinforcement learning is a machine learning model that trains an agent (in this case, the vehicle bot) to undertake complex behaviors without any labeled training data by making it take short term actions (in this case, driving around the circuit) while optimizing for a long term goal (in this case, minimizing lap completion time). This differs significantly from supervised learning techniques. In fact, I found the process of reinforcement learning to be quite similar to how humans learn through trial and error.

首先,整个DeepRacer竞赛都是基于强化学习。 强化学习的细节可以在这里和这里找到。 简而言之,强化学习是一种机器学习模型,它通过使代理商采取短期行动(在这种情况下,绕电路行驶)来训练代理商(在这种情况下,是汽车机器人)采取复杂的行为而没有任何标记的训练数据。同时针对长期目标进行优化(在这种情况下,将单圈完成时间降至最短)。 这与监督学习技术大不相同。 实际上,我发现强化学习的过程与人类通过反复试验学习的过程非常相似。

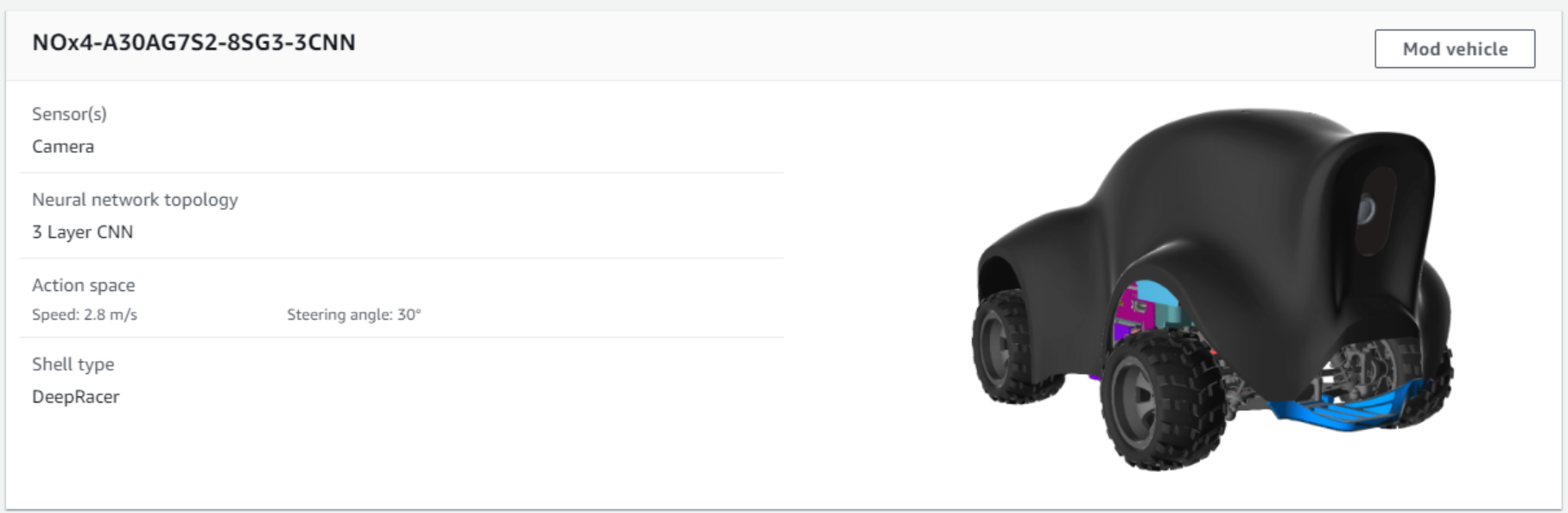

This brings to my second point — how does the model learn? Well, at the heart of every reinforcement learning model is something called as a neural network. A neuron takes one or more inputs and produces an output. It does this using an activation function (more about this here). However a single neuron is limited — it cannot perform complex logical operations alone. For complex operations, we use multiple layers of neurons (called as neural networks). A 3-layer network is easier to train than a 5-layer network but the latter will be able to perform more complex optimizations due to the additional layers. DeepRacer allows you to choose between 3-layer CNNs (convoluted neural networks) and 5-layer CNNs and I experimented with both. However, given that I had just 48 hours to train my model, I observed my 3-layer CNN to perform better than my 5-layer CNN. This is how my vehicle bot looked like below. It had a max speed of 2.8m/s, max steering angle of 30 degrees and only 1 camera as a sensor. These parameters are critical to how the bot performs and needs to be chosen through trial and error.

这是我的第二点-模型如何学习? 好吧,每个强化学习模型的核心都是所谓的神经网络。 神经元接受一个或多个输入并产生输出。 它使用激活功能来完成此操作( 此处有更多信息)。 但是,单个神经元是有限的 -它不能单独执行复杂的逻辑运算。 对于复杂的操作,我们使用多层神经元(称为神经网络)。 3层网络比5层网络更容易训练,但是由于增加了5层网络,后者将能够执行更复杂的优化。 DeepRacer允许您在3层CNN(卷积神经网络)和5层CNN之间进行选择,我对此进行了实验。 但是,考虑到我只有48个小时来训练模型,我观察到3层CNN的性能要好于5层CNN。 这是我的车辆机器人的外观,如下所示。 它的最大速度为2.8m / s,最大转向角为30度,只有1个摄像机作为传感器。 这些参数对于机器人的性能至关重要,需要通过反复试验来选择。

Based on the speed and steering angle constraints defined above, the bot was allowed the below combination of parameters. This is also known as the action space of the bot. Anytime during the race, the bot will choose one of the below action points in order to optimize its short term goals and long term objectives.

根据上面定义的速度和转向角约束,机器人可以使用以下参数组合。 这也称为机器人的动作空间。 在比赛中的任何时候,机器人都会选择以下动作点之一,以优化其短期目标和长期目标。

Third — once the bot was ready, I needed to define a short term and long term objective for the bot. This is done by defining a reward function. A sample function is shown below:

第三-机器人准备就绪后,我需要为机器人定义短期和长期目标。 这是通过定义奖励函数来完成的。 示例函数如下所示:

import math

def reward_function(params):

'''

Use square root for center line

'''

# Read input parameters

track_width = params['track_width']

distance_from_center = params['distance_from_center']

speed = params['speed']

progress = params['progress']

all_wheels_on_track = params['all_wheels_on_track']

MIN_SPEED_THRESHOLD = 2

reward = 1 - (distance_from_center / (track_width/2))**(4)

if reward < 0:

reward = 0

'''

assign a cost to time

'''

if speed < MIN_SPEED_THRESHOLD:

reward *= 0.8

'''

dis-incentivize off-tracking

'''

if not(all_wheels_on_track):

reward = 0

'''

reward completion

'''

if progress ==100:

reward *= 100return float(reward)As you can see, I used a reward function that rewarded 3 behaviors — higher speeds, staying on track and 100% completion. I reused the reward function mentioned in this post and tweaked it to suit my purpose. Once the reward function was defined, I submitted the model to AWS SageMaker for training. This is how my model scaled (explained below).

如您所见,我使用了奖励函数来奖励3种行为-更快的速度,保持原样和100%的完成率。 我重复使用在此提到的奖励功能后和调整以适合我的目的。 定义奖励功能后,我将模型提交给AWS SageMaker进行培训。 这就是我的模型缩放的方式(下面说明)。

The above graph is an example of a model that has converged well. The bot went over 3000+ iterations around the circuit. The left side of the graph shows that in the beginning, the model was not able to complete the track and received low rewards. It took 3000 iterations (approx) to gradually teach itself to complete the track. Once the bot learnt this, it retained this learning and started maximizing the reward by improving the average percentage completion and the average speed (see right side of the graph above). Fascinating, isn’t it?

上图是一个很好收敛的模型示例。 该机器人在电路上进行了3000多次迭代。 图的左侧显示,在开始时,该模型无法完成跟踪并获得低回报。 花了3000次迭代(大约)来逐步自学以完成曲目。 一旦机器人了解到了这一点,它就会继续学习并开始通过提高平均完成率和平均速度来最大化回报(参见上图的右侧)。 令人着迷,不是吗?

Fourthly, once a model is ready it needs to be evaluated and improved. For example, the drawback of this particular model was low average speed. This model took around 18 seconds to complete the entire track although it was completing the race consistently. However such a high completion time will not fetch a good result in actual competitions. Hence AWS exposes different methods that can be used to improve the average speed of the vehicle. One of the most commonly used ones is waypoint. In fact, my best model (the one with 12.945 seconds laptime) used waypoints too. If you are interested about waypoints, go through the sample examples here and here.

第四,一旦模型准备就绪,就需要对其进行评估和改进。 例如,该特定模型的缺点是平均速度低。 尽管持续完成比赛,但该模型花费了大约18秒才能完成整个赛道。 但是,如此高的完成时间将无法在实际比赛中取得良好的成绩。 因此,AWS公开了可用于提高车辆平均速度的不同方法。 最常用的一种是航点。 实际上,我最好的模型(单圈时间为12.945秒)也使用了航路点。 如果您对航路点感兴趣,请在此处和此处浏览示例示例。

In general, a simpler reward function is usually a good reward function. Don’t try to create a very complex reward function at the very outset. Instead, create a simple function, train the model, then clone it and make incremental changes to the reward function and retrain the model again. Keep repeating this until the model improves to desired levels.

通常,较简单的奖励功能通常是良好的奖励功能。 不要一开始就尝试创建非常复杂的奖励函数。 相反,创建一个简单的函数,训练模型,然后克隆它,对奖励函数进行增量更改,然后再次训练模型。 继续重复此操作,直到模型提高到所需的水平。

Finally a word of caution — training the models are not free. I incurred an AWS bill of $400 for 70–80 hours of training (I was training 3–4 models in parallel for 10 hours. It sure was super addictive to keep “cooking” the models since the improvement was so drastic — I almost felt like Jesse Pinkman from the show “Breaking Bad” until I saw my AWS bill!). I am thankful that Deloitte helped me out by providing extra AWS credits, else it would have been a serious issue. And this made me realize an important lesson in AI governance. Any organization will have a limited monthly cloud budget / run-rate. Thus, sticking to a judicious plan and choosing only your best models for training will help you cut down on additional expenses beyond what is provisioned in the monthly budget, optimize training time for models and improve performance faster. A good way to do this is by carrying out thought experiments to select your best candidate models. In 70–80% cases, you will have a strong intuition on what will not work. Avoid training such models.

最后要提个警告-训练模型不是免费的。 我为70-80小时的培训花费了400美元的AWS账单(我同时对10个小时的3-4个模型进行了培训。由于改进如此剧烈,因此保持“烹饪”模型确实非常容易上瘾—我几乎感觉到就像节目“绝命毒师”中的杰西·平克曼(Jesse Pinkman)一样,直到我看到我的AWS账单!)。 我很感谢Deloitte通过提供额外的AWS积分来帮助我,否则那将是一个严重的问题。 这使我意识到了AI治理的重要一课。 任何组织的每月云预算/运行率都是有限的。 因此,坚持明智的计划并仅选择最佳的培训模型将有助于您减少每月预算之外的额外开支,优化模型的培训时间并更快地提高性能。 做到这一点的一个好方法是进行思想实验,以选择最佳的候选模型。 在70-80%的情况下,您将对无法解决的问题有很强的直觉。 避免训练此类模型。

I trained over 30 CNN models in 2 days. If I can do it, you can too. All the best and happy learning!

我在2天内训练了30多个CNN模型。 如果我能做到,你也可以。 祝您学习愉快!

aws课程

这是一篇关于AWS DeepRacer课程的介绍,涵盖了强化学习的基础知识,并简要探讨了AI治理的重要性。通过aws课程,学习者可以深入理解Reinforcement Learning 101,并接触AI管治的初步概念。

这是一篇关于AWS DeepRacer课程的介绍,涵盖了强化学习的基础知识,并简要探讨了AI治理的重要性。通过aws课程,学习者可以深入理解Reinforcement Learning 101,并接触AI管治的初步概念。

1395

1395

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?