Engineers tend to love good stories so hopefully, our 5-year journey of moving towards API composition with GraphQL now in production (serving at peak 110 requests per second at 100ms latency) provides a good story.

工程师倾向于喜欢那些好故事,因此,我们希望通过使用GraphQL进行API组合的5年历程(现在,每秒处理110个峰值请求,并以100ms的延迟提供服务)提供了一个很好的故事。

[If you’re in a hurry, scroll down to Lessons learned and check out the open-sourced graphql-schema-registry.]

[如果您急着,请向下滚动至“ 经验教训”,并查看开源的graphql-schema-registry 。]

我们的要求 (Our Requirement)

For years, Pipedrive (which hit 10 years near the beginning of 2020) has had a public REST API, as well as hidden, undocumented endpoints for our webapp — one of which is /users/self, that was initially for loading user information but over time became a page-load API, composed of 30 different entity types. It originated in our PHP monolith which by nature is synced. We tried to split it into parallel threads but that didn’t work out so well.

多年来,Pipedrive(到2020年初已达到10年)拥有一个公共REST API ,以及我们的Web应用程序的隐藏的,未记录的终结点-其中一个是/ users / self,最初用于加载用户信息,但随着时间的流逝,它变成了由30种不同实体类型组成的页面加载API。 它起源于我们PHP整体,其本质上是同步的。 我们试图将其拆分为并行线程,但是效果不是很好。

From a maintenance point of view, with every new change, it became messier as nobody wanted to own this enormous endpoint.

从维护的角度来看,每进行一次新更改,由于没有人希望拥有这个庞大的端点,因此变得更加混乱。

直接DB访问PoC (Direct DB Access PoC)

Let's go back in time to when our devs experimented with graphql.

让我们回到过去,当我们的开发人员尝试使用graphql时。

About 3–4 years ago in the marketplace team, I began hearing new terms like “elixir” and “graphql” from Pavel, our fullstack engineer. He was involved in a proof-of-concept project which directly accessed MySQL and exposed the /graphql endpoint for querying core Pipedrive entities.

大约3-4年前,在市场团队中,我开始从我们的全栈工程师Pavel听到新词,例如“ elixir ”和“ graphql”。 他参与了一个概念验证项目,该项目直接访问MySQL,并公开了/ graphql端点以查询核心Pipedrive实体。

It worked fine in dev, but it wasn’t scalable because our backend was not only CRUD and nobody wanted to rewrite the monolith.

它在开发人员中运作良好,但无法扩展,因为我们的后端不仅是CRUD,而且没人愿意重写整体。

拼接PoC (Stitching PoC)

Fast forward to 2019 when I saw another internal PoC by a colleague that used GraphQL schema stitching with graphql-compose and makes requests to our REST API. As you can imagine, this was a major improvement since we wouldn’t need to re-implement all of the business logic and it was just a wrapper.

快进到2019年,当我看到一位同事的另一个内部PoC时,该同事使用GraphQL架构缝制了graphql-compose并向我们的REST API发出了请求。 可以想象,这是一个重大改进,因为我们不需要重新实现所有业务逻辑,而仅仅是包装。

The downsides in this PoC were:

此PoC的缺点是:

Performance. It didn’t have dataloader, so it had a potential N+1 API call problem. It didn’t limit query complexity and it didn’t have any intermediate caching. On average, the latency was higher than with the monolith.

表现 。 它没有数据加载器 ,因此存在潜在的N + 1 API调用问题。 它并没有限制查询的复杂性,也没有任何中间缓存。 平均而言,等待时间要比整体等待时间长。

Schema management. With schema stitching, we need to define a schema in the single repo, separate from an actual service that serves data. This complicates deployment, as we would need intermediate backward-compatible deployments to avoid crashes in case service changes.

模式管理。 使用模式缝合,我们需要在单个存储库中定义一个模式,与提供数据的实际服务分开。 这使部署变得复杂,因为我们需要中间的向后兼容部署,以避免在服务更改时崩溃。

制备 (Preparation)

In October 2019, I started to prepare for the mission that would move the previous PoC into production, but with a new Apollo federation that came out the same year. This would also land into a core team that would maintain the service through the long-term.

在2019年10月,我开始为将先前的PoC进入生产任务做准备,但一个新的阿波罗联盟出来的同一年。 这也将落入一个核心团队,该团队将长期维持服务。

收集开发人员的期望 (Gathering developer expectations)

Internally, some developers were skeptical and suggested building an in-house API composition by stitching REST API URLs and their payloads into a single POST request and relying on an internal gateway to split requests on the backend.

在内部,一些开发人员对此表示怀疑,并建议通过将REST API URL及其有效负载缝合到单个POST请求中并依靠内部网关在后端拆分请求来构建内部API组合。

Some saw graphql as still too raw to adopt in production & keep a status-quo. Some suggested exploring alternatives, like Protobuf or Thrift, and using transport conventions like GRPC, OData.

一些人认为graphql仍太原始而无法在生产中采用并保持现状。 一些建议建议探索替代方案(例如Protobuf或Thrift) ,并使用传输约定(例如GRPC , OData) 。

Conversely, some teams went full throttle and already had graphql in production for individual services (insights, teams), but couldn’t reuse other schemas (like User entity). Some (leads) used a typescript + relay which still needed to figure out how to federate.

相反,一些团队全力以赴,已经在生产单个服务(见解,团队)的graphql,但是无法重用其他模式(例如用户实体)。 一些( 领导 )使用了打字稿+ 中继 ,仍然需要弄清楚如何联合 。

Researching new tech was exciting: Strict, self-describing API for frontend developers? Global entity declaration and ownership that forces the reduction of duplication and increases transparency? A gateway that could automatically join data from different services without over-fetching? Wow.

对新技术的研究令人兴奋:针对前端开发人员的严格,自我描述的API? 全球实体的声明和所有权可以减少重复并提高透明度? 一个网关可以自动连接来自不同服务的数据而不会过度获取? 哇。

I knew that we needed schema management as a service to not rely on a hard-coded one and have visibility on what’s happening. Something like Confluent’s schema-registry or Atlassian’s Braid, but not Kafka-specific or written in Java, which we didn’t want to maintain.

我知道我们需要模式管理作为一种服务,而不是依赖于一个硬编码的服务,并且可以了解正在发生的事情。 诸如Confluent的架构注册表或Atlassian的Braid之类的东西 ,但不是Kafka特有的或用Java编写的,我们不想维护。

计划 (The plan)

I pitched an engineering mission that focused on 3 goals:

我提出了一项工程任务,着重于3个目标:

- Reducing initial page load time (pipeline view) by 15%. Achievable by joining some REST API calls into a single /graphql request 将初始页面加载时间(管道视图)减少了15%。 通过将一些REST API调用加入单个/ graphql请求中可实现

- Reducing API traffic by 30%. Achievable by moving deal loading for the pipeline view to graphql and requesting fewer properties. 将API流量减少30%。 通过将管道视图的交易负载移至graphql并请求较少的属性即可实现。

Using strict schema in API (for the frontend to write less defensive code)

I was lucky to get 3 awesome experienced developers to join the mission, including a PoC author.

我很幸运能邀请3位杰出的经验丰富的开发人员加入其中,其中包括PoC作者。

The original plan for the services looked like this:

服务的原始计划如下所示:

The schema-registry here would be a generic service that could store any type of schema you throw at it as an input (swagger, typescript, graphql, avro, proto). It would also be smart enough to convert an entity to whatever format you want for the output. The gateway would poll schema and call services that own it. Frontend components would need to download schema and use it for making queries.

这里的schema-registry将是一种通用服务,可以存储您向其抛出的任何类型的schema作为输入( swagger ,typescript,graphql,avro,proto)。 将实体转换为所需的输出格式也足够聪明。 网关将轮询模式并调用拥有它的服务。 前端组件将需要下载架构并将其用于查询。

In reality however, we implemented only graphql because federation only need this and we ran out of time pretty quickly.

但是实际上,我们仅实现了graphql,因为联邦只需要它,而且我们很快就用光了时间。

结果 (Results)

The main goal of replacing messy /users/self endpoint in the webapp was done within the first 2 weeks of the mission 😳 (yay!). Polishing it so that it’s performant and reliable, took most of the mission time though.

替换Web应用程序中混乱的/ users / self端点的主要目标是在任务😳的前两周内完成的(是的!)。 对其进行抛光以使其性能可靠,但这却占用了大部分任务时间。

By the end of the mission (in February 2020), we did achieve 13% initial page load time reduction and 25% on page reload (due to introduced caching) based on the synthetic Datadog test that we used.

到任务结束时(2020年2月),基于我们使用的综合Datadog测试,我们确实实现了13%的初始页面加载时间减少和25%的页面重新加载时间(由于引入了缓存)。

We did not reach the goal of traffic volume reduction, because we didn’t reach the refactoring pipeline view in webapp — we still use REST there.

我们没有达到减少流量的目标,因为我们没有达到webapp中的重构管道视图-我们仍然在那里使用REST。

To increase adoption we added internal tooling to ease federation process and recorded onboarding videos for teams to understand how it works now. After the mission ended, IOS and Android clients also migrated to graphql and the teams gave positive feedback.

为了增加采用率,我们添加了内部工具来简化联盟流程,并录制了入门视频,供团队了解它现在的工作方式。 任务结束后,IOS和Android客户也迁移到了graphql,并且团队给予了积极的反馈。

得到教训 (Lessons learned)

Looking back at the mission log that I kept for all of the 60 days, I can outline the biggest issues so that you don’t make the same mistakes

回顾我过去60天的任务日志,我可以概述最大的问题,以免您犯同样的错误

管理您的架构 (Manage your schema)

Could we have built this ourselves? Maybe, but it wouldn’t be as polished.Mark Stuart, Paypal Engineering

我们可以自己建造吗? 也许可以,但不会那么完美。 Paypal工程公司的Mark Stuart

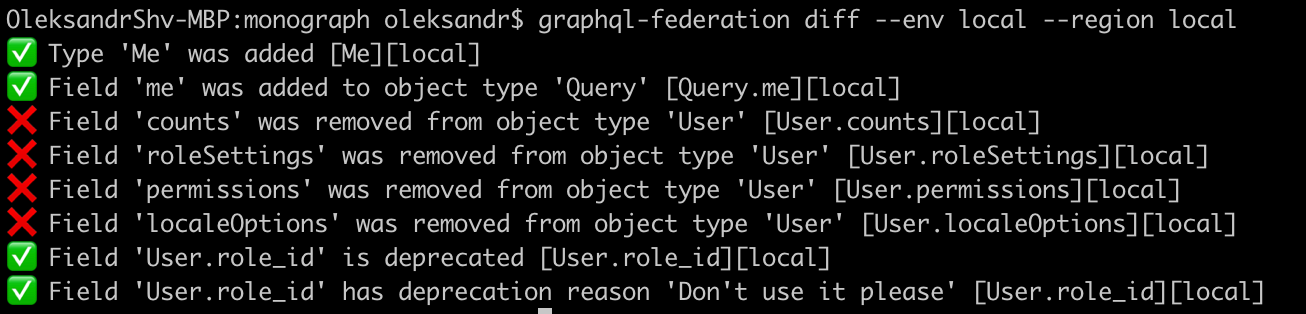

Through the first couple of days, I tried Apollo studio & its tooling for CLI to validate schema. The service is excellent and works out-of-the-box with minimal gateway configuration.

在最初的几天里,我尝试使用Apollo studio及其CLI的工具来验证模式。 该服务非常出色,并且开箱即用,网关配置最少。

As well as it works, I still felt that tying core backend traffic to an external SaaS is too risky for business continuity, regardless of its great features or pricing plans. This is why we wrote a service of our own, with basic functionality — now it’s an open-source graphql-schema-registry.

尽管它能正常工作,但我仍然认为将核心后端流量与外部SaaS捆绑在一起对于业务连续性来说风险太大,无论其功能或定价计划如何。 这就是为什么我们编写具有基本功能的自己的服务的原因-现在它是开源的graphql-schema-registry 。

The second reason to have in-house schema-registry was to follow Pipedrive’s distributed datacenter model. We don’t rely on centralized infrastructure, every datacenter is self-sufficient. This gives us higher reliability as well as a potential advantage in case we need to open a new DC in places like China, Russia, Iran, or Mars 🚀

内部拥有模式注册表的第二个原因是遵循Pipedrive的分布式数据中心模型 。 我们不依赖于集中式基础架构,每个数据中心都是自给自足的。 如果我们需要在中国,俄罗斯,伊朗或火星等地开设新的DC,这将给我们带来更高的可靠性以及潜在的优势🚀

版本化您的架构 (Version your schema)

Federated schema and graphql gateway are very fragile. If you have type name collision or invalid reference in one of the services and serve it to the gateway, it won’t like it.

联合模式和graphql网关非常脆弱。 如果您在其中一项服务中有类型名称冲突或无效引用,然后将其提供给网关,它将不会喜欢它。

By default, a gateway’s behavior is to poll services for their schemas so it's easy for one service to crash the traffic. Apollo studio solves it by validating schema on-push and rejecting registration if it causes possible conflict.

默认情况下,网关的行为是轮询服务的模式,因此一个服务很容易使流量崩溃。 Apollo studio通过验证模式推送并解决注册问题(如果可能引起冲突)来解决此问题。

The validation concept is the right way to go, but the implementation also means that Apollo studio is a stateful service that holds current valid schema. It makes the schema registration protocol more complex and dependent on time, which can be somewhat hard to debug in case of rolling deploys.

验证概念是正确的方法,但是该实现也意味着Apollo studio是一个具有当前有效架构的有状态服务。 它使模式注册协议更加复杂并且依赖于时间 ,在滚动部署的情况下可能很难调试。

In contrast, we tied the service version (based on docker image hash) to its schema. Service registers schema also in runtime, but we do it once, without constant pushing. The gateway takes federated services from service discovery (consul) and asks schema-registry to /schema/compose, providing their services’ versions.

相反,我们将服务版本(基于docker image hash )绑定到其架构。 服务也在运行时注册架构,但是我们只进行一次,而无需不断推送 。 网关从服务发现( consul )获取联合服务,并向/ schema / compose请求架构注册表,以提供其服务的版本 。

If schema-registry sees that the provided set of versions is not stable, it falls back to the last registered versions (which are used for schema validation on commit, so should be stable).

如果schema-registry看到所提供的版本集不稳定,则会回退到最后注册的版本(用于提交时进行模式验证的版本,因此应该是稳定的)。

Services can serve both REST and Graphql APIs, so we resort to alerts in case schema registration fails, keeping service operational for REST to work.

服务可以同时服务于REST和Graphql API,因此我们会在模式注册失败的情况下诉诸警报,以保持服务正常运行以使REST正常工作。

基于现有REST定义架构并不是那么简单 (Defining schema based on existing REST is not so straightforward)

Since I didn’t know how to convert our REST API to graphql, I tried openapi-to-graphql, but our API reference didn’t have sufficiently detailed swagger documentation to cover all inputs/outputs at the time.

由于我不知道如何将REST API转换为graphql,因此尝试了openapi-to-graphql ,但是我们的API参考没有足够详细的草率文档,无法涵盖当时的所有输入/输出。

Asking each team to define schema would take so much time that we just defined the schema for main entities ourselves 😓 based on REST API responses.

要求每个团队定义架构将花费大量时间,以至于我们只是基于REST API响应为自己定义主要实体的架构。

Doing this came back to haunt us later, when it turned out that some of the REST API depended on the client that makes requests OR had different response format depending on some business logic/state.

后来,当发现某些REST API依赖于发出请求的客户端或根据某些业务逻辑/状态具有不同的响应格式时,这样做又困扰了我们。

For example, custom fields affect API response depending on the customer. If you add a custom field to a deal, it will be served as a hash on the same level as deal.pipeline_id. Dynamic schema is not possible with graphql federation, so we had to work-around that by moving custom fields into a separate property.

例如, 自定义字段会根据客户影响API响应。 如果您向交易添加自定义字段,则该字段将用作与Deal.pipeline_id相同级别的哈希 。 使用graphql联合无法实现动态模式,因此我们不得不通过将自定义字段移到单独的属性中来解决此问题。

Another long-term issue is naming conventions. We wanted to use camelCase, but since most of REST used snake_case, we ended up with a mix for now.

另一个长期的问题是命名约定 。 我们想使用camelCase ,但是由于大多数REST使用snake_case ,所以现在就结束了。

CQRS和缓存 (CQRS and cache)

The Pipedrive data model isn’t simple enough to rely only on a TTL-cache.

Pipedrive数据模型还不够简单,无法仅依赖TTL缓存。

For example, if a support engineer creates a global message about maintenance from our backoffice, he also expects it to be immediately shown to customers. Those global messages can be shown to all customers or can affect specific users or companies. Breaking such cache needs 3 layers.

例如,如果支持工程师在我们的后台创建了有关维护的全局消息 ,那么他也希望将其立即显示给客户。 这些全局消息可以显示给所有客户 ,也可以影响特定的用户或公司 。 打破这种缓存需要3层。

To handle php monolith with graphql in async mode, we have created a nodejs service (called monograph) which caches everything php responded into memcached. This cache has to be cleaned from PHP depending on business-logic making it a bit of an anti-pattern of tightly-coupled cross-service cache.

为了在异步模式下使用graphql处理php整体,我们创建了一个nodejs服务(称为monograph ),该服务将php响应的所有内容都缓存到memcached中。 必须根据业务逻辑从PHP中清除此缓存,这使其成为紧密耦合的跨服务缓存的反模式。

You can see the CQRS pattern here. Such cache makes it possible to speed-up 80% of requests and get avg latency to the same as php-app, while still having strict schema and no overfetching

您可以在此处看到CQRS模式 。 这样的缓存可以加快80%的请求速度,并获得与php-app相同的平均延迟,同时仍具有严格的架构且不会过度提取

Another complication is the customer’s language. Changing this affects so many different entities — from activity types to google map views and to make things worse, the user language is not managed by php-app anymore, its in identity service — and I didn’t want to couple even more services to a single cache.

另一个问题是客户的语言 。 更改此设置会影响许多不同的实体-从活动类型到Google地图视图,更糟糕的是,用户语言不再由php-app( 身份验证服务)管理,并且我不想将更多服务耦合到单个缓存。

Identity owns user information, so it emits a change event that monograph listens to and clears its caches. This means that there is some delay (maybe max ~1 sec) between changing language and getting caches cleared, but it's not critical, because a customer won’t navigate away from a page so fast to notice the old language still in the cache.

身份拥有用户信息,因此它发出一个更改事件, 专着会监听并清除其缓存。 这意味着在更改语言和清除缓存之间存在一定的延迟(最长约1秒钟),但这并不重要,因为客户不会快速离开页面以注意到旧语言仍保留在缓存中。

追踪成效 (Track performance)

Performance was the main goal and to reach that goal we had to master APM and distributed tracing between microservices to see what is the slowest area. We used Datadog during this time and it showed the main issues.

性能是主要目标,要实现该目标,我们必须掌握APM和微服务之间的分布式跟踪,以了解最慢的区域。 在此期间,我们使用了Datadog,它显示了主要问题。

We also used memcached to cache all 30 parallel requests. The problem (shown on this picture) displays that purple requests to memcached for some of the resolvers throttled up to 220ms, whereas the first 20 requests saved data in 10ms. This was because I used the same mcrouter host for all of the requests. Rotating hosts reduced caching write latency to 20 ms max.

我们还使用了memcached来缓存所有30个并行请求。 该问题(如图所示)显示,某些解析器对memcached的紫色请求被限制在220毫秒内,而前20个请求在10毫秒内保存了数据。 这是因为我对所有请求都使用了同一个微型计算机主机。 旋转主机可将缓存写入延迟减少到最大20毫秒。

To reduce latency due to network traffic, we got data from memcached with a single batch request for different resolvers using getMulti and a 5 ms debounce.

为了减少由于网络流量引起的延迟,我们使用getMulti和5 ms的反跳,通过针对不同解析器的单个批处理请求从memcached获取数据。

Notice also the yellow bars on the right — that’s the graphql gateway’s tax on making data strictly typed after all data is resolved. It grows with the amount of data you transfer.

还要注意右边的黄条-这是graphql 网关对在解析所有数据后严格键入数据的征税 。 它随您传输的数据量而增长。

We needed to find the slowest resolvers because the latency of the entire request depends entirely on them. It was pretty infuriating to see 28 out of 30 resolvers reply in 40ms time.. and 2 of the APIs taking 500ms, because they didn’t have any cache.

我们需要找到最慢的解析器,因为整个请求的延迟完全取决于它们。 十分高兴地看到30个解析器中有28个在40毫秒内回复..其中2个API占用了500毫秒,因为它们没有任何缓存。

We had to move some of these endpoints out of the initialization query to create better latency. So we actually make 3–5 separate graphql queries from frontend, depending on when query is requested (also with some debounce logic).

我们必须将其中一些端点移出初始化查询,以创建更好的延迟。 因此,实际上我们根据前端的查询时间来进行3-5个独立的graphql查询(还带有一些反跳逻辑)。

不跟踪效果(在生产中) (Do not track performance (in production))

The counter-intuitive clickbait heading here actually means that you should avoid using APMs in production for graphql gateway or apollo server’s built-in tracing:true.

实际上,这里的违反直觉的clickbait标题意味着您应避免在生产中为graphql网关或apollo服务器的内置tracing:true使用APM。

Both turning on and removing them resulted in a 2x latency reduction from 700ms to 300ms for our test company. The reason at the time (as I understand) was that the time functions (like performance.now()) that measure spans for every resolver are too CPU-intensive.

对于我们的测试公司来说,打开和删除它们都将延迟时间从700ms减少到300ms的2倍。 当时的原因(据我了解)是,测量每个解析器的跨度的时间函数(例如performance.now() )占用的CPU过多。

Ben here did a nice benchmark of different backend servers and confirmed this.

Ben在这里对不同的后端服务器做了很好的基准测试,并确认了这一点。

考虑在前端进行预取 (Consider prefetching on frontend)

The timing of graphql query on a frontend is tricky. I wanted to move the initial graphql request as early as possible (before vendors.js) in the network waterfall. Doing this granted us some time, but it made the webapp much less maintainable.

在前端进行graphql查询的时间很棘手。 我想在网络瀑布中尽早(在vendors.js之前)移动初始graphql请求。 这样做给了我们一些时间,但是却使Webapp的可维护性大大降低了。

To make the query, you need graphql client and gql literal parsing and these typically would come via vendors.js. Now you would either need to bundle them separately or make a query with raw fetch. Even if you do make a raw request, you’ll need to manage the response gracefully so that the response gets propagated into the correct models, (but those are initialized until later). So it made sense to not continue with this and maybe resort to server-side-rendering or service workers in the future.

要进行查询,您需要graphql客户端和gql文字解析 ,这些解析通常通过vendors.js进行。 现在,您要么需要将它们单独捆绑在一起,要么使用raw fetch进行查询。 即使您确实提出了原始请求,也需要优雅地管理响应,以使响应传播到正确的模型中(但这些模型将被初始化直到以后)。 因此,有意义的是不要继续这样做,将来可能会诉诸于服务器端渲染或服务工作者 。

评估查询复杂度 (Evaluate query complexity)

What makes graphql unique from REST is that you can estimate how complicated a client’s request is going to be for your infrastructure before processing it. This is based purely on what he requests and how you define execution costs for your schema. If the estimated cost is too big, we reject the request, similar to our rate limiting.

使graphql在REST中独树一帜的原因是,您可以估计在处理该请求之前,针对您的基础结构的客户端请求的复杂程度。 这完全基于他的要求以及如何定义架构的执行成本。 如果估算费用太大,我们将拒绝请求,这与我们的速率限制类似。

We first tried graphql-cost-analysis library but ended up creating our own because we wanted logic to take into account pagination multipliers, nesting, and impact types (network, I/O, DB, CPU). Though the hardest part is injecting custom cost directive into gateway & schema-registry. I hope we can opensource it too in the near future.

我们首先尝试了graphql-cost-analysis库,但最终创建了我们自己的库,因为我们希望逻辑考虑分页系数,嵌套和影响类型(网络,I / O,DB,CPU)。 尽管最困难的部分是将自定义Cost指令注入网关和架构注册表。 我希望我们也可以在不久的将来将其开源。

模式有很多面Kong (Schema has many faces)

Working with schema in js/typescript on a low level is confusing. You figure it out when you try to integrate federation into your existing graphql service.

在较低级别使用js / typescript中的模式会造成混淆。 当您尝试将联合身份验证集成到现有的graphql服务中时,便会弄清楚。

For example, plain koa-graphql and apollo-server-koa setups expect a nested GraphQLSchema param that includes resolvers, but federated apollo/server wants schema to be passed separately:

例如,普通的koa-graphql和apollo-server-koa设置期望包含解析器的嵌套GraphQLSchema参数,但联合的apollo / server 希望单独传递模式 :

buildFederatedSchema([{typeDefs, resolvers}])

buildFederatedSchema([{{typeDefs,解析器}])

In another case, you may want to define schema as an inline gql tag string or store it as schema.graphql file, but when you want to do cost evaluation, you may need it as ASTNode (parse / buildASTSchema).

在另一种情况下,您可能希望将模式定义为内联gql标签字符串或将其存储为schema.graphql文件,但是当您要进行成本评估时,可能需要将其作为ASTNode ( parse / buildASTSchema )。

金丝雀逐步推出 (Gradual canary rollout)

During the mission, we did a gradual rollout to all internal developers first to catch obvious errors.

在执行任务期间,我们首先向所有内部开发人员进行了逐步推广,以发现明显的错误。

By the end of the mission, in February, we released graphql only to 100 lucky companies. We then slowly rolled it out to 1000 — 1%, 10%, 30%, 50%, and finally 100% of the customers finishing it in June.

到任务结束时,在2月,我们仅向100家幸运公司发布了graphql。 然后,我们慢慢地将其推广到1000个用户,分别是1%,10%,30%,50%,最后有100%的客户在6月完成了该过程。

The rollout was based on company ID and modulo logic. We also had allow- and deny-lists for test companies and for cases when developers didn’t want their companies to have graphql on yet. We also had an emergency off-switch that would revert it, which was handy during incidents to ease debugging

部署基于公司ID和模逻辑。 我们还为测试公司以及开发人员不希望其公司使用graphql的情况提供了允许和拒绝列表。 我们还有一个紧急关闭开关,可以将其还原,这在事件发生期间非常方便,可以简化调试

Considering how big of a change we did, it was a great way to get feedback and find bugs, while having lower risks for our customers.

考虑到我们所做的改变有多大,这是一种获得反馈和发现错误的好方法,同时对我们的客户来说风险更低。

希望与梦想 (Hopes & dreams)

To get all of the graphql benefits, we need to adopt mutations, subscriptions, and batch operations on a federated level. All of this needs teamwork & internal evangelism to expand the number of federated services.

为了获得所有graphql的好处,我们需要在联合级别采用突变, 订阅和批处理操作。 所有这些都需要团队合作和内部传福音,以扩大联盟服务的数量。

Once graphql is stable and sufficient enough for our customers, it can become version 2 of our public API. A public launch would need directives to limit entity access based on OAuth scopes (for marketplace apps) and our product suites.

一旦graphql稳定并足以满足我们的客户需求,它就可以成为我们公共API的版本2。 公开发布需要基于OAuth范围 (针对市场应用)和我们的产品套件来限制实体访问的指令。

For schema-registry, we need tracking clients, and getting usage analytics for better deprecation experience, filtering/highlighting of schemas’ costs & visibility, naming validation, managing non-ephemeral persisted queries, public schema change history.

对于模式注册,我们需要跟踪客户端,并获得使用情况分析,以获得更好的弃用体验, 过滤 /突出显示模式的成本和可见性, 命名验证 ,管理非临时性持久查询 ,公共模式更改历史记录 。

As we have services in go, it's unclear how internal communication should happen — over GRPC for speed & reliability, or individual graphql endpoints, or via centralized internal graphql gateway? If GRPC is better only due to its binary nature, could we make graphql binary instead with msgpack?

正如我们在服务去 ,目前还不清楚应该如何沟通内部发生-在GRPC速度和可靠性或个人graphql终点,或者通过集中内部graphql网关? 如果GRPC是更好的不仅是因为它的二进制性质,我们可以让graphql的二进制,而不是msgpack ?

As for the outside world, I hope Apollo’s roadmap with project Constellation will optimize Query planner in Rust so that we don’t see that 10% gateway tax on performance, as well as enable flexible federation of services without their knowledge.

对于外部世界,我希望Apollo的Constellation项目路线图能够优化Rust中的 查询计划程序 ,以便我们不会看到对性能征收 10%的网关税 ,并在他们不知情的情况下实现灵活的服务联合。

Exciting times to enjoy software development, full of complexity!

享受软件开发的激动人心的时刻,充满了复杂性!

翻译自: https://medium.com/pipedrive-engineering/journey-to-federated-graphql-2a6f2eecc6a4

766

766

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?