mr和mapreduce

重点 (Top highlight)

When do I need to use MapReduce? How can I translate my jobs to Map, Combiner, and Reducer?

什么时候需要使用MapReduce? 如何将我的工作翻译为Map,Combiner和Reducer?

MapReduce is a programming technique for manipulating large data sets, whereas Hadoop MapReduce is a specific implementation of this programming technique.

MapReduce是用于处理大型数据集的编程技术,而Hadoop MapReduce是此编程技术的特定实现。

Following is how the process looks in general:

以下是该过程的总体外观:

Map(s) (for individual chunk of input) ->

- sorting individual map outputs ->

Combiner(s) (for each individual map output) ->

- shuffle and partition for distribution to reducers ->

- sorting individual reducer input ->

Reducer(s) (for sorted data of group of partitions)Hadoop的MapReduce概述 (Hadoop’s MapReduce In General)

Hadoop MapReduce is a framework to write applications that process enormous amounts of data (multi-terabyte) in-parallel on large clusters (thousands of nodes) of commodity hardware in a reliable, fault-tolerant manner.

Hadoop MapReduce是一个框架,用于编写应用程序,该应用程序以可靠,容错的方式并行处理大型硬件集群(数千个节点)上的大量数据(多TB)。

A typical MapReduce job:

典型的MapReduce作业:

- splits the input data-set into independent data sets 将输入数据集拆分为独立的数据集

each individual dataset is processed in parallel by the map tasks

地图任务 并行处理每个单独的数据集

then the framework sorts the outputs of maps,

然后框架对地图的输出进行排序 ,

this output is then used as input to the reduce tasks

然后将此输出用作归约任务的输入

In general, both the input and the output of the job are stored in a file-system.

通常,作业的输入和输出都存储在文件系统中。

The Hadoop MapReduce framework takes care of scheduling tasks, monitoring them, and re-execution of the failed tasks.

Hadoop MapReduce框架负责安排任务,监视任务以及重新执行失败的任务。

Generally, the Hadoop’s MapReduce framework and Hadoop Distribution File System (HDFS) run on the same nodes, which means that each node is used for computing and storage both. The benefit of such a configuration is that tasks can be scheduled on the nodes where data resides and thus results in high aggregated bandwidth across the cluster.

通常,Hadoop的MapReduce框架和Hadoop分发文件系统(HDFS)在同一节点上运行,这意味着每个节点都用于计算和存储 。 这种配置的好处是,可以在数据所驻留的节点上调度任务,从而在整个群集中产生较高的聚合带宽。

The MapReduce framework consists of:

MapReduce框架包括:

a single master

ResourceManager(Hadoop YARN),单个主

ResourceManager(Hadoop YARN),one worker

NodeManagerper cluster-node, and每个群集节点一个工人

NodeManager,以及MRAppMasterper application每个应用程序

MRAppMaster

The resource manager keeps track of compute resources, assigns them to specific tasks, and schedules jobs across the cluster.

资源管理器跟踪计算资源,将其分配给特定任务,并在整个集群中调度作业。

In order to configure a MapReduce job, at minimum, an application specifies:

为了至少配置MapReduce作业 ,应用程序应指定:

- input source and output destination 输入源和输出目的地

- map and reduce function 映射和归约功能

A job along with its configuration is then submitted by the Hadoop’s job client to YARN, which is then responsible for distributing it across the cluster, schedules tasks, monitors them, and provides their status back to the job client.

然后, 作业及其配置由Hadoop的作业客户端提交给YARN,然后YARN负责在整个群集中分配作业,计划任务,监视任务并将其状态提供回作业客户端。

Although the Hadoop framework is implemented in Java, MapReduce applications need not be written in Java. We can use mrjobs Python package to write MapReduce jobs that can be run on Hadoop or AWS.

尽管Hadoop框架是用Java实现的,但MapReduce应用程序不必用Java编写。 我们可以使用mrjobs Python包编写可在Hadoop或AWS上运行的MapReduce作业。

MapReduce作业的输入和输出 (Inputs And Outputs Of MapReduce Jobs)

For both input and output, the data is stored in key-value pairs. Each key and value class has to be serializable by the MapReduce framework and thus should implement the Writable interface. Apart from this, the key class needs to implement WritableComparable the interface as well which is required for sort mechanism.

对于输入和输出, 数据都存储在键值对中 。 每个键和值类都必须由MapReduce框架进行序列化,因此应实现Writable接口。 除此之外,键类还需要实现WritableComparable接口,这是排序机制所必需的。

映射器 (The Mapper)

A Mapper is a task which input key/value pairs to a set of output key/value pairs (which are then used by further steps). The output records do not need to be of the same type as that of input records, also an input pair may be mapped to zero or more output pairs.

Mapper是一项将键/值对输入到一组输出键/值对的任务,然后将其用于其他步骤。 输出记录不必与输入记录具有相同的类型,输入对也可以映射到零个或多个输出对。

All values associated with a given output key are subsequently grouped by the framework and passed to the Reducer(s) to determine the final output. The Mapper outputs are sorted and then partitioned per Reducer. The total number of partitions is the same as the number of reduce tasks for the job.

随后,与给定输出键关联的所有值都由框架进行分组,并传递到Reducer以确定最终输出。 对Mapper输出进行排序,然后按Reducer进行分区。 分区总数与作业的reduce任务数相同。

随机和排序阶段 (Shuffle & Sort Phases)

The output of individual mapper output is sorted by the framework.

各个映射器输出的输出按框架排序。

Before feeding data to reducers, the data from all mappers is partitioned by some grouping of keys. Each partition contains data from one or more keys. The data for each partition is sorted by keys. The partitions are then distributed to reducers. Each reducer input data is data from one or more partitions (generally 1:1 ratio).

在将数据提供给reducer之前,所有映射器的数据都通过一些键分组进行分区。 每个分区包含来自一个或多个键的数据。 每个分区的数据均按键排序。 然后将分区分配给减速器。 每个化简器输入数据是来自一个或多个分区(通常为1:1比例)的数据。

减速器 (The Reducer)

A Reducer reduces a set of intermediate values (output of shuffle and sort phase) which share a key to a smaller set of values.

Reducer减少一组中间值(随机和排序阶段的输出),这些中间值共享一组较小值的密钥。

In the reducer phase, the reduce method is called for each <key, (list of values)> pair in the grouped inputs. Note that the output of Reducer is not sorted.

在reducer阶段,对分组输入中的每个<key, (list of values)>对调用reduce方法。 请注意, Reducer的输出未排序。

The right number of reducers are generally between 0.95 and 1.75 multiplied by <no. of nodes> * <no. of maximum containers per node>.

减速器的正确数量通常在0.95和1.75之间乘以<no. of nodes> * <no. of maximum containers per node> <no. of nodes> * <no. of maximum containers per node> <no. of nodes> * <no. of maximum containers per node> 。

合路器 (The Combiner)

We can optionally specify a Combiner (know as local-reducer) to perform local aggregation of the intermediate outputs, which helps to cut down the amount of data transferred from the the Mapper to Reducer. In many cases, the same reducer code can be used as a combiner as well.

我们可以选择指定一个Combiner (称为local-reducer)对中间输出执行本地聚合,这有助于减少从Mapper传递给Reducer的数据量。 在许多情况下,相同的reducer代码也可以用作组合器。

让我们看一个简单的例子 (Let’s look at a simple example)

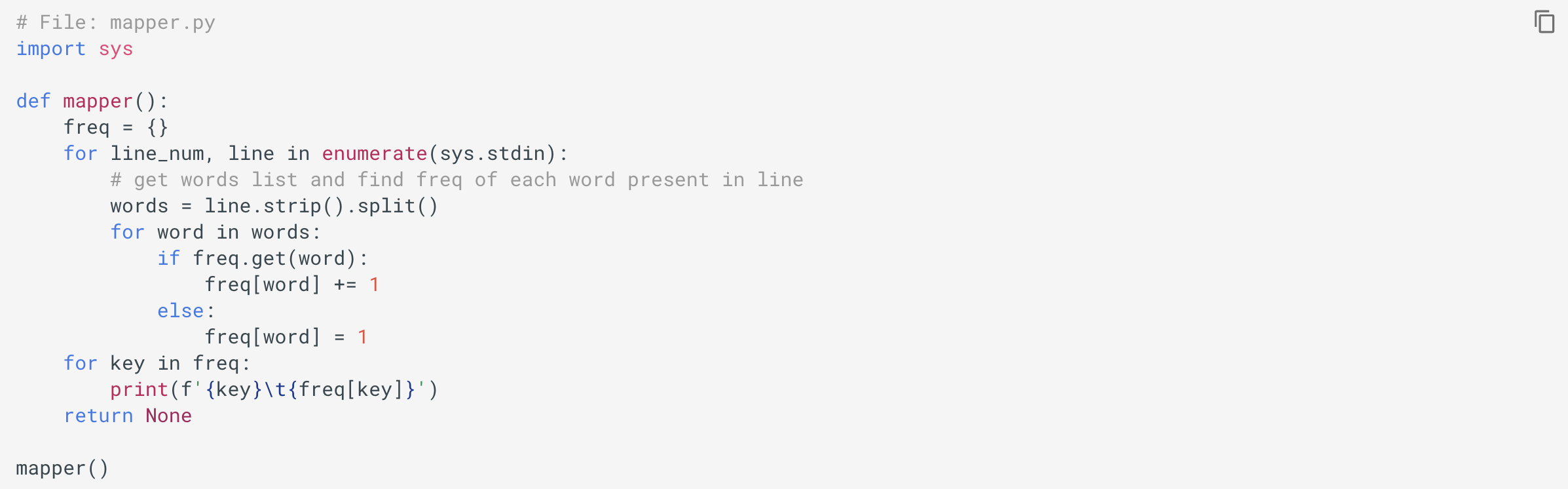

Let’s look at a simple example of counting word frequencies. Consider the following mapper.py file:

让我们看一个简单的单词频率计数示例。 考虑以下mapper.py文件:

and following reducer.py file:

以及以下reducer.py文件:

We can locally test them as:

我们可以在本地对其进行测试,如下所示:

可是等等! 先前的实现有问题... (But Wait! The previous implementation has a problem…)

The previous mapper code is memory intensive as it has to maintain a dictionary of the frequency of all of the unique words present chunk of input for each individual mapper. If memory is a concern even for dict involving individual chunks of inputs, then the better way is to let it just print each word as we encounter (with its frequency as 1). For example,

先前的映射器代码是内存密集型的,因为它必须维护每个映射器的输入块中所有唯一词的出现频率的字典。 如果即使对于涉及单个输入块的dict ,内存也是一个问题,那么更好的方法是让它仅在遇到时打印每个单词(其频率为1 )。 例如,

Now, let’s use new mapper with the same input data:

现在,让我们使用具有相同输入数据的新映射器:

If it helps, we can also use a combiner (same as reducer code) for local aggregation from each mapper output.

如果有帮助,我们还可以使用组合器(与简化器代码相同)从每个映射器输出进行本地聚合。

Partitioner和Comparator详细信息 (Details on Partitioner And Comparator)

A Partitioner partitions the data, which it does by essentially partitioning the “key space”.

Partitioner实际上是通过对“键空间”进行分区来对数据进行分区的。

Partitioner controls the partitioning of the keys of the intermediate map-outputs. The key (or a subset of key) is used to derive the partition, typically by a hash function. The total number of partitions is the same as the number of reduce tasks for the job. Hence this controls which of the m reduce tasks the intermediate key (and hence the record) is sent to for reduction.

分区程序控制中间映射输出的键的分区。 密钥(或密钥的子集)通常用于通过散列函数来得出分区。 分区总数与作业的reduce任务数相同。 因此,这控制了将中间键(以及记录)发送到m还原任务中的哪一个还原任务。

HashPartitioner is the default Partitioner. You would need to use different partitioner (or your custom one) if you need to partition data by multiple keys.

HashPartitioner是默认的Partitioner 。 如果需要通过多个键对数据进行分区,则需要使用其他分区程序(或自定义分区程序)。

Hadoop has a library class, KeyFieldBasedPartitioner which allows the MapReduce framework to partition the map outputs based on certain key fields, not the whole keys. For example, -D mapreduce.partition.keypartitioner.options=-k1,2.

Hadoop有一个库类KeyFieldBasedPartitioner ,该类允许MapReduce框架根据某些键字段(而不是整个键)对地图输出进行分区。 例如, -D mapreduce.partition.keypartitioner.options=-k1,2 。

We can control the grouping by specifying a Comparator.

我们可以通过指定Comparator来控制分组。

Hadoop has a library class, KeyFieldBasedComparator, that provides a subset of features provided by Unix/GNU sort function. For example, -D mapreduce.partition.keycomparator.options=-k2,2nr.

Hadoop有一个库类KeyFieldBasedComparator ,该类提供Unix / GNU sort功能提供的功能的子集。 例如, -D mapreduce.partition.keycomparator.options=-k2,2nr 。

分布式缓存 (The DistributedCache)

The DistributedCache, provided by the MapReduce framework, efficiently distributes large read-only files as cache needed by applications.

MapReduce框架提供的DistributedCache有效地将大型只读文件分发为应用程序所需的缓存。

配置参数 (The Configuration Parameters)

We can get job configuration options through environment variables. When we launch the MapReduce application, the framework will assign data to available workers. We can access this data from our scripts. For instance, if we are running a mapper, then we can access the information about the file and slides we are working on. Also, we can get the information on whether we are running a mapper or reducer, which can be important if we are running the same script on the map and reduce phase. We can also access task id within the map or reduce phase with the following environment variables: mapreduce_task_id, mapreduce_task_partition.

我们可以通过环境变量获取作业配置选项。 当我们启动MapReduce应用程序时,该框架会将数据分配给可用的工作程序。 我们可以从脚本中访问这些数据。 例如,如果我们正在运行映射器,则可以访问有关正在处理的文件和幻灯片的信息。 此外,我们还可以获得有关是否正在运行映射程序或化简器的信息,如果我们在映射上运行相同的脚本并缩减阶段,这可能非常重要。 我们还可以使用以下环境变量访问地图中的任务ID或简化阶段: mapreduce_task_id , mapreduce_task_partition 。

The Configured Parameters are localized in the job configuration for each task’s execution. During the execution of a streaming job, the names of the “mapred” parameters are transformed. The dots (.) becomes underscores (_). For example, mapreduce.job.id becomes mapreduce_job_id. In your code, use the parameter names with the underscores.

已配置的参数在作业配置中本地化,以执行每个任务。 在执行流作业期间,将转换“ mapred”参数的名称。 点( . )变成下划线( _ )。 例如, mapreduce.job.id变为mapreduce_job_id 。 在您的代码中,将参数名称与下划线一起使用。

Updating status in streaming applications:

流应用程序中的更新状态:

A streaming process can use the stderr to emit status information. To set a status reporter:status:<message> should be sent to stderr.

流处理过程可以使用stderr发出状态信息。 要设置状态reporter:status:<message>应将其发送到stderr 。

捆绑的映射器,精简器和分区 (Bundled Mappers, Reducers, and Partitions)

Hadoop MapReduce comes bundled with a library of generally useful mappers, reducers, and partitioners.

Hadoop MapReduce捆绑了一个包含通常有用的映射器,精简器和分区器的库 。

Here are some related interesting stories that you might find helpful:

以下是一些相关的有趣故事,您可能会觉得有帮助:

Apache Cassandra — Distributed Row-Partitioned Database for Structured and Semi-Structured Data

Gain Access to Expert View — Subscribe to DDI Intel

获得访问专家视图的权限- 订阅DDI Intel

翻译自: https://medium.com/datadriveninvestor/the-why-and-how-of-mapreduce-17c3d99fa900

mr和mapreduce

894

894

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?