How are containers in a pod load-balanced?

We all strive to build resilient and self-healing applications, but occasionally we make a mistake and have to restart one. Hopefully we will find time to fix the underlying problem, but until then we may need manual intervention. In this article, we look at what happens when we delete a Kubernetes (K8s) pod while it is serving live traffic. We can then apply this knowledge to our operations so we don't affect our customers' experience.

Pod Lifecycle

First, let's understand what actually happens when a pod is deleted.

When a pod is deleted, Kubernetes sends up to two signals to the main process in each of its containers: first SIGTERM and then, if the process is still running, SIGKILL. SIGTERM is like asking the process nicely to shut down, whereas SIGKILL stops it immediately. We can listen for SIGTERM and tidy up any resources we are using, such as database and other connections. Applications should not shut down instantly when they receive SIGTERM. Instead, they should stop accepting new requests and wait for existing requests to finish. If any background tasks are running, the process should also wait for them to finish before exiting.
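As a minimal sketch of that last point, assuming a plain Node.js process (the runtime used in the implementation section), a small tracker can record running background tasks, refuse new ones once SIGTERM arrives, and let the process exit only after the running ones have settled. `BackgroundTasks`, `run`, `drain` and `exitAfterDrain` are hypothetical names for illustration, not part of any library or of the original article.

```javascript
// Hypothetical helper: tracks running background tasks so a SIGTERM handler
// can refuse new work and wait for the running tasks before the process exits.
class BackgroundTasks {
  constructor() {
    this.pending = new Set(); // promises for tasks that have not settled yet
    this.accepting = true;    // set to false once shutdown starts
  }

  // Start a task (a function returning a value or a promise) unless shutting down.
  run(taskFn) {
    if (!this.accepting) {
      return Promise.reject(new Error('shutting down: task rejected'));
    }
    const p = Promise.resolve().then(taskFn);
    const forget = () => this.pending.delete(p);
    p.then(forget, forget); // drop the task from the set whether it succeeds or fails
    this.pending.add(p);
    return p;
  }

  // Stop accepting new tasks, then resolve once every running task has settled.
  async drain() {
    this.accepting = false;
    await Promise.allSettled([...this.pending]);
  }
}

// Wire the tracker to SIGTERM: stop taking work, wait for it, then exit cleanly.
// `proc` defaults to the real process; it is a parameter only to ease testing.
function exitAfterDrain(tasks, proc = process) {
  proc.once('SIGTERM', async () => {
    await tasks.drain();
    proc.exit(0);
  });
}
```

At startup you would create one tracker, start background work through `tasks.run(...)`, and call `exitAfterDrain(tasks)` once.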

So far we have only mentioned SIGTERM. If the application takes too long to exit after receiving this signal, K8s then sends SIGKILL. In an ideal setup this never happens, because the application will already have exited gracefully. If it does happen, chances are that requests will have been interrupted and customers will be unhappy. You can configure how long K8s waits before sending SIGKILL with the pod's terminationGracePeriodSeconds setting (spec.terminationGracePeriodSeconds, which defaults to 30 seconds).
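One way to keep SIGKILL from ever arriving is for the application to bound its own clean-up time a little below the grace period, and exit when either draining finishes or that deadline passes. The sketch below assumes the default 30-second terminationGracePeriodSeconds and an arbitrary 5-second safety margin; `drainWithDeadline` and the constant names are hypothetical.

```javascript
// Assumed values: the pod's terminationGracePeriodSeconds (30 s by default)
// minus a hypothetical safety margin, so the app exits before SIGKILL is sent.
const GRACE_PERIOD_MS = 30 * 1000;
const SAFETY_MARGIN_MS = 5 * 1000;
const SHUTDOWN_DEADLINE_MS = GRACE_PERIOD_MS - SAFETY_MARGIN_MS;

// Wait for `drain` (a promise that settles when clean-up is done) but give up
// after `timeoutMs`. Resolves to 'drained', 'failed' or 'timeout'.
function drainWithDeadline(drain, timeoutMs) {
  let timer;
  const deadline = new Promise((resolve) => {
    timer = setTimeout(() => resolve('timeout'), timeoutMs);
  });
  const drained = Promise.resolve(drain).then(
    () => 'drained',
    () => 'failed' // a failed clean-up should still let the process exit
  );
  // Whichever settles first wins; clear the timer so it cannot keep the process alive.
  return Promise.race([drained, deadline]).finally(() => clearTimeout(timer));
}
```

In a SIGTERM handler you would `await drainWithDeadline(somePromise, SHUTDOWN_DEADLINE_MS)` and then call `process.exit()`. Exiting on `'timeout'` still cuts off slow work, but at a moment the application chooses; if you raise terminationGracePeriodSeconds in the pod spec, `GRACE_PERIOD_MS` should be raised to match.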

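If the application is part of a K8s service or has ingress enabled, a pod that is being deleted is also taken out of traffic: it is removed from the service's endpoints, so new requests stop being routed to it while it handles SIGTERM. This removal happens in parallel with the signal rather than strictly before it, so a pod may still receive a few requests just after SIGTERM arrives, which is one more reason not to exit the moment the signal is received. By doing this, K8s allows us to present a seamless experience to our customers while still allowing the underlying infrastructure to change.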

Deleting a pod is not the only way pods get removed. When you release a new version of your application or scale down, pods also go through this lifecycle. It is important to configure your service correctly; otherwise, some customer requests may fail. For a more in-depth look, check out the Kubernetes documentation on the pod lifecycle.

Replicas

Most of this article assumes that there is more than one instance of the service running. This is done in K8s by using a replica set or, better yet, a deployment which will create a replica set for you. If you manually deploy a single pod and then delete it, your service will go down and won't come back up. If a service is running through a replica set but with only one pod, the service will become unavailable after deleting the pod. The replica set will then automatically bring up a new pod to replace the deleted one, and your service will become available again.

By running a minimum of two pods for every service, we get around this problem. When we delete one of the pods, K8s sends the traffic seamlessly to the other pods. Running two pods as standard is a good idea anyway, as there is always a chance that an application may crash; having at least one backup pod gives you the redundancy needed to keep the service available in that scenario. If you do decide to run only one pod for a service and need to delete it, consider scaling up to two pods, deleting the pod, and then scaling back down.

When moving pods around in K8s, it is important to think about the capacity the service needs. If we delete a pod, will there still be enough pods to handle all the requests while we wait for a new one to start? Take this into account when configuring the number of pods or autoscaling. You might want to run n+1 pods, where n is the number needed to handle peak load, so that one pod can be unavailable without the service running short. You may also want to check your deployment's update strategy: by default (a rolling update with maxUnavailable and maxSurge both set to 25%), up to a quarter of the desired pods can be unavailable during a rollout, which may leave a service under capacity. For more information on this, check out my other article, How to Stop Kubernetes From DOSing Your Application During Deployment.
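The n+1 reasoning and the effect of the default 25% maxUnavailable can be checked with a little arithmetic. This is a sketch with hypothetical helper names; the peak load and per-pod throughput are numbers you would measure for your own service. It relies on Kubernetes rounding a percentage maxUnavailable down to a whole number of pods.

```javascript
// n+1 sizing: enough pods for peak load (n), plus one spare that may be unavailable.
function replicasForPeak(peakRps, rpsPerPod) {
  const n = Math.ceil(peakRps / rpsPerPod);
  return n + 1;
}

// Kubernetes converts a percentage maxUnavailable into a pod count by rounding
// down, so this is the minimum number of pods kept available during a rollout.
function minAvailableDuringRollout(replicas, maxUnavailablePercent = 25) {
  const maxUnavailable = Math.floor((replicas * maxUnavailablePercent) / 100);
  return replicas - maxUnavailable;
}

// Does the worst moment of a rolling update still cover peak load?
function coversPeakDuringRollout(replicas, rpsPerPod, peakRps, maxUnavailablePercent = 25) {
  return minAvailableDuringRollout(replicas, maxUnavailablePercent) * rpsPerPod >= peakRps;
}
```

For example, with an assumed peak of 900 requests per second and 200 per pod, `replicasForPeak(900, 200)` gives 6. During a default rollout, 6 pods keep at least 5 available (1,000 requests per second), whereas 5 pods would drop to 4 (800 requests per second) and run short.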

Rather than deleting specific pods, we could roll out a change to the deployment. Instead of changing our application's source code to trigger an update, we can use K8s labels: updating the deployment called load-app so that its pod template gets a new or updated restarted-at label causes a rolling update of our pods, creating new pods with the updated label. Setting the label's value to the timestamp at which the update was made also serves auditing purposes. This rolling update abides by the deployment strategy, keeping enough pods for the workload.
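As a sketch of that idea, assuming kubectl is available, the snippet below builds a strategic merge patch that sets the restarted-at label on the pod template of load-app (changing the template is what triggers the rolling update) and prints the matching `kubectl patch` command. The helper names are hypothetical. It uses epoch seconds because label values may contain only alphanumerics, '-', '_' and '.', which rules out an ISO timestamp. kubectl 1.15 and later also offer `kubectl rollout restart deployment/load-app`, which triggers the same kind of rollout through a pod-template annotation instead of a label.

```javascript
// Build a patch that sets the restarted-at label on the Deployment's pod template.
// Labels on the Deployment object itself would not trigger a rollout.
function restartPatch(now = new Date()) {
  // Label values cannot contain ':', so use epoch seconds rather than an ISO string.
  const epochSeconds = String(Math.floor(now.getTime() / 1000));
  return {
    spec: { template: { metadata: { labels: { 'restarted-at': epochSeconds } } } },
  };
}

// Render the equivalent kubectl command (kubectl patch defaults to a strategic merge patch).
function restartCommand(deployment, now = new Date()) {
  return `kubectl patch deployment ${deployment} -p '${JSON.stringify(restartPatch(now))}'`;
}

console.log(restartCommand('load-app'));
```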

Health Checks

Health checks are useful when an application crashes or stops responding, as K8s can then restart it. They are also useful in other scenarios, as discussed below.

First, let's understand the different types of health check in K8s. The two that matter here are readiness and liveness checks (probes). The liveness check verifies that the process is up and running; if it fails, K8s restarts the container (keeping the same pod). The readiness check verifies that the pod can serve requests; if the pod is part of a service or has ingress enabled and its readiness check fails, K8s takes the pod out of traffic until the check passes again.

The readiness check is the one that matters when deleting pods. Interestingly, this is not because of the pod being deleted but because of the new one that takes its place. If there is no readiness check, the new pod is put into traffic straight away. Even simple apps take some time to start up, and requests could be sent to the pod during this window; because the app is still booting, those requests will fail. This is easily fixed by adding a readiness check that requests an endpoint which always returns a status code of 200: since the endpoint can only respond once the app is up and listening, the pod is not put into traffic before then.
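On the application side, the health endpoints can be as small as the Node.js sketch below. The `/healthz` (liveness) and `/ready` (readiness) paths, the `state` object and the helper names are assumptions; the pod spec's `httpGet` livenessProbe and readinessProbe would need to point at whatever paths you choose, and Kubernetes treats any status from 200 to 399 as a pass. Here `/ready` succeeds once the server is listening, which is the property the readiness check relies on; any extra start-up work could be awaited before setting `state.ready`.

```javascript
const http = require('http');

// Hypothetical readiness flag; set once the server is listening (and, if needed,
// after any extra start-up work such as warming caches has finished).
const state = { ready: false };

// Decide how to answer a probe request. Returns null for non-probe paths.
function probeStatus(path, s) {
  if (path === '/healthz') return 200;               // liveness: the process can answer
  if (path === '/ready') return s.ready ? 200 : 503; // readiness: able to serve traffic
  return null;
}

// Start an HTTP server that answers probes itself and passes other requests to `handler`.
function startServer(port, handler) {
  const server = http.createServer((req, res) => {
    const status = probeStatus(req.url, state);
    if (status === null) {
      handler(req, res);
      return;
    }
    res.writeHead(status, { 'Content-Type': 'text/plain' });
    res.end(status === 200 ? 'ok\n' : 'not ready\n');
  });
  server.listen(port, () => {
    state.ready = true;
  });
  return server;
}
```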

Implementation in Node.js

If your application uses a Node.js server, you can make sure all requests finish before the application exits by registering a listener for SIGTERM whose callback calls server.close(). The close function stops the server accepting new connections but allows existing requests to finish before the process exits. Without this code or something similar, the process exits immediately on receiving SIGTERM (or, if Node.js runs as PID 1 in the container, ignores the signal entirely until SIGKILL arrives).
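A minimal sketch of such a handler follows. The server.close() behaviour is standard Node.js; the function names, the example handler and the call to closeIdleConnections() (available from Node.js 18.2, used so idle keep-alive connections do not hold the server open) are illustrative additions, not necessarily the author's original code.

```javascript
const http = require('http');

// Install a SIGTERM listener that stops accepting new connections and lets
// in-flight requests finish before the process exits.
// `proc` defaults to the real process; it is a parameter only to ease testing.
function installGracefulShutdown(server, proc = process) {
  proc.once('SIGTERM', () => {
    // close() stops new connections; its callback runs once existing ones have ended.
    server.close((err) => proc.exit(err ? 1 : 0));
    // Idle keep-alive connections would otherwise keep the server open.
    if (typeof server.closeIdleConnections === 'function') {
      server.closeIdleConnections();
    }
  });
}

// Hypothetical example server wired up for graceful shutdown.
function main(port = 3000) {
  const server = http.createServer((req, res) => {
    res.end('hello\n');
  });
  server.listen(port);
  installGracefulShutdown(server);
  return server;
}
```

Call `main()` once at startup; the handler then runs when Kubernetes sends SIGTERM during pod deletion.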

Demo

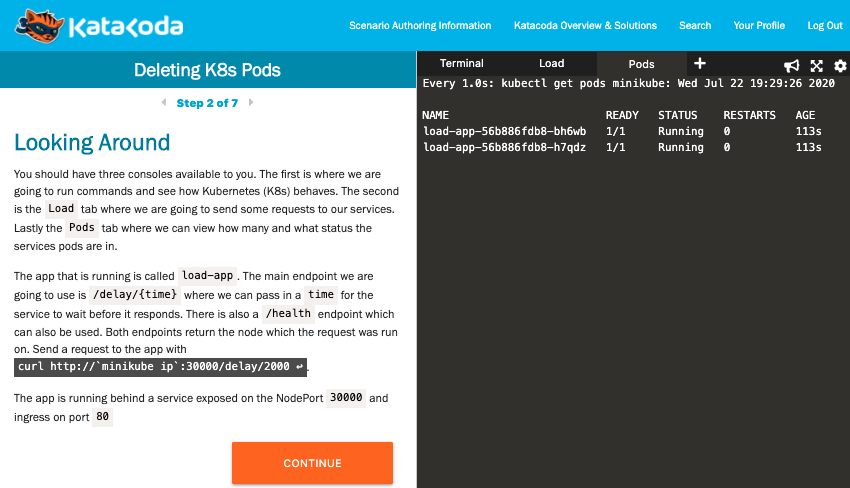

To put everything you have learnt into action and experiment with different scenarios, you can try this Katacoda scenario. Here you can play with a K8s cluster which has an example service deployed to it. The scenario walks you through deleting pods in different ways and watching how customer requests are affected.

This has been a whistle-stop tour of some of the features of Kubernetes which can help you build resilient services. I hope you now have the confidence and knowledge to delete Kubernetes pods without affecting your customers.

Translated from: https://medium.com/better-programming/is-it-safe-to-delete-kubernetes-pods-under-load-83c85d268024