The Platform Approach to AI Monitoring

If you’ve been struggling to get some transparency into your AI models’ performance in production, you’re in good company. Monitoring complex systems is always a challenge. Monitoring complex AI systems, with their built-in opacity, is a radical challenge.

With the proliferation of methods, underlying technologies and use cases for AI in the enterprise, AI systems are becoming increasingly unique. To fit the needs of their business, each data science team ends up building something quite singular; every AI system is a snowflake.

The immense diversity of AI techniques, use cases and data contexts rules out a one-size-fits-all monitoring solution. Autonomous monitoring solutions, for example, are often insufficient because they don’t extend beyond a handful of use cases. Extending or scaling them is hard because monitoring AI systems without user context requires many assumptions about the metrics, their expected behavior, and the possible root causes of changes in them.

An obvious fix is to build autonomous point solutions for each use case, but this won’t scale. So is there a framework or solution designed to work for (almost) everyone?

YES. It’s the Platform Approach.

The Platform Approach to monitoring the black box

The Platform Approach to AI system monitoring is the sweet spot between the generic autonomous approach and the tailored, built-from-scratch approach. On the one hand, the monitoring system is configured according to the unique characteristics of the user’s AI system; on the other hand, it can readily scale to meet new models, solution architectures and monitoring contexts.

This is achieved by implementing common monitoring building blocks that the user then configures on the fly into a tailored, dedicated monitoring solution. This monitoring environment works with any development and deployment stack (and any model type or AI technique). And since the monitoring platform is independent of and decoupled from the stack, it enables swift integration that doesn’t affect development and deployment processes and, even more importantly, monitoring that cuts across all layers in the stack.

The configurability of the system means that data teams aren’t limited to a prefabricated definition of their system: they are actually empowered by an “engineering arm” that gives them full control of the monitoring strategy and execution.

A flexible, adaptable solution

Beyond solving the monitoring problem across the industry, the Platform Approach’s flexibility benefits the user in ways that an industry-specific autonomous solution cannot. This might sound like a counter-intuitive claim, so here are a few examples of the kinds of features that are inherent in an agnostic system and simply unattainable through autonomous solutions, even industry-specific ones:

Monitor by context (or system), not by model. If ground truth (or objective feedback on success or failure) becomes available some time after the model ran, you’d want to correlate the new information with the information captured at inference time. An example of this kind of scenario is a marketing campaign targeting model that receives feedback on the success of the campaign (conversion, CTR, etc.) within a few weeks. In such a use case, we would want the monitoring to tie the model’s predictions to the business outcome.
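
As a rough illustration of tying predictions to delayed feedback, the sketch below joins inference-time records with outcomes that arrive later. The `context_id` key, the `Prediction` record and both helper functions are hypothetical names chosen for this example, not part of any particular monitoring product.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    context_id: str  # hypothetical key shared by inference and feedback records
    score: float     # model's predicted conversion probability


def join_with_outcomes(predictions, outcomes):
    """Pair each prediction with its later outcome, skipping pending ones."""
    return [
        (p.score, outcomes[p.context_id])
        for p in predictions
        if p.context_id in outcomes
    ]


def conversion_rate_by_bucket(joined, threshold=0.5):
    """Observed conversion rate for high-scored and low-scored contexts."""
    buckets = {"high": [], "low": []}
    for score, converted in joined:
        buckets["high" if score >= threshold else "low"].append(converted)
    return {
        name: (sum(values) / len(values) if values else None)
        for name, values in buckets.items()
    }


predictions = [
    Prediction("c1", 0.9), Prediction("c2", 0.8),
    Prediction("c3", 0.2), Prediction("c4", 0.1),
    Prediction("c5", 0.7),  # feedback for c5 has not arrived yet
]
outcomes = {"c1": True, "c2": False, "c3": False, "c4": False}

joined = join_with_outcomes(predictions, outcomes)
rates = conversion_rate_by_bucket(joined)
print(rates)
```

Keying both records on a shared context identifier, rather than on the model, is what lets the same join serve several models running in that context.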

Monitoring by context can also be invaluable if you’re comparing two different model versions running on the same data (in a “Shadow Deployment” scenario).
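
A minimal sketch of such a comparison, assuming both versions log their predictions under a shared request identifier (the identifiers and labels below are invented for illustration):

```python
def disagreement_rate(live, shadow):
    """Share of requests seen by both versions on which predictions differ."""
    shared = live.keys() & shadow.keys()
    if not shared:
        return None
    differing = sum(1 for key in shared if live[key] != shadow[key])
    return differing / len(shared)


# Predictions keyed by a hypothetical request id.
live = {"r1": "pos", "r2": "neg", "r3": "pos", "r4": "neg"}
shadow = {"r1": "pos", "r2": "pos", "r3": "pos", "r4": "neg", "r5": "neg"}

rate = disagreement_rate(live, shadow)
print(rate)
```

Only requests present in both logs are compared, so a request that reached just one version (here `r5`) does not distort the rate.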

If you have multiple models that run in the same data context and perhaps depend on one another, you need to have a way to infer whether the true root cause for a failure in one model relates to the other. For example, in an NLP setting, if your language detection model deteriorates in accuracy, the sentiment analysis model downstream will likely underperform as well.
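
One simple way to approximate this kind of inference, assuming you can declare which models feed which, is to treat an alerting model as a root-cause candidate only when none of its direct upstream models is alerting too. The model names and the `upstream` mapping are hypothetical, and this sketch looks only one hop upstream.

```python
def root_cause_candidates(alerting, upstream):
    """Alerting models none of whose direct upstream models are alerting."""
    return sorted(
        model
        for model in alerting
        if not any(dep in alerting for dep in upstream.get(model, []))
    )


# Hypothetical dependency map: model -> models it consumes output from.
upstream = {
    "sentiment": ["language_detection"],
    "language_detection": [],
}
alerting = {"sentiment", "language_detection"}

candidates = root_cause_candidates(alerting, upstream)
print(candidates)
```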

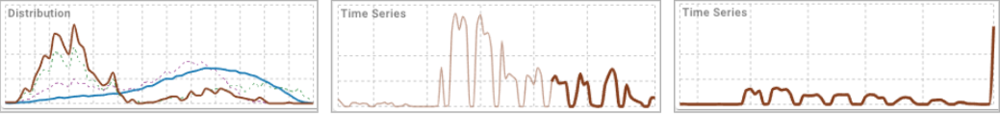

Configure your anomaly detection. Anomalies are always in context, so there are many instances in which an apparent anomaly (even underperformance) is acceptable, and the data scientist can dismiss it. The ability to configure your anomaly detection means that the monitoring solution won’t simply flag generic underperformance; instead, you define which anomalies actually indicate underperformance in your context.

For example: You analyze the sentiment of tweets. The monitoring solution found reduced prediction confidence on tweets below 20 characters (short tweets). You might be okay with this sort of weakness (e.g., if this is in line with customer expectations). Your monitoring solution should then enable you to exclude this segment (short tweets) from the analysis to avoid skewing the measurement for the rest of the data (which is particularly important if short tweets account for a significant part of the data).
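
The exclusion described here could look roughly like the following, where the 20-character cutoff comes from the example above and the records, confidence values and function name are made up for illustration:

```python
def mean_confidence(records, exclude=None):
    """Mean prediction confidence, optionally skipping an excluded segment."""
    kept = [
        confidence
        for text, confidence in records
        if exclude is None or not exclude(text)
    ]
    return sum(kept) / len(kept) if kept else None


def is_short_tweet(text):
    """Segment definition taken from the example: fewer than 20 characters."""
    return len(text) < 20


# (tweet text, prediction confidence) pairs; values are illustrative only.
records = [
    ("ok", 0.25),
    ("meh", 0.25),
    ("This product exceeded my expectations", 0.75),
    ("Terrible support, would not recommend", 0.75),
]

overall = mean_confidence(records)
without_short = mean_confidence(records, exclude=is_short_tweet)
print(overall, without_short)
```

With half the data in the accepted-weak segment, the overall mean understates how the model does on the tweets you actually care about, which is the skew the exclusion removes.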

Track any metric, at any stage. Most commonly, teams track and review model precision and recall (which require labeled data). Even when there’s no such ground truth, there can be many non-trivial indicators worth tracking that could reveal model misbehavior. For example: If you have a text category classifier, you may want to track the relative prevalence of tweets for which no category scored above a certain threshold, as an indicator of low classification confidence. This kind of metric needs to be calculated from the raw results, so it’s non-trivial and won’t be available out of the box.
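
A metric like this can be derived from the classifier’s raw per-category scores. The sketch below assumes each result is a dict mapping category name to score; the categories, scores and the 0.6 threshold are illustrative assumptions.

```python
def low_confidence_rate(score_rows, threshold=0.6):
    """Share of items for which no category scored above the threshold."""
    if not score_rows:
        return None
    low = sum(1 for scores in score_rows if max(scores.values()) <= threshold)
    return low / len(score_rows)


# Raw classifier outputs: one dict of category -> score per tweet.
score_rows = [
    {"sports": 0.90, "politics": 0.05, "tech": 0.05},
    {"sports": 0.40, "politics": 0.35, "tech": 0.25},
    {"sports": 0.10, "politics": 0.20, "tech": 0.70},
    {"sports": 0.34, "politics": 0.33, "tech": 0.33},
]

rate = low_confidence_rate(score_rows)
print(rate)
```

Tracking this rate over time needs no labels, so it can act as an early indicator while ground truth is unavailable.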

In addition, every metric can be further segmented along the many dimensions on which its behavior might change. For example, if your metric is the text classification confidence, then a dimension might be the text’s length or the language in which the text is written.
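
Segmentation of this kind can be sketched as grouping records by a dimension function before averaging the metric. The record fields (`text`, `language`, `confidence`) and the 20-character length bucket are assumptions made for this example.

```python
from collections import defaultdict


def segment_mean(records, metric, dimension):
    """Mean of `metric` for each value returned by the `dimension` function."""
    groups = defaultdict(list)
    for record in records:
        groups[dimension(record)].append(record[metric])
    return {key: sum(values) / len(values) for key, values in groups.items()}


def length_bucket(record):
    """Hypothetical dimension: bucket texts by character count."""
    return "short" if len(record["text"]) < 20 else "long"


records = [
    {"text": "Great game last night, what a finish!", "language": "en", "confidence": 1.0},
    {"text": "ok", "language": "en", "confidence": 0.5},
    {"text": "no sé", "language": "es", "confidence": 0.25},
    {"text": "No estoy seguro de qué pensar sobre esto", "language": "es", "confidence": 0.25},
]

by_language = segment_mean(records, "confidence", lambda r: r["language"])
by_length = segment_mean(records, "confidence", length_bucket)
print(by_language, by_length)
```

Because the dimension is just a function of the record, it can use fields the model never saw as input.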

Furthermore, if you’re just looking at model inputs and outputs, you’re missing out on dimensions that might not be important for your predictions but are essential for Governance. For example: Race and gender aren’t (and should never be) features in your loan algorithm, but they’re important dimensions along which to assess the model’s behavior.

No one knows your AI better than you

A robust monitoring solution that can give you real, valuable insights into your AI system needs to be relevant to your unique technology and context, and flexible enough to accommodate your specific use cases and future development needs.

Such a system should be stack agnostic, so you’re free to use any technology or methodology for production and training. It needs to look at your AI system holistically, so that specific production and performance areas are viewed not as silos but in the context of the overall system. And most importantly, it needs to look at your system against your unique backdrop, which only you can provide and fine-tune. The Platform Approach to AI monitoring is aimed at achieving just that.

Originally published at https://www.monalabs.io on August 12, 2020.

Translated from: https://towardsdatascience.com/the-platform-approach-to-ai-monitoring-dcc43dee6c6e
