ETL Tools: Apache Beam

Many of you might not be familiar with Apache Beam, but trust me, it's worth learning about. In this blog post, I will take you on a journey to understand Beam, build your first ETL pipeline, branch it, and run it locally.

So fasten your seat belts and let's start the journey!

What is Apache Beam?

Apache Beam is a unified programming model that can be used to build portable data pipelines.

Apache Beam is a programming model for building big data pipelines, like many others, but two keywords make it unique: unified and portable.

Unified Nature

The most prominent data processing use cases nowadays are batch processing and stream processing, in which we process historical data and real-time data streams respectively. Engines like Spark and Flink expose different APIs for the two use cases, which ultimately leads to writing the logic twice. Apache Beam handles batch and streaming data in the same way: one Beam API covers both batch and streaming workloads, so we don't need to write separate logic for each.

In this blog post, our focus will be on historical data. The second part will focus on the challenges of streaming data and how Beam handles them.

Portability

In Apache Beam, the runtime layer is clearly separated from the programming layer. As a result, the code we develop does not depend on the underlying execution engine, and once written it can be moved to any execution engine (runner) that Beam supports.

It is worth mentioning that comparing Beam with Spark and Flink is not meaningful, because Beam is a programming model while the other two are execution engines. It also follows that the performance of a Beam pipeline depends directly on the performance of the underlying execution engine.

Architectural Overview:

At a high level, Apache Beam works in the following steps:

- We write the code in any of the supported SDKs.

- The Beam Runner API converts our code into a language-agnostic format. Any language-specific primitives, such as user-defined functions, are resolved by the corresponding SDK worker.

- The final code can be executed on any of the supported runners.

Basic Terminologies:

Let's discuss some of the very basic terminology.

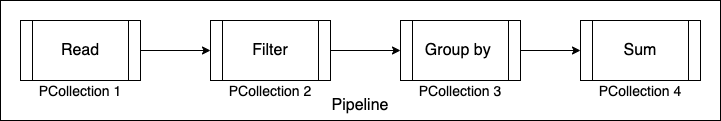

Pipeline: It encapsulates the entire data processing task, from reading data from the source systems, through applying transformations to it, to writing it to the target systems.

P-Collection: It is the distributed dataset that our Beam pipeline operates on (the class is named PCollection in the SDK). It can be seen as the equivalent of RDDs in Spark. P-Collections can hold bounded (historical) as well as unbounded (streaming) data.

It is important to discuss the core properties of a P-Collection at this point:

- Just like Spark RDDs, P-Collections in Beam are immutable: applying a transformation to a P-Collection does not modify it. Instead, it creates a new one.

- The elements in a P-Collection can be of any type, but they must all be of the same type.

- A P-Collection does not support fine-grained operations, which means we cannot apply a transformation to only some specific elements in it. A transform is applied to all elements of the P-Collection.

- Each element in a P-Collection has a timestamp associated with it. For bounded data, the timestamp can be set explicitly by the user or implicitly by Beam. For unbounded data, it is typically assigned by the source.

P-Transform: It represents a data operation performed on a P-Collection (the class is named PTransform in the SDK).

Installation:

You can install Apache Beam on your local system, but for that you have to install Python and the Beam-related libraries and set up some paths, which can be a hassle for many people. So I decided to use Google Colab, an interactive environment that lets you write and execute Python code in the cloud. You will need a Google account for it.

It is important to remember that if the session expires, you have to run all the setup commands again, because a new session might not give you the same virtual machine as the previous one.
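One way to tell whether a fresh session needs the setup again is to check if Beam can still be imported. This is a small sketch, assuming Beam was installed from PyPI under the package name `apache-beam` (import name `apache_beam`); the helper name `beam_is_installed` is made up for illustration.

```python
import importlib.util


def beam_is_installed():
    """Return True if the apache_beam package can be imported in this session."""
    return importlib.util.find_spec("apache_beam") is not None


if __name__ == "__main__":
    if beam_is_installed():
        print("Apache Beam is available in this session.")
    else:
        # In a notebook cell the install command is usually run as: !pip install apache-beam
        print("Apache Beam is missing; install it again with: pip install apache-beam")
```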

Awesome, we are all set to write our ETL pipeline now!

Writing ETL Pipeline:

Let's say our source data sits in a file that contains the employee id, employee name, department number, department name, and present date. From this data, let's say we want to calculate the attendance count of each employee in the Accounts department.

Line 3: creating a Pipeline object to control the life cycle of the pipeline.

Lines 5–7: creating a P-Collection and reading bounded data from a file into it.

Lines 8–11: applying P-Transforms.

Lines 12–15: writing the output to a file and running the pipeline locally.

Line 17: printing the content of the output file.

There are a few other things we need to talk about at this stage:

The | operator that chains the P-Transforms corresponds to the generic .apply() method in the Beam SDK.

After each | we specified a label, in the form 'LABEL' >>, which gives a hint of what that particular P-Transform does. Labels are optional, but if you choose to label your P-Transforms, the labels must be unique within the pipeline.
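If the `|` and `>>` syntax looks like magic, it is only Python operator overloading. The toy classes below are not Beam's implementation; `ToyTransform` and `ToyCollection` are made-up names that merely illustrate how `collection | 'LABEL' >> transform` can be turned into an `apply()` call, and how a duplicate label can be rejected.

```python
class ToyTransform:
    """A stand-in for a P-Transform: wraps a function applied to a whole list."""

    def __init__(self, fn, label=None):
        self.fn = fn
        self.label = label

    def __rrshift__(self, label):
        # Called for 'LABEL' >> transform, because str defines no >> of its own.
        return ToyTransform(self.fn, label)


class ToyCollection:
    """A stand-in for a P-Collection that remembers which labels were used."""

    def __init__(self, items, used_labels=None):
        self.items = list(items)
        self.used_labels = used_labels if used_labels is not None else set()

    def apply(self, transform):
        if transform.label is not None:
            if transform.label in self.used_labels:
                raise ValueError(f"duplicate label: {transform.label!r}")
            self.used_labels.add(transform.label)
        # Return a new collection instead of modifying this one.
        return ToyCollection(transform.fn(self.items), self.used_labels)

    def __or__(self, transform):
        # collection | transform is sugar for collection.apply(transform).
        return self.apply(transform)


double = ToyTransform(lambda items: [x * 2 for x in items])

# >> binds tighter than |, so each label attaches to its transform first.
result = ToyCollection([1, 2, 3]) | "Double" >> double | "DoubleAgain" >> double

if __name__ == "__main__":
    print(result.items)
```

Reusing the label "Double" for the second step would raise an error in this toy, which mirrors the uniqueness rule described above.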

A lambda function is a small, anonymous, one-line function that can take any number of parameters but can have only one expression. I usually recall it through this general form: (result = lambda x, y, z : x*y*z)
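To make the general form concrete, here is the same function written both ways. The names `product` and `product_fn` and the sample record are only for illustration.

```python
# A lambda: any number of parameters, exactly one expression.
product = lambda x, y, z: x * y * z


# The equivalent named function.
def product_fn(x, y, z):
    return x * y * z


# Lambdas are handy as short arguments to transforms, e.g. turning a
# record (a list of fields) into an (employee name, 1) pair.
to_pair = lambda record: (record[1], 1)

if __name__ == "__main__":
    print(product(2, 3, 4))     # 24
    print(product_fn(2, 3, 4))  # 24
    print(to_pair(["E001", "Marco", "10", "Accounts", "01-01-2019"]))  # ('Marco', 1)
```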

The 00000-of-00001 in the file name on Line 17 is the shard suffix: the shard's index followed by the total number of shards. WriteToText() has a num_shards parameter that specifies the number of files written as output. Beam can set this value automatically, or we can set it explicitly.
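The naming pattern can be reproduced in plain Python. This sketch assumes the default shard naming of WriteToText(), where the prefix is followed by a five-digit shard index and a five-digit shard count; the helper `shard_file_names` is a made-up name and is not part of Beam.

```python
def shard_file_names(prefix, num_shards):
    """List the file names expected for a given output prefix and shard count."""
    return [
        f"{prefix}-{index:05d}-of-{num_shards:05d}"
        for index in range(num_shards)
    ]


if __name__ == "__main__":
    print(shard_file_names("data/output", 1))  # ['data/output-00000-of-00001']
    print(shard_file_names("data/output", 3))
```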

Map() takes one element as input and emits one element as output.

The CombinePerKey() transform groups the elements by key and, in our case, sums the values for each key.
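What these two transforms compute can be mimicked on an ordinary list. The sketch below is plain Python, not Beam: `map_elements` and `combine_per_key` are made-up helpers that only show the result, while a real runner may compute it in a distributed and incremental way.

```python
from collections import defaultdict


def map_elements(fn, elements):
    """One output element for every input element, like Map()."""
    return [fn(element) for element in elements]


def combine_per_key(combine_fn, pairs):
    """Group (key, value) pairs by key and combine each group's values."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return {key: combine_fn(values) for key, values in grouped.items()}


names = ["Marco", "Rebekah", "Marco"]
pairs = map_elements(lambda name: (name, 1), names)
counts = combine_per_key(sum, pairs)

if __name__ == "__main__":
    print(pairs)   # [('Marco', 1), ('Rebekah', 1), ('Marco', 1)]
    print(counts)  # {'Marco': 2, 'Rebekah': 1}
```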

Branching Pipeline:

Let's enhance our previous scenario and also calculate the attendance count of each employee in the HR department.

We are effectively branching our pipeline: the same P-Collection is used as the input to multiple P-Transforms. After reading the data into a P-Collection, the pipeline applies multiple P-Transforms to it. In our case, Transform A and Transform B both execute in parallel, filter the data (Accounts or HR), and calculate the attendance counts. Lastly, we can either merge the two results or write them to separate files.

Let's extend our previous code; we will go with merging the results.

Line 4: creating the Pipeline() object. This is another way to create the Pipeline object (in the Python SDK, the with beam.Pipeline() as p: form, which runs the pipeline when the block ends), but we have to take care of the indentation in this case.

Lines 18–22: same as discussed above; only the filtering criterion has changed.

Lines 25–29: Flatten() merges multiple P-Collection objects into a single logical P-Collection, and then we write the combined results to a file.

Lines 34–51: the output of the code, commented out.

Before wrapping up this first part of the blog post, I would like to talk about one more P-Transform that we will be using in Part 2:

ParDo: It takes each element of the input P-Collection, applies a processing function to it, and emits zero, one, or multiple elements. It is similar to the Map phase of the Map/Shuffle/Reduce paradigm.

DoFn: A Beam class that defines the distributed processing function. We subclass it and override its process method, which contains the processing logic to be run in parallel.

In Part 2 of this blog post, we will talk about the following:

- Streaming data processing challenges

- How Apache Beam solves them

Stay tuned!

Translated from: https://medium.com/@arslanmehmood50/writing-etl-pipelines-in-apache-beam-part-1-d945b1adb335
