#include <algorithm>

#include "ivector/agglomerative-clustering.h"

namespace kaldi {

void AgglomerativeClusterer::Cluster() {

KALDI_VLOG(2) << "Initializing cluster assignments.";

Initialize();

KALDI_VLOG(2) << "Clustering...";

// This is the main algorithm loop. It moves through the queue merging

// clusters until a stopping criterion has been reached.

while (num_clusters_ > min_clust_ && !queue_.empty()) {

std::pair<BaseFloat, std::pair<uint16, uint16> > pr = queue_.top();

int32 i = (int32) pr.second.first, j = (int32) pr.second.second;

queue_.pop();

// check to make sure clusters have not already been merged

if ((active_clusters_.find(i) != active_clusters_.end()) &&

(active_clusters_.find(j) != active_clusters_.end()))

MergeClusters(i, j);

}

std::vector<int32> new_assignments(num_points_);

int32 label_id = 0;

std::set<int32>::iterator it;

// Iterate through the clusters and assign all utterances within the cluster

// an ID label unique to the cluster. This is the final output and frees up

// the cluster memory accordingly.

for (it = active_clusters_.begin(); it != active_clusters_.end(); ++it) {

++label_id;

AhcCluster *cluster = clusters_map_[*it];

std::vector<int32>::iterator utt_it;

for (utt_it = cluster->utt_ids.begin();

utt_it != cluster->utt_ids.end(); ++utt_it)

new_assignments[*utt_it] = label_id;

delete cluster;

}

assignments_->swap(new_assignments);

}

BaseFloat AgglomerativeClusterer::GetCost(int32 i, int32 j) {

if (i < j)

return cluster_cost_map_[std::make_pair(i, j)];

else

return cluster_cost_map_[std::make_pair(j, i)];

}

void AgglomerativeClusterer::Initialize() {

KALDI_ASSERT(num_clusters_ != 0);

for (int32 i = 0; i < num_points_; i++) {

// create an initial cluster of size 1 for each point

std::vector<int32> ids;

ids.push_back(i);

AhcCluster *c = new AhcCluster(++count_, -1, -1, ids);

clusters_map_[count_] = c;

active_clusters_.insert(count_);

// propagate the queue with all pairs from the cost matrix

for (int32 j = i+1; j < num_clusters_; j++) {

BaseFloat cost = costs_(i,j);

cluster_cost_map_[std::make_pair(i+1, j+1)] = cost;

if (cost <= thresh_)

queue_.push(std::make_pair(cost,

std::make_pair(static_cast<uint16>(i+1),

static_cast<uint16>(j+1))));

}

}

}

void AgglomerativeClusterer::MergeClusters(int32 i, int32 j) {

AhcCluster *clust1 = clusters_map_[i];

AhcCluster *clust2 = clusters_map_[j];

// For memory efficiency, the first cluster is updated to contain the new

// merged cluster information, and the second cluster is later deleted.

clust1->id = ++count_;

clust1->parent1 = i;

clust1->parent2 = j;

clust1->size += clust2->size;

clust1->utt_ids.insert(clust1->utt_ids.end(), clust2->utt_ids.begin(),

clust2->utt_ids.end());

// Remove the merged clusters from the list of active clusters.

active_clusters_.erase(i);

active_clusters_.erase(j);

// Update the queue with all the new costs involving the new cluster

std::set<int32>::iterator it;

for (it = active_clusters_.begin(); it != active_clusters_.end(); ++it) {

// The new cost is the sum of the costs of the new cluster's parents

BaseFloat new_cost = GetCost(*it, i) + GetCost(*it, j);

cluster_cost_map_[std::make_pair(*it, count_)] = new_cost;

BaseFloat norm = clust1->size * (clusters_map_[*it])->size;

if (new_cost / norm <= thresh_)

queue_.push(std::make_pair(new_cost / norm,

std::make_pair(static_cast<uint16>(*it),

static_cast<uint16>(count_))));

}

active_clusters_.insert(count_);

clusters_map_[count_] = clust1;

delete clust2;

num_clusters_--;

}

void AgglomerativeCluster(

const Matrix<BaseFloat> &costs,

BaseFloat thresh,

int32 min_clust,

std::vector<int32> *assignments_out) {

KALDI_ASSERT(min_clust >= 0);

AgglomerativeClusterer ac(costs, thresh, min_clust, assignments_out);

ac.Cluster();

}

ivector-extractor.cc File Reference

#include <vector>#include "ivector/ivector-extractor.h"#include "util/kaldi-thread.h"

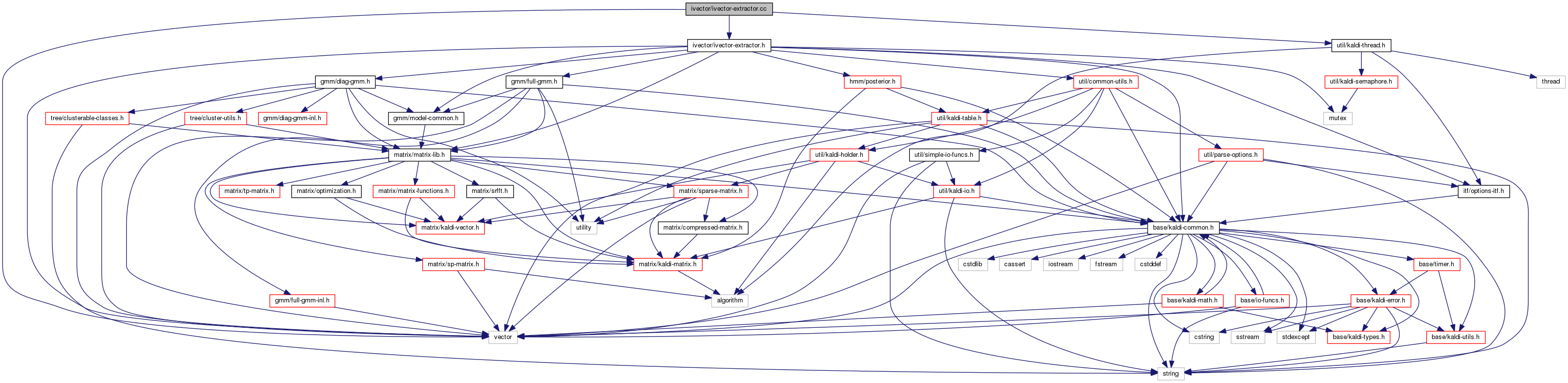

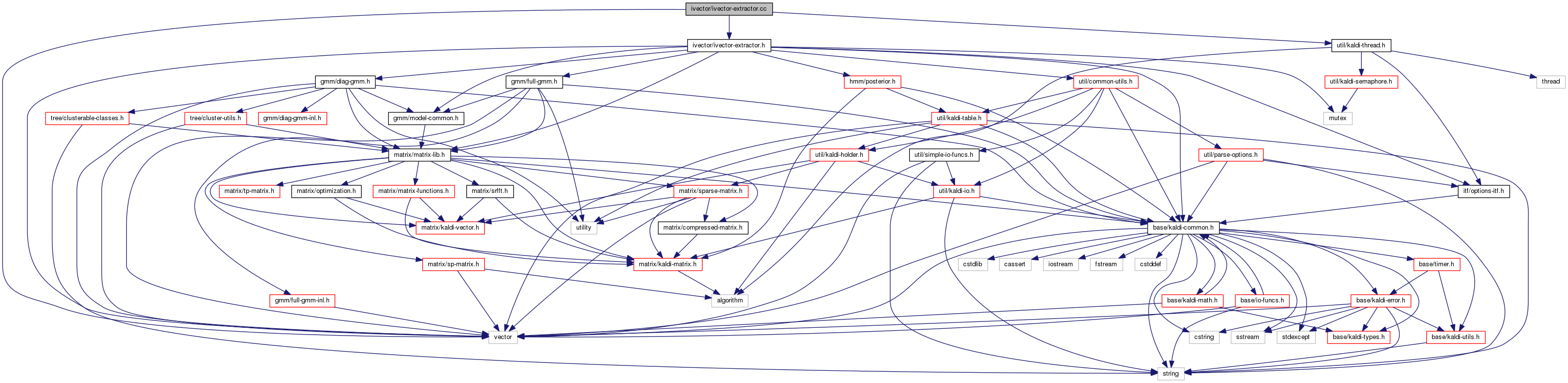

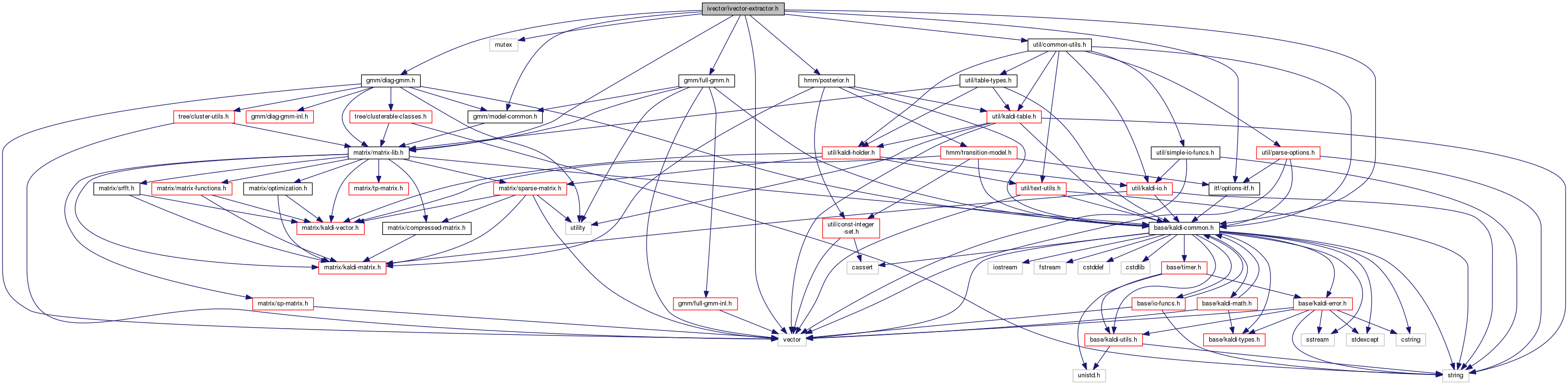

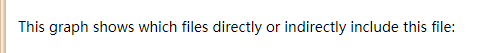

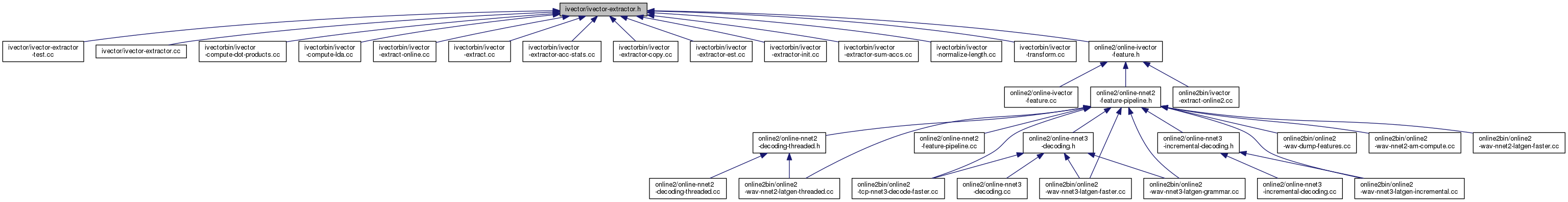

Include dependency graph for ivector-extractor.cc:

#ifndef KALDI_IVECTOR_IVECTOR_EXTRACTOR_H_

#define KALDI_IVECTOR_IVECTOR_EXTRACTOR_H_

#include <vector>

#include <mutex>

#include "base/kaldi-common.h"

#include "matrix/matrix-lib.h"

#include "gmm/model-common.h"

#include "gmm/diag-gmm.h"

#include "gmm/full-gmm.h"

#include "itf/options-itf.h"

#include "util/common-utils.h"

#include "hmm/posterior.h"

namespace kaldi {

// Note, throughout this file we use SGMM-type notation because

// that's what I'm comfortable with.

// Dimensions:

// D is the feature dim (e.g. D = 60)

// I is the number of Gaussians (e.g. I = 2048)

// S is the ivector dim (e.g. S = 400)

// Options for estimating iVectors, during both training and test. Note: the

// "acoustic_weight" is not read by any class declared in this header; it has to

// be applied by calling IvectorExtractorUtteranceStats::Scale() before

// obtaining the iVector.

// The same is true of max_count: it has to be applied by programs themselves

// e.g. see ../ivectorbin/ivector-extract.cc.

struct IvectorEstimationOptions {

double acoustic_weight;

double max_count;

IvectorEstimationOptions(): acoustic_weight(1.0), max_count(0.0) {}

void Register(OptionsItf *opts) {

opts->Register("acoustic-weight", &acoustic_weight,

"Weight on part of auxf that involves the data (e.g. 0.2); "

"if this weight is small, the prior will have more effect.");

opts->Register("max-count", &max_count,

"Maximum frame count (affects prior scaling): if >0, the prior "

"term will be scaled up after the frame count exceeds this "

"value. Note that this count is considered after posterior "

"scaling (e.g. --acoustic-weight option, or scale argument to "

"scale-post), so you would normally use a cutoff 10 times "

"smaller than the corresponding number of frames.");

}

};

class IvectorExtractor;

class IvectorExtractorComputeDerivedVarsClass;

class IvectorExtractorUtteranceStats {

public:

IvectorExtractorUtteranceStats(int32 num_gauss, int32 feat_dim,

bool need_2nd_order_stats):

gamma_(num_gauss), X_(num_gauss, feat_dim) {

if (need_2nd_order_stats) {

S_.resize(num_gauss);

for (int32 i = 0; i < num_gauss; i++)

S_[i].Resize(feat_dim);

}

}

void AccStats(const MatrixBase<BaseFloat> &feats,

const Posterior &post);

void Scale(double scale); // Used to apply acoustic scale.

double NumFrames() { return gamma_.Sum(); }

protected:

friend class IvectorExtractor;

friend class IvectorExtractorStats;

Vector<double> gamma_; // zeroth-order stats (summed posteriors), dimension [I]

Matrix<double> X_; // first-order stats, dimension [I][D]

std::vector<SpMatrix<double> > S_; // 2nd-order stats, dimension [I][D][D], if

// required.

};

struct IvectorExtractorOptions {

int ivector_dim;

int num_iters;

bool use_weights;

IvectorExtractorOptions(): ivector_dim(400), num_iters(2),

use_weights(true) { }

void Register(OptionsItf *opts) {

opts->Register("num-iters", &num_iters, "Number of iterations in "

"iVector estimation (>1 needed due to weights)");

opts->Register("ivector-dim", &ivector_dim, "Dimension of iVector");

opts->Register("use-weights", &use_weights, "If true, regress the "

"log-weights on the iVector");

}

};

// Forward declaration. This class is used together with IvectorExtractor to

// compute iVectors in an online way, so we can update the estimate efficiently

// as we add frames.

class OnlineIvectorEstimationStats;

// Caution: the IvectorExtractor is not the only thing required to get an

// ivector. We also need to get posteriors from a GMM, typically a FullGmm.

// Typically these will be obtained in a process that involves using a DiagGmm

// for Gaussian selection, followed by getting posteriors from the FullGmm. To

// keep track of these, we keep them all in the same directory,

// e.g. final.{ubm,dubm,ie}.

class IvectorExtractor {

public:

friend class IvectorExtractorStats;

friend class OnlineIvectorEstimationStats;

IvectorExtractor(): prior_offset_(0.0) { }

IvectorExtractor(

const IvectorExtractorOptions &opts,

const FullGmm &fgmm);

void GetIvectorDistribution(

const IvectorExtractorUtteranceStats &utt_stats,

VectorBase<double> *mean,

SpMatrix<double> *var) const;

double PriorOffset() const { return prior_offset_; }

double GetAuxf(const IvectorExtractorUtteranceStats &utt_stats,

const VectorBase<double> &mean,

const SpMatrix<double> *var = NULL) const;

double GetAcousticAuxf(const IvectorExtractorUtteranceStats &utt_stats,

const VectorBase<double> &mean,

const SpMatrix<double> *var = NULL) const;

double GetPriorAuxf(const VectorBase<double> &mean,

const SpMatrix<double> *var = NULL) const;

double GetAcousticAuxfVariance(

const IvectorExtractorUtteranceStats &utt_stats) const;

double GetAcousticAuxfMean(

const IvectorExtractorUtteranceStats &utt_stats,

const VectorBase<double> &mean,

const SpMatrix<double> *var = NULL) const;

double GetAcousticAuxfGconst(

const IvectorExtractorUtteranceStats &utt_stats) const;

double GetAcousticAuxfWeight(

const IvectorExtractorUtteranceStats &utt_stats,

const VectorBase<double> &mean,

const SpMatrix<double> *var = NULL) const;

void GetIvectorDistMean(

const IvectorExtractorUtteranceStats &utt_stats,

VectorBase<double> *linear,

SpMatrix<double> *quadratic) const;

void GetIvectorDistPrior(

const IvectorExtractorUtteranceStats &utt_stats,

VectorBase<double> *linear,

SpMatrix<double> *quadratic) const;

void GetIvectorDistWeight(

const IvectorExtractorUtteranceStats &utt_stats,

const VectorBase<double> &mean,

VectorBase<double> *linear,

SpMatrix<double> *quadratic) const;

// Note: the function GetStats no longer exists due to code refactoring.

// Instead of this->GetStats(feats, posterior, &utt_stats), call

// utt_stats.AccStats(feats, posterior).

int32 FeatDim() const;

int32 IvectorDim() const;

int32 NumGauss() const;

bool IvectorDependentWeights() const { return w_.NumRows() != 0; }

void Write(std::ostream &os, bool binary) const;

void Read(std::istream &is, bool binary);

// Note: we allow the default assignment and copy operators

// because they do what we want.

protected:

void ComputeDerivedVars();

void ComputeDerivedVars(int32 i);

friend class IvectorExtractorComputeDerivedVarsClass;

// Imagine we'll project the iVectors with transformation T, so apply T^{-1}

// where necessary to keep the model equivalent. Used to keep unit variance

// (like prior re-estimation).

void TransformIvectors(const MatrixBase<double> &T,

double new_prior_offset);

Matrix<double> w_;

Vector<double> w_vec_;

std::vector<Matrix<double> > M_;

std::vector<SpMatrix<double> > Sigma_inv_;

double prior_offset_;

// Below are *derived variables* that can be computed from the

// variables above.

Vector<double> gconsts_;

Matrix<double> U_;

std::vector<Matrix<double> > Sigma_inv_M_;

private:

// var <-- quadratic_term^{-1}, but done carefully, first flooring eigenvalues

// of quadratic_term to 1.0, which mathematically is the least they can be,

// due to the prior term.

static void InvertWithFlooring(const SpMatrix<double> &quadratic_term,

SpMatrix<double> *var);

};

class OnlineIvectorEstimationStats {

public:

// Search above for max_count to see an explanation; if nonzero, it will

// put a higher weight on the prior (vs. the stats) once the count passes

// that value.

OnlineIvectorEstimationStats(int32 ivector_dim,

BaseFloat prior_offset,

BaseFloat max_count);

OnlineIvectorEstimationStats(const OnlineIvectorEstimationStats &other);

// Accumulate stats for one frame.

void AccStats(const IvectorExtractor &extractor,

const VectorBase<BaseFloat> &feature,

const std::vector<std::pair<int32, BaseFloat> > &gauss_post);

// Accumulate stats for a sequence (or collection) of frames.

void AccStats(const IvectorExtractor &extractor,

const MatrixBase<BaseFloat> &features,

const std::vector<std::vector<std::pair<int32, BaseFloat> > > &gauss_post);

int32 IvectorDim() const { return linear_term_.Dim(); }

void GetIvector(int32 num_cg_iters,

VectorBase<double> *ivector) const;

double NumFrames() const { return num_frames_; }

double PriorOffset() const { return prior_offset_; }

double ObjfChange(const VectorBase<double> &ivector) const;

double Count() const { return num_frames_; }

void Scale(double scale);

void Write(std::ostream &os, bool binary) const;

void Read(std::istream &is, bool binary);

// Override the default assignment operator

inline OnlineIvectorEstimationStats &operator=(const OnlineIvectorEstimationStats &other) {

this->prior_offset_ = other.prior_offset_;

this->max_count_ = other.max_count_;

this->num_frames_ = other.num_frames_;

this->quadratic_term_=other.quadratic_term_;

this->linear_term_=other.linear_term_;

return *this;

}

protected:

double Objf(const VectorBase<double> &ivector) const;

double DefaultObjf() const;

friend class IvectorExtractor;

double prior_offset_;

double max_count_;

double num_frames_; // num frames (weighted, if applicable).

SpMatrix<double> quadratic_term_;

Vector<double> linear_term_;

};

// This code obtains periodically (for each "ivector_period" frames, e.g. 10

// frames), an estimate of the iVector including all frames up to that point.

// This emulates what you could do in an online/streaming algorithm; its use is

// for neural network training in a way that's matched to online decoding.

// [note: I don't believe we are currently using the program,

// ivector-extract-online.cc, that calls this function, in any of the scripts.].

// Caution: this program outputs the raw iVectors, where the first component

// will generally be very positive. You probably want to subtract PriorOffset()

// from the first element of each row of the output before writing it out.

// For num_cg_iters, we suggest 15. It can be a positive number (more -> more

// exact, less -> faster), or if it's negative it will do the optimization

// exactly each time which is slower.

// It returns the objective function improvement per frame from the "default" iVector to

// the last iVector estimated.

double EstimateIvectorsOnline(

const Matrix<BaseFloat> &feats,

const Posterior &post,

const IvectorExtractor &extractor,

int32 ivector_period,

int32 num_cg_iters,

BaseFloat max_count,

Matrix<BaseFloat> *ivectors);

struct IvectorExtractorStatsOptions {

bool update_variances;

bool compute_auxf;

int32 num_samples_for_weights;

int cache_size;

IvectorExtractorStatsOptions(): update_variances(true),

compute_auxf(true),

num_samples_for_weights(10),

cache_size(100) { }

void Register(OptionsItf *opts) {

opts->Register("update-variances", &update_variances, "If true, update the "

"Gaussian variances");

opts->Register("compute-auxf", &compute_auxf, "If true, compute the "

"auxiliary functions on training data; can be used to "

"debug and check convergence.");

opts->Register("num-samples-for-weights", &num_samples_for_weights,

"Number of samples from iVector distribution to use "

"for accumulating stats for weight update. Must be >1");

opts->Register("cache-size", &cache_size, "Size of an internal "

"cache (not critical, only affects speed/memory)");

}

};

struct IvectorExtractorEstimationOptions {

double variance_floor_factor;

double gaussian_min_count;

int32 num_threads;

bool diagonalize;

IvectorExtractorEstimationOptions(): variance_floor_factor(0.1),

gaussian_min_count(100.0),

diagonalize(true) { }

void Register(OptionsItf *opts) {

opts->Register("variance-floor-factor", &variance_floor_factor,

"Factor that determines variance flooring (we floor each covar "

"to this times global average covariance");

opts->Register("gaussian-min-count", &gaussian_min_count,

"Minimum total count per Gaussian, below which we refuse to "

"update any associated parameters.");

opts->Register("diagonalize", &diagonalize,

"If true, diagonalize the quadratic term in the "

"objective function. This reorders the ivector dimensions"

"from most to least important.");

}

};

class IvectorExtractorUpdateProjectionClass;

class IvectorExtractorUpdateWeightClass;

class IvectorExtractorStats {

public:

friend class IvectorExtractor;

IvectorExtractorStats(): tot_auxf_(0.0), R_num_cached_(0), num_ivectors_(0) { }

IvectorExtractorStats(const IvectorExtractor &extractor,

const IvectorExtractorStatsOptions &stats_opts);

void Add(const IvectorExtractorStats &other);

void AccStatsForUtterance(const IvectorExtractor &extractor,

const MatrixBase<BaseFloat> &feats,

const Posterior &post);

// This version (intended mainly for testing) works out the Gaussian

// posteriors from the model. Returns total log-like for feats, given

// unadapted fgmm. You'd want to add Gaussian pruning and preselection using

// the diagonal, GMM, for speed, if you used this outside testing code.

double AccStatsForUtterance(const IvectorExtractor &extractor,

const MatrixBase<BaseFloat> &feats,

const FullGmm &fgmm);

void Read(std::istream &is, bool binary, bool add = false);

void Write(std::ostream &os, bool binary); // non-const version; relates to cache.

// const version of Write; may use extra memory if we have stuff cached

void Write(std::ostream &os, bool binary) const;

double Update(const IvectorExtractorEstimationOptions &opts,

IvectorExtractor *extractor) const;

double AuxfPerFrame() { return tot_auxf_ / gamma_.Sum(); }

void IvectorVarianceDiagnostic(const IvectorExtractor &extractor);

// Copy constructor.

explicit IvectorExtractorStats (const IvectorExtractorStats &other);

protected:

friend class IvectorExtractorUpdateProjectionClass;

friend class IvectorExtractorUpdateWeightClass;

// This is called by AccStatsForUtterance

void CommitStatsForUtterance(const IvectorExtractor &extractor,

const IvectorExtractorUtteranceStats &utt_stats);

void CommitStatsForM(const IvectorExtractor &extractor,

const IvectorExtractorUtteranceStats &utt_stats,

const VectorBase<double> &ivec_mean,

const SpMatrix<double> &ivec_var);

void FlushCache();

void CommitStatsForSigma(const IvectorExtractor &extractor,

const IvectorExtractorUtteranceStats &utt_stats);

void CommitStatsForWPoint(const IvectorExtractor &extractor,

const IvectorExtractorUtteranceStats &utt_stats,

const VectorBase<double> &ivector,

double weight);

void CommitStatsForW(const IvectorExtractor &extractor,

const IvectorExtractorUtteranceStats &utt_stats,

const VectorBase<double> &ivec_mean,

const SpMatrix<double> &ivec_var);

void CommitStatsForPrior(const VectorBase<double> &ivec_mean,

const SpMatrix<double> &ivec_var);

// Updates M. Returns the objf improvement per frame.

double UpdateProjections(const IvectorExtractorEstimationOptions &opts,

IvectorExtractor *extractor) const;

// This internally called function returns the objf improvement

// for this Gaussian index. Updates one M.

double UpdateProjection(const IvectorExtractorEstimationOptions &opts,

int32 gaussian,

IvectorExtractor *extractor) const;

// Updates the weight projections. Returns the objf improvement per

// frame.

double UpdateWeights(const IvectorExtractorEstimationOptions &opts,

IvectorExtractor *extractor) const;

// Updates the weight projection for one Gaussian index. Returns the objf

// improvement for this index.

double UpdateWeight(const IvectorExtractorEstimationOptions &opts,

int32 gaussian,

IvectorExtractor *extractor) const;

// Returns the objf improvement per frame.

double UpdateVariances(const IvectorExtractorEstimationOptions &opts,

IvectorExtractor *extractor) const;

// Updates the prior; returns obj improvement per frame.

double UpdatePrior(const IvectorExtractorEstimationOptions &opts,

IvectorExtractor *extractor) const;

// Called from UpdatePrior, separating out some code that

// computes likelihood changes.

double PriorDiagnostics(double old_prior_offset) const;

void CheckDims(const IvectorExtractor &extractor) const;

IvectorExtractorStatsOptions config_;

double tot_auxf_;

std::mutex gamma_Y_lock_;

Vector<double> gamma_;

std::vector<Matrix<double> > Y_;

std::mutex R_lock_;

Matrix<double> R_;

std::mutex R_cache_lock_;

int32 R_num_cached_;

Matrix<double> R_gamma_cache_;

Matrix<double> R_ivec_scatter_cache_;

std::mutex weight_stats_lock_;

Matrix<double> Q_;

Matrix<double> G_;

std::mutex variance_stats_lock_;

std::vector< SpMatrix<double> > S_;

std::mutex prior_stats_lock_;

double num_ivectors_;

Vector<double> ivector_sum_;

SpMatrix<double> ivector_scatter_;

private:

void GetOrthogonalIvectorTransform(const SubMatrix<double> &T,

IvectorExtractor *extractor,

Matrix<double> *A) const;

IvectorExtractorStats &operator = (const IvectorExtractorStats &other); // Disallow.

};

} // namespace kaldi

#endif

1万+

1万+

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?