Without further ado, let's go straight to the code.

Annotated version:

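```python
# Classifier example
import numpy as np

# Fix the random seed for reproducibility
np.random.seed(1337)

# from keras.datasets import mnist
from keras.utils import np_utils  # Keras 2.x location; newer releases may not ship np_utils (use keras.utils.to_categorical there)
from keras.models import Sequential
from keras.layers import Dense, Activation
from keras.optimizers import RMSprop

# The data used here is the classic MNIST handwritten-digit dataset.
# mnist.load_data() downloads mnist.npz to the local Keras cache the first time it is called.
# X_train shape: (60000, 28, 28), y_train shape: (60000,)
# (X_train, y_train), (X_test, y_test) = mnist.load_data()

# Alternatively, download mnist.npz manually:
# Link: https://pan.baidu.com/s/1b2ppKDOdzDJxivgmyOoQsA
# Extraction code: y5ir
# and put the downloaded mnist.npz in the current directory.
path = './mnist.npz'
with np.load(path) as f:  # the context manager closes the file afterwards
    X_train, y_train = f['x_train'], f['y_train']
    X_test, y_test = f['x_test'], f['y_test']
```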
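If you want to test the loading code without downloading the real file, you can write a tiny synthetic `.npz` that has the same four keys the code reads (`x_train`, `y_train`, `x_test`, `y_test`) and then load it back. In the sketch below, the file name `fake_mnist.npz`, the helper `make_fake_mnist`, the sample counts, and the `uint8` dtype are all illustrative assumptions. The random contents are not MNIST data.

```python
import os
import tempfile

import numpy as np


def make_fake_mnist(path, n_train=6, n_test=2, seed=0):
    """Hypothetical helper: write a tiny stand-in for mnist.npz with the same keys."""
    rng = np.random.default_rng(seed)
    np.savez(
        path,
        x_train=rng.integers(0, 256, size=(n_train, 28, 28), dtype=np.uint8),
        y_train=rng.integers(0, 10, size=n_train, dtype=np.uint8),
        x_test=rng.integers(0, 256, size=(n_test, 28, 28), dtype=np.uint8),
        y_test=rng.integers(0, 10, size=n_test, dtype=np.uint8),
    )


path = os.path.join(tempfile.mkdtemp(), 'fake_mnist.npz')
make_fake_mnist(path)

# Same loading pattern as above
with np.load(path) as f:
    X_train, y_train = f['x_train'], f['y_train']
    X_test, y_test = f['x_test'], f['y_test']

print(X_train.shape, y_train.shape, X_test.shape, y_test.shape)
```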

```python
# Data pre-processing: scale the pixel values
# X_train has shape (60000, 28, 28): a 3-D array that can be read as
# 60,000 rows, each one a 28 x 28 grayscale image.
# X_train.reshape(X_train.shape[0], -1) keeps the first dimension and flattens
# all remaining dimensions, however many there are, into a single one.
# -1 tells NumPy to infer that dimension: it divides the total number of
# elements by the product of the other, known dimensions.
# Here -1 is just a shortcut for 28 * 28 = 784.
# After reshaping, the input is 60,000 rows of 784 values (features) each.
# Every pixel lies in [0, 255], so dividing by 255 scales it into [0, 1].
X_train = X_train.reshape(X_train.shape[0], -1) / 255
X_test = X_test.reshape(X_test.shape[0], -1) / 255
```
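You can check how `reshape(n, -1)` and the division by 255 behave on a small synthetic array. In the sketch below, only the operations are the same as in the code above. The batch of three 28 x 28 "images" is made up.

```python
import numpy as np

# A stand-in batch: 3 "images" of 28 x 28 pixels with values in [0, 255]
images = np.arange(3 * 28 * 28, dtype=np.int64).reshape(3, 28, 28) % 256

# -1 asks NumPy to infer the size: total elements / known dims = (3*28*28) / 3 = 784
flat = images.reshape(images.shape[0], -1)
print(flat.shape)  # (3, 784)

# Spelling the size out explicitly gives the same shape
assert flat.shape == images.reshape(3, 28 * 28).shape

# Dividing by 255 maps pixel values from [0, 255] into [0, 1]
scaled = flat / 255
print(scaled.min(), scaled.max())
```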

```python
# Encode the class labels: convert y to one-hot vectors
y_train = np_utils.to_categorical(y_train, num_classes=10)
y_test = np_utils.to_categorical(y_test, num_classes=10)
```
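`to_categorical(y, num_classes=10)` converts each integer label into a row that has a single 1 at the label's index and 0 everywhere else. Below is a minimal NumPy sketch of the same idea. The function name `one_hot` is hypothetical and is not part of Keras.

```python
import numpy as np


def one_hot(labels, num_classes):
    """Hypothetical helper: row i has a 1.0 in column labels[i], zeros elsewhere."""
    labels = np.asarray(labels, dtype=np.int64)
    out = np.zeros((labels.shape[0], num_classes), dtype=np.float32)
    out[np.arange(labels.shape[0]), labels] = 1.0
    return out


y = np.array([5, 0, 4])
print(one_hot(y, num_classes=10))
```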

```python
# Another way to build your neural net
# Build a two-layer network: the first layer uses ReLU, the second uses softmax.
# 32 is the output dimension (number of units) of the first Dense layer.
model = Sequential([
    Dense(32, input_dim=784),
    Activation('relu'),
    Dense(10),
    Activation('softmax'),
])
```
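To show what the two layers compute, here is a NumPy sketch of the same forward pass: Dense(32) followed by ReLU, then Dense(10) followed by softmax. The random weights are placeholders because Keras initializes its own. Only the shapes and the parameter count carry over: 784 × 32 + 32 = 25,120 and 32 × 10 + 10 = 330, which gives 25,450 trainable parameters in total.

```python
import numpy as np

rng = np.random.default_rng(1337)

# Placeholder weights with the same shapes as Dense(32, input_dim=784) and Dense(10)
W1, b1 = rng.normal(0, 0.05, size=(784, 32)), np.zeros(32)
W2, b2 = rng.normal(0, 0.05, size=(32, 10)), np.zeros(10)


def relu(z):
    return np.maximum(z, 0.0)


def softmax(z):
    # Subtracting the row max improves numerical stability without changing the result
    e = np.exp(z - z.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)


def forward(x):
    hidden = relu(x @ W1 + b1)        # (batch, 32)
    return softmax(hidden @ W2 + b2)  # (batch, 10); each row sums to 1


x = rng.random((4, 784))  # 4 fake flattened images in [0, 1)
probs = forward(x)
n_params = W1.size + b1.size + W2.size + b2.size
print(probs.shape, n_params)  # (4, 10) 25450
```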

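```python
# Another way to define your optimizer
# The optimization algorithm is RMSprop.
# learning_rate replaces the older `lr` argument (Keras 2.3+); decay=0.0 was the
# default and is dropped because newer Keras versions no longer accept `decay`.
rmsprop = RMSprop(learning_rate=0.001, rho=0.9, epsilon=1e-08)
```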
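RMSprop keeps a running average of squared gradients and divides each step by the square root of that average. The sketch below applies the textbook update in plain Python to a single scalar, using `rho=0.9` and `epsilon=1e-08` as above. It is only an illustration and is not Keras's internal code. The objective f(w) = w², the starting point, and `lr=0.01` are assumptions chosen to keep the example clear.

```python
import math


def rmsprop_minimize(grad, w, lr=0.001, rho=0.9, epsilon=1e-08, steps=1000):
    """Plain-Python RMSprop on a single scalar parameter."""
    v = 0.0  # running average of squared gradients
    for _ in range(steps):
        g = grad(w)
        v = rho * v + (1 - rho) * g * g
        w -= lr * g / (math.sqrt(v) + epsilon)
    return w


# Minimize f(w) = w**2 (gradient 2*w), starting from w = 1.0
w_final = rmsprop_minimize(lambda w: 2 * w, w=1.0, lr=0.01)
print(w_final)
```

Because each step is normalized by the size of recent gradients, its magnitude stays close to `lr` no matter how large the raw gradient is.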

```python
# Add metrics to see more results during training and evaluation
# Instead of defining the optimizer yourself, you can pass the built-in one: optimizer='rmsprop'
# Compile the model with model.compile
model.compile(
    optimizer=rmsprop,
    loss='categorical_crossentropy',
    metrics=['accuracy'],
)
```
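`categorical_crossentropy` compares the softmax output with the one-hot label. For each sample the loss is −Σ y_true · log(y_pred), and that value is averaged over the batch. The NumPy sketch below uses made-up probabilities. The clipping constant `eps` is an assumption added to avoid log(0), since Keras applies its own clipping internally.

```python
import numpy as np


def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean over samples of -sum(y_true * log(y_pred))."""
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    return float(np.mean(-np.sum(y_true * np.log(y_pred), axis=1)))


y_true = np.array([[0, 1, 0],
                   [1, 0, 0]], dtype=float)
y_pred = np.array([[0.1, 0.8, 0.1],
                   [0.5, 0.3, 0.2]])
loss = categorical_crossentropy(y_true, y_pred)
print(loss)  # equals (-log(0.8) - log(0.5)) / 2
```

A uniform guess over 10 classes gives log(10) ≈ 2.30. This is close to the loss shown at the very first step of the training log below.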

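```python
print('Training------------')
# Another way to train the model
# The previous program trained on X_train, Y_train one batch at a time with
# train_on_batch (whose return value is the cost by default) and printed the result every 100 steps.
# The output here looks different from that program; model.fit() is clearer.
# The previous program (building a neural network with Keras in Python to train a regression model):
# https://blog.csdn.net/weixin_45798684/article/details/106503685
model.fit(X_train, y_train, epochs=2, batch_size=32)  # `nb_epoch` is the old Keras 1 name for `epochs`
```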
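With `batch_size=32`, one epoch over 60,000 samples takes 60000 / 32 = 1875 weight updates. This is why the progress bar in the log counts up in multiples of 32. The sketch below shows the kind of shuffled mini-batch split that `fit` performs each epoch (`fit` shuffles by default). The generator `minibatch_indices` is hypothetical and is not Keras code.

```python
import numpy as np


def minibatch_indices(n_samples, batch_size, rng):
    """Yield shuffled index arrays that cover every sample once per epoch."""
    order = rng.permutation(n_samples)
    for start in range(0, n_samples, batch_size):
        yield order[start:start + batch_size]


rng = np.random.default_rng(0)
batches = list(minibatch_indices(60000, 32, rng))
print(len(batches))  # 1875

# Every sample appears exactly once in the epoch
seen = np.concatenate(batches)
assert np.array_equal(np.sort(seen), np.arange(60000))
```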

```python
print('\nTesting------------')
# Evaluate the model on the test set with the metrics defined earlier
loss, accuracy = model.evaluate(X_test, y_test)
print('test loss:', loss)
print('test accuracy:', accuracy)
```
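In this one-hot/softmax setup, the `accuracy` that `evaluate` reports is the fraction of samples whose highest-probability class matches the true class. The NumPy sketch below performs that computation on made-up predictions. The helper name `categorical_accuracy` is used here only for illustration.

```python
import numpy as np


def categorical_accuracy(y_true, y_pred):
    """Fraction of rows where argmax of the prediction equals argmax of the one-hot label."""
    return float(np.mean(np.argmax(y_true, axis=1) == np.argmax(y_pred, axis=1)))


y_true = np.eye(10)[[3, 7, 1, 1]]          # one-hot labels for classes 3, 7, 1, 1
y_pred = np.full((4, 10), 0.05)
y_pred[[0, 1, 2, 3], [3, 2, 1, 1]] = 0.55  # predicted classes 3, 2, 1, 1
print(categorical_accuracy(y_true, y_pred))  # 0.75
```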

Output:

```text
Using TensorFlow backend.
Training------------
Epoch 1/2
60000/60000 [==============================] - 5s 87us/step - loss: 0.3435 - accuracy: 0.9046
Epoch 2/2
60000/60000 [==============================] - 5s 76us/step - loss: 0.1946 - accuracy: 0.9440

Testing------------
10000/10000 [==============================] - 0s 47us/step
test loss: 0.17407772153392434
test accuracy: 0.9513000249862671
```

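Supplementary: building a simple neural network with Keras (Sequential model + regression)

This is a multi-layer fully connected neural network. The number of neurons per layer, the number of layers, the activation functions, and so on can all be changed freely. With a different loss function, it can also be applied to other tasks, such as classification.

This is one of the most basic ways to build a neural network model in Keras. Keras also offers more advanced approaches, and the official Keras website documents the basic usage.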

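```python
from keras.models import Sequential
from keras.layers import Dense, Activation
from keras.callbacks import ReduceLROnPlateau


# A fully connected network with four hidden layers and one output layer
# (the input layer is not counted); the layers, activations and parameters can all be changed freely.
def ann(X, y):
    '''
    X: training input data
    y: labels corresponding to the training data
    '''
    # Initialize the model: first define a Sequential model as the framework,
    # then add network layers to it. This is one of the most basic ways to build a network.
    model = Sequential()

    # Add the layers.
    # Dense is a fully connected layer; the first layer needs the input dimension, input_shape.
    # Activation sets each layer's activation function: pass a predefined activation
    # or any other advanced activation that follows the same interface.
    model.add(Dense(64, input_shape=(X.shape[1],)))
    model.add(Activation('sigmoid'))
    model.add(Dense(256))
    model.add(Activation('relu'))
    model.add(Dense(256))
    model.add(Activation('tanh'))
    model.add(Dense(32))
    model.add(Activation('tanh'))
    # Output layer: its size depends on the task; here it is a 1-D regression output.
    model.add(Dense(1))
    model.add(Activation('linear'))

    # Compile: optimizer is the optimizer (freely chosen), loss is the loss function to minimize.
    model.compile(optimizer='rmsprop', loss='mean_squared_error')

    # Lower the learning rate (lr * factor) when the loss stops improving for `patience` epochs;
    # this is optional, the base learning rate can also be used unchanged.
    reduce_lr = ReduceLROnPlateau(monitor='loss', factor=0.1, patience=10,
                                  verbose=0, mode='auto', min_delta=0.001,
                                  cooldown=0, min_lr=0)

    # Train. The model could also be returned from the function first and trained afterwards.
    # epochs: number of training epochs; batch_size: samples per mini-batch;
    # validation_split: fraction of the training data held out for validation.
    # callbacks: callbacks invoked at their respective stages of training to inspect
    # the model's internal state and statistics.
    # verbose: 0 = silent, 1 = progress bar, 2 = one line per epoch.
    model.fit(X, y, epochs=100,
              batch_size=50, validation_split=0.0,
              callbacks=[reduce_lr],
              verbose=0)

    # Print the model structure (summary() prints by itself and returns None)
    model.summary()
    return model
```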
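`ReduceLROnPlateau` multiplies the learning rate by `factor` when the monitored value has not improved by at least `min_delta` for `patience` epochs. After that, it waits `cooldown` epochs before it starts counting again, and it never lowers the rate below `min_lr`. The class below is a simplified pure-Python sketch of that rule for a metric to be minimized, which is what `mode='auto'` chooses for a loss. The name `PlateauScheduler` is hypothetical, and this is not Keras's implementation.

```python
class PlateauScheduler:
    """Hypothetical, simplified take on ReduceLROnPlateau for a metric to minimize."""

    def __init__(self, lr, factor=0.1, patience=10, min_delta=0.001, cooldown=0, min_lr=0.0):
        self.lr = lr
        self.factor = factor
        self.patience = patience
        self.min_delta = min_delta
        self.cooldown = cooldown
        self.min_lr = min_lr
        self.best = float('inf')
        self.wait = 0
        self.cooldown_counter = 0

    def on_epoch_end(self, current):
        """Feed the monitored value (e.g. the loss) after each epoch; returns the learning rate."""
        if self.cooldown_counter > 0:
            self.cooldown_counter -= 1
            self.wait = 0
        if current < self.best - self.min_delta:
            # Improved by more than min_delta: remember it and reset the counter
            self.best = current
            self.wait = 0
        elif self.cooldown_counter == 0:
            self.wait += 1
            if self.wait >= self.patience and self.lr > self.min_lr:
                self.lr = max(self.lr * self.factor, self.min_lr)
                self.cooldown_counter = self.cooldown
                self.wait = 0
        return self.lr


sched = PlateauScheduler(lr=0.001, patience=3)
losses = [1.0, 0.5, 0.4, 0.4, 0.4, 0.4, 0.4]
print([sched.on_epoch_end(loss) for loss in losses])
```

The learning rate stays at 0.001 while the loss keeps improving. After three epochs stuck at 0.4, it drops to about 0.0001.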

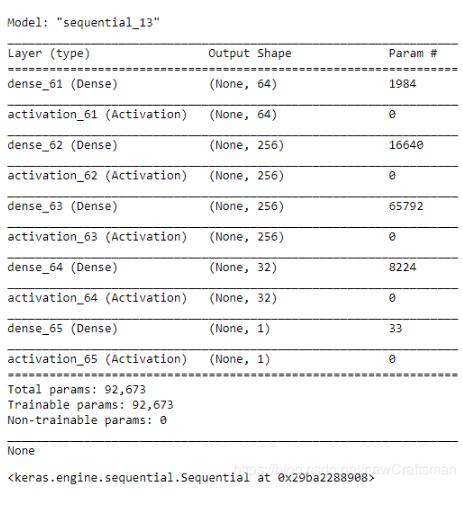

下图是此模型的结构图,其中下划线后面的数字是根据调用次数而定
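Keras builds a default layer name from the layer type plus a counter that is kept separately for each type. This is why the suffixes change when the model-building function is called again in the same session. The sketch below is a hypothetical illustration of that kind of per-type naming. The helper `default_name` is not part of Keras, and the exact format and starting index vary between Keras versions.

```python
import re
from collections import defaultdict

_counters = defaultdict(int)  # one counter per layer-type prefix


def default_name(layer_type):
    """Hypothetical: 'Dense' -> 'dense_1', 'dense_2', ...; 'BatchNormalization' -> 'batch_normalization_1'."""
    prefix = re.sub(r'(?<!^)(?=[A-Z])', '_', layer_type).lower()
    _counters[prefix] += 1
    return f'{prefix}_{_counters[prefix]}'


# First call of a function like ann(): five Dense layers
first = [default_name('Dense') for _ in range(5)]
# Calling it again in the same session keeps counting
second = [default_name('Dense') for _ in range(5)]
print(first)   # ['dense_1', 'dense_2', 'dense_3', 'dense_4', 'dense_5']
print(second)  # ['dense_6', 'dense_7', 'dense_8', 'dense_9', 'dense_10']
```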

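That is all for this tutorial on building and training a classification model with Keras in Python. I hope it serves as a useful reference, and thank you for supporting 脚本之家.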
