4.1 使用sessions编写hello world:

程序:

import tensorflow as tf

hello = tf.constant('Hello, TensorFlow!') #定义一个常量

sess = tf.Session() #建立一个session

print (sess.run(hello)) #通过session里面的run来运行结果

sess.close() #关闭session

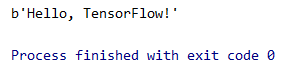

结果:

4.2 with session的使用

程序:

import tensorflow as tf

a = tf.constant(3) #定义常量3

b = tf.constant(4) #定义常量4

with tf.Session() as sess: #建立session

print ("相加: %i" % sess.run(a+b))

print( "相乘: %i" % sess.run(a*b))

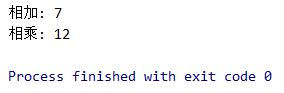

结果:

4.3 演示注入机制:

定义占位符,使用feed机制将具体数值3,4通过占位符传入,并进行相加和相乘。

程序:

import tensorflow as tf

a = tf.placeholder(tf.int16)

b = tf.placeholder(tf.int16)

add = tf.add(a, b)

mul = tf.multiply(a, b) #a与b相乘

with tf.Session() as sess:

# Run every operation with variable input

print ("相加: %i" % sess.run(add, feed_dict={a: 3, b: 4}))

print ("相乘: %i" % sess.run(mul, feed_dict={a: 3, b: 4}))

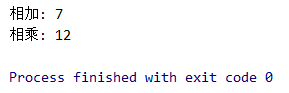

结果:
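To make the feed mechanism concrete outside TensorFlow, here is a minimal pure-Python sketch of the same idea: the graph is described first, and concrete values are supplied only when it is run. The `Placeholder`, `Op`, and `run` names are hypothetical stand-ins invented for this illustration; they are not how TensorFlow is implemented.

```python
import operator


class Placeholder:
    """Hypothetical stand-in for a graph input whose value is supplied at run time."""

    def __init__(self, name):
        self.name = name


class Op:
    """Hypothetical graph node that applies a binary function to two inputs."""

    def __init__(self, fn, left, right):
        self.fn = fn
        self.left = left
        self.right = right


def run(node, feed_dict):
    """Evaluate a node, looking placeholder values up in feed_dict."""
    if isinstance(node, Placeholder):
        if node not in feed_dict:
            raise KeyError("no value fed for placeholder %r" % node.name)
        return feed_dict[node]
    return node.fn(run(node.left, feed_dict), run(node.right, feed_dict))


# Build the graph first; nothing is computed yet.
a = Placeholder("a")
b = Placeholder("b")
add = Op(operator.add, a, b)
mul = Op(operator.mul, a, b)

# Supply concrete values only at run time, in the spirit of feed_dict.
sum_result = run(add, {a: 3, b: 4})
product_result = run(mul, {a: 3, b: 4})
print("Sum: %i" % sum_result)          # Sum: 7
print("Product: %i" % product_result)  # Product: 12
```

The same graph can be run again with different values, which is the point of a placeholder: `run(add, {a: 10, b: 20})` evaluates to 30 without rebuilding anything.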

4.4 Saving and loading a linear regression model:

Program:

import tensorflow as tf

import numpy as np

import matplotlib.pyplot as plt

plotdata = {"batchsize": [], "loss": []}

def moving_average(a, w=10):

    if len(a) < w:

        return a[:]

    return [val if idx < w else sum(a[(idx - w):idx]) / w for idx, val in enumerate(a)]

# Generate simulated data

train_X = np.linspace(-1, 1, 100)

train_Y = 2 * train_X + np.random.randn(*train_X.shape) * 0.3  # y = 2x with added noise

# Plot the data

plt.plot(train_X, train_Y, 'ro', label='Original data')

plt.legend()

plt.show()

tf.reset_default_graph()

# Build the model

# Placeholders

X = tf.placeholder("float")

Y = tf.placeholder("float")

# Model parameters

W = tf.Variable(tf.random_normal([1]), name="weight")

b = tf.Variable(tf.zeros([1]), name="bias")

# Forward structure

z = tf.multiply(X, W) + b

# Backward optimization

cost = tf.reduce_mean(tf.square(Y - z))

learning_rate = 0.01

optimizer = tf.train.GradientDescentOptimizer(learning_rate).minimize(cost)  # gradient descent

# Initialize variables

init = tf.global_variables_initializer()

# Training settings

training_epochs = 20

display_step = 2

saver = tf.train.Saver()  # create the saver

savedir = "F:/shendu/000"  # path prefix for the saved model

# Start the session

with tf.Session() as sess:

    sess.run(init)

    # Training code inside the session: fit all training data

    for epoch in range(training_epochs):

        for (x, y) in zip(train_X, train_Y):

            sess.run(optimizer, feed_dict={X: x, Y: y})

        # Show detailed information during training

        if epoch % display_step == 0:

            loss = sess.run(cost, feed_dict={X: train_X, Y: train_Y})

            print("Epoch:", epoch + 1, "cost=", loss, "W=", sess.run(W), "b=", sess.run(b))

            if not (loss == "NA"):

                plotdata["batchsize"].append(epoch)

                plotdata["loss"].append(loss)

    print(" Finished!")

    saver.save(sess, savedir + "linermodel.cpkt")  # save the model

    print("cost=", sess.run(cost, feed_dict={X: train_X, Y: train_Y}), "W=", sess.run(W), "b=", sess.run(b))

    # print ("cost:",cost.eval({X: train_X, Y: train_Y}))

    # Plot the data and the fitted line

    plt.plot(train_X, train_Y, 'ro', label='Original data')

    plt.plot(train_X, sess.run(W) * train_X + sess.run(b), label='Fitted line')

    plt.legend()

    plt.show()

    plotdata["avgloss"] = moving_average(plotdata["loss"])

    plt.figure(1)

    plt.subplot(211)

    plt.plot(plotdata["batchsize"], plotdata["avgloss"], 'b--')

    plt.xlabel('Minibatch number')

    plt.ylabel('Loss')

    plt.title('Minibatch run vs. Training loss')

    plt.show()

# Start a new session

with tf.Session() as sess2:

    sess2.run(tf.global_variables_initializer())  # initialize the variables

    saver.restore(sess2, savedir + "linermodel.cpkt")  # overwrite them with the saved values

    print("x=0.2,z=", sess2.run(z, feed_dict={X: 0.2}))
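For readers who want to see what the training loop computes, the following is a minimal pure-Python sketch of per-sample gradient descent on the squared error. It assumes this is what `GradientDescentOptimizer(learning_rate).minimize(cost)` amounts to when it is fed one (x, y) pair at a time. The helper name `sgd_linear_fit` is hypothetical, the starting point is fixed at zero, and the data are noise-free (y = 2x) so that the run is reproducible; it is an illustration, not the program's code.

```python
def sgd_linear_fit(xs, ys, learning_rate=0.01, epochs=20):
    """Fit z = w * x + b by per-sample gradient descent on (y - z) ** 2."""
    w, b = 0.0, 0.0  # assumed starting point; the program above draws W at random
    for _ in range(epochs):
        for x, y in zip(xs, ys):
            error = y - (w * x + b)
            # d/dw (y - z)^2 = -2 * error * x,  d/db (y - z)^2 = -2 * error
            w += learning_rate * 2.0 * error * x
            b += learning_rate * 2.0 * error
    return w, b


# 100 evenly spaced points in [-1, 1], like np.linspace(-1, 1, 100)
xs = [-1.0 + 2.0 * i / 99 for i in range(100)]
ys = [2.0 * x for x in xs]  # noise-free targets, unlike train_Y above

w, b = sgd_linear_fit(xs, ys)
print("w=%.4f b=%.4f" % (w, b))
```

With noise-free targets the fitted slope approaches 2 and the intercept approaches 0; with the noisy `train_Y` of the program, the values settle near those numbers instead.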

4.5 Model contents

#Print the model contents:

Program:

import tensorflow as tf

from tensorflow.python.tools.inspect_checkpoint import print_tensors_in_checkpoint_file

savedir = "F:/shendu/000"

print_tensors_in_checkpoint_file(savedir+"linermodel.cpkt", None, True)

Result:

tensor_name: bias

[0.08573835]

tensor_name: weight

[1.986465]

#Give W and b fixed values and save them under swapped names

Program:

import tensorflow as tf

from tensorflow.python.tools.inspect_checkpoint import print_tensors_in_checkpoint_file

savedir = "F:/shendu/000"

print_tensors_in_checkpoint_file(savedir+"linermodel.cpkt", None, True)

W = tf.Variable(1.0, name="weight")

b = tf.Variable(2.0, name="bias")

# Put them in a dictionary that maps checkpoint name to variable:

saver = tf.train.Saver({'weight': b, 'bias': W})

with tf.Session() as sess:

    tf.global_variables_initializer().run()

    saver.save(sess, savedir+"linermodel.cpkt")

print_tensors_in_checkpoint_file(savedir+"linermodel.cpkt", None, True)

Result:

tensor_name: bias

1.0

tensor_name: weight

2.0
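The swap can be read as a plain name-to-value mapping. The sketch below is a pure-Python analogy, assuming that a saver built from a dictionary stores each variable's value under the dictionary key rather than under the variable's own name, which is what the output above suggests. `fake_save` is a hypothetical helper, not a TensorFlow call.

```python
def fake_save(name_to_value):
    """Hypothetical stand-in for saving: return the stored name -> value table."""
    return dict(sorted(name_to_value.items()))


W = 1.0  # stands in for the variable created with name="weight"
b = 2.0  # stands in for the variable created with name="bias"

# Same key/value pairing as tf.train.Saver({'weight': b, 'bias': W})
checkpoint = fake_save({"weight": b, "bias": W})

for tensor_name, value in checkpoint.items():
    print("tensor_name:", tensor_name)
    print(value)
```

The entry named `bias` holds 1.0 (the value of `W`) and the entry named `weight` holds 2.0 (the value of `b`), matching the printed checkpoint contents.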

4.6 Saving checkpoints

Program:

import tensorflow as tf

import numpy as np

import matplotlib.pyplot as plt

plotdata = {"batchsize": [], "loss": []}

def moving_average(a, w=10):

    if len(a) < w:

        return a[:]

    return [val if idx < w else sum(a[(idx - w):idx]) / w for idx, val in enumerate(a)]

# Generate simulated data

train_X = np.linspace(-1, 1, 100)

train_Y = 2 * train_X + np.random.randn(*train_X.shape) * 0.3  # y = 2x with added noise

# Plot the data

plt.plot(train_X, train_Y, 'ro', label='Original data')

plt.legend()

plt.show()

tf.reset_default_graph()

# Build the model

# Placeholders

X = tf.placeholder("float")

Y = tf.placeholder("float")

# Model parameters

W = tf.Variable(tf.random_normal([1]), name="weight")

b = tf.Variable(tf.zeros([1]), name="bias")

# Forward structure

z = tf.multiply(X, W) + b

# Backward optimization

cost = tf.reduce_mean(tf.square(Y - z))

learning_rate = 0.01

optimizer = tf.train.GradientDescentOptimizer(learning_rate).minimize(cost)  # gradient descent

# Initialize variables

init = tf.global_variables_initializer()

# Training settings

training_epochs = 20

display_step = 2

saver = tf.train.Saver(max_to_keep=1)  # create the saver; keep only the most recent checkpoint

savedir = "F:/shendu/111"  # used as a path prefix: with no trailing slash, the files land in F:/shendu/ with names starting with 111

# Start the session

with tf.Session() as sess:

    sess.run(init)

    # Fit all training data

    for epoch in range(training_epochs):

        for (x, y) in zip(train_X, train_Y):

            sess.run(optimizer, feed_dict={X: x, Y: y})

        # Show detailed information during training

        if epoch % display_step == 0:

            loss = sess.run(cost, feed_dict={X: train_X, Y: train_Y})

            print("Epoch:", epoch + 1, "cost=", loss, "W=", sess.run(W), "b=", sess.run(b))

            if not (loss == "NA"):

                plotdata["batchsize"].append(epoch)

                plotdata["loss"].append(loss)

            saver.save(sess, savedir + "linermodel.cpkt", global_step=epoch)  # checkpoint tagged with the epoch index

    print(" Finished!")

    print("cost=", sess.run(cost, feed_dict={X: train_X, Y: train_Y}), "W=", sess.run(W), "b=", sess.run(b))

    # print ("cost:",cost.eval({X: train_X, Y: train_Y}))

    # Plot the data and the fitted line

    plt.plot(train_X, train_Y, 'ro', label='Original data')

    plt.plot(train_X, sess.run(W) * train_X + sess.run(b), label='Fitted line')

    plt.legend()

    plt.show()

    plotdata["avgloss"] = moving_average(plotdata["loss"])

    plt.figure(1)

    plt.subplot(211)

    plt.plot(plotdata["batchsize"], plotdata["avgloss"], 'b--')

    plt.xlabel('Minibatch number')

    plt.ylabel('Loss')

    plt.title('Minibatch run vs. Training loss')

    plt.show()

# Start a new session and load a specific checkpoint

load_epoch = 18

with tf.Session() as sess2:

    sess2.run(tf.global_variables_initializer())

    saver.restore(sess2, savedir + "linermodel.cpkt-" + str(load_epoch))

    print("x=0.2,z=", sess2.run(z, feed_dict={X: 0.2}))

with tf.Session() as sess3:

    sess3.run(tf.global_variables_initializer())

    ckpt = tf.train.get_checkpoint_state(savedir)  # reads the checkpoint state from the directory savedir; None if there is none

    if ckpt and ckpt.model_checkpoint_path:

        saver.restore(sess3, ckpt.model_checkpoint_path)

        print("x=0.2,z=", sess3.run(z, feed_dict={X: 0.2}))

with tf.Session() as sess4:

    sess4.run(tf.global_variables_initializer())

    kpt = tf.train.latest_checkpoint(savedir)  # None when no checkpoint is found in that directory

    if kpt is not None:

        saver.restore(sess4, kpt)

        print("x=0.2,z=", sess4.run(z, feed_dict={X: 0.2}))

Result:

Epoch: 1 cost= 1.4073209 W= [0.24966796] b= [0.5030684]

Epoch: 3 cost= 0.17785853 W= [1.5477322] b= [0.15313277]

Epoch: 5 cost= 0.079329684 W= [1.8994919] b= [0.02064049]

Epoch: 7 cost= 0.07323916 W= [1.9907178] b= [-0.01432499]

Epoch: 9 cost= 0.07298315 W= [2.01431] b= [-0.02337763]

Epoch: 11 cost= 0.07300522 W= [2.0204103] b= [-0.02571867]

Epoch: 13 cost= 0.07301682 W= [2.021988] b= [-0.02632416]

Epoch: 15 cost= 0.07302022 W= [2.0223956] b= [-0.02648057]

Epoch: 17 cost= 0.073021114 W= [2.0225003] b= [-0.02652072]

Epoch: 19 cost= 0.07302137 W= [2.0225282] b= [-0.02653147]

Finished!

cost= 0.07302142 W= [2.0225341] b= [-0.02653361]

x=0.2,z= [0.37797418]
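As a quick check on the output above, the restored prediction can be reproduced by hand from z = W * x + b. The sketch below plugs in the values printed at "Epoch: 19", on the assumption that this is the state written to the checkpoint with global_step=18, and compares them with the values printed after "Finished!". All numbers are copied from the output; only the helper name `predict` is made up here.

```python
def predict(w, b, x):
    """Evaluate the linear model z = w * x + b."""
    return w * x + b


x = 0.2
z_checkpoint = predict(2.0225282, -0.02653147, x)  # W, b printed at "Epoch: 19"
z_final = predict(2.0225341, -0.02653361, x)       # W, b printed after "Finished!"
z_restored = 0.37797418                            # value printed by the restored session

print("from checkpoint values:", round(z_checkpoint, 8))
print("from final values:     ", round(z_final, 8))
# The restored output is closer to the checkpoint values than to the final ones.
print(abs(z_checkpoint - z_restored) < abs(z_final - z_restored))
```

The restored session therefore reports the model as it stood when checkpoint 18 was written, not the slightly different parameters reached by the end of training.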

4.7 使用MonitoredTrainingSession函数自动管理检查点文件

程序:

import tensorflow as tf

tf.reset_default_graph()

global_step = tf.train.get_or_create_global_step()

step = tf.assign_add(global_step, 1)

with tf.train.MonitoredTrainingSession(checkpoint_dir='log/checkpoints',save_checkpoint_secs = 2) as sess:

print(sess.run([global_step]))

while not sess.should_stop():#启用死循环,当sess不结束时就不停止

i = sess.run( step)

print( i)

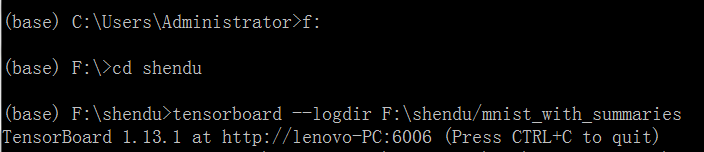

4.8 TensorBoard visualization of linear regression

Observe the training process through TensorBoard.

Program:

import tensorflow as tf

import numpy as np

import matplotlib.pyplot as plt

print("TensorFlow 版本: {}".format(tf.VERSION))  # print the TensorFlow version ("版本" means "version")

plotdata = {"batchsize": [], "loss": []}

def moving_average(a, w=10):

    if len(a) < w:

        return a[:]

    return [val if idx < w else sum(a[(idx - w):idx]) / w for idx, val in enumerate(a)]

# Generate simulated data

train_X = np.linspace(-1, 1, 100)

train_Y = 2 * train_X + np.random.randn(*train_X.shape) * 0.3  # y = 2x with added noise

# Plot the data

plt.plot(train_X, train_Y, 'ro', label='Original data')

plt.legend()

plt.show()

tf.reset_default_graph()

# Build the model

# Placeholders

X = tf.placeholder("float")

Y = tf.placeholder("float")

# Model parameters

W = tf.Variable(tf.random_normal([1]), name="weight")

b = tf.Variable(tf.zeros([1]), name="bias")

# Forward structure

z = tf.multiply(X, W) + b

tf.summary.histogram('z', z)  # record the predictions as a histogram

# Backward optimization

cost = tf.reduce_mean(tf.square(Y - z))

tf.summary.scalar('loss_function', cost)  # record the loss as a scalar

learning_rate = 0.01

optimizer = tf.train.GradientDescentOptimizer(learning_rate).minimize(cost)  # gradient descent

# Initialize variables

init = tf.global_variables_initializer()

# Training settings

training_epochs = 20

display_step = 2

# Start the session

with tf.Session() as sess:

    sess.run(init)

    merged_summary_op = tf.summary.merge_all()  # merge all summaries

    # Create the summary_writer that writes the event files

    summary_writer = tf.summary.FileWriter('F:/shendu/mnist_with_summaries', sess.graph)

    # Fit all training data

    for epoch in range(training_epochs):

        for (x, y) in zip(train_X, train_Y):

            sess.run(optimizer, feed_dict={X: x, Y: y})

            # Generate the summary

            summary_str = sess.run(merged_summary_op, feed_dict={X: x, Y: y})

            summary_writer.add_summary(summary_str, epoch)  # write the summary to file

        # Show detailed information during training

        if epoch % display_step == 0:

            loss = sess.run(cost, feed_dict={X: train_X, Y: train_Y})

            print("Epoch:", epoch + 1, "cost=", loss, "W=", sess.run(W), "b=", sess.run(b))

            if not (loss == "NA"):

                plotdata["batchsize"].append(epoch)

                plotdata["loss"].append(loss)

    print(" Finished!")

    print("cost=", sess.run(cost, feed_dict={X: train_X, Y: train_Y}), "W=", sess.run(W), "b=", sess.run(b))

    # print ("cost:",cost.eval({X: train_X, Y: train_Y}))

    # Plot the data and the fitted line

    plt.plot(train_X, train_Y, 'ro', label='Original data')

    plt.plot(train_X, sess.run(W) * train_X + sess.run(b), label='Fitted line')

    plt.legend()

    plt.show()

    plotdata["avgloss"] = moving_average(plotdata["loss"])

    plt.figure(1)

    plt.subplot(211)

    plt.plot(plotdata["batchsize"], plotdata["avgloss"], 'b--')

    plt.xlabel('Minibatch number')

    plt.ylabel('Loss')

    plt.title('Minibatch run vs. Training loss')

    plt.show()

    print("x=0.2,z=", sess.run(z, feed_dict={X: 0.2}))

Result:

TensorFlow 版本: 1.13.1

Epoch: 1 cost= 1.3197309 W= [0.34877086] b= [0.49700525]

Epoch: 3 cost= 0.18035989 W= [1.5924916] b= [0.16364832]

Epoch: 5 cost= 0.08659153 W= [1.9297352] b= [0.03665461]

Epoch: 7 cost= 0.08016183 W= [2.0171988] b= [0.00313166]

Epoch: 9 cost= 0.079711564 W= [2.0398188] b= [-0.00554797]

Epoch: 11 cost= 0.07967628 W= [2.0456674] b= [-0.00779231]

Epoch: 13 cost= 0.07967259 W= [2.0471792] b= [-0.00837264]

Epoch: 15 cost= 0.07967199 W= [2.0475702] b= [-0.00852263]

Epoch: 17 cost= 0.07967186 W= [2.0476708] b= [-0.00856123]

Epoch: 19 cost= 0.07967183 W= [2.0476975] b= [-0.00857138]

Finished!

cost= 0.07967182 W= [2.0477023] b= [-0.00857328]

x=0.2,z= [0.4009672]
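The moving_average helper used for the loss plot is easy to misread, so here is a small worked example in plain Python. It reuses the function as defined in the program; the input lists are made up for illustration. Two things stand out: the first w entries are passed through unchanged, and each later entry is replaced by the mean of the w values before it (the entry itself is not included).

```python
def moving_average(a, w=10):
    if len(a) < w:
        return a[:]
    return [val if idx < w else sum(a[(idx - w):idx]) / w for idx, val in enumerate(a)]


# Twelve made-up values: entries 0-9 are kept, entries 10 and 11 become
# the mean of the ten values that precede them.
smoothed = moving_average(list(range(1, 13)))
print(smoothed)  # [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 5.5, 6.5]

# With 20 epochs and display_step = 2 the program records 10 losses,
# so every index is below w and the list comes back unchanged.
ten_losses = [1.0, 0.5, 0.25, 0.2, 0.15, 0.12, 0.1, 0.09, 0.08, 0.07]
unchanged = moving_average(ten_losses) == ten_losses
print(unchanged)  # True
```

In other words, with the settings used in these programs the "avgloss" curve is the raw loss curve; smoothing only starts to show once more than ten loss values have been recorded.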
