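- 🍨 This post is a study-log entry from the 🔗365-Day Deep Learning Training Camp

- 🍦 Reference article: PyTorch in Practice | Week J4: Exploring the Combination of ResNet and DenseNet

- 🍖 Original author: K同学啊 | tutoring and custom projects available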

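Preface

In last week's post we reproduced the DenseNet algorithm and ran a preliminary comparison against the ResNet50 model. In this post we go one step further: we combine the characteristics of DenseNet and ResNet50 and build a new model architecture.

Reference: Week J3 / J3-1: DenseNet comparison and multi-dataset comparison (breast cancer recognition), quant_day's blog, CSDN

First, let's revisit the architecture diagrams of ResNet50 and DenseNet.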

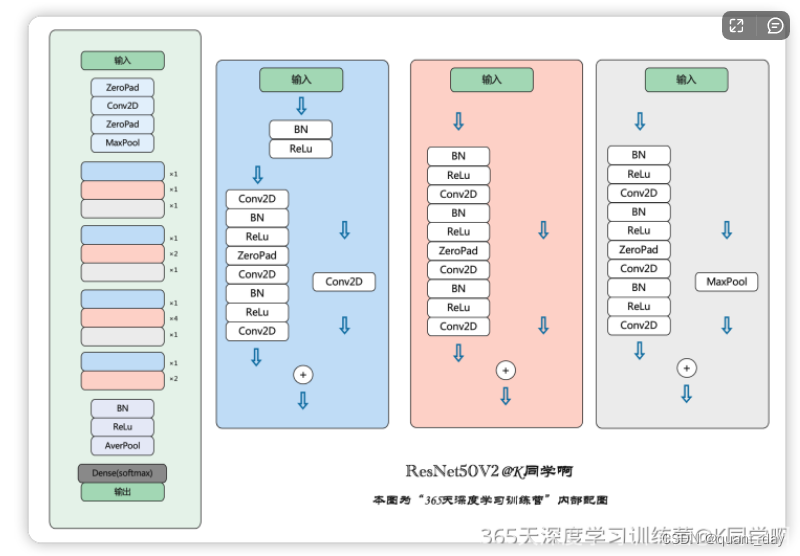

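ResNet50 architecture

ResNet50 deepens the network mainly through two building blocks, IdentityBlock and ConvBlock, which provide layer-by-layer shortcut (residual) connections.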
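As a minimal sketch (a toy block, not the post's IdentityBlock_V2), a shortcut connection adds the block's input to the output of its residual branch, y = relu(f(x) + x), so the branch must preserve the tensor shape. `ToyResidual` and its single 3x3 convolution are hypothetical choices made only to keep the shapes equal.

```python
import torch
import torch.nn as nn


class ToyResidual(nn.Module):
    """Hypothetical minimal residual block: y = relu(f(x) + x)."""

    def __init__(self, channels):
        super().__init__()
        # padding=1 keeps H and W unchanged, so f(x) and x can be added element-wise
        self.f = nn.Conv2d(channels, channels, kernel_size=3, padding=1)
        self.relu = nn.ReLU()

    def forward(self, x):
        # shortcut connection: the input skips over the branch and is added back
        return self.relu(self.f(x) + x)


x = torch.randn(2, 8, 16, 16)
block = ToyResidual(8)
y = block(x)
print(y.shape)  # torch.Size([2, 8, 16, 16])
```

If the branch's weights are all zero, the block simply passes its rectified input through; this easy-to-reach identity mapping is the usual intuition for why shortcut connections help very deep networks train. When the shapes differ, ConvBlock_V2 routes the shortcut through a 1x1 convolution or a strided pooling layer instead.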

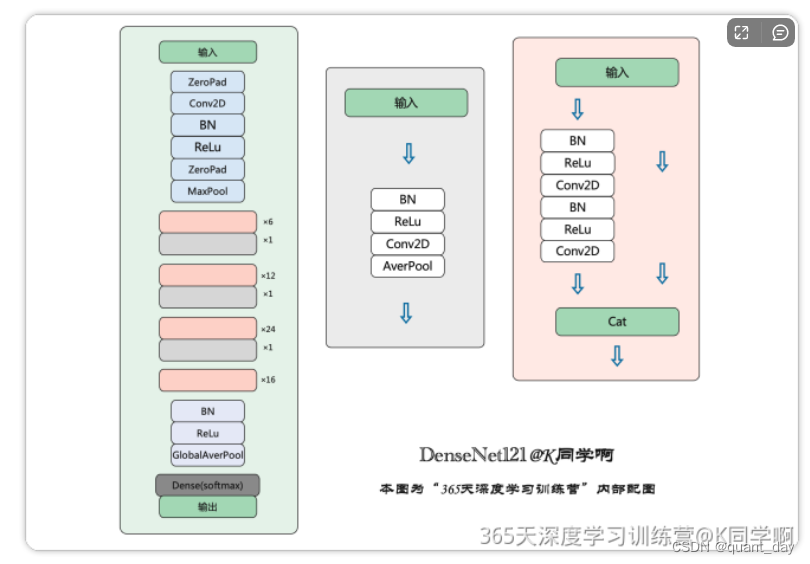

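DenseNet architecture

DenseNet works in a similar way with its DenseBlock and Transition stages: inside a DenseBlock, each layer receives the feature maps of all preceding layers (dense connections), and the Transition layers between blocks compress the channels and downsample.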
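A similarly minimal sketch of dense connectivity (again a hypothetical toy, without the BN-ReLU-1x1 bottleneck that the post's DenseLayer uses): each layer receives the concatenation of the block input and all earlier layer outputs, so the channel count grows by `growth_rate` per layer. With 64 input channels, growth rate 32 and 6 layers (the values of the first DenseNet-121 block configured later in this post), the output has 64 + 6 × 32 = 256 channels.

```python
import torch
import torch.nn as nn


class ToyDenseBlock(nn.Module):
    """Hypothetical minimal dense block: every layer sees all earlier feature maps."""

    def __init__(self, in_channels, growth_rate, num_layers):
        super().__init__()
        # layer i receives in_channels + i * growth_rate channels and emits growth_rate new ones
        self.layers = nn.ModuleList([
            nn.Conv2d(in_channels + i * growth_rate, growth_rate, kernel_size=3, padding=1)
            for i in range(num_layers)
        ])

    def forward(self, x):
        features = x
        for layer in self.layers:
            new = layer(features)
            # dense connection: append the new maps, never overwrite the old ones
            features = torch.cat([features, new], dim=1)
        return features


x = torch.randn(1, 64, 8, 8)
out = ToyDenseBlock(in_channels=64, growth_rate=32, num_layers=6)(x)
print(out.shape)  # torch.Size([1, 256, 8, 8])
```

The first 64 output channels are exactly the block input, which is what connecting all preceding layers to the next layer means in practice; the Transition layers keep this growth in check.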

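This post therefore designs a model that has both dense connections and layer-by-layer shortcut connections.

1. Model Preparation

First, we wrap ResNet50 and DenseNet in their own modules so that the new model can import these standard building blocks directly.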

resnet50v2.py

```python
# -*- coding:utf-8 -*-
import torch
from torchvision import datasets, transforms
import torch.nn as nn
import time
import numpy as np
import matplotlib.pyplot as plt
import torchsummary as summary
import os
from collections import OrderedDict


class IdentityBlock_V2(nn.Module):
    # pre-activation bottleneck block; the shortcut is the unchanged input
    def __init__(self, in_channel, kl_size, filters):
        super(IdentityBlock_V2, self).__init__()
        filter1, filter2, filter3 = filters
        self.bn0 = nn.BatchNorm2d(num_features=in_channel)
        self.cov1 = nn.Conv2d(in_channels=in_channel, out_channels=filter1, kernel_size=1, stride=1, padding=0)
        self.bn1 = nn.BatchNorm2d(num_features=filter1)
        self.relu = nn.ReLU(inplace=True)
        self.zeropadding2d1 = nn.ZeroPad2d(1)
        self.cov2 = nn.Conv2d(in_channels=filter1, out_channels=filter2, kernel_size=kl_size, stride=1, padding=0)
        self.bn2 = nn.BatchNorm2d(num_features=filter2)
        self.cov3 = nn.Conv2d(in_channels=filter2, out_channels=filter3, kernel_size=1, stride=1, padding=0)
        self.bn3 = nn.BatchNorm2d(num_features=filter3)  # defined but not used in forward

    def forward(self, x):
        # BN -> ReLU -> conv (pre-activation ordering)
        identity = self.bn0(x)
        identity = self.relu(identity)
        identity = self.cov1(identity)
        identity = self.bn1(identity)
        identity = self.relu(identity)
        identity = self.zeropadding2d1(identity)  # with kl_size=3 this keeps H and W unchanged
        identity = self.cov2(identity)
        identity = self.bn2(identity)
        identity = self.relu(identity)
        identity = self.cov3(identity)
        # shortcut connection: add the block input back (requires filter3 == in_channel)
        x = identity + x
        x = self.relu(x)
        return x


class ConvBlock_V2(nn.Module):
    # pre-activation bottleneck block whose shortcut is projected or subsampled to match the residual branch
    def __init__(self, in_channel, kl_size, filters, stride_size=2, conv_shortcut=False):
        super(ConvBlock_V2, self).__init__()
        filter1, filter2, filter3 = filters
        self.bn0 = nn.BatchNorm2d(num_features=in_channel)
        self.cov1 = nn.Conv2d(in_channels=in_channel, out_channels=filter1, kernel_size=1, stride=stride_size, padding=0)
        self.bn1 = nn.BatchNorm2d(num_features=filter1)
        self.relu = nn.ReLU(inplace=True)
        self.zeropadding2d1 = nn.ZeroPad2d(1)
        self.cov2 = nn.Conv2d(in_channels=filter1, out_channels=filter2, kernel_size=kl_size, stride=1, padding=0)
        self.bn2 = nn.BatchNorm2d(num_features=filter2)
        self.cov3 = nn.Conv2d(in_channels=filter2, out_channels=filter3, kernel_size=1, stride=1, padding=0)
        self.bn3 = nn.BatchNorm2d(num_features=filter3)  # defined but not used in forward
        self.conv_shortcut = conv_shortcut
        if self.conv_shortcut:
            # 1x1 projection: changes the channel count (and applies the stride) on the shortcut
            self.short_cut = nn.Conv2d(in_channels=in_channel, out_channels=filter3, kernel_size=1, stride=stride_size, padding=0)
        else:
            # 1x1 max pooling only subsamples spatially, so in_channel must already equal filter3
            self.short_cut = nn.MaxPool2d(kernel_size=1, stride=stride_size, padding=0)

    def forward(self, x):
        identity = self.bn0(x)
        identity = self.relu(identity)
        short_cut = self.short_cut(identity)  # the shortcut starts from the pre-activated input
        identity = self.cov1(identity)  # the stride (if any) is applied here
        identity = self.bn1(identity)
        identity = self.relu(identity)
        identity = self.zeropadding2d1(identity)
        identity = self.cov2(identity)
        identity = self.bn2(identity)
        identity = self.relu(identity)
        identity = self.cov3(identity)
        x = identity + short_cut
        x = self.relu(x)
        return x


class Resnet50_Model_V2(nn.Module):
    def __init__(self, in_channel, N_classes):
        super(Resnet50_Model_V2, self).__init__()
        self.in_channels = in_channel
        # ============= stem (basic layer)
        self.zeropadding2d_0 = nn.ZeroPad2d(3)
        self.cov0 = nn.Conv2d(self.in_channels, out_channels=64, kernel_size=7, stride=2)
        self.zeropadding2d_1 = nn.ZeroPad2d(1)
        self.maxpool0 = nn.MaxPool2d(kernel_size=3, stride=2, padding=0)
        # ConvBlock_V2 positional args: (in_channel, kl_size, filters, stride_size, conv_shortcut)
        self.layer1 = nn.Sequential(
            ConvBlock_V2(64, 3, [64, 64, 256], 1, 1),
            IdentityBlock_V2(256, 3, [64, 64, 256]),
            ConvBlock_V2(256, 3, [64, 64, 256], 2, 0),
        )
        self.layer2 = nn.Sequential(
            ConvBlock_V2(256, 3, [128, 128, 512], 1, 1),
            IdentityBlock_V2(512, 3, [128, 128, 512]),
            IdentityBlock_V2(512, 3, [128, 128, 512]),
            ConvBlock_V2(512, 3, [128, 128, 512], 2, 0),
        )
        self.layer3 = nn.Sequential(
            ConvBlock_V2(512, 3, [256, 256, 1024], 1, 1),
            IdentityBlock_V2(1024, 3, [256, 256, 1024]),
            IdentityBlock_V2(1024, 3, [256, 256, 1024]),
            IdentityBlock_V2(1024, 3, [256, 256, 1024]),
            IdentityBlock_V2(1024, 3, [256, 256, 1024]),
            ConvBlock_V2(1024, 3, [256, 256, 1024], 2, 0),
        )
        self.layer4 = nn.Sequential(
            ConvBlock_V2(1024, 3, [512, 512, 2048], 1, 1),
            IdentityBlock_V2(2048, 3, [512, 512, 2048]),
            IdentityBlock_V2(2048, 3, [512, 512, 2048]),
        )
        # output head
        self.bn = nn.BatchNorm2d(num_features=2048)
        self.relu = nn.ReLU(inplace=True)
        self.avgpool = nn.AvgPool2d((7, 7))
        # classification layer: 2048 features remain after 7x7 average pooling
        # nn.CrossEntropyLoss already applies log-softmax; drop this Softmax if training with it
        self.fc = nn.Sequential(nn.Linear(2048, N_classes),
                                nn.Softmax(dim=1))

    def basic_layer1(self, x):
        '''
        Stem: ZeroPad2d(3) -> Conv 7x7, stride 2 -> ZeroPad2d(1) -> MaxPool 3x3, stride 2
        input:  (N, 3, 224, 224)
        output: (N, 64, 56, 56)
        '''
        x = self.zeropadding2d_0(x)
        x = self.cov0(x)
        x = self.zeropadding2d_1(x)
        x = self.maxpool0(x)
        return x

    def forward(self, x):
        x = self.forward1(x)
        return x

    def forward1(self, x):
        x = self.basic_layer1(x)
        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)
        x = self.bn(x)
        x = self.relu(x)
        x = self.avgpool(x)
        x = torch.flatten(x, 1)
        x = self.fc(x)
        return x

#%%
if __name__ == '__main__':
    # the constructor needs the input channel count and the number of classes
    model = Resnet50_Model_V2(in_channel=3, N_classes=4)
    model.eval()
    with torch.no_grad():
        print(model(torch.randn(1, 3, 224, 224)).shape)  # torch.Size([1, 4])
```
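To see why `nn.AvgPool2d((7, 7))` reduces the final feature map to 1x1 for a 224x224 input, the standard output-size formula floor((H + 2p - k) / s) + 1 can be traced through the stem and the three stages that end in a stride-2 ConvBlock_V2. The helper below is a hypothetical pure-Python sketch that only does this arithmetic; the explicit ZeroPad2d layers are folded into the padding p.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output length of a conv/pool layer along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def resnet50_v2_spatial(size=224):
    """Trace H (= W) through Resnet50_Model_V2 for a square input."""
    sizes = {}
    size = conv_out(size, kernel=7, stride=2, padding=3)  # ZeroPad2d(3) + cov0 (7x7, stride 2)
    sizes["cov0"] = size
    size = conv_out(size, kernel=3, stride=2, padding=1)  # ZeroPad2d(1) + maxpool0 (3x3, stride 2)
    sizes["stem"] = size
    for name in ("layer1", "layer2", "layer3"):
        # the last ConvBlock_V2 of each of these stages has a 1x1 conv with stride 2
        size = conv_out(size, kernel=1, stride=2)
        sizes[name] = size
    sizes["layer4"] = size  # layer4 keeps stride 1
    return sizes


print(resnet50_v2_spatial())
# {'cov0': 112, 'stem': 56, 'layer1': 28, 'layer2': 14, 'layer3': 7, 'layer4': 7}
```

For other input resolutions the final map is no longer 7x7: a 448x448 input, for example, ends at 14x14, which the fixed 7x7 window pools to 2x2, and the flattened vector would then no longer match `nn.Linear(2048, N_classes)`. `nn.AdaptiveAvgPool2d(1)` is the usual resolution-independent alternative.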

DenseNet.py

```python
# -*- coding:utf-8 -*-
import torch
from torchvision import datasets, transforms
import torch.nn as nn
import time
import numpy as np
import matplotlib.pyplot as plt
import torch.nn.functional as F
import torchsummary as summary
import os
from collections import OrderedDict


class DenseLayer(nn.Sequential):
    # BN-ReLU-Conv1x1 (bottleneck) -> BN-ReLU-Conv3x3; the output is concatenated onto the input
    def __init__(self, in_channel, growth_rate, bn_size, drop_rate):
        super(DenseLayer, self).__init__()
        self.out_channels1 = bn_size * growth_rate
        self.out_channels2 = growth_rate
        self.add_module("norm1", nn.BatchNorm2d(num_features=in_channel))
        self.add_module("relu1", nn.ReLU(inplace=True))
        self.add_module("conv1", nn.Conv2d(in_channels=in_channel, out_channels=self.out_channels1, kernel_size=1, stride=1, padding=0, bias=False))
        self.add_module("norm2", nn.BatchNorm2d(num_features=self.out_channels1))
        self.add_module("relu2", nn.ReLU(inplace=True))
        self.add_module("conv2", nn.Conv2d(in_channels=self.out_channels1, out_channels=self.out_channels2, kernel_size=3, stride=1, padding=1, bias=False))
        self.drop_rate = drop_rate

    def forward(self, x):
        new_features = super(DenseLayer, self).forward(x)
        if self.drop_rate > 0:
            new_features = F.dropout(new_features, p=self.drop_rate, training=self.training)
        # dense connection: keep every earlier feature map and append growth_rate new channels
        return torch.cat([x, new_features], 1)


class DenseBlock(nn.Sequential):
    def __init__(self, num_layers, in_channel, growth_rate, bn_size, drop_rate):
        super(DenseBlock, self).__init__()
        for i in range(num_layers):
            # layer i sees the block input plus the outputs of the i layers before it
            layer = DenseLayer(in_channel + i * growth_rate, growth_rate, bn_size, drop_rate)
            self.add_module('DenseLayer%d' % (i + 1), layer)


class Transition(nn.Sequential):
    # compresses channels with a 1x1 conv and halves H and W with 2x2 average pooling
    def __init__(self, in_channel, out_channel):
        super(Transition, self).__init__()
        self.add_module("norm", nn.BatchNorm2d(num_features=in_channel))
        self.add_module("relu", nn.ReLU(inplace=True))
        self.add_module("conv", nn.Conv2d(in_channels=in_channel, out_channels=out_channel, kernel_size=1, stride=1, padding=0, bias=False))
        self.add_module("pool", nn.AvgPool2d(2, stride=2))


class DenseNet(nn.Module):
    def __init__(self, growth_rate=32, block_config=(6, 12, 24, 16), num_init_features=64,
                 bn_size=4, compression_rate=0.5, drop_rate=0, num_classes=4):
        super(DenseNet, self).__init__()
        # basic_layer
        self.in_channels = 3
        self.basic_layer = nn.Sequential(
            nn.Conv2d(self.in_channels, out_channels=num_init_features, kernel_size=7, stride=2, padding=3, bias=False),
            nn.BatchNorm2d(num_features=num_init_features),
            nn.ReLU(inplace=False),
            nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        )
        self.features = self.basic_layer
        # DenseBlock
        self.num_features = num_init_features
        for i, num_layers in enumerate(block_config):
            block = DenseBlock(num_layers, self.num_features, growth_rate, bn_size, drop_rate)
            self.features.add_module('DenseBlock%d' % (i + 1), block)
            self.num_features += num_layers * growth_rate
            if i != (len(block_config) - 1):
                trans = Transition(self.num_features, int(self.num_features * compression_rate))
                self.features.add_module('Transition%d' % (i + 1), trans)
                self.num_features = int(self.num_features * compression_rate)
        # final_layer
        self.features.add_module('norm_f', nn.BatchNorm2d(num_features=self.num_features))
        # registered on self.features (not self) so that forward actually applies it
        self.features.add_module("relu_f", nn.ReLU(inplace=True))
        # classification layer
        # nn.CrossEntropyLoss already applies log-softmax; drop this Softmax if training with it
        self.classifier = nn.Sequential(nn.Linear(self.num_features, num_classes),
                                        nn.Softmax(dim=1))
        # param initialization
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight)
            elif isinstance(m, nn.BatchNorm2d):
                nn.init.constant_(m.bias, 0)
                nn.init.constant_(m.weight, 1)
            elif isinstance(m, nn.Linear):
                nn.init.constant_(m.bias, 0)

    def forward(self, x):
        features = self.features(x)
        # for a 224x224 input the final feature map is 7x7, so this pools it to 1x1
        out = F.avg_pool2d(features, 7, stride=1).view(features.size(0), -1)
        out = self.classifier(out)
        return out


def densenet121(pretrained=False, **kwargs):
    model = DenseNet(growth_rate=32, block_config=(6, 12, 24, 16), num_init_features=64, **kwargs)
    # here `pretrained` only prints a torchsummary table; no pretrained weights are loaded
    if pretrained:
        summary.summary(model, (3, 224, 224))
    return model

#%%
if __name__ == '__main__':
    model = DenseNet(growth_rate=32, block_config=(6, 12, 24, 16), num_init_features=64, bn_size=4, compression_rate=0.5, drop_rate=0, num_classes=4)
    model.eval()
    with torch.no_grad():
        print(model(torch.randn(1, 3, 224, 224)).shape)  # torch.Size([1, 4])
```
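The input size of the DenseNet classifier is whatever `self.num_features` holds after the loop. The sketch below, a hypothetical pure-Python helper with no PyTorch dependency, replays the same channel arithmetic as the loop in `DenseNet.__init__` for the default DenseNet-121 configuration.

```python
def densenet_channels(growth_rate=32, block_config=(6, 12, 24, 16),
                      num_init_features=64, compression_rate=0.5):
    """Return (stage name, channel count) after each DenseBlock / Transition."""
    trace = []
    num_features = num_init_features
    for i, num_layers in enumerate(block_config):
        # each DenseLayer concatenates growth_rate new channels onto its input
        num_features += num_layers * growth_rate
        trace.append(("DenseBlock%d" % (i + 1), num_features))
        if i != len(block_config) - 1:
            # the Transition compresses the channel count
            num_features = int(num_features * compression_rate)
            trace.append(("Transition%d" % (i + 1), num_features))
    return trace


for stage, channels in densenet_channels():
    print(stage, channels)
```

The last entry, 1024 channels after DenseBlock4, is the size of `nn.Linear(self.num_features, num_classes)`. Spatially, the three Transition layers halve the 56x56 map produced by the stem (56 → 28 → 14 → 7), which is why `F.avg_pool2d(features, 7, stride=1)` leaves one 1024-dimensional vector per 224x224 image.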

2. Importing the Standard Models

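```python
import torch
import torch.nn as nn
import torch.nn.functional as F

from resnet50v2 import *
from DenseNet import *
```

With the two star imports above, we bring in IdentityBlock_V2 and ConvBlock_V2 from resnet50v2 and DenseBlock and Transition from DenseNet, and combine these four blocks into a new network architecture.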

Put simply, we append a resnet50v2-style shortcut connection after DenseNet's dense connections.

The implementation is as follows:

class DenseNet_Resnet(nn.Module):

def __init__(self, growth_rate = 32, block_config=(6, 12, 24, 16), num_init_features=64,

bn_size=4, compression_rate=0.5, drop_rate=0, num_classes=4):

super(DenseNet_Resnet, self).__init__()

# basic_layer

self.in_channels = 3

self.basic_layer = nn.Sequential(

nn.Conv2d(self.in_channels, out_channels=num_init_features, kernel_size=7, stride=2, padding=3, bias=False),

nn.BatchNorm2d(num_features=num_init_features),

nn.ReLU(inplace=False),

nn.MaxPool2d(kernel_size=3, stride=2, padding=1)

)

self.features = self.basic_layer

# DenseBlock

self.num_features = num_init_features

for i, num_layers in enumerate(block_config):

block = DenseBlock(num_layers, self.num_features, growth_rate, bn_size, drop_rate)

self.features.add_module('DenseBlock%d' %(i+1), block)

self.num_features += num_layers * growth_rate

if i != (len(block_config) - 1):

trans = Transition(self.num_features, int(self.num_features * compression_rate))

self.features.add_module('Transition%d' %(i+1), trans)

self.num_features = int(self.num_features * compression_rate)

# Resnet50_V2

Resnet_ConvBlock_V2 = ConvBlock_V2(self.num_features, 3, [int(self.num_features/2), int(self.num_features/2), self.num_features * 2], 1, 1)

self.features.add_module('ConvBlock_V2%d' %(i+1), Resnet_ConvBlock_V2)

self.num_features = self.num_features * 2

# final_layer

self.features.add_module('norm_f', nn.BatchNorm2d(num_features=self.num_features))

self.add_module("relu_f", nn.ReLU(inplace=True))

# classfication layer

self.classifier = nn.Sequential(nn.Linear(self.num_features, num_classes),

nn.Softmax(dim=1))

# param initialization

for m in self.modules():

if isinstance(m, nn.Conv2d):

nn.init.kaiming_normal_(m.weight)

elif isinstance(m, nn.BatchNorm2d):

nn.init.constant_(m.bias, 0)

nn.init.constant_(m.weight, 1)

elif isinstance(m, nn.Linear):

nn.init.constant_(m.bias, 0)

def forward(self, x):

features = self.features(x)

out = F.avg_pool2d(features, 7, stride=1).view(features.size(0), -1)

out = self.classifier(out)

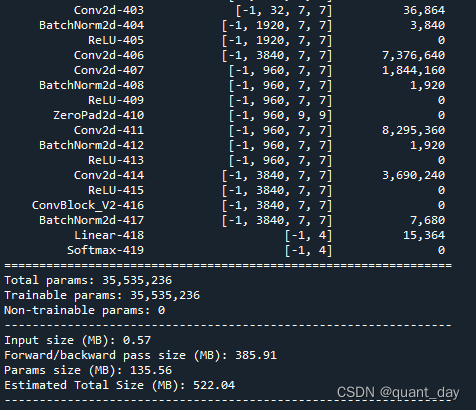

return out不难看出,主要区别是在每层DenseBlock与Transition后都添加了一步ConvBlock_V2

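```python
# Resnet50_V2: appended at the end of every iteration of the block_config loop
Resnet_ConvBlock_V2 = ConvBlock_V2(self.num_features, 3, [int(self.num_features / 2), int(self.num_features / 2), self.num_features * 2], 1, 1)
self.features.add_module('ConvBlock_V2%d' % (i + 1), Resnet_ConvBlock_V2)
self.num_features = self.num_features * 2
```

Printing the new model shows that the number of parameters has grown considerably: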

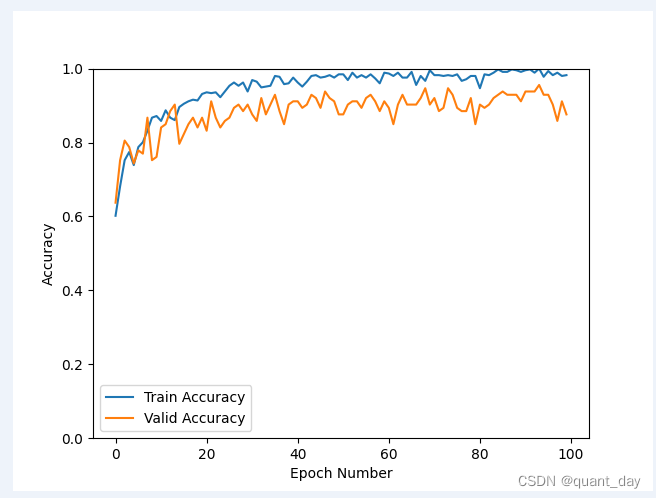

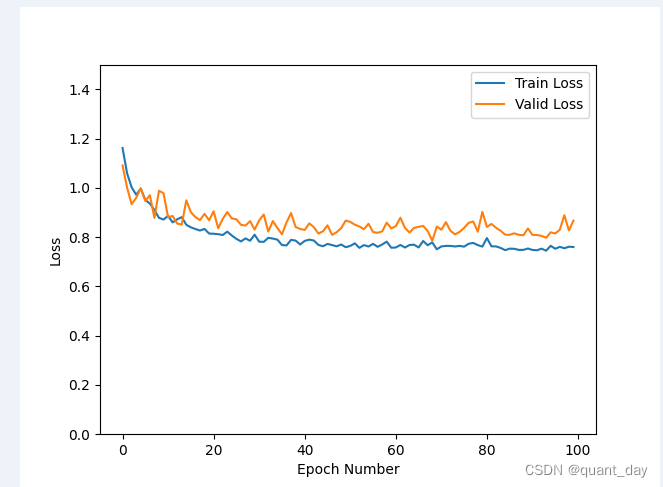
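As a rough check on where the extra parameters come from, the sketch below replays the channel arithmetic of `DenseNet_Resnet.__init__`, assuming one ConvBlock_V2 is appended at the end of every stage of the loop, and counts only the Conv2d weights and biases of each added block (BatchNorm parameters are ignored). Both helper names are hypothetical.

```python
def densenet_resnet_channels(growth_rate=32, block_config=(6, 12, 24, 16),
                             num_init_features=64, compression_rate=0.5):
    """(in, out) channels of each ConvBlock_V2 added by DenseNet_Resnet."""
    trace = []
    num_features = num_init_features
    for i, num_layers in enumerate(block_config):
        num_features += num_layers * growth_rate              # DenseBlock
        if i != len(block_config) - 1:
            num_features = int(num_features * compression_rate)  # Transition
        # ConvBlock_V2 with filters [c/2, c/2, 2c] doubles the channel count
        trace.append((num_features, num_features * 2))
        num_features *= 2
    return trace


def convblock_conv_params(c):
    """Conv2d weights + biases of ConvBlock_V2(c, 3, [c/2, c/2, 2c], 1, 1)."""
    h = c // 2
    cov1 = c * h + h                 # 1x1 conv, c -> c/2
    cov2 = 3 * 3 * h * h + h         # 3x3 conv, c/2 -> c/2
    cov3 = h * 2 * c + 2 * c         # 1x1 conv, c/2 -> 2c
    short_cut = c * 2 * c + 2 * c    # 1x1 projection shortcut, c -> 2c
    return cov1 + cov2 + cov3 + short_cut


print(densenet_resnet_channels())
# [(128, 256), (320, 640), (704, 1408), (1920, 3840)]
for c_in, c_out in densenet_resnet_channels():
    print(f"{c_in} -> {c_out}: {convblock_conv_params(c_in):,} conv params")
# last line printed: 1920 -> 3840: 21,206,400 conv params
```

Under this assumption the classifier sees 3840 features instead of DenseNet's 1024, and the last ConvBlock_V2 alone adds about 21 million convolution parameters, because every convolution in the block scales with the square of its channel count and the block runs on 1920 input channels. Each doubling also makes the following DenseBlock start from a wider input.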

3. Results

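Compared with the results of Week J3 / J3-1: DenseNet comparison and multi-dataset comparison (breast cancer recognition) (quant_day's blog, CSDN):

The accuracy of the DenseNet_Resnet model improves gradually and stops improving at around epoch 20. This is somewhat better than the DenseNet model, whose accuracy already reaches its maximum after a single epoch.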
